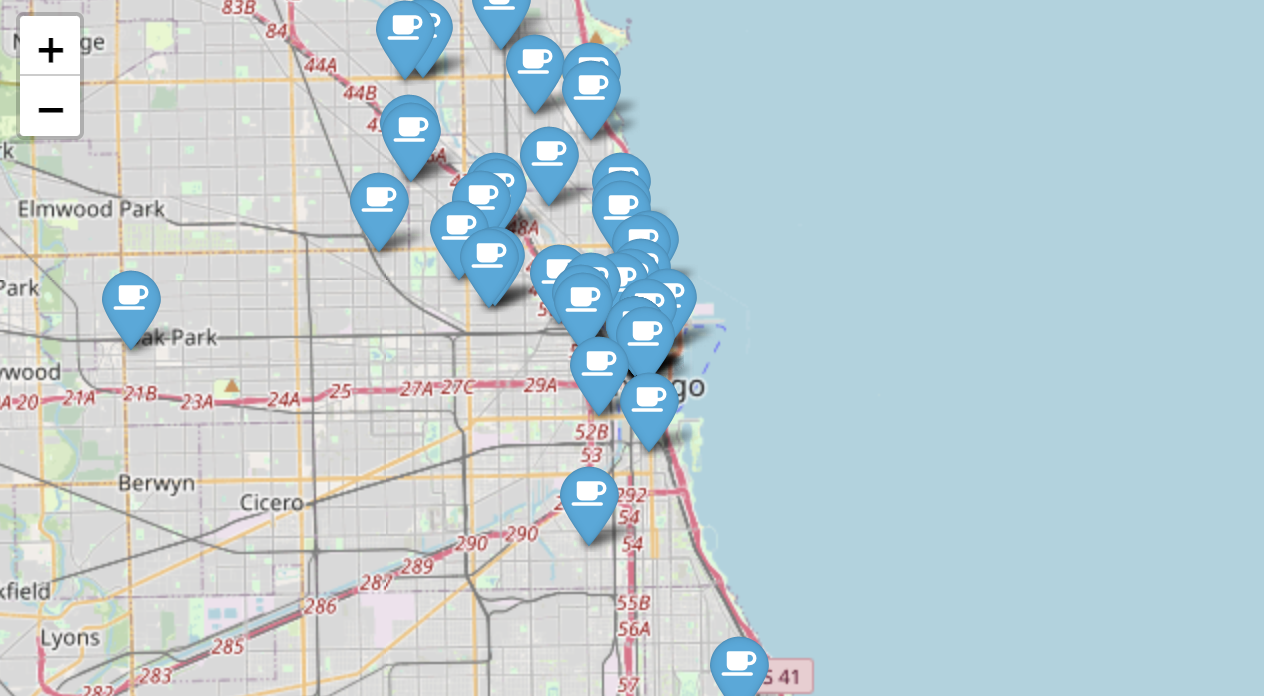

Data Analysis of Chicago's Best Sandwich Shops

Main page

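- URL access: send a browser-like User-Agent header with the request, using either option
  - `ua = UserAgent()` and `req = Request(url, headers={"user-agent": ua.ie})` (an Internet Explorer UA string from fake_useragent)
  - `req = Request(url, headers={"user-agent": "Chrome"})` (a fixed string)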
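A minimal offline check of what the header option does, using only the standard library. `Request` just stores the header (normalizing its name to `User-agent`), and nothing is sent until `urlopen(req)` is called. The URL is the one used in this post; the `"Chrome"` value is the fixed-string option.

```python
from urllib.request import Request

url = "https://www.chicagomag.com/chicago-magazine/november-2012/best-sandwiches-chicago/"

# Build the request without sending it; urlopen(req) would perform the fetch
req = Request(url, headers={"user-agent": "Chrome"})

# urllib capitalizes header names internally ("user-agent" -> "User-agent")
print(req.get_header("User-agent"))  # Chrome
print(req.get_method())              # GET (no body was attached)
```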

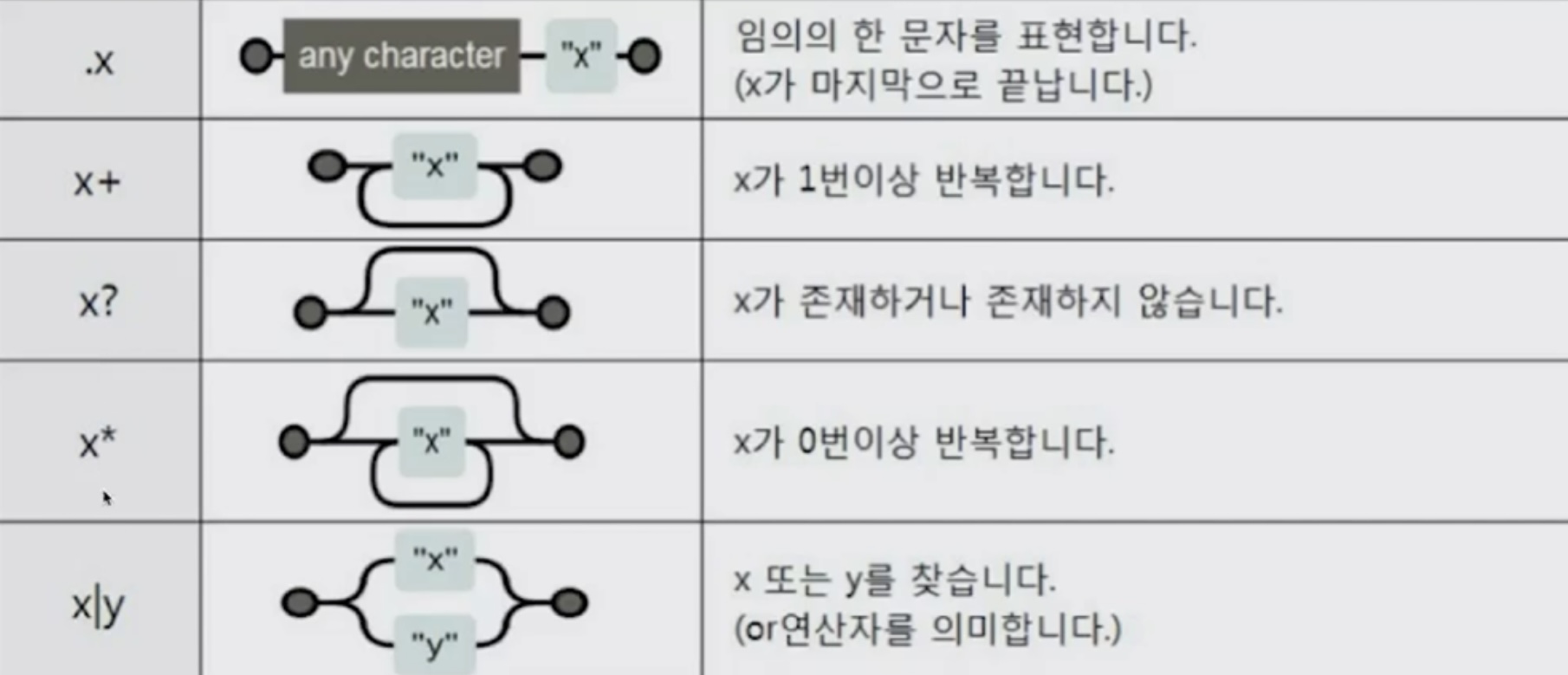

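- Regular expressions
  - `re.split(r"\n|\r\n", tmp_string)`: split the listing text into lines (menu first, cafe name second)
  - `re.search(r"\$\d+\.(\d+)?", price_tmp).group()`: extract a price such as `$7.25`; `$` and `.` must be escaped to match literally, because an unescaped `$` is the end-of-string anchor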
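Both patterns can be tried on made-up strings before running the scraper. The listing text and prices below are invented for illustration; they only mimic the shape the code expects (menu on the first line, cafe name on the second, price at the start of the address text).

```python
import re

# Made-up listing text: menu on the first line, cafe name on the second
tmp_string = "Sample Sandwich\r\nExample Cafe\nRead more"
lines = re.split(r"\n|\r\n", tmp_string)
print(lines)  # ['Sample Sandwich', 'Example Cafe', 'Read more']

price_tmp = "$7.25. 123 Example St"
# Unescaped "$" anchors at the end of the string, so nothing can follow it
wrong = re.search(r"$\d+.(\d+)?", price_tmp)
print(wrong)  # None
# Escaped "$" and "." match the literal characters
price = re.search(r"\$\d+\.(\d+)?", price_tmp).group()
print(price)  # $7.25
# The optional cents group also accepts a price that ends in a period
whole = re.search(r"\$\d+\.(\d+)?", "$10. 456 Sample Ave").group()
print(whole)  # $10.
```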

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup
from fake_useragent import UserAgent

url_base = "https://www.chicagomag.com/"
url_sub = "chicago-magazine/november-2012/best-sandwiches-chicago/"
url = url_base + url_sub

# Send a browser-like User-Agent header with the request
ua = UserAgent()
req = Request(url, headers={"user-agent": ua.ie})
# req = Request(url, headers={"user-agent": "Chrome"})

html = urlopen(req)
soup = BeautifulSoup(html, "html.parser")
print(soup.prettify())
```

```python
import re
from urllib.parse import urljoin

url_base = "https://www.chicagomag.com/"

rank = []
main_menu = []
cafe_name = []
url_add = []

# Each sandwich entry on the main page is a <div class="sammy">
list_soup = soup.find_all("div", "sammy")

for item in list_soup:
    rank.append(item.find(class_="sammyRank").text)

    # The listing text holds the menu on its first line and the cafe name on its second
    tmp_string = item.find(class_="sammyListing").text
    lines = re.split(r"\n|\r\n", tmp_string)
    main_menu.append(lines[0])
    cafe_name.append(lines[1])

    # Resolve the link against the site root (works for relative and absolute hrefs)
    url_add.append(urljoin(url_base, item.find("a")["href"]))
```
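The loop uses `urljoin` rather than plain string concatenation, so each link comes out as a full URL whether the `href` is relative or absolute. A quick check with made-up hrefs (the paths below are hypothetical, not taken from the page):

```python
from urllib.parse import urljoin

url_base = "https://www.chicagomag.com/"

# A root-relative href is resolved against the site root
joined_relative = urljoin(url_base, "/Chicago-Magazine/Example-Sandwich/")
print(joined_relative)  # https://www.chicagomag.com/Chicago-Magazine/Example-Sandwich/

# An absolute href is returned unchanged
joined_absolute = urljoin(url_base, "https://www.example.com/page/")
print(joined_absolute)  # https://www.example.com/page/
```

Concatenating `url_base + href` would instead produce a broken URL such as `https://www.chicagomag.com/https://...` for absolute links.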

```python
import pandas as pd

data = {
    "Rank": rank,
    "Menu": main_menu,
    "Cafe": cafe_name,
    "URL": url_add,
}

# Build the DataFrame with the columns in the desired order and save it
df = pd.DataFrame(data, columns=["Rank", "Cafe", "Menu", "URL"])
df.to_csv("../data/03. best_sandwiches_list_chicago.csv", sep=",", encoding="utf-8")
```

Subpages
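On each restaurant's subpage, the text of `<p class="addy">` starts with the price, followed by the address. The parsing done inside the subpage loop can be pulled into a small function and tested offline. This is a sketch: `parse_addy` is a hypothetical helper name, the sample strings are made up, and it swaps the loop's fixed `+ 2` offset for `lstrip(". ")`, which gives the same result for a price like `$7.25` and also handles a price that already ends in a period, such as `$10.`.

```python
import re


def parse_addy(gettings):
    """Hypothetical helper: return (price, address) from the addy text."""
    # "." matches any character, so this splits at any character followed by a comma;
    # the first piece holds the price and the street address
    price_tmp = re.split(".,", gettings)[0]
    price = re.search(r"\$\d+\.(\d+)?", price_tmp).group()
    # Drop the price, then strip the period/space separator before the address
    address = price_tmp[len(price):].lstrip(". ")
    return price, address


print(parse_addy("$7.25. 123 Example St., 555-0100, example.com"))  # ('$7.25', '123 Example St')
print(parse_addy("$10. 456 Sample Ave., 555-0199"))                  # ('$10.', '456 Sample Ave')
```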

```python
import re
from urllib.request import Request, urlopen

import pandas as pd
from bs4 import BeautifulSoup

df = pd.read_csv("../data/03. best_sandwiches_list_chicago.csv", index_col=0)

price = []
address = []

for idx, row in df.iterrows():
    # Fetch each restaurant's subpage
    req = Request(row["URL"], headers={"user-agent": "Chrome"})
    html = urlopen(req).read()
    soup_tmp = BeautifulSoup(html, "html.parser")

    # <p class="addy"> starts with the price, followed by the address
    gettings = soup_tmp.find("p", "addy").get_text()

    # "." matches any character: split at any character followed by a comma
    price_tmp = re.split(".,", gettings)[0]
    tmp = re.search(r"\$\d+\.(\d+)?", price_tmp).group()
    price.append(tmp)
    # Skip the price and the two characters after it to keep the address
    address.append(price_tmp[len(tmp) + 2:])

df["Price"] = price
df["Address"] = address

# Keep only the needed columns and use Rank as the index
df = df.loc[:, ["Rank", "Cafe", "Menu", "Price", "Address"]]
df.set_index("Rank", inplace=True)
df.to_csv("../data/03. best_sandwiches_list_chicago2.csv", sep=",", encoding="utf-8")
```

Map visualization

```python
import folium
import googlemaps
import numpy as np
import pandas as pd
from tqdm import tqdm

df = pd.read_csv("../data/03. best_sandwiches_list_chicago2.csv", index_col=0)

gmaps_key = "YOUR_API_KEY"  # replace with your Google Maps API key
gmaps = googlemaps.Client(key=gmaps_key)

lat = []
lng = []

for idx, row in tqdm(df.iterrows(), total=len(df)):
    # Rows marked "Multi location" have no single address to geocode
    if row["Address"] != "Multi location":
        target_name = row["Address"] + ", Chicago"
        gmaps_output = gmaps.geocode(target_name)
        location_output = gmaps_output[0].get("geometry")
        lat.append(location_output["location"]["lat"])
        lng.append(location_output["location"]["lng"])
    else:
        lat.append(np.nan)
        lng.append(np.nan)

df["lat"] = lat
df["lng"] = lng

# Center the map on Chicago and add one marker per restaurant
mapping = folium.Map(location=[41.8781136, -87.6297982], zoom_start=11)

for idx, row in df.iterrows():
    if row["Address"] != "Multi location":
        folium.Marker(
            location=[row["lat"], row["lng"]],
            popup=row["Cafe"],
            tooltip=row["Menu"],
            icon=folium.Icon(icon="coffee", prefix="fa"),
        ).add_to(mapping)

# Display the map (renders inline in a Jupyter notebook)
mapping
```
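The geocoding loop indexes `gmaps_output[0]` directly, which raises `IndexError` if the geocoder returns an empty result list for an address. A minimal sketch of a safer lookup, assuming results shaped like the ones the loop reads (`result[0]["geometry"]["location"]`). `extract_lat_lng` and `fake_geocode` are hypothetical names, and the stand-in geocoder with its coordinates is made up so the example runs offline; in the real loop you would pass `gmaps.geocode`.

```python
import math


def extract_lat_lng(address, geocode):
    """Hypothetical helper: return (lat, lng), or (nan, nan) if the address can't be placed."""
    if address == "Multi location":
        return math.nan, math.nan
    results = geocode(address + ", Chicago")
    if not results:  # no match: avoid IndexError on results[0]
        return math.nan, math.nan
    location = results[0]["geometry"]["location"]
    return location["lat"], location["lng"]


# Hypothetical stand-in for gmaps.geocode with one made-up entry
def fake_geocode(query):
    known = {"123 Example St, Chicago": [{"geometry": {"location": {"lat": 41.9, "lng": -87.7}}}]}
    return known.get(query, [])


print(extract_lat_lng("123 Example St", fake_geocode))  # (41.9, -87.7)
print(extract_lat_lng("Multi location", fake_geocode))  # (nan, nan)
print(extract_lat_lng("Nowhere Rd", fake_geocode))      # (nan, nan)
```

Rows that come back as `(nan, nan)` would then need to be skipped in the marker loop as well, for example with `pd.notna(row["lat"])`, since folium cannot place a marker without coordinates.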