파이토치

-view 함수가 있어서 expanddims 같은 함수가 따로 필요 없다.

-함수 끝에 를 붙이면 inplace 명령이 된다. (안되는 함수도 존재)

-y.backward() 함수 실행하면 x의 미분값이 자동으로 갱신 # y.grad로 확인

-torch.autograd.grad() 함수를 사용해 tf.GradientTape 처럼 사용할 수 있다.

import torch

import torch.nn.functional as F

import torch.optim as optim

from torch import nn

from torchvision import datasets, transforms

torch.manual_seed(777)

batch_size = 32train_loader = torch.utils.data.DataLoader(

datasets.MNIST('dataset/', download=True, train=True,

transform=transforms.Compose(

[

transforms.ToTensor(),

transforms.Normalize(mean=(0.5,), std=(0.5,))

]

)),

batch_size=batch_size,

shuffle=True

)

test_loader = torch.utils.data.DataLoader(

datasets.MNIST('dataset/', download=True, train=False,

transform=transforms.Compose(

[

transforms.ToTensor(),

transforms.Normalize(mean=(0.5,), std=(0.5,))

]

)),

batch_size=batch_size,

shuffle=False

)

images, labels = next(iter(train_loader))

images.shape, images.dtype

# (torch.Size([32, 1, 28, 28]), torch.float32)

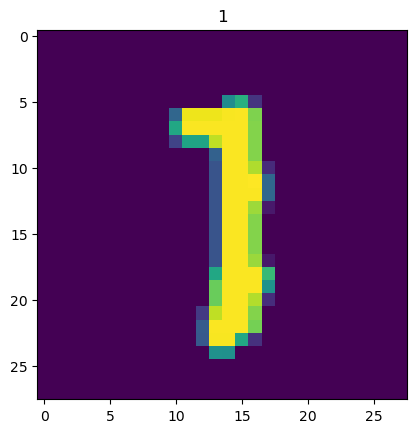

torch_image = torch.squeeze(images[0])

torch_image.shape

# torch.Size([28, 28])

image = torch_image.numpy()

label = labels[0].numpy()

plt.title(label)

plt.imshow(image)

plt.show()

모델 정의

epoch

- batch

- model 연산

- loss 구하고

- grad (loss값 이용해서) grad 구하고

- model update 모델 업뎃

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 20, 5, 1)

self.conv2 = nn.Conv2d(20, 50, 5, 1)

self.fc1 = nn.Linear(4*4*50, 500)

self.fc2 = nn.Linear(500, 10)

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2, 2)

x = F.relu(self.conv2(x))

x = F.max_pool2d(x, 2, 2)

x = x.view(-1, 4*4*50)

x = F.relu(self.fc1(x))

x = self.fc2(x)

return F.log_softmax(x, dim=1)device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = Net().to(device)

x, y = next(iter(train_loader))

x.shape, y.shape

# (torch.Size([32, 1, 28, 28]), torch.Size([32]))opt = optim.SGD(model.parameters(), 0.03)

for epoch in range(1):

# train

model.train()

for batch_idx, (data, target) in enumerate(train_loader):

data, target = data.to(device), target.to(device)

opt.zero_grad()

output = model(data)

# 예측 # 정답

loss = F.nll_loss(output, target)

# log_softmax랑 nll_loss 짝꿍. crossentropy 쓴거랑 같은 효과

loss.backward() # 미분

opt.step()

print('batch {} loss : {}'.format(batch_idx, loss.item()))

# eval

model.eval()

test_loss = 0

with torch.no_grad():

for data, target in test_loader:

output = model(data)

test_loss += F.nll_loss(output, target).item()

test_loss /= (len(test_loader.dataset) // batch_size)

print('Epoch {} test loss : {}'.format(epoch, test_loss))모델링

-nn.Sequentital

-Sub-class of nn.Module

nn.Sequentital

import torchsummarynn.Conv2d(

in_channels=3,

out_channels=32,

kernel_size=3,

stride=1,

padding=1,

bias=False

)

nn.Linear(

in_features=784,

out_features=500,

bias=False

)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model = nn.Sequential(

nn.Linear(784, 15),

nn.Sigmoid(),

nn.Linear(15, 10),

nn.Sigmoid()

)

torchsummary.summary(model, (784,))Sub-class of nn.Module

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(1, 20, kernel_size=3, padding=1)

self.conv2 = nn.Conv2d(20, 50, kernel_size=3, padding=1)

self.fc1 = nn.Linear(4900, 500)

self.fc2 = nn.Linear(500, 10)

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2, 2)

x = F.relu(self.conv2(x))

x = F.max_pool2d(x, 2, 2)

x = x.view(-1, 4900)

x = F.relu(self.fc1(x))

x = F.log_softmax(self.fc2(x), dim=1)

return x

model = Net()

torchsummary.summary(model, (1,28,28))ResNet

class ResidualBlock(nn.Module):

def __init__(self, in_channel, out_channel):

super(ResidualBlock, self).__init__()

self.conv1 = nn.Conv2d(in_channel, out_channel, kernel_size=1, padding=0)

self.conv2 = nn.Conv2d(out_channel, out_channel, kernel_size=3, padding=1)

self.conv3 = nn.Conv2d(out_channel, out_channel, kernel_size=1, padding=0)

if in_channel != out_channel:

self.shortcut = nn.Sequential(

nn.Conv2d(in_channel, out_channel, kernel_size=1, padding=0)

)

else:

self.shortcut = nn.Sequential()

def forward(self, x):

out = F.relu(self.conv1(x))

out = F.relu(self.conv2(out))

out = F.relu(self.conv3(out))

out += self.shortcut(x)

return out

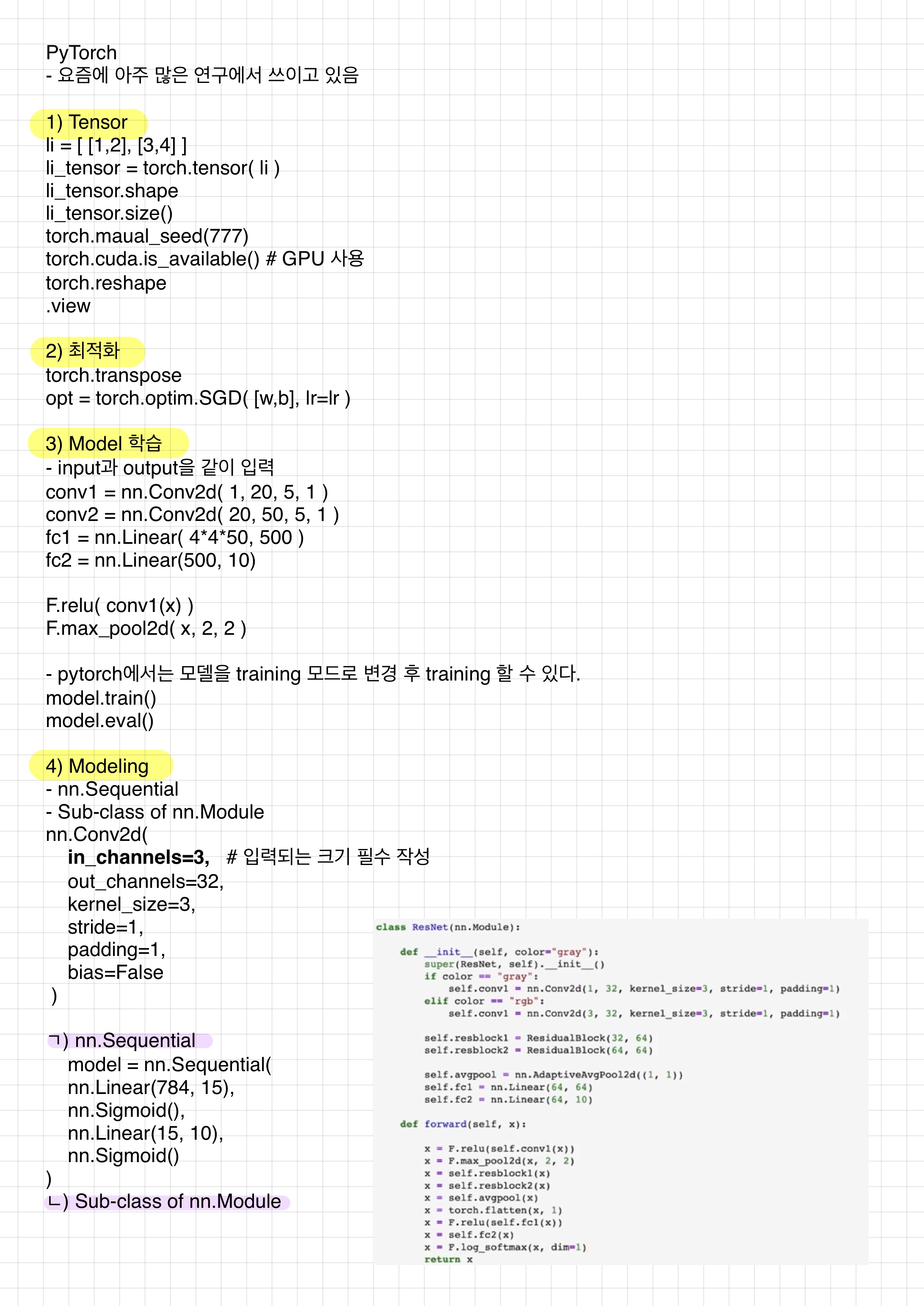

class ResNet(nn.Module):

def __init__(self, color='gray'):

super(ResNet, self).__init__()

if color == 'gray':

self.conv1 = nn.Conv2d(1, 32, kernel_size=3, stride=1, padding=1)

elif color == 'rgb':

self.conv1 = nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1)

self.resblock1 = ResidualBlock(32, 64)

self.resblock2 = ResidualBlock(64, 64)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.fc1 = nn.Linear(64, 64)

self.fc2 = nn.Linear(64, 10)

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.max_pool2d(x, 2, 2)

x = self.resblock1(x)

x = self.resblock2(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = F.relu(self.fc1(x))

x = self.fc2(x)

x = F.log_softmax(x, dim=1)

return xmodel = ResNet()

torchsummary.summary(model, (1, 28, 28))모델 학습

from torch.optim.lr_scheduler import ReduceLROnPlateauoptimizer = optim.Adam(model.parameters(), lr=0.03)

scheduler = ReduceLROnPlateau(optimizer, mode='min', verbose=True)def train_loop(dataloader, model, loss_fn, optimizer, scheduler, epoch):

model.train()

size = len(dataloader)

for batch, (x, y) in enumerate(dataloader):

x, y = x.to(device), y.to(device)

pred = model(x)

loss = loss_fn(pred, y)

optimizer.zero_grad()

loss.backward()

optimizer.step()

if batch % 100 == 0:

loss = loss.item()

print(f'Epoch {epoch} : [{batch}/{size}] loss : {loss.item()}')

scheduler.step(loss)

return loss.item()for epoch in range(10):

loss = train_loop(train_loader, model, F.nll_loss, optimizer, scheduler, epoch)

print(f'Epoch : {epoch} loss : {loss}')모델 저장

# 1. weight만 저장

torch.save(model.state_dict(), 'model_weights.pth')

model.load_state_dict(torch.load('model_weights.pth'))

# 2. 구조도 함께 저장

torch.save(model, 'model.pth')

model = torch.load('model.pth')데이터

from torchvision import datasets, transforms

import ostrain_dir = '../../deep_learning_team3/data/Car_Brand_Logos/Train/'

test_dir = '../../deep_learning_team3/data/Car_Brand_Logos/Test/'

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

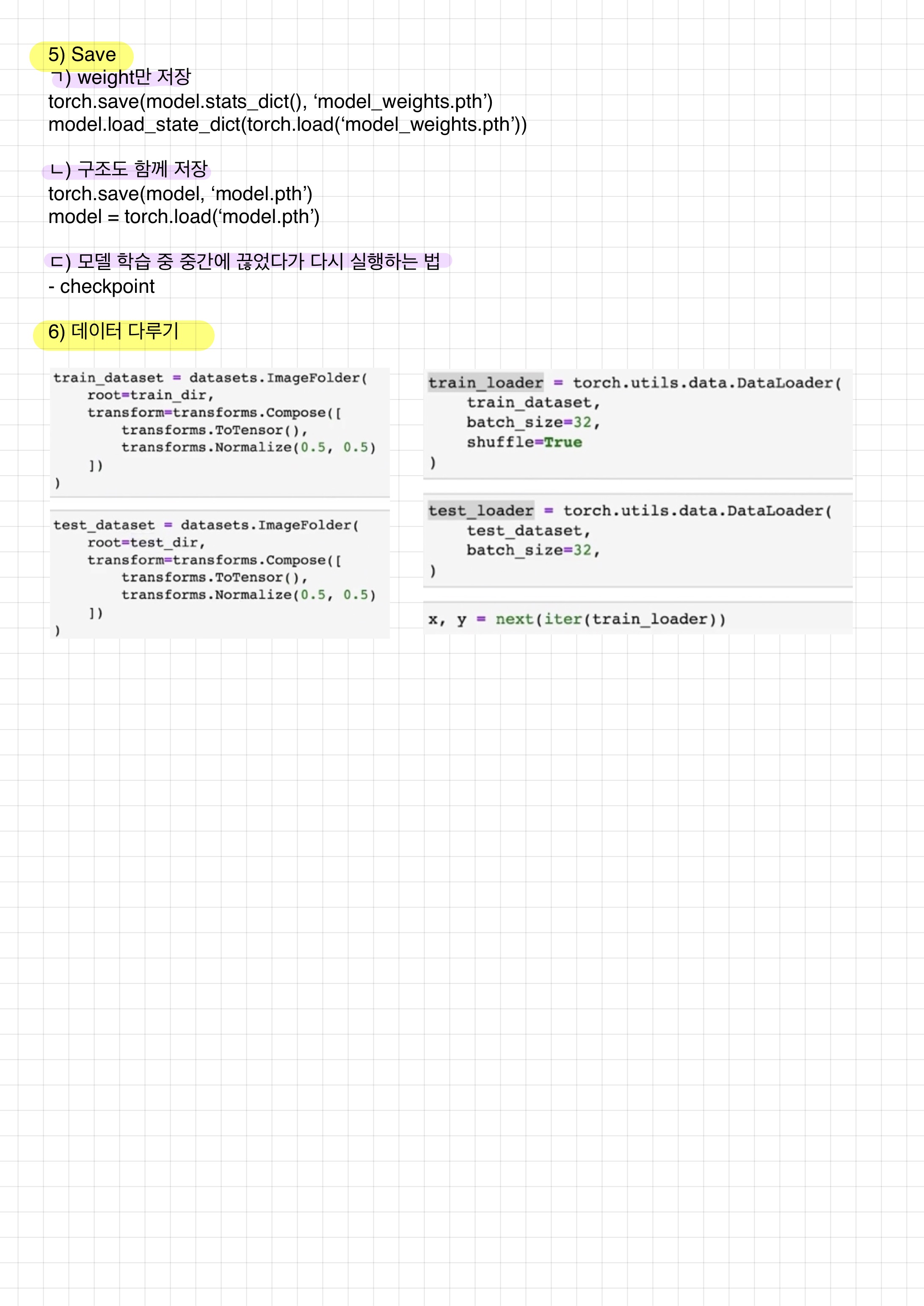

os.listdir(train_dir + 'hyundai')train_dataset = datasets.ImageFolder(

root=train_dir,

transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(0.5, 0.5)

])

)

test_dataset = datasets.ImageFolder(

root=test_dir,

transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize(0.5, 0.5)

])

)

train_loader = torch.utils.data.DataLoader(

train_dataset,

batch_size=32,

shuffle=True

)

test_loader = torch.utils.data.DataLoader(

test_dataset,

batch_size=32

)

x.shape, y.shape데이터셋 구현

train_paths = glob(train_dir + '/*.png')

test_paths = glob(test_dir + '/*.png')class Dataset(torch.utils.data.Dataset):

def __init__(self, data_paths, transform=None):

super(Dataset).__init__()

self.data_paths = data_paths

self.transform = transform

def __len__(self,):

return len(self.data_paths)

def __getitem__(self, idx):

path = self.data_paths(idx)

image = Image.open(path)

label_name = path.split('.png')[0].split('_')[-1].strip()

label = label_list.index(label_name)

if self.transform:

image = self.transform(image)

return image, label

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

batch_size = 32train_loader = torch.utils.data.DataLoader(

Dataset(train_paths, transform=transforms.ToTensor()),

batch_size=32,

shuffle=True

)

test_loader = torch.utils.data.DataLoader(

Dataset(test_paths, transform=transforms.ToTensor()),

batch_size=32

)

x, y = next(iter(train_loader))

x.shape, y.shape