Server information

- Server specs

| CPU | Mem | Disk | OS |
|---|---|---|---|
| 4 cores | 8 GB | 50 GB | Ubuntu 22.04 |

- Network

| Node Name | Host Name | Private IP |
|---|---|---|
| Master | master01 | 10.101.0.4 |
| Worker1 | worker01 | 10.101.0.14 |
| Worker2 | worker02 | 10.101.0.16 |

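Preliminary setup

- Set the hostname

Connect to each server and set its hostname to the matching value from the network table.

The new hostname appears in the prompt only after you reconnect the terminal.

hostnamectl set-hostname --static master01   # use worker01 or worker02 on the worker nodes

- Disable swap

Swap must be disabled for kubelet to run with its default settings.

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab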
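Before editing the real /etc/fstab, it can help to see what the sed expression does. The sketch below runs the same expression against a throwaway file; the two fstab entries in it are made-up sample lines, not taken from the servers in this post.

```shell
# Work on a throwaway copy so the real /etc/fstab is never touched.
# The two entries below are hypothetical sample lines.
sample_fstab="$(mktemp)"
cat > "$sample_fstab" <<'EOF'
UUID=1111-aaaa / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF

# Same expression as in the step above: prefix every line containing " swap " with "#".
sed -i '/ swap / s/^\(.*\)$/#\1/g' "$sample_fstab"

cat "$sample_fstab"
```

Only the swap entry should come back commented out. Note that running the expression a second time adds another `#` to lines that are already commented, so it is best run once.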

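# swapoff -a takes effect immediately; the fstab edit keeps swap disabled after a reboot.
# Reboot once to confirm that the setting persists.

reboot   # or: init 6

- Firewall settings

Stop and disable the default firewall service.

systemctl stop ufw

systemctl disable ufw

- Add a regular user account

The rest of the work is done from a regular account with sudo privileges, so add one as follows.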

root@master01:~# adduser mimic1995 # replace with the account name you want to use

Adding user `mimic1995' ...

Adding new group `mimic1995' (1001) ...

Adding new user `mimic1995' (1001) with group `mimic1995' ...

Creating home directory `/home/mimic1995' ...

Copying files from `/etc/skel' ...

New password: # enter a password

Retype new password: # re-enter the password

passwd: password updated successfully

Changing the user information for mimic1995

Enter the new value, or press ENTER for the default

Full Name []: # Enter

Room Number []: # Enter

Work Phone []: # Enter

Home Phone []: # Enter

Other []: # Enter

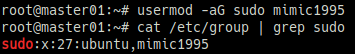

Is the information correct? [Y/n] Y # Y 입력이제 생성한 계정에 sudo 권한을 부여한다.

usermod -aG sudo mimic1995

cat /etc/group | grep sudo위 이미지와 같이 sudo 그룹에 생성한 일반 계정 명이 포함되어 있으면 된다.

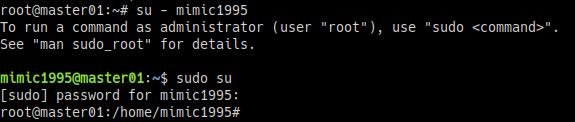

한 번 더 확인하고자 생성한 일반 계정으로 접속하여 sudo 명령어를 테스트해본다.

su - mimic1995

sudo su위 이미지와 같이 root 로그인이 되면 계정 설정은 완료되었다.
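The group check can also be scripted instead of read off the screen. This is a minimal sketch; `in_group` is a hypothetical helper name introduced here, and mimic1995 is the example account from this post.

```shell
# Succeeds when user $1 belongs to group $2 (helper name is hypothetical).
in_group() {
    id -nG "$1" | tr ' ' '\n' | grep -qx "$2"
}

# Check the example account; print a hint either way instead of failing.
if in_group mimic1995 sudo 2>/dev/null; then
    echo "mimic1995 is in the sudo group"
else
    echo "mimic1995 is not in the sudo group (or does not exist on this machine)"
fi
```

Group membership granted with usermod applies to new login sessions, so run the check from a fresh login of that account.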

The actual installation starts below. Run it from a terminal session logged in as the new regular account.

Container runtime setup (run on all nodes)

- Using Docker Repository

sudo apt update

sudo apt install -y ca-certificates curl gnupg lsb-release

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

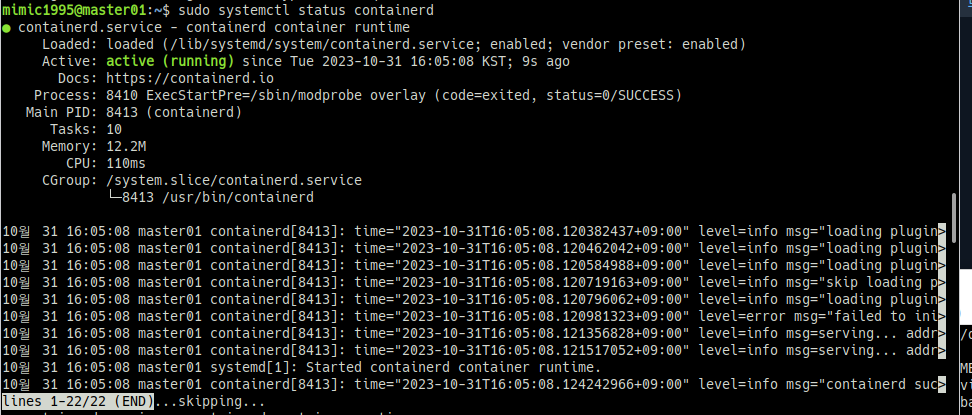

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list

- Install Containerd

sudo apt update

sudo apt install -y containerd.io

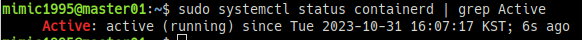

sudo systemctl status containerd

- Containerd configuration for Kubernetes

cat <<EOF | sudo tee -a /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true

EOF

sudo sed -i 's/^disabled_plugins \=/\#disabled_plugins \=/g' /etc/containerd/config.toml
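The two edits above can be rehearsed on a scratch file before touching /etc/containerd/config.toml. The sketch assumes the packaged config contains a `disabled_plugins = ["cri"]` line, which is what the sed expression targets; the scratch file contents are illustrative only.

```shell
# Scratch file standing in for /etc/containerd/config.toml (contents are an assumption).
sample_conf="$(mktemp)"
printf '%s\n' 'disabled_plugins = ["cri"]' > "$sample_conf"

# Append the runc cgroup settings, as the heredoc with tee -a does in the step above.
cat >> "$sample_conf" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true
EOF

# Comment out disabled_plugins so the CRI plugin is no longer disabled.
sed -i 's/^disabled_plugins \=/\#disabled_plugins \=/g' "$sample_conf"

cat "$sample_conf"
```

Because the heredoc appends, running it twice against the real file would define the same table twice, so it is worth checking the file before repeating the step.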

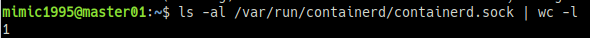

sudo systemctl restart containerd

To confirm that containerd is running normally, check the daemon status and the socket.

sudo systemctl status containerd | grep Active

ls -al /var/run/containerd/containerd.sock | wc -l

Reference URL

https://www.itzgeek.com/how-tos/linux/ubuntu-how-tos/install-containerd-on-ubuntu-22-04.html

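Installing kubeadm, kubelet, and kubectl (run on all nodes)

- Package descriptions

kubeadm: the cluster bootstrap command; it initializes and manages the cluster.

kubelet: the component that runs on every machine in the cluster and performs tasks such as starting pods and containers; it runs as a daemon and manages containers.

kubectl: the command-line utility for communicating with the cluster; client only.

Reference URL

https://kubernetes.io/ko/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

- Install 1.30.x (updated 2024/07/29)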

cat <<EOF > kube_install.sh

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl gnupg

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

sudo chmod 644 /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo chmod 644 /etc/apt/sources.list.d/kubernetes.list

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

EOF

sudo sh kube_install.sh

Reference URL

https://kubernetes.io/docs/tasks/tools/install-kubectl-linux/

- Install 1.28.x

cat <<EOF > kube_install.sh

# 1. Update the apt package index and install the packages needed to use the Kubernetes apt repository.

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

# 2. Download the public signing key.

# The Google-hosted URL is blocked.

# sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://dl.k8s.io/apt/doc/apt-key.gpg

# 3. Add the Kubernetes apt repository.

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# 4. Update the apt package index, install kubelet, kubeadm, and kubectl, and pin their versions.

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

EOF

sudo sh kube_install.sh

If the script above fails with 404 Not Found (the legacy apt.kubernetes.io repository is no longer served), retry with the script below.

sudo rm -f /etc/apt/keyrings/kubernetes-archive-keyring.gpgcat <<EOF > kube_install.sh

# 1. apt 패키지 색인을 업데이트하고, 쿠버네티스 apt 리포지터리를 사용하는 데 필요한 패키지를 설치한다.

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl

# 2. 구글 클라우드의 공개 사이닝 키를 다운로드 한다.

curl -fsSL https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-archive-keyring.gpg

# 3. 쿠버네티스 apt 리포지터리를 추가한다.

echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /" | sudo tee /etc/apt/sources.list.d/kubernetes.list

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

# 4. apt 패키지 색인을 업데이트하고, kubelet, kubeadm, kubectl을 설치하고 해당 버전을 고정한다.

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

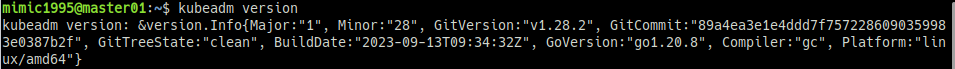

EOFkubeadm 버전을 확인한다.

kubeadm version- netfilter bridge 설정

sudo -i

modprobe br_netfilter

echo 1 > /proc/sys/net/ipv4/ip_forward

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

exit터미널을 완전히 접속 종료하고 재접속한다.

(su - 로 다른 계정을 통해 접속한 거라면 해당 터미널 창을 완전히 종료하고 난 뒤에 재접속해야 다음 초기화 명령어가 정상동작함)

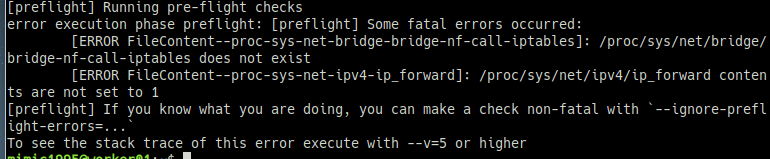

이런 에러가 발생한다면 netfilter 설정이 제대로 되지 않은 것이므로 재확인해봐야 한다.

설정이 잘 되지 않는다면 그냥 root로 터미널 접근하여 modprobe ~ 부터 ~call-iptables까지 세 줄을 그냥 치고 로그아웃하고 재접속 하는 것도 방법이다.
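To recheck the netfilter settings without waiting for kubeadm to complain, the two flag files can be inspected directly. This is a minimal sketch; `check_flag` is a hypothetical helper name introduced here, and the two paths are the ones the echo commands above write to.

```shell
# Report whether a /proc/sys-style flag file exists and contains 1.
# Prints "ok", "missing", or "not set", followed by the path.
check_flag() {
    flag_file="$1"
    if [ ! -r "$flag_file" ]; then
        echo "missing $flag_file"
        return 1
    fi
    if [ "$(cat "$flag_file")" = "1" ]; then
        echo "ok $flag_file"
    else
        echo "not set $flag_file"
        return 1
    fi
}

# Inspect the two files written in the netfilter step; keep going even if one fails.
for f in /proc/sys/net/ipv4/ip_forward /proc/sys/net/bridge/bridge-nf-call-iptables; do
    check_flag "$f" || true
done
```

A "missing" result for bridge-nf-call-iptables suggests the br_netfilter module is not loaded, while "not set" means the file exists but does not contain 1.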

Master Node 세팅

- 마스터 노드 초기화

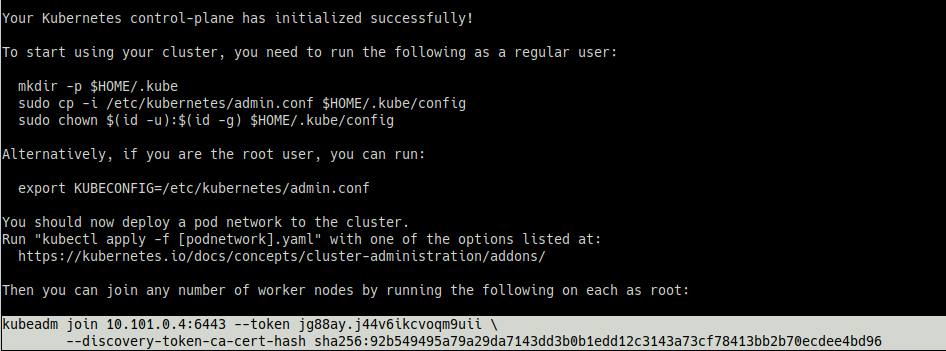

sudo kubeadm initmimic1995@master01:~$ sudo kubeadm init [sudo] password for mimic1995: [init] Using Kubernetes version: v1.28.3 [preflight] Running pre-flight checks [preflight] Pulling images required for setting up a Kubernetes cluster [preflight] This might take a minute or two, depending on the speed of your internet connection [preflight] You can also perform this action in beforehand using 'kubeadm config images pull' W1031 16:35:18.302193 1263 checks.go:835] detected that the sandbox image "registry.k8s.io/pause:3.6" of the container runtime is inconsistent with that used by kubeadm. It is recommended that using "registry.k8s.io/pause:3.9" as the CRI sandbox image. [certs] Using certificateDir folder "/etc/kubernetes/pki" [certs] Generating "ca" certificate and key [certs] Generating "apiserver" certificate and key [certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master01] and IPs [10.96.0.1 10.101.0.4] [certs] Generating "apiserver-kubelet-client" certificate and key [certs] Generating "front-proxy-ca" certificate and key [certs] Generating "front-proxy-client" certificate and key [certs] Generating "etcd/ca" certificate and key [certs] Generating "etcd/server" certificate and key [certs] etcd/server serving cert is signed for DNS names [localhost master01] and IPs [10.101.0.4 127.0.0.1 ::1] [certs] Generating "etcd/peer" certificate and key [certs] etcd/peer serving cert is signed for DNS names [localhost master01] and IPs [10.101.0.4 127.0.0.1 ::1] [certs] Generating "etcd/healthcheck-client" certificate and key [certs] Generating "apiserver-etcd-client" certificate and key [certs] Generating "sa" key and public key [kubeconfig] Using kubeconfig folder "/etc/kubernetes" [kubeconfig] Writing "admin.conf" kubeconfig file [kubeconfig] Writing "kubelet.conf" kubeconfig file [kubeconfig] Writing "controller-manager.conf" kubeconfig file [kubeconfig] Writing 
"scheduler.conf" kubeconfig file [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests" [control-plane] Using manifest folder "/etc/kubernetes/manifests" [control-plane] Creating static Pod manifest for "kube-apiserver" [control-plane] Creating static Pod manifest for "kube-controller-manager" [control-plane] Creating static Pod manifest for "kube-scheduler" [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env" [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml" [kubelet-start] Starting the kubelet [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s [apiclient] All control plane components are healthy after 9.005218 seconds [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace [kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster [upload-certs] Skipping phase. 
Please see --upload-certs [mark-control-plane] Marking the node master01 as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers] [mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule] [bootstrap-token] Using token: jg88ay.j44v6ikcvoqm9uii [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes [bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials [bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token [bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key [addons] Applied essential addon: CoreDNS [addons] Applied essential addon: kube-proxy Your Kubernetes control-plane has initialized successfully! To start using your cluster, you need to run the following as a regular user: mkdir -p $HOME/.kube sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config sudo chown $(id -u):$(id -g) $HOME/.kube/config Alternatively, if you are the root user, you can run: export KUBECONFIG=/etc/kubernetes/admin.conf You should now deploy a pod network to the cluster. 
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/ Then you can join any number of worker nodes by running the following on each as root: kubeadm join 10.101.0.4:6443 --token jg88ay.j44v6ikcvoqm9uii \ --discovery-token-ca-cert-hash sha256:92b549495a79a29da7143dd3b0b1edd12c3143a73cf78413bb2b70ecdee4bd96

5분 내외로 완료되며, success 시 아래 문구가 출력된다. 마지막에 출력되는 kubeadm join~ 구문은 워커 노드를 연동시킬 때 사용되는 토큰(Token) 정보와 해시(hash) 값이 포함되어 있으므로 별도로 메모해두었다가 워커 노드 설정 후 연동하는 단계에서 사용한다.

# kubeadm join~ 부분 복사

echo "kubeadmin join~(끝까지 입력)" > join_command.txt- kubeadm init 에러 발생 1

I0729 15:59:25.556083 11609 version.go:256] remote version is much newer: v1.30.3; falling back to: stable-1.28

[init] Using Kubernetes version: v1.28.12

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR DirAvailable--var-lib-etcd]: /var/lib/etcd is not empty

[preflight] If you know what you are doing, you can make a check non-fatal with --ignore-preflight-errors=...

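To see the stack trace of this error execute with --v=5 or higher

This usually means an earlier initialization left data in /var/lib/etcd. Clear the directory and run kubeadm init again.

sudo rm -rf /var/lib/etcd

sudo mkdir -p /var/lib/etcd

sudo chown -R $(whoami):$(whoami) /var/lib/etcd

- kubeadm init error 2

[ERROR Port-10250]: Port 10250 is in use

Port 10250 is the kubelet port. Find the process that holds it and stop it.

sudo lsof -i :10250

sudo kill -9 <PID>

- kubeadm init error 3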

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables does not exist

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

sudo modprobe br_netfilter

echo 'br_netfilter' | sudo tee /etc/modules-load.d/br_netfilter.conf

echo 'net.bridge.bridge-nf-call-iptables = 1' | sudo tee /etc/sysctl.d/99-kubernetes.conf

sudo sysctl --system

echo 'net.ipv4.ip_forward = 1' | sudo tee -a /etc/sysctl.conf

sudo sysctl -p

- Side notes on the join command

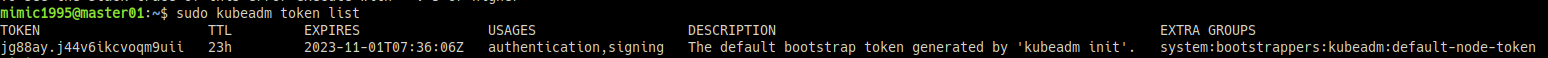

If you lose the token, you can look it up again with kubeadm. The expiry time is shown in the EXPIRES column.

sudo kubeadm token list

Tokens expire (after 24 hours by default), so if you run the join after the token has expired, create a new one.

# create a token only

sudo kubeadm token create

# create a token and print the full join command that uses it

sudo kubeadm token create --print-join-command

sudo kubeadm token list

The hash value can be computed with the command below.

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

The default format of the join command is as follows.

kubeadm join <Kubernetes API Server:PORT> --token <token> --discovery-token-ca-cert-hash sha256:<hash>

- .kube/config setup

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

If an error like the one below appears, register the config file through an environment variable.

# error output

E1101 11:17:08.556340 14581 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E1101 11:17:08.557231 14581 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E1101 11:17:08.558598 14581 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E1101 11:17:08.560351 14581 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

E1101 11:17:08.562372 14581 memcache.go:265] couldn't get current server API group list: Get "http://localhost:8080/api?timeout=32s": dial tcp 127.0.0.1:8080: connect: connection refused

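The connection to the server localhost:8080 was refused - did you specify the right host or port?

# temporary: set the environment variable for the current shell

export KUBECONFIG=$HOME/.kube/config

echo $KUBECONFIG

# permanent: set it for every new shell of this account

vi ~/.bashrc

# append the following at the bottom of the file

export KUBECONFIG=$HOME/.kube/config

:wq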
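As an alternative to editing ~/.bashrc in vi, the export line can be appended non-interactively. The sketch below is one way to do that without creating duplicates on repeated runs; `append_once` is a hypothetical helper name, and the demo writes to a scratch file rather than to the real ~/.bashrc.

```shell
# Append a line to a file only if that exact line is not already present.
append_once() {
    line="$1"
    target="$2"
    grep -qxF -- "$line" "$target" 2>/dev/null || printf '%s\n' "$line" >> "$target"
}

# Demonstrated on a scratch file; pass ~/.bashrc as the target to apply it for real.
scratch_rc="$(mktemp)"
append_once 'export KUBECONFIG=$HOME/.kube/config' "$scratch_rc"
append_once 'export KUBECONFIG=$HOME/.kube/config' "$scratch_rc"   # second call is a no-op
cat "$scratch_rc"
```

The single quotes keep `$HOME` unexpanded in the file, so the variable is resolved each time the shell starts.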

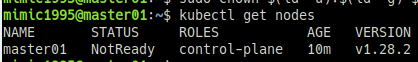

source ~/.bashrc

- Confirm the master appears in the node list

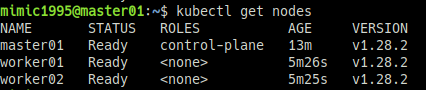

kubectl get nodesWorker Node join

- join command 입력 (워커 노드에서 입력)

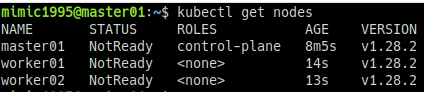

sudo kubeadm join ~- 마스터 노드에서 연동 확인 (마스터 노드에서 입력)

kubectl get nodes- init, join 관련 번외

혹시 잘못된 경우 아래 명령어로 초기 설정으로 원복할 수 있다.

sudo kubeadm resetCilium 설치

- 패키지 설명

cilium : Pod Network를 구축하는 CNI Plugin, 리눅스 BPF를 사용하고 각 클러스터 노드에서 파드 형태로 실행된다.

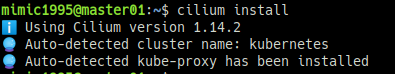

- cilium 설치 (마스터 노드에서 입력)

curl -LO https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-amd64.tar.gz

sudo tar xzvfC cilium-linux-amd64.tar.gz /usr/local/bin

cilium install

Reference URL

https://kubernetes.io/docs/tasks/administer-cluster/network-policy-provider/cilium-network-policy/
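The download step above hardcodes the amd64 archive. On other hardware the archive name differs, so a small helper can pick it from the machine architecture. This is a sketch only; `cilium_cli_url` is a hypothetical helper name, and it covers just the amd64 and arm64 archives.

```shell
# Map a machine architecture (as printed by `uname -m`) to the cilium-cli release archive URL.
cilium_cli_url() {
    case "$1" in
        x86_64)  cli_arch=amd64 ;;
        aarch64) cli_arch=arm64 ;;
        *) echo "unsupported architecture: $1" >&2; return 1 ;;
    esac
    echo "https://github.com/cilium/cilium-cli/releases/latest/download/cilium-linux-${cli_arch}.tar.gz"
}

# Print the URL for this machine; the result can be passed to curl -LO as in the install step.
cilium_cli_url "$(uname -m)" || true
```

On the servers in this post (x86_64) this yields the same URL that the install step uses.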

- Check status from the master node (run on the master node)

kubectl get nodes

Confirm that the STATUS column shows Ready.

Once the install completes, check the processes.

mimic1995@master01:~$ ps -ef | grep cilium

root 17635 17218 1 11:25 ? 00:00:03 cilium-agent --config-dir=/tmp/cilium/config-map

root 17943 17635 0 11:25 ? 00:00:00 cilium-health-responder --listen 4240 --pidfile /var/run/cilium/state/health-endpoint.pid

mimic19+ 18913 9422 0 11:30 pts/0 00:00:00 grep --color=auto cilium