2023.10.23

Today's agenda

- SIFT algorithm

- OpenCV Feature matching

- Homography

1. SIFT algorithm

When we look at an image, we notice corners, edges, and other distinctive features.

At a glance a region may look like a shape, but when we zoom in it may no longer be a shape at all; it may turn out to be just an edge or a line.

The SIFT algorithm is used to find one image inside another image. It detects feature points and matches them against the original image.

SIFT (Scale-Invariant Feature Transform) algorithm

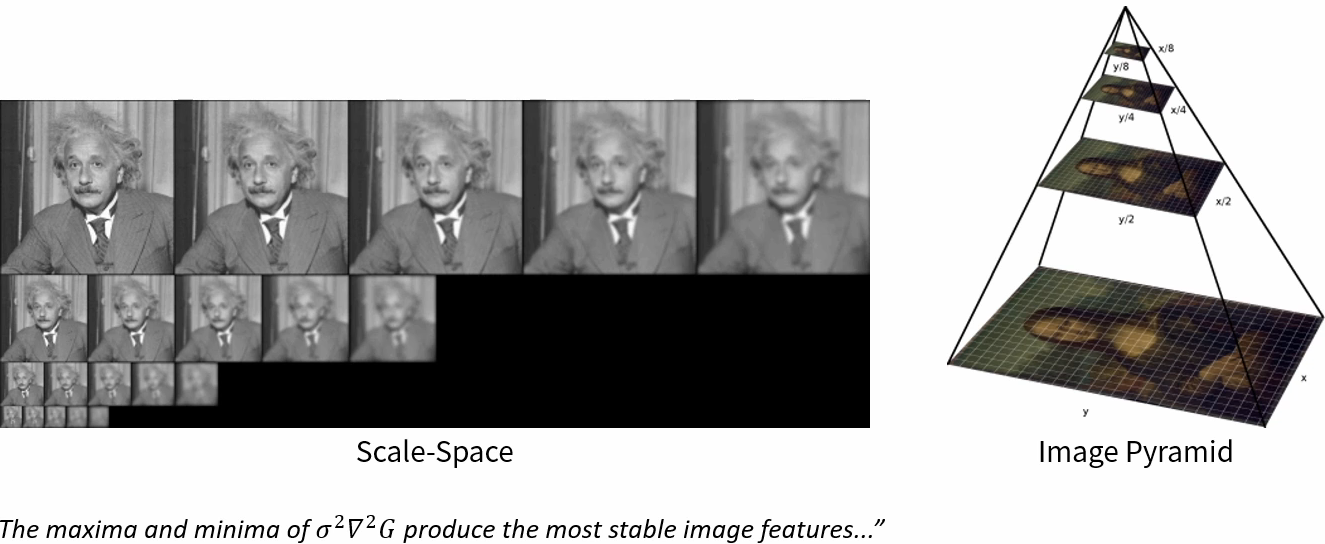

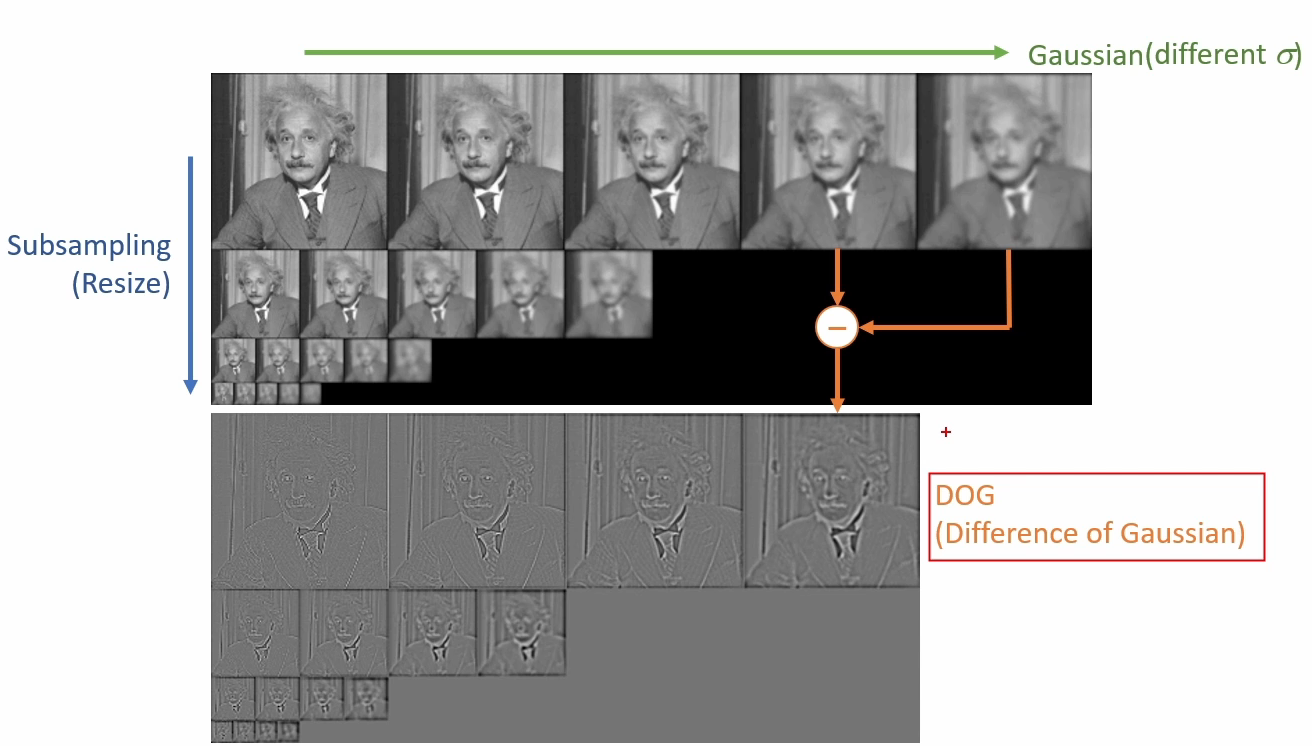

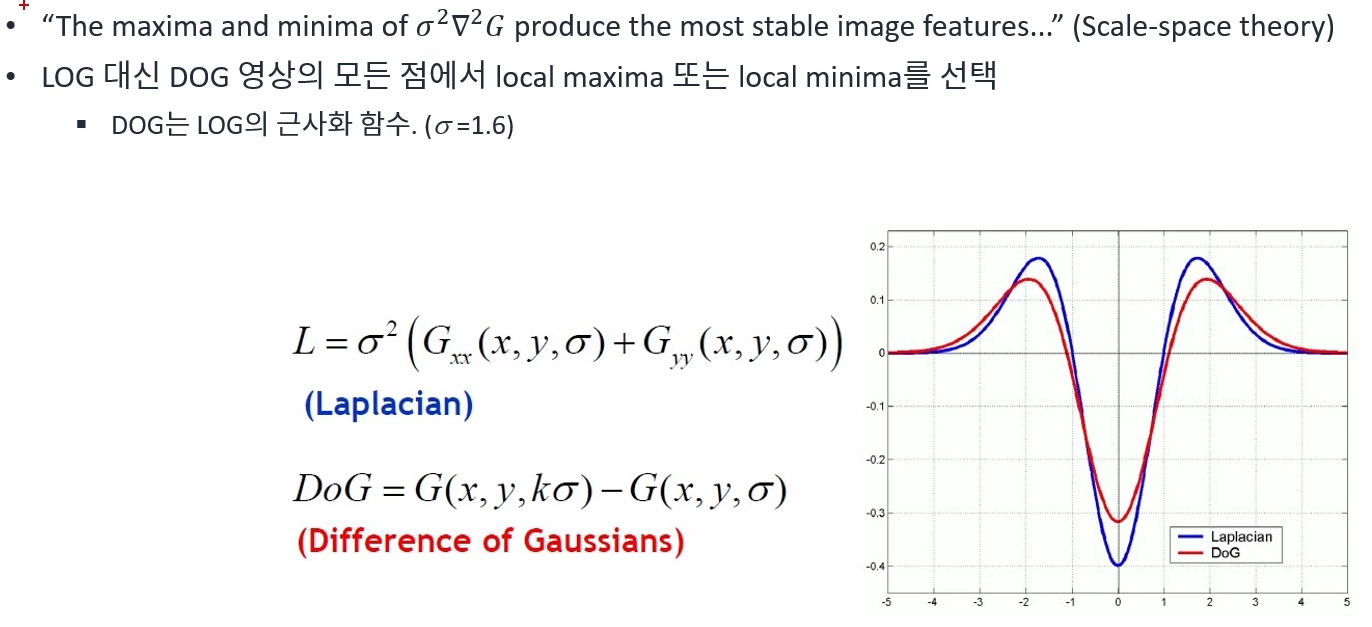

Using a scale space (image pyramid), we search for features at several scales. A shape or feature that keeps being detected across scales is taken as a feature point.

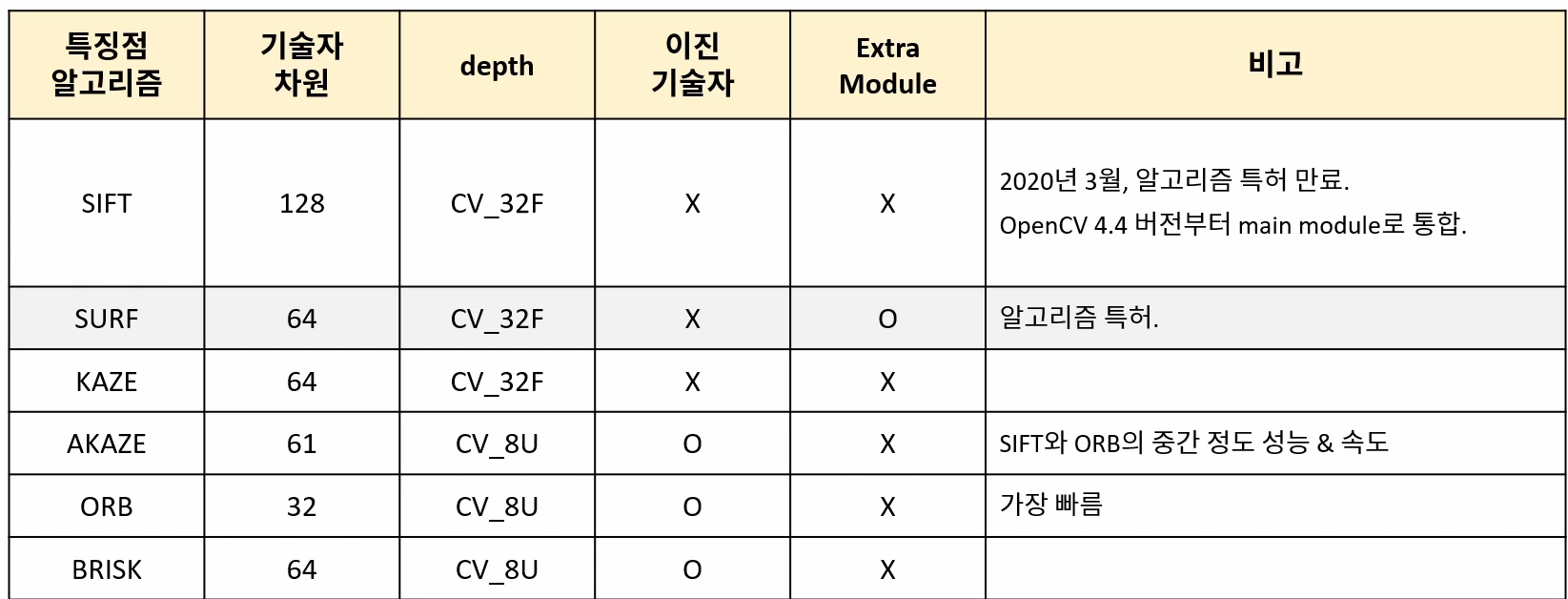
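As a rough illustration of the scale-space idea, the sketch below lists the Gaussian blur levels of one octave. It assumes the common convention that an octave is split into a fixed number of intervals and that neighbouring levels differ by a constant factor k = 2^(1/intervals), so the blur doubles over one octave. The function name `octaveSigmas` is made up for this note, and the starting blur is a free parameter.

```cpp
#include <cmath>
#include <vector>

// Blur levels for one octave of a Gaussian scale space (illustrative sketch).
// sigma0:    blur of the first level
// intervals: number of steps after which the blur has doubled
std::vector<double> octaveSigmas(double sigma0, int intervals) {
    const double k = std::pow(2.0, 1.0 / intervals);  // ratio between neighbouring levels
    std::vector<double> sigmas;
    double sigma = sigma0;
    for (int i = 0; i <= intervals; ++i) {
        sigmas.push_back(sigma);
        sigma *= k;
    }
    return sigmas;  // the last entry is about 2 * sigma0, where the next octave would start
}
```

A feature that shows up at several neighbouring levels of this list is the kind of stable feature the notes describe.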

SIFT is one of several feature detection algorithms. Five common ones are SIFT, SURF, KAZE, AKAZE, and ORB.

Feature point = keypoint = interest point (three names for the same thing)

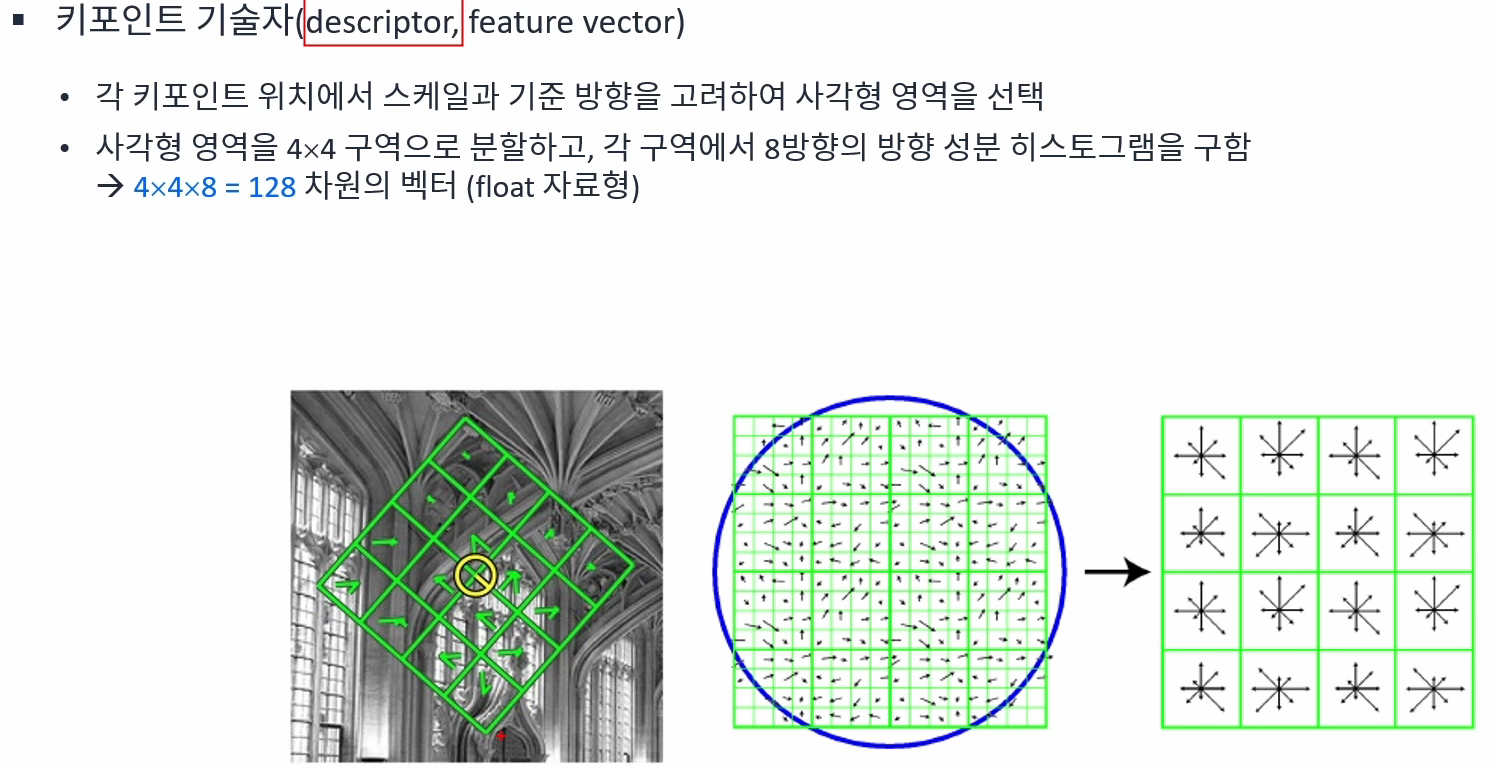

Descriptor = feature vector (a vector of numbers that describes the neighborhood of a keypoint)

SIFT order

Detector

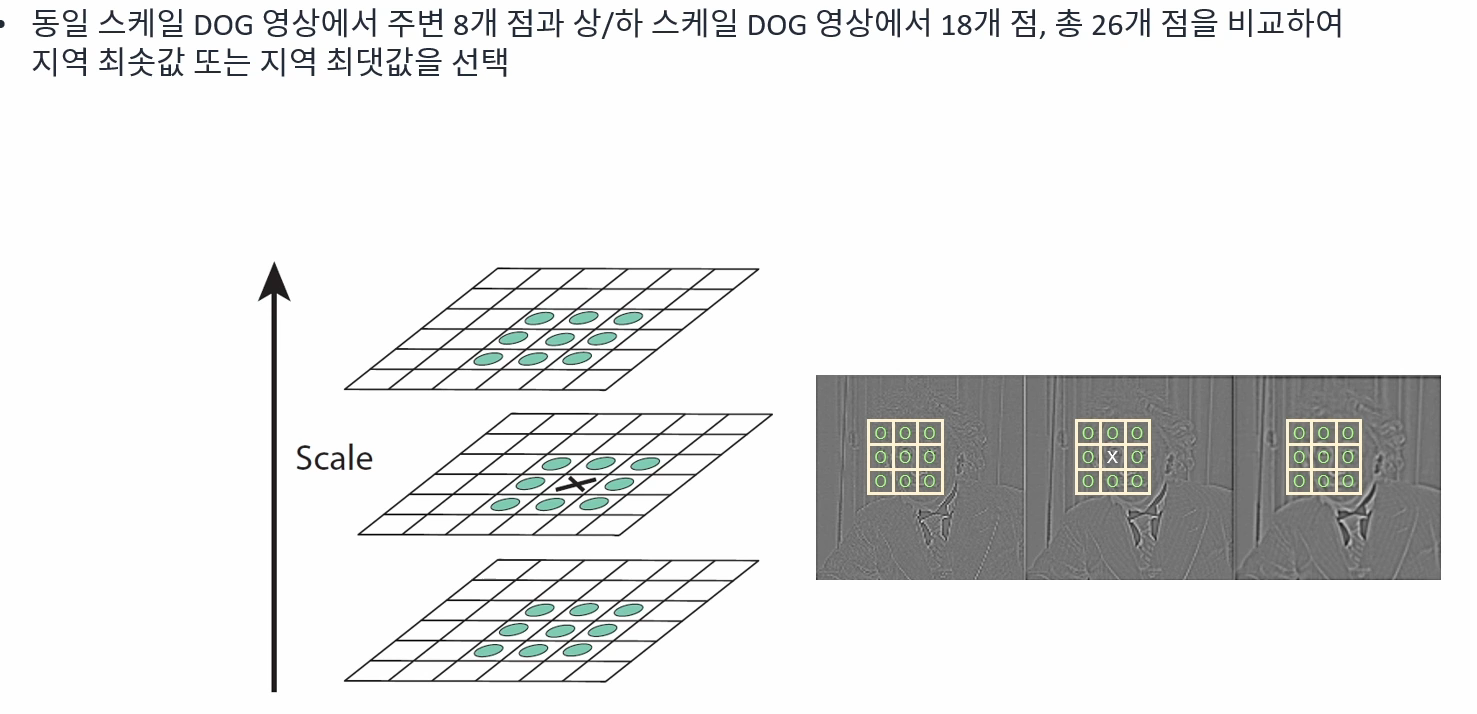

1. Scale-space extrema detection

2. Keypoint localization

Descriptor

3. Orientation assignment

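Extract a patch of the image around the keypoint to compute gradient data.

In the patch, compute the gradient at every pixel and build a histogram of the gradient directions (36 bins covering 360 degrees, so 10 degrees per bin). The strongest bin gives the orientation of the keypoint.

4. Keypoint description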
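The orientation assignment step can be sketched in plain C++ as follows. This is a simplified illustration, not the OpenCV implementation: it uses central differences for the gradient and weights each vote by gradient magnitude, and it leaves out the Gaussian weighting and peak interpolation that a full SIFT implementation applies. `Patch`, `orientationHistogram`, and `dominantBin` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <vector>

// A square grayscale patch stored row by row (hypothetical helper type).
struct Patch {
    int size;                   // width == height
    std::vector<float> pixels;  // size * size values
    float at(int x, int y) const { return pixels[y * size + x]; }
};

// Build a 36-bin histogram of gradient directions (10 degrees per bin).
// Each interior pixel votes with its gradient magnitude.
std::array<float, 36> orientationHistogram(const Patch& p) {
    std::array<float, 36> hist{};
    const float kPi = 3.14159265358979f;
    for (int y = 1; y < p.size - 1; ++y) {
        for (int x = 1; x < p.size - 1; ++x) {
            float dx = p.at(x + 1, y) - p.at(x - 1, y);
            float dy = p.at(x, y + 1) - p.at(x, y - 1);
            float mag = std::sqrt(dx * dx + dy * dy);
            if (mag == 0.0f) continue;                      // flat region, no direction
            float deg = std::atan2(dy, dx) * 180.0f / kPi;  // range (-180, 180]
            if (deg < 0.0f) deg += 360.0f;                  // range [0, 360)
            int bin = static_cast<int>(deg / 10.0f) % 36;
            hist[bin] += mag;
        }
    }
    return hist;
}

// Index of the strongest bin; bin * 10 degrees approximates the dominant orientation.
int dominantBin(const std::array<float, 36>& hist) {
    int best = 0;
    for (int i = 1; i < 36; ++i)
        if (hist[i] > hist[best]) best = i;
    return best;
}
```

Because the descriptor is later computed relative to this dominant orientation, the same keypoint gives a similar descriptor even when the image is rotated.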

2. OpenCV Feature matching

virtual void Feature2D::detect(InputArray image, std::vector<KeyPoint>& keypoints, InputArray mask = noArray());

parameters

- image : input image

- keypoints : detected keypoints (output), returned as a std::vector<KeyPoint>

- mask : mask image

void drawKeypoints(InputArray image, const std::vector<KeyPoint>& keypoints, InputOutputArray outImage, const Scalar& color = Scalar::all(-1), int flags = DrawMatchesFlags::DEFAULT);

parameters

- image : input image

- keypoints : keypoints detected in the input image

- outImage : output image

- color : color of the keypoints (Scalar::all(-1) draws each keypoint in a random color)

- flags : how the keypoints are drawn

  - DrawMatchesFlags::DEFAULT : draws only a small circle at each keypoint

  - DrawMatchesFlags::DRAW_RICH_KEYPOINTS : draws a circle showing each keypoint's size and orientation

virtual void Feature2D::detectAndCompute(InputArray image, InputArray mask, std::vector<KeyPoint>& keypoints, OutputArray descriptors, bool useProvidedKeypoints = false);

parameters

- image : input image

- mask : mask image

- keypoints : detected keypoints

- descriptors : descriptors computed for the keypoints

- useProvidedKeypoints : if true, skip detection and compute descriptors for the keypoints passed in

void DescriptorMatcher::match(InputArray queryDescriptors, InputArray trainDescriptors, std::vector<DMatch>& matches, InputArray mask = noArray()) const;

- queryDescriptors : query descriptors (the descriptors we want to find matches for)

- trainDescriptors : train descriptors (the descriptors that are searched)

- matches : matching result, the best match for each query descriptor

- mask : mask that restricts which query and train descriptors may be matched
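To make the idea behind `match` concrete, here is a minimal brute-force sketch in plain C++: for every query descriptor it finds the closest train descriptor by Euclidean distance. It is an illustration of the concept only, with no mask support. `Descriptor`, `Match`, `l2Distance`, and `bruteForceMatch` are hypothetical names; `Match` just mirrors the `queryIdx`, `trainIdx`, and `distance` fields of `DMatch`.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

using Descriptor = std::vector<float>;

// Hypothetical stand-in for cv::DMatch, keeping only the fields used here.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const Descriptor& a, const Descriptor& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// For every query descriptor, find the closest train descriptor.
std::vector<Match> bruteForceMatch(const std::vector<Descriptor>& query,
                                   const std::vector<Descriptor>& train) {
    std::vector<Match> matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        Match best{static_cast<int>(q), -1, std::numeric_limits<float>::max()};
        for (std::size_t t = 0; t < train.size(); ++t) {
            float d = l2Distance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        if (best.trainIdx >= 0) matches.push_back(best);  // skipped if train is empty
    }
    return matches;
}
```

A smaller `distance` means a more similar pair, which is why sorting the matches by distance puts the most reliable ones first.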

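void DescriptorMatcher::knnMatch(InputArray queryDescriptors, InputArray trainDescriptors, std::vector<std::vector<DMatch>>& matches, int k, InputArray mask = noArray(), bool compactResult = false) const;

- queryDescriptors : query descriptors (the descriptors we want to find matches for)

- trainDescriptors : train descriptors (the descriptors that are searched)

- matches : matching result, up to k matches for each query descriptor

- k : number of best matches to return for each query descriptor

- mask : mask that restricts which query and train descriptors may be matched

- compactResult : only relevant when mask is not empty; if true, query descriptors that are completely masked out get no entry in matches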
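`knnMatch` with k = 2 is often combined with a ratio test: a match is kept only when the best candidate is clearly closer than the second-best one. The sketch below shows that filter on plain distance pairs. `TwoBest` and `ratioTest` are hypothetical names, and the threshold is left as a parameter (the example code in these notes uses 0.7).

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Distances of the best and second-best candidate for one query descriptor,
// i.e. what knnMatch with k = 2 would provide for that query.
using TwoBest = std::pair<float, float>;

// Return the indices of queries whose best match is clearly better than the runner-up.
std::vector<std::size_t> ratioTest(const std::vector<TwoBest>& candidates, float maxRatio) {
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        float best = candidates[i].first;
        float second = candidates[i].second;
        // Written as a product so a zero second-best distance cannot divide by zero.
        if (best < maxRatio * second) kept.push_back(i);
    }
    return kept;
}
```

If the two best candidates are almost equally close, the match is ambiguous and is dropped, which tends to remove matches in repetitive regions of the image.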

void drawMatches(InputArray img1, const std::vector<KeyPoint>& keypoints1, InputArray img2, const std::vector<KeyPoint>& keypoints2, const std::vector<DMatch>& matches1to2, InputOutputArray outImg, const Scalar& matchColor = Scalar::all(-1), const Scalar& singlePointColor = Scalar::all(-1), const std::vector<char>& matchesMask = std::vector<char>(), int flags = DrawMatchesFlags::DEFAULT);

Creates outImg by placing img1 and img2 side by side and drawing a line between each pair of matched keypoints, using keypoints1 from img1, keypoints2 from img2, and the matching result matches1to2.

Example code

#include <iostream>

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

int main(){

// Load the first image as grayscale

Mat src1 = imread("lenna.bmp", IMREAD_GRAYSCALE);

if(src1.empty()){

cerr << "image load failed" << "\n";

return -1;

}

Mat src2;

resize(src1, src2, Size(), 0.8, 0.8);

// Rotate the resized copy by 10 degrees around its center

Point cp(src2.cols / 2, src2.rows / 2);

Mat rot = getRotationMatrix2D(cp, 10, 1);

warpAffine(src2, src2, rot, Size());

Ptr<Feature2D> detector = SIFT::create();

vector<KeyPoint> kp1, kp2;

Mat desc1, desc2; // descriptors for src1 and src2

detector->detectAndCompute(src1, Mat(), kp1, desc1);

detector->detectAndCompute(src2, Mat(), kp2, desc2);

auto matcher = BFMatcher::create();

#if 1

vector<DMatch> matches;

matcher->match(desc1, desc2, matches);

std::sort(matches.begin(), matches.end());

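// Keep the 80 matches with the smallest distance (or all of them if there are fewer)

vector<DMatch> good_matches(matches.begin(), matches.begin() + std::min<size_t>(80, matches.size()));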

#else

vector<vector<DMatch>> matches;

matcher->knnMatch(desc1, desc2, matches, 2);

vector<DMatch> good_matches;

for(auto& m : matches){

if(m[0].distance / m[1].distance < 0.7)

good_matches.emplace_back(m[0]);

}

#endif

Mat dst;

drawMatches(src1, kp1, src2, kp2, good_matches, dst);

imshow("dst", dst);

waitKey();

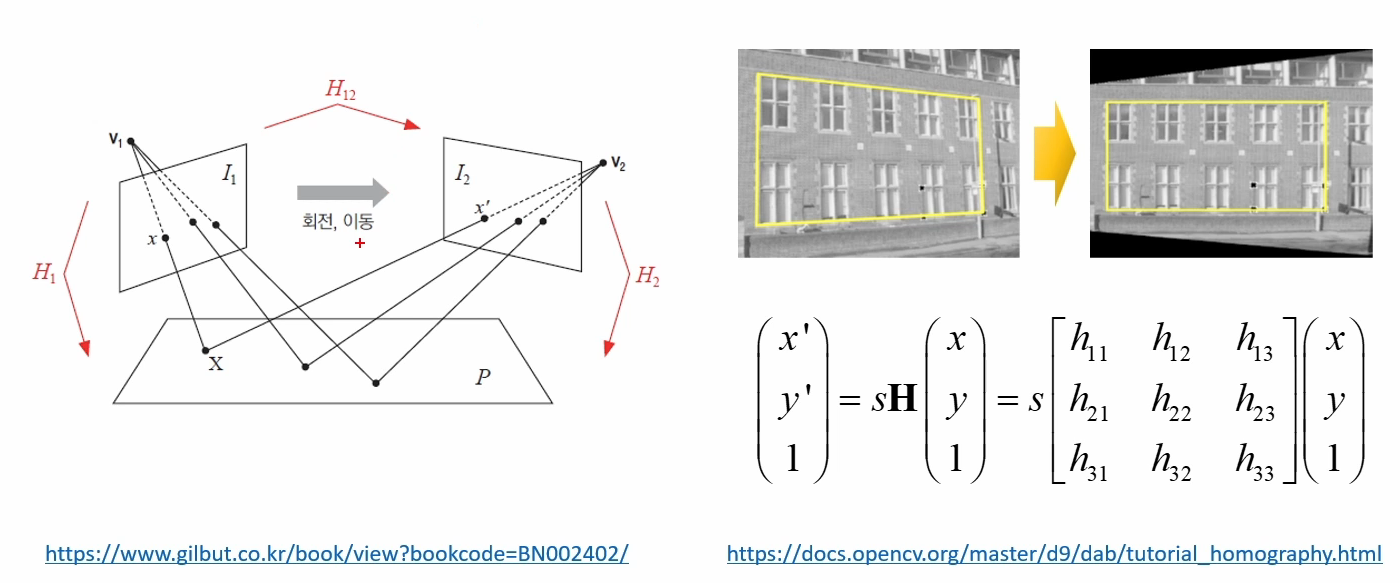

} 3. Homography

A homography is a perspective transform between two images of the same plane. If an image was taken from a tilted viewpoint, the homography can be used to warp it into a front-facing view.
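A homography can be written as a 3x3 matrix H that acts on homogeneous coordinates. The sketch below applies such a matrix to a single point in plain C++. `Point2`, `Mat3`, and `applyHomography` are hypothetical names for this note; in OpenCV the matrix would typically be estimated with `findHomography` and applied to a whole image with `warpPerspective`.

```cpp
#include <array>

struct Point2 { double x, y; };                     // hypothetical point type
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major 3x3 homography

// Map a point through H using homogeneous coordinates:
// (x, y, 1) -> (x', y', w), then divide by w.
Point2 applyHomography(const Mat3& H, Point2 p) {
    double xh = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double yh = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w  = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    return {xh / w, yh / w};  // assumes w != 0
}
```

The division by w is what separates a perspective transform from an affine one: when the last row of H is (0, 0, 1), w is always 1 and the mapping reduces to an affine transform.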