Image dataset

How to turn a collection of images into training data (train_data)

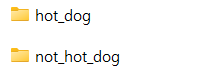

1. ImageFolder

- Use this when the images are already split into one subfolder per class
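
ImageFolder expects a layout like `root/<class_name>/<image file>`. Purely as an illustration, the stdlib-only sketch below mimics how it numbers the classes: the class folders are sorted by name and labeled from 0 upward (the real mapping is available as `train_dataset.class_to_idx`). The helper name `list_image_folder` and the short extension list are assumptions for this sketch, not torchvision's API, and unlike torchvision it does not descend into nested subfolders.

```python
import os

# Assumed subset of image extensions; torchvision accepts a longer list
IMG_EXTENSIONS = ('.jpg', '.jpeg', '.png', '.bmp')

def list_image_folder(root):
    """Hypothetical helper: return (samples, class_to_idx) for a root/<class>/<file> layout."""
    # Every subdirectory of root is one class; sorting makes the label order deterministic
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}

    samples = []
    for name in classes:
        class_dir = os.path.join(root, name)
        # Only files with an image extension become samples (non-recursive)
        for fname in sorted(os.listdir(class_dir)):
            if fname.lower().endswith(IMG_EXTENSIONS):
                samples.append((os.path.join(class_dir, fname), class_to_idx[name]))
    return samples, class_to_idx
```

With folders `cat/` and `dog/`, `cat` gets label 0 and `dog` gets label 1 because of the alphabetical sort, not because of the order in which the folders were created.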

```python
import torch
from torchvision import transforms, datasets

# Define the transform: resize, random flips, tensor conversion, ImageNet mean/std normalization
train_transform = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.RandomHorizontalFlip(),
    transforms.RandomVerticalFlip(),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406],
                         std=[0.229, 0.224, 0.225]),
])

# Build the Dataset: each subfolder of root is one class,
# and labels are assigned in sorted order of the folder names
train_dataset = datasets.ImageFolder(
    root=r'../chap08/data/archive/train',  # root is the directory that contains the class folders
    transform=train_transform,
)

# DataLoader
train_dataloader = torch.utils.data.DataLoader(
    train_dataset, batch_size=32, shuffle=True,
)
```

2. os.listdir

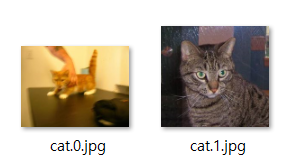

- Use this when images of different classes are mixed together in one folder

- Typically used when the label is embedded in the file name, or when a separate file (e.g. a CSV) maps each image file name to its label
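
For the case where labels live in a separate mapping file rather than in the file name, the (path, label) list can be built straight from that file. A minimal sketch, assuming a CSV with a header row and the hypothetical columns `filename` and `label`; the helper name `read_label_csv` is also made up for illustration.

```python
import csv
import os

def read_label_csv(csv_path, image_dir, class_to_idx):
    """Hypothetical helper: turn each filename,label row into (full_path, int_label)."""
    samples = []
    with open(csv_path, newline='') as f:
        # Assumes the header columns are named 'filename' and 'label'
        for row in csv.DictReader(f):
            path = os.path.join(image_dir, row['filename'])
            samples.append((path, class_to_idx[row['label']]))
    return samples
```

The resulting list plays the same role as the list of file paths built in the code that follows, except that `__getitem__` would take the label from the list instead of parsing it out of the file name.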

```python
import os
import random

import cv2

cat_directory = r'../chap06/data/dogs-vs-cats/Cat/'
dog_directory = r'../chap06/data/dogs-vs-cats/Dog/'

# Collect the full path of every file in each folder
cat_images_filepaths = sorted([os.path.join(cat_directory, f) for f in os.listdir(cat_directory)])
dog_images_filepaths = sorted([os.path.join(dog_directory, f) for f in os.listdir(dog_directory)])
images_filepaths = [*cat_images_filepaths, *dog_images_filepaths]

# Keep only the files OpenCV can actually read (drops corrupt or non-image files)
correct_images_filepaths = [i for i in images_filepaths if cv2.imread(i) is not None]

# Shuffle with a fixed seed, then split: the first 400 for training,
# the last 10 for testing, and everything in between for validation
random.seed(42)
random.shuffle(correct_images_filepaths)
train_images_filepaths = correct_images_filepaths[:400]
val_images_filepaths = correct_images_filepaths[400:-10]
test_images_filepaths = correct_images_filepaths[-10:]

print(len(train_images_filepaths), len(val_images_filepaths), len(test_images_filepaths))
```
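
The slicing above yields 400 training files, 10 test files, and N - 410 validation files for N readable images. The same split as a small reusable function, as a sketch only: the name `split_filepaths` and its parameters are assumptions for illustration. It shuffles a copy with a local `random.Random(seed)`, which produces the same order as `random.seed(seed)` followed by `random.shuffle`, but leaves both the input list and the global random state untouched.

```python
import random

def split_filepaths(paths, n_train=400, n_test=10, seed=42):
    """Hypothetical helper: shuffle a copy of paths and split it into train/val/test lists."""
    if n_train + n_test > len(paths):
        raise ValueError('not enough files for the requested split')
    shuffled = list(paths)                  # copy, so the caller's list keeps its order
    random.Random(seed).shuffle(shuffled)   # local RNG, same sequence as random.seed(seed)
    end_val = len(shuffled) - n_test        # avoids the [-0:] pitfall when n_test == 0
    train = shuffled[:n_train]
    val = shuffled[n_train:end_val]         # everything between train and test
    test = shuffled[end_val:]
    return train, val, test
```

Computing the end index explicitly matters for `n_test=0`: `lst[-0:]` is the whole list, not an empty one.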

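```python
import os

from PIL import Image
from torch.utils.data import Dataset

# Dataset class: so far we only hold a list of file paths,
# so __getitem__ opens each image and derives its label from the file name
class DogvsCatDataset(Dataset):
    def __init__(self, file_list, transform=None, phase='train'):
        self.file_list = file_list
        self.transform = transform  # called as transform(img, phase)
        self.phase = phase

    def __len__(self):
        return len(self.file_list)

    def __getitem__(self, idx):
        img_path = self.file_list[idx]
        img = Image.open(img_path).convert('RGB')  # force 3 channels (some files may be grayscale or RGBA)
        img_transformed = self.transform(img, self.phase) if self.transform is not None else img

        # Assumes file names like 'dog.1234.jpg' / 'cat.1234.jpg': the part before the first '.' is the label
        label = os.path.basename(img_path).split('.')[0]
        if label == 'dog':
            label = 1
        elif label == 'cat':
            label = 0

        return img_transformed, label
```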
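
The if/elif chain above leaves the label as a string when a file name starts with neither `dog` nor `cat`, and that typically surfaces only later, when the DataLoader tries to batch the labels. A small alternative sketch with a lookup table that fails early; `LABELS` and `label_from_path` are hypothetical names, using the same 0/1 assignment as the class above.

```python
import os

# Hypothetical mapping from file-name prefix to class index (same values as DogvsCatDataset)
LABELS = {'cat': 0, 'dog': 1}

def label_from_path(img_path):
    """Return the integer label encoded in a file name like 'dog.1234.jpg'."""
    prefix = os.path.basename(img_path).split('.')[0]
    if prefix not in LABELS:
        raise ValueError(f'cannot infer label from {img_path!r}')
    return LABELS[prefix]
```

Inside `__getitem__`, the whole if/elif block could then become `label = label_from_path(img_path)`.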

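```python
from torch.utils.data import DataLoader

# ImageTransform, size, mean, std and batch_size are defined elsewhere (not shown in these notes)
train_dataset = DogvsCatDataset(train_images_filepaths, transform=ImageTransform(size, mean, std), phase='train')
train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
```

3. gz files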

- The Fashion-MNIST files are distributed gzip-compressed (.gz).
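
These files use the IDX format: a big-endian header (a 4-byte magic number, then one 4-byte size per dimension) followed by the raw uint8 values. That is where the offsets in the loading code come from: the label file has 1 dimension (4 + 4 = 8 bytes of header) and the image file has 3 (4 + 3 × 4 = 16 bytes). A stdlib-only sketch that reads the header instead of hard-coding the offset; `read_idx` is a hypothetical helper, and it only handles the unsigned-byte data type (code 0x08) used by (Fashion-)MNIST.

```python
import gzip
import struct

def read_idx(path):
    """Hypothetical helper: return (dims, raw_bytes) from a gzip-compressed IDX file."""
    with gzip.open(path, 'rb') as f:
        data = f.read()
    # Magic number: two zero bytes, a data-type code, then the number of dimensions
    zero1, zero2, dtype_code, ndim = struct.unpack('>BBBB', data[:4])
    if zero1 != 0 or zero2 != 0 or dtype_code != 0x08:
        raise ValueError('expected an unsigned-byte IDX file')
    # One big-endian 32-bit size per dimension follows the magic number
    dims = struct.unpack('>' + 'I' * ndim, data[4:4 + 4 * ndim])
    offset = 4 + 4 * ndim  # 8 for the label file, 16 for the image file
    return dims, data[offset:]
```

With NumPy, the raw bytes become an array via `np.frombuffer(raw, dtype=np.uint8).reshape(dims)`.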

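```python
import gzip
import os

import numpy as np

path = os.getcwd()  # current working directory
labels_path = os.path.join(path, 'data', 'FashionMNIST', 'raw', '%s-labels-idx1-ubyte.gz' % 'train')
images_path = os.path.join(path, 'data', 'FashionMNIST', 'raw', '%s-images-idx3-ubyte.gz' % 'train')

# gzip.open decompresses the .gz file while reading; offset is where the data starts,
# i.e. the number of header bytes to skip (8 for the label file, 16 for the image file)
with gzip.open(labels_path, 'rb') as lbpath:
    y = np.frombuffer(lbpath.read(), dtype=np.uint8, offset=8)

with gzip.open(images_path, 'rb') as imgpath:
    X = np.frombuffer(imgpath.read(), dtype=np.uint8, offset=16).reshape(len(y), 28, 28)
```

X and y are now NumPy arrays, so all that is left is to wrap them in a Dataset class and load them with a DataLoader.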

```python
import torch
from torch.utils.data import Dataset
from torchvision import transforms

class MyDataset(Dataset):
    def __init__(self, x, y, transform=None):
        self.x = x                  # images, shape (N, 28, 28)
        self.y = y                  # labels, shape (N,)
        self.transform = transform

    def __len__(self):
        return len(self.x)

    def __getitem__(self, idx):
        img, label = self.x[idx], self.y[idx]
        if self.transform:
            img = self.transform(img)
        return img, label

transform = transforms.Compose([
    transforms.ToTensor(),  # (28, 28) uint8 array -> (1, 28, 28) float tensor scaled to [0, 1]
    # transforms.RandomHorizontalFlip(),  # if enabled, keep it after ToTensor: it needs a PIL image or tensor
])

trainset = MyDataset(X, y, transform=transform)

# Create the DataLoader
batch_size = 4
trainloader = torch.utils.data.DataLoader(trainset, batch_size=batch_size, shuffle=True)
```
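
Roughly speaking, `DataLoader(..., shuffle=True)` draws a random permutation of the indices `0 .. len(dataset) - 1`, groups them into chunks of `batch_size`, and calls `dataset[idx]` for each index, which is why a Dataset only needs `__len__` and `__getitem__`. A pure-Python sketch of that idea; `iterate_batches` is a hypothetical name, and the real DataLoader additionally collates each batch into tensors, supports worker processes, and more.

```python
import random

def iterate_batches(dataset, batch_size, shuffle=True, seed=None):
    """Hypothetical sketch: yield lists of dataset items, batch_size at a time."""
    indices = list(range(len(dataset)))     # relies only on __len__
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        # Relies only on __getitem__; the last batch may be smaller (like drop_last=False)
        yield [dataset[i] for i in indices[start:start + batch_size]]
```

A plain Python list of `(img, label)` pairs can stand in for a Dataset here, since it already provides `__len__` and `__getitem__`.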