0718[kubernetes]

📌 Kubernetes

📙 Deployment

✔️ 1. Create the deployment YAML (manifest)

# mkdir deployment && cd $_

# vi deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      name: nginx-deployment
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment-container
        image: nginx
        ports:
        - containerPort: 80

# kubectl apply -f deployment.yaml

# kubectl get deployments.apps -o wide

# kubectl describe deployments.apps nginx-deployment

✏️ kubectl describe [kind] [name of a resource of that kind]

✔️ 2. ClusterIP

# vi clusterip-deployment.yaml # ClusterIP YAML

apiVersion: v1
kind: Service
metadata:
  name: clusterip-service-deployment
spec:
  type: ClusterIP
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

# kubectl apply -f clusterip-deployment.yaml

# kubectl get svc -o wide

# kubectl describe svc clusterip-service-deployment

✔️ 3. NodePort

# vi nodeport-deployment.yaml # NodePort YAML

apiVersion: v1
kind: Service
metadata:
  name: nodeport-service-deployment
spec:
  type: NodePort
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080   # must be inside the NodePort range (30000-32767 by default)

# kubectl apply -f nodeport-deployment.yaml

# kubectl get svc -o wide

# kubectl describe svc nodeport-service-deployment

✔️ 4. LoadBalancer

# vi loadbalancer-deployment.yaml # LoadBalancer YAML

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment
spec:
  type: LoadBalancer
  externalIPs:
  - 192.168.1.190
  - 192.168.1.234
  - 192.168.1.235
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

# kubectl apply -f loadbalancer-deployment.yaml

# kubectl get svc -o wide

# kubectl describe svc loadbalancer-service-deployment

✔️ 5. Check all resources

[root@master1 deployment]# kubectl get all
NAME                                    READY   STATUS    RESTARTS   AGE
pod/nginx-deployment-55cb6f9cb7-dpj9z   1/1     Running   0          62m
pod/nginx-deployment-55cb6f9cb7-gncvq   1/1     Running   0          62m
pod/nginx-deployment-55cb6f9cb7-nqlw2   1/1     Running   0          62m

NAME                                      TYPE           CLUSTER-IP       EXTERNAL-IP                                 PORT(S)        AGE
service/clusterip-service-deployment      ClusterIP      10.96.3.147      <none>                                      80/TCP         47m
service/kubernetes                        ClusterIP      10.96.0.1        <none>                                      443/TCP        2d23h
service/loadbalancer-service-deployment   LoadBalancer   10.111.32.125    192.168.1.190,192.168.1.234,192.168.1.235   80:31169/TCP   9m57s
service/nodeport-service-deployment       NodePort       10.108.156.156   <none>                                      80:30080/TCP   23m

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-deployment   3/3     3            3           62m

NAME                                          DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-deployment-55cb6f9cb7   3         3         3       62m

A ReplicaSet sits beneath the Deployment: the Deployment manages the ReplicaSet, which in turn keeps the pods running.

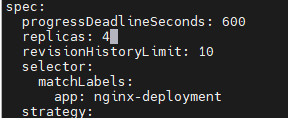

✔️ 6. scale out

# kubectl edit deployments.apps nginx-deployment ## change spec.replicas from 3 to 4

[root@master1 deployment]# kubectl get pod -o wide
NAME                                READY   STATUS    RESTARTS   AGE    IP           NODE      NOMINATED NODE   READINESS GATES
nginx-deployment-55cb6f9cb7-66lh5   1/1     Running   0          106s   10.244.2.3   worker2   <none>           <none>
nginx-deployment-55cb6f9cb7-dpj9z   1/1     Running   0          70m    10.244.2.2   worker2   <none>           <none>
nginx-deployment-55cb6f9cb7-gncvq   1/1     Running   0          70m    10.244.1.2   worker1   <none>           <none>
nginx-deployment-55cb6f9cb7-nqlw2   1/1     Running   0          70m    10.244.1.3   worker1   <none>           <none>
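
The `kubectl edit` step changes a single field of the live Deployment. A minimal sketch of that field, assuming the count was raised from 3 to 4 (which is what the four pods in the listing above suggest); everything else in the object stays as it was:

```yaml
# Fragment of the live nginx-deployment object, as changed in `kubectl edit`
# (assumption: replicas raised from 3 to 4 to match the pod list above)
spec:
  replicas: 4   # the ReplicaSet creates one more pod to reach this count
```

`kubectl scale deployment nginx-deployment --replicas=4` changes the same field without opening an editor. Editing `replicas` in deployment.yaml and re-running `kubectl apply -f deployment.yaml` also works, and keeps the file in sync with the cluster.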

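✔️ 7. Controlling Deployment rolling updates (changing only replicas does not trigger a rolling update; only changes to the pod template, such as the image, do)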

# kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=mj030kk/test_commit:v1.0 ## set the new image for the container

# kubectl get all

Update complete
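
How the Deployment swaps old pods for new ones during `kubectl set image` is governed by `spec.strategy`. deployment.yaml does not set it, so the defaults apply: `RollingUpdate` with `maxSurge: 25%` and `maxUnavailable: 25%`. A sketch of setting it explicitly; the values 1 and 0 are illustrative choices, not part of the original lab:

```yaml
# Hypothetical addition to deployment.yaml (values are illustrative)
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1        # at most 1 pod above the desired replica count during an update
      maxUnavailable: 0  # never go below the desired number of available pods
```

With `maxUnavailable: 0`, an update whose new image cannot be pulled stalls without removing any old pods, so the previous version keeps serving until the rollout is fixed or undone.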

# kubectl rollout history deployment nginx-deployment

deployment.apps/nginx-deployment

REVISION CHANGE-CAUSE

1 <none>

2 <none>
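
CHANGE-CAUSE is `<none>` because no reason was recorded for either revision. The column is filled from the `kubernetes.io/change-cause` annotation, which the Deployment copies onto the ReplicaSet of each revision. A sketch of setting it in the manifest; the message text is a made-up example:

```yaml
# Hypothetical: record the reason for the next revision in deployment.yaml
metadata:
  name: nginx-deployment
  annotations:
    kubernetes.io/change-cause: "image changed to mj030kk/test_commit:v1.0"
```

The same annotation can also be set on the live object right after `kubectl set image`, with `kubectl annotate deployment nginx-deployment kubernetes.io/change-cause="<reason>"`.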

# kubectl rollout history deployment nginx-deployment --revision=2 # show details of revision 2

deployment.apps/nginx-deployment with revision #2
Pod Template:
  Labels:       app=nginx-deployment
                pod-template-hash=78f8b95f97
  Containers:
   nginx-deployment-container:
    Image:        mj030kk/test_commit:v1.0
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>

# kubectl rollout undo deployment nginx-deployment # roll back (restore the previous revision)

[root@master1 deployment]# kubectl rollout history deployment nginx-deployment

deployment.apps/nginx-deployment

REVISION CHANGE-CAUSE

2 <none>

3 <none>

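(The rollback re-applied revision 1's pod template as a new revision 3, which is why revision 1 no longer appears in the list.)

📢 I rolled back, but once again the image could not be pulled... I need to go back to using the private registry.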

✔️ The following error occurred

[root@master1 deployment]# kubectl get pod
NAME                                READY   STATUS         RESTARTS   AGE
nginx-deployment-55cb6f9cb7-bs8nj   0/1     ErrImagePull   0          3m35s
nginx-deployment-55cb6f9cb7-j9965   0/1     ErrImagePull   0          3m35s
nginx-deployment-78f8b95f97-chrvk   1/1     Running        0          12m
nginx-deployment-78f8b95f97-lzjn4   1/1     Running        0          12m
nginx-deployment-78f8b95f97-rmz4w   1/1     Running        0          12m

✔️ Create a private registry

[root@master1 deployment]# docker run -d -p 5000:5000 --restart=always --name private-docker-registry registry

-> run on master

# vi /etc/docker/daemon.json

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "insecure-registries": ["192.168.1.190:5000"]
}

# systemctl restart docker

-> apply on the master and on every worker

✔️ Delete all the resources created earlier (the ones defined in the deployment folder)

[root@master1 deployment]# kubectl delete -f .

[root@master1 deployment]# kubectl get all ## confirm deletion

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d

✔️ Pull, tag, and push the images

[root@master1 deployment]# docker images ## list local images

[root@master1 deployment]# docker pull nginx

[root@master1 deployment]# docker pull mj030kk/test_commit:v1.0

[root@master1 deployment]# docker tag nginx:latest 192.168.1.190:5000/nginx:latest

[root@master1 deployment]# docker tag mj030kk/test_commit:v1.0 192.168.1.190:5000/test_commit:v1.0

# docker push 192.168.1.190:5000/nginx:latest

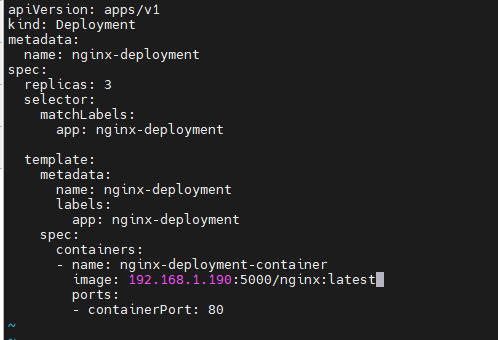

# docker push 192.168.1.190:5000/test_commit:v1.0

✔️ Point the image at the private registry

# vi deployment.yaml

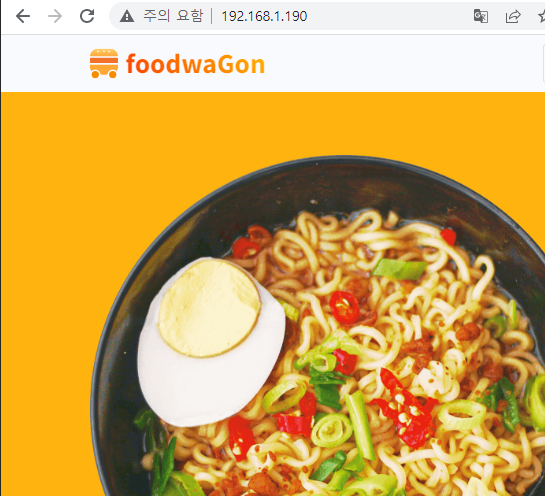
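
The screenshot is not reproduced in text. Judging from the images pushed just before, the edit presumably changes the container's `image` to the copy in the private registry. A sketch of that part of deployment.yaml under that assumption:

```yaml
# Assumed edit to deployment.yaml: pull nginx from the private registry
spec:
  template:
    spec:
      containers:
      - name: nginx-deployment-container
        image: 192.168.1.190:5000/nginx:latest  # <registry host>:<port>/<repository>:<tag>
        ports:
        - containerPort: 80
```

Every node that may run the pod needs `192.168.1.190:5000` under `insecure-registries` in /etc/docker/daemon.json, because this registry serves plain HTTP; otherwise the pull fails.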

✔️ Apply the settings above, update the image, then roll back with undo

[root@master1 deployment]# kubectl apply -f deployment.yaml

[root@master1 deployment]# kubectl apply -f loadbalancer-deployment.yaml

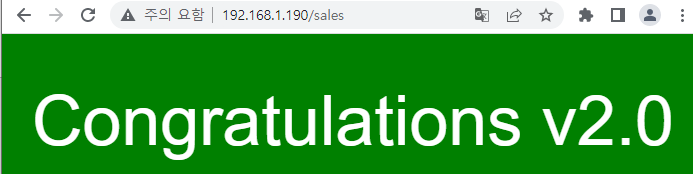

[root@master1 deployment]# kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=192.168.1.190:5000/test_commit:v1.0

# kubectl rollout history deployment nginx-deployment

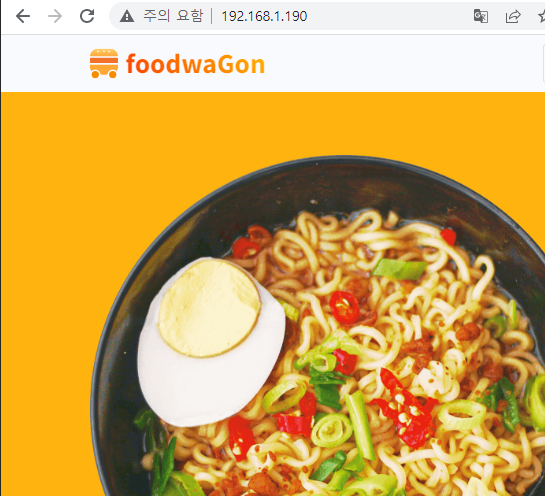

[root@master1 deployment]# kubectl rollout undo deployment nginx-deployment --to-revision=1 ## roll back to a specific revision

Update complete

Rollback confirmed

The commands below are for reference.

# kubectl get all

# kubectl rollout history deployment nginx-deployment

# kubectl rollout history deployment nginx-deployment --revision=3 # show details of revision 3

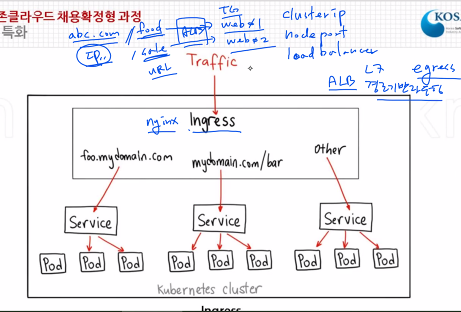

# kubectl rollout undo deployment nginx-deployment ## simply roll back to the previous revision

📙 Ingress

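Often grouped with Services such as ClusterIP and NodePort, but strictly an Ingress is a separate resource (kind: Ingress) that routes HTTP traffic to those Services.

✔️ Theory

Layer 7 (L7), path-based routing. Implemented here with the nginx-based ingress controller (ingress-nginx).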

✔️ Preparation

[root@master1 deployment]# yum install -y git

# cd ~

# git clone https://github.com/hali-linux/_Book_k8sInfra.git

# kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.2/ingress-nginx.yaml

# kubectl get pods -n ingress-nginx ## list pods in a specific namespace

[root@master1 deployment]# kubectl delete -f .

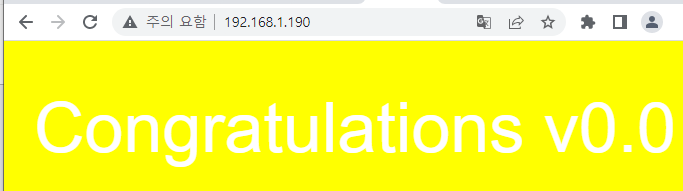

[root@master1 ~]# mkdir ingress && cd $_

# docker pull halilinux/test-home:v0.0

# docker pull halilinux/test-home:v1.0

# docker pull halilinux/test-home:v2.0

# docker tag halilinux/test-home:v0.0 192.168.1.190:5000/test-home:v0.0

# docker tag halilinux/test-home:v1.0 192.168.1.190:5000/test-home:v1.0

# docker tag halilinux/test-home:v2.0 192.168.1.190:5000/test-home:v2.0

# docker push 192.168.1.190:5000/test-home:v0.0

# docker push 192.168.1.190:5000/test-home:v1.0

# docker push 192.168.1.190:5000/test-home:v2.0

✔️ Create a YAML file (creates the Deployments, Services, etc. in one go)

# vi ingress-deploy.yaml

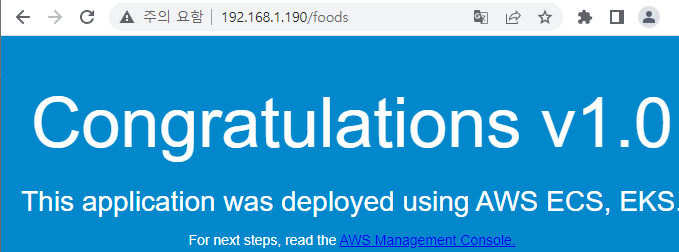

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: foods-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: foods-deploy
  template:
    metadata:
      labels:
        app: foods-deploy
    spec:
      containers:
      - name: foods-deploy
        image: 192.168.1.190:5000/test-home:v1.0
---
apiVersion: v1
kind: Service
metadata:
  name: foods-svc
spec:
  type: ClusterIP
  selector:
    app: foods-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sales-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sales-deploy
  template:
    metadata:
      labels:
        app: sales-deploy
    spec:
      containers:
      - name: sales-deploy
        image: 192.168.1.190:5000/test-home:v2.0
---
apiVersion: v1
kind: Service
metadata:
  name: sales-svc
spec:
  type: ClusterIP
  selector:
    app: sales-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: home-deploy
  template:
    metadata:
      labels:
        app: home-deploy
    spec:
      containers:
      - name: home-deploy
        image: 192.168.1.190:5000/test-home:v0.0
---
apiVersion: v1
kind: Service
metadata:
  name: home-svc
spec:
  type: ClusterIP
  selector:
    app: home-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80

✔️ Apply and verify

# kubectl apply -f ingress-deploy.yaml

# kubectl get all

✔️ Path-based routing

# vi ingress-config.yaml ->backend

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

name: ingress-nginx

annotations: # 주석 (주로 설명에 사용 예; 저자,연락처 이번엔 경로주소설정에 사용 ( ingress에서는 기능이 있음))

nginx.ingress.kubernetes.io/rewrite-target: /

spec:

rules:

- http: ##필요하면 https도 사용 가능.

paths:

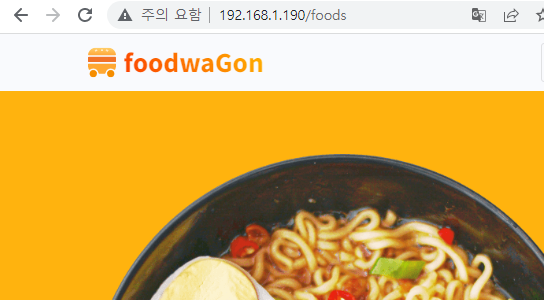

- path: /foods

backend:

serviceName: foods-svc

servicePort: 80

- path: /sales

backend:

serviceName: sales-svc

servicePort: 80

- path:

backend:

serviceName: home-svc

servicePort: 80
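
The `networking.k8s.io/v1beta1` Ingress, with `serviceName`/`servicePort`, was removed in Kubernetes 1.22. On newer clusters the same rules are written against `networking.k8s.io/v1`, where every path needs a `pathType` and the backend moves under `service`. A sketch translating the rules above; `pathType: Prefix` and the explicit `/` for the catch-all home path are assumptions:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        pathType: Prefix          # assumption: match /foods and anything below it
        backend:
          service:
            name: foods-svc
            port:
              number: 80
      - path: /sales
        pathType: Prefix
        backend:
          service:
            name: sales-svc
            port:
              number: 80
      - path: /                   # assumption: catch-all for everything else
        pathType: Prefix
        backend:
          service:
            name: home-svc
            port:
              number: 80
```

Depending on the ingress-nginx version and how it was installed, the controller may also ignore Ingresses that do not set `spec.ingressClassName` (commonly `nginx`); `kubectl get ingressclass` shows which class is installed.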

# kubectl apply -f ingress-config.yaml

# kubectl get namespaces

# kubectl get all -n ingress-nginx

# kubectl describe deployment.apps/nginx-ingress-controller -n ingress-nginx

-> This shows the labels used by the selector in the Service YAML below.

# vi ingress-service.yaml ->frontend

apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx
  type: LoadBalancer
  externalIPs:
  - 192.168.1.190

# kubectl apply -f ingress-service.yaml

# kubectl get svc -n ingress-nginx


-> Verify path-based routing

✔️ Controlling rolling updates (foods)

# kubectl set image deployment.apps/foods-deploy foods-deploy=mj030kk/test_commit:v1.0

# kubectl get all

-> Confirm the update

# kubectl rollout history deployment foods-deploy

deployment.apps/foods-deploy

REVISION CHANGE-CAUSE

4 <none>

5 <none>

6 <none>

[root@master1 ingress]# kubectl rollout undo deployment foods-deploy --to-revision=4 ## roll back

deployment.apps/foods-deploy rolled back

-> Rollback complete

📙 Volume (pv-pvc-pod)

Start on MASTER

✔️ Preparation

# mkdir pv-pvc-pod && cd $_

✔️ Create the YAML file - [pv-pvc-pod]

# vi pv-pvc-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi # has little effect in a virtual lab (hostPath does not enforce it); it matters on public clouds
  accessModes:
  - ReadWriteOnce # other modes: ReadWriteMany, ReadOnlyMany (access control)
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi # not very meaningful in a virtual lab; meaningful on public clouds
  selector:
    matchLabels:
      type: local
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: nginx
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage

# kubectl apply -f pv-pvc-pod.yaml

# kubectl get pv

# kubectl get pvc

# kubectl describe pod task-pv-pod

[root@master1 pv-pvc-pod]# kubectl get pod -o wide
NAME                            READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
foods-deploy-5797bff46f-94vf4   1/1     Running   0          63m     10.244.1.21   worker1   <none>           <none>
home-deploy-cccdcb7c6-6dfxw     1/1     Running   0          142m    10.244.2.15   worker2   <none>           <none>
sales-deploy-fdd6b78d8-82vzp    1/1     Running   0          142m    10.244.1.17   worker1   <none>           <none>
task-pv-pod                     1/1     Running   0          6m31s   10.244.1.22   worker1   <none>           <none>

[root@master1 pv-pvc-pod]# curl 10.244.1.22

<html>

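<head><title>403 Forbidden</title></head>

(403 because the hostPath directory /mnt/data on worker1 is still empty and is mounted over /usr/share/nginx/html, so nginx has no index.html to serve.)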

[root@worker1 ~]# ls /mnt

data

[root@worker1 ~]# echo "Hello World" > /mnt/data/index.html

[root@master1 pv-pvc-pod]# curl 10.244.1.22

Hello World

✔️ Let's try enforcing a static capacity limit

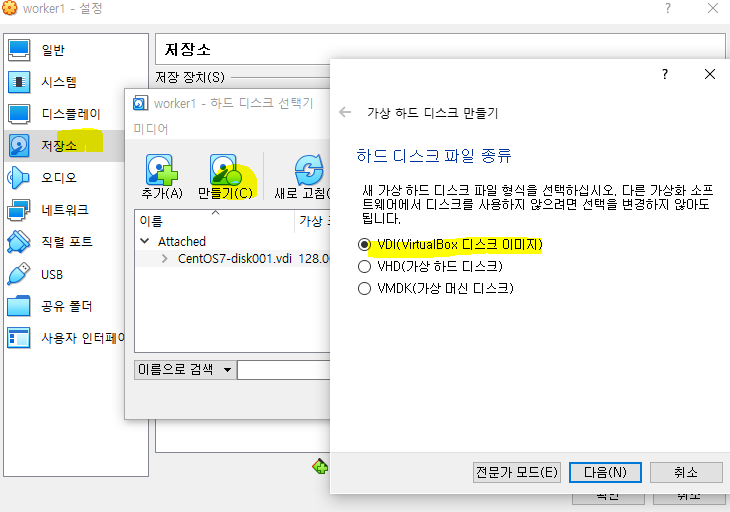

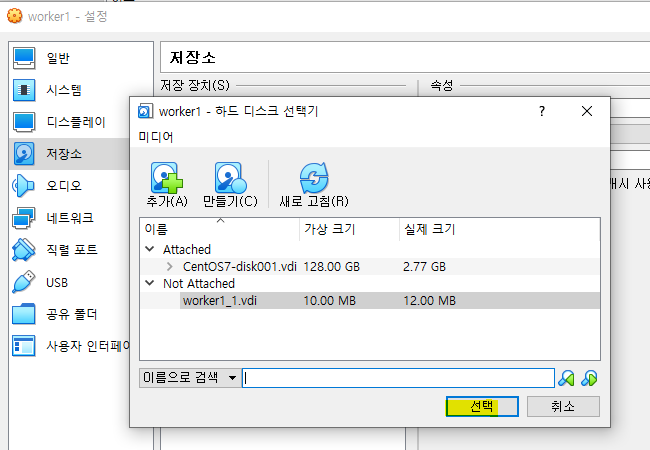

[root@worker1 ~]# poweroff

(disk wizard) Next - Fixed size - 10 MB - Create

- OK - start worker1

[root@worker1 ~]# ls /mnt/data/

cirros-0.5.1-x86_64-disk.img

[root@worker1 ~]# rm -rf /mnt/data/cirros-0.5.1-x86_64-disk.img

[root@worker1 ~]# lsblk
NAME            MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda               8:0    0   128G  0 disk
├─sda1            8:1    0     1G  0 part /boot
└─sda2            8:2    0   127G  0 part
  ├─centos-root 253:0    0 123.1G  0 lvm  /
  └─centos-swap 253:1    0   3.9G  0 lvm

[root@worker1 ~]# mkfs -t ext4 /dev/sdb

mke2fs 1.42.9 (28-Dec-2013)

/dev/sdb is entire device, not just one partition!

Proceed anyway? (y,n) y

[root@worker1 ~]# mount /dev/sdb /mnt/data/

[root@worker1 ~]# df -h

ed1a94933044dc8a59b92d0afba264b0f48ff225e442be9dd14b/merged

/dev/sdb 8.7M 172K 7.9M 3% /mnt/data

[root@master1 pv-pvc-pod]# kubectl apply -f pv-pvc-pod.yaml

[root@master1 pv-pvc-pod]# kubectl get pod -o wide

[root@worker1 ~]# echo "hello" > /mnt/data/index.html

[root@master1 pv-pvc-pod]# curl 10.244.1.23

hello

✔️ Verify the capacity limit

[root@master1 ~]# kubectl cp cirros-0.5.1-x86_64-disk.img task-pv-pod:/usr/share/nginx/html

tar: cirros-0.5.1-x86_64-disk.img: Cannot write: No space left on device

tar: Exiting with failure status due to previous errors

command terminated with exit code 2

[root@worker1 ~]# ls -al /mnt/data

total 8524

drwxr-xr-x 3 root root 1024 Jul 18 17:21 .

drwxr-xr-x. 3 root root 18 Jul 18 16:28 ..

-rw-r--r-- 1 root root 8713728 Jul 18 17:21 cirros-0.5.1-x86_64-disk.img

-rw-r--r-- 1 root root 6 Jul 18 17:18 index.html

drwx------ 2 root root 12288 Jul 18 17:12 lost+found

✔️ Confirm that the volume's data survives deleting the pod

[root@master1 ~]# kubectl delete pod task-pv-pod

[root@worker1 ~]# ls -al /mnt/data

total 8524

drwxr-xr-x 3 root root 1024 Jul 18 17:21 .

drwxr-xr-x. 3 root root 18 Jul 18 16:28 ..

-rw-r--r-- 1 root root 8713728 Jul 18 17:21 cirros-0.5.1-x86_64-disk.img

-rw-r--r-- 1 root root 6 Jul 18 17:18 index.html

drwx------ 2 root root 12288 Jul 18 17:12 lost+found

✔️ nfs

# yum install -y nfs-utils.x86_64

# mkdir /nfs_shared

# echo '/nfs_shared 192.168.0.0/21(rw,sync,no_root_squash)' >> /etc/exports

# systemctl enable --now nfs

# vi nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Mi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.0.192
    path: /nfs_shared

# kubectl apply -f nfs-pv.yaml

# kubectl get pv

# vi nfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Mi
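
This claim names no storage class. That is fine when the cluster has no default StorageClass, as presumably is the case in this lab, but with a default class the claim would be assigned it and could then no longer bind to `nfs-pv`, which has no class. A hedged variant that explicitly requests no class, so it can bind only to statically created PVs like `nfs-pv`:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  storageClassName: ""   # empty string: no class, so only class-less PVs (such as nfs-pv) can match
  accessModes:
  - ReadWriteMany        # must be offered by the PV; nfs-pv offers ReadWriteMany
  resources:
    requests:
      storage: 10Mi      # a lower bound; the claim binds to the whole 100Mi PV
```

Because a claim binds to an entire PV, `kubectl get pvc` will typically report 100Mi of capacity for this 10Mi request.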

# kubectl apply -f nfs-pvc.yaml

# kubectl get pvc

# kubectl get pv

# vi nfs-pvc-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-pvc-deploy
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nfs-pvc-deploy
  template:
    metadata:
      labels:
        app: nfs-pvc-deploy
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: nfs-vol
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-vol
        persistentVolumeClaim:
          claimName: nfs-pvc

# kubectl apply -f nfs-pvc-deploy.yaml

# kubectl get pod

# kubectl exec -it nfs-pvc-deploy-76bf944dd5-6j9gf -- /bin/bash

# kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --port=80
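
`kubectl expose` builds the Service from the Deployment's selector. A sketch of a roughly equivalent manifest for the command above, written by hand here rather than taken from the original lab:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nfs-pvc-deploy-svc1
spec:
  type: LoadBalancer
  selector:
    app: nfs-pvc-deploy   # same labels as the Deployment's pod template
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80        # expose reuses --port as the target port when --target-port is omitted
```

Without a cloud load balancer, the EXTERNAL-IP of such a Service stays `<pending>`; the earlier examples in this note work around that with `externalIPs`. Because all four replicas mount the same NFS export, a file written through one pod should be served by every pod behind the Service.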


📌 Miscellaneous

⭐️ Terminology

Running commands one line at a time: ad hoc

Running from a file (YAML): manifest
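
To make the distinction concrete: on kubectl 1.18 and later, the ad hoc command `kubectl run nginx --image=nginx` creates a single pod directly. A manifest describing roughly the same pod (the name `nginx` is just an example), applied with `kubectl apply -f`, would be:

```yaml
# Roughly what `kubectl run nginx --image=nginx` creates
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    run: nginx      # kubectl run adds a run=<name> label by default
spec:
  containers:
  - name: nginx
    image: nginx
```

The manifest can be kept in version control and re-applied unchanged, which is the main advantage of the manifest approach used throughout this note.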

⭐️ pod IP

[root@master1 deployment]# kubectl get pod -o wide
NAME                                READY   STATUS    RESTARTS   AGE   IP           NODE      NOMINATED NODE   READINESS GATES
nginx-deployment-55cb6f9cb7-dpj9z   1/1     Running   0          15m   10.244.2.2   worker2   <none>           <none>
nginx-deployment-55cb6f9cb7-gncvq   1/1     Running   0          15m   10.244.1.2   worker1   <none>           <none>
nginx-deployment-55cb6f9cb7-nqlw2   1/1     Running   0          15m   10.244.1.3   worker1   <none>           <none>

[root@master1 deployment]# curl 10.244.1.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

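Even without creating a Service, you can confirm through the pod IP that the pod is working.

(The pod IP is internal to the cluster, so it is reachable only from inside the cluster.)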

⭐️ schedule

To schedule: to place a pod on a particular node.

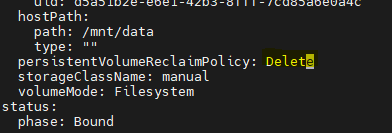
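
Normally the scheduler picks the node on its own. A pod can constrain that choice with `nodeSelector`; this sketch pins a hypothetical pod to worker1 through the built-in `kubernetes.io/hostname` node label (assuming its value on that node is `worker1`, as the NODE column above suggests):

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pinned-nginx                  # hypothetical name for illustration
spec:
  nodeSelector:
    kubernetes.io/hostname: worker1   # only nodes carrying this label are eligible
  containers:
  - name: nginx
    image: nginx
```

`kubectl get nodes --show-labels` shows which labels each node actually carries.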

⭐️ Volumes: trying to make the volume's contents get deleted when the pod is deleted

Recreate the resources, change the reclaim policy to Delete, then delete the pod again.

[root@master1 ~]# kubectl apply -f ./pv-pvc-pod/pv-pvc-pod.yaml

[root@master1 ~]# kubectl edit pv task-pv-volume

[root@master1 ~]# kubectl get pod -o wide
NAME                            READY   STATUS    RESTARTS   AGE     IP            NODE      NOMINATED NODE   READINESS GATES
foods-deploy-5797bff46f-jwlpg   1/1     Running   0          24m     10.244.2.19   worker2   <none>           <none>
home-deploy-cccdcb7c6-6dfxw     1/1     Running   0          3h21m   10.244.2.15   worker2   <none>           <none>
sales-deploy-fdd6b78d8-5jtvj    1/1     Running   0          24m     10.244.2.18   worker2   <none>           <none>
task-pv-pod                     1/1     Running   0          4m56s   10.244.1.24   worker1   <none>           <none>

[root@master1 ~]# curl 10.244.1.24

hello

[root@master1 ~]# kubectl delete pod task-pv-pod

[root@worker1 ~]# ls -al /mnt/data

total 8524

drwxr-xr-x 3 root root 1024 Jul 18 17:21 .

drwxr-xr-x. 3 root root 18 Jul 18 16:28 ..

-rw-r--r-- 1 root root 8713728 Jul 18 17:21 cirros-0.5.1-x86_64-disk.img

-rw-r--r-- 1 root root 6 Jul 18 17:18 index.html

drwx------ 2 root root 12288 Jul 18 17:12 lost+found

-> It didn't work, and I stopped there.
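
A likely reason it did not work: a PV's reclaim policy is applied only when the PV is released, that is, when the PVC bound to it is deleted. Deleting the pod leaves `task-pv-claim` bound to `task-pv-volume`, so nothing is reclaimed and /mnt/data is untouched. A sketch of the field that was changed, with that condition noted:

```yaml
# Fragment of task-pv-volume as changed with `kubectl edit pv task-pv-volume`
spec:
  persistentVolumeReclaimPolicy: Delete   # acted on only after the bound PVC (task-pv-claim) is deleted
```

To observe the policy, the claim would have to be deleted as well (`kubectl delete pvc task-pv-claim` after the pod). Even then, the built-in deleter for hostPath volumes only handles paths under /tmp, so for /mnt/data the PV would most likely end up in the `Failed` state rather than having its contents wiped.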