0719[kubernetes]

📌 0718 review

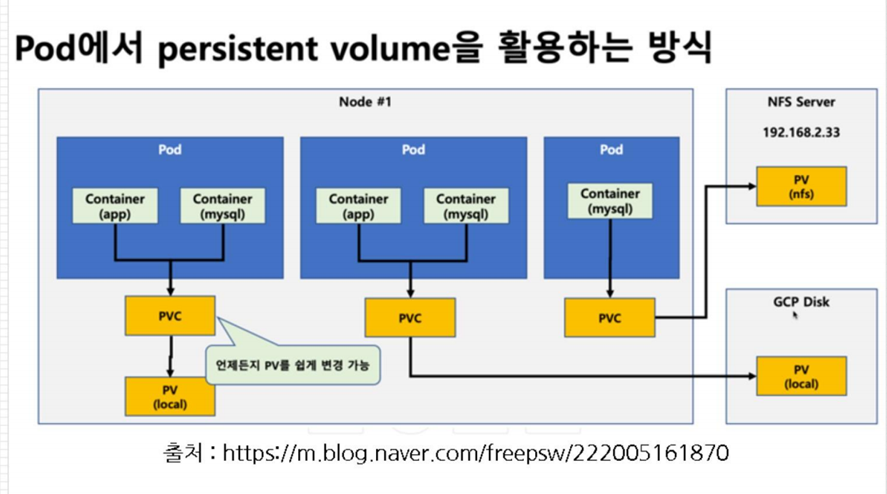

✔️volume; pv-pvc-pod

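storageClassName: on a public cloud, set this to the name of a StorageClass (for example, one backed by AWS EBS). This lab is on-premise, so it is set to manual.

persistentVolumeReclaimPolicy (what happens to the volume after its claim is deleted): the Delete policy only takes effect on backends that can delete the underlying storage, such as a public cloud or OpenStack.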

# vi pv-pvc-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi # matters little in this virtual lab; it does matter on a public cloud
  accessModes:
    - ReadWriteOnce # alternatives: ReadWriteMany, ReadOnlyMany (restricts access)
  hostPath:
    path: "/mnt/data"

---

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      # Matters little in this virtual lab; it does matter on a public cloud.
      # If the PV capacity above were large, a claim could request only part of it.
      # The PV is small here, so the claim requests all of it.
      storage: 10Mi
  selector:
    matchLabels:
      type: local

---

apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  containers:
    - name: task-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: task-pv-storage
  volumes:
    - name: task-pv-storage
      persistentVolumeClaim:
        claimName: task-pv-claim

# kubectl apply -f pv-pvc-pod.yaml

# kubectl get pv

# kubectl get pvc

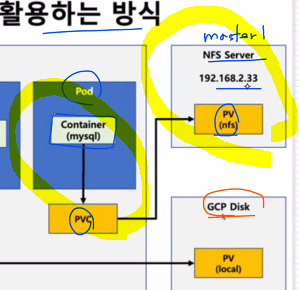
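The reclaim policy of an existing PV can be inspected and changed without recreating the volume. A minimal sketch, assuming the `task-pv-volume` PV from the manifest above has been applied; `reclaim_patch` is a hypothetical helper name introduced only for this example, and `Retain` is picked purely as an illustration.

```shell
# Build the JSON patch body for a given reclaim policy (Retain, Delete or Recycle).
# reclaim_patch is a hypothetical helper, not part of kubectl.
reclaim_patch() {
  printf '{"spec":{"persistentVolumeReclaimPolicy":"%s"}}' "$1"
}

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  # Show the current policy, switch it to Retain, then list PVs to confirm.
  kubectl get pv task-pv-volume -o jsonpath='{.spec.persistentVolumeReclaimPolicy}{"\n"}'
  kubectl patch pv task-pv-volume -p "$(reclaim_patch Retain)"
  kubectl get pv
fi
```

With `Retain`, deleting the PVC leaves both the PV object and its data in place, so the volume has to be cleaned up by hand before it can be claimed again.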

# kubectl describe pod task-pv-pod

📌 volume-nfs

📙 Preparation

# yum install -y nfs-utils.x86_64   # on the master and on every worker

[root@master1 ~]# mkdir /nfs_shared

[root@master1 ~]# chmod 777 /nfs_shared

[root@master1 ~]# echo '/nfs_shared 192.168.0.0/20(rw,sync,no_root_squash)' >> /etc/exports

[root@master1 ~]# systemctl enable --now nfs
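Because the export line is appended with `>>`, running that `echo` a second time leaves a duplicate entry in `/etc/exports`. A small sketch of an idempotent variant; `add_export` is a hypothetical helper name, and the demo writes to a scratch file rather than the real `/etc/exports`.

```shell
# Append a line to an exports file only if that exact line is not already there.
# add_export is a hypothetical helper; usage: add_export FILE LINE
add_export() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demo on a scratch file (the real target would be /etc/exports).
exports_file=$(mktemp)
add_export "$exports_file" '/nfs_shared 192.168.0.0/20(rw,sync,no_root_squash)'
add_export "$exports_file" '/nfs_shared 192.168.0.0/20(rw,sync,no_root_squash)'
cat "$exports_file"   # the entry appears only once
```

After changing the real file, `exportfs -r` re-reads it and `exportfs -v` lists what is currently exported.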

[root@master1 ~]# mkdir nfs-pv-pvc-pod && cd $_

📙 Create nfs-pv

[root@master1 nfs-pv-pvc-pod]# vi nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.1.190
    path: /nfs_shared

# kubectl apply -f nfs-pv.yaml

[root@master1 nfs-pv-pvc-pod]# kubectl get pv

NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nfs-pv   100Mi      RWX            Recycle          Available                                   3s

✏️ Available: the PV is not claimed yet (free to use). Once a PVC binds to it, the status changes to Bound.

📙 Create nfs-pvc

# vi nfs-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi

# kubectl apply -f nfs-pvc.yaml

[root@master1 nfs-pv-pvc-pod]# kubectl get pv,pvc

NAME                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE
persistentvolume/nfs-pv   100Mi      RWX            Recycle          Bound    default/nfs-pvc                           7m42s

NAME                            STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/nfs-pvc   Bound    nfs-pv   100Mi      RWX                           14s

✏️ With no labels or selector, the claim is matched on access mode and capacity: it binds to the smallest available PV that satisfies the request (the PV closest in size).
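The capacity-matching rule in the note above can be modelled in a few lines. This is a simplified sketch, not the controller's actual code: it looks at capacity only (sizes in Mi) and ignores access modes, storageClassName and selectors. `pick_pv` and the PV names are made up for illustration.

```shell
# Print the name of the smallest PV whose capacity (in Mi) covers the request.
# Input on stdin: one "name capacityMi" pair per line. pick_pv is hypothetical.
pick_pv() {
  awk -v req="$1" '
    $2 >= req && (best == "" || $2 < size) { best = $1; size = $2 }
    END { if (best != "") print best }
  '
}

# Three candidate PVs; a 100Mi claim lands on the 100Mi one.
printf '%s\n' 'pv-small 50' 'pv-exact 100' 'pv-big 500' | pick_pv 100
```

If no candidate is large enough, nothing is printed, which mirrors a claim that stays Pending.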

📙 Create nfs-pvc-deploy (pods)

# vi nfs-pvc-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-pvc-deploy
spec:
  replicas: 4 # spot
  selector:
    matchLabels:
      app: nfs-pvc-deploy
  template:
    metadata:
      labels:
        app: nfs-pvc-deploy
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: nfs-vol
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-vol
          persistentVolumeClaim:
            claimName: nfs-pvc

# kubectl apply -f nfs-pvc-deploy.yaml

# kubectl get pod

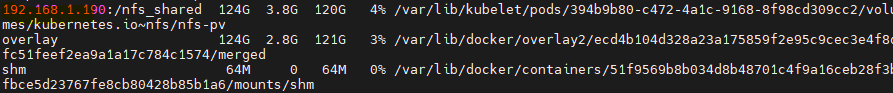

✏️ Check the mount with df -h (on worker1 and worker2)

📙 Create and check the service (LoadBalancer)

[root@master1 nfs-pv-pvc-pod]# kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --external-ip 192.168.1.190 --port=80

[root@master1 nfs-pv-pvc-pod]# kubectl get svc

[root@master1 nfs-pv-pvc-pod]# echo "HELLO" > /nfs_shared/index.html

[root@master1 nfs-pv-pvc-pod]# ls /nfs_shared/

index.html

[root@master1 nfs-pv-pvc-pod]# curl 10.108.131.196

HELLO

[root@master1 nfs-pv-pvc-pod]# curl 192.168.1.190

HELLO

📙 Cleanup

[root@master1 nfs-pv-pvc-pod]# kubectl delete deployments.apps nfs-pvc-deploy

deployment.apps "nfs-pvc-deploy" deleted

[root@master1 nfs-pv-pvc-pod]# kubectl delete pvc nfs-pvc

persistentvolumeclaim "nfs-pvc" deleted

[root@master1 nfs-pv-pvc-pod]# ls /nfs_shared/

-> Because the reclaim policy is Recycle, deleting the PVC also wiped the contents of the volume (index.html is gone).

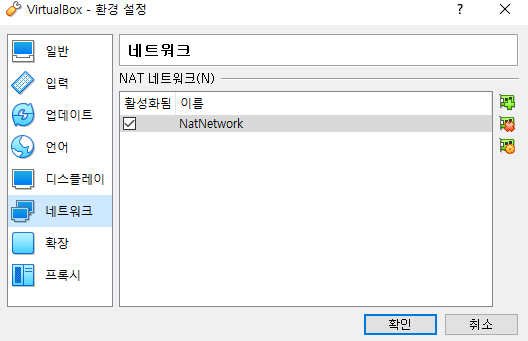

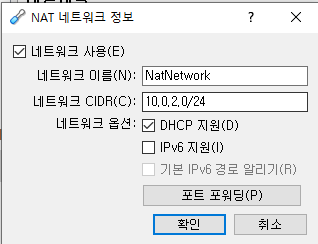

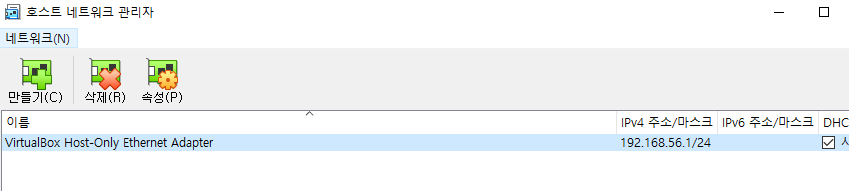

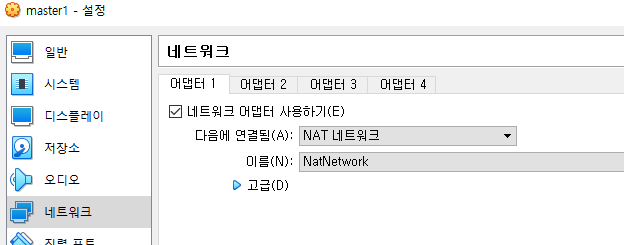

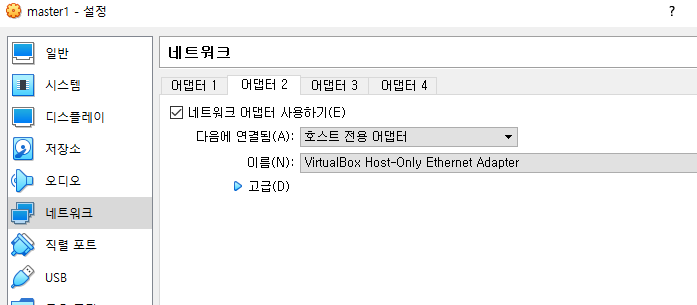

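📌 Switching the lab environment to NAT

📙 Network settings to avoid IP conflicts

✔️ VirtualBox global settings

✔️ Settings on each server

Repeat the steps above on master, worker1 and worker2.

📙 Initial server setup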

IP table

master : 192.168.56.103

worker1 : 192.168.56.104

worker2 : 192.168.56.105

--- Master,Worker ---

# cat <<EOF >> /etc/hosts

192.168.56.103 master1

192.168.56.104 worker1

192.168.56.105 worker2

EOF

# kubeadm reset

--- Master ---

# kubeadm init --apiserver-advertise-address=192.168.56.103 --pod-network-cidr=10.244.0.0/16

# mkdir -p $HOME/.kube

# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# chown $(id -u):$(id -g) $HOME/.kube/config

# kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

--- Worker ---

# kubeadm join 192.168.56.103:6443 --token g1hqt8.2fh05t05cqdg17yq --discovery-token-ca-cert-hash sha256:2eded64c511417d2ca8bf022183c997dfa66d2206200b2ee9a99dca9a5d62810

--- Master ---

[root@master1 ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

master1 Ready master 4m34s v1.19.16

worker1 Ready <none> 62s v1.19.16

worker2 Ready <none> 49s v1.19.16

[root@master1 ~]# kubectl get pods --all-namespaces ## the system-created pods should all be Running (that is the healthy state)

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-94x56 1/1 Running 0 75s

kube-flannel kube-flannel-ds-d486s 1/1 Running 0 3m3s

kube-flannel kube-flannel-ds-jcxbm 1/1 Running 0 88s

kube-system coredns-f9fd979d6-9q647 1/1 Running 0 4m40s

kube-system coredns-f9fd979d6-sdh7t 1/1 Running 0 4m40s

kube-system etcd-master1 1/1 Running 0 4m49s

kube-system kube-apiserver-master1 1/1 Running 0 4m49s

kube-system kube-controller-manager-master1 1/1 Running 0 4m49s

kube-system kube-proxy-c5mc8 1/1 Running 0 4m40s

kube-system kube-proxy-jn4hv 1/1 Running 0 75s

kube-system kube-proxy-v4dgp 1/1 Running 0 88s

kube-system kube-scheduler-master1 1/1 Running 0 4m49s

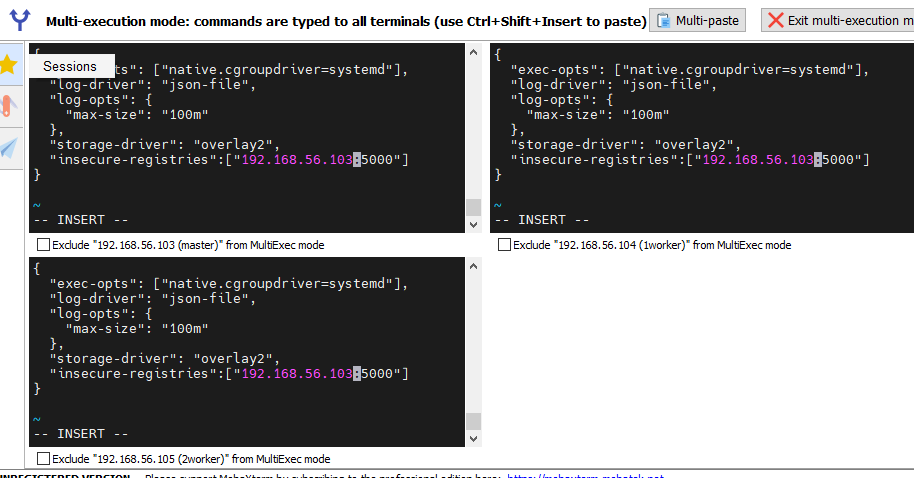

📙 Update the private registry settings

--- master,worker ---

# vi /etc/docker/daemon.json

# systemctl restart docker
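These notes do not show what was changed in daemon.json. One common setup for a registry served over plain HTTP, such as 192.168.56.103:5000, is an `insecure-registries` entry. The sketch below assumes that is the case here (an assumption, not something the notes confirm) and writes the example to a scratch file instead of /etc/docker/daemon.json.

```shell
# Assumption: the private registry speaks plain HTTP, so Docker has to be told
# to allow it. Written to a scratch file for review, not to /etc/docker.
daemon_json=$(mktemp)
cat > "$daemon_json" <<'EOF'
{
  "insecure-registries": ["192.168.56.103:5000"]
}
EOF
cat "$daemon_json"
```

If the real file already holds other settings, the key would be merged into the existing JSON object rather than replacing the file.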

📙 Basic smoke test

[root@master1 ~]# docker tag nginx:latest 192.168.56.103:5000/nginx:latest

[root@master1 ~]# docker push 192.168.56.103:5000/nginx:latest

[root@master1 ~]# kubectl run nginx-pod2 --image=192.168.56.103:5000/nginx:latest

[root@master1 ~]# kubectl expose pod nginx-pod2 --name loadbalancer --type=LoadBalancer --external-ip 192.168.56.103 --port 80

[root@master1 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 45m

loadbalancer LoadBalancer 10.107.39.44 192.168.56.103 80:32557/TCP 23s

[root@master1 ~]# curl 192.168.56.103

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

📌 NFS review in the NAT environment

📙 Update and check the files created earlier

# echo '/nfs_shared 192.168.56.0/20(rw,sync,no_root_squash)' > /etc/exports

# systemctl restart nfs

# vi nfs-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    server: 192.168.56.103
    path: /nfs_shared

# kubectl apply -f nfs-pv.yaml

# kubectl apply -f nfs-pvc.yaml

# vi nfs-pvc-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-pvc-deploy
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nfs-pvc-deploy
  template:
    metadata:
      labels:
        app: nfs-pvc-deploy
    spec:
      containers:
        - name: nginx
          image: 192.168.56.103:5000/nginx:latest
          volumeMounts:
            - name: nfs-vol
              mountPath: /usr/share/nginx/html
      volumes:
        - name: nfs-vol
          persistentVolumeClaim:
            claimName: nfs-pvc

# kubectl apply -f nfs-pvc-deploy.yaml

# kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --external-ip 192.168.56.103 --port=80

[root@master1 nfs-pv-pvc-pod]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 158m

nfs-pvc-deploy-svc1 LoadBalancer 10.104.207.15 192.168.56.103 80:30589/TCP 9s

[root@master1 nfs-pv-pvc-pod]# curl 192.168.56.103 ## 403 because the shared directory has no index file yet

<html>

<head><title>403 Forbidden</title></head>

[root@master1 nfs-pv-pvc-pod]# echo "HELLO" > /nfs_shared/index.html

[root@master1 nfs-pv-pvc-pod]# curl 192.168.56.103

HELLO

[root@worker1 ~]# df -h

192.168.56.103:/nfs_shared 124G 4.1G 120G 4% /var/lib/kubelet/pods/732c7fdd-f6ab-4392-ae88-2bbaf6c6572f/volumes/kubernetes.io~nfs/nfs-pv

[root@worker2 ~]# df -h

192.168.56.103:/nfs_shared 124G 4.1G 120G 4% /var/lib/kubelet/pods/44b0bedb-2c24-4f75-ab50-c2aa80f4469d/volumes/kubernetes.io~nfs/nfs-pv

📌 multi-container

📙 Preparation

[root@master1 ~]# mkdir test && cd $_

[root@master1 test]# docker pull centos:7

[root@master1 test]# docker tag centos:7 192.168.56.103:5000/centos:7

[root@master1 test]# docker push 192.168.56.103:5000/centos:7

📙 Create a pod with two containers

# vi multipod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: multipod
spec:
  containers:
    - name: nginx-container # first container
      image: 192.168.56.103:5000/nginx:latest
      ports:
        - containerPort: 80
    - name: centos-container # second container
      image: 192.168.56.103:5000/centos:7
      command:
        - sleep
        - "10000"

[root@master1 test]# kubectl apply -f multipod.yaml

[root@master1 test]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

multipod 2/2 Running 0 17s 10.244.1.5 worker1 <none> <none>

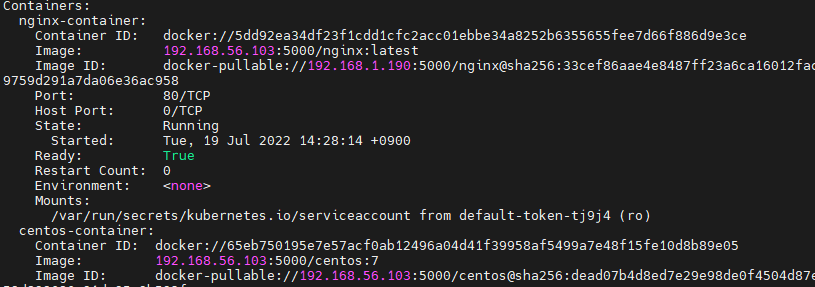

[root@master1 test]# kubectl describe pod multipod

📙 Entering the containers (web server)

✔️nginx-container

[root@master1 test]# kubectl exec -it multipod -c nginx-container -- bash

root@multipod:/# yum

bash: yum: command not found

root@multipod:/# apt-get

apt 2.2.4 (amd64)

root@multipod:/# cd /usr/share/nginx/

root@multipod:/usr/share/nginx# ls

-> yum is missing and apt-get is present, which confirms this is the (Debian-based) nginx container.

✔️ centos-container

[root@master1 test]# kubectl exec -it multipod -c centos-container -- bash

[root@multipod /]# yum

Loaded plugins: fastestmirror, ovl

You need to give some command

Usage: yum [options] COMMAND

-> yum works, which confirms this is the CentOS container.
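Both containers run in the same pod, so they share one network namespace and can reach each other over localhost. A hedged sketch that fetches the nginx welcome page from inside the CentOS container; it assumes the `multipod` pod above is running and that `curl` is present in the centos:7 image. `in_container` is a hypothetical wrapper written for this example.

```shell
# Run a command inside one container of a pod.
# in_container is a hypothetical wrapper; usage: in_container POD CONTAINER CMD...
in_container() {
  pod=$1
  container=$2
  shift 2
  kubectl exec "$pod" -c "$container" -- "$@"
}

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  # From the CentOS container, localhost:80 is answered by the nginx container.
  in_container multipod centos-container curl -s localhost
fi
```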

📙 Install WordPress: web server and DB server

[root@master1 test]# docker pull mysql:5.7

[root@master1 test]# docker pull wordpress

[root@master1 test]# docker tag mysql:5.7 192.168.56.103:5000/mysql:5.7

[root@master1 test]# docker push 192.168.56.103:5000/mysql:5.7

[root@master1 test]# docker tag wordpress 192.168.56.103:5000/wordpress

[root@master1 test]# docker push 192.168.56.103:5000/wordpress:latest

# vi wordpress-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
    - name: mysql-container
      image: 192.168.56.103:5000/mysql:5.7
      env:
        - name: MYSQL_ROOT_HOST
          value: '%' # wpuser@%
        - name: MYSQL_ROOT_PASSWORD
          value: kosa0401
        - name: MYSQL_DATABASE
          value: wordpress
        - name: MYSQL_USER
          value: wpuser
        - name: MYSQL_PASSWORD
          value: wppass
      ports:
        - containerPort: 3306
    - name: wordpress-container
      image: 192.168.56.103:5000/wordpress
      env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-pod:3306
        - name: WORDPRESS_DB_USER
          value: wpuser
        - name: WORDPRESS_DB_PASSWORD
          value: wppass
        - name: WORDPRESS_DB_NAME
          value: wordpress
      ports:
        - containerPort: 80

---

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment-wordpress
spec:
  type: LoadBalancer
  externalIPs:
    - 192.168.56.105
  selector:
    app: wordpress-pod
  ports:
    - protocol: TCP
      port: 80

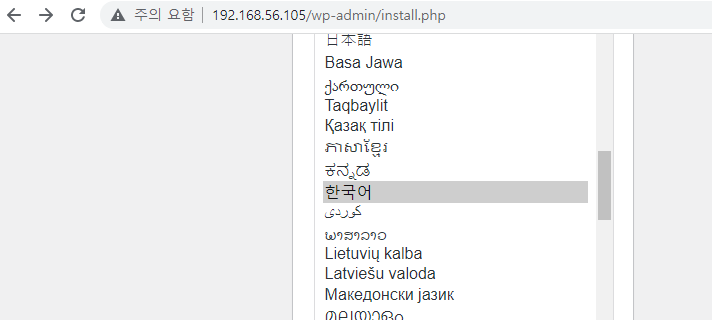

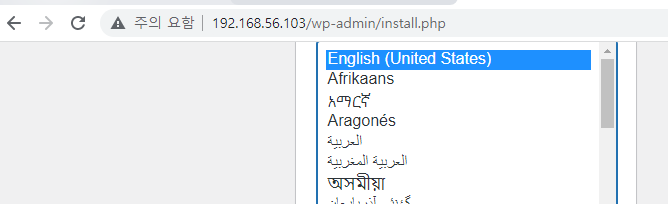

[root@master1 test]# kubectl apply -f wordpress-pod-svc.yaml

[root@master1 test]# kubectl get all

[root@master1 test]# kubectl describe pod wordpress-pod

✔️ Enter each container

[root@master1 test]# kubectl exec -it wordpress-pod -c mysql-container -- bash

bash-4.2#

bash-4.2#

bash-4.2#

bash-4.2# find / -name mysql

/etc/mysql

/usr/bin/mysql

/usr/lib/mysqlsh/lib/python3.9/site-packages/oci/mysql

[root@master1 test]# kubectl exec -it wordpress-pod -c wordpress-container -- bash

root@wordpress-pod:/var/www/html#

root@wordpress-pod:/var/www/html#

root@wordpress-pod:/var/www/html# ls

index.php wp-admin wp-config-sample.php wp-includes wp-mail.php xmlrpc.php

license.txt wp-blog-header.php wp-config.php wp-links-opml.php wp-settings.php

readme.html wp-comments-post.php wp-content wp-load.php wp-signup.php

wp-activate.php wp-config-docker.php wp-cron.php wp-login.php wp-trackback.php

📌 metallb(DHCP)

📙 Check whether the LoadBalancer works with an arbitrary IP

[root@master1 test]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h1m

loadbalancer-service-deployment-wordpress LoadBalancer 10.111.5.7 192.168.56.105 80:31198/TCP 27m

nfs-pvc-deploy-svc1 LoadBalancer 10.104.207.15 192.168.56.103 80:30589/TCP 82m

[root@master1 test]# kubectl delete -f .

[root@master1 test]# vi wordpress-pod-svc.yaml   # change the IP at the bottom of the file

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-deployment-wordpress
spec:
  type: LoadBalancer
  externalIPs:
    - 172.31.2.200
  selector:
    app: wordpress-pod
  ports:
    - protocol: TCP
      port: 80

[root@master1 test]# kubectl apply -f wordpress-pod-svc.yaml

[root@master1 test]# kubectl apply -f .

[root@master1 test]# curl 172.31.2.200

^C

-> Does not work: neither a 192.168.56.X address (tried by other students) nor an address from a different range (mine) responds!

📙 metallb

[root@master1 ~]# yum install -y git

# git clone https://github.com/hali-linux/_Book_k8sInfra.git

[root@master1 test]# kubectl get po --all-namespaces

[root@master1 test]# kubectl describe pod -n metallb-system speaker-bk485

[root@master1 test]# docker pull metallb/controller:v0.8.2

[root@master1 test]# docker pull metallb/speaker:v0.8.2

[root@master1 test]# docker tag metallb/controller:v0.8.2 192.168.56.103:5000/controller:v0.8.2

[root@master1 test]# docker tag metallb/speaker:v0.8.2 192.168.56.103:5000/speaker:v0.8.2

[root@master1 test]# docker push 192.168.56.103:5000/controller:v0.8.2

[root@master1 test]# docker push 192.168.56.103:5000/speaker:v0.8.2

# vi /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

Replace the image fields with the images pushed above.

# kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml

# kubectl get pods -n metallb-system -o wide

[root@master1 test]# kubectl delete deploy,pod,svc --all

[root@master1 test]# kubectl get all

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 106s

# vi metallb-l2config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 192.168.56.200-192.168.56.250

# kubectl apply -f metallb-l2config.yaml

# kubectl describe configmaps -n metallb-system
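An address pool is written as a `first-last` range, so it is easy to check by script whether a given IP falls inside it. A small sketch in plain shell; `ip_to_int` and `in_pool` are hypothetical helpers written for this example and are not part of MetalLB.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( $1 * 16777216 + $2 * 65536 + $3 * 256 + $4 ))
}

# Succeed when the IP ($1) lies inside the range "first-last" ($2).
in_pool() {
  ip=$(ip_to_int "$1")
  first=$(ip_to_int "${2%-*}")
  last=$(ip_to_int "${2#*-}")
  [ "$ip" -ge "$first" ] && [ "$ip" -le "$last" ]
}

in_pool 192.168.56.210 192.168.56.200-192.168.56.250 && echo "inside pool"
in_pool 172.31.2.200 192.168.56.200-192.168.56.250 || echo "outside pool"
```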

[root@master1 test]# vi wordpress-pod-svc.yaml

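Comment out the externalIPs entry at the bottom of the file:

  # externalIPs:
  #   - 172.31.2.200

[root@master1 test]# kubectl apply -f wordpress-pod-svc.yaml

-> Result: the service cannot be reached on the dynamically assigned (DHCP-style) IP.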

[root@master1 test]# vi metallb-l2config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 192.168.56.103-192.168.56.105

[root@master1 test]# kubectl apply -f metallb-l2config.yaml

[root@master1 test]# kubectl describe configmaps -n metallb-system

Name:         config
Namespace:    metallb-system
Labels:       <none>
Annotations:  <none>

Data
====
config:
----
address-pools:
- name: nginx-ip-range
  protocol: layer2
  addresses:
  - 192.168.56.103-192.168.56.105

[root@master1 test]# kubectl delete svc loadbalancer-service-deployment-wordpress

[root@master1 test]# kubectl apply -f wordpress-pod-svc.yaml

[root@master1 test]# kubectl get svc

NAME                                        TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE
kubernetes                                  ClusterIP      10.96.0.1        <none>           443/TCP        40m
loadbalancer-service-deployment-wordpress   LoadBalancer   10.109.156.177   192.168.56.200   80:31493/TCP   9s

-> Not updated: the service still received 192.168.56.200, an address from the old range.

Delete everything and try again:

[root@master1 test]# kubectl delete -f metallb-l2config.yaml

[root@master1 test]# kubectl delete svc loadbalancer-service-deployment-wordpress

[root@master1 test]# kubectl apply -f metallb-l2config.yaml

configmap/config created

[root@master1 test]# kubectl apply -f wordpress-pod-svc.yaml

pod/wordpress-pod unchanged

Now it works.

# vi metallb-test.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx-pod
spec:
  containers:
    - name: nginx-pod-container
      image: 192.168.56.103:5000/nginx:latest

---

apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-service-pod
spec:
  type: LoadBalancer
  # externalIPs:
  #   -
  selector:
    app: nginx-pod
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80

# kubectl apply -f metallb-test.yaml

[root@master1 test]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 48m

loadbalancer-service-deployment-wordpress LoadBalancer 10.111.165.12 192.168.56.103 80:30261/TCP 4m57s

loadbalancer-service-pod LoadBalancer 10.109.73.146 192.168.56.104 80:30803/TCP 12s

[root@master1 test]# curl 192.168.56.104

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

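📌 ConfigMap

A ConfigMap is an API object used to store non-confidential data in key-value pairs. Pods can consume ConfigMaps as environment variables, command-line arguments, or as configuration files in a volume.

A ConfigMap lets you decouple environment-specific configuration from your container images, so that your applications are easily portable.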

📙

[root@master1 ~]# mkdir configmap && cd $_

[root@master1 configmap]# vi configmap-dev.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: config-dev
  namespace: default
data:
  DB_URL: localhost
  DB_USER: myuser
  DB_PASS: mypass
  DEBUG_INFO: debug

[root@master1 configmap]# kubectl apply -f configmap-dev.yaml

[root@master1 configmap]# kubectl describe configmaps config-dev

# vi deployment-config01.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: configapp
  labels:
    app: configapp
spec:
  replicas: 1
  selector:
    matchLabels:
      app: configapp
  template:
    metadata:
      labels:
        app: configapp
    spec:
      containers:
        - name: testapp
          image: 192.168.56.103:5000/nginx:latest
          ports:
            - containerPort: 8080
          env:
            - name: DEBUG_LEVEL
              valueFrom:
                configMapKeyRef:
                  name: config-dev
                  key: DEBUG_INFO
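Once the Deployment is applied, the value from the ConfigMap should be visible inside the container as `DEBUG_LEVEL`. A sketch for checking that, assuming `configmap-dev.yaml` has been applied and `deployment-config01.yaml` is in the current directory; `pod_env` is a hypothetical helper written for this example.

```shell
# Print one environment variable from a pod of a Deployment.
# pod_env is a hypothetical helper; usage: pod_env DEPLOYMENT VARIABLE
pod_env() {
  kubectl exec "deploy/$1" -- printenv "$2"
}

# Only talk to the cluster when kubectl is installed.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f deployment-config01.yaml
  kubectl rollout status deployment/configapp
  # Should print the DEBUG_INFO value stored in config-dev.
  pod_env configapp DEBUG_LEVEL
fi
```

Values injected through `env` are read when the container starts, so a later change to the ConfigMap only shows up after the pod is recreated.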

📌 Miscellaneous

⭐️ Ingress

Implements L7 (application-layer) load balancing inside Kubernetes.

Similar to an ALB: path-based routing.
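Path-based routing looks roughly like the manifest below. This is a hedged sketch only: it assumes an ingress controller is already installed in the cluster, which these notes do not cover, and the Service names `web-svc` and `api-svc` are placeholders. The script writes the manifest to a file so it can be reviewed before applying.

```shell
# Write a sample Ingress that routes by URL path. web-svc and api-svc are
# placeholder Service names; an ingress controller must already be running.
cat > ingress-example.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: path-routing-example
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: web-svc
                port:
                  number: 80
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: api-svc
                port:
                  number: 80
EOF

# Review the file, then apply it with: kubectl apply -f ingress-example.yaml
cat ingress-example.yaml
```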

⭐️ Mounting an NFS share by hand (the basic way)

[root@worker1 ~]# mount -t nfs 192.168.1.190:/nfs_shared /mnt # mount

[root@worker1 ~]# umount /mnt # unmount

⭐️ RWX,RWO

ReadWriteMany ; RWX

ReadWriteOnce ; RWO