💡 0. Abstract

본고는 대량의 데이터로 부터 단어의 연속적인 벡터표현을 계산하기 위한 두 개의 새로운 모델 구조를 제안한다. 이 표현들의 성능은 단어 유사도로 측정되며, 이 결과는 이전에 가장 좋은 성능을 냈던 다른 유형의 신경망구조를 기반으로한 기술과 비교한다. 본고는 매우 작은 계산 복잡도로 큰 성능 향상을 보여준다. 다시말해, 1.6 billion 개의 단어 데이터셋으로 부터 높은 품질의 단어 벡터를 배우는 데에 하루가 채 걸리지 않는다. 더욱이, 구문 유사도와 의미 유사도를 측정하기 위해 이 벡터들이 최첨단의 테스트셋을 제공한다는 것을 보여준다.

💡 1. Introduction

많은 NLP systems and techniques들이 단어를 atomic unit(원자 요소)로 다룬다. 즉, 단어 간의 유사성에 대한 개념이 없다는 것이다. 이러한 방식은 단순하고, robust하며, '많은 데이터를 가지고 훈련된 단순한 모델'이 '적은 데이터를 가지고 훈련된 복잡한 모델'보다 뛰어난 것이 관찰되는 등의 다양한 장점들때문에 자주 사용된다. 그 예로 N-gram model을 말할 수 있는데, 오늘날의 N-Gram은 사실상 모든 데이터에 대해 훈련이 가능하다. 그러나 많은 면에서 제약을 가진다.

N-Gram model

n-gram은 n개의 연속적인 단어 나열을 의미한다. 다시 말해, 갖고 있는 코퍼스에서 n개의 단어 뭉치 단위로 끊은 것을 하나의 토큰으로 간주한다.

📌 "An adorable little boy is spreading smiles"라는 문장이 있을 때, 각 n에 대해서 n-gram을 전부 구해보면 다음과 같다.

- unigrams : an, adorable, little, boy, is, spreading, smiles

- bigrams : an adorable, adorable little, little boy, boy is, is spreading, spreading smiles

- trigrams : an adorable little, adorable little boy, little boy is, boy is spreading, is spreading smiles

- 4-grams : an adorable little boy, adorable little boy is, little boy is spreading, boy is spreading smiles

n이 1일 때는 유니그램(unigram), 2일 때는 바이그램(bigram), 3일 때는 트라이그램(trigram)이라고 명명하고 n이 4 이상일 때는 gram 앞에 그대로 숫자를 붙여서 명명한다.

최근, 머신러닝 기술의 진보와 함께 매우 큰 데이터셋에 더욱 복잡한 모델을 훈련시키는 것이 가능해졌고, 이는 단순한 모델들의 성능을 뛰어넘었다. 아마 가장 성공적인 concept은 단어의 distributed representation을 사용 한 것이다. 예를 들어, 언어 모델에 기반한 Neural Network(인공신경망)는 N-gram model을 능가하는 성능을 보여줬다.

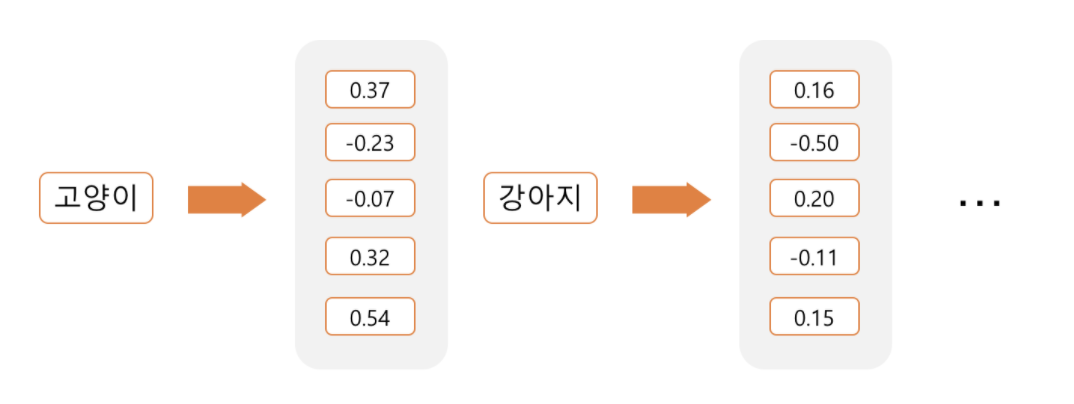

Distributed Representation

각각의 속성을 독립적인 차원으로 나타내지 않고, 우리가 정한 차원으로 대상을 대응시켜서 표현한다. 예를 들어, 해당 속성을 5차원으로 표현할 것이라고 정하면 그 속성을 5차원 벡터에 대응(embedding)시키는 것이다.

임베딩된 벡터는 더이상 sparse하지 않다. One-hot encoding처럼 대부분이 0인 벡터가 아니라, 모든 차원이 값을 갖고 있는 벡터로 표현이 된다. ‘Distributed’라는 말이 붙는 이유는 하나의 정보가 여러 차원에 분산되어 표현되기 때문이다. Sparse representation에서는 각각의 차원이 각각의 독립적인 정보를 갖고 있지만, Distribution representation에서는 하나의 차원이 여러 속성들이 버무려진 정보를 들고 있다. 즉, 하나의 차원이 하나의 속성을 명시적으로 표현하는 것이 아니라 여러 차원들이 조합되어 나타내고자 하는 속성들을 표현하는 것이다.

1. Goals of the Paper

논문의 주 목적은 수 억개의 단어로 구성된 매우 큰 데이터에서 퀄리티 높은 단어 벡터를 학습할 수 있는 기술들을 소개하는 데에 있다. 이제껏 제안된 architecture 중에 어떤 것도 수백만개 단어를 학습하는 것에 성공하지 못했으며, 벡터의 크기도 50~100 정도밖에 사용하지 못했다.

본고는 비슷한 단어들은 가까이에 있고, multiple degrees of similarity를 갖는다는 가정 하에서 vector representations의 퀄리티를 측정하는 기술을 제안한다. 이는 굴절어(라틴어 등)의 문맥에서 먼저 관측되었다. 예를 들어 유사한 단어를 찾을 때, 명사는 다양한 어미를 가지는데 원래의 벡터 공간의 subspace에서 비슷한 어미를 갖는 단어들을 찾을 수 있다. 놀랍게도, word representation의 유사도는 단순한 synatactic regularities(구문 규칙)을 넘어선다. 단어 벡터에서 대수적 연산으로 word offset technique을 사용하면 은 이라는 결과가 나온다.

이 논문에서는 단어 사이의 선형 규칙을 보존하는 새로운 모델 구조를 만들어서 vector representation 연산의 정확도를 극대화 시킬 것이다. 구문 규칙과 의미 규칙을 측정하기 위해 이해하기 쉬운 새로운 테스트셋을 만들었고, 높은 정확도로 규칙들이 학습되는 것을 보였다. 또한, 훈련 시간과 정확도가 단어 벡터의 차원과 training 데이터의 양에 얼마나 의존하는지에 대해 이야기 하고자 한다.

2. Previous Work

이전에도 단어를 연속적인 벡터로 표현하는 방법을 사용했으며, 그 중에서도 NNLM(neural network language model)에 관한 논문에서 제안한 것들이 잘 알려져 있다.A neural probabilistic language model 논문에서 제시된 모델은 Linear Projection Layer와 Non-Linear Hidden Layer 를 기반으로 Feedforward Neural Network를 통해 단어 벡터 표현과 통계학적인 언어 모델의 결합을 학습하는데 사용된다.

또 다른 흥미로운 구조인 NNLM은 Language Modeling for Speech Recognition in Czech, Neural network based language models for higly inflective languages 논문에서 제시한다. 먼저, 단어 벡터들은 single hidden layer를 갖는 Neural network에 의해 학습 된다. 그 단어 벡터들은 NNLM을 학습하는데 사용되므로 단어 벡터들은 비록 전체 NNLM을 구성하지 않아도 학습된다. 이 작업을 통해 직접적으로 구조를 확장하여, 단어 벡터가 간단한 모델에 의해 학습되어지는 첫번째 단계에 주목한다.

이 단어 벡터들은 많은 NLP program의 엄청난 향상과 단순화에 사용될 수 있을 것이다. 단어 벡터의 예측은 다른 모델 구조을 사용하는데 실행되고, 다양한 단어 corpora를 학습한다. 단어 벡터 결과의 일부는 미래 연구와 비교를 위해 사용 가능하게 된다. 하지만 이 구조들은 학습을 하기 위해 매우 계산 복잡도가 커지며 비용이 많이 든다.

💡 2. Model Architectures

LSA와 LDA를 포함한 많은 다양한 종류의 모델들이 단어의 계속적인 표현을 위해 제안되었다. 본고에서는 인공신경망을 통해 학습된 단어의 distributed representation에 주목할 것이다. Strategies for Training Large Scale Neural Network Language Models와 비슷하게, 모델의 계산 복잡성을 모델을 완전히 훈련시키기 위해 액세스 해야 하는 매개 변수의 수로 정의한다. 다음으로, 정확도를 극대화하기 위해 계산 복잡도를 최소화 시킨다.

Training comprexity는 다음과 같이 정의한다.

는 training epochs의 수, 는 training set의 단어 수, 는 각 모델에 따라 다르게 정의됨

- 일반적으로 E = 3~50, T는 10억개 이상으로 정의됨

- 모든 모델은 stochastic gradient descent와 backpropagation을 이용하여 학습

1. Feedforward Neural Net Language Model(NNLM)

A neural probabilistic language model에서 제안된 NNLM 모델은 Input, Projection, Hidden, Output layer 로 구성되어 있다. Input layer에서, 개의 선행 단어들이 1-of- coding으로 인코딩되며, 전체 vocabulary의 크기가 인 경우 크기의 벡터가 주어진다. 벡터 크기를 가진 projection layer가 Input layer가 된다.

NNLM 구조는 projection layer가 촘촘할수록 projection layer와 hidden layer 간의 계산이 복잡하다. 일 때, 는 500~2000이며, 는 500에서 1000개이다. 게다가 hidden layer가 모든 단어의 확률 분포를 계산하는 데에 사용되기에 output layer의 차원은 가 된다. 따라서, 매 training example마다 계산 복잡도는 다음과 같다.

- dominating term은

이를 피하기 위한 몇 가지 실용적인 해결책이 있다(Hierarchical version of softmax, avoiding normalized models). 단어의 이진분류 representation과 함께, output units의 수를 까지 낮출 수 있다. 이에 따라 대부분의 복잡도가 에 의해 발생된다.

본고에서는 Hierachical softmax를 사용하는데, 이는 단어가 Huffman binary tree로 나타나는 방법이다. 단어의 빈도 수가 NNLM에서 class를 얻기위해 잘 작동한다는 이전의 관측들을 따른다. Huffman trees는 빈도 높은 단어들에 짧은 이진 코드를 할당하고, 이는 평가되어야 하는 output unit의 수를 낮춰준다. 균형잡힌 이진 트리는 평가되어야 하는 의 output을 요구하는 반면, Hierachical softmax에 기반한 huffman tree는 에 대해서만을 요구한다. 예를 들어 단어 사이즈가 백만개의 단어라면, 이 결과는 평가에 있어서 속도를 두 배 더 빠르게 한다. 식에서 계산의 병목현상이 일어나는 NNLM에서는 중요한 문제가 아닐지라도, 본고는 hidden layer가 없고 softmax normalization의 효율성에 주로 의존하는 architectures를 제안할 것이다.

2. Recurrent Net Language Model(RNNLM)

Recurrent Neural Net Language Model(RNNLM)은 문맥의 길이(the order of the model N)를 명시해야하는 것과 같은 NNLM의 한계를 극복하기 위해서 생겨났다. 이론적으로 RNN은 더 복잡한 패턴들을 얕은 인공신경망을 이용해서 효율적으로 표현할 수 있다. RNN 모델은 projection layer가 없고, input, hidden, output layer만 있다. 이 모델에 특별한 점은 recurrent matrix가 hidden layer 그 자체와 시간의 흐름의 연결을 갖고 연결되어 있다는 것이다. 이것은 recurrent model이 short term memory를 생성하는 것을 가능하게 하고, 과거의 정보는 이전 단계의 hidden layer의 상태와 현재의 input에 기반하여 업데이트 된 hidden layer의 state로 표현될 수 있다.

RNN model의 훈련 당 복잡도는 다음과 같다.

word represatation 는 hidden layer 와 같은 차원을 갖고, 는 계층적 소프트맥스를 사용해서 로 축소될 수 있다. 대부분의 복잡도는 에서 나온다.

3. Parallel Training of Neural Networks

거대한 data set에 대해 NNLM과 이 논문에서 제안된 새로운 모델들을 포함하여 DistBelief라고 불리는 top of large-scale distributed framework인 몇몇 모델들을 실행했다. 이 framework는 우리가 Parallel하게 같은 모델을 반복해서 실행할 수 있게 했고, 모든 파라미터를 유지하는 centralized server가 통해 각 replica들은 그것의 gradient의 update와 같았다. 이 parallel train에서, 우리는 Adagrad를 사용한 미니배치 경사하강법을 사용했다. 이 framework에서 100개, 또는 몇백개의 replica를 사용하는 것이 일반적이며, 이는 data center의 다른 기계들의 많은 CPU core를 사용한다.

💡 3. New Log-linear Model

이 섹션에서는 computational complexity를 최소화하면서 distributed representation을 학습하기 위한 두가지 모델 구조를 제안한다. 이전 섹션에서의 main observation은 대부분의 복잡도가 non-linear hidden layer에 의해 생긴다는 것이다.

1. Continuous Bag-of-Words Model

첫번째 제안된 아키텍쳐는 non-linear hidden layer가 제거되고 projection layer가 모든 단어를 위해 공유되는 feedforward NNLM과 비슷하다. 우리는 단어의 순서가 projection에 영향을 끼지치 않기 때문에 이 구조를 bag-of-word 모델이라고 부른다. 게다가, 우리는 미래로부터 단어를 사용한다. 즉, 우리는 input으로 4 future and 4 history words로 log-linear classifier을 구축하여 다음 section에서 소개할 task에서 가장 좋은 성능을 얻을 수 있었다. 훈련의 복잡도는 다음과 같다.

우리는 이 모델을 앞으로 CBOW라고 부를 것이다. 보통의 bag-of-word 모델과는 다르게, 이것은 context의 continuous distributed representation를 사용한다. input과 projection layer 사이의 가중치 매트릭스는 NNLM과 같은 방식으로 모든 단어 위치를 위해 공유된다.

2. Continuous Skip-gram Model

두번째 아키텍쳐는 CBOW와 비슷하지만, context에 기반해 현재 단어를 예측하는 대신에 같은 문장의 다른 단어에 기반한 단어의 분류를 극대화한다. 더 정확히 말하자면, 우리는 각 현재의 단어를 continuous projection layer와 함께 log-linear classifier에 사용하고, 현재 단어 앞뒤의 특정 범위안의 단어를 예측한다. range를 증가시키면 단어 벡터의 quality을 향상시키는 것을 발견했으나, 이는 계산 복잡도를 증가시킨다. 거리가 먼 단어는 가까운 단어보다 현재 단어와 연관성이 떨어질 것이므로 훈련 세트에서 이런 단어들은 샘플링을 적게 함으로써 가중치를 줄였다. 이 아키텍쳐의 훈련 복잡도는 다음의 식에 비례한다.

: 단어의 최대 거리

💡 4. Result

- Semantic acc : Skip-gram > CBOW > NNLM < RNNLM

- Syntactic acc : CBOW > Skip-gram > NNLM < RNNLM

- Total acc : Skip-gram > CBOW > NNLM

Reference

https://wikidocs.net/21692

https://dreamgonfly.github.io/blog/word2vec-explained/