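Oddly, this part never feels fun no matter how many times I look at it. But I end up doing it anyway. You see as much as you know..

I fell behind, but the write-up is finally done.. I think I now have a firm grasp of the concepts of Tokenization and Word Embedding.

From now on I plan to cut the chit-chat and keep more systematic notes. Challenge accepted!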

Tokenization

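Concept

Tokenization (or tokenizing) is the process of splitting a given text into tokens. A token here is a single unit that an NLP model processes at each timestep. The form of a token depends on the setting, but tokens are usually split along units of meaning.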

Classification by level

Word-level Tokenization

Taking English as the example for splitting by word, text is generally split on whitespace.

I love AI → ['I', 'love', 'AI']

Korean has particles, roots, affixes, and so on, so words are sometimes split by morpheme instead (the example below means "I like AI").

나는 인공지능이 좋다 → ['나', '는', '인공지능', '이', '좋다']

However, any word missing from the predefined vocab is mapped to the UNK token, which is known as the Out-of-Vocabulary (OOV) problem.
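As a minimal sketch of the OOV problem, the snippet below splits on whitespace and maps tokens to indices. The VOCAB dictionary, the [UNK] spelling, and the function names are made up for illustration and do not come from any particular library.

```python
# Hypothetical toy vocabulary; real vocabularies are built from a training corpus.
VOCAB = {"[UNK]": 0, "I": 1, "love": 2, "AI": 3}

def word_tokenize(text):
    """Split on whitespace (a simplification that ignores punctuation)."""
    return text.split()

def encode(tokens, vocab):
    """Map each token to its index, falling back to [UNK] for unseen words."""
    return [vocab.get(tok, vocab["[UNK]"]) for tok in tokens]

tokens = word_tokenize("I love AI")
print(tokens)                 # ['I', 'love', 'AI']
print(encode(tokens, VOCAB))  # [1, 2, 3]

oov_tokens = word_tokenize("I love NLP")
print(encode(oov_tokens, VOCAB))  # [1, 2, 0], 'NLP' is out of vocabulary
```

Every unseen word collapses to the same index 0, so the model cannot tell one unknown word from another.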

Character-level Tokenization

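Tokens are split at the character level. Different languages that share the same script can then be handled with the same character tokens.

Also, once every character is registered, even an unseen word is still a combination of known characters, so the OOV problem does not occur.

However, the number of tokens for a given text becomes very large.

In addition, a single character carries little meaning on its own, so models trained with this tokenization tend to perform worse.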
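To make the token-count trade-off concrete, here is a small sketch that compares word-level and character-level splits of the same sentence. The function name char_tokenize is an assumption for illustration, and treating the space as a token is just one possible choice.

```python
def char_tokenize(text):
    """Treat every character (including spaces) as one token."""
    return list(text)

text = "I love AI"
word_tokens = text.split()
char_tokens = char_tokenize(text)

print(char_tokens)       # ['I', ' ', 'l', 'o', 'v', 'e', ' ', 'A', 'I']
print(len(word_tokens))  # 3
print(len(char_tokens))  # 9
```

Even for this three-word sentence the sequence is three times longer, and the gap grows with longer words.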

Subword-level Tokenization

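A subword is a smaller unit within a single word that still carries meaning.

For example, the word preprocessing can be split into pre-, process, and -ing.

However, registering every possible subword in a dictionary in advance is impractical, so several methods exist for learning them. Depending on the subword tokenization method, such as BPE (Byte-Pair Encoding), WordPiece, or SentencePiece, the resulting subword units differ.

Subword tokenization uses fewer tokens on average than character-level tokenization and largely avoids the OOV problem, because an unseen word can fall back to smaller pieces. It therefore addresses the drawbacks of both previous approaches. Since the pieces tend to align with meaningful units, models using it generally reach higher accuracy.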
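The sketch below shows how a word could be split once a subword vocabulary exists. It uses a greedy longest-match-first rule, which is only one possible strategy, and the SUBWORDS set is hand-picked for this example rather than learned by BPE, WordPiece, or SentencePiece.

```python
# Hypothetical subword vocabulary, chosen by hand for illustration only.
SUBWORDS = {"pre", "process", "ing", "un", "ed"}

def subword_tokenize(word, vocab):
    """Greedy longest-match-first split; unmatched characters become single-char tokens."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary or is a single character.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        tokens.append(word[start:end])
        start = end
    return tokens

print(subword_tokenize("preprocessing", SUBWORDS))  # ['pre', 'process', 'ing']
print(subword_tokenize("unprocessed", SUBWORDS))    # ['un', 'process', 'ed']
```

The single-character fallback is what keeps unseen words from turning into UNK, at the cost of longer sequences for those words.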

Byte-Pair Encoding

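It is a representative example of subword-level tokenization. Let's walk through the process with an example.

Suppose we have the word list ['low', 'lower', 'newest', 'widest'].

- Build a subword list of individual characters.

['l', 'o', 'w', 'e', 'r', 'n', 's', 't', 'i', 'd']

- Among pairs of adjacent characters, add the most frequent pair as a new token.

In this example lo appears twice, which is the highest count (ow, we, es, and st also appear twice, so the tie is broken in favor of lo here). This pair is added to the subword list as a token.

['l', 'o', 'w', 'e', 'r', 'n', 's', 't', 'i', 'd', 'lo']

- Treat the added pair as a single character and repeat the process above.

The stopping condition can be a fixed number of loop iterations, or the subword list reaching a given size.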
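The steps above can be sketched as a short training loop. This is a simplified illustration, not a production BPE implementation: the function name train_bpe is made up, every word is given frequency 1, and ties between equally frequent pairs are broken in favor of the pair seen first, which is an assumption of this sketch.

```python
from collections import Counter

def train_bpe(words, num_merges):
    """Learn up to `num_merges` merges; ties go to the pair seen first."""
    # Each word starts as a tuple of single characters.
    corpus = Counter(tuple(word) for word in words)
    vocab = []
    for symbols in corpus:
        for s in symbols:
            if s not in vocab:
                vocab.append(s)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # first maximum wins on ties
        merges.append(best)
        vocab.append(best[0] + best[1])
        # Rewrite every word with the chosen pair merged into one symbol.
        new_corpus = Counter()
        for symbols, freq in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_corpus[tuple(merged)] += freq
        corpus = new_corpus
    return vocab, merges

vocab, merges = train_bpe(["low", "lower", "newest", "widest"], num_merges=2)
print(merges)  # [('l', 'o'), ('lo', 'w')]
print(vocab)
```

Here the stopping condition is the number of merges; checking len(vocab) against a target size inside the loop would give the other stopping condition mentioned above.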

WordPiece

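It is similar to Byte-Pair Encoding, but when choosing a pair of adjacent tokens it uses a likelihood based on frequency rather than the raw frequency. The algorithm was created at Google and used to train BERT. Because the training code has not been released, most sources explain it only in theory.

Again, suppose we have the word list ['low', 'lower', 'newest', 'widest'].

- Build a subword list of individual characters.

['l', '##o', '##w', '##e', '##r', 'n', '##s', '##t', 'w', '##i', '##d']

The difference from BPE here is that any character that does not start a word is marked with a prefix such as ##.

- Unlike BPE, the pair to add to the subword list is chosen by maximum likelihood rather than by how often the pair of adjacent characters appears. Following the Hugging Face course, the score of a pair is score = freq(pair) / (freq(first) × freq(second)). With this score, no matter how often a pair appears, it gets a low score if its individual elements are even more frequent.

- Repeat the process above until the vocabulary reaches a given size, or for a specified number of iterations.

The WordPiece algorithm differs from BPE in how the score is computed. Judging from the formula alone, it feels like the base form low would get a higher score than the comparative and superlative forms lower and lowest, but I'm not exactly sure..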
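To see how the score behaves, here is a small sketch that computes it for the same word list. Since the original training code is not public, the formula score = freq(pair) / (freq(first) × freq(second)) follows the description in the Hugging Face course, and the function names and the choice of frequency 1 for every word are assumptions of this example.

```python
from collections import Counter

def wordpiece_split(word):
    """First character stays as is; every later character gets the '##' prefix."""
    return [c if i == 0 else "##" + c for i, c in enumerate(word)]

def pair_scores(word_freqs):
    """score(a, b) = freq(ab) / (freq(a) * freq(b))."""
    token_freq, pair_freq = Counter(), Counter()
    for word, freq in word_freqs.items():
        tokens = wordpiece_split(word)
        for tok in tokens:
            token_freq[tok] += freq
        for pair in zip(tokens, tokens[1:]):
            pair_freq[pair] += freq
    return {
        pair: count / (token_freq[pair[0]] * token_freq[pair[1]])
        for pair, count in pair_freq.items()
    }

scores = pair_scores({"low": 1, "lower": 1, "newest": 1, "widest": 1})
print(scores[("l", "##o")])    # 0.5, pair seen twice and each part seen twice
print(scores[("##w", "##e")])  # 2 / (3 * 4), frequent parts pull the score down
print(scores[("w", "##i")])    # 1.0, pair seen once but so is each part
```

In this toy list the rare pair (w, ##i) outscores the more frequent (l, ##o), which is exactly the behavior that separates WordPiece from BPE.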

Unigram

For ease of calculation, the following corpus is used as the example here.

{"hugs bun": 4, "hugs pug": 1, "hug pug pun": 4, "hug pun": 6, "pun": 2}

Each key is an example sentence and each value is its frequency.

- Split into words on whitespace. This can be seen as word-level tokenization, but the words themselves are not used as tokens.

{"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}

- Add every strict substring of each word (every substring shorter than the word itself) to the vocab.

For example, hug contributes h, u, g, hu, and ug, and hug itself enters the vocab as a substring of hugs.

['h', 'u', 'g', 'hu', 'ug', 'p', 'pu', 'n', 'un', 'b', 'bu', 's', 'hug', 'gs', 'ugs']

- Count how often each token appears.

{'h': 15, 'u': 36, 'g': 20, 'hu': 15, 'ug': 20, 'p': 17, 'pu': 17, 'n': 16, 'un': 16, 'b': 4, 'bu': 4, 's': 5, 'hug': 15, 'gs': 5, 'ugs': 5}

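- Compute the probability of generating each word from combinations of tokens. The token probabilities are assumed to be independent, so the probability of a segmentation is the product of its token probabilities. A token's probability is its frequency divided by the total frequency of all tokens, which is 210 here.

For example, the probabilities of all possible segmentations of pug are

['p', 'u', 'g'] = 17/210 × 36/210 × 20/210 ≈ 0.001322, ['pu', 'g'] = 17/210 × 20/210 ≈ 0.007710, and ['p', 'ug'] = 17/210 × 20/210 ≈ 0.007710.

As a result, pug should be tokenized as pu g or p ug.

Computing the probabilities for every word gives the following.

'hug': ['hug'] (prob 0.071428)

'pug': ['pu', 'g'] (prob 0.007710)

'pun': ['pu', 'n'] (prob 0.006168)

'bun': ['bu', 'n'] (prob 0.001451)

'hugs': ['hug', 's'] (prob 0.001701)

- Use the word probabilities to compute the current score. The score is the negative log likelihood, with each word weighted by its frequency.

The current score is therefore 10 × (−log 0.071428) + 5 × (−log 0.007710) + 12 × (−log 0.006168) + 4 × (−log 0.001451) + 5 × (−log 0.001701) ≈ 169.8 (using the natural log).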

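- Remove the p% of tokens in the current vocab that are needed least. The tokens to remove are those whose removal increases the current score (the loss) the least.

For example, tokenizing pug as p ug instead of pu g gives the same score, so pu can be judged safe to delete from the current vocab.

Here the p in p% is a hyperparameter to be set appropriately.

- Repeat this process until the vocab reaches the desired size, or for the desired number of iterations.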
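The probability and pruning steps can be checked with a short sketch. It uses the token frequency table from the walkthrough (p and pu both have frequency 17, total 210) and the natural log. The function names are made up, the search simply tries every split point rather than using an optimized algorithm, and the total of 210 is kept fixed after a token is dropped, which is a simplification.

```python
import math

# Token and word frequencies from the walkthrough (token frequencies sum to 210).
TOKEN_FREQ = {"h": 15, "u": 36, "g": 20, "hu": 15, "ug": 20, "p": 17, "pu": 17,
              "n": 16, "un": 16, "b": 4, "bu": 4, "s": 5, "hug": 15, "gs": 5, "ugs": 5}
WORD_FREQ = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
TOTAL = sum(TOKEN_FREQ.values())  # kept fixed even when a token is dropped

def best_segmentation(word, vocab):
    """Return (probability, tokens) of the most likely split, trying every split point."""
    if not word:
        return 1.0, []
    best = (0.0, None)
    for end in range(1, len(word) + 1):
        piece = word[:end]
        if piece in vocab:
            rest_prob, rest_tokens = best_segmentation(word[end:], vocab)
            if rest_tokens is not None:
                prob = vocab[piece] / TOTAL * rest_prob
                if prob >= best[0]:  # on ties, the longer first piece wins
                    best = (prob, [piece] + rest_tokens)
    return best

def corpus_score(vocab):
    """Negative log likelihood of the corpus under the best split of each word."""
    return sum(freq * -math.log(best_segmentation(word, vocab)[0])
               for word, freq in WORD_FREQ.items())

print(best_segmentation("pug", TOKEN_FREQ)[1])  # ['pu', 'g']

base = corpus_score(TOKEN_FREQ)
without_pu = corpus_score({t: f for t, f in TOKEN_FREQ.items() if t != "pu"})
without_hug = corpus_score({t: f for t, f in TOKEN_FREQ.items() if t != "hug"})
print(round(base, 1))                # 169.8
print(round(without_hug - base, 1))  # 23.5
print(without_pu == base)            # True, dropping 'pu' costs nothing
```

Dropping pu leaves the score unchanged while dropping hug raises it by about 23.5, so pu is the better candidate for removal.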

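The process is long and complicated, so let's look at the pros and cons.

pros: Because it starts from a vocab that considers every substring, all possible token combinations can be formed.

cons: Because every combination is considered, it requires a lot of computation and takes a long time. Also, tokens are assumed to be independent when combined into words, which does not match reality. (Ex. in English, q is followed by u in most cases)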

SentencePiece

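It is a tokenizer trained in an unsupervised manner. It includes both the BPE and Unigram methods, and is said to tokenize text regardless of language.

SentencePiece training is described as a form of distribution estimation (Variational Inference): given observed data (evidence) and model parameters (theta), the distribution P over hypotheses is approximated by Q through the introduction of a variational parameter. The approximation is used because finding the distribution P itself is very difficult.

Distribution estimation can be thought of as follows: when the probability of each number on a die cannot be obtained by carrying out every possible roll, that probability is estimated instead. MLE and MAP are also methods of distribution estimation.

It's difficult, so let's see how to do it in code...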

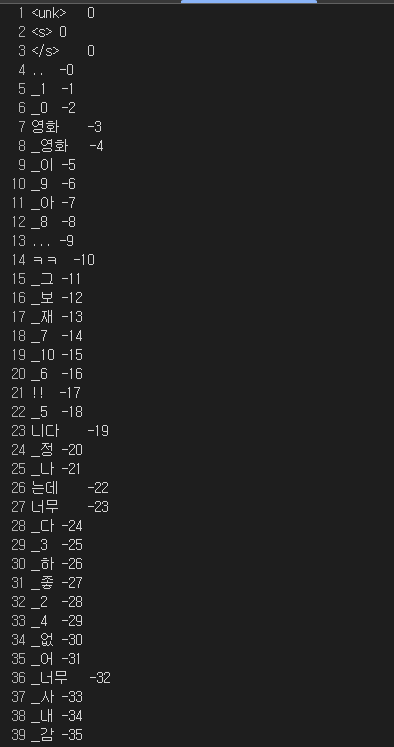

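The sample data is the Naver movie review dataset (Naver Sentiment Movie Corpus, listed in the references).

- First, install the sentencepiece module.

pip install sentencepiece

- Import the SentencePiece module and train on the dataset, then load the trained model.

import sentencepiece as spm

# txt_file_path is a placeholder for the path to the training text file

spm.SentencePieceTrainer.train(input=txt_file_path, model_prefix='mymodel', vocab_size=8000, model_type='bpe')

sp = spm.SentencePieceProcessor(model_file='mymodel.model')

The required arguments are as follows.

input: the path to the txt file. The data can be passed in as is, without separate preprocessing, and tokenization is handled automatically.

model_prefix: the name of the model produced by training. A .model file and a .vocab file with this name are created.

vocab_size: the size of the vocab to build. It sets the number of tokens in the .vocab file above.

model_type: the algorithm to apply. The options are bpe, unigram, char, and word.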

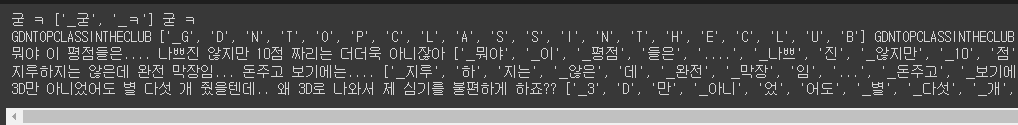

- Encode the test data with the trained SentencePiece model.

sentences = ["example sentence 1", "example sentence 2", ...]

# sentences to tokens

tokens = sp.encode(sentences, out_type=str)

# tokens to sentences

backToSentences = sp.decode(tokens)

The results of encoding and decoding are shown below.

From left to right: the original sentence, the tokens, and the restored sentence.

References

Introduction to Natural Language Processing Using Deep Learning (딥러닝을 이용한 자연어 처리 입문): 13-01 Byte Pair Encoding

Hugging Face: WordPiece tokenization

Hugging Face: Unigram tokenization

velog.io/@gibonki77/SentencePiece

Naver Sentiment Movie Corpus v1.0

Word Embedding

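Concept

After the Tokenization covered earlier, representing each token as a One-Hot Vector based on its assigned index has two characteristics.

1 It is simple, because the index is just written out as a One-Hot vector.

2 It needs as many dimensions as there are tokens, and the distance between any two tokens is the same.

Word Embedding was proposed because of the drawbacks described in 2. The One-Hot Vector representation is called a Sparse Representation, while Word Embedding compresses each token into a vector with far fewer dimensions and is therefore called a Dense Representation.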
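The claim that all One-Hot vectors are equally far apart is easy to check. In the sketch below the three-word vocabulary and the 2-dimensional dense vectors are hand-picked, hypothetical values used only to show the contrast; they are not trained embeddings.

```python
import math

def one_hot(index, size):
    """Vector of zeros with a single 1 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]

def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

vocab = ["cat", "dog", "car"]
vectors = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}
print(euclidean(vectors["cat"], vectors["dog"]))  # sqrt(2)
print(euclidean(vectors["cat"], vectors["car"]))  # sqrt(2) again, no closer or farther

# Hypothetical dense vectors, hand-picked so that related words sit close together.
dense = {"cat": [0.9, 0.1], "dog": [0.8, 0.2], "car": [0.1, 0.9]}
print(euclidean(dense["cat"], dense["dog"]) < euclidean(dense["cat"], dense["car"]))  # True
```

With One-Hot vectors the dimension also grows with the vocabulary (3 here), while the dense vectors stay at a fixed, smaller size.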

Word Embedding methods include LSA, Word2Vec, FastText, and GloVe. Let's look at the most representative one, Word2Vec.

Word2Vec

Distributed Representation

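Earlier, representing a word not as a One-Hot Vector but as a vector with densely packed values was called a Dense Representation. Spreading the meaning of a word across several dimensions within this Dense Representation is called a Distributed Representation.

Distributed Representation starts from the assumption that words appearing in similar contexts have similar meanings. For example, IU (personal bias ON) often appears together with the words pretty and cute, so when pretty and cute are vectorized, the distance between them will be small.

Word2Vec has two training schemes, CBOW and Skip-Gram. CBOW takes the surrounding words as input and predicts the word in the middle, while Skip-Gram takes the center word as input and predicts the surrounding words.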

CBOW

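As an example, take the sentence The cat sits on the mat. With window_size=2, the two words before and the two words after the target word become the surrounding words (context). In other words, there are 2*window_size context words. Pairing each center word with its context words gives the following.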

The: [[pad], [pad], cat, sits]

cat: [[pad], The, sits, on]

sits: [The, cat, on, the]

on: [cat, sits, the, mat]

the: [sits, on, mat, [pad]]

mat: [on, the, [pad], [pad]]

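Building the dataset this way, by moving a window that covers window_size words on each side across the sentence one word at a time, is called a sliding window.

Here, center words that cannot fill the full window were still paired and given padding. If the corpus is large enough, these words can simply be dropped. Otherwise, to keep the context length the same for every center word, extra words can be added instead, as in The: [cat sits on the].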
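The sliding window with padding can be sketched in a few lines. The function name cbow_pairs and the "[pad]" spelling are assumptions for this example; the pairs it produces match the list above.

```python
def cbow_pairs(tokens, window_size, pad="[pad]"):
    """Return (center, context) pairs; context always has 2 * window_size entries."""
    padded = [pad] * window_size + tokens + [pad] * window_size
    pairs = []
    for i, center in enumerate(tokens):
        j = i + window_size  # position of the center word inside `padded`
        context = padded[j - window_size:j] + padded[j + 1:j + window_size + 1]
        pairs.append((center, context))
    return pairs

pairs = cbow_pairs("The cat sits on the mat".split(), window_size=2)
for center, context in pairs:
    print(center, context)
```

Dropping the padded samples instead would amount to keeping only the pairs whose context contains no "[pad]" entry.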

Once the dataset has been preprocessed this way, it is fed into the model.

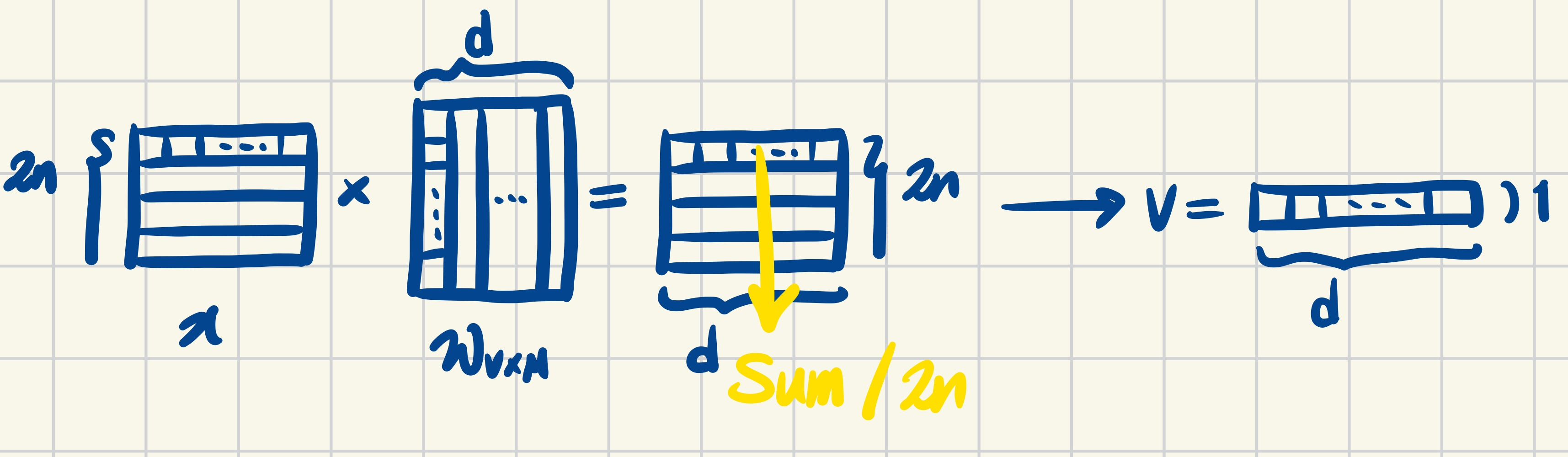

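- Project the context words using W (V×M). Here V is the dimension of the input vector x, and M is the dimension of the projection layer.

A closer look at the projection is shown in the figure below.

v is the vector at the projection layer, obtained by averaging the projected vectors of the context words. It has shape 1xd (d is the M described above). Because there is only one hidden layer, the model is called a shallow neural network. The projection also acts as a look-up table: multiplying a one-hot vector by W simply selects the row of W that corresponds to that word. No activation function is used here, both to keep the relationships between words linear and to avoid losing distance information between words by adding nonlinearity.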

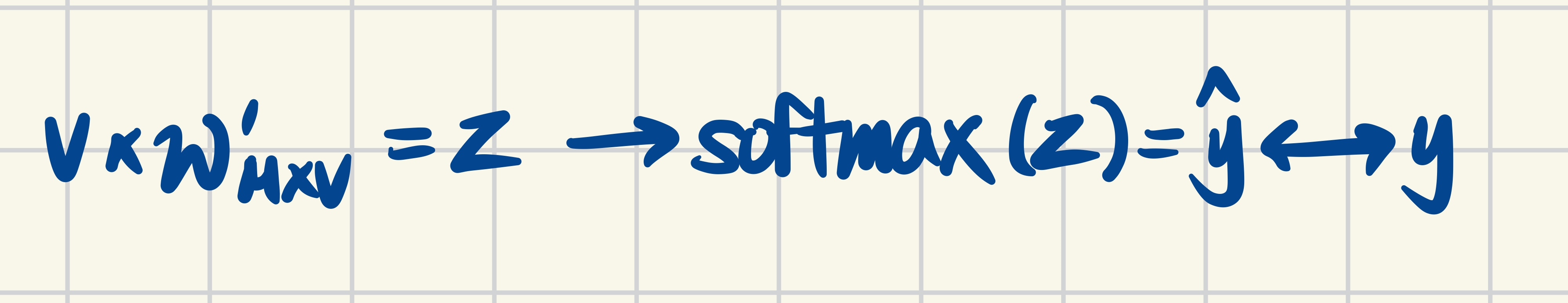

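- To map back to the vocabulary, the prediction is computed using W' (M×V). W and W' are not transposes of each other; they are separate weights.

z has shape 1xV and is converted into relative probabilities through softmax. These are compared with the true answer using cross entropy loss as the loss function, and W and W' are trained.

After training, both W and W' are sometimes used as embedding vectors, and sometimes only the rows of W, each of dimension M, are used.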
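A single CBOW forward pass can be sketched in plain Python. This covers only the forward computation (lookup, average, projection back to the vocabulary, softmax, cross entropy) and leaves out the gradient update. The sizes V=5 and M=3, the random initialization, the name W_out for W', and the lowercased vocabulary ids are all assumptions for illustration.

```python
import math
import random

def cbow_forward(context_ids, target_id, W, W_out):
    """One CBOW forward pass: lookup, average, project back, softmax, cross entropy."""
    proj_dim = len(W[0])
    vocab_size = len(W_out[0])
    # Multiplying a one-hot vector by W is the same as picking a row of W.
    rows = [W[i] for i in context_ids]
    v = [sum(row[k] for row in rows) / len(rows) for k in range(proj_dim)]  # 1 x M average
    # z = v @ W_out gives one score per vocabulary entry (1 x V).
    z = [sum(v[k] * W_out[k][j] for k in range(proj_dim)) for j in range(vocab_size)]
    # Softmax, shifted by max(z) for numerical stability.
    shift = max(z)
    exps = [math.exp(s - shift) for s in z]
    total = sum(exps)
    probs = [e / total for e in exps]
    loss = -math.log(probs[target_id])  # cross entropy against a one-hot target
    return probs, loss

random.seed(0)
V, M = 5, 3  # toy vocabulary size and projection size
W = [[random.uniform(-0.5, 0.5) for _ in range(M)] for _ in range(V)]      # V x M
W_out = [[random.uniform(-0.5, 0.5) for _ in range(V)] for _ in range(M)]  # M x V, separate from W

# Ids for the assumed vocabulary ['the', 'cat', 'sits', 'on', 'mat'].
# Center word 'sits' (id 2) with context 'the', 'cat', 'on', 'the'.
probs, loss = cbow_forward(context_ids=[0, 1, 3, 0], target_id=2, W=W, W_out=W_out)
print(len(probs), round(sum(probs), 6))  # 5 1.0
print(loss > 0)                          # True
```

After training, row i of W would serve as the M-dimensional embedding of word i.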

Skip-Gram

Skip-Gram predicts the surrounding words from the center word.

Reusing the earlier example sentence The cat sits on the mat, the pairs look like this.

sits: [The]

sits: [cat]

sits: [the]

sits: [on]

on: [cat]

on: [sits]

on: [the]

on: [mat]

Compared with CBOW, each sample now has a single context word instead of 2*window_size of them. As a result, there is no averaging step at the projection layer.
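The Skip-Gram pairs can be generated the same way as the CBOW ones, except that each neighbor becomes its own sample. In this sketch the function name skipgram_pairs is made up, and words near the edges simply get fewer pairs instead of padding, which is an assumption of the example.

```python
def skipgram_pairs(tokens, window_size):
    """Return (center, context_word) pairs, one pair per neighbor inside the window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window_size)
        hi = min(len(tokens), i + window_size + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

pairs = skipgram_pairs("The cat sits on the mat".split(), window_size=2)
print([ctx for center, ctx in pairs if center == "sits"])  # ['The', 'cat', 'on', 'the']
print([ctx for center, ctx in pairs if center == "on"])    # ['cat', 'sits', 'the', 'mat']
print(len(pairs))                                          # 18
```

The same six-word sentence yields 18 samples here versus 6 for CBOW, so each center word is updated against every neighbor separately.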

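According to published results, Skip-Gram is generally known to perform better than CBOW. One way to think about this is that, because Skip-Gram is more complex, each word learns more about its relationships with other words.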