Who's studying Attention while listening to NewJeans' "Attention"? Me, that's me~

I got so hooked while writing this up that I had NewJeans on repeat all day~🎵🔊 (TMI)

💡

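I didn't expect to be learning the Transformer already this week...

Back in my undergrad research intern days (?), I once tried reading Attention Is All You Need, but I never really got a grip on Q, K, and V, and I remember moving on with only a fuzzy understanding.

Throughout the lecture, the instructor explained exactly the parts where I was going "Then what about this?" or "Huh, what about that?👀", as if they had seen it coming, which was amazing and I'm grateful. How much will I have to study before I can anticipate things like that... lol.. T_T

Still, learning something new is always fun!

Next week I'm doing a paper review with my teammates, so I'll take the concepts I built up in the lecture, study them again through the paper, and make them really stick. This time I'm definitely walking away with a solid grasp of Attention!!🔥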

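Assumptions of Linear Regression

Before we start!!! I've added notes on linearity, independence, homoscedasticity, and normality to 🔗Week 1, so follow the link to check them out!

It isn't central to today's topic; I linked it to last week's post as a way of reviewing what I learned.

This is just for my own records, so feel free to skip it!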

Transformer Introduction

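The Transformer model, introduced in the paper ⭐Attention Is All You Need⭐, has an encoder-decoder structure and captures context and meaning by extracting the relationships between the words of sequential data such as sentences. Models for sequential data used to be RNN-based (LSTM, GRU, etc.) or convolution-based, but the Transformer breaks away from these structures and relies purely on self-attention.

To understand the Transformer properly, my teammates and I decided to review the paper Attention Is All You Need. Before that, I want to walk step by step through the background that led to the Transformer.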

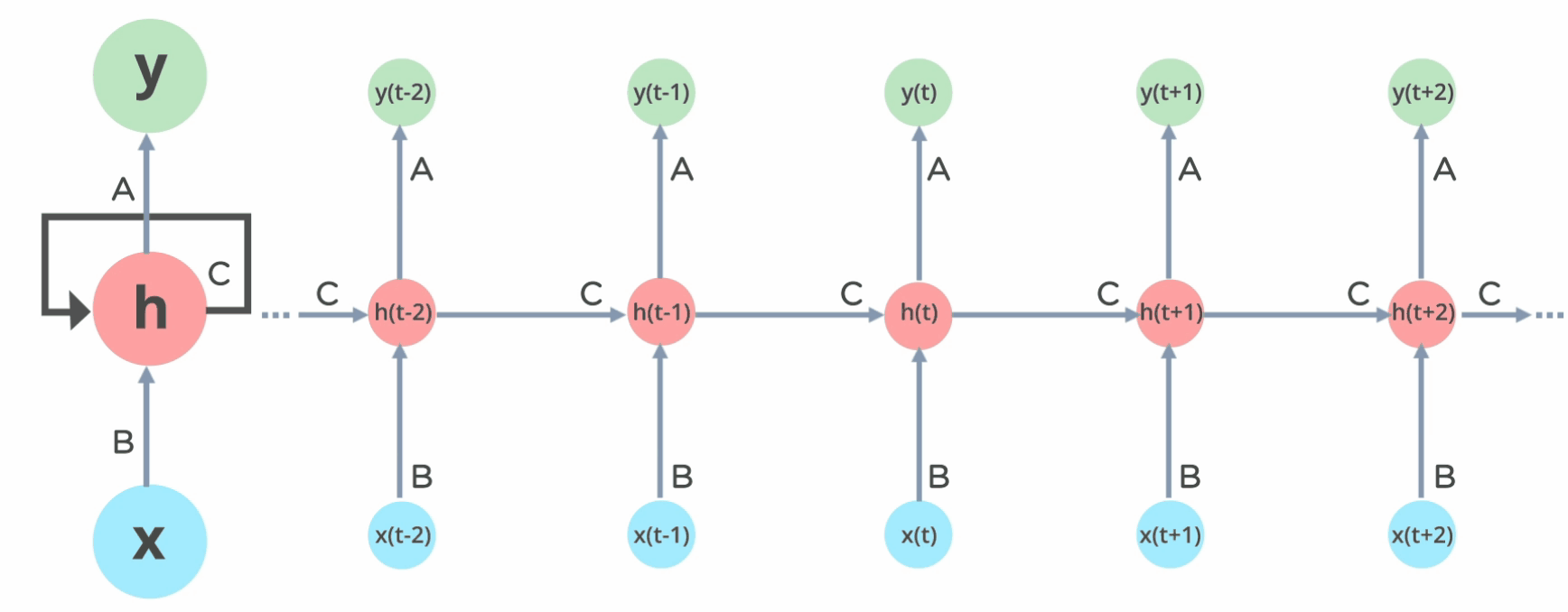

RNN(Recurrent Neural Network)

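This is the original model for processing sequential data.

Looking at the first cell in the figure, the result of feeding the input x into the hidden state is passed back into the hidden state. This is the recurrent part.

The hidden state from step t-1 is passed to the hidden state at step t, so information from the past carries forward to later timesteps. This is what lets an RNN handle sequential data.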

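An RNN uses three weight matrices: $W_{xh}$ when the input $x_t$ enters the hidden state, $W_{hh}$ when the hidden state is passed on to the next hidden state, and $W_{hy}$ when the hidden state produces the output:

$$h_t = \tanh(W_{xh}x_t + W_{hh}h_{t-1} + b_h), \qquad y_t = W_{hy}h_t + b_y$$

Each of these matrices is shared across all timesteps, but the three are different matrices and are not shared with one another.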
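To make the weight sharing concrete, here is a minimal plain-Python sketch of the recurrence above. It assumes the $W_{xh}$, $W_{hh}$, $W_{hy}$ naming used here, omits the biases, and uses made-up toy numbers; it is an illustration, not a reference implementation.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (a list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_forward(xs, W_xh, W_hh, W_hy, h0):
    """Run a vanilla RNN over the input sequence xs (biases omitted).

    The same W_xh, W_hh and W_hy are reused at every timestep,
    but they are three separate matrices.
    """
    h = h0
    hs, ys = [], []
    for x in xs:
        # h_t = tanh(W_xh x_t + W_hh h_{t-1})
        pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
        h = [math.tanh(p) for p in pre]
        # y_t = W_hy h_t
        ys.append(matvec(W_hy, h))
        hs.append(h)
    return hs, ys


# Hypothetical toy sizes: input size 1, hidden size 2, output size 1.
W_xh = [[1.0], [0.0]]
W_hh = [[0.5, 0.0], [0.0, 0.5]]
W_hy = [[1.0, 1.0]]
hs, ys = rnn_forward([[1.0], [0.0]], W_xh, W_hh, W_hy, h0=[0.0, 0.0])
print(hs)
print(ys)
```

At the second step the input is 0, yet $h_2$ is not zero: the first input still reaches it through $W_{hh}$, which is exactly the "past information carried forward" described above.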

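This is where the RNN runs into trouble. The length of the input sequence acts like the depth of the model: every time the sequence moves one step forward through the hidden state, the hidden-to-hidden weight $W_{hh}$ is multiplied in again. So during backpropagation $W_{hh}$ gets multiplied over and over, and depending on its magnitude the Vanishing/Exploding Gradient Problem appears.

First, the Vanishing Gradient Problem occurs when $W_{hh}$ is small (magnitude below 1, close to 0). Going back from the last timestep $t$ to the first one, $W_{hh}$ is multiplied $t-1$ times, so the gradient reaching the earlier steps keeps shrinking and information is no longer passed along properly. The result is that past information gets lost.

Conversely, the Exploding Gradient Problem occurs when $W_{hh}$ is very large. Large values are multiplied repeatedly during backpropagation, so the weights are updated by huge amounts and training becomes unstable. The result is that the model diverges.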
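A toy calculation shows why repeated multiplication is the problem. It assumes a scalar, linear RNN ($h_t = w_{hh}h_{t-1} + x_t$, no $\tanh$), so $\partial h_T / \partial h_1 = w_{hh}^{T-1}$ exactly. A real RNN also multiplies in the $\tanh$ derivative (at most 1), which only shrinks the product further.

```python
def grad_through_time(w_hh, T):
    """Return d h_T / d h_1 for the scalar linear RNN h_t = w_hh * h_{t-1} + x_t.

    Backpropagating from step T to step 1 multiplies by w_hh once per step,
    i.e. T - 1 times in total.
    """
    grad = 1.0
    for _ in range(T - 1):
        grad *= w_hh
    return grad


for w in (0.5, 1.0, 1.5):
    print(f"w_hh={w}: gradient after 50 steps = {grad_through_time(w, 50):.3e}")
```

With $w_{hh} = 0.5$ the gradient all but disappears (vanishing), with $1.5$ it blows up (exploding), and only $1.0$ keeps it stable.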

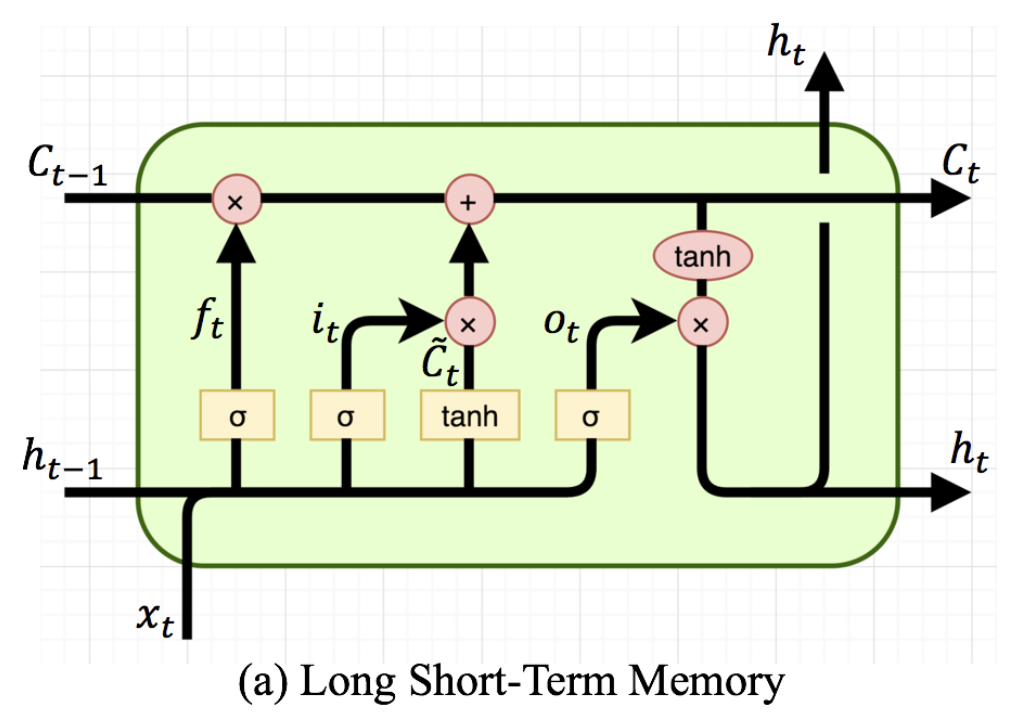

LSTM

LSTM was introduced to mitigate the RNN's gradient problems, above all the Vanishing Gradient Problem. It keeps the basic structure of an RNN but adds a cell state for long-term memory and three gates.

Cell State

If the current timestep is t, the cell state is the information carried from the past up to now.

It is determined by the three gates described below.

Forget Gate

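A gate that decides how much of the past memory to forget.

That is, when $C_{t-1}$ is passed on to $C_t$, the forget gate value $f_t$ decides how much of the past memory is kept (the $f_t \odot C_{t-1}$ term of $C_t$).

The larger this value, the more important the past information is and the more of it should be carried into the future.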

Input Gate

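A gate that decides how much of the current information is written into the cell state (the $i_t \odot \tilde{C}_t$ term of $C_t$).

The larger this value, the more the current information should be remembered long-term and carried forward.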

Output Gate

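A gate that decides the output at the current step.

The output gate $o_t$ is computed from the previous hidden state $h_{t-1}$ and the current input $x_t$, and it decides how much of the cell state is exposed as the output: $h_t = o_t \odot \tanh(C_t)$.

As a result, an LSTM can preserve memory over much longer spans than an RNN. For example, if the forget gate is $f_t = 1$ and the input gate is $i_t = 0$, all past information is carried forward and no current information is added, so the cell state is preserved indefinitely. (Then, with the gates set the other way around, would the LSTM behave like an RNN?)

However, because an LSTM includes the cell state and these three gates, it needs more computation and is less efficient. GRU improves on this!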
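The gate equations can be sketched for a scalar LSTM (hidden size 1) so the arithmetic stays visible. The parameter names `w_*`, `u_*`, `b_*` are hypothetical; a real LSTM uses weight matrices over vectors. The usage pushes $f_t \approx 1$ and $i_t \approx 0$ through large biases, which is the case described above.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM; p maps hypothetical parameter names to floats."""
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])  # forget gate f_t
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])  # input gate i_t
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])  # output gate o_t
    c_tilde = math.tanh(p["w_c"] * x + p["u_c"] * h_prev + p["b_c"])  # candidate
    c = f * c_prev + i * c_tilde  # C_t = f_t * C_{t-1} + i_t * C~_t
    h = o * math.tanh(c)          # h_t = o_t * tanh(C_t)
    return h, c


names = ["w_f", "u_f", "b_f", "w_i", "u_i", "b_i",
         "w_o", "u_o", "b_o", "w_c", "u_c", "b_c"]
params = {name: 0.0 for name in names}
params["w_c"] = 1.0                         # the candidate does react to x ...
params["b_f"], params["b_i"] = 50.0, -50.0  # ... but f_t ~ 1 and i_t ~ 0

h, c = 0.0, 0.7
for x in [1.0, -2.0, 3.0]:
    h, c = lstm_step(x, h, c, params)
print(c)  # the cell state stays at (essentially) 0.7
```

Even though the candidate $\tilde{C}_t$ changes with every input, nothing gets written into the cell state, so the old memory survives untouched.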

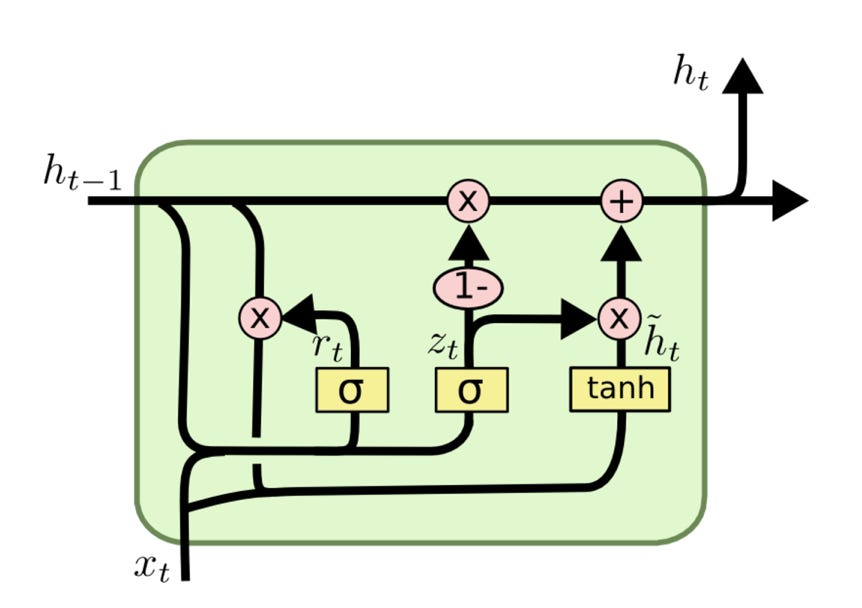

GRU

The line running along the top of the figure from $h_{t-1}$ to $h_t$ plays the role of the LSTM's cell state. Also, unlike the LSTM, a GRU has only two gates.

Reset Gate

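Plays a role similar to the LSTM's Forget Gate: it decides how much of the previous hidden state $h_{t-1}$ is used when building the new candidate state.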

Update Gate

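Decides how much of the new information from the input $x_t$ at the current timestep $t$ goes into long-term memory, which is what the LSTM's Input Gate does. Because the same gate also decides how much of $h_{t-1}$ is kept, it effectively covers the LSTM's Forget and Input Gates together.

As the figure shows, $h_{t-1}$, that is, the past information, splits into two branches. One runs along the top line and, weighted by the Update Gate, is carried straight into $h_t$; the other passes through the Reset Gate and is combined with the current input $x_t$ to form the candidate state that shapes the output.

As a result, in a GRU $h_t$ plays the roles of both the LSTM's cell state and its hidden state, which makes the GRU a lighter and more efficient model.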
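A matching scalar sketch of one GRU step. It uses one common convention, $h_t = (1 - z_t)\,h_{t-1} + z_t\,\tilde{h}_t$ (some references swap $z_t$ and $1 - z_t$), and the parameter names are again hypothetical. Note that there is no separate cell state: $h_t$ alone carries the memory.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, p):
    """One step of a scalar GRU; p maps hypothetical parameter names to floats."""
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate r_t
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate z_t
    # Candidate state: the reset gate decides how much of h_{t-1} is used here.
    h_tilde = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # New state: the update gate mixes the old state and the candidate.
    return (1.0 - z) * h_prev + z * h_tilde


names = ["w_r", "u_r", "b_r", "w_z", "u_z", "b_z", "w_h", "u_h", "b_h"]
keep = {name: 0.0 for name in names}
keep["w_h"], keep["b_z"] = 1.0, -50.0   # z_t ~ 0: keep the old memory
h = 0.3
for x in [1.0, -1.0, 2.0]:
    h = gru_step(x, h, keep)
print(h)  # stays at (essentially) 0.3
```

Flipping the update-gate bias to a large positive value does the opposite: the old state is dropped and $h_t$ becomes the candidate $\tilde{h}_t$.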

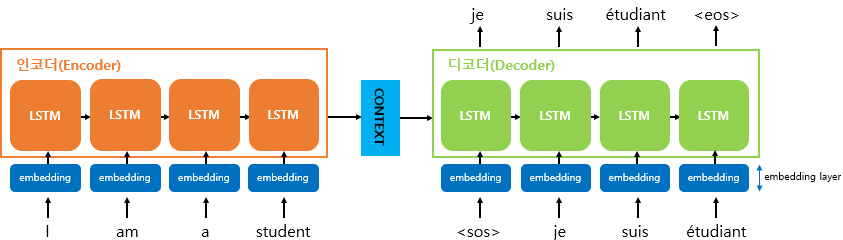

Seq2Seq

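The RNN-based models we've seen so far, whether they use a one-to-many, many-to-one, or many-to-many structure, map each input to an output in order. Now suppose we use such a sequential model for translation.

The sentence I love you would be translated as 나는 너를 사랑해 (literally "I you love"). Following the word order would give I=나는, love=너를, you=사랑해, but Korean has particles such as '은(는)' and '을(를)', and its word order, i.e., its sentence structure, differs from English, so a word-by-word one-to-one translation is clearly wrong. Many languages besides Korean and English have similar quirks.

Seq2Seq addresses this by combining the RNN-based models above in a particular way.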

Encoder & Decoder

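Seq2Seq breaks away from the "one input cell, one output" framework. Instead of translating from a single word, or only the words up to the current one, it can read the entire input sequence before translating, just as a person reads the whole sentence before translating it. Reading the whole sentence and capturing its meaning is the Encoder's job, and producing that meaning in the target language is the Decoder's job.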

Encoder

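In the Encoder on the left of the figure above, the cells don't emit outputs; information keeps being passed to the next timestep. If the first timestep is $t=1$, then $h_1$ holds the encoding of the word I, $h_2$ holds the encoding of I am, and finally $h_T$ holds the encoding of the whole sentence. This encoded information is called the embedding vector of the input, and the final vector is the embedding vector of the whole sentence (often called the context vector).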

Decoder

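The Decoder produces its output from the embedding vector produced by the Encoder, using Auto-Regressive Generation: to compute the output $y_t$ at timestep $t$, it uses the previous hidden state $s_{t-1}$ together with the previous output $y_{t-1}$. Because the model remembers the words it has already output when choosing the next one, inference over the whole sentence improves.

| | RNN's recurrent structure | Auto-Regressive Generation |
|---|---|---|
| Uses at timestep $t$ | the hidden state $h_{t-1}$ computed at the previous step and the current input $x_t$ | the previous hidden state $s_{t-1}$ and the previous step's output $y_{t-1}$ |

Semantically, the RNN's recurrent structure is a way of processing the whole sequence continuously over time, whereas Auto-Regressive Generation is a step-by-step procedure for producing the next output.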

For reference, during training the ground-truth token from the previous step is fed in instead of the previous step's output. This is called Teacher Forcing.

We're almost at the Transformer!
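The difference between inference and Teacher Forcing is easiest to see in the Decoder's input loop. In this sketch the "decoder" is a hypothetical lookup table standing in for the real model (it ignores the hidden state entirely); only the way inputs are fed back is the point.

```python
def greedy_decode(step, sos, eos, max_len=10):
    """Auto-regressive generation: each step consumes the previous *output*."""
    outputs, prev = [], sos
    for _ in range(max_len):
        token = step(prev)
        if token == eos:
            break
        outputs.append(token)
        prev = token  # feed our own prediction back in
    return outputs


def teacher_forcing_inputs(target, sos):
    """Training-time Decoder inputs: the ground truth shifted right by one."""
    return [sos] + target[:-1]


# Hypothetical toy "decoder": a lookup table instead of a trained model.
toy_table = {"<sos>": "je", "je": "suis", "suis": "étudiant", "étudiant": "<eos>"}
print(greedy_decode(toy_table.get, "<sos>", "<eos>"))
# ['je', 'suis', 'étudiant']
print(teacher_forcing_inputs(["je", "suis", "étudiant", "<eos>"], "<sos>"))
# ['<sos>', 'je', 'suis', 'étudiant']
```

At inference a wrong prediction gets fed back and can derail later steps; with Teacher Forcing every step sees the correct previous token, which makes training more stable.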

Attention Mechanism

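So far: RNN, LSTM, and GRU each improved on the weaknesses of the model before them, and Seq2Seq was developed to use them to process sequential data effectively.

But Seq2Seq still has

1. the Vanishing Gradient Problem inherent to RNNs, and

2. information loss on long sequences, because all of the information has to be squeezed into a single embedding vector,

so the ⭐Attention concept⭐ was introduced to address these.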

What is Attention?

When the Seq2Seq Decoder produces an output, it uses not only the embedding vector that compresses all the input information but also the hidden states computed at every step of the Encoder. However, not every step matters equally within the sentence, so we need a mechanism for computing an Attention score for each one.

Attention Function

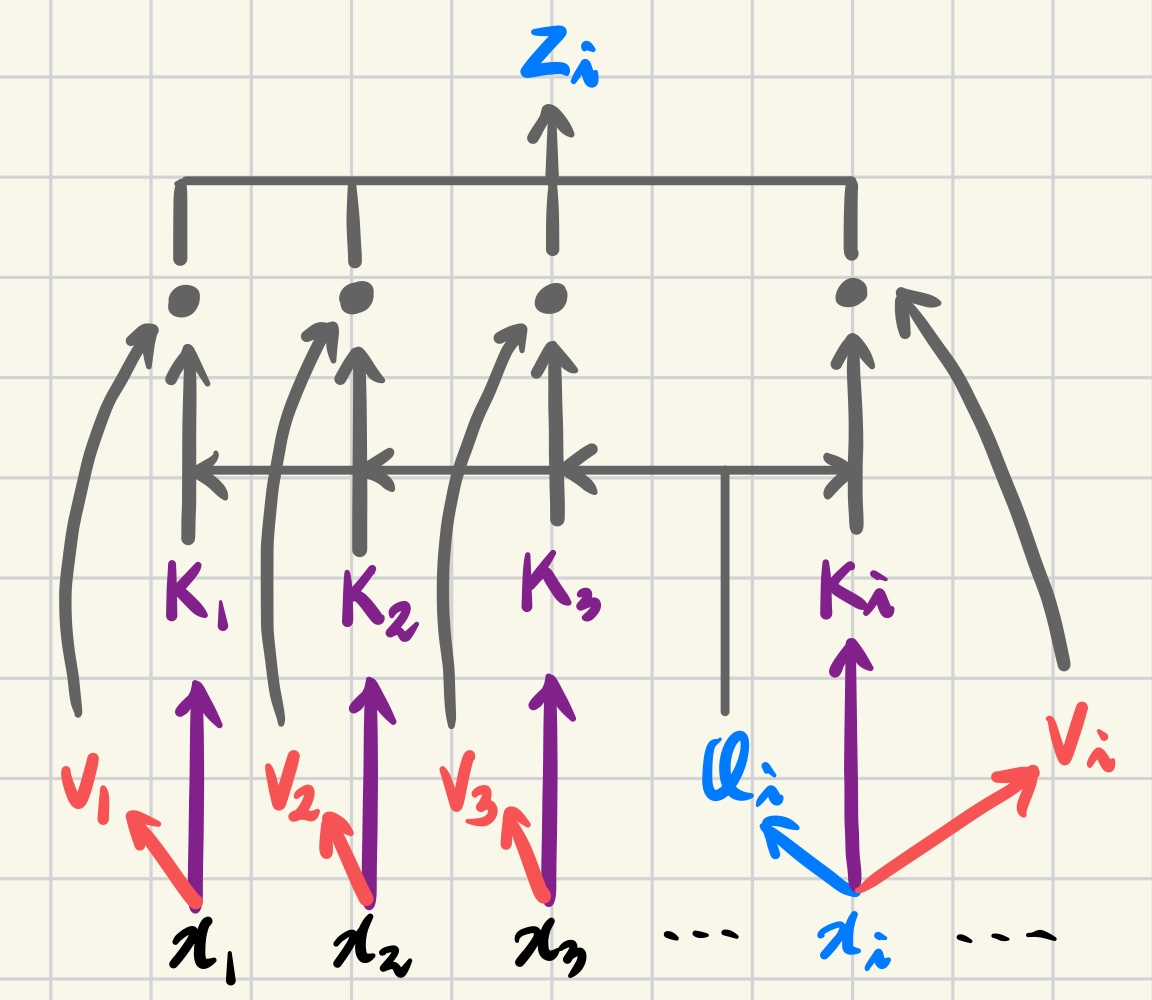

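Attention consists of a Query, Keys, and Values.

The Decoder's current hidden state is the thing being compared, and all of the Encoder's hidden states are what it is compared against, to measure how similar each one is. For this dot-product comparison the Query and Key must have the same dimension; in Seq2Seq the Keys and Values are both the Encoder hidden states, so here all three end up with the same shape.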

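Attention can be written as a function:

$$\text{Attention}(Q, K, V) = \text{Attention Value}$$

| | Meaning |
|---|---|
| Query | the Decoder cell's hidden state at time $t$ |
| Key | the Encoder cells' hidden states at all timesteps |
| Value | the Encoder cells' hidden states at all timesteps |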

Dot Product Attention

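Let's first look at Dot Product Attention, as used in the Seq2Seq model.

Looking at the Decoder in the figure, it has already processed three tokens using the attention mechanism. The third LSTM cell, which comes right after 'suis', will now look at all of the Encoder's hidden states in order to output the next word.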

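1. Compute the Attention Scores

All Encoder hidden states: $h_1, h_2, \dots, h_T$ ($T=4$ in the figure)

The Decoder hidden state: $s_t$ ($t=3$ in the figure)

Here $h_i$ and $s_t$ have the same shape.

Attention Scores: $e^t = [s_t^\top h_1, \dots, s_t^\top h_T]$

Since each score is the dot product of two vectors, it works as a similarity measure. In other words, we're essentially looking for the Encoder hidden state most similar to the Decoder LSTM's current hidden state.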

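2. Compute the Attention Distribution with Softmax

Softmax is an activation function often used in multi-class classification: it maps every value into the range 0 to 1 so that all of them sum to 1. Applying it to the Attention Scores $e^t$ therefore shows how the scores are distributed: $\alpha^t = \text{softmax}(e^t)$. This is called the Attention Distribution (or Attention Coefficients), and each individual value is an Attention Weight.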

3. Compute the Attention Value

Multiply each Encoder Attention Weight $\alpha_i^t$ by its hidden state $h_i$. Since the weights come from each Encoder state's similarity to the Decoder's hidden state at the current step $t$, this gives the most similar tokens the most influence. Then sum everything up:

$$a_t = \sum_{i=1}^{T} \alpha_i^t h_i$$

This is the Attention Value!!
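Steps 1-3 fit in a few lines of plain Python. This is a minimal sketch for a single Decoder step; the Encoder states `H` and the Decoder state `s_3` are made-up toy vectors, not the figure's actual values.

```python
import math


def softmax(scores):
    """Map scores to weights in (0, 1) that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def dot_product_attention(s_t, encoder_states):
    """Steps 1-3 for one Decoder step: scores, weights, Attention Value."""
    scores = [dot(s_t, h_i) for h_i in encoder_states]  # 1. e^t
    weights = softmax(scores)                             # 2. alpha^t
    dim = len(encoder_states[0])
    attention_value = [                                   # 3. a_t = sum_i alpha_i h_i
        sum(w * h_i[d] for w, h_i in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return scores, weights, attention_value


# Hypothetical toy numbers: T = 4 Encoder states of size 2, Decoder state s_3.
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
s_3 = [2.0, 0.0]
scores, weights, a_3 = dot_product_attention(s_3, H)
print(scores)                          # [2.0, 0.0, 2.0, -2.0]
print([round(w, 3) for w in weights])  # h_1 and h_3 get the largest, equal weights
print(a_3)
```

The Encoder states pointing in the same direction as $s_3$ dominate the Attention Value, which is exactly the "most similar token gets the most influence" idea.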

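4. Concatenate & $\tanh$

Concatenate the Attention Value $a_t$ with the Decoder hidden state $s_t$ to form $v_t = [a_t; s_t]$. Finally, to compute $\tilde{s}_t$, the input to the output layer, apply a linear transformation and pass the result through $\tanh$:

$$\tilde{s}_t = \tanh(W_c[a_t; s_t] + b_c)$$

5. Prediction

Use $\tilde{s}_t$ as the input to the output layer to compute the final output:

$$\hat{y}_t = \text{softmax}(W_y\tilde{s}_t + b_y)$$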
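Steps 4 and 5 can be sketched the same way. The weight matrices `W_c`, `W_y` and the 3-word vocabulary are hypothetical toy values; in a trained model they are learned.

```python
import math


def matvec(W, v):
    """Multiply a matrix W (a list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_output(a_t, s_t, W_c, b_c, W_y, b_y):
    """Steps 4-5: concatenate, tanh, then softmax over the vocabulary."""
    v_t = a_t + s_t  # Python list concatenation: v_t = [a_t; s_t]
    s_tilde = [math.tanh(z + b) for z, b in zip(matvec(W_c, v_t), b_c)]
    y_hat = softmax([z + b for z, b in zip(matvec(W_y, s_tilde), b_y)])
    return s_tilde, y_hat


# Hypothetical sizes: hidden size 2, so [a_t; s_t] has 4 entries; vocabulary of 3.
a_t, s_t = [0.5, 0.2], [1.0, -1.0]
W_c = [[0.1, 0.2, 0.3, 0.4], [0.4, 0.3, 0.2, 0.1]]
b_c = [0.0, 0.0]
W_y = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_y = [0.0, 0.0, 0.0]
s_tilde, y_hat = attention_output(a_t, s_t, W_c, b_c, W_y, b_y)
print(y_hat, "-> predicted word index:", y_hat.index(max(y_hat)))
```

The predicted word is just the index with the highest probability in $\hat{y}_t$ (greedy choice).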

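Phew, that's complicated... To sum up!

1. To produce an output at the current step, the Decoder looks at all of the Encoder's hidden states.

2. It computes Attention Weights to decide how much each Encoder hidden state should contribute to the output, and combines the states into the Attention Value.

3. The Decoder uses the Attention Value to compute its output.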

There are many other ways to compute attention, but this is the most basic mechanism used in Seq2Seq and the foundation for understanding the Transformer.

Now we're finally ready to meet the Transformer... at last...

Let's review with keywords only!

RNN > LSTM, GRU > Seq2Seq > Dot-Product Attention

Remember them all?

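Transformer

Limitations of Seq2Seq

RNN-based models suffered from the Vanishing Gradient Problem. The attention mechanism was introduced to mitigate it, and the Transformer is the model that processes sequential data with attention alone, instead of using attention as a helper that patches up an RNN.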

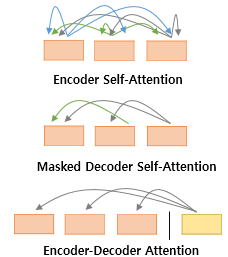

The Transformer does not use RNN-based models, but it keeps Seq2Seq's Encoder-Decoder structure. Its attention mechanism also differs slightly from Seq2Seq's: the Transformer uses a mechanism called Self-Attention.

Intro

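In Seq2Seq, the Decoder's hidden state was combined with all of the Encoder's hidden states, so producing an output at the current step meant looking at the relationships across the whole input sequence. Structurally, one RNN-based cell was used per token of the input sequence, whereas in the Transformer the whole sequence is fed into the Encoder at once.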

Positional Encoding

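The Transformer's attention compares the current target token with every token in the input, itself included, so even though the input is sequential, its order information is ignored. Positional Encoding is therefore added to the input embeddings to inject order information artificially, and it is applied the same way in the Encoder and the Decoder.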

Sinusoidal Encoding

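A Positional Encoding scheme that uses the $\sin$ and $\cos$ functions:

$$PE_{(pos,\,2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right), \qquad PE_{(pos,\,2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right)$$

As the formula shows, the position is divided by $10000^{2i/d_{model}}$ before it goes into sin and cos, which stretches the period, and every pair of dimensions gets a different wavelength (from $2\pi$ up to $10000 \cdot 2\pi$). As a result, even in long sequences, tokens at different positions are not assigned the same encoding vector.

Also, sin or cos is applied depending on whether the embedding dimension index is even ($2i$) or odd ($2i+1$). Both functions in a pair receive the same argument $pos/10000^{2i/d_{model}}$, so the point is to produce values that are related but not identical.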
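A minimal sketch of the formula above for a single position, assuming a small toy `d_model` so the numbers are easy to read (the paper uses $d_{model} = 512$).

```python
import math


def positional_encoding(pos, d_model):
    """Sinusoidal encoding of one position as a list of d_model floats."""
    pe = []
    for k in range(d_model):
        i = k // 2  # dimensions 2i and 2i+1 share the same i
        angle = pos / (10000 ** (2 * i / d_model))
        # even dimension index -> sin, odd dimension index -> cos
        pe.append(math.sin(angle) if k % 2 == 0 else math.cos(angle))
    return pe


print([round(v, 3) for v in positional_encoding(0, 4)])  # [0.0, 1.0, 0.0, 1.0]
print([round(v, 3) for v in positional_encoding(1, 4)])
```

Since each sin/cos pair shares one angle, the pair always lies on the unit circle; the differing wavelengths across pairs are what keep the whole vector unique per position.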

Self Attention - Encoder

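The Transformer's self-attention compares the Query, the word currently in focus, against the Keys of the whole context, the word itself included. Because this tells us what the word means within its own context, it is called self-attention. The Values also come from the words of the context.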

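1. Create the Query, Key, and Value

Multiply each input token by $W^Q$, $W^K$, and $W^V$ to get its Query, Key, and Value ($Q = XW^Q$, $K = XW^K$, $V = XW^V$). Multiplying the Keys by the Query of $x_t$, the target token at the current timestep $t$, then gives similarities exactly as in the earlier Dot-Product Attention.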

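Note!!!

Each weight matrix is shared by all tokens within the same projection (Q, K, or V), but not across projections. So $W^Q$ is learned only as the Query projection, $W^K$ only as the Key projection, and $W^V$ only as the Value projection.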
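A small sketch of step 1 with made-up numbers: three tokens with $d_{model} = 4$, projected to $d_k = 2$. The matrices `W_Q`, `W_K`, `W_V` here are hypothetical toy values; the point is that each one is applied to every token row.

```python
def matmul(A, B):
    """Multiply matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


# Hypothetical toy input: 3 tokens, d_model = 4; projections to d_k = 2.
X = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 1.0],
     [1.0, 1.0, 1.0, 1.0]]
W_Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
W_K = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.5], [0.0, 0.0]]
W_V = [[1.0, 1.0], [0.0, 1.0], [1.0, 0.0], [0.0, 0.0]]

# One matrix per projection, shared by every token (every row of X).
Q, K, V = matmul(X, W_Q), matmul(X, W_K), matmul(X, W_V)

t = 1  # index of the target token x_t
scores_t = [sum(q * k for q, k in zip(Q[t], K[j])) for j in range(len(X))]
print(scores_t)  # [1.5, 3.0, 3.0]
```

`scores_t` holds the (not yet scaled) similarity of token $x_1$ to every token, itself included.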

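2. Scaled Dot-Product Attention

The similarities from the previous step are scaled by dividing them by $\sqrt{d_k}$, where $d_k$ is the dimension of the Query and Key vectors. Because the Transformer runs several attentions in parallel inside one Encoder (multi-head attention), every head works with the same reduced dimension $d_k = d_{model}/h$ ($512/8 = 64$ in the paper), and this is the value used for scaling:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$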

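Why scale?

The longer the Query and Key vectors, the larger their dot products (if the components have mean 0 and variance 1, the dot product has variance $d_k$), so the scores spread over a wide range. Passed through Softmax, such scores push almost every weight toward 0 and one toward 1, which leaves very small gradients. Scaling prevents this.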
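A quick numerical check of this argument, assuming random Query/Key components drawn with mean 0 and variance 1 (the seed and sizes are arbitrary choices for the demo).

```python
import math
import random
import statistics


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


random.seed(0)  # fixed seed so the run is reproducible
d_k = 512
# Assumption: Query/Key components are independent with mean 0 and variance 1.
q = [random.gauss(0.0, 1.0) for _ in range(d_k)]
keys = [[random.gauss(0.0, 1.0) for _ in range(d_k)] for _ in range(10)]

raw = [sum(a * b for a, b in zip(q, k)) for k in keys]
scaled = [s / math.sqrt(d_k) for s in raw]

# Raw scores spread on the order of sqrt(d_k) (about 22.6); scaled ones around 1.
print("std raw:", round(statistics.pstdev(raw), 2),
      "std scaled:", round(statistics.pstdev(scaled), 2))
# Largest softmax weight: typically close to 1 (near one-hot) for the raw scores.
print("max weight raw:", round(max(softmax(raw)), 3),
      "max weight scaled:", round(max(softmax(scaled)), 3))
```

Dividing by $\sqrt{d_k}$ can only make the softmax less peaked, which is why the scaled version keeps more than one token in play.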

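3. Compute the Attention Value

Once the scaled score for every token has been computed, pass the scores through the $\text{softmax}$ function so that the distribution lies between 0 and 1. Then multiply these weights by the Values of all the tokens and add them up, which gives the output for the target token $x_t$. The results of the individual heads are then concatenated.

The output is the Attention Value.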
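The whole formula, $\text{softmax}(QK^\top/\sqrt{d_k})V$, handles every Query at once when written with matrices. A plain-Python sketch with a tiny sanity check on toy values:

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(r, c)) for c in zip(*B)] for r in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, one query per row of Q."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


# Toy check: two identical Keys get equal weight, so the output is the
# average of the two Value rows.
Q = [[5.0, 5.0]]
K = [[1.0, 0.0], [1.0, 0.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out, w = scaled_dot_product_attention(Q, K, V)
print(w, out)  # [[0.5, 0.5]] [[2.0, 3.0]]
```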

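4. Output Projection & FFNN(Feed Forward Neural Network)

The Attention Value from step 3 is the concatenation of every head's result, so for $n$ tokens it has shape $(n, h \cdot d_v)$. One more linear transformation with $W^O$ maps it back to the original input shape $(n, d_{model})$, which also compresses the combined information and extracts features once more. Each token's vector then passes independently through the position-wise FFNN:

$$\text{FFN}(x) = \max(0, xW_1 + b_1)W_2 + b_2$$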
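A sketch of the projection and the position-wise FFNN, with hypothetical toy sizes. `W_O` is the identity here only to keep the numbers readable; in the real model it is learned.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(r, c)) for c in zip(*B)] for r in A]


def add_bias(M, b):
    return [[x + bb for x, bb in zip(row, b)] for row in M]


def relu(M):
    return [[max(0.0, x) for x in row] for row in M]


def position_wise_ffn(X, W1, b1, W2, b2):
    """FFN(x) = max(0, x W1 + b1) W2 + b2, applied to every token row."""
    return add_bias(matmul(relu(add_bias(matmul(X, W1), b1)), W2), b2)


# Hypothetical sizes: 2 tokens, h = 2 heads with d_v = 1 each, d_model = 2.
heads_concat = [[1.0, -1.0], [0.5, 2.0]]  # shape (n, h * d_v)
W_O = [[1.0, 0.0], [0.0, 1.0]]            # (h * d_v, d_model), identity for readability
X = matmul(heads_concat, W_O)             # back to (n, d_model)

W1 = [[1.0, -1.0, 0.5], [1.0, 1.0, 0.0]]  # d_model -> d_ff = 3
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # d_ff -> d_model
b2 = [0.0, 0.0]
print(position_wise_ffn(X, W1, b1, W2, b2))  # [[0.5, 0.5], [2.75, 1.75]]
```

Each row is transformed by the same FFNN on its own, so the output keeps the $(n, d_{model})$ shape.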

5. Multi-head Self Attention

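As mentioned briefly above, the Transformer uses multiple heads. Each head has its own $W^Q$, $W^K$, $W^V$, which project the tokens entering the Encoder into a smaller $d_k$-dimensional space, and the number of heads $h$ is fixed when the model is built. The heads compute attention in parallel, and their results are concatenated at the end. In other words, steps 1-3 run $h$ times in parallel inside the Encoder, followed by step 4's projection and FFNN.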

Also, Attention Is All You Need stacks 6 Encoders and 6 Decoders, so steps 1-4 are carried out 6 times before the result is passed to the Decoder!
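Putting the heads together: a sketch of multi-head self-attention with hypothetical toy weights ($d_{model} = 4$, $h = 2$, $d_k = d_v = 2$), where each head happens to look at a different half of the embedding.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(r, c)) for c in zip(*B)] for r in A]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = matmul(Q, [list(c) for c in zip(*K)])  # Q K^T
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V)


def multi_head_attention(X, heads, W_O):
    """heads: one (W_Q, W_K, W_V) triple per head (hypothetical toy weights)."""
    # Each head projects X with its own weights and attends independently.
    per_head = [attention(matmul(X, Wq), matmul(X, Wk), matmul(X, Wv))
                for Wq, Wk, Wv in heads]
    # Concatenate the heads token by token: shape (n, h * d_v).
    concat = [[x for out in per_head for x in out[i]] for i in range(len(X))]
    # Project back to d_model with W^O.
    return concat, matmul(concat, W_O)


first = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]   # sees dims 0-1
second = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # sees dims 2-3
heads = [(first, first, first), (second, second, second)]
W_O = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # identity
X = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0], [2.0, 0.0, 1.0, 0.0]]
concat, out = multi_head_attention(X, heads, W_O)
print(len(concat[0]), len(out), len(out[0]))  # 4 3 4
```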

Self Attention - Decoder

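The Decoder's attention is made of two sub-layers (each Decoder block then ends with an FFNN, just like the Encoder). The first performs Masked Multi-head Self Attention, and the second performs Multi-head Attention over the Encoder's output.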

1. Masked Multi-head Self Attention

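The input to the first Decoder block is the output sequence generated so far (during training, the ground-truth target sequence shifted right by one), embedded and positionally encoded; every later Decoder block takes the output of the block before it. At real inference time, the Decoder cannot know anything after the current step when it produces an output, so every input position after the current timestep is masked: its score is set to $-\infty$ before the softmax, which makes its weight 0. Apart from that, the self-attention inside the Decoder works exactly as in the Encoder.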
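A sketch of the masking step, assuming a hypothetical 3×3 matrix of raw scores. `math.exp(-math.inf)` evaluates to `0.0`, so masked positions end up with exactly zero weight.

```python
import math


def causal_mask(n):
    """mask[i][j] is True where token i may NOT look at token j (j > i)."""
    return [[j > i for j in range(n)] for i in range(n)]


def masked_softmax_rows(scores, mask):
    out = []
    for row, mrow in zip(scores, mask):
        row = [-math.inf if m else s for s, m in zip(row, mrow)]
        mx = max(row)  # finite, because position i itself is never masked
        exps = [math.exp(s - mx) for s in row]  # exp(-inf) == 0.0
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


scores = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]  # hypothetical raw scores
weights = masked_softmax_rows(scores, causal_mask(3))
for row in weights:
    print([round(w, 3) for w in row])
```

The first token can only attend to itself, the second to the first two, and so on: the upper triangle is always zero.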

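2. Encoder-Decoder Multi-head Attention

In the Decoder's first sub-layer, the Query, Key, and Value all come from the same source, the previous block's output. In the second sub-layer, only the Query comes from the Decoder (the first sub-layer's output), while the Key and Value come from the output of the last Encoder.

Why use the Encoder's values

By comparing the Query produced by the Decoder's first sub-layer against the Encoder's Keys and Values, the Decoder can work out how the token it is about to output relates to the original input.

In other words, this interaction between the Encoder and the Decoder models the relationship between the input sentence and the output sentence.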
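A sketch of this cross-attention, with hypothetical toy inputs (2 Decoder positions, 4 Encoder tokens) and identity projection matrices for readability. The only change from self-attention is where Q, K, and V come from.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(s - m) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(r, c)) for c in zip(*B)] for r in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def cross_attention(dec_states, enc_output, W_Q, W_K, W_V):
    """Encoder-Decoder attention: Query from the Decoder, Key/Value from the Encoder."""
    Q = matmul(dec_states, W_Q)  # Query: Decoder's first sub-layer output
    K = matmul(enc_output, W_K)  # Key: last Encoder's output
    V = matmul(enc_output, W_V)  # Value: last Encoder's output
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


I2 = [[1.0, 0.0], [0.0, 1.0]]  # identity projections (toy choice)
dec = [[1.0, 0.0], [0.0, 1.0]]
enc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
out, w = cross_attention(dec, enc, I2, I2, I2)
print(len(out), len(w[0]))  # 2 4
```

The output has one row per Decoder position, while each row of weights spans all Encoder tokens: every target-side token gets its own view of the whole source sentence.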

Now, while reading Attention Is All You Need, I'll pick up the concepts I missed and the ones that didn't quite connect!