MNIST handwritten digit dataset

```python
import tensorflow as tf
from tensorflow import keras

import numpy as np
import matplotlib.pyplot as plt
import os

print(tf.__version__)  # print the TensorFlow version

mnist = keras.datasets.mnist  # MNIST loader; the data is downloaded automatically if it is not cached yet
(x_train, y_train), (x_test, y_test) = mnist.load_data()

print(len(x_train))  # number of images in x_train
```

```
2.6.0
60000
```

- `x` holds the image data.
- `y` holds the correct answer (label) for each image, e.g. `y_train[0]`.

Checking the question (input) data

- When the question is an image, display it:

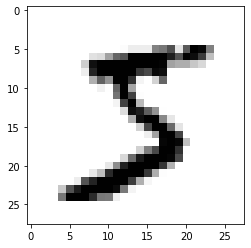

```python
plt.imshow(x_train[0], cmap=plt.cm.binary)
plt.show()
```


```python
plt.imshow(x_train[1], cmap=plt.cm.binary)
plt.show()
```

Checking the answer (label) data

```python
print(y_train[0])
```

```
5
```

Viewing the question and the answer together

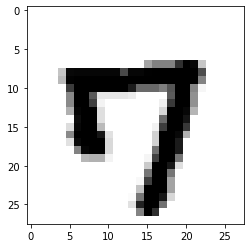

```python
index = 10050
plt.imshow(x_train[index], cmap=plt.cm.binary)
plt.show()
print('Question: image no.', index + 1, '| Answer: digit', y_train[index])
```

```
Question: image no. 10051 | Answer: digit 7
```

Checking an image chosen by user input

x = int(input('1~ 60000 사이의 숫자를 넣어라 ->'))

index =int(x - 1)

plt.imshow(x_train[index],cmap=plt.cm.binary)

plt.show()

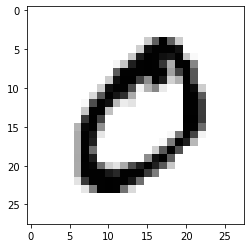

print('문제 :', (index+1), '번째 이미지 ',' 답 : 숫자', y_train[index])1~ 60000 사이의 숫자를 넣어라 ->2

문제 : 2 번째 이미지 답 : 숫자 0훈련용 데이터의 크기와 모양을 확인해보자 (장수, 픽셀)

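```python
print(x_train.shape)
```

```
(60000, 28, 28)
```

That is 60,000 images of 28×28 pixels. Let's check the test data too.

```python
print(x_test.shape)
```

```
(10000, 28, 28)
```

That is 10,000 images of 28×28 pixels. Now let's look at the original pixel values stored in the images.

```python
print('min:', np.min(x_train), 'max:', np.max(x_train))
```

```
min: 0 max: 255
```

Normalization: it is generally recommended to scale the training inputs into the 0~1 range. But why? Raw pixel values run from 0 to 255. Inputs of that magnitude produce large weighted sums and large gradients, so the optimizer has a harder time taking stable steps with a typical learning rate. Scaling every input into 0~1 keeps the values on a small, consistent scale, which usually makes training faster and more stable.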
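Here is a toy illustration of the "why". It is a deliberately simplified sketch, not what Keras does internally. For a single linear unit `w * x` trained with a squared-error loss, the gradient with respect to `w` is proportional to the input `x`. At the same starting point, a raw pixel of 200 therefore yields a gradient 255 times larger than the same pixel after dividing by 255. The pixel value, starting weight, and target are arbitrary assumptions.

```python
def squared_error_grad(w, x, y):
    """Gradient of the loss 0.5 * (w * x - y) ** 2 with respect to the weight w."""
    return (w * x - y) * x

raw_pixel = 200.0               # a pixel value on MNIST's stored 0-255 scale (arbitrary choice)
norm_pixel = raw_pixel / 255.0  # the same pixel after dividing by 255.0
w, target = 0.0, 1.0            # arbitrary starting weight and target output

g_raw = squared_error_grad(w, raw_pixel, target)
g_norm = squared_error_grad(w, norm_pixel, target)

print("gradient with raw input:       ", g_raw)   # -200.0
print("gradient with normalized input:", g_norm)
print("ratio:", g_raw / g_norm)                    # about 255
```

In a real network, this scale mismatch adds up across all 784 pixel inputs. Normalizing keeps the initial updates in a range where default settings such as Adam's learning rate behave reasonably.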

```python
x_train_norm, x_test_norm = x_train / 255.0, x_test / 255.0
print('min:', np.min(x_train_norm), 'max:', np.max(x_train_norm))
```

```
min: 0.0 max: 1.0
```

Now that the data is prepared, let's build a deep learning network.

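Let's use the Sequential API of TensorFlow Keras (tf.keras).

- The Sequential API offers much less design freedom, but it is a very simple way to build a deep learning model.

Below is an example of designing a LeNet-style deep learning network with the Sequential API of tf.keras.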

```python
model = keras.models.Sequential()
model.add(keras.layers.Conv2D(16, (3, 3), activation='relu', input_shape=(28, 28, 1)))
model.add(keras.layers.MaxPool2D(2, 2))
model.add(keras.layers.Conv2D(32, (3, 3), activation='relu'))
model.add(keras.layers.MaxPooling2D((2, 2)))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(32, activation='relu'))
model.add(keras.layers.Dense(10, activation='softmax'))

print('Number of layers added to the model:', len(model.layers))
```

```
Number of layers added to the model: 7
```

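- The first argument of a Conv2D layer is the number of image features it uses, i.e. the number of filters (output channels). We used 16 and 32 here: the first layer considers 16 image features, and the next one considers 32. Our digit images are actually very simple. If the input were a photo of a dog's face, it would be a far more detailed and complex image, and in that case you could consider increasing these feature counts.

- The first argument of a Dense layer is the number of neurons used in the classifier. The larger this value, the more complex a classifier you can build. To distinguish letters instead of the 10 digits, you would need to classify 52 classes in total (26 uppercase plus 26 lowercase), so you might consider values larger than 32, such as 64 or 128.

- The number of neurons in the last Dense layer should simply be the number of classes to classify: 10 for the digit recognizer and 52 for a letter recognizer.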

Now that the deep learning network model is built, let's inspect it.

```python
model.summary()
```

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 26, 26, 16)        160
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 13, 13, 16)        0
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 11, 11, 32)        4640
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 5, 5, 32)          0
_________________________________________________________________
flatten (Flatten)            (None, 800)               0
_________________________________________________________________
dense (Dense)                (None, 32)                25632
_________________________________________________________________
dense_1 (Dense)              (None, 10)                330
=================================================================
Total params: 30,762
Trainable params: 30,762
Non-trainable params: 0
_________________________________________________________________
```

This is our deep learning network model.
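The following plain-Python sketch recomputes the shapes and parameter counts in this summary to show where they come from. `trace_lenet` is a hypothetical helper, not part of Keras. It assumes the following:

- 3×3 convolutions with Keras's default 'valid' padding, so each side shrinks by 2.
- 2×2 max pooling that halves each side, rounding down.
- The standard parameter counts: k·k·(input channels)·(filters) + filters for Conv2D, and inputs·units + units for Dense.

```python
def trace_lenet(n_channel_1=16, n_channel_2=32, n_dense=32, n_classes=10, side=28):
    """Recompute (layer, output shape, params) for the Sequential model above.

    Hypothetical helper in plain Python; assumes 3x3 'valid' convolutions,
    2x2 max pooling with stride 2, and a single-channel (grayscale) input.
    """
    rows = []
    in_ch = 1
    for filters in (n_channel_1, n_channel_2):
        side = side - 3 + 1                      # 'valid' 3x3 conv shrinks each side by 2
        rows.append(("Conv2D", (side, side, filters), 3 * 3 * in_ch * filters + filters))
        side = side // 2                         # 2x2 pooling halves the side, rounding down
        rows.append(("MaxPooling2D", (side, side, filters), 0))
        in_ch = filters
    flat = side * side * in_ch                   # Flatten turns the feature maps into one vector
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense", (n_dense,), flat * n_dense + n_dense))
    rows.append(("Dense", (n_classes,), n_dense * n_classes + n_classes))
    return rows

rows = trace_lenet()
for name, shape, params in rows:
    print(f"{name:<14}{str((None,) + shape):<22}{params}")
print("Total params:", sum(p for _, _, p in rows))   # 30762
```

Changing the arguments shows how the model grows. For example, `trace_lenet(n_dense=128, n_classes=52)` corresponds to the 52-class letter case mentioned earlier. In both cases most of the parameters sit in the Dense layer right after Flatten.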

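The input to the model has the shape (number of samples, image height, image width, number of channels). We specified the per-image part of this shape as input_shape=(28,28,1); Keras adds the sample (batch) dimension by itself.

However, print(x_train.shape) gives (60000, 28, 28), which has no channel axis, so we need to add one. The channel count refers to the color components: 1 for grayscale, 3 for RGB color.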
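Besides `reshape`, NumPy's `np.expand_dims` (or indexing with `np.newaxis`) can append the channel axis without spelling out the image size. The sketch below uses a small dummy array that stands in for `x_train_norm`; the array contents are an assumption for illustration only.

```python
import numpy as np

# dummy stand-in for x_train_norm: 5 grayscale images of 28x28 pixels
images = np.arange(5 * 28 * 28, dtype=np.float32).reshape(5, 28, 28) / (5 * 28 * 28)

with_channel_a = images.reshape(-1, 28, 28, 1)    # the reshape approach used in this post
with_channel_b = np.expand_dims(images, axis=-1)  # append a length-1 channel axis at the end
with_channel_c = images[..., np.newaxis]          # equivalent indexing form

print(with_channel_a.shape, with_channel_b.shape, with_channel_c.shape)
```

All three produce the same (5, 28, 28, 1) array. Color images loaded as (N, H, W, 3) already carry their 3-channel axis and need no such step.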

```python
print("Before Reshape - x_train_norm shape: {}".format(x_train_norm.shape))
print("Before Reshape - x_test_norm shape: {}".format(x_test_norm.shape))

x_train_reshaped = x_train_norm.reshape(-1, 28, 28, 1)  # -1 for the sample count lets reshape compute it automatically
x_test_reshaped = x_test_norm.reshape(-1, 28, 28, 1)

print("After Reshape - x_train_reshaped shape: {}".format(x_train_reshaped.shape))
print("After Reshape - x_test_reshaped shape: {}".format(x_test_reshaped.shape))
```

```
Before Reshape - x_train_norm shape: (60000, 28, 28)
Before Reshape - x_test_norm shape: (10000, 28, 28)
After Reshape - x_train_reshaped shape: (60000, 28, 28, 1)
After Reshape - x_test_reshaped shape: (10000, 28, 28, 1)
```

Now let's train the deep learning network using the model we built.

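We will train the deep learning network on the x_train training data.

- Here, epochs=10 means training passes over all 60,000 training samples 10 times.

- The input must match the model's expected input shape, so we train on x_train_reshaped, which includes the channel axis.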
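The 1875/1875 counter in the training log is the number of mini-batch steps per epoch. When no `batch_size` is given, `model.fit` uses a default of 32. One epoch over 60,000 samples therefore takes ceil(60000 / 32) = 1875 steps, and 10 epochs perform 18,750 weight updates. The same arithmetic, with `model.evaluate`'s default batch size of 32, explains the 313/313 counter on the 10,000 test images. `steps_per_epoch` below is just an illustrative helper name.

```python
import math

def steps_per_epoch(n_samples, batch_size=32):
    # the last batch may be smaller than batch_size, so round up
    return math.ceil(n_samples / batch_size)

train_steps = steps_per_epoch(60000)   # 1875 steps per training epoch
test_steps = steps_per_epoch(10000)    # 313 steps; the last batch holds only 16 images
total_updates = train_steps * 10       # epochs=10 -> 18750 weight updates
print(train_steps, test_steps, total_updates)
```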

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

model.fit(x_train_reshaped, y_train, epochs=10)
```

```
Epoch 1/10
1875/1875 [==============================] - 10s 3ms/step - loss: 0.2158 - accuracy: 0.9334
Epoch 2/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0682 - accuracy: 0.9793
Epoch 3/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0496 - accuracy: 0.9851
Epoch 4/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0388 - accuracy: 0.9878
Epoch 5/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0326 - accuracy: 0.9899
Epoch 6/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0270 - accuracy: 0.9913
Epoch 7/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0223 - accuracy: 0.9930
Epoch 8/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0201 - accuracy: 0.9934
Epoch 9/10
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0159 - accuracy: 0.9950
Epoch 10/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0138 - accuracy: 0.9958
<keras.callbacks.History at 0x7fa094fbfb50>
```

- Check how much the recognition accuracy rises with each epoch as training proceeds.

- It is enough to keep training until the gain in accuracy becomes marginal. This is ideally judged on held-out validation data, since training accuracy can keep rising even after the model starts to overfit.
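The `loss` column in the log is the sparse categorical cross-entropy chosen in `model.compile`. For each sample it is the negative log of the probability the model gave to the correct class, averaged over the samples. "Sparse" means the labels are plain integers such as 7 rather than one-hot vectors. The NumPy sketch below implements that formula on made-up probabilities. It is not Keras's own implementation, which also clips probabilities to avoid log(0).

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs):
    """Mean of -log(probability of the true class); labels are integer class ids."""
    picked = probs[np.arange(len(labels)), labels]   # probability assigned to the correct class
    return float(np.mean(-np.log(picked)))

# made-up softmax outputs for 2 samples over 3 classes
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1]])
labels = np.array([0, 1])

loss = sparse_categorical_crossentropy(labels, probs)
print(loss)  # mean of -log(0.7) and -log(0.8)
```

A loss that falls toward 0, as in the log, means the model is assigning probabilities closer and closer to 1 to the correct digits.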

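Now that the model is trained, let's check its performance.

This is exactly what the test data is for.

- Let's evaluate on x_test, which the model never saw during training.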

```python
test_loss, test_accuracy = model.evaluate(x_test_reshaped, y_test, verbose=2)
print("test_loss: {} ".format(test_loss))
print("test_accuracy: {}".format(test_accuracy))
```

```
313/313 - 1s - loss: 0.0394 - accuracy: 0.9906
test_loss: 0.03935988247394562
test_accuracy: 0.9905999898910522
```

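- It does not reach 100%.

- Looking at the data, some digits were written by different people (MNIST's training and test sets come from different groups of writers).

- Some handwriting styles are ones the model has never seen.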
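The reported accuracy is the fraction of test images whose most likely class matches the label. An accuracy of 0.9906 on 10,000 test images therefore means 9,906 correct and 94 misclassified images. The minimal NumPy sketch below shows that computation on toy arrays; the label values are invented for illustration.

```python
import numpy as np

def accuracy_and_errors(predicted_labels, true_labels):
    correct = predicted_labels == true_labels        # boolean array, True where the guess is right
    return float(correct.mean()), int((~correct).sum())

pred = np.array([7, 2, 1, 0, 4, 1, 4, 9])
true = np.array([7, 2, 1, 0, 4, 1, 4, 5])
acc, n_wrong = accuracy_and_errors(pred, true)
print(acc, n_wrong)  # 0.875 1

# the same arithmetic applied to the test accuracy reported above
print(round(10000 * (1 - 0.9906)))  # 94 misclassified test images
```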

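So which samples did the model get wrong? Let's find out.

First, let's look at a correctly inferred sample.

Use model.predict():

- It returns a probability distribution over the 10 classes.

- The closer a value is to 1, the more confident the model is in that class.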
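These probabilities come from the final `Dense(10, activation='softmax')` layer. Softmax exponentiates the ten raw scores and divides by their sum, so the outputs are positive and add up to 1. `np.argmax` then picks the index of the largest one as the predicted digit. The NumPy sketch below uses made-up raw scores (`logits`); it illustrates the formula and does not read anything out of the trained model.

```python
import numpy as np

def softmax(logits):
    # subtracting the row maximum first avoids overflow and does not change the result
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# made-up raw scores for 2 samples over the 10 digit classes
logits = np.array([[0.1, 0.0, 1.0, 1.5, 0.2, 0.0, -2.0, 9.0, 0.3, 4.0],
                   [0.0, 0.5, 8.0, 0.1, -1.0, -3.0, 0.4, 0.2, 0.9, -2.0]])
probs = softmax(logits)

print(probs.sum(axis=1))         # each row sums to 1
print(np.argmax(probs, axis=1))  # [7 2]: the most probable digit for each sample
```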

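```python
predicted_result = model.predict(x_test_reshaped)  # probabilities inferred by the model
predicted_labels = np.argmax(predicted_result, axis=1)

idx = 0  # look at the 1st x_test sample
print('model.predict() result: ', predicted_result[idx])
print('Most likely result inferred by the model: ', predicted_labels[idx])
print('Actual label of the data: ', y_test[idx])
```

```
model.predict() result:  [5.0936549e-10 4.2054354e-10 1.4162788e-07 3.0713025e-07 1.0975039e-09
 4.7302101e-10 4.8337666e-17 9.9998915e-01 1.5172457e-09 1.0410251e-05]
Most likely result inferred by the model:  7
Actual label of the data:  7
```

The vector lists one probability per class, in order: [probability of 0, probability of 1, ..., probability of 7, probability of 8, probability of 9]. Here the value at index 7 is about 0.99999, so the model answers 7.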


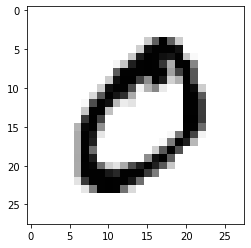

```python
idx = 1  # look at the 2nd x_test sample
print('model.predict() result: ', predicted_result[idx])
print('Most likely result inferred by the model: ', predicted_labels[idx])
print('Actual label of the data: ', y_test[idx])
```

```
model.predict() result:  [3.8869772e-11 5.8056837e-10 1.0000000e+00 2.7700270e-13 6.0729700e-16
 9.5813839e-24 2.9645359e-13 1.0713646e-12 1.4265510e-10 7.0767866e-18]
Most likely result inferred by the model:  2
Actual label of the data:  2
```

Let's look at the actual image that was inferred.

```python
plt.imshow(x_test[idx], cmap=plt.cm.binary)
plt.show()
```

Let's pick just 5 of the incorrectly inferred samples.

```python
import random

wrong_predict_list = []
for i, _ in enumerate(predicted_labels):
    # collect only the indices i where predicted_labels[i] differs from y_test[i]
    if predicted_labels[i] != y_test[i]:
        wrong_predict_list.append(i)

# randomly pick 5 of them; random.sample draws without replacement,
# so the same image cannot be picked twice (random.choices could repeat one)
samples = random.sample(wrong_predict_list, k=5)

for n in samples:
    print("Predicted probability distribution: " + str(predicted_result[n]))
    print("Label: " + str(y_test[n]) + ", Prediction: " + str(predicted_labels[n]))
    plt.imshow(x_test[n], cmap=plt.cm.binary)
    plt.show()
```

```
Predicted probability distribution: [9.9993050e-01 2.2502122e-12 4.7690723e-06 3.0059752e-08 1.7519780e-13
 8.0025169e-09 5.0630263e-08 6.8369168e-09 5.4815446e-05 9.7632701e-06]
Label: 8, Prediction: 0

Predicted probability distribution: [4.3070769e-11 9.9670094e-01 2.3406226e-04 6.9325289e-08 9.2083937e-06
 7.6245671e-10 3.0557083e-03 1.7472924e-13 8.3793372e-09 3.5816929e-12]
Label: 6, Prediction: 1

Predicted probability distribution: [2.3253349e-11 1.5969941e-05 2.2154758e-03 1.5413132e-13 9.9764663e-01
 7.1346483e-12 3.1916945e-12 1.1906381e-04 3.9855234e-08 2.7896983e-06]
Label: 2, Prediction: 4

Predicted probability distribution: [5.2042344e-07 8.2894566e-08 4.1278052e-09 1.4097756e-06 5.7676568e-04
 5.9966849e-05 7.5762877e-08 5.5446890e-07 5.0400358e-01 4.9535707e-01]
Label: 9, Prediction: 8

Predicted probability distribution: [6.0286757e-14 7.1217093e-10 2.9044655e-14 5.7257526e-02 3.6976814e-11
 9.4270760e-01 1.5280622e-12 1.6837074e-09 3.4841847e-05 1.6904140e-08]
Label: 3, Prediction: 5
```
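The loop that collects wrong indices can also be written with NumPy's vectorized comparisons. The sketch below collects the wrong indices with `np.flatnonzero` and also reports each mistake's second most likely class. This helps spot borderline cases like the 9 predicted as 8, where the probabilities were roughly 0.504 versus 0.495. Toy arrays stand in for `predicted_result` and `y_test`, and `wrong_predictions` is a hypothetical helper name.

```python
import numpy as np

def wrong_predictions(probs, labels):
    """Return (index, label, top-1 class, top-2 class, top-1 prob, top-2 prob) for each mistake."""
    predicted = np.argmax(probs, axis=1)
    wrong_idx = np.flatnonzero(predicted != labels)      # indices where the guess is wrong
    top2 = np.argsort(probs, axis=1)[:, ::-1][:, :2]     # two most likely classes per row
    return [(int(i), int(labels[i]), int(top2[i, 0]), int(top2[i, 1]),
             float(probs[i, top2[i, 0]]), float(probs[i, top2[i, 1]]))
            for i in wrong_idx]

# toy stand-ins: 3 samples over 4 classes
probs = np.array([[0.90, 0.05, 0.03, 0.02],
                  [0.10, 0.20, 0.66, 0.04],
                  [0.01, 0.49, 0.00, 0.50]])
labels = np.array([0, 1, 1])

result = wrong_predictions(probs, labels)
for row in result:
    print(row)
```

A small gap between the top-1 and top-2 probabilities marks an image the model found genuinely ambiguous. A large gap on a wrong answer marks a confident mistake, which is usually the more interesting case to inspect.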

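Let's raise the recognition rate while keeping the network structure the same.

Changing the hyperparameters

- Things we can change:

  - the number of image features (filters) in the Conv2D layers

  - the number of neurons in the Dense layer

  - the number of training epochs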
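One way to organize these experiments is a small grid search over the listed hyperparameters using `itertools.product`. In the sketch, `train_and_evaluate` is a hypothetical function. You would write it yourself by wrapping this post's model-building, `fit`, and `evaluate` code so that it returns the test accuracy. A dummy scoring function stands in here so the loop runs without TensorFlow, and the value ranges in `grid` are illustrative assumptions, not recommendations.

Picking hyperparameters by test accuracy leaks information from the test set into your choices. Holding out validation data, for example with the `validation_split` argument of `model.fit`, is the more careful approach.

```python
import itertools

def grid_search(train_and_evaluate, grid):
    """Try every combination in `grid`; return (best_score, best_params, all_results).

    `train_and_evaluate` is a hypothetical callable that takes keyword arguments
    such as n_channel_1=16 and returns an accuracy to maximize.
    """
    names = list(grid)
    results = []
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        results.append((train_and_evaluate(**params), params))
    best_score, best_params = max(results, key=lambda r: r[0])
    return best_score, best_params, results

# illustrative value ranges (assumptions, not tuned recommendations)
grid = {
    "n_channel_1": [16, 32],
    "n_channel_2": [32, 64],
    "n_dense": [32, 64],
    "n_train_epoch": [10],
}

def dummy_train_and_evaluate(n_channel_1, n_channel_2, n_dense, n_train_epoch):
    # stand-in score so the sketch runs without TensorFlow; NOT a real accuracy
    return (n_channel_1 + n_channel_2 + n_dense) / 1000

best_score, best_params, results = grid_search(dummy_train_and_evaluate, grid)
print(len(results), best_params)
```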

```python
# hyperparameters you can try changing
n_channel_1 = 16
n_channel_2 = 32
n_dense = 32
n_train_epoch = 10

model = keras.models.Sequential()
model.add(keras.layers.Conv2D(n_channel_1, (3, 3), activation='relu', input_shape=(28, 28, 1)))
model.add(keras.layers.MaxPool2D(2, 2))
model.add(keras.layers.Conv2D(n_channel_2, (3, 3), activation='relu'))
model.add(keras.layers.MaxPooling2D((2, 2)))
model.add(keras.layers.Flatten())
model.add(keras.layers.Dense(n_dense, activation='relu'))
model.add(keras.layers.Dense(10, activation='softmax'))

model.summary()

model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# train the model
model.fit(x_train_reshaped, y_train, epochs=n_train_epoch)

# test the model
test_loss, test_accuracy = model.evaluate(x_test_reshaped, y_test, verbose=2)
print("test_loss: {} ".format(test_loss))
print("test_accuracy: {}".format(test_accuracy))
```

```
Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_2 (Conv2D)            (None, 26, 26, 16)        160
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 13, 13, 16)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 11, 11, 32)        4640
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 5, 5, 32)          0
_________________________________________________________________
flatten_1 (Flatten)          (None, 800)               0
_________________________________________________________________
dense_2 (Dense)              (None, 32)                25632
_________________________________________________________________
dense_3 (Dense)              (None, 10)                330
=================================================================
Total params: 30,762
Trainable params: 30,762
Non-trainable params: 0
_________________________________________________________________
Epoch 1/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.1991 - accuracy: 0.9392
Epoch 2/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0726 - accuracy: 0.9779
Epoch 3/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0535 - accuracy: 0.9834
Epoch 4/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0434 - accuracy: 0.9864
Epoch 5/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0366 - accuracy: 0.9884
Epoch 6/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0311 - accuracy: 0.9903
Epoch 7/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0256 - accuracy: 0.9916
Epoch 8/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0215 - accuracy: 0.9930
Epoch 9/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0183 - accuracy: 0.9940
Epoch 10/10
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0162 - accuracy: 0.9945
313/313 - 1s - loss: 0.0400 - accuracy: 0.9881
test_loss: 0.04003562033176422
test_accuracy: 0.988099992275238
```

Goal: change the parameter values to find the network model code that achieves the highest score, and check the test-data recognition rate it reaches.