Feature-level Deeper Self-Attention Network for Sequential Recommendation (2019, IJCAI)

Paper

Code

- (RecBole library) https://github.com/RUCAIBox/RecBole/blob/master/recbole/model/sequential_recommender/fdsa.py

References

- None

Problem statement

- Existing sequential recommendation methods usually consider only the transition patterns between items.

- They ignore the transition patterns between the features of items.

- Item-level sequences alone cannot reveal the full sequential patterns.

Idea

- explicit & implicit feature-level sequences can help extract the full sequential patterns.

- beneficial for capturing the user’s fine-grained preferences.

- (ex) a user is more likely to buy shoes after buying clothes

- the next product’s category is highly related to that of the current product.

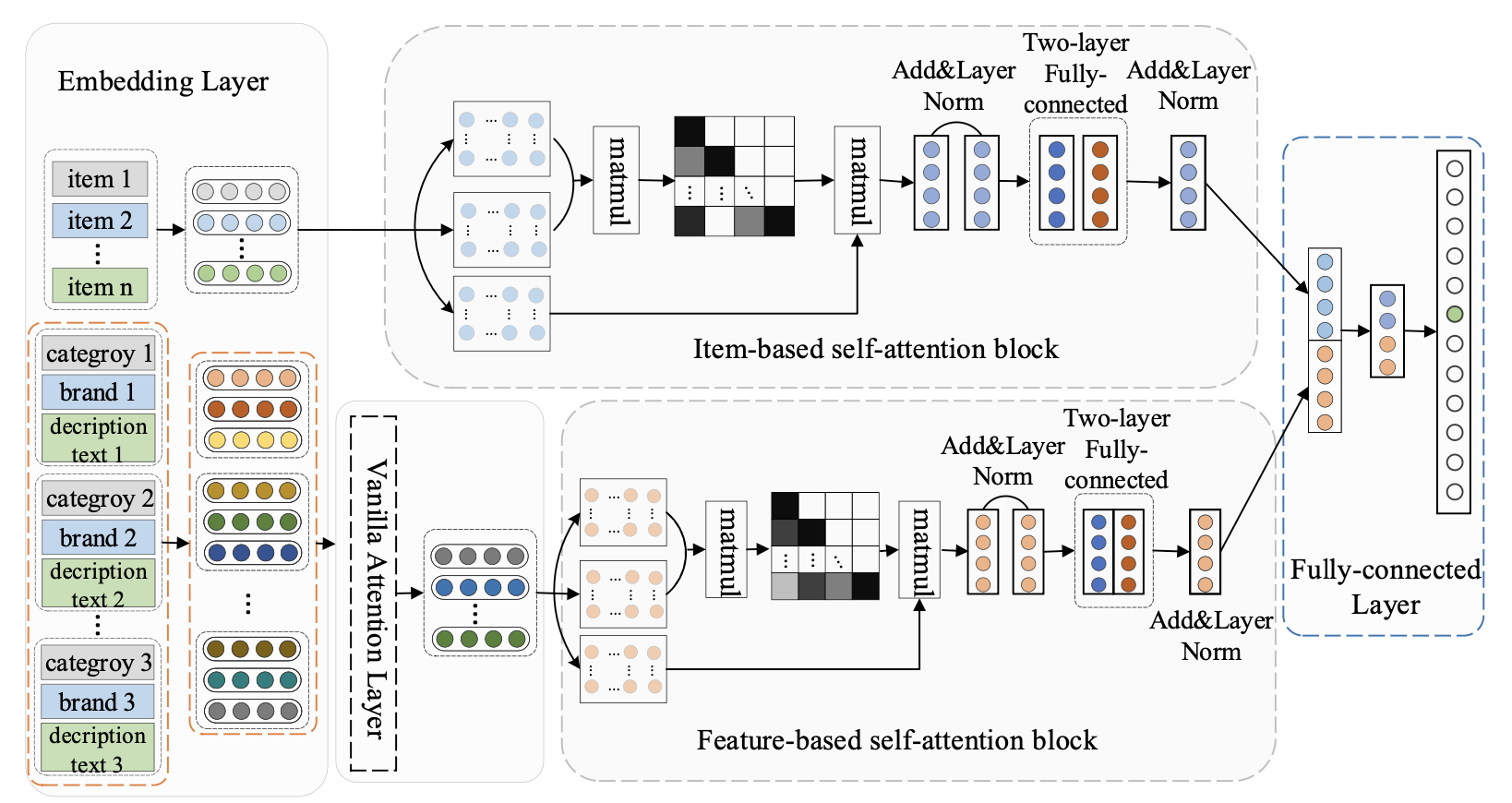

Model architecture

- Instead of using a combined representation of an item and its features, apply separate self-attention blocks to the item sequence and the feature sequence.

- Use vanilla attention to help the feature-level self-attention block adaptively select an item's essential features.

- Combine the item-level and feature-level contexts and feed them into a fully-connected layer for the recommendation.

- Input construction

- user’s action sequence → a fixed-length sequence

- item id, category, brand (seller): one-hot vectors → dense vector representations

- item text (title, description): topic modeling extracts five topical keywords → Word2vec gives a word vector for each keyword

- the five keyword vectors are combined into a single vector with mean pooling

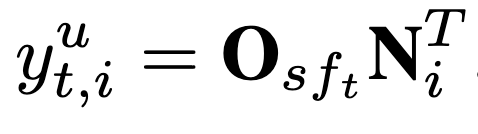
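
The input steps above can be sketched in plain Python. This is a minimal illustration, not the paper's or RecBole's implementation: the helper names (`pad_or_truncate`, `mean_pool`, `vanilla_attention`), the left-padding with id 0, and the single scoring vector `w` are all assumptions made for this example.

```python
import math

def pad_or_truncate(seq, max_len, pad_id=0):
    """Keep the most recent max_len actions; left-pad shorter sequences.
    Left-padding with id 0 is an assumption of this sketch (max_len > 0)."""
    seq = seq[-max_len:]
    return [pad_id] * (max_len - len(seq)) + seq

def mean_pool(vectors):
    """Average equal-length vectors element-wise (e.g. five keyword vectors)."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def vanilla_attention(feature_vectors, w):
    """Score each feature vector with a hypothetical learned vector w,
    normalize the scores with softmax, and return the weighted sum."""
    scores = [sum(wi * vi for wi, vi in zip(w, v)) for v in feature_vectors]
    weights = softmax(scores)
    dim = len(feature_vectors[0])
    pooled = [sum(a * v[d] for a, v in zip(weights, feature_vectors))
              for d in range(dim)]
    return pooled, weights

# One item: category, brand, and pooled text vectors (toy 2-d values).
text_vec = mean_pool([[1.0, 2.0], [3.0, 4.0]])           # [2.0, 3.0]
item_features = [[3.0, 0.0], [0.0, 3.0], text_vec]
feature_repr, attn = vanilla_attention(item_features, w=[0.5, -0.5])
padded = pad_or_truncate([5, 9, 2], max_len=5)           # [0, 0, 5, 9, 2]
```

The attention weights sum to 1, so `feature_repr` is a convex combination of the category, brand, and text vectors. The sequence of such per-item vectors is what the feature-level self-attention block would consume.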

- Final prediction

- a dot product operation between the combined output and the item embedding matrix

- item embedding matrix: holds one embedding vector per item

- result: the user’s preference for items

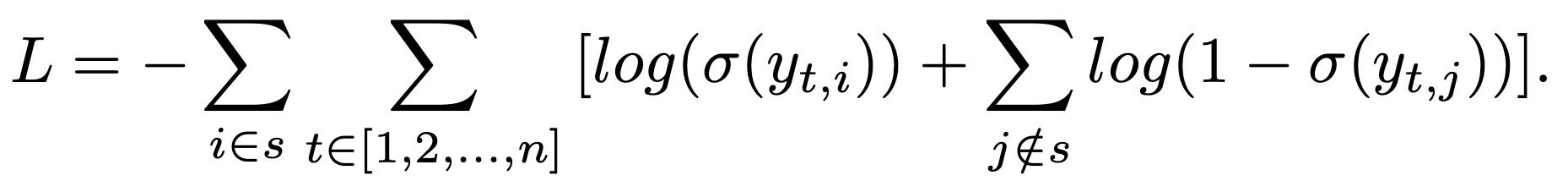
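
As a rough sketch of the prediction step, the snippet below concatenates an item-level and a feature-level context vector, passes them through a fully-connected layer, and scores every item with a dot product. The function names, the toy weights, and the tiny dimensions are assumptions for illustration only; the real model learns these parameters.

```python
def linear(x, weight, bias):
    """Fully-connected layer; weight holds one row per output dimension."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]

def score_items(item_ctx, feature_ctx, weight, bias, item_embeddings):
    """Combine both contexts, then take a dot product with each item embedding."""
    combined = linear(item_ctx + feature_ctx, weight, bias)  # list concat
    return [sum(c * e for c, e in zip(combined, emb)) for emb in item_embeddings]

# Toy values: 2-d contexts, a 4 -> 2 projection, and three candidate items.
item_ctx = [1.0, 0.0]
feature_ctx = [0.0, 1.0]
weight = [[1.0, 0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0, 1.0]]
bias = [0.0, 0.0]
item_embeddings = [[1.0, 0.0], [0.0, 2.0], [-1.0, -1.0]]

scores = score_items(item_ctx, feature_ctx, weight, bias, item_embeddings)
# scores == [1.0, 2.0, -2.0]; the item at index 1 ranks first
best_item = max(range(len(scores)), key=scores.__getitem__)
```

A higher score means a stronger predicted preference, so recommendations come from sorting items by score.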

- Training

- binary cross-entropy loss (using a negative item sample)
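
A minimal sketch of this loss for a single time step, assuming one positive (observed next) item and one sampled negative item. The function names are hypothetical, and the softplus form is only a numerically stable way to write `-log(sigmoid(pos)) - log(1 - sigmoid(neg))`.

```python
import math

def softplus(z):
    """Stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def bce_with_negative(pos_score, neg_score):
    """Binary cross-entropy on raw scores:
    -log(sigmoid(pos_score)) - log(1 - sigmoid(neg_score))."""
    return softplus(-pos_score) + softplus(neg_score)

# The loss shrinks as the positive item outscores the negative one.
loss_uninformed = bce_with_negative(0.0, 0.0)    # 2 * ln(2)
loss_separated = bce_with_negative(5.0, -5.0)    # close to 0
```

In training, this quantity would be summed over the non-padded positions of every sequence in a batch.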

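(Personal opinion) Limitations

- Relationships between features are not sufficiently considered during training

- Recommendation explainability: limited, since the focus is on performance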