MLFQS

Multi Level Feedback Queue Scheduling

./threads/fixed_point.h

새로 파일 생성해서 fixed_point.h 파일을 만들어주자.

#include <stdint.h>

#define F (1 << 14) /* fixed point 1 */

#define INT_MAX ((1 << 31) - 1)

#define INT_MIN (-(1 << 31))

// x and y denote fixed_point numbers in 17.14 format

// n is an integer

int int_to_fp(int n); /* integer를 fixed point로 전환 */

int fp_to_int_round(int x); /* FP를 int로 전환(반올림) */

int fp_to_int(int x); /* FP를 int로 전환(버림) */

int add_fp(int x, int y); /*FP의덧셈*/

int add_mixed(int x, int n); /* FP와 int의 덧셈 */

int sub_fp(int x, int y); /* FP의 뺄셈(x-y) */

int sub_mixed(int x, int n); /* FP와 int의 뺄셈(x-n) */

int mult_fp(int x, int y); /*FP의곱셈*/

int mult_mixed(int x, int y); /* FP와 int의 곱셈 */

int div_fp(int x, int y); /* FP의 나눗셈(x/y) */

int div_mixed(int x, int n); /* FP와 int 나눗셈(x/n) */

/* 함수 본체 */

/* integer를 fixed point로 전환 */

int int_to_fp(int n){return n * F;}

/* FP를 int로 전환(버림) */

int fp_to_int(int x){return x / F;}

/* FP를 int로 전환(반올림) */

int fp_to_int_round(int x){

return x >= 0 ? (x + F / 2) / F : (x - F / 2) / F;

}

/*FP의덧셈*/

int add_fp(int x, int y){return x + y;}

/* FP와 int의 덧셈 */

int add_mixed(int x, int n){return x + n * F;}

/* FP의 뺄셈(x-y) */

int sub_fp(int x, int y){return x - y;}

/* FP와 int의 뺄셈(x-n) */

int sub_mixed(int x, int n){return x - n * F;}

/* FP의곱셈 */

int mult_fp(int x, int y){return (int)((((int64_t)x) * y) / F);}

/* FP와 int의 곱셈 */

int mult_mixed(int x, int n){return x * n;}

/* FP의 나눗셈(x/y) */

int div_fp(int x, int y){return (int)((((int64_t)x) * F) / y);}

/* FP와 int 나눗셈(x/n) */

int div_mixed(int x, int n){return x / n;}thread.h

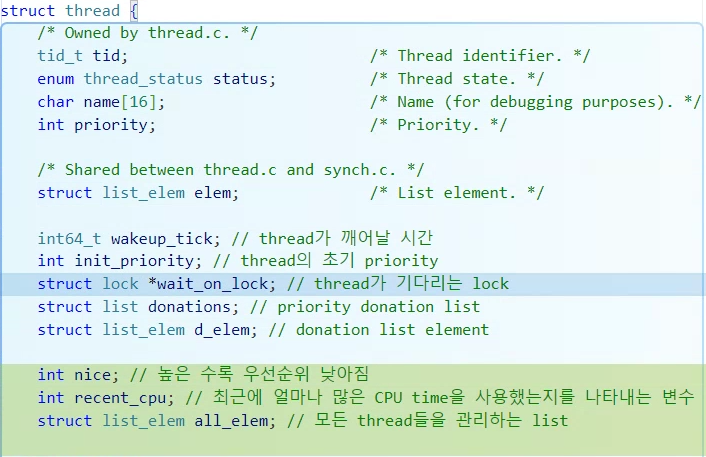

struct thread {

/* Owned by thread.c. */

tid_t tid; /* Thread identifier. */

enum thread_status status; /* Thread state. */

char name[16]; /* Name (for debugging purposes). */

int priority; /* Priority. */

/* Shared between thread.c and synch.c. */

struct list_elem elem; /* List element. */

int64_t wakeup_tick; // thread가 깨어날 시간

int init_priority; // thread의 초기 priority

struct lock *wait_on_lock; // thread가 기다리는 lock

struct list donations; // priority donation list

struct list_elem d_elem; // donation list element

int nice; // 높은 수록 우선순위 낮아짐

int recent_cpu; // 최근에 얼마나 많은 CPU time을 사용했는지를 나타내는 변수

struct list_elem all_elem; // 모든 thread들을 관리하는 list

#ifdef USERPROG

/* Owned by userprog/process.c. */

uint64_t *pml4; /* Page map level 4 */

#endif

#ifdef VM

/* Table for whole virtual memory owned by thread. */

struct supplemental_page_table spt;

#endif

/* Owned by thread.c. */

struct intr_frame tf; /* Information for switching */

unsigned magic; /* Detects stack overflow. */

};

...

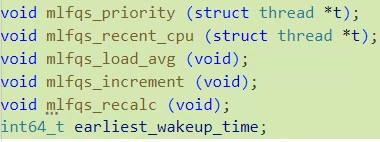

void mlfqs_priority (struct thread *t);

void mlfqs_recent_cpu (struct thread *t);

void mlfqs_load_avg (void);

void mlfqs_increment (void);

void mlfqs_recalc (void);

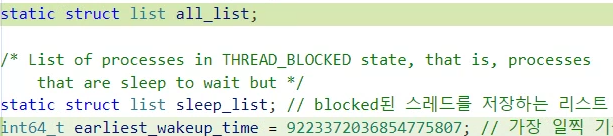

int64_t earliest_wakeup_time;thread.c

함수 원형 및 변수

MLFQS함수를 구현하기 위해 새로 작성한 함수들은

그중에서 sleep_list에 있는 스레드의 우선순위 중 가장 일찍 일어나는 스레드의

기상시간을 저장한 변수 earliest_wakeup_time을 timer.c에서도 쓰이게 되어서

static 키워드를 제거해줬다.

static 키워드가 earliest_wakeup_time에 남아있으면

...

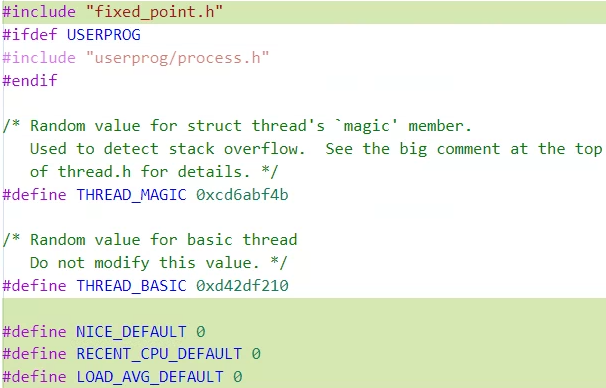

#include "fixed_point.h" //

...

#define NICE_DEFAULT 0

#define RECENT_CPU_DEFAULT 0

#define LOAD_AVG_DEFAULT 0

...

static struct list all_list;

...

int64_t earliest_wakeup_time = 9223372036854775807; // 가장 일찍 기상하는 시간

...

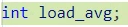

int load_avg;

...

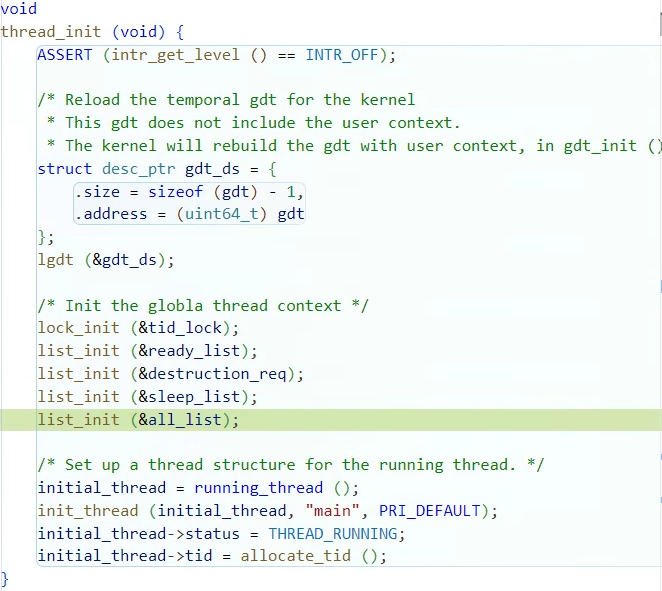

void thread_init (void)

실행 중인 대부분의 스레드를 담는 리스트 all_list를 초기화해줘야한다.

thread_init(void)에서 all_list를 초기화해줘야한다.

void

thread_init (void) {

ASSERT (intr_get_level () == INTR_OFF);

/* Reload the temporal gdt for the kernel

* This gdt does not include the user context.

* The kernel will rebuild the gdt with user context, in gdt_init (). */

struct desc_ptr gdt_ds = {

.size = sizeof (gdt) - 1,

.address = (uint64_t) gdt

};

lgdt (&gdt_ds);

/* Init the globla thread context */

lock_init (&tid_lock);

list_init (&ready_list);

list_init (&destruction_req);

list_init (&sleep_list);

list_init (&all_list);

/* Set up a thread structure for the running thread. */

initial_thread = running_thread ();

init_thread (initial_thread, "main", PRI_DEFAULT);

initial_thread->status = THREAD_RUNNING;

initial_thread->tid = allocate_tid ();

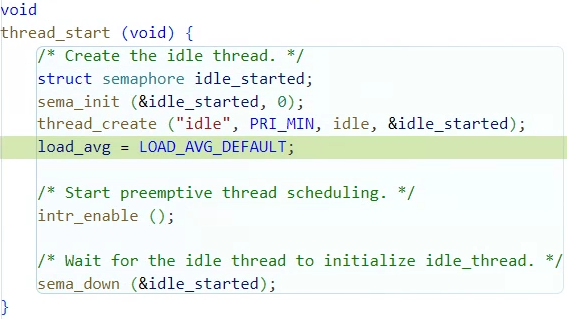

}void thread_start (void)

void

thread_start (void) {

/* Create the idle thread. */

struct semaphore idle_started;

sema_init (&idle_started, 0);

thread_create ("idle", PRI_MIN, idle, &idle_started);

load_avg = LOAD_AVG_DEFAULT;

/* Start preemptive thread scheduling. */

intr_enable ();

/* Wait for the idle thread to initialize idle_thread. */

sema_down (&idle_started);

}void thread_exit (void)

스레드를 종료할 때 all_list에 담긴 allelem을 지워줘서

불필요한 오버헤드를 방지하자.

/* Deschedules the current thread and destroys it. Never

returns to the caller. */

void

thread_exit (void) {

ASSERT (!intr_context ());

#ifdef USERPROG

process_exit ();

#endif

/* Just set our status to dying and schedule another process.

We will be destroyed during the call to schedule_tail(). */

intr_disable ();

list_remove(&thread_current()->all_elem);

do_schedule (THREAD_DYING);

NOT_REACHED ();

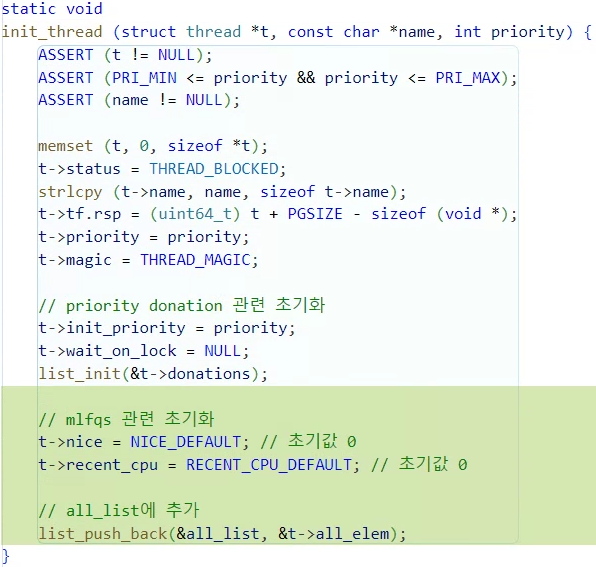

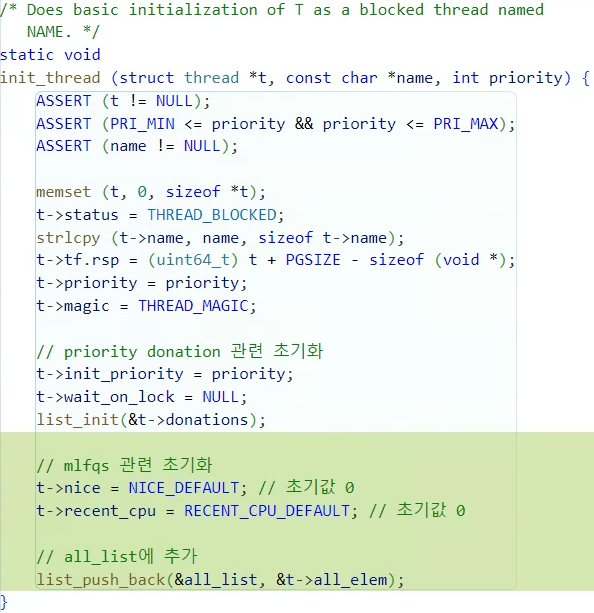

}static void init_thread(...)

스레드를 사용하기 위해 초기화할 때마다 all_list에 allelem을 담아서

모든 스레드의 정보를 all_list가 알 수 있도록 한다.

/* Does basic initialization of T as a blocked thread named

NAME. */

static void

init_thread (struct thread *t, const char *name, int priority) {

ASSERT (t != NULL);

ASSERT (PRI_MIN <= priority && priority <= PRI_MAX);

ASSERT (name != NULL);

memset (t, 0, sizeof *t);

t->status = THREAD_BLOCKED;

strlcpy (t->name, name, sizeof t->name);

t->tf.rsp = (uint64_t) t + PGSIZE - sizeof (void *);

t->priority = priority;

t->magic = THREAD_MAGIC;

// priority donation 관련 초기화

t->init_priority = priority;

t->wait_on_lock = NULL;

list_init(&t->donations);

// mlfqs 관련 초기화

t->nice = NICE_DEFAULT; // 초기값 0

t->recent_cpu = RECENT_CPU_DEFAULT; // 초기값 0

// all_list에 추가

list_push_back(&all_list, &t->all_elem);

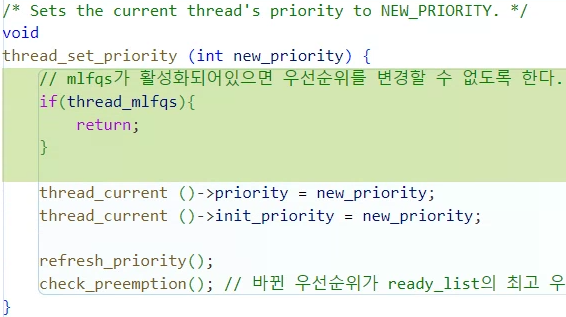

}void thread_set_priority (int new_priority)

지난 Priority Scheduling에서 구현했던 메서드인데

우선순위 방식을 mlfqs로 바꿀 때는 이 함수를 비활성화해줘야한다.

다른 방식으로 우선순위를 바꾸기 때문이다.

mlfqs방식을 사용하는 경우 thread_mlfqs==true이므로

이때는 그냥 return하도록 작성했다.

void

thread_set_priority (int new_priority) {

// mlfqs가 활성화되어있으면 우선순위를 변경할 수 없도록 한다.

if(thread_mlfqs){

return;

}

thread_current ()->priority = new_priority;

thread_current ()->init_priority = new_priority;

refresh_priority();

check_preemption(); // 바뀐 우선순위가 ready_list의 최고 우선순위보다 낮으면 양도

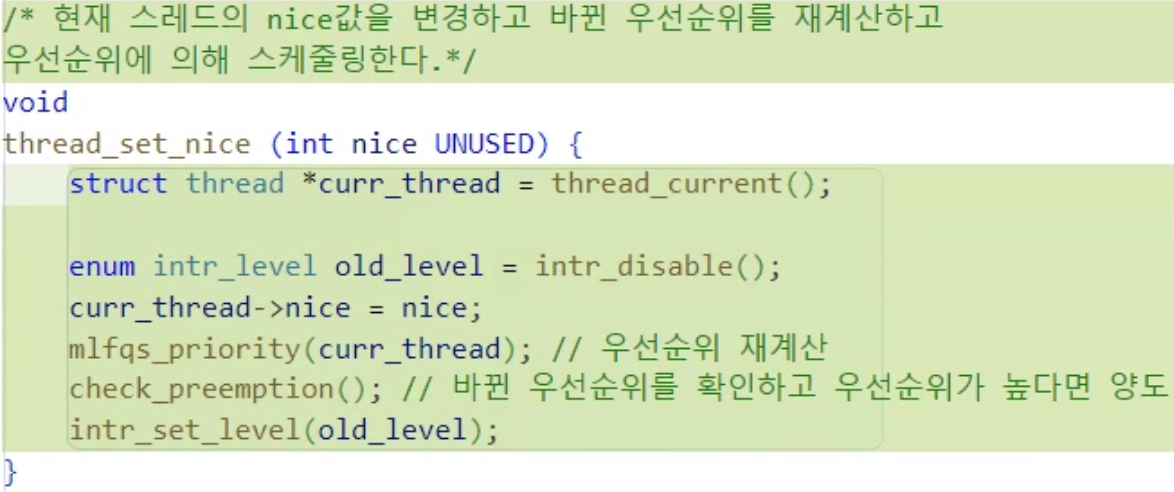

}void thread_set_nice (int nice UNUSED)

/* 현재 스레드의 nice값을 변경하고 바뀐 우선순위를 재계산하고

우선순위에 의해 스케줄링한다.*/

void

thread_set_nice (int nice UNUSED) {

struct thread *curr_thread = thread_current();

enum intr_level old_level = intr_disable();

curr_thread->nice = nice;

mlfqs_priority(curr_thread); // 우선순위 재계산

check_preemption(); // 바뀐 우선순위를 확인하고 우선순위가 높다면 양도

intr_set_level(old_level);

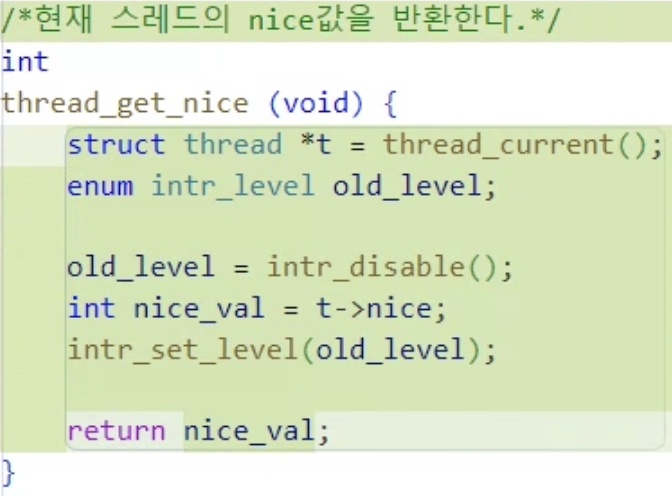

}int thread_get_nice (void)

/*현재 스레드의 nice값을 반환한다.*/

int

thread_get_nice (void) {

struct thread *t = thread_current();

enum intr_level old_level;

old_level = intr_disable();

int nice_val = t->nice;

intr_set_level(old_level);

return nice_val;

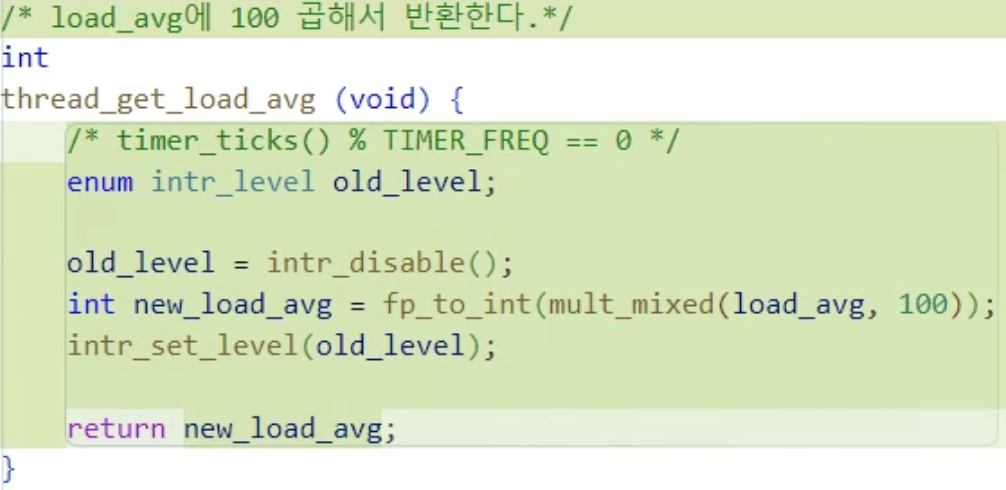

}int thread_get_load_avg (void)

/* load_avg에 100 곱해서 반환한다.*/

int

thread_get_load_avg (void) {

/* timer_ticks() % TIMER_FREQ == 0 */

enum intr_level old_level;

old_level = intr_disable();

int new_load_avg = fp_to_int(mult_mixed(load_avg, 100));

intr_set_level(old_level);

return new_load_avg;

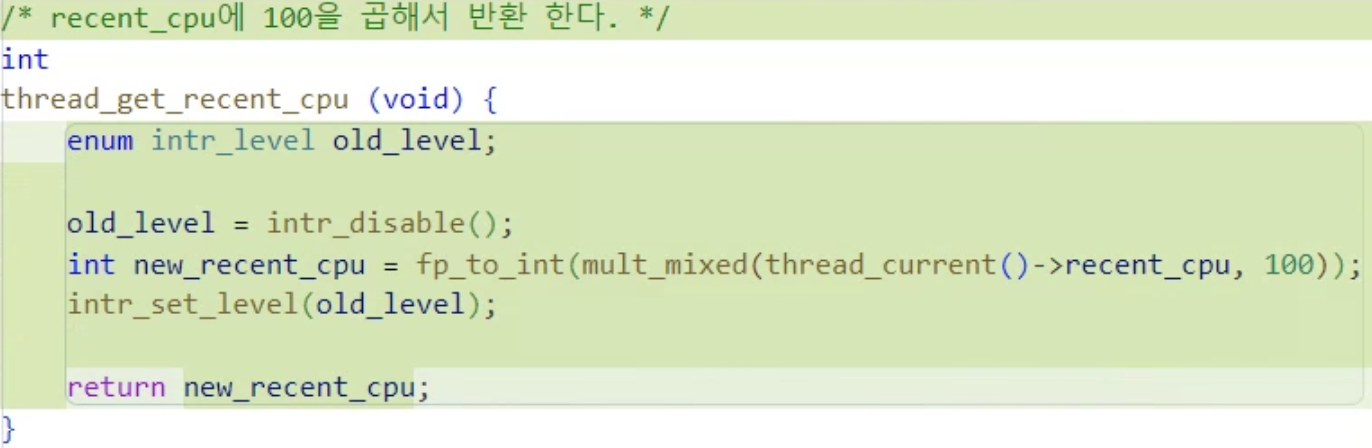

}int thread_get_recent_cpu (void)

/* recent_cpu에 100을 곱해서 반환 한다. */

int

thread_get_recent_cpu (void) {

enum intr_level old_level;

old_level = intr_disable();

int new_recent_cpu = fp_to_int(mult_mixed(thread_current()->recent_cpu, 100));

intr_set_level(old_level);

return new_recent_cpu;

}static void init_thread (...)

static void

init_thread (struct thread *t, const char *name, int priority) {

ASSERT (t != NULL);

ASSERT (PRI_MIN <= priority && priority <= PRI_MAX);

ASSERT (name != NULL);

memset (t, 0, sizeof *t);

t->status = THREAD_BLOCKED;

strlcpy (t->name, name, sizeof t->name);

t->tf.rsp = (uint64_t) t + PGSIZE - sizeof (void *);

t->priority = priority;

t->magic = THREAD_MAGIC;

// priority donation 관련 초기화

t->init_priority = priority;

t->wait_on_lock = NULL;

list_init(&t->donations);

// mlfqs 관련 초기화

t->nice = NICE_DEFAULT; // 초기값 0

t->recent_cpu = RECENT_CPU_DEFAULT; // 초기값 0

// all_list에 추가

list_push_back(&all_list, &t->all_elem);

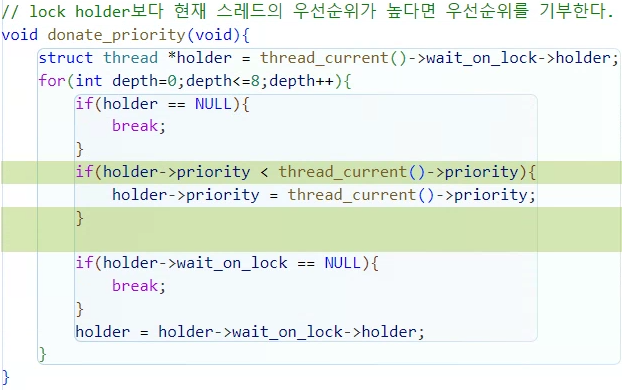

}void donate_priority(void)

// lock holder보다 현재 스레드의 우선순위가 높다면 우선순위를 기부한다.

void donate_priority(void){

struct thread *holder = thread_current()->wait_on_lock->holder;

for(int depth=0;depth<=8;depth++){

if(holder == NULL){

break;

}

if(holder->priority < thread_current()->priority){

holder->priority = thread_current()->priority;

}

if(holder->wait_on_lock == NULL){

break;

}

holder = holder->wait_on_lock->holder;

}

}mlfqs 관련함수들

/* 입력한 스레드의 priority 계산 */

void mlfqs_priority(struct thread *t){

/* idle스레드가 아닌 경우에만 실행*/

if (t != idle_thread) {

int rec_by_4 = div_mixed(t->recent_cpu, 4);

int nice2 = 2 * t->nice;

int to_sub = add_mixed(rec_by_4, nice2);

int tmp = sub_mixed(to_sub, (int)PRI_MAX);

int pri_result = fp_to_int(sub_fp(0, tmp));

if (pri_result < PRI_MIN)

pri_result = PRI_MIN;

if (pri_result > PRI_MAX)

pri_result = PRI_MAX;

t->priority = pri_result;

}

}

/* 입력한 스레드의 recent_cpu 계산 */

void mlfqs_recent_cpu(struct thread *t){

/* idle스레드가 아닌 경우에만 실행*/

if (t != idle_thread) {

int load_avg_2 = mult_mixed(load_avg, 2);

int load_avg_2_1 = add_mixed(load_avg_2, 1);

int frac = div_fp(load_avg_2, load_avg_2_1);

int tmp = mult_fp(frac, t->recent_cpu);

int result = add_mixed(tmp, t->nice);

if ((result >> 31) == (-1) >> 31) {

result = 0;

}

t->recent_cpu = result;

}

}

/* load_avg 계산 */

void mlfqs_load_avg(void){

/* load_avg = (59/60) * load_avg + (1/60) * ready_threads;

load_avg는 항상 0 보다 크다. */

int a = div_fp(int_to_fp(59), int_to_fp(60));

int b = div_fp(int_to_fp(1), int_to_fp(60));

int load_avg2 = mult_fp(a, load_avg);

int ready_thread = (int)list_size(&ready_list);

ready_thread = (thread_current() == idle_thread) ? ready_thread : ready_thread + 1;

int ready_thread2 = mult_mixed(b, ready_thread);

int result = add_fp(load_avg2, ready_thread2);

load_avg = result;

}

/* 현재 스레드의 recent_cpu값을 1 증가 */

void mlfqs_increment(void) {

if (thread_current() != idle_thread) {

int cur_recent_cpu = thread_current()->recent_cpu;

thread_current()->recent_cpu = add_mixed(cur_recent_cpu, 1);

}

}

/* 모든 스레드의 recent_cpu 재계산 */

void mlfqs_recalc_recent_cpu(void) {

for (struct list_elem *tmp = list_begin(&all_list); tmp != list_end(&all_list); tmp = list_next(tmp)) {

mlfqs_recent_cpu(list_entry(tmp, struct thread, all_elem));

}

}

/* 모든 스레드의 priority 재계산 */

void mlfqs_recalc_priority(void) {

for (struct list_elem *tmp = list_begin(&all_list); tmp != list_end(&all_list); tmp = list_next(tmp)) {

mlfqs_priority(list_entry(tmp, struct thread, all_elem));

}

}synch.c

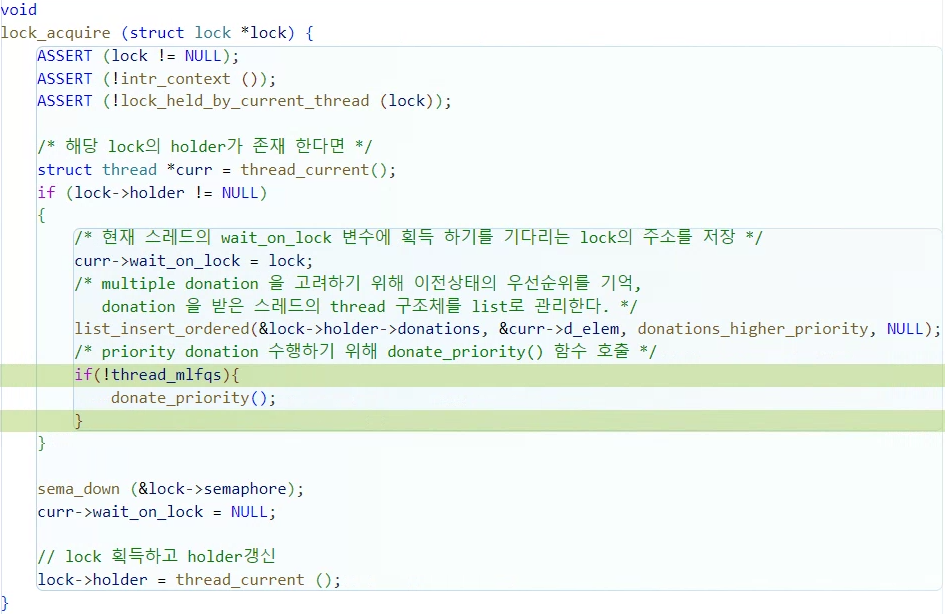

void lock_acquire(...)

/* Acquires LOCK, sleeping until it becomes available if

necessary. The lock must not already be held by the current

thread.

This function may sleep, so it must not be called within an

interrupt handler. This function may be called with

interrupts disabled, but interrupts will be turned back on if

we need to sleep. */

void

lock_acquire (struct lock *lock) {

ASSERT (lock != NULL);

ASSERT (!intr_context ());

ASSERT (!lock_held_by_current_thread (lock));

/* 해당 lock의 holder가 존재 한다면 */

struct thread *curr = thread_current();

if (lock->holder != NULL)

{

/* 현재 스레드의 wait_on_lock 변수에 획득 하기를 기다리는 lock의 주소를 저장 */

curr->wait_on_lock = lock;

/* multiple donation 을 고려하기 위해 이전상태의 우선순위를 기억,

donation 을 받은 스레드의 thread 구조체를 list로 관리한다. */

list_insert_ordered(&lock->holder->donations, &curr->d_elem, donations_higher_priority, NULL);

/* priority donation 수행하기 위해 donate_priority() 함수 호출 */

if(!thread_mlfqs){

donate_priority();

}

}

sema_down (&lock->semaphore);

curr->wait_on_lock = NULL;

// lock 획득하고 holder갱신

lock->holder = thread_current ();

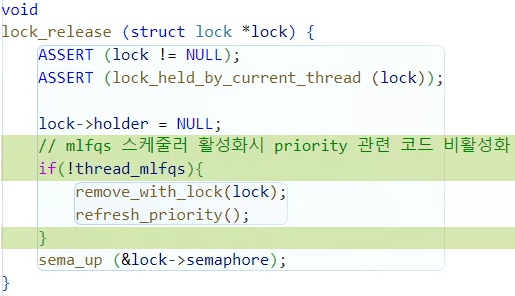

}void lock_release (...)

/* Releases LOCK, which must be owned by the current thread.

This is lock_release function.

An interrupt handler cannot acquire a lock, so it does not

make sense to try to release a lock within an interrupt

handler. */

void

lock_release (struct lock *lock) {

ASSERT (lock != NULL);

ASSERT (lock_held_by_current_thread (lock));

lock->holder = NULL;

// mlfqs 스케줄러 활성화시 priority 관련 코드 비활성화

if(!thread_mlfqs){

remove_with_lock(lock);

refresh_priority();

}

sema_up (&lock->semaphore);

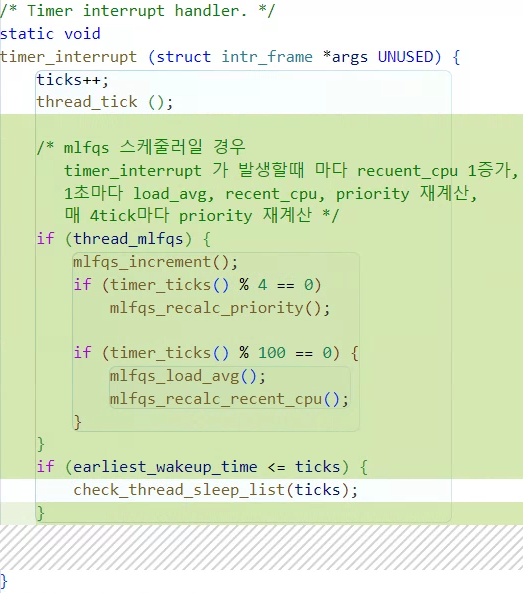

}timer.c

/* Timer interrupt handler. */

static void

timer_interrupt (struct intr_frame *args UNUSED) {

ticks++;

thread_tick ();

/* mlfqs 스케줄러일 경우

timer_interrupt 가 발생할때 마다 recuent_cpu 1증가,

1초마다 load_avg, recent_cpu, priority 재계산,

매 4tick마다 priority 재계산 */

if (thread_mlfqs) {

mlfqs_increment();

if (timer_ticks() % 4 == 0)

mlfqs_recalc_priority();

if (timer_ticks() % 100 == 0) {

mlfqs_load_avg();

mlfqs_recalc_recent_cpu();

}

}

if (earliest_wakeup_time <= ticks) {

check_thread_sleep_list(ticks);

}

}트러블슈팅

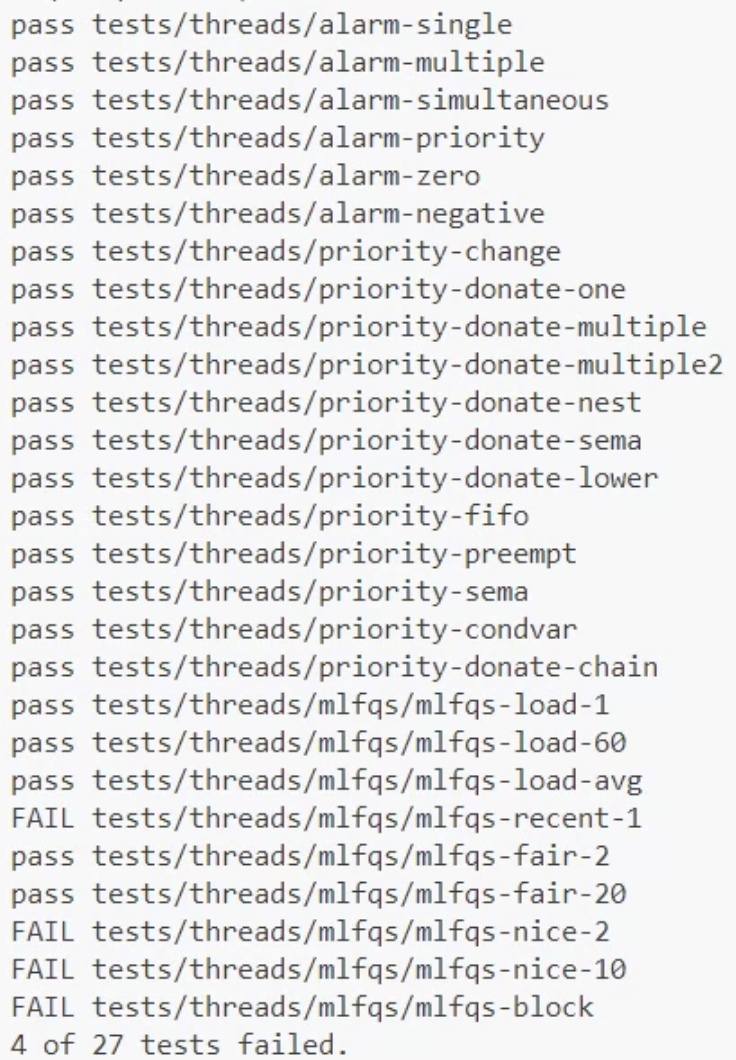

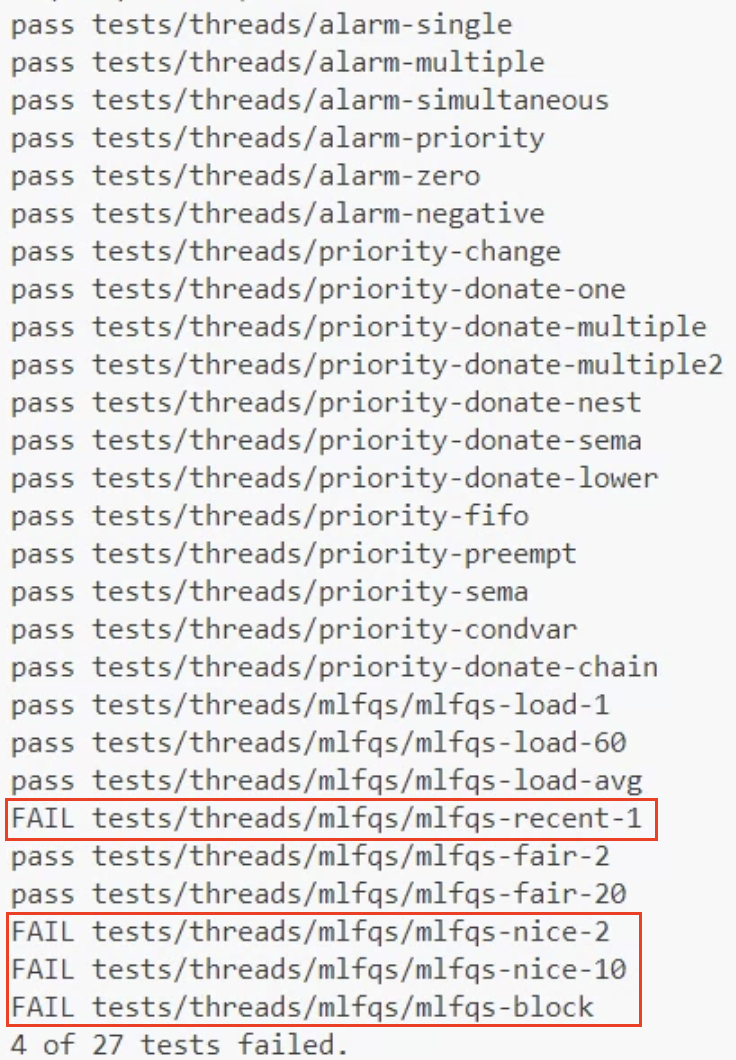

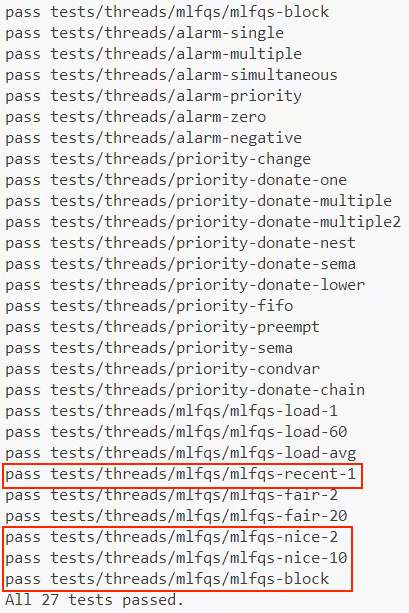

mlfqs 테스트를 했는데 4개의 테스트에서 통과하지 못했다.

pass tests/threads/alarm-single

pass tests/threads/alarm-multiple

pass tests/threads/alarm-simultaneous

pass tests/threads/alarm-priority

pass tests/threads/alarm-zero

pass tests/threads/alarm-negative

pass tests/threads/priority-change

pass tests/threads/priority-donate-one

pass tests/threads/priority-donate-multiple

pass tests/threads/priority-donate-multiple2

pass tests/threads/priority-donate-nest

pass tests/threads/priority-donate-sema

pass tests/threads/priority-donate-lower

pass tests/threads/priority-fifo

pass tests/threads/priority-preempt

pass tests/threads/priority-sema

pass tests/threads/priority-condvar

pass tests/threads/priority-donate-chain

pass tests/threads/mlfqs/mlfqs-load-1

pass tests/threads/mlfqs/mlfqs-load-60

pass tests/threads/mlfqs/mlfqs-load-avg

FAIL tests/threads/mlfqs/mlfqs-recent-1

pass tests/threads/mlfqs/mlfqs-fair-2

pass tests/threads/mlfqs/mlfqs-fair-20

FAIL tests/threads/mlfqs/mlfqs-nice-2

FAIL tests/threads/mlfqs/mlfqs-nice-10

FAIL tests/threads/mlfqs/mlfqs-block

4 of 27 tests failed.원인과 해결 방법

all_list을 선언하기만 하고 초기화를 안 해줬었는데 해줬고

스레드를 초기화하는 thread_init을 할 때마다 all_list에

struct thread의 멤버변수 all_elem을 삽입해주지 않았었다.

테스트 코드의 함수를 실행하는 스레드는 init만 할 뿐 create가 되지 않기 때문에

thread_create에서 all_list를 초기화하면 문제가 발생했다.

모든 스레드의 우선순위를 4ticks마다 재계산을 해야 제대로 작동하는데

all_list에 all_elem을 넣어주지 않았기 때문에 발생한 문제였다.

위에 적힌코드에는 이미 해결한 코드가 구현되어있다.

해결 후 테스트

pass tests/threads/mlfqs/mlfqs-block

pass tests/threads/alarm-single

pass tests/threads/alarm-multiple

pass tests/threads/alarm-simultaneous

pass tests/threads/alarm-priority

pass tests/threads/alarm-zero

pass tests/threads/alarm-negative

pass tests/threads/priority-change

pass tests/threads/priority-donate-one

pass tests/threads/priority-donate-multiple

pass tests/threads/priority-donate-multiple2

pass tests/threads/priority-donate-nest

pass tests/threads/priority-donate-sema

pass tests/threads/priority-donate-lower

pass tests/threads/priority-fifo

pass tests/threads/priority-preempt

pass tests/threads/priority-sema

pass tests/threads/priority-condvar

pass tests/threads/priority-donate-chain

pass tests/threads/mlfqs/mlfqs-load-1

pass tests/threads/mlfqs/mlfqs-load-60

pass tests/threads/mlfqs/mlfqs-load-avg

pass tests/threads/mlfqs/mlfqs-recent-1

pass tests/threads/mlfqs/mlfqs-fair-2

pass tests/threads/mlfqs/mlfqs-fair-20

pass tests/threads/mlfqs/mlfqs-nice-2

pass tests/threads/mlfqs/mlfqs-nice-10

pass tests/threads/mlfqs/mlfqs-block

All 27 tests passed.