🤔 What is BoW?

BoW, short for Bag of Words, is a way of vectorizing text with a bag of words. For each word in the bag, you count how many times that word appears in the text, and those counts become the vector.

For example, suppose we have the following word bag.

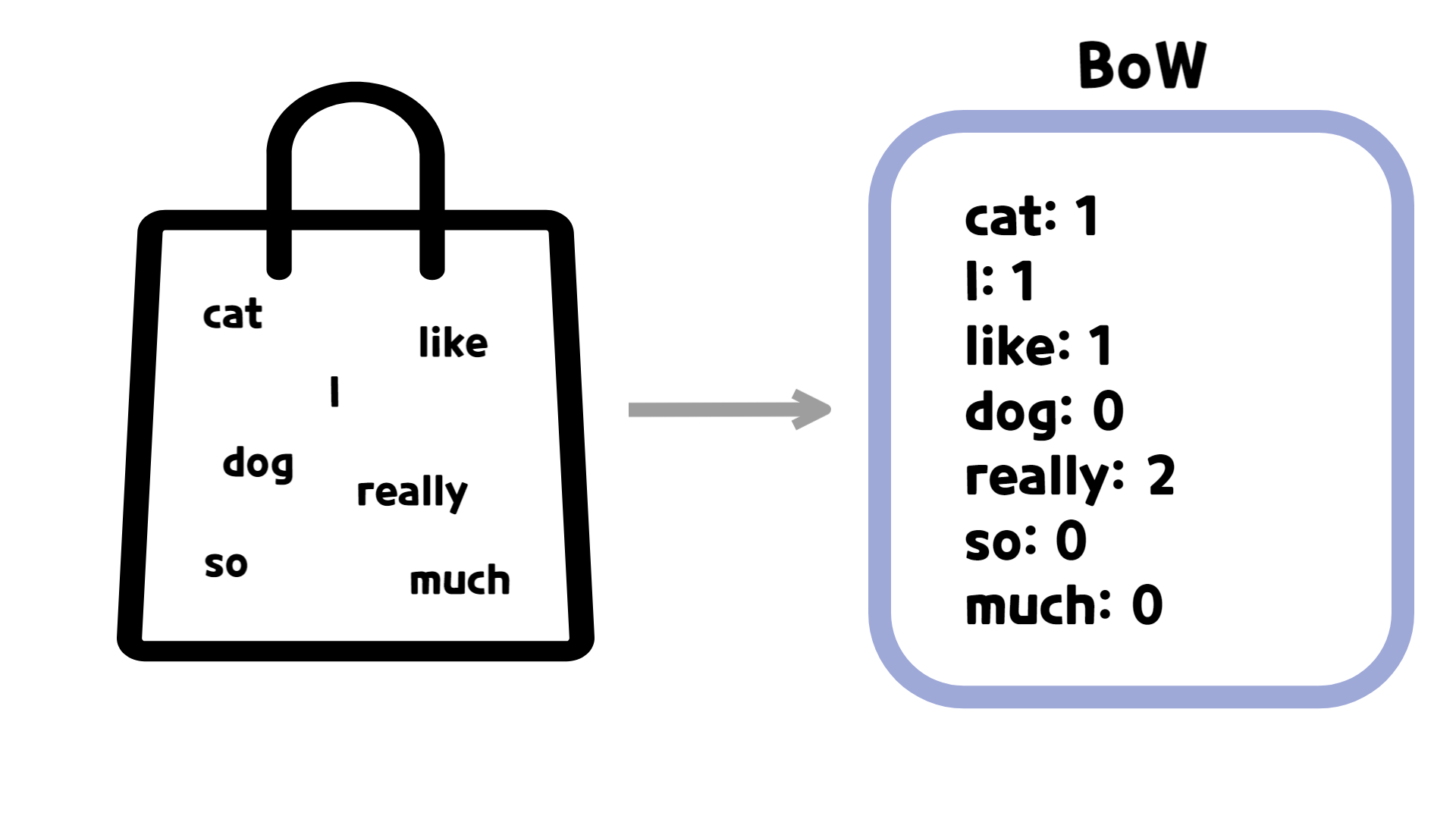

Given the sentence "I really really like dog.", BoW counts how many times each word in the bag occurs in it.

In this way, BoW vectorizes text by the number of occurrences of each word in the bag. Vectorizing this sentence gives [1, 1, 1, 0, 2, 0, 0].
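The counting step can be sketched in a few lines. The `vocabulary` list below is hypothetical: it is chosen only so that the result matches the vector above, and the word order in the original figure may differ. The tokenizer (drop periods, split on whitespace) is also a simplifying assumption.

```python
from collections import Counter

# Hypothetical word bag; the actual words and order in the figure may differ.
vocabulary = ["I", "like", "dog", "cat", "really", "hate", "you"]

def bow_vector(sentence: str, vocabulary: list[str]) -> list[int]:
    # Naive tokenizer: drop periods, then split on whitespace.
    tokens = sentence.replace(".", "").split()
    counts = Counter(tokens)
    # One count per vocabulary word, in vocabulary order (0 if absent).
    return [counts[word] for word in vocabulary]

print(bow_vector("I really really like dog.", vocabulary))
# [1, 1, 1, 0, 2, 0, 0]
```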

💻 Implementing BoW

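To implement BoW, we first need to build the word bag. The bag must not contain duplicate words, and each word needs an index so that we can tell which position in the vector belongs to which word.

Suppose we run BoW on the following sentences.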

    sentences = ["I really want to stay at your house",
                 "I wish that I could turn back time",
                 "I need your time"]

In this case, the word bag must be as long as the number of distinct words across the three sentences.

    # word_tokenize requires NLTK's Punkt tokenizer data to be downloaded beforehand.
    from nltk.tokenize import word_tokenize

    word_bag = {}
    for sent in sentences:
        tokens = word_tokenize(sent)
        for token in tokens:
            # Give each new word the next free index.
            if token not in word_bag:
                word_bag[token] = len(word_bag)

    print(word_bag)

    # Output
    # {'I': 0, 'really': 1, 'want': 2, 'to': 3, 'stay': 4, 'at': 5, 'your': 6, 'house': 7, 'wish': 8, 'that': 9, 'could': 10, 'turn': 11, 'back': 12, 'time': 13, 'need': 14}

Running BoW with this word bag looks like this.

    from nltk.tokenize import word_tokenize

    result = []
    for sent in sentences:
        # Count whole tokens rather than substrings of the raw string,
        # so that "at" is not matched inside "that".
        tokens = word_tokenize(sent)
        vector = [tokens.count(word) for word in word_bag]
        result.append(vector)

    print(result)

    # Output
    # [[1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0],
    #  [2, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0],
    #  [1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1]]

You can see the frequencies of the words in the bag listed in index order. Wrapped up as a function, this becomes:

    import re
    from nltk.tokenize import word_tokenize

    def vector_word_of_bag(sents: list[str]) -> list[list[int]]:
        sents = [re.sub(r"[^a-zA-Z0-9\s]", "", sent) for sent in sents]  # Remove special characters.
        tokens: list[list[str]] = [word_tokenize(sent) for sent in sents]  # Tokenize each sentence.

        # Build the word bag: each distinct token gets the next free index.
        word_bag: dict[str, int] = {}
        for token in sum(tokens, []):
            if token not in word_bag:
                word_bag[token] = len(word_bag)

        # Count every bag word in each tokenized sentence (0 if absent).
        result: list[list[int]] = []
        for sent in tokens:
            temp: list[int] = []
            for word in word_bag:
                temp.append(sent.count(word))
            result.append(temp)
        return result

Besides implementing it by hand, BoW is also available through the CountVectorizer() class in Python's scikit-learn library.

💡 What is scikit-learn?

scikit-learn is a Python library that mainly provides machine learning functionality.

Its CountVectorizer() class vectorizes text based on word frequency. (The library has to be installed with pip first.)

    from sklearn.feature_extraction.text import CountVectorizer

    sents = ["I really want to stay at your house",
             "I wish that I could turn back time",
             "I need your time"]

    vectorizer = CountVectorizer()
    vectorizer.fit(sents)  # Learn the vocabulary from the text data.

    word_bag = vectorizer.vocabulary_
    result = vectorizer.transform(sents).toarray()

    print(word_bag)
    print(result)

    # Output
    # {'really': 5, 'want': 11, 'to': 9, 'stay': 6, 'at': 0, 'your': 13, 'house': 3, 'wish': 12, 'that': 7, 'could': 2, 'turn': 10, 'back': 1, 'time': 8, 'need': 4}
    # [[1 0 0 1 0 1 1 0 0 1 0 1 0 1]
    #  [0 1 1 0 0 0 0 1 1 0 1 0 1 0]
    #  [0 0 0 0 1 0 0 0 1 0 0 0 0 1]]

Note that I is missing from the vocabulary. With its default settings, CountVectorizer lowercases the text and keeps only tokens of two or more alphanumeric characters, so the single-character I is dropped. Stop words such as to and at are still counted; they are removed only when the stop_words parameter is set.
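To see which settings decide what ends up in the vocabulary, here is a small sketch comparing three configurations on the same sentences. The variable names are illustrative; `token_pattern` and `stop_words` are existing CountVectorizer parameters, and the widened pattern is just one possible way to keep one-character tokens.

```python
from sklearn.feature_extraction.text import CountVectorizer

sents = ["I really want to stay at your house",
         "I wish that I could turn back time",
         "I need your time"]

# Default settings: lowercase, keep tokens of two or more word characters.
default_vec = CountVectorizer().fit(sents)

# Widened token pattern so that one-character tokens such as "i" are kept.
keep_short_vec = CountVectorizer(token_pattern=r"(?u)\b\w+\b").fit(sents)

# Built-in English stop word list: words such as "to" and "at" are dropped.
stop_vec = CountVectorizer(stop_words="english").fit(sents)

print(sorted(default_vec.vocabulary_))
print(sorted(keep_short_vec.vocabulary_))
print(sorted(stop_vec.vocabulary_))
```

Comparing the three printed vocabularies shows that the default drops "i" because of its length, while stop words disappear only in the third configuration.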

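🔍 Pros and cons of BoW

🟢 Advantages

Because BoW vectorizes text by the frequency of the words in the bag, every sentence becomes a vector of the same length, and sentences can be compared with each other. In other words, you can measure how similar two sentences are.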
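One common way to compare two count vectors is cosine similarity. The sketch below is only an illustration: it uses naive whitespace tokenization to stay dependency-free, and the helper name `cosine_similarity` is introduced here, not taken from a library.

```python
import math

sentences = ["I really want to stay at your house",
             "I wish that I could turn back time",
             "I need your time"]

# Naive whitespace tokenization (an assumption to avoid extra dependencies).
tokenized = [s.split() for s in sentences]
vocabulary = sorted({tok for toks in tokenized for tok in toks})
vectors = [[toks.count(word) for word in vocabulary] for toks in tokenized]

def cosine_similarity(a: list[int], b: list[int]) -> float:
    # Dot product divided by the product of the vector lengths.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

for i in range(len(vectors)):
    for j in range(i + 1, len(vectors)):
        print(i, j, round(cosine_similarity(vectors[i], vectors[j]), 3))
```

Under these assumptions, the second and third sentences (which share I and time) score higher than the first and second (which share only I).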

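🔴 Disadvantages

Like one-hot encoding, BoW cannot express the relationships between words within a sentence, because it does not reflect word order or position. In addition, the word bag grows as the number of words grows, and the vector dimension grows with it, so the representation can be inefficient in terms of space.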
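The loss of word order is easy to demonstrate. In this minimal sketch (the two sentences and the helper `bow_counts` are made up for illustration), two sentences with opposite meanings produce exactly the same counts.

```python
from collections import Counter

def bow_counts(sentence: str) -> Counter:
    # Lowercase and split on whitespace; word positions are discarded.
    return Counter(sentence.lower().split())

a = "dog bites man"
b = "man bites dog"

print(bow_counts(a) == bow_counts(b))  # True
```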

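🔥 What is DTM?

DTM stands for Document-Term Matrix. It applies the BoW approach covered above to several documents and collects the results into a single matrix, with one row per document and one column per word in the shared word bag. For example, given Document 1, Document 2, Document 3, and Document 4, you build one word bag from all of them and run BoW on each document.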

Document 1. I eat rice.

Document 2. I like rice and I also like cooking.

Document 3. Cooking is fun and I also enjoy eating rice.

Document 4. Rice is delicious, but curry is delicious too.

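As the pros and cons of BoW suggest, a DTM becomes inefficient in terms of space as the vocabulary grows, because the word bag grows along with it. Also, since it relies on raw counts alone, it does not give meaningful results unless stop words are handled well.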
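As a rough illustration, the sketch below builds a DTM for English renderings of the four example documents. The tokenizer (lowercase, letters only) and the alphabetical column order are assumptions made for this sketch, and no stop words are removed.

```python
import re

documents = ["I eat rice.",
             "I like rice and I also like cooking.",
             "Cooking is fun and I also enjoy eating rice.",
             "Rice is delicious, but curry is delicious too."]

# Lowercase each document and keep runs of letters as tokens.
tokenized = [re.findall(r"[a-z]+", doc.lower()) for doc in documents]

# Shared word bag: one column per distinct term, in alphabetical order.
vocabulary = sorted({tok for toks in tokenized for tok in toks})

# One row per document, one count per term.
dtm = [[toks.count(term) for term in vocabulary] for toks in tokenized]

print(vocabulary)
for row in dtm:
    print(row)

# Share of zero cells, to show how sparse even a tiny DTM is.
zeros = sum(row.count(0) for row in dtm)
sparsity = zeros / (len(dtm) * len(vocabulary))
print(f"{sparsity:.0%} of the cells are zero")
```

Even with four short documents, more than half of the cells are zero, and frequent function words such as is and and occupy their own columns, which is why vocabulary size and stop word handling matter.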