Docker networks (ingress and docker_gwbridge)

- Deploy the test1 service (no port published externally)

rapa@manager:~$ docker service create \

> --name test1 \

> --mode global \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

2m5ogimgjcek9fb54fxxn6f07

overall progress: 4 out of 4 tasks

r4nzbg56vw2t: running [==================================================>]

ja2thy2jrbx7: running [==================================================>]

3eluuoy3nlnt: running [==================================================>]

65fyctmrwxut: running [==================================================>]

verify: Service converged

- Deploy the test2 service (port 8001 published externally)

rapa@manager:~$ docker service create \

> --name test2 \

> -p 8001:80 \

> --mode global \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

vc0wdye5cvokhf4ss3qi5s352

overall progress: 4 out of 4 tasks

r4nzbg56vw2t: running [==================================================>]

3eluuoy3nlnt: running [==================================================>]

65fyctmrwxut: running [==================================================>]

ja2thy2jrbx7: running [==================================================>]

verify: Service converged

- Check the deployed services

rapa@manager:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0b0a71d1bc65 nginx:latest "/docker-entrypoint.…" 26 seconds ago Up 24 seconds 80/tcp test2.3eluuoy3nlnt879m08u8leejp.jctck3f4iiuh3egauef07rml3

a1925c762b3c nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp test1.3eluuoy3nlnt879m08u8leejp.m3hyfb7t5h516469hipxks3ay

- Inspect the test1 container (no port published)

rapa@manager:~$ docker container inspect test1.3eluuoy3nlnt879m08u8leejp.m3hyfb7t5h516469hipxks3ay

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "4e05e2d998f4a02fdd25a45ac73214c22562e31cb94a50b7c9f0fdbf0e2bbbe1",

"EndpointID": "114f3d1ddaa8a22dfe2bbda228e8aee3c8088de39574ae435b55e5b3b6f6a74c",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null

}

}

-> IP: 172.17.0.X

-> Attached to docker0 (the default "bridge" network)

- Inspect the test2 container (port 8001 published)

rapa@manager:~$ docker container inspect test2.3eluuoy3nlnt879m08u8leejp.jctck3f4iiuh3egauef07rml3

"Networks": {

"ingress": {

"IPAMConfig": {

"IPv4Address": "10.0.0.23"

},

"Links": null,

"Aliases": [

"0b0a71d1bc65"

],

"NetworkID": "f9ffavipvi297sshgrpbh714c",

"EndpointID": "6edf574bf6f5a6da946243d79688c993b5540a74b7310c91df25e6174a9250d9",

"Gateway": "",

"IPAddress": "10.0.0.23",

"IPPrefixLen": 24,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:0a:00:00:17",

"DriverOpts": null

}

}

-> IP: 10.0.0.X

-> Attached to the ingress network
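The same comparison can be scripted. The sketch below is a minimal illustration, assuming you only need each attachment's network name and address; `TEST1` and `TEST2` are abridged copies of the inspect output above (only the `NetworkSettings.Networks` fields it reads are kept), and `attached_networks` is a hypothetical helper name.

```python
import json

# Abridged `docker container inspect` output for the test1 and test2 tasks
# shown above; only the fields this sketch reads are kept.
TEST1 = '''[{"NetworkSettings": {"Networks": {
  "bridge": {"IPAddress": "172.17.0.3", "IPPrefixLen": 16}}}}]'''
TEST2 = '''[{"NetworkSettings": {"Networks": {
  "ingress": {"IPAddress": "10.0.0.23", "IPPrefixLen": 24}}}}]'''


def attached_networks(inspect_json: str) -> dict[str, str]:
    """Map network name -> address/prefix for the first inspected container."""
    container = json.loads(inspect_json)[0]
    networks = container["NetworkSettings"]["Networks"]
    return {
        name: f'{cfg["IPAddress"]}/{cfg["IPPrefixLen"]}'
        for name, cfg in networks.items()
    }


if __name__ == "__main__":
    print(attached_networks(TEST1))  # {'bridge': '172.17.0.3/16'}
    print(attached_networks(TEST2))  # {'ingress': '10.0.0.23/24'}
```

On a live node you could feed it the full output of `docker container inspect <container>`; `docker container inspect --format '{{json .NetworkSettings.Networks}}' <container>` prints only the relevant part.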

- Inspect the ingress network

rapa@manager:~$ docker network inspect ingress

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

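]

- Create an overlay network (testoverlay)

A network created with the overlay driver provides the same network segment across the whole cluster, and containers on it communicate through tunnels (VXLAN) regardless of which physical node they run on.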

rapa@manager:~$ docker network create \

> --subnet 20.20.20.0/24 \

> -d overlay \

> testoverlay

vhrv5rkuh4d9cvlzv2xihxkfw

- Verify the overlay network (testoverlay)

rapa@manager:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

4e05e2d998f4 bridge bridge local

e440baec57b5 docker_gwbridge bridge local

3ead376f089f host host local

f9ffavipvi29 ingress overlay swarm

39430d1f4412 none null local

vhrv5rkuh4d9 testoverlay overlay swarm

-> scope: swarm (cluster-wide, like ingress)

- Deploy a service that uses the testoverlay network (test3)
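To pick out only the cluster-scoped networks from a captured `docker network ls` table, a small parser is enough. This is a sketch under the assumption that no column value contains spaces (true for the table above); `networks_by_scope` is a hypothetical helper.

```python
# Output of `docker network ls` as captured above.
NETWORK_LS = """\
NETWORK ID     NAME              DRIVER    SCOPE
4e05e2d998f4   bridge            bridge    local
e440baec57b5   docker_gwbridge   bridge    local
3ead376f089f   host              host      local
f9ffavipvi29   ingress           overlay   swarm
39430d1f4412   none              null      local
vhrv5rkuh4d9   testoverlay       overlay   swarm
"""


def networks_by_scope(table: str, scope: str) -> list[str]:
    """Return the NAME column of every row whose SCOPE column equals `scope`."""
    rows = table.strip().splitlines()[1:]  # skip the header row
    names = []
    for row in rows:
        _net_id, name, _driver, row_scope = row.split()
        if row_scope == scope:
            names.append(name)
    return names


if __name__ == "__main__":
    print(networks_by_scope(NETWORK_LS, "swarm"))  # ['ingress', 'testoverlay']
```

The CLI can also filter directly with `docker network ls --filter scope=swarm`.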

rapa@manager:~$ docker service create --name test3 -p 8002:80 --network testoverlay --mode global nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

6ji3p3n2zia0rfhi92hlf5sv6

overall progress: 4 out of 4 tasks

ja2thy2jrbx7: running [==================================================>]

65fyctmrwxut: running [==================================================>]

r4nzbg56vw2t: running [==================================================>]

3eluuoy3nlnt: running [==================================================>]

verify: Service converged

- Check the test3 container

rapa@manager:~$ docker container ls | grep test3

aa7ff3e111e6 nginx:latest "/docker-entrypoint.…" About a minute ago Up About a minute 80/tcp test3.3eluuoy3nlnt879m08u8leejp.m76pot1izt3kypshhthmi26v5

rapa@manager:~$ docker container inspect test3.3eluuoy3nlnt879m08u8leejp.m76pot1izt3kypshhthmi26v5

"Networks": {

"ingress": {

"IPAMConfig": {

"IPv4Address": "10.0.0.30"

},

"Links": null,

"Aliases": [

"aa7ff3e111e6"

],

"NetworkID": "f9ffavipvi297sshgrpbh714c",

"EndpointID": "5ce01b4f200177fc0b4ddcfbc77d6f51718fdd00f1fc875093f23742fcdabb1c",

"Gateway": "",

"IPAddress": "10.0.0.30",

"IPPrefixLen": 24,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:0a:00:00:1e",

"DriverOpts": null

},

"testoverlay": {

"IPAMConfig": {

"IPv4Address": "20.20.20.5"

},

"Links": null,

"Aliases": [

"aa7ff3e111e6"

],

"NetworkID": "vhrv5rkuh4d9cvlzv2xihxkfw",

"EndpointID": "81584abd922d9a336d588930d2b6ba7770808ea76b0de0b5a96f6164ac4204ee",

"Gateway": "",

"IPAddress": "20.20.20.5",

"IPPrefixLen": 24,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:14:14:14:05",

"DriverOpts": null

}

}

-> Two IP addresses were assigned.

-> 10.0.0.30 from ingress

-> 20.20.20.5 from testoverlay

-> With -p, the task is also attached to the ingress network.

-> With neither -p nor --network (test1), the task was attached to docker0 (bridge).
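Checking which subnet each of test3's addresses falls into confirms the two attachments. A minimal sketch using Python's standard `ipaddress` module; the subnet table is copied from the ingress inspect output and the `--subnet` option above, and `owning_network` is a hypothetical helper.

```python
import ipaddress
from typing import Optional

# Subnets seen earlier in this section: ingress (from `docker network inspect
# ingress`) and testoverlay (from the --subnet option used to create it).
SUBNETS = {
    "ingress": ipaddress.ip_network("10.0.0.0/24"),
    "testoverlay": ipaddress.ip_network("20.20.20.0/24"),
}

# Addresses reported by `docker container inspect` for the test3 task.
TEST3_ADDRESSES = ["10.0.0.30", "20.20.20.5"]


def owning_network(address: str) -> Optional[str]:
    """Return the name of the known subnet that contains `address`, if any."""
    ip = ipaddress.ip_address(address)
    for name, subnet in SUBNETS.items():
        if ip in subnet:
            return name
    return None


if __name__ == "__main__":
    for addr in TEST3_ADDRESSES:
        print(addr, "->", owning_network(addr))
    # 10.0.0.30 -> ingress
    # 20.20.20.5 -> testoverlay
```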

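A container created with docker container run cannot be attached to a cluster (swarm-scoped) overlay network by default.

If the overlay network is created with the --attachable option, containers created with docker container run can join it.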

docker network create \

-d overlay \

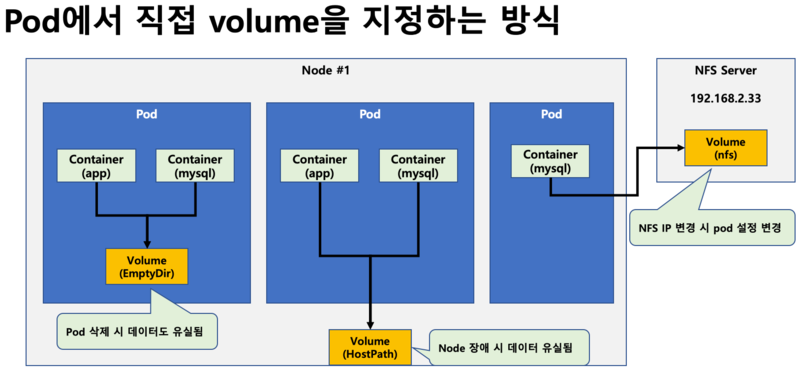

--attachable myoverlay2 볼륨 사용하기

1) Volume mount (iSCSI)

docker container run \

-v testvolume:/var/lib/mysql

2) Bind mount (NFS)

docker container run \

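-v testdir:/var/www/html

-> Note: with -v, a bare name such as testdir is treated as a named volume; a bind mount needs a host path (for example /testdir).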

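Image source: https://m.blog.naver.com/freepsw/222005161870

Problems with using a volume on the host:

1. If the node (host) itself has a problem, the volume cannot be accessed.

2. When the service is scaled in or out, new containers may not be connected to the existing volume.

3. Each host uses its own separate volume, so the data is not synchronized and each volume ends up backing its own separate database.

In the end, a local volume is not a good choice; external storage should be used.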

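A volume can be created at the same time as the service. However, with the -v option used for standalone containers it can be hard to tell a volume from a bind (NFS-style) mount, so for services the type must be stated explicitly with --mount:

type=volume -> volume

type=bind -> bind mount (the NFS-style case)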
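Because the type is explicit, the `--mount` argument can be built mechanically. This sketch assembles the argv for the two services deployed below; `mount_args` and `service_create` are hypothetical helpers, and the check that a bind source is absolute reflects Docker's rule that a bind mount's source is a path on the host.

```python
import shlex


def mount_args(mount_type: str, source: str, target: str) -> list[str]:
    """Return ["--mount", "type=...,source=...,target=..."] for a service."""
    if mount_type not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {mount_type!r}")
    if mount_type == "bind" and not source.startswith("/"):
        # A bind source is a host path and must be absolute.
        raise ValueError("bind mounts need an absolute host path as source")
    return ["--mount", f"type={mount_type},source={source},target={target}"]


def service_create(name: str, mount: list[str], image: str = "nginx") -> list[str]:
    """Assemble the argv used for the testvol1/testvol2 services below."""
    return [
        "docker", "service", "create",
        "--name", name,
        *mount,
        "--replicas", "1",
        "--constraint", "node.role==manager",
        image,
    ]


if __name__ == "__main__":
    # Print the commands instead of running them; on a swarm manager the
    # lists could be passed to subprocess.run to execute them.
    print(shlex.join(service_create("testvol1", mount_args("volume", "vol1", "/root"))))
    print(shlex.join(service_create("testvol2", mount_args("bind", "/testvol2", "/root"))))
```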

- Deploy (volume mount)

rapa@manager:~$ docker service create \

> --name testvol1 \

> --mount type=volume,source=vol1,target=/root \

> --replicas 1 \

> --constraint node.role==manager \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

k7zmxo9m5qlwqerugp57rbvzx

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

-> The container's /root is attached as a volume

- Check the volume inside the container

rapa@manager:~$ docker container exec testvol1.1.ozckjxdkji9a1dezppnusgnar df -h | grep /root

/dev/sda5 20G 12G 6.3G 66% /root

-> /root is a separate mount, backed by the host filesystem on /dev/sda5

- Deploy (bind mount, NFS-style)

rapa@manager:~$ sudo mkdir /testvol2

rapa@manager:~$ docker service create \
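The same `df -h` check can be automated by looking for the row whose last column is the mount point. A minimal sketch, assuming mount points without spaces; `backing_device` is a hypothetical helper. The standard header row has seven whitespace-separated fields ("Mounted on" is two words), so it is skipped naturally.

```python
from typing import Optional

# `df -h | grep /root` output captured inside the testvol1 container.
DF_OUTPUT = "/dev/sda5 20G 12G 6.3G 66% /root\n"


def backing_device(df_output: str, mount_point: str) -> Optional[str]:
    """Return the Filesystem column of the row mounted exactly at mount_point."""
    for line in df_output.splitlines():
        fields = line.split()
        # A data row has 6 columns; the mount point is the last one.
        if len(fields) == 6 and fields[5] == mount_point:
            return fields[0]
    return None


if __name__ == "__main__":
    print(backing_device(DF_OUTPUT, "/root"))  # /dev/sda5
```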

> --name testvol2 \

> --mount type=bind,source=/testvol2,target=/root \

> --replicas 1 \

> --constraint node.role==manager \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

zem081t7isbks4exxa2ke9p8z

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

- Check the mount

rapa@manager:/testvol2$ sudo touch test_from_host.txt

rapa@manager:/testvol2$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c15e2ba2ecbf nginx:latest "/docker-entrypoint.…" 4 minutes ago Up 4 minutes 80/tcp testvol2.1.pco0pgvuw5ockfl2hmhddlmy8

ee7919db546e nginx:latest "/docker-entrypoint.…" 10 minutes ago Up 10 minutes 80/tcp testvol1.1.ozckjxdkji9a1dezppnusgnar

aa7ff3e111e6 nginx:latest "/docker-entrypoint.…" 3 hours ago Up 3 hours 80/tcp test3.3eluuoy3nlnt879m08u8leejp.m76pot1izt3kypshhthmi26v5

0b0a71d1bc65 nginx:latest "/docker-entrypoint.…" 3 hours ago Up 3 hours 80/tcp test2.3eluuoy3nlnt879m08u8leejp.jctck3f4iiuh3egauef07rml3

a1925c762b3c nginx:latest "/docker-entrypoint.…" 3 hours ago Up 3 hours 80/tcp test1.3eluuoy3nlnt879m08u8leejp.m3hyfb7t5h516469hipxks3ay

99241f2512d0 portainer/portainer "/portainer" 23 hours ago Up 5 hours 8000/tcp, 0.0.0.0:9000->9000/tcp, :::9000->9000/tcp portainer

rapa@manager:/testvol2$ docker container exec testvol2.1.pco0pgvuw5ockfl2hmhddlmy8 ls /root

test_from_host.txt

Availability

rapa@manager:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

r4nzbg56vw2tuylr1hhvqdn70 worker1 Ready Active 20.10.17

65fyctmrwxutz4k9i1tkabtm7 worker2 Ready Active 20.10.17

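ja2thy2jrbx7zi1y3b6iffluw worker3 Ready Active 20.10.17

active: the node is ready to accept and run new tasks (containers).

drain: no new tasks are placed on the node, and the service tasks already running there are shut down and rescheduled on other Active nodes.

pause: no new tasks are placed on the node, but the tasks already running there keep running (the containers are not paused).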
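The three availability values differ in two independent properties, which a tiny lookup makes explicit. This is a sketch of the semantics described above, not Docker code; the helper names are hypothetical.

```python
from enum import Enum


class Availability(Enum):
    """Values accepted by `docker node update --availability`."""
    ACTIVE = "active"
    PAUSE = "pause"
    DRAIN = "drain"


def accepts_new_tasks(availability: Availability) -> bool:
    # Only Active nodes are candidates for newly scheduled tasks.
    return availability is Availability.ACTIVE


def keeps_running_tasks(availability: Availability) -> bool:
    # Pause leaves existing tasks running; drain shuts them down so they
    # are rescheduled on other Active nodes.
    return availability is not Availability.DRAIN


if __name__ == "__main__":
    for a in Availability:
        print(f"{a.value:<6} new tasks: {accepts_new_tasks(a)!s:<5} "
              f"keeps running tasks: {keeps_running_tasks(a)}")
```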

- Deploy the service (3 replicas)

rapa@manager:~$ docker service create \

> --name test1 \

> --replicas 3 \

> --constraint node.role==worker \

> -p 8001:80 \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

plefjwliyq486xtdopl7c8hrb

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Check the deployment

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lcrccltgq94o test1.1 nginx:latest worker1 Running Running 25 seconds ago

oy7al1s4t4a2 test1.2 nginx:latest worker2 Running Running 25 seconds ago

t98av0l5lnm7 test1.3 nginx:latest worker3 Running Running 23 seconds ago

- Set worker3 to drain

rapa@manager:~$ docker node update \

> --availability drain \

> worker3

worker3

- Check worker3's new availability

rapa@manager:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

r4nzbg56vw2tuylr1hhvqdn70 worker1 Ready Active 20.10.17

65fyctmrwxutz4k9i1tkabtm7 worker2 Ready Active 20.10.17

ja2thy2jrbx7zi1y3b6iffluw worker3 Ready Drain 20.10.17

-> worker3 is now Drain

- Check the tasks

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lcrccltgq94o test1.1 nginx:latest worker1 Running Running about a minute ago

oy7al1s4t4a2 test1.2 nginx:latest worker2 Running Running about a minute ago

gz99nn9n4l82 test1.3 nginx:latest worker2 Running Preparing 4 seconds ago

t98av0l5lnm7 \_ test1.3 nginx:latest worker3 Shutdown Shutdown 1 second ago

-> test1.3, previously on worker3, was shut down and rescheduled on worker2 (nothing is placed on worker3)

- scale 4

rapa@manager:~$ docker service scale test1=4

test1 scaled to 4

overall progress: 4 out of 4 tasks

1/4: running [==================================================>]

2/4: running [==================================================>]

3/4: running [==================================================>]

4/4: running [==================================================>]

verify: Service converged

- Check the scaled service

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lcrccltgq94o test1.1 nginx:latest worker1 Running Running 3 minutes ago

oy7al1s4t4a2 test1.2 nginx:latest worker2 Running Running 3 minutes ago

gz99nn9n4l82 test1.3 nginx:latest worker2 Running Running 2 minutes ago

t98av0l5lnm7 \_ test1.3 nginx:latest worker3 Shutdown Shutdown 2 minutes ago

b1y4vxkd6beu test1.4 nginx:latest worker1 Running Running 20 seconds ago

-> test1.4 was created on worker1 (still nothing on worker3)

- Set worker3 back to active

rapa@manager:~$ docker node update --availability active worker3

worker3

- Check worker3's new availability

rapa@manager:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

r4nzbg56vw2tuylr1hhvqdn70 worker1 Ready Active 20.10.17

65fyctmrwxutz4k9i1tkabtm7 worker2 Ready Active 20.10.17

ja2thy2jrbx7zi1y3b6iffluw worker3 Ready Active 20.10.17

- Check the service

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lcrccltgq94o test1.1 nginx:latest worker1 Running Running 6 minutes ago

oy7al1s4t4a2 test1.2 nginx:latest worker2 Running Running 6 minutes ago

gz99nn9n4l82 test1.3 nginx:latest worker2 Running Running 5 minutes ago

t98av0l5lnm7 \_ test1.3 nginx:latest worker3 Shutdown Shutdown 5 minutes ago

b1y4vxkd6beu test1.4 nginx:latest worker1 Running Running 3 minutes ago

-> Although worker3 is Active again, existing tasks are not moved back to it; worker1 and worker2 still run two tasks each.
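To confirm the per-node distribution without reading the table by eye, the running tasks can be counted per node. A sketch assuming the output of `docker service ps test1 --format '{{.Node}} {{.DesiredState}}'` (both fields contain no spaces); the sample lines are transcribed from the table above and `running_per_node` is a hypothetical helper.

```python
from collections import Counter

# Node and desired state of each row in the `docker service ps test1` table
# above, in the one-line-per-task shape that --format would produce.
SERVICE_PS = """\
worker1 Running
worker2 Running
worker2 Running
worker3 Shutdown
worker1 Running
"""


def running_per_node(lines: str) -> Counter:
    """Count tasks whose desired state is Running, grouped by node."""
    counts = Counter()
    for line in lines.splitlines():
        node, desired = line.split()
        if desired.lower() == "running":
            counts[node] += 1
    return counts


if __name__ == "__main__":
    print(running_per_node(SERVICE_PS))
```

`docker service ps --filter desired-state=running` hides the Shutdown history rows on the CLI side as well.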

- Scale down to 1, then back up to 3

rapa@manager:~$ docker service scale test1=1

test1 scaled to 1

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

rapa@manager:~$ docker service scale test1=3

test1 scaled to 3

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Check the tasks

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lcrccltgq94o test1.1 nginx:latest worker1 Running Running 7 minutes ago

d36ys4z4szui test1.2 nginx:latest worker2 Running Running 8 seconds ago

0f0s4759uqj6 test1.3 nginx:latest worker3 Running Running 8 seconds ago

t98av0l5lnm7 \_ test1.3 nginx:latest worker3 Shutdown Shutdown 5 minutes ago

-> worker1, worker2 and worker3 now run one task each.
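The whole sequence above (drain moves tasks away, reactivation does not rebalance, a later scale-up fills the idle node) can be reproduced with a toy placement model. This is NOT Docker's scheduler: it assumes a new task goes to the Active node running the fewest tasks with ties broken by node name, and that scaling down removes the highest-numbered slots. Because of the tie-breaking, it sends the drained test1.3 to worker1 where the real run chose worker2, so only the per-node counts should be compared. `ToySwarm` is a hypothetical class.

```python
from collections import Counter


class ToySwarm:
    """Toy model of replicated-service placement (assumptions in the text)."""

    def __init__(self, nodes):
        self.availability = {node: "active" for node in nodes}
        self.tasks = []  # (slot, node) pairs for running tasks

    def per_node(self):
        return Counter(node for _, node in self.tasks)

    def _pick_node(self):
        load = self.per_node()
        active = [n for n, a in self.availability.items() if a == "active"]
        # Fewest tasks first; ties broken by node name (an assumption).
        return min(active, key=lambda n: (load[n], n))

    def scale(self, replicas):
        self.tasks.sort()
        del self.tasks[replicas:]  # scale down: drop the highest slots
        used = {slot for slot, _ in self.tasks}
        slot = 1
        while len(self.tasks) < replicas:  # scale up: fill free slots
            while slot in used:
                slot += 1
            self.tasks.append((slot, self._pick_node()))
            used.add(slot)

    def set_availability(self, node, availability):
        self.availability[node] = availability
        if availability == "drain":
            # Drain: shut down this node's tasks and reschedule their slots.
            moved = [slot for slot, n in self.tasks if n == node]
            self.tasks = [(s, n) for s, n in self.tasks if n != node]
            for slot in moved:
                self.tasks.append((slot, self._pick_node()))
        # "pause" and "active" never move running tasks.


if __name__ == "__main__":
    swarm = ToySwarm(["worker1", "worker2", "worker3"])  # node.role==worker
    swarm.scale(3)
    swarm.set_availability("worker3", "drain")
    swarm.scale(4)
    swarm.set_availability("worker3", "active")
    print(dict(swarm.per_node()))  # worker3 gets nothing back
    swarm.scale(1)
    swarm.scale(3)
    print(dict(swarm.per_node()))  # one task per worker again
```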

Label

Like the built-in node.role, you can attach key=value labels to nodes and use them to decide where containers are placed.

Built-in attributes for identifying nodes:

- node.role

- node.id

- node.hostname

- Add labels to the workers

rapa@manager:~$ docker node update \

> --label-add zone=a \

> --label-add company=abc \

> worker1

worker1

rapa@manager:~$ docker node update \

> --label-add zone=b \

> --label-add company=abc \

> worker2

worker2

rapa@manager:~$ docker node update \

> --label-add zone=c \

> worker3

worker3

- Verify the labels

rapa@manager:~$ docker node inspect worker3 --pretty | grep zone

- zone=c

- Deploy to zone=c

rapa@manager:~$ docker service create \

> --name zone \

> --mode global \

> --constraint 'node.labels.zone==c' \

> nginx

wrieg858imotor62zxptlbdsp

overall progress: 1 out of 1 tasks

ja2thy2jrbx7: running [==================================================>]

verify: Service converged

- Check the deployment

rapa@manager:~$ docker service ps zone

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

n7lzywznrbrx zone.ja2thy2jrbx7zi1y3b6iffluw nginx:latest worker3 Running Running 20 seconds ago

-> Placed on worker3, the node labeled zone=c
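With --mode global, the service runs one task on every node that satisfies all constraints, so the placement above can be predicted by evaluating the constraints against each node's attributes. A simplified sketch: it supports only `==` and `!=` (the operators Swarm constraints use), treating a missing label as `None` is an assumption of this sketch, and the node table mirrors the labels added above; the helper names are hypothetical.

```python
# Node attributes after the `docker node update --label-add` commands above.
NODES = {
    "manager": {"node.role": "manager", "node.hostname": "manager"},
    "worker1": {"node.role": "worker", "node.hostname": "worker1",
                "node.labels.zone": "a", "node.labels.company": "abc"},
    "worker2": {"node.role": "worker", "node.hostname": "worker2",
                "node.labels.zone": "b", "node.labels.company": "abc"},
    "worker3": {"node.role": "worker", "node.hostname": "worker3",
                "node.labels.zone": "c"},
}


def matches(attrs: dict, constraint: str) -> bool:
    """Evaluate one `key==value` or `key!=value` constraint against a node."""
    for op in ("==", "!="):
        if op in constraint:
            key, value = (part.strip() for part in constraint.split(op, 1))
            actual = attrs.get(key)  # None when the node lacks the attribute
            return actual == value if op == "==" else actual != value
    raise ValueError(f"unsupported constraint: {constraint!r}")


def eligible_nodes(nodes: dict, constraints: list[str]) -> list[str]:
    """Nodes that satisfy every constraint (constraints are ANDed)."""
    return sorted(
        name for name, attrs in nodes.items()
        if all(matches(attrs, c) for c in constraints)
    )


if __name__ == "__main__":
    print(eligible_nodes(NODES, ["node.labels.zone==c"]))  # ['worker3']
```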

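- swarm mode

Containers, volumes, and networks can be deployed to the cluster with commands. With this approach, however, changing the service environment means writing the command again from scratch, which is inconvenient.

- docker-compose

To remove that inconvenience, the environment is described in a yml file, and Docker converts it into the corresponding commands to provide the service.

- swarm mode + docker-compose -> docker stack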

실습 - docker stack 배포 (1)

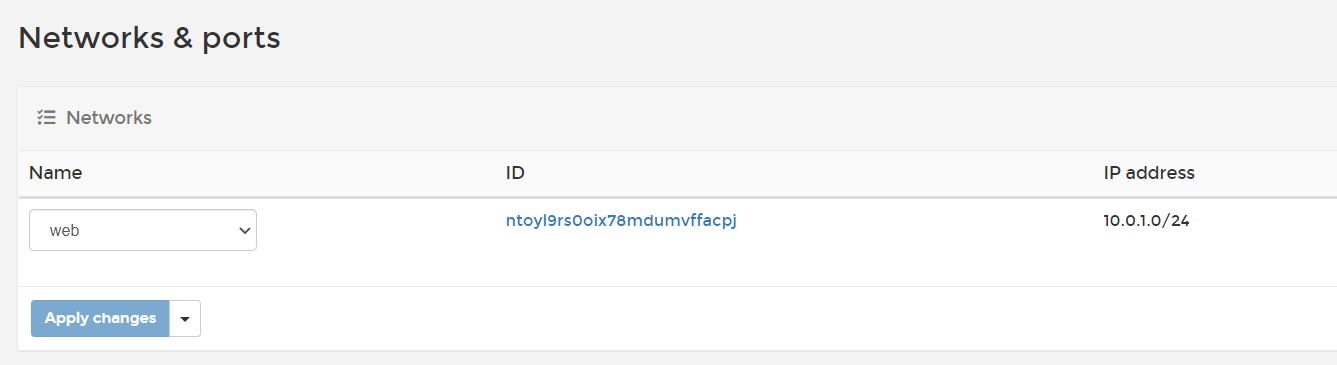

step 1) overlay 네트워크 생성 (web)

rapa@manager:~/0825$ docker network create \

> --driver=overlay \

> --attachable \

> web

ntoyl9rs0oix78mdumvffacpj

step 2) Write the yml file

rapa@manager:~/0825$ vi web.yml

version: '3.7'
services:
  nginx:
    image: nginx
    deploy:
      replicas: 3
      placement:
        constraints: [node.role == worker]
      restart_policy:
        condition: on-failure
        max_attempts: 2
    environment:
      SERVICE_PORTS: 80
    networks:
      - web
  proxy:
    image: dockercloud/haproxy
    depends_on: # ignored by docker stack deploy
      - nginx
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # haproxy reads the Docker API through this socket to discover the nginx tasks
    ports: # equivalent of -p; published through the ingress network
      - "80:80"
    networks: # backend network -> nginx containers
      - web
    deploy:
      mode: global
      placement:
        constraints: [node.role == manager]
networks:
  web:
    external: true

step 3) Deploy the stack

rapa@manager:~/0825$ docker stack deploy \

> -c web.yml \

> web

Creating service web_nginx

Creating service web_proxy

-> -c: the compose file to deploy
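To see roughly what the stack file replaces, each service can be translated into an equivalent `docker service create` command. This is only an approximation: `docker stack deploy` talks to the Swarm API directly. `service_create_args` is a hypothetical helper that handles just the keys used in web.yml, assumes short `source:target` volume syntax, and assumes every network is declared external (external networks keep their name, while stack-defined ones get the stack prefix). `depends_on` is skipped because `docker stack deploy` ignores it.

```python
import shlex

# The two services from web.yml as Python dicts (what a YAML parser returns).
WEB_YML_SERVICES = {
    "nginx": {
        "image": "nginx",
        "deploy": {
            "replicas": 3,
            "placement": {"constraints": ["node.role == worker"]},
            "restart_policy": {"condition": "on-failure", "max_attempts": 2},
        },
        "environment": {"SERVICE_PORTS": 80},
        "networks": ["web"],
    },
    "proxy": {
        "image": "dockercloud/haproxy",
        "depends_on": ["nginx"],
        "volumes": ["/var/run/docker.sock:/var/run/docker.sock"],
        "ports": ["80:80"],
        "networks": ["web"],
        "deploy": {
            "mode": "global",
            "placement": {"constraints": ["node.role == manager"]},
        },
    },
}


def service_create_args(stack: str, name: str, spec: dict) -> list[str]:
    """Translate one compose service into a `docker service create` argv."""
    args = ["docker", "service", "create", "--name", f"{stack}_{name}"]
    deploy = spec.get("deploy", {})
    if deploy.get("mode") == "global":
        args += ["--mode", "global"]
    elif "replicas" in deploy:
        args += ["--replicas", str(deploy["replicas"])]
    for constraint in deploy.get("placement", {}).get("constraints", []):
        args += ["--constraint", constraint]
    restart = deploy.get("restart_policy", {})
    if "condition" in restart:
        args += ["--restart-condition", restart["condition"]]
    if "max_attempts" in restart:
        args += ["--restart-max-attempts", str(restart["max_attempts"])]
    for key, value in spec.get("environment", {}).items():
        args += ["--env", f"{key}={value}"]
    for volume in spec.get("volumes", []):
        source, target = volume.split(":", 1)
        kind = "bind" if source.startswith("/") else "volume"
        args += ["--mount", f"type={kind},source={source},target={target}"]
    for port in spec.get("ports", []):
        args += ["--publish", port]
    for network in spec.get("networks", []):
        args += ["--network", network]
    args.append(spec["image"])
    return args


if __name__ == "__main__":
    for service_name, service_spec in WEB_YML_SERVICES.items():
        print(shlex.join(service_create_args("web", service_name, service_spec)))
```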

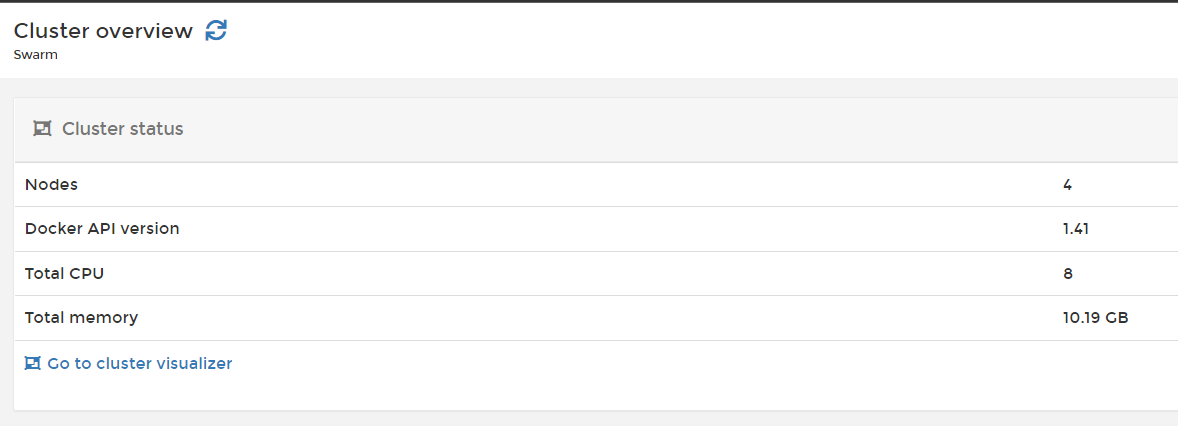

step 4) Verify the stack deployment

rapa@manager:~/0825$ docker stack ls

NAME SERVICES ORCHESTRATOR

web 2 Swarm

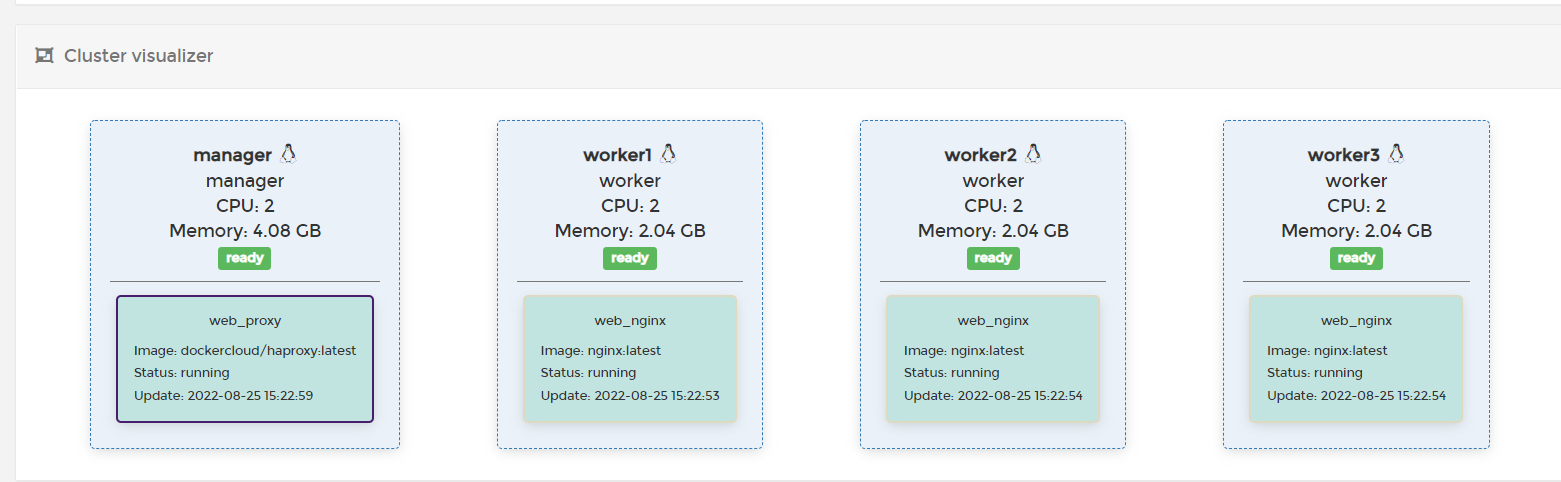

rapa@manager:~/0825$ docker stack ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

4pftqx0d8dnj web_nginx.1 nginx:latest worker3 Running Running about a minute ago

ta9k55hveahx web_nginx.2 nginx:latest worker1 Running Running about a minute ago

i9qgwyhepr03 web_nginx.3 nginx:latest worker2 Running Running about a minute ago

8j2nx1bd2zrm web_proxy.3eluuoy3nlnt879m08u8leejp dockercloud/haproxy:latest manager Running Running 56 seconds ago

-

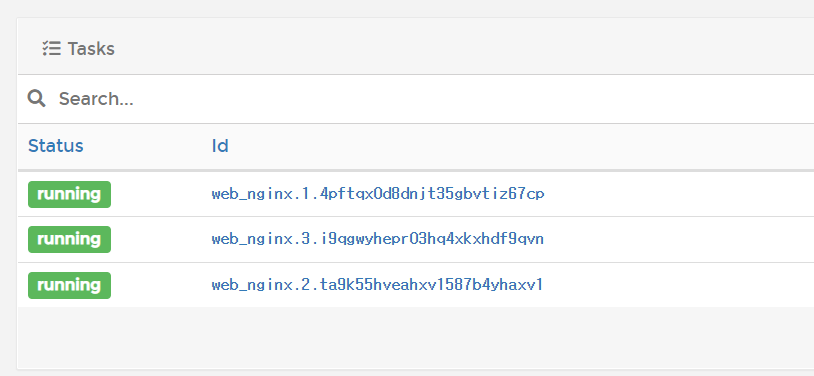

portainer에서 확인

-

web_nginx 확인

Exercise - deploying a docker stack (2)

HAPROXY -> placed on the manager

Attach labels

worker 1 -> zone=seoul

worker 2 -> zone=seoul

worker 3 -> zone=busan

New overlay network: myovlnet -> subnet: 10.10.123.0/24

haproxy maps its own port 80 to port 8001 on the host, and that traffic is forwarded over myovlnet to the wordpress tasks deployed in zone=seoul. Finally, cAdvisor is placed on worker3.

cAdvisor is attached to myovlnet so that it can monitor the other containers.

step 1) Create the overlay network (myovlnet)

rapa@manager:~/0825$ docker network create \

> --subnet 10.10.123.0/24 \

> --driver=overlay \

> --attachable \

> myovlnet

tc7gxpmtk9p2b3s6dgt312mle

step 2) Attach labels to the nodes (zone)

rapa@manager:~/0825$ docker node update \

> --label-add zone=seoul \

> worker1

worker1

rapa@manager:~/0825$ docker node update \

> --label-add zone=seoul \

> worker2

worker2

rapa@manager:~/0825$ docker node update \

> --label-add zone=busan \

> worker3

worker3

step 3) Write the yml file

rapa@manager:~/0825$ vi web2.yml

version: '3.7'
services:
  wordpress:
    image: wordpress
    deploy:
      mode: global
      placement:
        constraints: [node.labels.zone == seoul]
      restart_policy:
        condition: on-failure
        max_attempts: 2
    environment:
      SERVICE_PORTS: 80
    networks:
      - myovlnet
  proxy:
    image: dockercloud/haproxy
    depends_on: # ignored by docker stack deploy
      - wordpress
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock # haproxy reads the Docker API through this socket to discover the wordpress tasks
    ports: # equivalent of -p; published through the ingress network
      - "8001:80"
    networks: # backend network -> wordpress containers
      - myovlnet
    deploy:
      mode: global
      placement:
        constraints: [node.role == manager]
networks:
  myovlnet:
    external: true

step 4) Deploy the stack

rapa@manager:~/0825$ docker stack deploy \

> -c web2.yml \

> web2

Creating service web2_proxy

Creating service web2_wordpress

-> -c: the compose file to deploy

step 5) Verify the stack deployment

rapa@manager:~/0825$ docker stack ls

NAME SERVICES ORCHESTRATOR

web2 2 Swarm

rapa@manager:~/0825$ docker stack ps web2

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

nej5r4b6inyk web2_proxy.3eluuoy3nlnt879m08u8leejp dockercloud/haproxy:latest manager Running Running 2 minutes ago

09cirgob5yvz web2_wordpress.65fyctmrwxutz4k9i1tkabtm7 wordpress:latest worker2 Running Running about a minute ago

rv7du8yb8wit web2_wordpress.r4nzbg56vw2tuylr1hhvqdn70 wordpress:latest worker1 Running Running about a minute ago

-> haproxy was deployed on the manager

-> wordpress was deployed on worker1 and worker2 (zone=seoul)

step 6) Deploy portainer (manager)

[manager]

docker container run -d \

--restart always \

-p 9000:9000 \

-v /var/run/docker.sock:/var/run/docker.sock \

--name portainer2 \

--net=myovlnet \

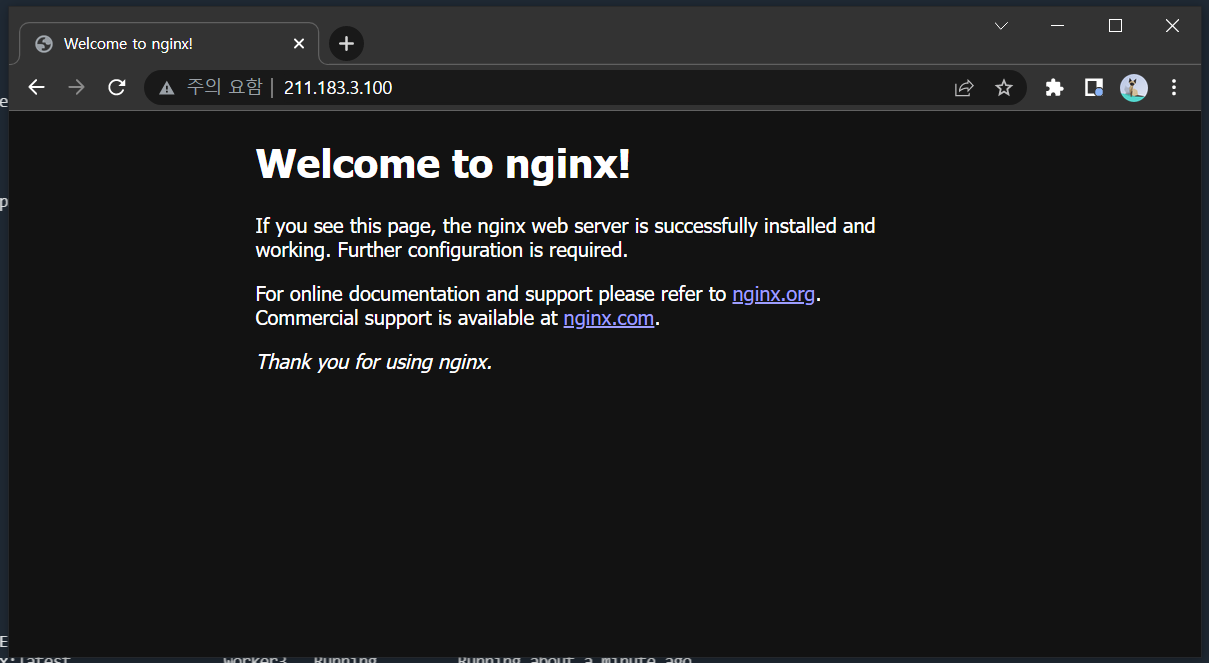

portainer/portainerstep 7) 배포 확인

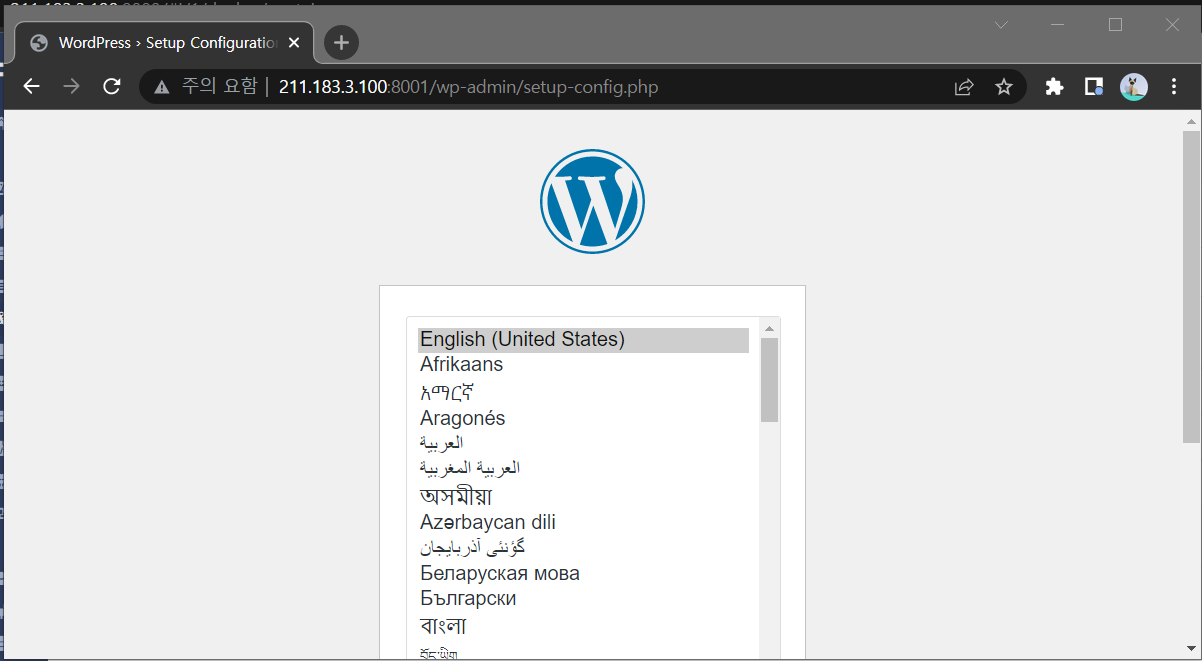

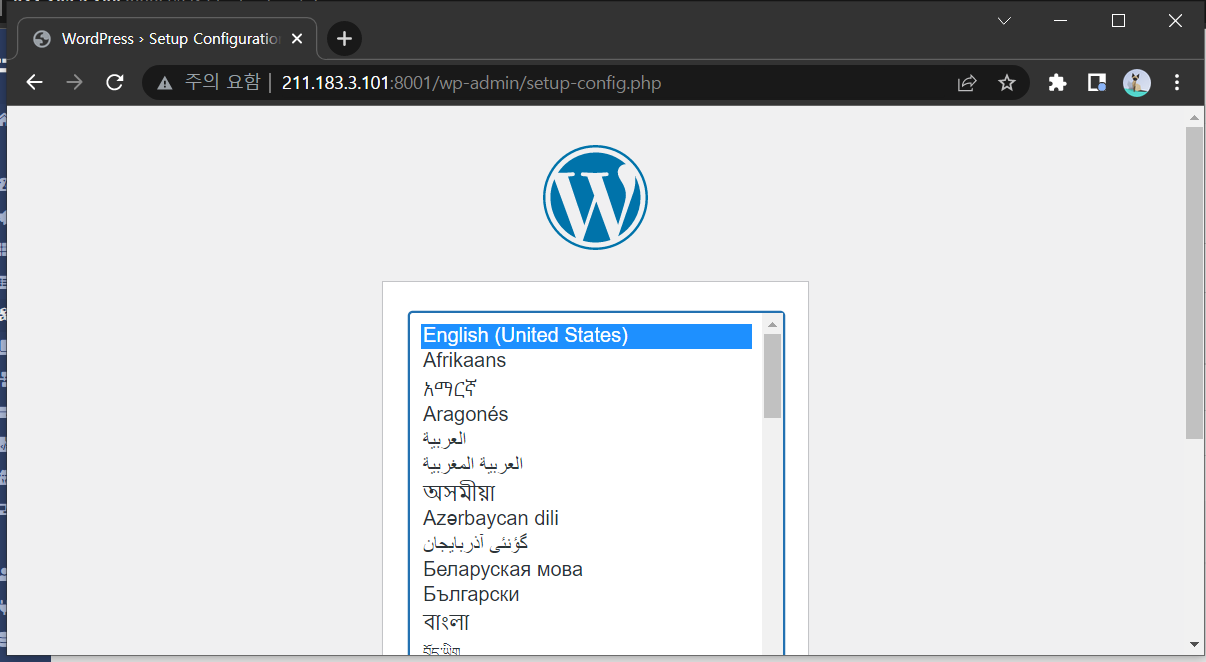

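Access port 8001 (haproxy)

-> Reachable on all of .100, .101, .102 and .103: the ingress routing mesh publishes port 8001 on every node, even though haproxy runs only on the manager.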

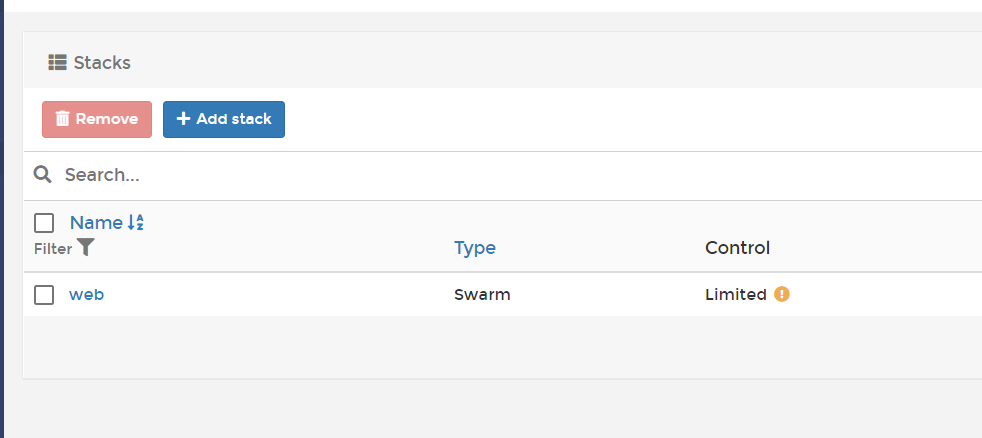

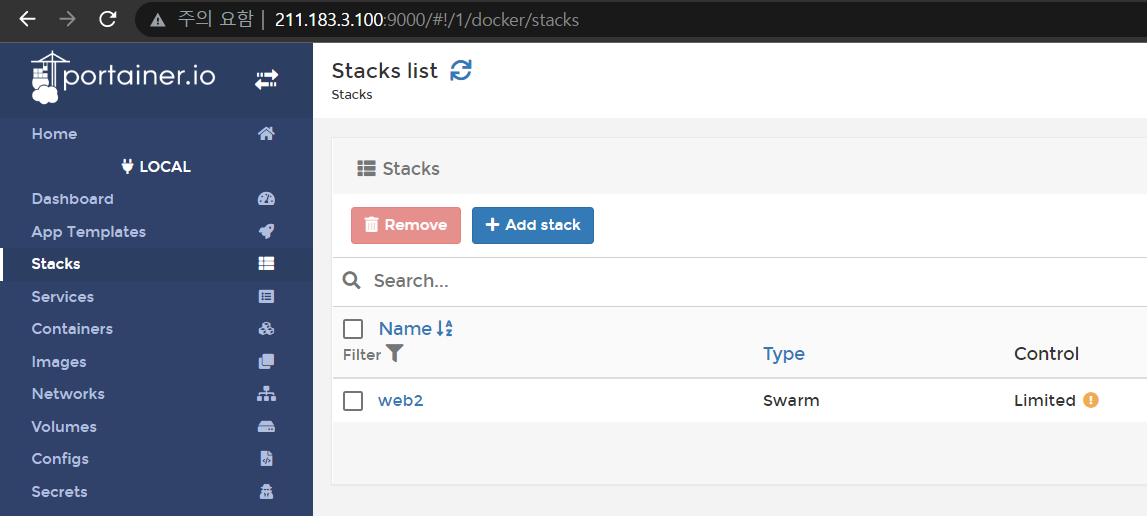

Access port 9000 (portainer)

- Check stacks

-> The web2 stack has been deployed

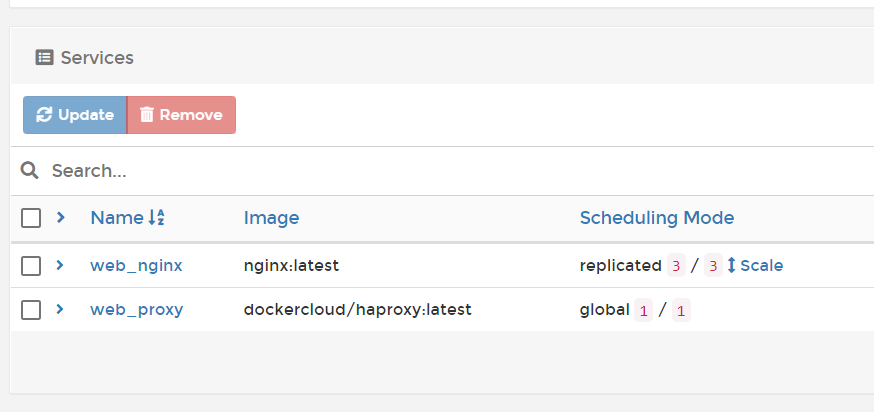

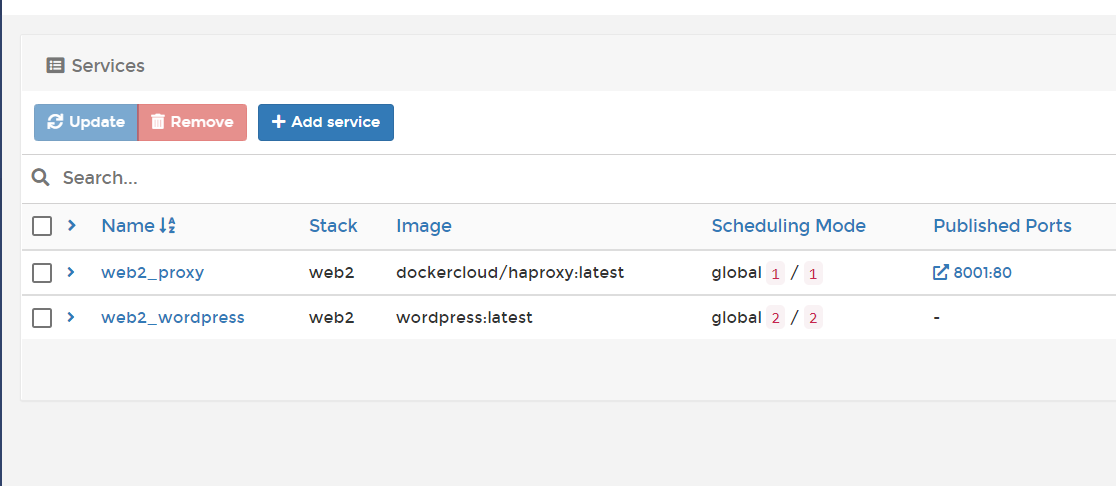

- Check services

-> web2's services, proxy and wordpress, have been deployed.