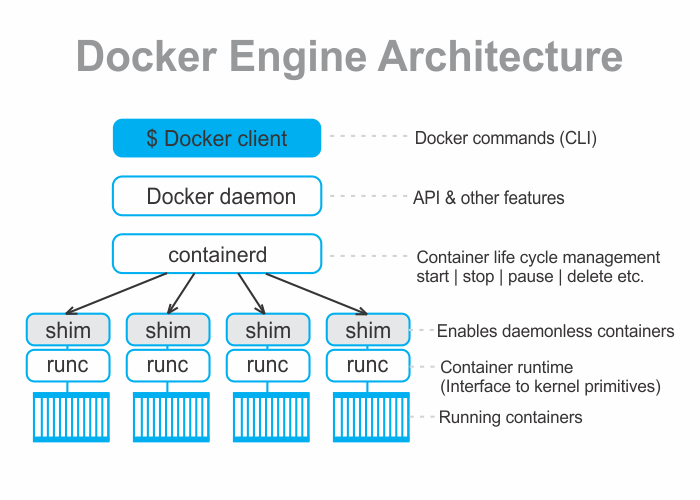

Image source: https://hemanta.io/a-detailed-guide-to-docker-engine/

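- daemon: passes commands on to containerd.

- containerd: acts as the container runtime. It drives the actual creation of containers and manages the container life cycle.

(Kubernetes no longer uses the Docker daemon as its runtime. The kubelet used to reach the Docker daemon through dockershim, but that path has been removed, and the kubelet now talks directly to a CRI runtime such as containerd.)

- shim: looks after a container once it has been created. To create the container, the shim communicates with runc.

- runc: builds the container using chroot and namespaces. Once the container has been created, runc exits.

- container: because runc exits after creating the container, the running container communicates with the shim from then on.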

Lab 1 - Using the Docker Engine API

Example 1 - Deploying a container

- Check the Python version and path

rapa@manager:~/0824$ python3 --version

Python 3.8.10

rapa@manager:~/0824$ which python3

/usr/bin/python3- pip 설치

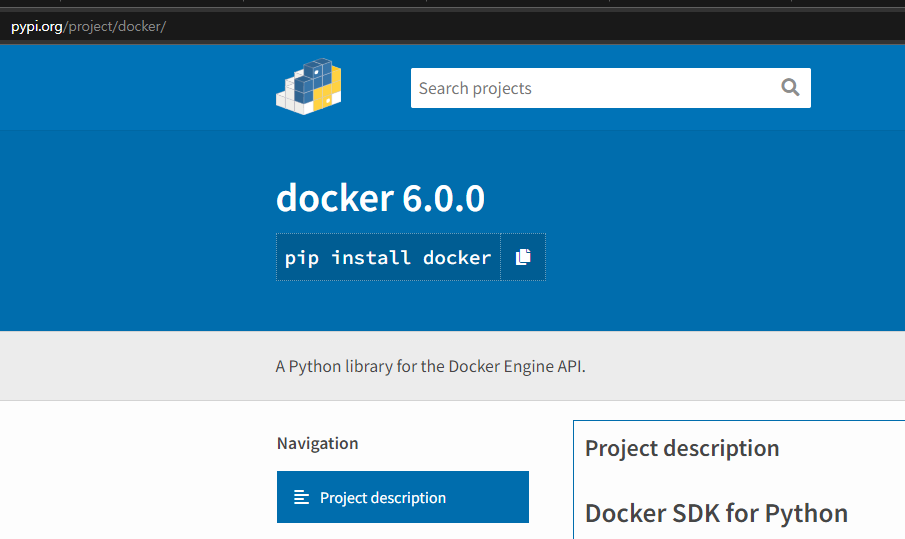

rapa@manager:~/0824$ sudo apt -y install pip- 도커 라이브러리 설치

rapa@manager:~$ pip install docker- 파이썬 스크립트 작성

rapa@manager:~/0824$ vi ctn.py#!/usr/bin/python3

import docker

client = docker.from_env()

print(client.containers.run("alpine", ["echo", "hello", "all"]))-> alpine 컨테이너 배포한 뒤 명령어 실행

-> alpine 리눅스는 가볍고 간단한 리눅스 배포판이다.

- Make the file executable

rapa@manager:~/0824$ chmod +x ctn.py

- Run the script

rapa@manager:~/0824$ ./ctn.py

b'hello all\n'

- Check the containers

rapa@manager:~/0824$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

rapa@manager:~/0824$ docker container ls --all

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

01e2790ed5a9 alpine "echo hello all" 40 seconds ago Exited (0) 37 seconds ago recursing_pare

-> The container ran once and then exited
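The script prints b'hello all\n' because the container output comes back as bytes, not as a string. A minimal sketch of turning that value into plain text follows; logs_to_text is a hypothetical helper name, and the sample bytes stand in for the real return value so that no Docker daemon is needed to try it.

```python
def logs_to_text(raw_logs: bytes) -> str:
    """Decode container output bytes and drop the trailing newline."""
    return raw_logs.decode("utf-8").rstrip("\n")


# Stand-in for the value printed by ctn.py; no Docker daemon is contacted here.
sample_output = b"hello all\n"
print(logs_to_text(sample_output))
```

In ctn.py the same helper could wrap the value returned by client.containers.run(...) before printing it.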

Example 2 - Printing the container ID (background execution)

- Run a container in the background

rapa@manager:~/0824$ cp ctn.py ctn1.py

rapa@manager:~/0824$ vi ctn1.py

#!/usr/bin/python3

import docker

client = docker.from_env()

test = client.containers.run("nginx", detach=True)

print(test.id)

-> detach=True: run in the background

-> Prints the container ID after the container starts

- Run the container

rapa@manager:~/0824$ ./ctn1.py

b1f688f5a5d7d24aa7cafa3e90791dff7ca53b64070ac75c3f95729da993f6fd

-> The ID is printed after the container starts

- Check the containers

rapa@manager:~/0824$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b1f688f5a5d7 nginx "/docker-entrypoint.…" 42 seconds ago Up 41 seconds 80/tcp jovial_jemison

- Run one more container

rapa@manager:~/0824$ ./ctn1.py

5350076169a78710ee7081f97087f398071500c13a710f35b7642d6cc9c1d835

- Check that the containers are running

rapa@manager:~/0824$ docker container ls -q

5350076169a7

b1f688f5a5d7

Example 3 - Printing container and image IDs (background execution)

- Create the file

rapa@manager:~/0824$ cp ctn1.py ctn2.py

rapa@manager:~/0824$ vi ctn2.py

#!/usr/bin/python3

import docker

client = docker.from_env()

for ctn in client.containers.list():

    print(ctn.id)

- Run the script

rapa@manager:~/0824$ ./ctn2.py

5350076169a78710ee7081f97087f398071500c13a710f35b7642d6cc9c1d835

b1f688f5a5d7d24aa7cafa3e90791dff7ca53b64070ac75c3f95729da993f6fd

- Print the image IDs

rapa@manager:~/0824$ vi ctn2.py

#!/usr/bin/python3

import docker

client = docker.from_env()

for img in client.images.list():

    print(img.id)

- Run it

rapa@manager:~/0824$ ./ctn2.py

sha256:a213a945cd8801d57ec46f013b01abd024731563ceedc861fd3c0baa197c3426

sha256:faa9ae101fe98c887ae0609e5916648c361f8f0fddcaf7c0d7be4981e257cae6

sha256:3a0f7b0a13ef62e85d770396e1868bf919f4747743ece4f233895a246c436394

sha256:9c6f0724472873bb50a2ae67a9e7adcb57673a183cea8b06eb778dca859181b5

sha256:7b94cda7ffc7c59b01668e63f48e0f4ee3d16b427cc0b846193b65db671e9fa2

sha256:66b89e8b083b68f0bc8c80a4190bc16a72368a1b44e04b1ed625d954a854c9ea

sha256:b692a91e4e1582db97076184dae0b2f4a7a86b68c4fe6f91affa50ae06369bf5

sha256:f2a976f932ec6fe48978c1cdde2c8217a497b1f080c80e49049e02757302cf74

sha256:8d5df41c547bd107c14368ad302efc46760940ae188df451cabc23e10f7f161b

sha256:3147495b3a5ce957dee2319099a8808c1418e0b0a2c82c9b2396c5fb4b688509

sha256:eeb6ee3f44bd0b5103bb561b4c16bcb82328cfe5809ab675bb17ab3a16c517c9

sha256:eb12107075737019dce2d795dd82f5a72197eb3c64b2140392eaad3ba3b8a34e

sha256:0db5683824d8669ef8494f6e2c3aebf29facbda82a07f17e76bc60e752287144

Lab 2 - Deploying the Portainer dashboard

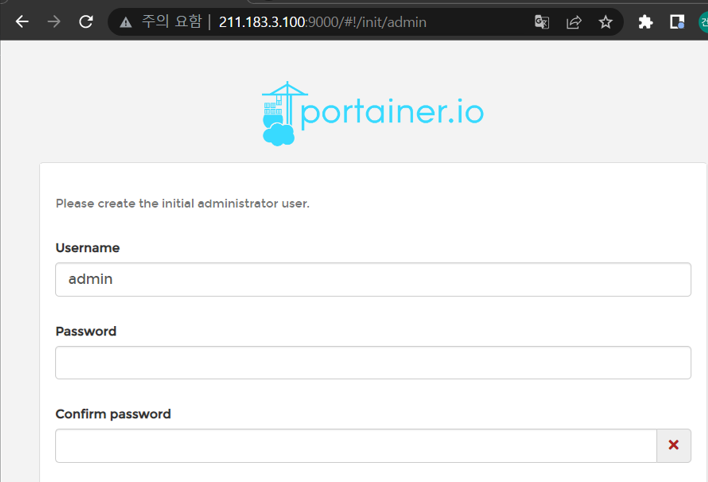

docker container run -d \

> --restart always \

> -p 9000:9000 \

> -v /var/run/docker.sock:/var/run/docker.sock \

> --name portainer \

> portainer/portainer

Unable to find image 'portainer/portainer:latest' locally

latest: Pulling from portainer/portainer

b890dbc4eb27: Pull complete

81378af8dad0: Pull complete

Digest: sha256:958a8e5c814e2610fb1946179a3db598f9b5c15ed90d92b42d94aa99f039f30b

Status: Downloaded newer image for portainer/portainer:latest

6ee9bf22966452662e96aa802666870a1dd6cd10f2057467c913f76100cded9d-

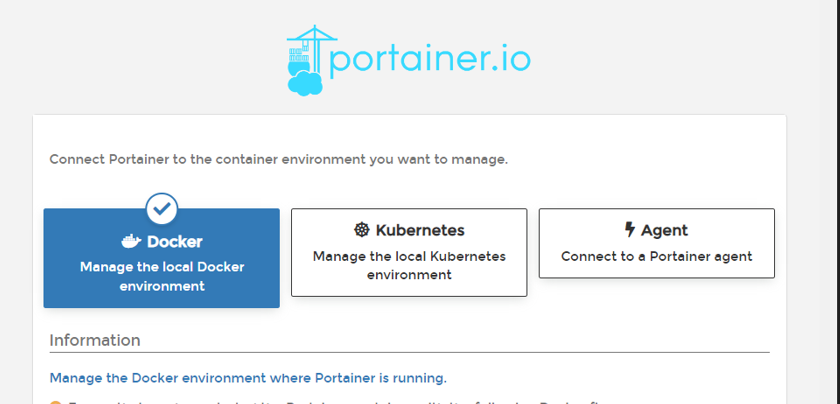

9000포트 접속

-

도커 선택

-

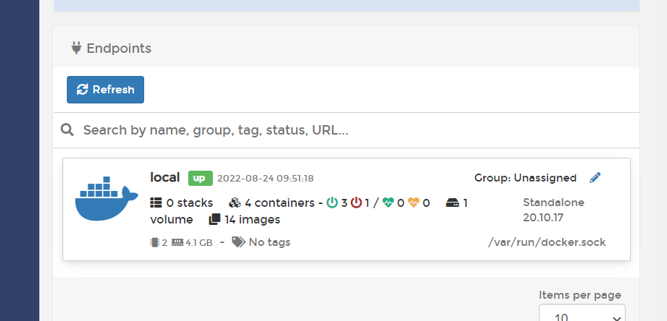

엔드포인트 선택

-

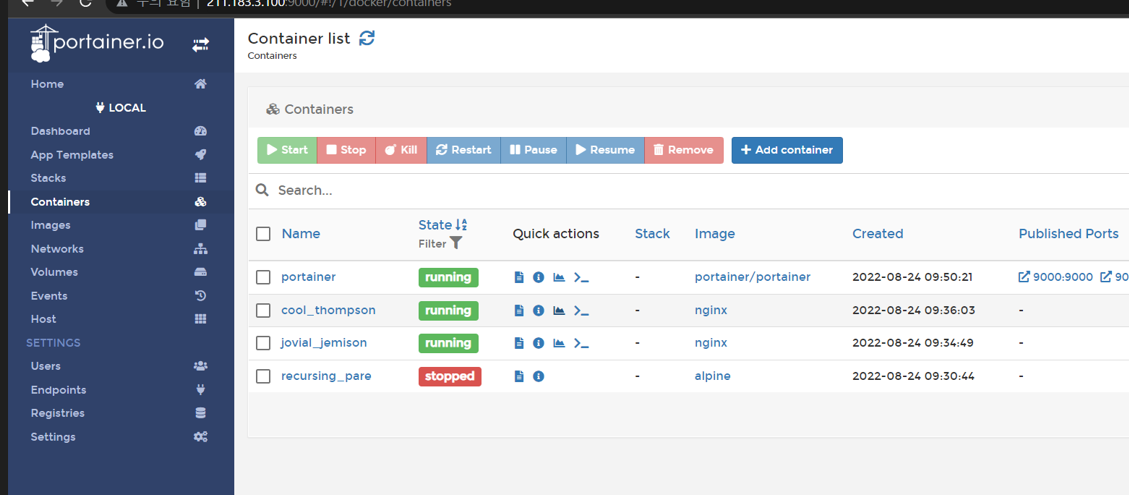

컨테이너 확인

-

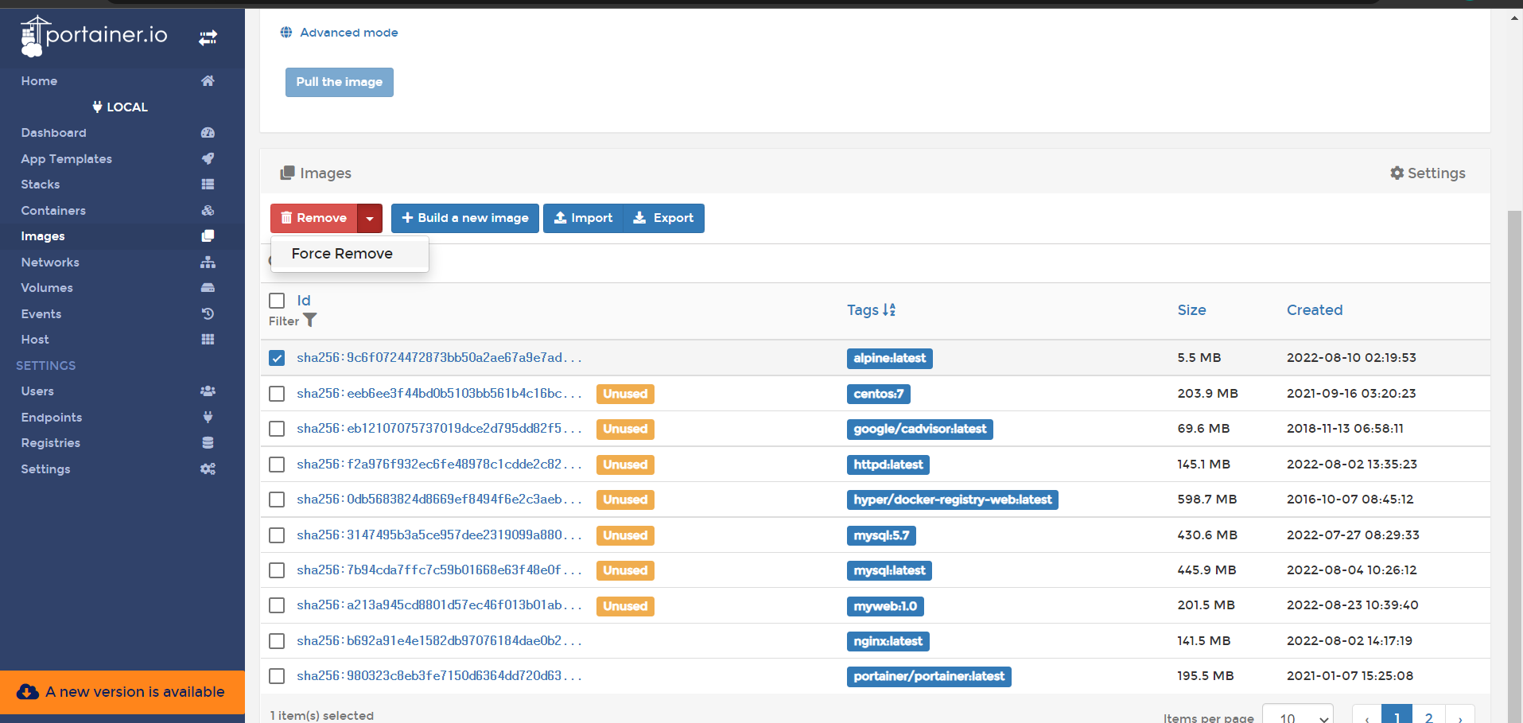

이미지 확인

Lab 3 - Docker swarm mode

- Checks before starting the swarm

- On each node, open /etc/hosts and confirm that the following entries are present

211.183.3.100 manager

211.183.3.101 worker1

211.183.3.102 worker2

211.183.3.103 worker3

- On every node, stop and remove all running containers

rapa@manager:~/0824$ docker container rm -f $(docker container ls -aq)

6ee9bf229664

5350076169a7

b1f688f5a5d7

01e2790ed5a9

- Check the time on every node and confirm that the clocks agree

rapa@manager:~/0824$ date

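2022. 08. 24. (수) 10:00:16 KST

- Configuration

| Node | CPU | RAM | NIC (VMnet10) |
| --- | --- | --- | --- |
| manager (private-registry) | 2 | 4 | 211.183.3.100 |
| worker1 | 2 | 2 | 211.183.3.101 |
| worker2 | 2 | 2 | 211.183.3.102 |
| worker3 | 2 | 2 | 211.183.3.103 |

- In a cluster, the resources of the nodes are pooled so that they can be used as a single resource.

Functionally, each host is given one of two roles.

- manager (master): acts as the controller.

A manager also includes the worker role. Containers are distributed across the nodes in round-robin fashion, so they are deployed on the manager as well.

In general, the manager should be excluded from container placement so that it can concentrate on control, management, and monitoring.

- worker (node): receives commands from the controller and carries them out.

- When the cluster is first created, node.role is assigned to each node automatically as manager or worker.

In addition, labels can be attached to each node and used when placing containers.

- In Kubernetes, the master does not perform the worker role by default.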

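- MSA (Microservice Architecture)

An application can be developed as a number of containers or VMs to make it more sophisticated. Development gets faster, and because the functionality is split into modules, a problem only requires checking the affected container, code, or VM, which makes it easier to manage. It also helps with horizontal scaling.

No single language is imposed on the features, so each one can be written in whatever language suits it. The features exchange data with one another through APIs.

- Building the cluster environment

The node that will act as manager issues a token, and each worker uses that token to join the manager.

Issue the token on the manager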

[manager]

rapa@manager:~/0824$ docker swarm init --advertise-addr ens32

Swarm initialized: current node (3eluuoy3nlnt879m08u8leejp) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3rt8stwmhqiwpf7crsc6pywzyz2au6cz52vog65mclfm6b1d9m-c89oi7eydzewdgdj4bqbf7jbg 211.183.3.100:2377

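To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

-> The IP address of the given interface is detected and filled in automatically

-> The node that issues the token becomes the manager

Enter the token on the workers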

[worker1, 2, 3]

rapa@worker1:~$ docker swarm join --token SWMTKN-1-3rt8stwmhqiwpf7crsc6pywzyz2au6cz52vog65mclfm6b1d9m-c89oi7eydzewdgdj4bqbf7jbg 211.183.3.100:2377

This node joined a swarm as a worker.

- Check the cluster configuration

[manager]

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

xu4xtago6si3jgz2kc2jz0rgz worker1 Ready Active 20.10.17

l4dpe1ad4ettiq38dprroai16 worker2 Ready Active 20.10.17

5dryaji9a8mhueo4qcjyk7wnz worker3 Ready Active 20.10.17

-> The manager that first issued the token is the leader.

Availability values

Active: new containers can be created on the node

drain: all running containers are shut down, and no new containers can be created

pause: no new containers can be created, but unlike drain the existing containers keep running. For maintenance, switch the node to drain or pause.
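The three availability values differ along two questions: may new tasks be scheduled on the node, and may the tasks already there keep running. The sketch below simply encodes the descriptions above; the enum and function names are hypothetical and this is not Docker's own code. On a real cluster the value is changed with docker node update --availability.

```python
from enum import Enum


class Availability(Enum):
    ACTIVE = "active"
    PAUSE = "pause"
    DRAIN = "drain"


def accepts_new_tasks(availability):
    """Only an active node may receive newly scheduled tasks."""
    return availability is Availability.ACTIVE


def keeps_existing_tasks(availability):
    """Drain shuts existing tasks down; active and pause leave them running."""
    return availability is not Availability.DRAIN


for state in Availability:
    print(state.value, accepts_new_tasks(state), keeps_existing_tasks(state))
```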

- Check the tokens

rapa@manager:~/0824$ docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3rt8stwmhqiwpf7crsc6pywzyz2au6cz52vog65mclfm6b1d9m-c89oi7eydzewdgdj4bqbf7jbg 211.183.3.100:2377

rapa@manager:~/0824$ docker swarm join-token manager

To add a manager to this swarm, run the following command:

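docker swarm join --token SWMTKN-1-3rt8stwmhqiwpf7crsc6pywzyz2au6cz52vog65mclfm6b1d9m-8r8c4r3qo5no0y3me55szogs2 211.183.3.100:2377

When the first manager initializes the swarm, two tokens are issued: one for managers and one for workers. To add another manager besides the workers, join the additional node to the swarm cluster with the manager token.

manager1, manager2, worker1, worker2

If there is only one manager and it goes down, the whole cluster can no longer be managed, so a real environment should run more than one manager. Because the managers agree on cluster state through Raft and need a majority (quorum) to operate, an odd number is recommended, and three is the smallest count that survives the loss of one manager.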
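Swarm managers keep the cluster state consistent with the Raft consensus algorithm, which only makes progress while a majority of managers is reachable. The arithmetic can be sketched as follows; the function names are hypothetical, and the formulas are the usual majority rule rather than anything read out of Docker's source.

```python
def quorum(managers):
    """Smallest number of managers that forms a majority."""
    return managers // 2 + 1


def tolerated_failures(managers):
    """How many managers can be lost while a majority still remains."""
    return (managers - 1) // 2


for count in (1, 2, 3, 5):
    print(count, "managers: quorum", quorum(count),
          "tolerates", tolerated_failures(count), "failure(s)")
```

By this rule two managers tolerate no more failures than one, which is why odd manager counts are the common recommendation.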

- Check the role

rapa@manager:~/0824$ docker node inspect manager --format "{{.Spec.Role}}"

manager

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

worker

- Promote worker1 to manager (promote)

rapa@manager:~/0824$ docker node promote worker1

Node worker1 promoted to a manager in the swarm.

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

manager

[worker1]

rapa@worker1:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp manager Ready Active Leader 20.10.17

xu4xtago6si3jgz2kc2jz0rgz * worker1 Ready Active Reachable 20.10.17

l4dpe1ad4ettiq38dprroai16 worker2 Ready Active 20.10.17

5dryaji9a8mhueo4qcjyk7wnz worker3 Ready Active 20.10.17

[worker2]

rapa@worker2:~$ docker node ls

Error response from daemon: This node is not a swarm manager. Worker nodes can't be used to view or modify cluster state. Please run this command on a manager node or promote the current node to a manager.

-> worker2 is not a manager, so it cannot view node information

- Demote worker1 back to worker (demote)

rapa@manager:~/0824$ docker node demote worker1

Manager worker1 demoted in the swarm.

rapa@manager:~/0824$ docker node inspect worker1 --format "{{.Spec.Role}}"

worker

- Leave the cluster on each node

[worker1]

rapa@worker1:~$ docker swarm leave

Node left the swarm.

[manager]

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

xu4xtago6si3jgz2kc2jz0rgz worker1 Down Active 20.10.17

l4dpe1ad4ettiq38dprroai16 worker2 Ready Active 20.10.17

5dryaji9a8mhueo4qcjyk7wnz worker3 Ready Active 20.10.17

-> worker1 is shown as Down but is still listed in the cluster

[worker2]

rapa@worker2:~$ docker swarm leave

Node left the swarm.

[worker3]

rapa@worker3:~$ docker swarm leave

Node left the swarm.

[manager]

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

xu4xtago6si3jgz2kc2jz0rgz worker1 Down Active 20.10.17

l4dpe1ad4ettiq38dprroai16 worker2 Down Active 20.10.17

5dryaji9a8mhueo4qcjyk7wnz worker3 Down Active 20.10.17

-> worker1, 2, and 3 are now all Down

rapa@manager:~/0824$ docker node rm worker1

worker1

rapa@manager:~/0824$ docker node rm worker2

worker2

rapa@manager:~/0824$ docker node rm worker3

worker3

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

-> The nodes were removed from the cluster with rm

rapa@manager:~/0824$ docker swarm leave

Error response from daemon: You are attempting to leave the swarm on a node that is participating as a manager. Removing the last manager erases all current state of the swarm. Use `--force` to ignore this message.

-> The last manager cannot leave the cluster unless --force is given

- Join worker1, 2, and 3 to the cluster again

[worker1, 2, 3]

rapa@worker1:~$ docker swarm join --token SWMTKN-1-3rt8stwmhqiwpf7crsc6pywzyz2au6cz52vog65mclfm6b1d9m-c89oi7eydzewdgdj4bqbf7jbg 211.183.3.100:2377

This node joined a swarm as a worker.

- Check the cluster

rapa@manager:~/0824$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION

3eluuoy3nlnt879m08u8leejp * manager Ready Active Leader 20.10.17

r4nzbg56vw2tuylr1hhvqdn70 worker1 Ready Active 20.10.17

65fyctmrwxutz4k9i1tkabtm7 worker2 Ready Active 20.10.17

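ja2thy2jrbx7zi1y3b6iffluw worker3 Ready Active 20.10.17

-> The workers have been added again.

- On a single node, deployment is done per container

With compose and swarm, deployment is done per service (a group of containers)

Deploying containers to the cluster (nginx)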

rapa@manager:~/0824$ docker service create \

> --name web \

> --constraint node.role==worker \

> --replicas 3 \

> -p 80:80 \

> nginx

vonknzltfgtm4w07on94hdjkr

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Check the deployment

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vonknzltfgtm web replicated 3/3 nginx:latest *:80->80/tcp

- Check which node each task of the service is running on

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

kbpti1g1jny1 web.1 nginx:latest worker1 Running Running 3 minutes ago

k7ny0y9w6b64 web.2 nginx:latest worker2 Running Running 3 minutes ago

46uxg9olhvge web.3 nginx:latest worker3 Running Running 3 minutes ago -> web.1은 worker1에서, web.2은 worker2에서, web.3은 worker3에서 동작함

- 컨테이너가 생성되지 않은 경우 그 이유

- 이미지가 다운로드되지 않은 상태에서 생성하려고 할 때

- 이미지 다운로드를 위한 인증 정보가 없는 경우

-> 자원 사용의 불균형이 발생할 수 있다. 즉, 한 대의 노드에서 여러 컨테이너가 동작하고 몇 개의 노드에서는 컨테이너 생성이 되지 않는 문제

각 노드에 저장소 접근을 위한 인증 정보가 config.json에 없다면 이를 해결하기 위해

1. manager에서 로그인을 하고 config.json 정보를 일일이 각 노드의 config.json에 붙여넣기 한다.

2. manager에서 로그인을 하고 config.json 정보를 docker service create 시 넘겨준다.

--with-registry-auth

-

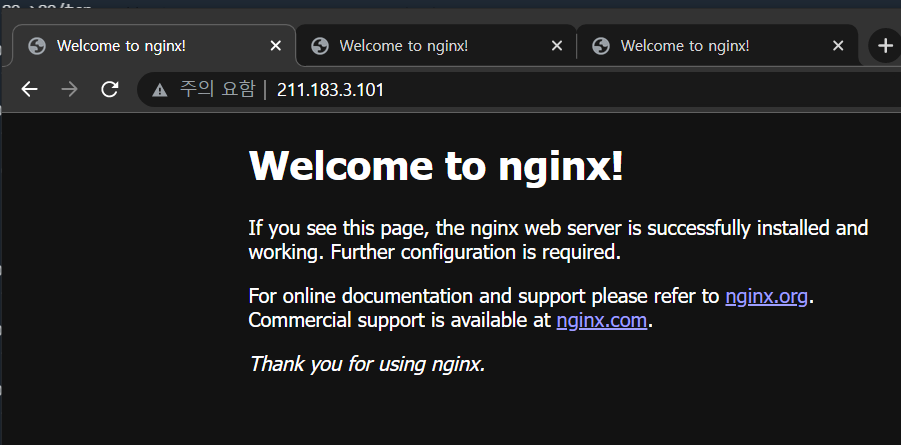

외부에서 접속해보기

.100, .101, .102, .103으로 모두 접속이 가능하다

-

scale 1개로

rapa@manager:~/0824$ docker service scale web=1

web scaled to 1

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

- Check the scale

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

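kbpti1g1jny1 web.1 nginx:latest worker1 Running Running 4 minutes ago

- Connect from outside

The service is still reachable at .100, .101, .102, and .103, even though only worker1 runs a task, because the published port is served on every node through the ingress routing mesh

- Scale up to 4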

rapa@manager:~/0824$ docker service scale web=4

web scaled to 4

overall progress: 4 out of 4 tasks

1/4: running [==================================================>]

2/4: running [==================================================>]

3/4: running [==================================================>]

4/4: running [==================================================>]

verify: Service converged

- Check the scale

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

vonknzltfgtm web replicated 4/4 nginx:latest *:80->80/tcp

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

dtu4kasvb7mg web.1 nginx:latest worker1 Running Running 25 minutes ago

w2uxhc3a5ch9 web.2 nginx:latest worker3 Running Running 49 seconds ago

5tijiwd0j243 web.3 nginx:latest worker3 Running Running 49 seconds ago

zu0ii9qnt9wa web.4 nginx:latest worker2 Running Preparing 52 seconds ago

-> Two containers were deployed on worker3.

- Check the containers on each worker

rapa@worker1:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7bfeb0163521 nginx:latest "/docker-entrypoint.…" 7 minutes ago Up 7 minutes 80/tcp web.1.kbpti1g1jny1ilpdwodifteul

rapa@worker1:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

98c835cf68cf nginx:latest "/docker-entrypoint.…" 2 minutes ago Up 2 minutes 80/tcp web.2.uoo7v4f86te0gimpnneqtn9ed

rapa@worker3:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

123666f9efb4 nginx:latest "/docker-entrypoint.…" 2 minutes ago Up 2 minutes 80/tcp web.4.4pmv0lk6i1iign63rr510gmyu

eb8c226a3636 nginx:latest "/docker-entrypoint.…" 2 minutes ago Up 2 minutes 80/tcp web.3.z8rmpb9xqn3sqdk5locbbvs37

-> All workers share port 80. This works because the published port is handled by the swarm overlay (ingress) network.

- Remove the service

[manager]

rapa@manager:~/0824$ docker service rm web

web

- Check the removal

[manager]

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

[worker]

rapa@worker1:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

- Deploy (node.role!=manager)

rapa@manager:~/0824$ docker service create \

> --name web \

> --constraint node.role!=manager \

> --replicas 3 \

> -p 80:80 \

> nginx

myu2bi8thuclv92teqiyv6vq8

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

-> replicas 3: three replicas must be maintained at all times.

- Check the deployment

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

myu2bi8thucl web replicated 3/3 nginx:latest *:80->80/tcp

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ib6qpsmsf9w7 web.1 nginx:latest worker2 Running Running 13 minutes ago

zpar9hm5h1yf web.2 nginx:latest worker3 Running Running 13 minutes ago

cg9kj6epucqb web.3 nginx:latest worker1 Running Running 13 minutes ago

- Remove a container

[worker3]

rapa@worker3:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1867a6bfc86c nginx:latest "/docker-entrypoint.…" 14 minutes ago Up 13 minutes 80/tcp web.2.zpar9hm5h1yfot8n3x24owoat

rapa@worker3:~$

rapa@worker3:~$ docker container rm -f 18

18

- Check the service

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

myu2bi8thucl web replicated 3/3 nginx:latest *:80->80/tcp

-> Three replicas are running

rapa@manager:~/0824$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ib6qpsmsf9w7 web.1 nginx:latest worker2 Running Running 15 minutes ago

nt8m37ao2hp0 web.2 nginx:latest worker3 Running Running about a minute ago

zpar9hm5h1yf \_ web.2 nginx:latest worker3 Shutdown Failed about a minute ago "task: non-zero exit (137)"

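cg9kj6epucqb web.3 nginx:latest worker1 Running Running 15 minutes ago

-> The web.2 container on worker3 went down, so it was started again automatically

-> The manager keeps comparing the service with its desired state (3 replicas) and started a replacement task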
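The self-healing seen here can be pictured as a reconciliation step: compare the running tasks with the desired replica count and start replacements for whatever is missing. The sketch below is only an illustration of that idea under simplified assumptions; the function and the task names are hypothetical and say nothing about how swarm is actually implemented.

```python
import itertools


def reconcile(desired_replicas, running_tasks, new_task_ids):
    """Return the task list after starting replacements for missing replicas."""
    tasks = list(running_tasks)
    while len(tasks) < desired_replicas:
        tasks.append(next(new_task_ids))
    return tasks


# Hypothetical identifiers for replacement tasks.
replacement_ids = (f"replacement-{n}" for n in itertools.count(1))

state = ["web.1", "web.2", "web.3"]
state.remove("web.2")  # simulate the forced removal of one container
state = reconcile(3, state, replacement_ids)
print(state)
```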

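- Two kinds of swarm

- Docker Swarm (swarm classic)

- Uses a separate distributed coordinator such as zookeeper or etcd to manage information about nodes and containers.

- An agent runs on each node, and container information is obtained by communicating through it.

- Swarm mode

- The distributed coordinator is built into the manager itself, so no additional packages need to be installed.

- When people say "swarm cluster", they generally mean swarm mode.

Global deployment (mode global)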

rapa@manager:~/0824$ docker service create \

> --name web \

> --constraint node.role!=manager \

> --mode global \

> -p 80:80 \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

og91z8hl3dc6c40hsjsa3geli

overall progress: 3 out of 3 tasks

r4nzbg56vw2t: running [==================================================>]

ja2thy2jrbx7: running [==================================================>]

65fyctmrwxut: running [==================================================>]

verify: Service converged

- Check the deployment

rapa@manager:~/0824$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

og91z8hl3dc6 web global 3/3 nginx:latest *:80->80/tcp

-> mode: global

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

ir45wvhj1kkt web.65fyctmrwxutz4k9i1tkabtm7 nginx:latest worker2 Running Running 17 seconds ago

6y5m0qxybp2e web.ja2thy2jrbx7zi1y3b6iffluw nginx:latest worker3 Running Running 17 seconds ago

lb7yof6nuh37 web.r4nzbg56vw2tuylr1hhvqdn70 nginx:latest worker1 Running Running 17 seconds ago

-> One task is deployed on each node
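In global mode the number of tasks is not given as a replica count; it follows from how many nodes satisfy the constraint. A small sketch of that selection is shown below, with the node list modeled as plain dictionaries. The function name and data layout are assumptions made for illustration only.

```python
def place_global(nodes, constraint):
    """Pick every node that satisfies the constraint; each gets exactly one task."""
    return sorted(node["hostname"] for node in nodes if constraint(node))


nodes = [
    {"hostname": "manager", "role": "manager"},
    {"hostname": "worker1", "role": "worker"},
    {"hostname": "worker2", "role": "worker"},
    {"hostname": "worker3", "role": "worker"},
]


def not_manager(node):
    """Mirrors the constraint node.role!=manager used in the command above."""
    return node["role"] != "manager"


print(place_global(nodes, not_manager))
```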

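Rolling update

- Running containers can be updated to new containers.

- The contents of the existing container are not modified; instead, the existing container is stopped and replaced by a new one.

- Create the Dockerfiles and index.html files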

rapa@manager:~/0824$ mkdir blue green

rapa@manager:~/0824$ touch blue/Dockerfile green/Dockerfile

rapa@manager:~/0824$ touch blue/index.html green/index.html

rapa@manager:~/0824$ tree

.

├── blue

│ ├── Dockerfile

│ └── index.html

└── green

├── Dockerfile

└── index.html

2 directories, 7 files

rapa@manager:~/0824$ echo "<h2>BLUE PAGE</h2>" > blue/index.html

rapa@manager:~/0824$ echo "<h2>GREEN PAGE</h2>" > green/index.html

- Write the Dockerfile for blue

rapa@manager:~/0824$ vi blue/Dockerfile

FROM httpd

ADD index.html /usr/local/apache2/htdocs/index.html

CMD httpd -D FOREGROUND

- Write the Dockerfile for green

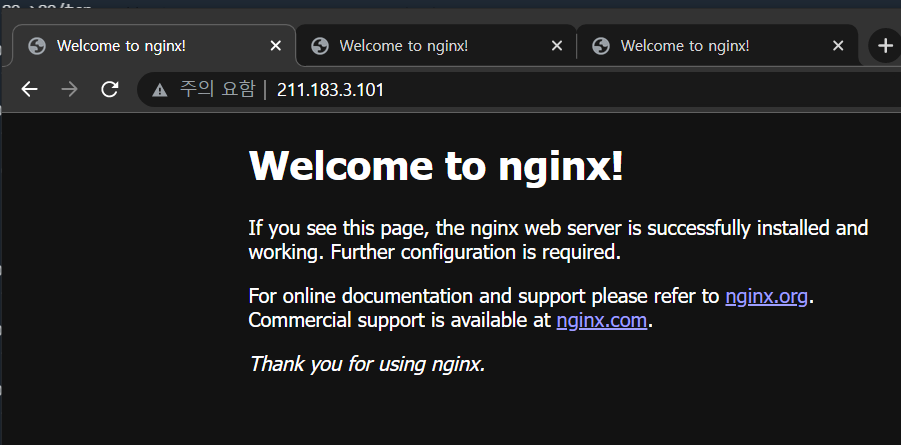

rapa@manager:~/0824$ cat blue/Dockerfile > green/Dockerfile

- In Docker Hub, set up the repository

repository name: myweb, tags: blue, green

-> myweb:blue

-> myweb:green

- Build the image (myweb:green)

rapa@manager:~/0824/green$ docker build -t myweb:green .

Sending build context to Docker daemon 3.072kB

Step 1/3 : FROM httpd

---> f2a976f932ec

Step 2/3 : ADD index.html /usr/local/apache2/htdocs/index.html

---> 3c7ee4af8803

Step 3/3 : CMD httpd -D FOREGROUND

---> Running in 781a1a4149d0

Removing intermediate container 781a1a4149d0

---> f96a9bd64acc

Successfully built f96a9bd64acc

Successfully tagged myweb:green

- Tag the image (ptah0414/myweb:green)

rapa@manager:~/0824/green$ docker image tag myweb:green \

> ptah0414/myweb:green

- Push the image (ptah0414/myweb:green)

rapa@manager:~/0824/green$ docker push ptah0414/myweb:green

The push refers to repository [docker.io/ptah0414/myweb]

2ec03db6f90d: Pushed

0c2dead5c030: Mounted from library/httpd

54fa52c69e00: Mounted from library/httpd

28a53545632f: Mounted from library/httpd

eea65516ea3b: Mounted from library/httpd

92a4e8a3140f: Mounted from ptah0414/mynginx

green: digest: sha256:14596abc0778b6a09c8385d13bbcc9e7c327393ca7451c300a9365cf090f8944 size: 1573

- Push blue in the same way

- Pull the image from Docker Hub and deploy it

rapa@manager:~/0824/blue$ docker service rm web

web

rapa@manager:~/0824/blue$ docker service create \

> --name web \

> --replicas 3 \

> --with-registry-auth \

> --constraint node.role==worker \

> -p 80:80 \

> ptah0414/myweb:blue

90ko6ecbbpr0sx9py5eq23sjr

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

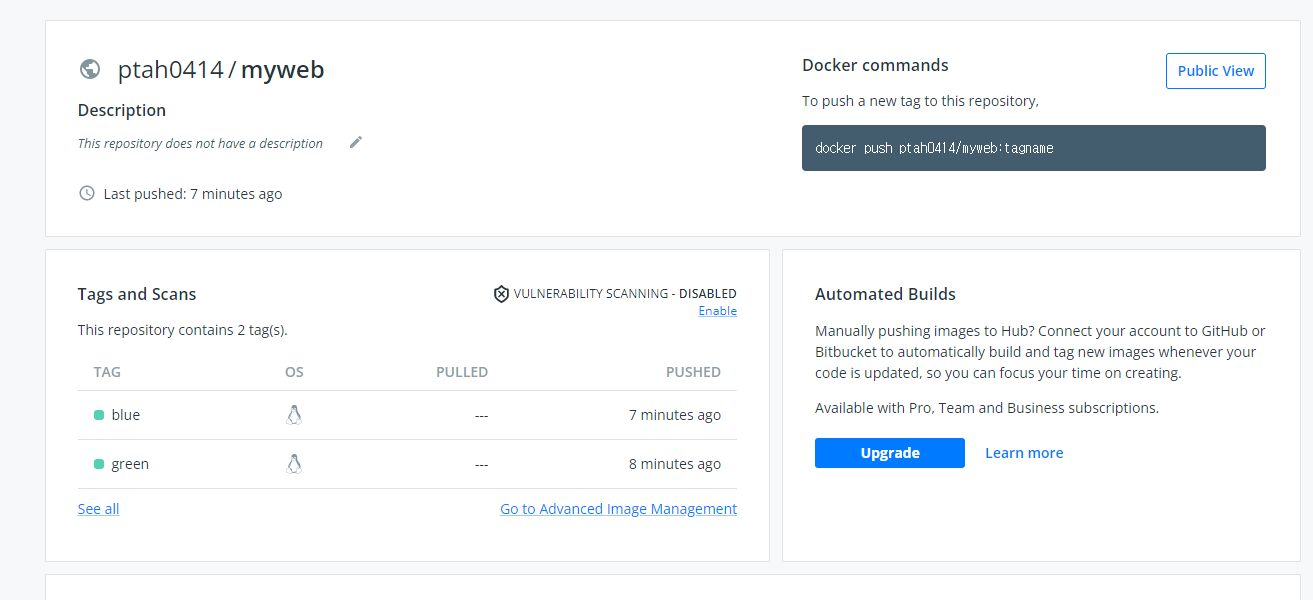

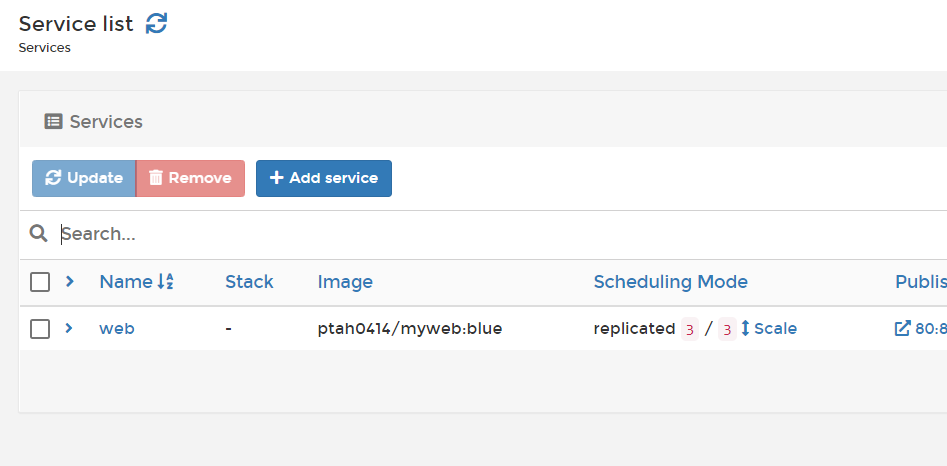

verify: Service converged - 배포 확인

-

portainer에서 확인

-

portainer에서 scale 조정도 가능하다

-

scale 조정 확인

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

wmi4ahvgdljz web.1 ptah0414/myweb:blue worker2 Running Running 44 minutes ago

m77a1scqufc9 web.2 ptah0414/myweb:blue worker3 Running Running 44 minutes ago

auv87a1l43ci web.3 ptah0414/myweb:blue worker1 Running Running 44 minutes ago

rkhcyvpsij50 web.4 ptah0414/myweb:blue worker2 Running Running 45 seconds ago

ip5s5gqdu4xn web.5 ptah0414/myweb:blue worker3 Running Running 44 seconds ago

7i94x6t0snw9 web.6 ptah0414/myweb:blue worker1 Running Running 46 seconds ago

- Rolling update from blue to green

rapa@manager:~/0824/blue$ docker service update \

> --image ptah0414/myweb:green \

> web

image ptah0414/myweb:green could not be accessed on a registry to record

its digest. Each node will access ptah0414/myweb:green independently,

possibly leading to different nodes running different

versions of the image.

web

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

- Check the update

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

najy28dc9rfk web.1 ptah0414/myweb:green worker2 Running Running 2 minutes ago

wmi4ahvgdljz \_ web.1 ptah0414/myweb:blue worker2 Shutdown Shutdown 2 minutes ago

wc98xnpffwlv web.2 ptah0414/myweb:green worker3 Running Running about a minute ago

m77a1scqufc9 \_ web.2 ptah0414/myweb:blue worker3 Shutdown Shutdown about a minute ago

z35v6w48wl6w web.3 ptah0414/myweb:green worker1 Running Running about a minute ago

auv87a1l43ci \_ web.3 ptah0414/myweb:blue worker1 Shutdown Shutdown about a minute ago

ukirro7219nq web.4 ptah0414/myweb:green worker2 Running Running 54 seconds ago

rkhcyvpsij50 \_ web.4 ptah0414/myweb:blue worker2 Shutdown Shutdown 55 seconds ago

nwoy9dpea8i4 web.5 ptah0414/myweb:green worker3 Running Running about a minute ago

ip5s5gqdu4xn \_ web.5 ptah0414/myweb:blue worker3 Shutdown Shutdown about a minute ago

kto70wriebix web.6 ptah0414/myweb:green worker1 Running Running about a minute ago

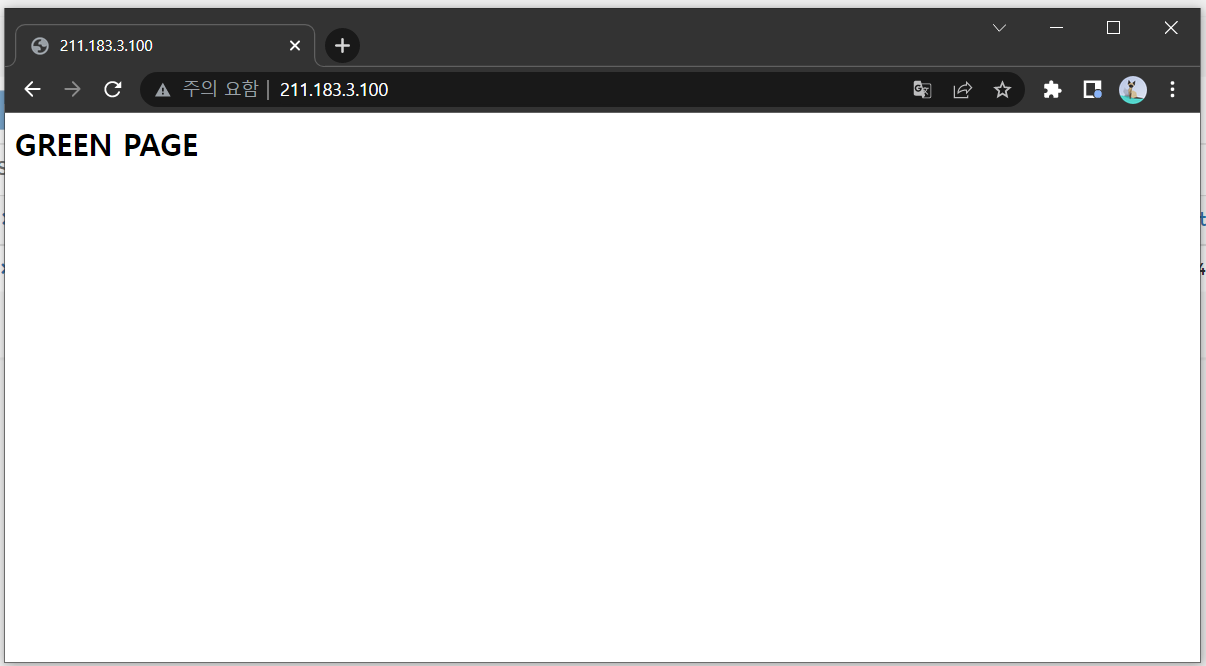

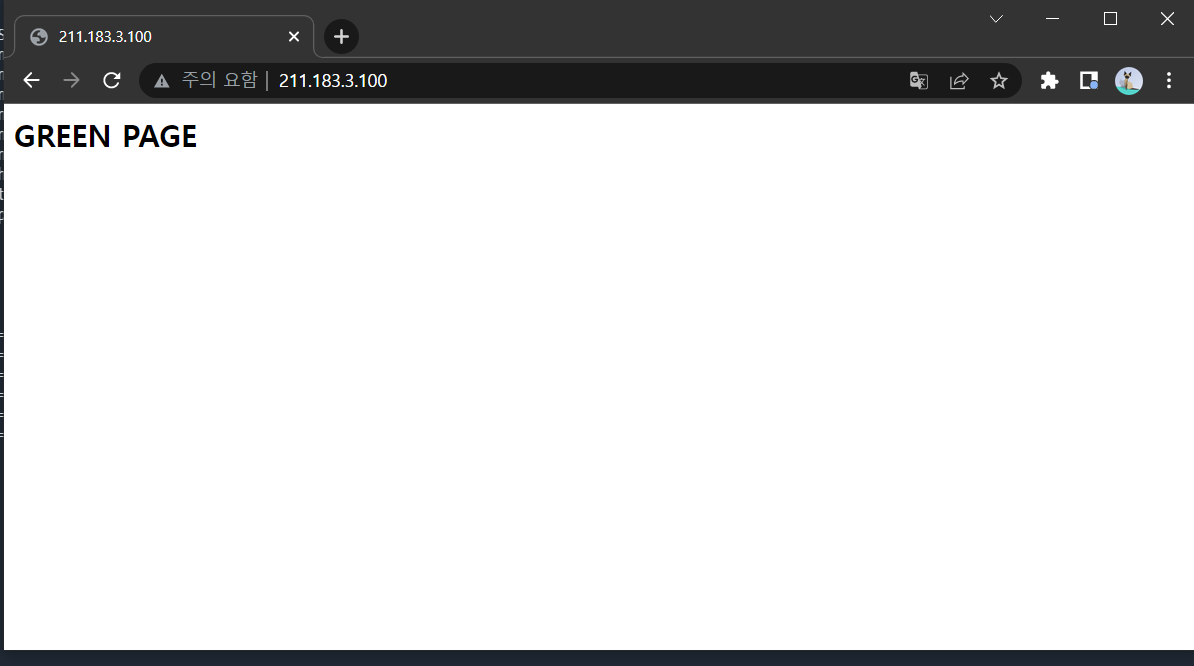

7i94x6t0snw9 \_ web.6 ptah0414/myweb:blue worker1 Shutdown Shutdown about a minute ago - green page 확인

업데이트를 하게되면 기존에 동작하고 있던 컨테이너는 down 상태가 되고, 새로운 이미지로 생성한 컨테이너가 외부에 서비스를 제공하게 된다. 기존 컨테이너의 내용을 업데이트하는

- 롤백

rapa@manager:~/0824/blue$ docker service rollback web

web

rollback: manually requested rollback

overall progress: rolling back update: 6 out of 6 tasks

1/6: running [> ]

2/6: running [> ]

3/6: running [> ]

4/6: running [> ]

5/6: running [> ]

6/6: running [> ]

verify: Service converged

- Deploy

rapa@manager:~/0824/blue$ docker service rm web

web

rapa@manager:~/0824/blue$ docker service create \

> --name web \

> --replicas 6 \

> --update-delay 3s \

> --update-parallelism 3 \

> --with-registry-auth \

> --constraint node.role==worker \

> -p 80:80 \

> ptah0414/myweb:blue

tcac52588bcx9bgheorq20owk

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

-> Updates proceed 3 tasks at a time (--update-parallelism 3)

-> The next 3 are updated after a 3 second delay (--update-delay 3s)
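How --update-parallelism and --update-delay shape an update can be sketched as splitting the tasks into batches and pausing between them. The helper below is a hypothetical illustration of that batching, not Docker's scheduler; it only computes the order and never touches a real service.

```python
def update_batches(task_names, parallelism):
    """Split tasks into consecutive groups of at most `parallelism` items."""
    if parallelism < 1:
        raise ValueError("parallelism must be at least 1 in this sketch")
    return [task_names[i:i + parallelism]
            for i in range(0, len(task_names), parallelism)]


tasks = [f"web.{n}" for n in range(1, 7)]
for index, batch in enumerate(update_batches(tasks, parallelism=3)):
    if index > 0:
        print("wait 3s (update delay)")
    print("update", batch)
```

With six tasks and a parallelism of 3 this yields two batches, matching the notes above.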

- Check the deployment

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

vrqbd20yczyc web.1 ptah0414/myweb:blue worker3 Running Running 22 seconds ago

n9k45px0jq6l web.2 ptah0414/myweb:blue worker1 Running Running 23 seconds ago

iefxoqag6xiw web.3 ptah0414/myweb:blue worker2 Running Running 23 seconds ago

qmclpac2ku1t web.4 ptah0414/myweb:blue worker3 Running Running 23 seconds ago

hzyzdrxrj0h8 web.5 ptah0414/myweb:blue worker1 Running Running 23 seconds ago

z88st9hqwtli web.6 ptah0414/myweb:blue worker2 Running Running 23 seconds ago

- Update

rapa@manager:~/0824/blue$ docker service update --image ptah0414/myweb:green web

image ptah0414/myweb:green could not be accessed on a registry to record

its digest. Each node will access ptah0414/myweb:green independently,

possibly leading to different nodes running different

versions of the image.

web

overall progress: 6 out of 6 tasks

1/6: running [==================================================>]

2/6: running [==================================================>]

3/6: running [==================================================>]

4/6: running [==================================================>]

5/6: running [==================================================>]

6/6: running [==================================================>]

verify: Service converged

-> Updates proceed 3 tasks at a time

-> The next 3 are updated after a 3 second delay

- Check the update

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

de7y45mcq45c web.1 ptah0414/myweb:green worker3 Running Running 2 minutes ago

vrqbd20yczyc \_ web.1 ptah0414/myweb:blue worker3 Shutdown Shutdown 2 minutes ago

ivrt8esu9whl web.2 ptah0414/myweb:green worker1 Running Running about a minute ago

n9k45px0jq6l \_ web.2 ptah0414/myweb:blue worker1 Shutdown Shutdown about a minute ago

qbpdmljtg0sc web.3 ptah0414/myweb:green worker3 Running Running about a minute ago

iefxoqag6xiw \_ web.3 ptah0414/myweb:blue worker2 Shutdown Shutdown about a minute ago

y4ics1vwd2at web.4 ptah0414/myweb:green worker2 Running Running about a minute ago

qmclpac2ku1t \_ web.4 ptah0414/myweb:blue worker3 Shutdown Shutdown about a minute ago

10b05yzkpqyd web.5 ptah0414/myweb:green worker1 Running Running 2 minutes ago

hzyzdrxrj0h8 \_ web.5 ptah0414/myweb:blue worker1 Shutdown Shutdown 2 minutes ago

vip5fxlc7ma6 web.6 ptah0414/myweb:green worker2 Running Running 2 minutes ago

z88st9hqwtli \_ web.6 ptah0414/myweb:blue worker2 Shutdown Shutdown 2 minutes ago

-> The service has been updated to green

- Rollback

rapa@manager:~/0824/blue$ docker service rollback web

web

rollback: manually requested rollback

overall progress: rolling back update: 6 out of 6 tasks

1/6: running [> ]

2/6: running [> ]

3/6: running [> ]

4/6: running [> ]

5/6: running [> ]

6/6: running [> ]

verify: Service converged

-> The rollback proceeds one task at a time

rapa@manager:~/0824/blue$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

7i6n7fxrg88i web.1 ptah0414/myweb:blue worker3 Running Running 2 minutes ago

de7y45mcq45c \_ web.1 ptah0414/myweb:green worker3 Shutdown Shutdown 2 minutes ago

vrqbd20yczyc \_ web.1 ptah0414/myweb:blue worker3 Shutdown Shutdown 6 minutes ago

elv4bbkzz1wu web.2 ptah0414/myweb:blue worker1 Running Running about a minute ago

ivrt8esu9whl \_ web.2 ptah0414/myweb:green worker1 Shutdown Shutdown about a minute ago

n9k45px0jq6l \_ web.2 ptah0414/myweb:blue worker1 Shutdown Shutdown 6 minutes ago

5gxk94jd02g7 web.3 ptah0414/myweb:blue worker3 Running Running about a minute ago

qbpdmljtg0sc \_ web.3 ptah0414/myweb:green worker3 Shutdown Shutdown about a minute ago

iefxoqag6xiw \_ web.3 ptah0414/myweb:blue worker2 Shutdown Shutdown 6 minutes ago

tkmg78692fp5 web.4 ptah0414/myweb:blue worker2 Running Running about a minute ago

y4ics1vwd2at \_ web.4 ptah0414/myweb:green worker2 Shutdown Shutdown about a minute ago

qmclpac2ku1t \_ web.4 ptah0414/myweb:blue worker3 Shutdown Shutdown 6 minutes ago

876rm8wt7hqs web.5 ptah0414/myweb:blue worker1 Running Running 2 minutes ago

10b05yzkpqyd \_ web.5 ptah0414/myweb:green worker1 Shutdown Shutdown 2 minutes ago

hzyzdrxrj0h8 \_ web.5 ptah0414/myweb:blue worker1 Shutdown Shutdown 6 minutes ago

p5wz46lcmsbf web.6 ptah0414/myweb:blue worker2 Running Running about a minute ago

vip5fxlc7ma6 \_ web.6 ptah0414/myweb:green worker2 Shutdown Shutdown about a minute ago

z88st9hqwtli \_ web.6 ptah0414/myweb:blue worker2 Shutdown Shutdown 6 minutes ago

secret/config

- Variables such as -e MYSQL_DATABASE and -e MYSQL_ROOT_PASSWORD have so far been set in compose files or Dockerfiles. These values contain sensitive information, yet they can be read directly from the image.

- Giving every user different environment variables, a different index.html, or a different DB password would mean building a separate image each time. With per-user configs and secrets, passwords and variables can be passed in without building extra images.

- variables, files -> config

- passwords, keys -> secret

secret (mysql_password)

- Create the secret (mysql_password)

rapa@manager:~/0824/blue$ echo "test123" | docker secret create mysql_password -

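p18iglawo0l5lwxgoteogn5jg

-> The value printed by echo is piped (|) into the command, and the trailing - tells docker secret create to read the secret from standard input.

-> In effect, a secret named mysql_password is created with the value test123

- Check the secret (mysql_password)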

rapa@manager:~/0824/blue$ docker secret ls

ID NAME DRIVER CREATED UPDATED

p18iglawo0l5lwxgoteogn5jg mysql_password 33 minutes ago 33 minutes ago

rapa@manager:~/0824/blue$ docker secret inspect mysql_password

[

{

"ID": "p18iglawo0l5lwxgoteogn5jg",

"Version": {

"Index": 434

},

"CreatedAt": "2022-08-24T07:25:16.57046259Z",

"UpdatedAt": "2022-08-24T07:25:16.57046259Z",

"Spec": {

"Name": "mysql_password",

"Labels": {}

}

}

]

-> The password itself is not shown.

-> Once the secret is attached to a container, the mysql_password file can be read from inside that container.

- Deploy the service (sql)

rapa@manager:~/0824/blue$ docker service create \

> --name sql \

> --secret source=mysql_password,target=mysql_root_password \

> --secret source=mysql_password,target=mysql_password \

> -e MYSQL_ROOT_PASSWORD_FILE="/run/secrets/mysql_root_password" \

> -e MYSQL_PASSWORD_FILE="/run/secrets/mysql_password" \

> -e MYSQL_DATABASE="testdb" \

> --constraint node.role==manager \

> mysql:5.7

image mysql:5.7 could not be accessed on a registry to record

its digest. Each node will access mysql:5.7 independently,

possibly leading to different nodes running different

versions of the image.

mztzyaf8p2rc25rvf4nxqmybj

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

- Check the service deployment (sql)

rapa@manager:~/0824/blue$ docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

mztzyaf8p2rc sql replicated 1/1 mysql:5.7

tcac52588bcx web replicated 6/6 ptah0414/myweb:blue *:80->80/tcp

rapa@manager:~/0824/blue$ docker service ps sql

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

retfeio0ydv7 sql.1 mysql:5.7 manager Running Running 13 minutes ago

- Check the secrets inside the container

rapa@manager:~/0824/blue$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6e0d7ec1fd55 mysql:5.7 "docker-entrypoint.s…" 24 minutes ago Up 24 minutes 3306/tcp, 33060/tcp sql.1.retfeio0ydv7jzb3sdlqlv59h

rapa@manager:~/0824/blue$ docker container exec 6e0d7ec1fd55 ls /run/secrets/

mysql_password

mysql_root_password

rapa@manager:~/0824/blue$ docker container exec 6e0d7ec1fd55 cat /run/secrets/mysql_password

test123

rapa@manager:~/0824/blue$ docker container exec 6e0d7ec1fd55 cat /run/secrets/mysql_root_password

test123

-> Inside the container, the password set through the secret is readable as plain text.
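The service above passes MYSQL_ROOT_PASSWORD_FILE and MYSQL_PASSWORD_FILE rather than the passwords themselves, so the value is read from the mounted secret file at start-up. A minimal sketch of how an application could follow the same pattern is shown below. The helper resolve_setting is hypothetical, and a temporary directory stands in for /run/secrets so the example runs anywhere.

```python
import os
import tempfile


def resolve_setting(name, environ=None):
    """Return the value of NAME, preferring the file named by NAME_FILE if set."""
    environ = os.environ if environ is None else environ
    file_path = environ.get(name + "_FILE")
    if file_path:
        with open(file_path, "r", encoding="utf-8") as f:
            # Strip the trailing newline that echo added when the secret was created.
            return f.read().rstrip("\n")
    return environ.get(name)


# Stand-in for /run/secrets/mysql_root_password (assumption for this sketch).
with tempfile.TemporaryDirectory() as secrets_dir:
    secret_file = os.path.join(secrets_dir, "mysql_root_password")
    with open(secret_file, "w", encoding="utf-8") as f:
        f.write("test123\n")
    env = {"MYSQL_ROOT_PASSWORD_FILE": secret_file}
    print(resolve_setting("MYSQL_ROOT_PASSWORD", env))
```

The design choice is that only a file path travels through the environment, so the password itself never appears in the service definition.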

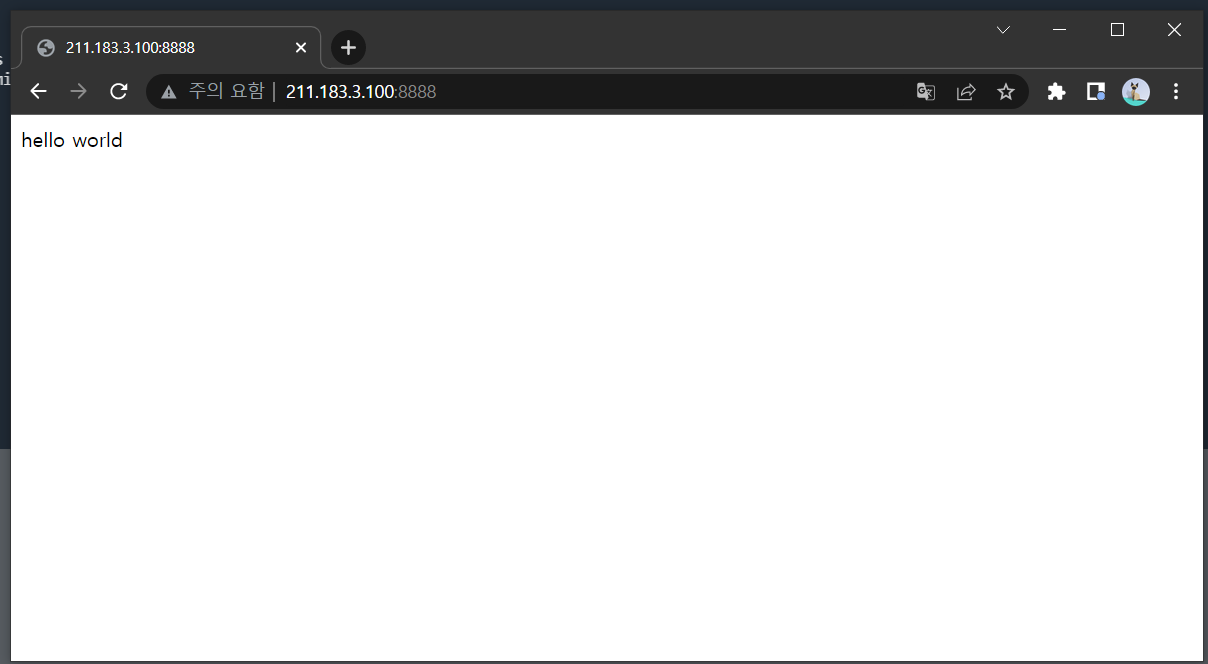

config (index.html)

- Create index.html

rapa@manager:~/0824/blue$ echo "hello world" > index.html

- Create the config

rapa@manager:~/0824/blue$ docker config create webcfg index.html

8cidrybqlf1wkq3mxz18qhway

- Check the config

rapa@manager:~/0824/blue$ docker config ls

ID NAME CREATED UPDATED

8cidrybqlf1wkq3mxz18qhway webcfg 8 seconds ago 8 seconds ago

rapa@manager:~/0824/blue$ docker config inspect webcfg

[

{

"ID": "8cidrybqlf1wkq3mxz18qhway",

"Version": {

"Index": 443

},

"CreatedAt": "2022-08-24T08:06:41.178552434Z",

"UpdatedAt": "2022-08-24T08:06:41.178552434Z",

"Spec": {

"Name": "webcfg",

"Labels": {},

"Data": "aGVsbG8gd29ybGQK"

}

}

]

-> index.html is stored base64-encoded (this is encoding, not encryption).
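Because base64 is only an encoding, anyone who can run inspect can recover the file. As a small sketch, the Data value from the output above can be decoded with Python's standard library:

```python
import base64

# "Data" value copied from the docker config inspect output above.
data_field = "aGVsbG8gd29ybGQK"

decoded = base64.b64decode(data_field).decode("utf-8")
print(repr(decoded))  # 'hello world\n'
```

The trailing newline comes from echo, which wrote it into index.html.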

rapa@manager:~/0824/blue$ echo aGVsbG8gd29ybGQK | base64 -d

hello world

- Deploy an nginx service (index.html supplied through the config)

rapa@manager:~/0824/blue$ docker service create \

> --replicas 1 \

> --constraint node.role==manager \

> --name webcfg \

> -p 8888:80 \

> --config source=webcfg,target=/usr/share/nginx/html/index.html \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

ab5kg04k431e9cabf3o3p73h1

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

-> webcfg (index.html) is placed at the nginx document path.

- Verify the deployment

Connect to port 8888.

Networking in the cluster

- Check the networks

rapa@manager:~/0824/blue$ docker network ls

NETWORK ID NAME DRIVER SCOPE

a5d5d4875529 bridge bridge local

e440baec57b5 docker_gwbridge bridge local

3ead376f089f host host local

f9ffavipvi29 ingress overlay swarm

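39430d1f4412 none null local

The docker_gwbridge and ingress networks have been added.

- docker_gwbridge (bridge, local)

- Affects only the local host.

- Used when the swarm uses overlay networks.

- Serves as the endpoint for outbound traffic and for overlay network traffic.

- ingress (overlay, swarm)

- Operates as a single network across the whole cluster.

- Used for load balancing and the routing mesh; it distributes access to a service's containers in round-robin fashion.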
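Round-robin distribution, as described for the ingress network, can be illustrated with a short Python sketch. This models only the rotation idea, not how swarm implements it internally, and the task names are hypothetical.

```python
from itertools import cycle


def round_robin(tasks, request_count):
    """Assign request_count requests to tasks in strict rotation."""
    rotation = cycle(tasks)
    return [next(rotation) for _ in range(request_count)]


# Hypothetical task names for a 3-replica service.
tasks = ["web.1", "web.2", "web.3"]
assignments = round_robin(tasks, 7)
print(assignments)
```

Each task receives requests in turn, so seven requests over three tasks land as 3, 2, and 2.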

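Containers of services created in swarm mode do not have to use the ingress network to be exposed externally. Just as docker run -p was used to expose a container, a service can be bound to a specific host port. The following bypasses ingress and connects host port 8080 directly to port 80 of the container.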

docker service create \

> --publish mode=host,target=80,published=8080,protocol=tcp --name web nginx
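To make the long-form --publish value easier to read, the sketch below splits it into its fields. It is not Docker's parser, only an illustration of which key means what: published is the host port and target is the container port. The function name parse_publish is hypothetical.

```python
def parse_publish(spec):
    """Parse a long-form publish value such as 'mode=host,target=80,published=8080'."""
    fields = dict(item.split("=", 1) for item in spec.split(","))
    # Port numbers are integers; the other fields stay as strings.
    for key in ("target", "published"):
        if key in fields:
            fields[key] = int(fields[key])
    return fields


spec = "mode=host,target=80,published=8080,protocol=tcp"
print(parse_publish(spec))
```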

- Check the network

rapa@manager:~/0824/blue$ docker network inspect ingress

...

"Peers": [

{

"Name": "34269b156ec4",

"IP": "211.183.3.100"

},

{

"Name": "835a3edbc2cb",

"IP": "211.183.3.101"

},

{

"Name": "bfdece7ae3b8",

"IP": "211.183.3.103"

},

{

"Name": "2dc210cca720",

"IP": "211.183.3.102"

}

]

...

-> The manager and worker1 through worker3 are connected to ingress.

- Create a user-defined overlay network (myoverlay)

rapa@manager:~/0824/blue$ docker network create --subnet 192.168.123.0/24 -d overlay myoverlay

d7sec2t1f898uky6pnczc7f9q

- Deploy an Ubuntu service (ovctn) that uses the overlay network (myoverlay)

rapa@manager:~/0824/blue$ docker service create \

> --name ovctn \

> --network myoverlay \

> --replicas 1 \

> --constraint node.role==manager \

> ubuntu:18.04

Summary

- Switch the worker nodes to run level 3 (CLI)

rapa@worker1:~$ sudo systemctl set-default multi-user.target

[sudo] password for rapa:

Created symlink /etc/systemd/system/default.target → /lib/systemd/system/multi-user.target.

rapa@worker1:~$ sudo reboot

-> The PC was short on RAM, so the workers were switched from GUI to CLI.

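AWS and GCP offer managed container clustering services, EKS and GKE respectively (both are Kubernetes-based). They do not give you a separate manager VM: the provider runs the control plane, and you operate the cluster from a single management VM (console VM) with the client tools installed.

Worker nodes can be provided as a managed service as well.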

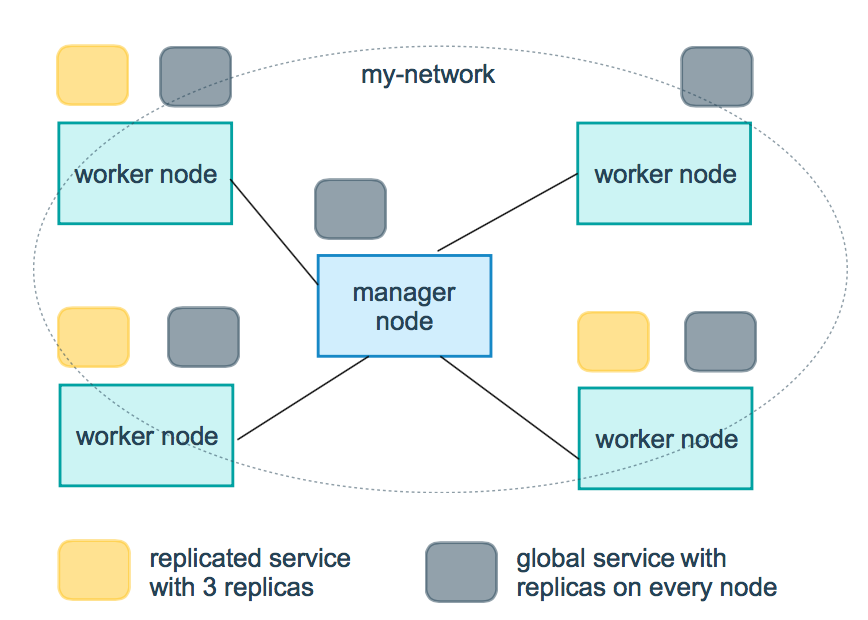

- Two ways to deploy a service

- replicas: runs the specified number of containers. Works well with scale for growing or shrinking the service.

- global: runs exactly one container on each eligible node.

Image source: https://docs.docker.com/engine/swarm/how-swarm-mode-works/services/
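The difference between the two modes comes down to how the number of tasks is decided. A minimal sketch, assuming a hypothetical helper planned_tasks and the three worker nodes used in these notes:

```python
def planned_tasks(mode, eligible_nodes, replicas=1):
    """Return how many tasks a service should run under each mode."""
    if mode == "replicated":
        # The count is whatever was requested, regardless of node count.
        return replicas
    if mode == "global":
        # One task per node that satisfies the placement constraints.
        return len(eligible_nodes)
    raise ValueError(f"unknown mode: {mode}")


workers = ["worker1", "worker2", "worker3"]
print(planned_tasks("replicated", workers, replicas=6))
print(planned_tasks("global", workers))
```

In replicated mode several tasks may share a node, while in global mode adding a node adds a task.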

- Deploy a service (port 80)

rapa@manager:~$ docker service create \

> --name test1 \

> --replicas 3 \

> --constraint node.role!=manager \

> -p 80:80 \

> nginx

25jaro7g3iy1dleokj1rdkq4g

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Verify the deployment

Reachable at .100, .101, .102, and .103, that is, through every node in the swarm.

- scale 1

rapa@manager:~$ docker service scale test1=1

test1 scaled to 1

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service converged

- Verify the deployment

rapa@manager:~$ docker service ps test1

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

5x4qtax22071 test1.1 nginx:latest worker2 Running Running 2 minutes ago

-> Running on worker2.

[worker2]

rapa@worker2:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

560fa1c7d938 nginx:latest "/docker-entrypoint.…" 2 minutes ago Up 2 minutes 80/tcp test1.1.5x4qtax220711khk19chxcrho

-> PORTS: 80/tcp

-> A service created in swarm is not tied to the host's physical interface.

- Check the container's IP

rapa@worker2:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

560fa1c7d938 nginx:latest "/docker-entrypoint.…" 5 minutes ago Up 5 minutes 80/tcp test1.1.5x4qtax220711khk19chxcrho

rapa@worker2:~$ docker container inspect 56

...

"Networks": {

"ingress": {

"IPAMConfig": {

"IPv4Address": "10.0.0.4"

},

"Links": null,

"Aliases": [

"560fa1c7d938"

],

"NetworkID": "f9ffavipvi297sshgrpbh714c",

"EndpointID": "110ee35c8de250f1facb9e567f4fc29e852ed597c87101aea96cd18ef17699ab",

"Gateway": "",

"IPAddress": "10.0.0.4",

"IPPrefixLen": 24,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:0a:00:00:04",

"DriverOpts": null

}

}

-> Connected to ingress, the container was automatically assigned the IP address 10.0.0.4.

- Check the ingress network

rapa@manager:~$ docker network inspect ingress

[

{

"Name": "ingress",

"Id": "f9ffavipvi297sshgrpbh714c",

"Created": "2022-08-25T08:52:19.496230617+09:00",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

-> The network is 10.0.0.0/24.

- Check the VIP (virtual IP)

rapa@manager:~$ docker service inspect test1

...

"Endpoint": {

"Spec": {

"Mode": "vip",

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 80,

"PublishedPort": 80,

"PublishMode": "ingress"

}

]

},

"Ports": [

{

"Protocol": "tcp",

"TargetPort": 80,

"PublishedPort": 80,

"PublishMode": "ingress"

}

],

"VirtualIPs": [

{

"NetworkID": "f9ffavipvi297sshgrpbh714c",

"Addr": "10.0.0.3/24"

}

]

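}

-> vip: 10.0.0.3

-> When a service is created without specifying a network (and publishes a port, as here), its containers are attached to the ingress network in the cluster, and addresses are assigned dynamically by the network's built-in address management, much as DHCP would.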
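The addresses seen in the inspect outputs can be cross-checked with Python's ipaddress module. This is just a sanity check on the values shown above: the container IP 10.0.0.4 and the VIP 10.0.0.3/24 should both fall inside the ingress subnet 10.0.0.0/24.

```python
import ipaddress

ingress = ipaddress.ip_network("10.0.0.0/24")    # "Subnet" from docker network inspect
container_ip = ipaddress.ip_address("10.0.0.4")  # address of the task container
vip = ipaddress.ip_interface("10.0.0.3/24")      # "Addr" from VirtualIPs

print(container_ip in ingress)
print(vip.ip in ingress, vip.network == ingress)
print(ingress.num_addresses)
```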

- scale 3

rapa@manager:~$ docker service scale test1=3

test1 scaled to 3

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Pretty-printing inspect output (--pretty)

rapa@manager:~$ docker service inspect test1 --pretty

ID: 25jaro7g3iy1dleokj1rdkq4g

Name: test1

Service Mode: Replicated

Replicas: 3

Placement:

Constraints: [node.role!=manager]

UpdateConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

RollbackConfig:

Parallelism: 1

On failure: pause

Monitoring Period: 5s

Max failure ratio: 0

Rollback order: stop-first

ContainerSpec:

Image: nginx:latest@sha256:b95a99feebf7797479e0c5eb5ec0bdfa5d9f504bc94da550c2f58e839ea6914f

Init: false

Resources:

Endpoint Mode: vip

Ports:

PublishedPort = 80

Protocol = tcp

TargetPort = 80

PublishMode = ingress

- Update settings

UpdateConfig:

Parallelism: 1 # one task is updated at a time

On failure: pause # if an error occurs during the update, the update is paused

Monitoring Period: 5s

Max failure ratio: 0

Update order: stop-first

Parallelism: 1 -> one task is updated at a time

On failure: pause -> if an error occurs during the update, the update is paused

On failure: continue -> the update continues even if an error occurs
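How Parallelism and On failure interact can be sketched with a small simulation. This is a simplified model under stated assumptions: it ignores the monitoring period and failure ratio, and the function rolling_update and the set of failing tasks are hypothetical.

```python
def rolling_update(tasks, parallelism, failure_action, failing=()):
    """Simulate updating tasks in batches; return (updated, failed, paused)."""
    updated, failed = [], []
    for start in range(0, len(tasks), parallelism):
        batch = tasks[start:start + parallelism]
        batch_failed = [t for t in batch if t in failing]
        updated.extend(t for t in batch if t not in failing)
        failed.extend(batch_failed)
        if batch_failed and failure_action == "pause":
            # Stop here; later batches keep running the old image.
            return updated, failed, True
    return updated, failed, False


tasks = ["test1.1", "test1.2", "test1.3"]
print(rolling_update(tasks, 1, "pause", failing={"test1.2"}))
print(rolling_update(tasks, 1, "continue", failing={"test1.2"}))
```

With pause, test1.3 is never touched after test1.2 fails; with continue, the update moves on and test1.3 is updated.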

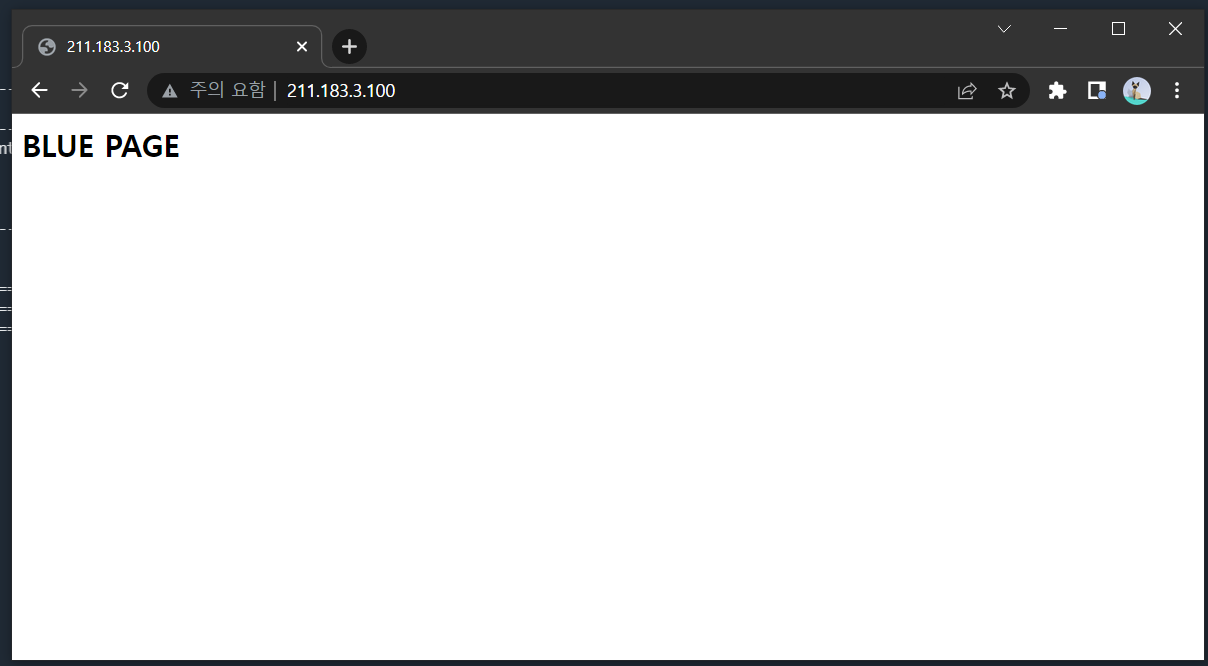

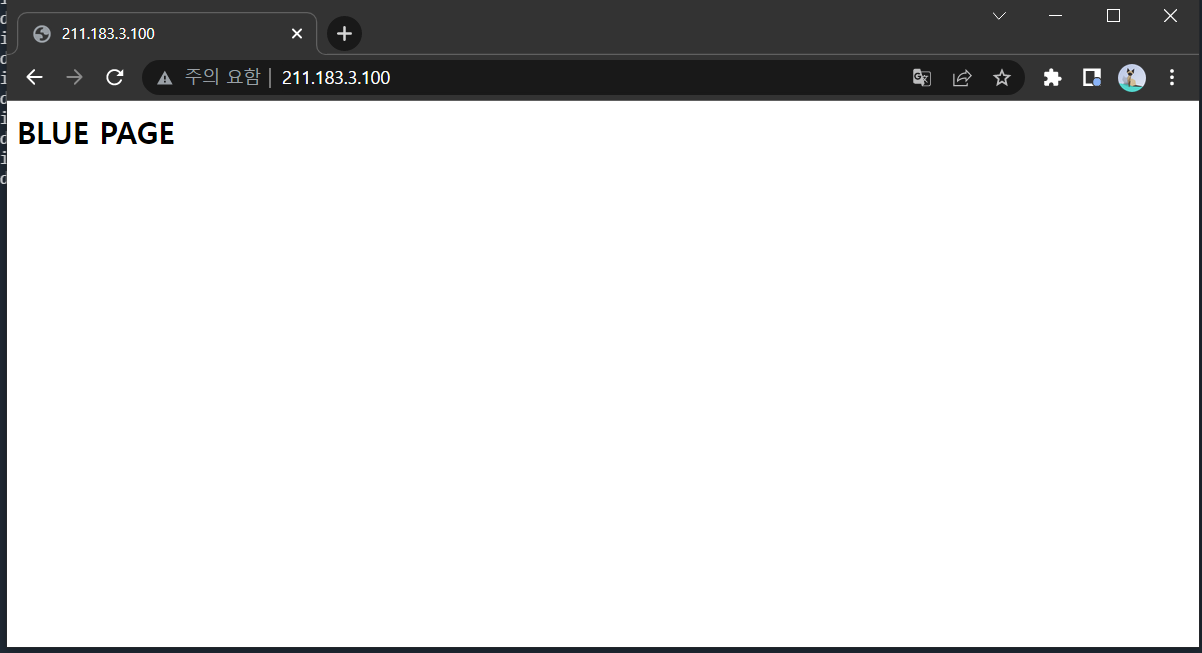

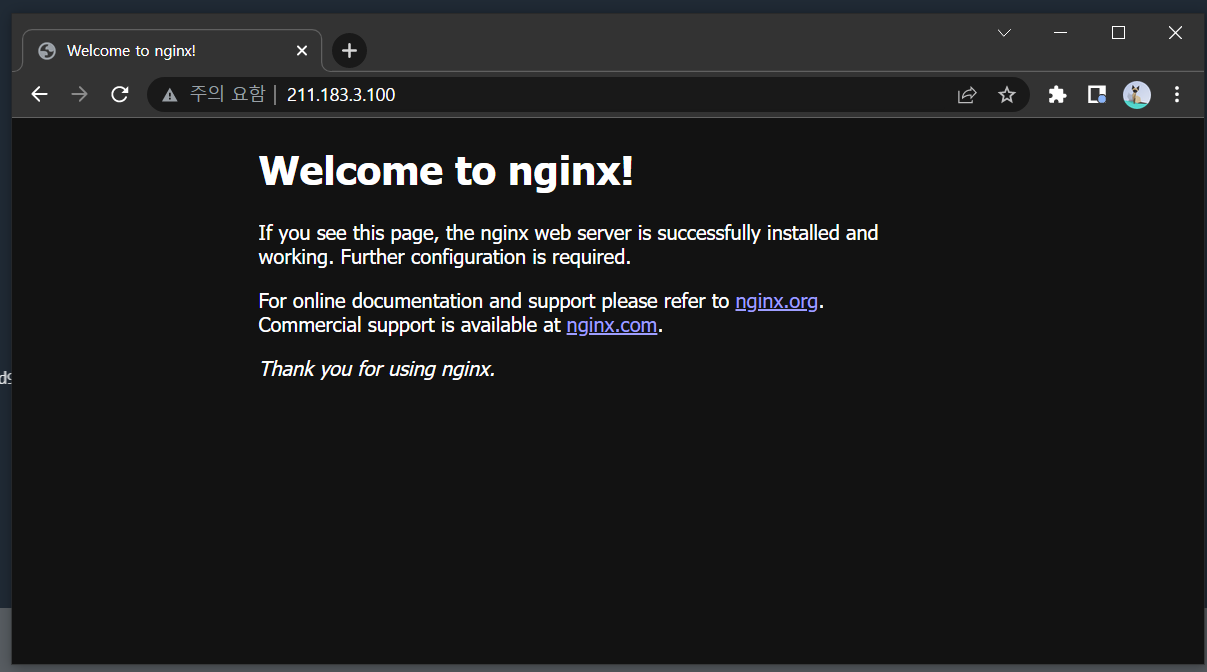

- Update

rapa@manager:~$ docker service update \

> --image ptah0414/myweb:blue \

> test1

test1

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged -> test1 서비스의 이미지를 ptah0414/myweb:blue 이미지로 변경

-

업데이트 확인

-

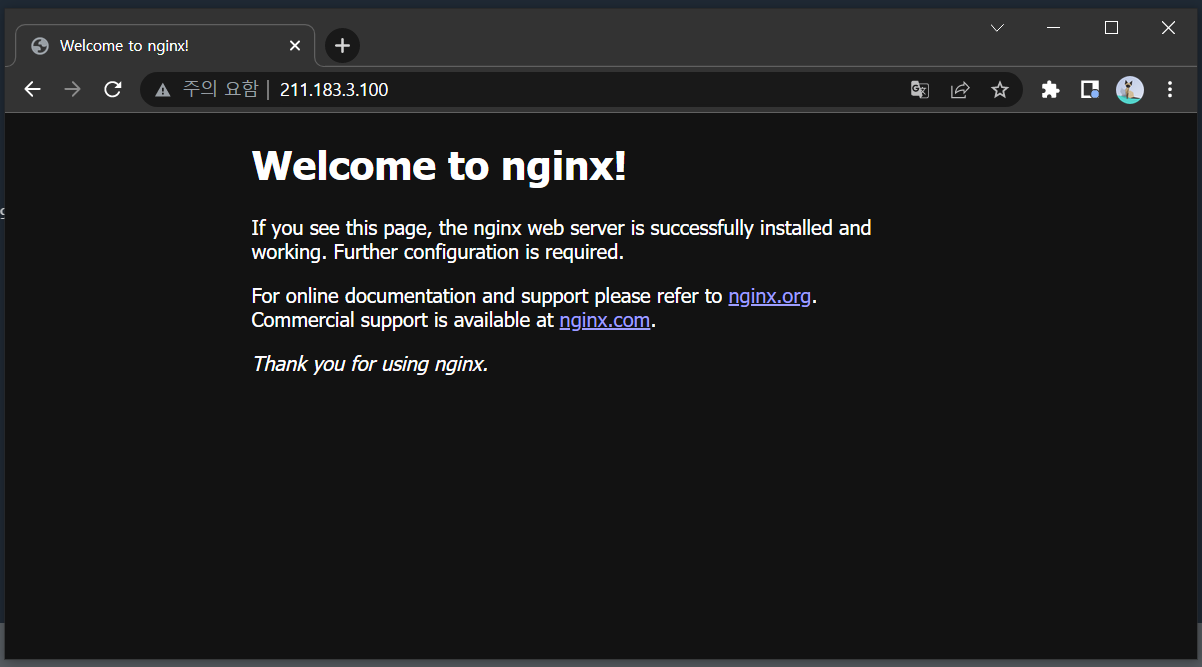

롤백

rapa@manager:~$ docker service rollback test1

test1

rollback: manually requested rollback

overall progress: rolling back update: 3 out of 3 tasks

1/3: running [> ]

2/3: running [> ]

3/3: running [> ]

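verify: Service converged

- Verify the rollback

-> Rolled back to the nginx image.

- config/secret

- Instead of specifying a password or declaring variables directly in the command as before, a separate config or secret object is created and then applied to the container.

- config -> files with low sensitivity, such as registry configuration files, variable declarations, and index.html

- secret -> DB passwords, SSH keys, certificates

- Create a secret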

rapa@manager:~$ echo "test1234" | docker secret create testsecret -

3ykyp5a0lg68uovan8a8ikd58

rapa@manager:~$ docker secret ls

ID NAME DRIVER CREATED UPDATED

3ykyp5a0lg68uovan8a8ikd58 testsecret 54 seconds ago 54 seconds ago

- Create a config

rapa@manager:~$ docker config create testconfig index.html

1h4e95j4d7kdkf1nsjs69d0bw

rapa@manager:~$ docker config ls

ID NAME CREATED UPDATED

1h4e95j4d7kdkf1nsjs69d0bw testconfig 17 seconds ago 17 seconds ago

- Check the secret

rapa@manager:~$ docker secret inspect testsecret

[

{

"ID": "3ykyp5a0lg68uovan8a8ikd58",

"Version": {

"Index": 9137

},

"CreatedAt": "2022-08-25T01:45:34.173744442Z",

"UpdatedAt": "2022-08-25T01:45:34.173744442Z",

"Spec": {

"Name": "testsecret",

"Labels": {}

}

}

]

-> For a secret, Data is not shown.

- Check the config

rapa@manager:~$ docker config inspect testconfig

[

{

"ID": "1h4e95j4d7kdkf1nsjs69d0bw",

"Version": {

"Index": 9139

},

"CreatedAt": "2022-08-25T01:47:14.743924604Z",

"UpdatedAt": "2022-08-25T01:47:14.743924604Z",

"Spec": {

"Name": "testconfig",

"Labels": {},

"Data": "SEVMTE8gQUxMCg=="

}

}

]

-> Unlike the secret, Data is visible!

- Decode the config's Data

rapa@manager:~$ echo "SEVMTE8gQUxMCg==" | base64 -d

HELLO ALL

By default, a secret is kept as a file under the /run/secrets directory of the container, and inside the container it is readable as plain text.

- Deploy a service with the secret (sql)

rapa@manager:~$ docker service create \

> --name sql \

> --replicas 3 \

> --constraint node.role==worker \

> --secret source=testsecret,target=testsecret \

> -e MYSQL_ROOT_PASSWORD_FILE="/run/secrets/testsecret" \

> -e MYSQL_DATABASE=testdb \

> mysql:5.7

image mysql:5.7 could not be accessed on a registry to record

its digest. Each node will access mysql:5.7 independently,

possibly leading to different nodes running different

versions of the image.

losy34b17r414cc3r8oetpp52

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Check the secret file

[worker]

rapa@worker1:~$ docker container ls

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d866dd356b06 mysql:5.7 "docker-entrypoint.s…" 11 minutes ago Up 11 minutes 3306/tcp, 33060/tcp sql.2.8uw441ssw1i6cqs4ioqt5lf25rapa@worker1:~$ docker container exec d8 ls /run/secrets

testsecret- secret 파일 내용 확인

rapa@worker1:~$ docker container exec d8 cat /run/secrets/testsecret

test1234-> 컨테이너 안에 평문으로 저장되어있다.

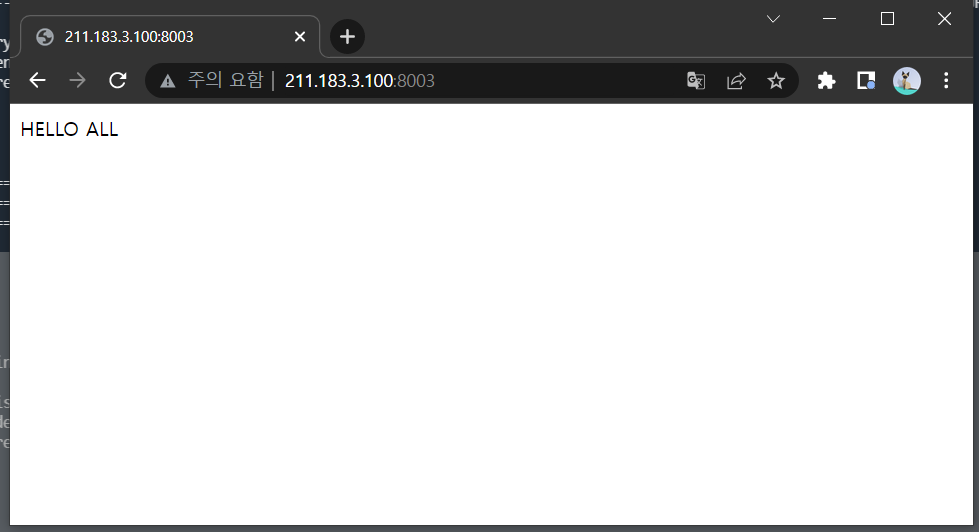

- config(index.html)와 함께 서비스 배포 (nginx1)

rapa@manager:~$ docker service create \

> --name nginx1 \

> --replicas 3 \

> --constraint node.role==worker \

> -p 8003:80 \

> --config source=testconfig,target=/usr/share/nginx/html/index.html \

> nginx

image nginx:latest could not be accessed on a registry to record

its digest. Each node will access nginx:latest independently,

possibly leading to different nodes running different

versions of the image.

j70rnz3bqq9gn2dus83bdb86a

overall progress: 3 out of 3 tasks

1/3: running [==================================================>]

2/3: running [==================================================>]

3/3: running [==================================================>]

verify: Service converged

- Verify the deployment

Connect to port 8003.

-> The index.html containing "HELLO ALL" that was stored in the config should be displayed.