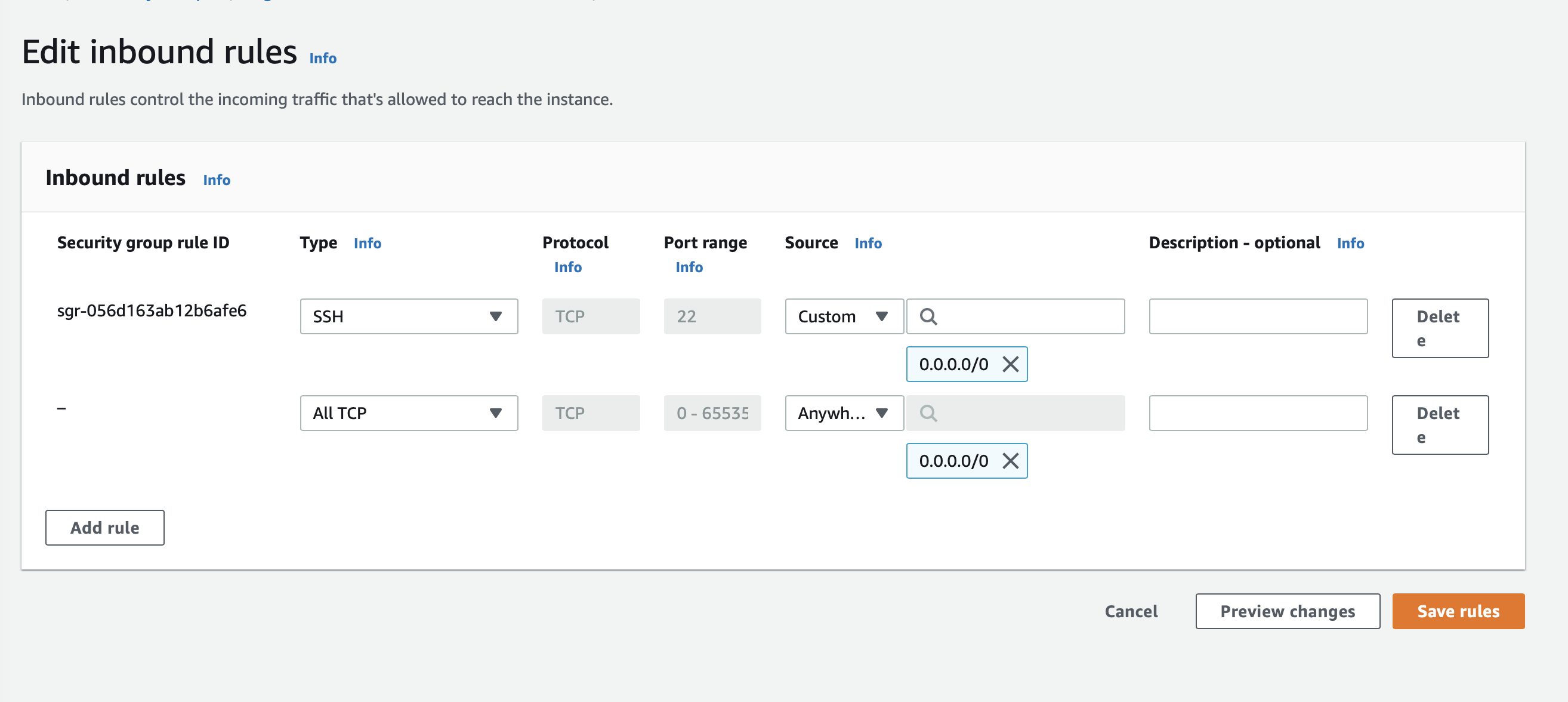

- Prepare the instances

ubuntu@ip-172-31-1-65:~$ sudo hostnamectl set-hostname gunwoo-k8s-hardway-master-1

ubuntu@ip-172-31-1-65:~$ sudo -i

root@gunwoo-k8s-hardway-master-1:~# cat << EOF >> /etc/hosts

> 127.0.0.1 localhost

> 172.31.1.65 gunwoo-k8s-hardway-master-1

> 172.31.7.230 gunwoo-k8s-hardway-master-2

> 172.31.15.197 gunwoo-k8s-hardway-master-3

> 172.31.3.25 gunwoo-k8s-hardway-worker-1

> 172.31.2.183 gunwoo-k8s-hardway-worker-2

> 172.31.11.91 gunwoo-k8s-hardway-worker-3

> EOF
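`cat << EOF >>` appends unconditionally. Rerunning it, or running it on an image that already maps `127.0.0.1 localhost`, produces duplicate lines, which is why `127.0.0.1 localhost` appears twice in the `cat /etc/hosts` output below. The following is a minimal sketch of an idempotent alternative in POSIX shell. `add_host_entry` is a hypothetical helper and is not part of the original steps.

```shell
# Append "IP HOSTNAME" to a hosts file only if that pair is not already present.
# add_host_entry is a hypothetical helper.
#   $1 = hosts file, $2 = IP address, $3 = hostname
add_host_entry() {
  # awk exits 0 when some line has the IP as field 1 and the name in a later field.
  if awk -v ip="$2" -v name="$3" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$1" 2>/dev/null; then
    return 0  # entry already present, nothing to do
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}

# Usage (as root), with the same entries as the heredoc above:
#   add_host_entry /etc/hosts 127.0.0.1 localhost
#   add_host_entry /etc/hosts 172.31.1.65 gunwoo-k8s-hardway-master-1
#   add_host_entry /etc/hosts 172.31.3.25 gunwoo-k8s-hardway-worker-1
```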

root@gunwoo-k8s-hardway-master-1:~# cat /etc/hosts

127.0.0.1 localhost

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

ff02::3 ip6-allhosts

127.0.0.1 localhost

172.31.1.65 gunwoo-k8s-hardway-master-1

172.31.7.230 gunwoo-k8s-hardway-master-2

172.31.15.197 gunwoo-k8s-hardway-master-3

172.31.3.25 gunwoo-k8s-hardway-worker-1

172.31.2.183 gunwoo-k8s-hardway-worker-2

172.31.11.91 gunwoo-k8s-hardway-worker-3

- Generate the certificates (on the gunwoo-auth-basic instance)

root@gunwoo-auth-basic:~# mkdir hardway

root@gunwoo-auth-basic:~# cd hardway/

root@gunwoo-auth-basic:~/hardway# mkdir cert

root@gunwoo-auth-basic:~/hardway# cd cert/

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out ca.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.................................................................................................................+++++

....................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert# # Create CSR using the private key

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key ca.key -subj "/CN=KUBERNETES-CA" -out ca.csr

root@gunwoo-auth-basic:~/hardway/cert# # Self sign the csr using its own private key

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in ca.csr -signkey ca.key -CAcreateserial -out ca.crt -days 3650

Signature ok

subject=CN = KUBERNETES-CA

Getting Private key

root@gunwoo-auth-basic:~/hardway/cert# # Generate private key for admin user

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out admin.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.......................+++++

................................................................................................................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert# # Generate CSR for admin user. Note the O (organization) field, which Kubernetes maps to the user's group.

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key admin.key -subj "/CN=admin/O=system:masters" -out admin.csr

root@gunwoo-auth-basic:~/hardway/cert# # Sign certificate for admin user using the CA's private key

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in admin.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out admin.crt -days 365

Signature ok

subject=CN = admin, O = system:masters

Getting CA Private Key

root@gunwoo-auth-basic:~/hardway/cert# # kube-controller-manager

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out kube-controller-manager.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

..........+++++

................................................................................................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key kube-controller-manager.key -subj "/CN=system:kube-controller-manager" -out kube-controller-manager.csr

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in kube-controller-manager.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kube-controller-manager.crt -days 365

Signature ok

subject=CN = system:kube-controller-manager

Getting CA Private Key
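Every component uses the same key, CSR, and signing commands. Only the file name and subject change, and the sequence repeats below for kube-proxy and kube-scheduler. The following is a sketch of a wrapper. It assumes `ca.crt` and `ca.key` are in the current directory, as they are in this session. `issue_client_cert` is a hypothetical name.

```shell
# Create a 2048-bit key, a CSR, and a CA-signed certificate for one component,
# using the same genrsa / req / x509 -req commands as above.
# Assumes ca.crt and ca.key are in the current directory.
# issue_client_cert is a hypothetical helper.
#   $1 = file prefix (e.g. kube-proxy)
#   $2 = subject     (e.g. "/CN=system:kube-proxy")
#   $3 = validity in days (defaults to 365)
issue_client_cert() {
  openssl genrsa -out "$1.key" 2048 &&
  openssl req -new -key "$1.key" -subj "$2" -out "$1.csr" &&
  openssl x509 -req -in "$1.csr" -CA ca.crt -CAkey ca.key \
    -CAcreateserial -out "$1.crt" -days "${3:-365}"
}

# Usage, equivalent to the kube-proxy and kube-scheduler steps that follow:
#   issue_client_cert kube-proxy "/CN=system:kube-proxy"
#   issue_client_cert kube-scheduler "/CN=system:kube-scheduler"
```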

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# # kube-proxy

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out kube-proxy.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.............+++++

.....................................................................................................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key kube-proxy.key -subj "/CN=system:kube-proxy" -out kube-proxy.csr

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in kube-proxy.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kube-proxy.crt -days 365

Signature ok

subject=CN = system:kube-proxy

Getting CA Private Key

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# # kube-scheduler

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out kube-scheduler.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

................................................+++++

...................................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key kube-scheduler.key -subj "/CN=system:kube-scheduler" -out kube-scheduler.csr

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in kube-scheduler.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kube-scheduler.crt -days 365

Signature ok

subject=CN = system:kube-scheduler

Getting CA Private Key

root@gunwoo-auth-basic:~/hardway/cert# ll

total 72

drwxr-xr-x 2 root root 4096 Oct 11 01:09 ./

drwxr-xr-x 3 root root 4096 Oct 11 01:06 ../

-rw-r--r-- 1 root root 1025 Oct 11 01:08 admin.crt

-rw-r--r-- 1 root root 920 Oct 11 01:08 admin.csr

-rw------- 1 root root 1679 Oct 11 01:08 admin.key

-rw-r--r-- 1 root root 1001 Oct 11 01:07 ca.crt

-rw-r--r-- 1 root root 895 Oct 11 01:07 ca.csr

-rw------- 1 root root 1679 Oct 11 01:07 ca.key

-rw-r--r-- 1 root root 41 Oct 11 01:09 ca.srl

-rw-r--r-- 1 root root 1025 Oct 11 01:09 kube-controller-manager.crt

-rw-r--r-- 1 root root 920 Oct 11 01:09 kube-controller-manager.csr

-rw------- 1 root root 1675 Oct 11 01:09 kube-controller-manager.key

-rw-r--r-- 1 root root 1009 Oct 11 01:09 kube-proxy.crt

-rw-r--r-- 1 root root 903 Oct 11 01:09 kube-proxy.csr

-rw------- 1 root root 1675 Oct 11 01:09 kube-proxy.key

-rw-r--r-- 1 root root 1013 Oct 11 01:09 kube-scheduler.crt

-rw-r--r-- 1 root root 907 Oct 11 01:09 kube-scheduler.csr

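-rw------- 1 root root 1679 Oct 11 01:09 kube-scheduler.key

This produced 10 certificate and key files: a certificate (.crt) and private key (.key) for the CA and for each of the four clients (admin, kube-controller-manager, kube-proxy, kube-scheduler). The directory also holds the CSRs and the CA serial file (ca.srl).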
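You can check that each certificate chains to the CA, and see when it expires, with `openssl verify` and `openssl x509 -enddate`. The CA was signed for 3650 days and the component certificates for 365. The following is a sketch. `check_certs` is a hypothetical helper that returns non-zero if any certificate fails verification.

```shell
# For each certificate, verify it against the given CA and print its expiry.
# check_certs is a hypothetical helper; it returns 1 if any verification fails.
#   $1 = CA certificate, remaining args = certificates to check
check_certs() {
  ca=$1; shift
  status=0
  for crt in "$@"; do
    if openssl verify -CAfile "$ca" "$crt" >/dev/null 2>&1; then
      result=OK
    else
      result=FAILED
      status=1
    fi
    # -enddate prints "notAfter=<date>"
    printf '%s %s %s\n' "$crt" "$result" "$(openssl x509 -in "$crt" -noout -enddate)"
  done
  return $status
}

# Usage in the cert directory:
#   check_certs ca.crt admin.crt kube-controller-manager.crt kube-proxy.crt kube-scheduler.crt
```

If everything was signed correctly, the command should print one `OK` line per file, followed by its `notAfter=` date.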

root@gunwoo-auth-basic:~/hardway/cert# # Use the internal IP of master 1

root@gunwoo-auth-basic:~/hardway/cert# export KUBE_API_SERVER_ADDRESS=172.31.1.65

root@gunwoo-auth-basic:~/hardway/cert# export KUBE_CLUSTER_NAME=gunwoo-k8s-hardway

root@gunwoo-auth-basic:~/hardway/cert# # Download the kubectl binary

root@gunwoo-auth-basic:~/hardway/cert# wget -q --show-progress --https-only --timestamping "https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubectl"

kubectl 100%[=====================================================================================>] 40.96M 48.8MB/s in 0.8s

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# {

> chmod +x kubectl

> sudo mv kubectl /usr/local/bin/

> }

Create a kubeconfig for each component from its client certificate and key.

root@gunwoo-auth-basic:~/hardway/cert# {

> kubectl config set-cluster ${KUBE_CLUSTER_NAME} \

> --certificate-authority=ca.crt \

> --embed-certs=true \

> --server=https://${KUBE_API_SERVER_ADDRESS}:6443 \

> --kubeconfig=kube-proxy.kubeconfig

>

> kubectl config set-credentials system:kube-proxy \

> --client-certificate=kube-proxy.crt \

> --client-key=kube-proxy.key \

> --embed-certs=true \

> --kubeconfig=kube-proxy.kubeconfig

>

> kubectl config set-context default \

> --cluster=${KUBE_CLUSTER_NAME} \

> --user=system:kube-proxy \

> --kubeconfig=kube-proxy.kubeconfig

>

> kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

> }

Cluster "gunwoo-k8s-hardway" set.

User "system:kube-proxy" set.

Context "default" created.

Switched to context "default".

root@gunwoo-auth-basic:~/hardway/cert# {

> kubectl config set-cluster ${KUBE_CLUSTER_NAME} \

> --certificate-authority=ca.crt \

> --embed-certs=true \

> --server=https://${KUBE_API_SERVER_ADDRESS}:6443 \

> --kubeconfig=kube-controller-manager.kubeconfig

>

> kubectl config set-credentials system:kube-controller-manager \

> --client-certificate=kube-controller-manager.crt \

> --client-key=kube-controller-manager.key \

> --embed-certs=true \

> --kubeconfig=kube-controller-manager.kubeconfig

>

> kubectl config set-context default \

> --cluster=${KUBE_CLUSTER_NAME} \

> --user=system:kube-controller-manager \

> --kubeconfig=kube-controller-manager.kubeconfig

>

> kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

> }

Cluster "gunwoo-k8s-hardway" set.

User "system:kube-controller-manager" set.

Context "default" created.

Switched to context "default".

root@gunwoo-auth-basic:~/hardway/cert# {

> kubectl config set-cluster ${KUBE_CLUSTER_NAME} \

> --certificate-authority=ca.crt \

> --embed-certs=true \

> --server=https://${KUBE_API_SERVER_ADDRESS}:6443 \

> --kubeconfig=kube-scheduler.kubeconfig

>

> kubectl config set-credentials system:kube-scheduler \

> --client-certificate=kube-scheduler.crt \

> --client-key=kube-scheduler.key \

> --embed-certs=true \

> --kubeconfig=kube-scheduler.kubeconfig

>

> kubectl config set-context default \

> --cluster=${KUBE_CLUSTER_NAME} \

> --user=system:kube-scheduler \

> --kubeconfig=kube-scheduler.kubeconfig

>

> kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

> }

Cluster "gunwoo-k8s-hardway" set.

User "system:kube-scheduler" set.

Context "default" created.

Switched to context "default".

root@gunwoo-auth-basic:~/hardway/cert# {

> kubectl config set-cluster ${KUBE_CLUSTER_NAME} \

> --certificate-authority=ca.crt \

> --embed-certs=true \

> --server=https://${KUBE_API_SERVER_ADDRESS}:6443 \

> --kubeconfig=admin.kubeconfig

>

> kubectl config set-credentials admin \

> --client-certificate=admin.crt \

> --client-key=admin.key \

> --embed-certs=true \

> --kubeconfig=admin.kubeconfig

>

> kubectl config set-context default \

> --cluster=${KUBE_CLUSTER_NAME} \

> --user=admin \

> --kubeconfig=admin.kubeconfig

>

> kubectl config use-context default --kubeconfig=admin.kubeconfig

> }

Cluster "gunwoo-k8s-hardway" set.

User "admin" set.

Context "default" created.

Switched to context "default".

root@gunwoo-auth-basic:~/hardway/cert# mkdir -p ~/.kube

root@gunwoo-auth-basic:~/hardway/cert# cp -rf admin.kubeconfig ~/.kube/config

- Create the OpenSSL config with the SANs (subjectAltName) for the API server certificate

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# cat > openssl-kube-apiserver.cnf <<EOF

> [req]

> req_extensions = v3_req

> distinguished_name = req_distinguished_name

> [req_distinguished_name]

> [ v3_req ]

> basicConstraints = CA:FALSE

> keyUsage = nonRepudiation, digitalSignature, keyEncipherment

> subjectAltName = @alt_names

> [alt_names]

> DNS.1 = kubernetes

> DNS.2 = kubernetes.default

> DNS.3 = kubernetes.default.svc

> DNS.4 = kubernetes.default.svc.cluster.local

> IP.1 = 10.231.0.1

> IP.2 = 172.31.1.65

> IP.3 = 172.31.7.230

> IP.4 = 172.31.15.197

> IP.5 = 127.0.0.1

> EOF

IP.2 to IP.4 are the IPs of masters 1, 2 and 3.
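The apiserver and etcd configs, and later the per-worker kubelet configs, share the same `[req]` and `[v3_req]` sections. They differ only in `[alt_names]`. The following POSIX shell sketch prints that section from two lists, so each set of SANs is maintained in one place. `alt_names_section` is a hypothetical helper. In the list above, `10.231.0.1` is the first address of the `--service-cluster-ip-range=10.231.0.0/18` given to kube-apiserver. That address becomes the ClusterIP of the built-in `kubernetes` Service, which the `kubectl get svc` output confirms.

```shell
# Print an OpenSSL [alt_names] section.
# alt_names_section is a hypothetical helper; it only writes to stdout.
#   $1 = space-separated DNS names, $2 = space-separated IP addresses
alt_names_section() {
  echo "[alt_names]"
  i=1
  for name in $1; do        # unquoted on purpose: split the list on spaces
    echo "DNS.$i = $name"
    i=$((i + 1))
  done
  i=1
  for ip in $2; do
    echo "IP.$i = $ip"
    i=$((i + 1))
  done
}

# Usage: reproduce the kube-apiserver SAN list used above.
#   alt_names_section \
#     "kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local" \
#     "10.231.0.1 172.31.1.65 172.31.7.230 172.31.15.197 127.0.0.1"
```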

- API server certificate

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out kube-apiserver.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

..............................+++++

.............+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key kube-apiserver.key -subj "/CN=kube-apiserver" -out kube-apiserver.csr -config openssl-kube-apiserver.cnf

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in kube-apiserver.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out kube-apiserver.crt -extensions v3_req -extfile openssl-kube-apiserver.cnf -days 365

Signature ok

subject=CN = kube-apiserver

Getting CA Private Key

- Check the SANs in the generated certificate (kube-apiserver.crt)

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -in kube-apiserver.crt -noout -text

- Generate the etcd certificate

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# cat > openssl-etcd.cnf <<EOF

> [req]

> req_extensions = v3_req

> distinguished_name = req_distinguished_name

> [req_distinguished_name]

> [ v3_req ]

> basicConstraints = CA:FALSE

> keyUsage = nonRepudiation, digitalSignature, keyEncipherment

> subjectAltName = @alt_names

> [alt_names]

> IP.1 = 172.31.1.65

> IP.2 = 172.31.7.230

> IP.3 = 172.31.15.197

> IP.4 = 127.0.0.1

> EOF

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out etcd-server.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

........................................................+++++

......................................................................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key etcd-server.key -subj "/CN=etcd-server" -out etcd-server.csr -config openssl-etcd.cnf

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in etcd-server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out etcd-server.crt -extensions v3_req -extfile openssl-etcd.cnf -days 3650

Signature ok

subject=CN = etcd-server

Getting CA Private Key

- Check the SANs in the generated certificate (etcd-server.crt)

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -in etcd-server.crt -noout -text

- Generate the Service Account key pair

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out service-account.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

......................+++++

..........+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key service-account.key -subj "/CN=service-accounts" -out service-account.csr

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in service-account.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out service-account.crt -days 1

Signature ok

subject=CN = service-accounts

Getting CA Private Key

- Save the SSH private key used to log in to the instances as gunwoo.pem (key contents omitted here) and restrict its permissions

root@gunwoo-auth-basic:~/hardway/cert# vi gunwoo.pem

root@gunwoo-auth-basic:~/hardway/cert# chmod 400 gunwoo.pem

root@gunwoo-auth-basic:~/hardway/cert# for instance in 172.31.1.65 172.31.7.230 172.31.15.197; do

> scp -i gunwoo.pem ca.crt ca.key kube-apiserver.key kube-apiserver.crt \

> service-account.key service-account.crt \

> etcd-server.key etcd-server.crt \

> ubuntu@${instance}:~/

> done

The authenticity of host '172.31.1.65 (172.31.1.65)' can't be established.

ECDSA key fingerprint is SHA256:27gsQ8TuotxQnWbSQm/JSxYm87Zfm5JwTPT+iawkQco.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.1.65' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.3MB/s 00:00

ca.key 100% 1679 2.3MB/s 00:00

kube-apiserver.key 100% 1679 1.3MB/s 00:00

kube-apiserver.crt 100% 1237 932.3KB/s 00:00

service-account.key 100% 1679 1.3MB/s 00:00

service-account.crt 100% 1005 210.8KB/s 00:00

etcd-server.key 100% 1679 1.3MB/s 00:00

etcd-server.crt 100% 1090 617.3KB/s 00:00

The authenticity of host '172.31.7.230 (172.31.7.230)' can't be established.

ECDSA key fingerprint is SHA256:g22Qvv90n+lEr99d7I8cpwq7DomAjEimUdYjasCQ558.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.7.230' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.4MB/s 00:00

ca.key 100% 1679 2.2MB/s 00:00

kube-apiserver.key 100% 1679 1.2MB/s 00:00

kube-apiserver.crt 100% 1237 1.7MB/s 00:00

service-account.key 100% 1679 2.5MB/s 00:00

service-account.crt 100% 1005 1.5MB/s 00:00

etcd-server.key 100% 1679 1.2MB/s 00:00

etcd-server.crt 100% 1090 1.7MB/s 00:00

The authenticity of host '172.31.15.197 (172.31.15.197)' can't be established.

ECDSA key fingerprint is SHA256:lT7GEml7+xgAlYMax0+M83QdomeGFuzkeggLIaLlIyo.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.15.197' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.0MB/s 00:00

ca.key 100% 1679 2.2MB/s 00:00

kube-apiserver.key 100% 1679 2.0MB/s 00:00

kube-apiserver.crt 100% 1237 1.7MB/s 00:00

service-account.key 100% 1679 2.5MB/s 00:00

service-account.crt 100% 1005 1.5MB/s 00:00

etcd-server.key 100% 1679 2.1MB/s 00:00

etcd-server.crt

This copies the files to the master servers.
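The scp loops hard-code the master IPs, and the all-nodes loop below hard-codes all six instance IPs. If the host running the loops has the same /etc/hosts entries as in the instance-preparation step (an assumption), the lists can be derived from the hostnames instead. `hosts_by_role` is a hypothetical helper.

```shell
# Print the IPs from an /etc/hosts-style file whose hostname contains a marker
# such as "-master-" or "-worker-". Comment lines are skipped.
# hosts_by_role is a hypothetical helper.
#   $1 = marker, $2 = hosts file (defaults to /etc/hosts)
hosts_by_role() {
  awk -v role="$1" '$1 !~ /^#/ && NF >= 2 && index($2, role) > 0 { print $1 }' \
    "${2:-/etc/hosts}"
}

# Usage, in place of the hard-coded master IPs:
#   for instance in $(hosts_by_role -master-); do
#     scp -i gunwoo.pem ca.crt ... ubuntu@${instance}:~/
#   done
```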

- Distribute to all nodes

- ca.crt

- kube-proxy.kubeconfig

root@gunwoo-auth-basic:~/hardway/cert# for instance in 172.31.1.65 172.31.7.230 172.31.15.197 172.31.3.25 172.31.2.183 172.31.11.91; do

> scp -i gunwoo.pem ca.crt kube-proxy.kubeconfig ubuntu@${instance}:~/

> done

ca.crt 100% 1001 1.3MB/s 00:00

kube-proxy.kubeconfig 100% 5297 2.7MB/s 00:00

ca.crt 100% 1001 1.2MB/s 00:00

kube-proxy.kubeconfig 100% 5297 3.0MB/s 00:00

ca.crt 100% 1001 1.2MB/s 00:00

kube-proxy.kubeconfig 100% 5297 6.7MB/s 00:00

The authenticity of host '172.31.3.25 (172.31.3.25)' can't be established.

ECDSA key fingerprint is SHA256:rKULwNOhZd6vswinZslwdpU2rMXLfm5KDMHLAEOCxQE.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.3.25' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.2MB/s 00:00

kube-proxy.kubeconfig 100% 5297 6.9MB/s 00:00

The authenticity of host '172.31.2.183 (172.31.2.183)' can't be established.

ECDSA key fingerprint is SHA256:PDD3iwMmlQWXLfWx5XPmqzuHmNjkH0oxtYJm5Y/LgjM.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.2.183' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.1MB/s 00:00

kube-proxy.kubeconfig 100% 5297 6.3MB/s 00:00

The authenticity of host '172.31.11.91 (172.31.11.91)' can't be established.

ECDSA key fingerprint is SHA256:qUN4Mizh1EJb6jSTYIoyeEr2ZYHg8YI9kaRB0FfQIQU.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '172.31.11.91' (ECDSA) to the list of known hosts.

ca.crt 100% 1001 1.3MB/s 00:00

kube-proxy.kubeconfig 100% 5297 6.6MB/s 00:00

- Distribute to the master nodes

- admin.kubeconfig

- kube-controller-manager.kubeconfig

- kube-scheduler.kubeconfig

root@gunwoo-auth-basic:~/hardway/cert# for instance in 172.31.1.65 172.31.7.230 172.31.15.197; do

> scp -i gunwoo.pem admin.kubeconfig kube-controller-manager.kubeconfig kube-scheduler.kubeconfig ubuntu@${instance}:~/

> done

admin.kubeconfig 100% 5297 3.3MB/s 00:00

kube-controller-manager.kubeconfig 100% 5343 3.5MB/s 00:00

kube-scheduler.kubeconfig 100% 5313 3.8MB/s 00:00

admin.kubeconfig 100% 5297 5.7MB/s 00:00

kube-controller-manager.kubeconfig 100% 5343 6.2MB/s 00:00

kube-scheduler.kubeconfig 100% 5313 1.8MB/s 00:00

admin.kubeconfig 100% 5297 5.9MB/s 00:00

kube-controller-manager.kubeconfig 100% 5343 6.6MB/s 00:00

kube-scheduler.kubeconfig 100% 5313 6.8MB/s 00:00

Create the etcd cluster (run on all three masters)

wget https://github.com/coreos/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

{

tar -xvf etcd-v3.4.13-linux-amd64.tar.gz

sudo mv etcd-v3.4.13-linux-amd64/etcd* /usr/local/bin/

sudo mkdir -p /etc/ssl/etcd/ssl /var/lib/etcd

sudo chmod 700 /var/lib/etcd

sudo cp /home/ubuntu/ca.crt /home/ubuntu/etcd-server.key /home/ubuntu/etcd-server.crt /etc/ssl/etcd/ssl

}

export INTERNAL_IP=$(ip addr show eth0|grep -w "inet"|awk '{print $2}'|cut -d / -f 1)

export ETCD_NAME=$(hostname -s)

- Register the etcd systemd service

cat <<EOF | sudo tee /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} --cert-file=/etc/ssl/etcd/ssl/etcd-server.crt --key-file=/etc/ssl/etcd/ssl/etcd-server.key --peer-cert-file=/etc/ssl/etcd/ssl/etcd-server.crt \\

--peer-key-file=/etc/ssl/etcd/ssl/etcd-server.key --trusted-ca-file=/etc/ssl/etcd/ssl/ca.crt --peer-trusted-ca-file=/etc/ssl/etcd/ssl/ca.crt --peer-client-cert-auth \\

--client-cert-auth --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 --listen-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 --advertise-client-urls https://${INTERNAL_IP}:2379 \\

--initial-cluster-token etcd-cluster-0 --initial-cluster-state new --data-dir=/var/lib/etcd \\

--initial-cluster gunwoo-k8s-hardway-master-1=https://172.31.1.65:2380,gunwoo-k8s-hardway-master-2=https://172.31.7.230:2380,gunwoo-k8s-hardway-master-3=https://172.31.15.197:2380

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

- Check that /var/lib/etcd has permission 700

root@gunwoo-k8s-hardway-master-1:~# ls -al /var/lib | grep etcd

drwxr-xr-x 2 root root 4096 Oct 11 02:03 etcd

root@gunwoo-k8s-hardway-master-1:~# sudo chmod 700 /var/lib/etcd

root@gunwoo-k8s-hardway-master-1:~# ls -al /var/lib | grep etcd

drwx------ 2 root root 4096 Oct 11 02:03 etcd

- Start the etcd service

root@gunwoo-k8s-hardway-master-1:~# {

> sudo systemctl daemon-reload

> sudo systemctl enable etcd

> sudo systemctl start etcd

> }

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service → /etc/systemd/system/etcd.service.

- etcd cluster health check

root@gunwoo-k8s-hardway-master-1:~# export ETCDCTL_API=3

root@gunwoo-k8s-hardway-master-1:~# export ETCDCTL_CERT=/etc/ssl/etcd/ssl/etcd-server.crt

root@gunwoo-k8s-hardway-master-1:~# export ETCDCTL_KEY=/etc/ssl/etcd/ssl/etcd-server.key

root@gunwoo-k8s-hardway-master-1:~# export ETCDCTL_CACERT=/etc/ssl/etcd/ssl/ca.crt

root@gunwoo-k8s-hardway-master-1:~# etcdctl endpoint status --cluster --write-out=table

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://172.31.15.197:2379 | 9b0457963e93d934 | 3.4.13 | 20 kB | false | false | 5 | 9 | 9 | |

| https://172.31.7.230:2379 | d40c5ebf706021ad | 3.4.13 | 20 kB | false | false | 5 | 9 | 9 | |

| https://172.31.1.65:2379 | f7eeb6f0f7c3540c | 3.4.13 | 20 kB | true | false | 5 | 9 | 9 | |

+----------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

Bootstrap the Kubernetes control plane

- Download the binaries (run on each of the three master nodes)

root@gunwoo-k8s-hardway-master-1:~# wget -q --show-progress --https-only --timestamping \

> https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubectl \

> "https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kube-apiserver" \

> "https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kube-controller-manager" \

> "https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kube-scheduler"

kubectl 100%[=====================================================================================>] 40.96M 34.8MB/s in 1.2s

kube-apiserver 100%[=====================================================================================>] 110.00M 42.7MB/s in 2.6s

kube-controller-manager 100%[=====================================================================================>] 102.35M 88.4MB/s in 1.2s

kube-scheduler 100%[=====================================================================================>] 40.95M 41.6MB/s in 1.0s
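The four URLs differ only in the component name. The following sketch prints them from a single version variable, so an upgrade changes one string instead of four. `k8s_binary_urls` is a hypothetical helper, and the URL layout is copied from the wget command above.

```shell
# Print the download URL of each named Kubernetes binary for one release.
# k8s_binary_urls is a hypothetical helper; the URL layout matches the
# storage.googleapis.com paths used in this guide.
#   $1 = version (e.g. v1.19.16), remaining args = binary names
k8s_binary_urls() {
  version=$1; shift
  for bin in "$@"; do
    echo "https://storage.googleapis.com/kubernetes-release/release/${version}/bin/linux/amd64/${bin}"
  done
}

# Usage:
#   wget -q --show-progress --https-only --timestamping \
#     $(k8s_binary_urls v1.19.16 kubectl kube-apiserver kube-controller-manager kube-scheduler)
```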

root@gunwoo-k8s-hardway-master-1:~# {

> chmod +x kubectl kube-apiserver kube-controller-manager kube-scheduler

> sudo mv kubectl kube-apiserver kube-controller-manager kube-scheduler /usr/local/bin/

> }

root@gunwoo-k8s-hardway-master-1:~# mkdir -p ~/.kube

root@gunwoo-k8s-hardway-master-1:~# cp -rf /home/ubuntu/admin.kubeconfig ~/.kube/config

- Create the Kubernetes directory and copy the certificates

root@gunwoo-k8s-hardway-master-1:~# {

> sudo mkdir -p /etc/kubernetes/ssl

>

> sudo cp /home/ubuntu/ca.crt /home/ubuntu/ca.key /home/ubuntu/kube-apiserver.crt /home/ubuntu/kube-apiserver.key \

> /home/ubuntu/service-account.key /home/ubuntu/service-account.crt /etc/kubernetes/ssl

> }

root@gunwoo-k8s-hardway-master-1:~# ls /etc/kubernetes/ssl

ca.crt ca.key kube-apiserver.crt kube-apiserver.key service-account.crt service-account.key

- Copy the kubeconfig files used by the control plane components and by admin

root@gunwoo-k8s-hardway-master-1:~# {

> sudo cp /home/ubuntu/kube-controller-manager.kubeconfig /etc/kubernetes

> sudo cp /home/ubuntu/kube-scheduler.kubeconfig /etc/kubernetes

> sudo cp /home/ubuntu/admin.kubeconfig /etc/kubernetes

> }

root@gunwoo-k8s-hardway-master-1:~#

root@gunwoo-k8s-hardway-master-1:~# export INTERNAL_IP=$(ip addr show eth0|grep -w "inet"|awk '{print $2}'|cut -d / -f 1)

root@gunwoo-k8s-hardway-master-1:~# echo $INTERNAL_IP

172.31.1.65
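The `grep -w "inet" | awk | cut` pipeline prints one line per IPv4 address. If the interface carries a secondary address, INTERNAL_IP would therefore hold two addresses. The following sketch is a stricter variant that uses a single awk call and stops at the first address. `first_ipv4` is a hypothetical helper, and `eth0` is assumed to be the interface name, as in the commands above.

```shell
# Print the first IPv4 address found in `ip addr show <iface>` output on stdin.
# Lines look like "    inet 172.31.1.65/20 brd ... scope global eth0";
# inet6 lines are ignored because field 1 must be exactly "inet".
# first_ipv4 is a hypothetical helper.
first_ipv4() {
  awk '$1 == "inet" { split($2, addr, "/"); print addr[1]; exit }'
}

# Usage:
#   export INTERNAL_IP=$(ip addr show eth0 | first_ipv4)
```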

root@gunwoo-k8s-hardway-master-1:~# ls /etc/kubernetes/

admin.kubeconfig kube-controller-manager.kubeconfig kube-scheduler.kubeconfig ssl

- Register the systemd service: kube-apiserver

cat <<EOF | sudo tee /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=${INTERNAL_IP} --allow-privileged=true --apiserver-count=3 --service-cluster-ip-range=10.231.0.0/18 --service-node-port-range=30000-32767 \\

--authorization-mode=Node,RBAC --bind-address=0.0.0.0 --v=2 \\

--client-ca-file=/etc/kubernetes/ssl/ca.crt --enable-admission-plugins=NodeRestriction,ServiceAccount --enable-swagger-ui=true \\

--enable-bootstrap-token-auth=true --etcd-cafile=/etc/ssl/etcd/ssl/ca.crt --etcd-certfile=/etc/ssl/etcd/ssl/etcd-server.crt --etcd-keyfile=/etc/ssl/etcd/ssl/etcd-server.key \\

--etcd-servers=https://172.31.1.65:2379,https://172.31.7.230:2379,https://172.31.15.197:2379 \\

--event-ttl=1h --kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.crt \\

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver.key --kubelet-https=true --runtime-config= --service-account-key-file=/etc/kubernetes/ssl/service-account.crt \\

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.crt --tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver.key

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

- Register the systemd service: kube-controller-manager

cat <<EOF | sudo tee /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--address=0.0.0.0 --cluster-cidr=10.240.0.0/13 --allocate-node-cidrs=true --cluster-name=gunwoo-k8s-hardway \\

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.crt --cluster-signing-key-file=/etc/kubernetes/ssl/ca.key \\

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true --root-ca-file=/etc/kubernetes/ssl/ca.crt --service-account-private-key-file=/etc/kubernetes/ssl/service-account.key \\

--service-cluster-ip-range=10.231.0.0/18 --use-service-account-credentials=true --v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF
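The Pod network, `--cluster-cidr=10.240.0.0/13`, must not overlap the Service range, `--service-cluster-ip-range=10.231.0.0/18`. The following POSIX shell sketch checks two CIDR blocks with integer arithmetic. `ip_to_int` and `cidrs_overlap` are hypothetical helpers, and a network address that is not aligned to its prefix is rounded down.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "a.b.c.d" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if the two CIDR blocks share at least one address, 1 otherwise.
cidrs_overlap() {
  len1=${1#*/}; len2=${2#*/}
  size1=$(( 1 << (32 - len1) )); size2=$(( 1 << (32 - len2) ))
  start1=$(ip_to_int "${1%/*}"); start2=$(ip_to_int "${2%/*}")
  # Round each start down to its prefix boundary, then find the last address.
  start1=$(( start1 - start1 % size1 )); start2=$(( start2 - start2 % size2 ))
  end1=$(( start1 + size1 - 1 )); end2=$(( start2 + size2 - 1 ))
  [ "$start1" -le "$end2" ] && [ "$start2" -le "$end1" ]
}
```

For the ranges used here, `cidrs_overlap 10.240.0.0/13 10.231.0.0/18` returns non-zero, because 10.240.0.0 to 10.247.255.255 and 10.231.0.0 to 10.231.63.255 are disjoint. You can run the same check against the node network. For example, use 172.31.0.0/16 if the instances sit in the AWS default VPC, which is an assumption based on their addresses.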

- Register the systemd service: kube-scheduler

cat <<EOF | sudo tee /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \\

--address=127.0.0.1 \\

--leader-elect=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

- Start the systemd services

root@gunwoo-k8s-hardway-master-1:~# {

> sudo systemctl daemon-reload

> sudo systemctl enable kube-apiserver kube-controller-manager kube-scheduler

> sudo systemctl start kube-apiserver kube-controller-manager kube-scheduler

> }

Created symlink /etc/systemd/system/multi-user.target.wants/kube-apiserver.service → /etc/systemd/system/kube-apiserver.service.

Created symlink /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service → /etc/systemd/system/kube-controller-manager.service.

Created symlink /etc/systemd/system/multi-user.target.wants/kube-scheduler.service → /etc/systemd/system/kube-scheduler.service.

- Run kubectl commands

root@gunwoo-k8s-hardway-master-1:~# kubectl get nodes

No resources found

root@gunwoo-k8s-hardway-master-1:~# kubectl version

Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.16", GitCommit:"e37e4ab4cc8dcda84f1344dda47a97bb1927d074", GitTreeState:"clean", BuildDate:"2021-10-27T16:25:59Z", GoVersion:"go1.15.15", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.16", GitCommit:"e37e4ab4cc8dcda84f1344dda47a97bb1927d074", GitTreeState:"clean", BuildDate:"2021-10-27T16:20:18Z", GoVersion:"go1.15.15", Compiler:"gc", Platform:"linux/amd64"}

root@gunwoo-k8s-hardway-master-1:~# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.231.0.1 <none> 443/TCP 25s

root@gunwoo-k8s-hardway-master-1:~# curl https://localhost:6443/version -k

{

"major": "1",

"minor": "19",

"gitVersion": "v1.19.16",

"gitCommit": "e37e4ab4cc8dcda84f1344dda47a97bb1927d074",

"gitTreeState": "clean",

"buildDate": "2021-10-27T16:20:18Z",

"goVersion": "go1.15.15",

"compiler": "gc",

"platform": "linux/amd64"

}

- Generate the kubelet client certificate (on the gunwoo-auth-basic instance)

root@gunwoo-auth-basic:~/hardway/cert# cat > openssl-gunwoo-k8s-hardway-worker-1.cnf <<EOF

> [req]

> req_extensions = v3_req

> distinguished_name = req_distinguished_name

> [req_distinguished_name]

> [ v3_req ]

> basicConstraints = CA:FALSE

> keyUsage = nonRepudiation, digitalSignature, keyEncipherment

> subjectAltName = @alt_names

> [alt_names]

> IP.1 = 172.31.3.25

> DNS.1 = gunwoo-k8s-hardway-worker-1

> EOF

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# openssl genrsa -out gunwoo-k8s-hardway-worker-1.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

..................................+++++

..............................+++++

e is 65537 (0x010001)

root@gunwoo-auth-basic:~/hardway/cert# openssl req -new -key gunwoo-k8s-hardway-worker-1.key -subj "/CN=system:node:gunwoo-k8s-hardway-worker-1/O=system:nodes" -out gunwoo-k8s-hardway-worker-1.csr -config openssl-gunwoo-k8s-hardway-worker-1.cnf

root@gunwoo-auth-basic:~/hardway/cert# openssl x509 -req -in gunwoo-k8s-hardway-worker-1.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out gunwoo-k8s-hardway-worker-1.crt -extensions v3_req -extfile openssl-gunwoo-k8s-hardway-worker-1.cnf -days 365

Signature ok

subject=CN = system:node:gunwoo-k8s-hardway-worker-1, O = system:nodes

Getting CA Private Key

In the config above, IP.1 = 172.31.3.25 is the IP address of worker node 1.
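Every worker that gets a hand-issued certificate needs the same three openssl steps. The sketch below shows one way to parameterize them. The helper names make_kubelet_cnf, node_subject and issue_kubelet_cert are hypothetical. The [v3_req] section is copied from the config above, and the subject follows the CN=system:node:<nodeName> / O=system:nodes convention that the Node authorizer expects.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an OpenSSL config for one kubelet certificate.
# The [v3_req] section mirrors openssl-gunwoo-k8s-hardway-worker-1.cnf above.
make_kubelet_cnf() {
  local name="$1" ip="$2"
  cat <<EOF
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name
[req_distinguished_name]
[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names
[alt_names]
IP.1 = ${ip}
DNS.1 = ${name}
EOF
}

# Hypothetical helper: the subject the Node authorizer expects for a kubelet.
node_subject() {
  printf '/CN=system:node:%s/O=system:nodes\n' "$1"
}

# Hypothetical helper: create key, CSR and CA-signed certificate for one node.
# Assumes ca.crt and ca.key are in the current directory, as in the steps above.
issue_kubelet_cert() {
  local name="$1" ip="$2"
  make_kubelet_cnf "$name" "$ip" > "openssl-${name}.cnf"
  openssl genrsa -out "${name}.key" 2048
  openssl req -new -key "${name}.key" -subj "$(node_subject "$name")" \
    -out "${name}.csr" -config "openssl-${name}.cnf"
  openssl x509 -req -in "${name}.csr" -CA ca.crt -CAkey ca.key -CAcreateserial \
    -out "${name}.crt" -extensions v3_req -extfile "openssl-${name}.cnf" -days 365
}
```

For example, `issue_kubelet_cert gunwoo-k8s-hardway-worker-3 172.31.11.91` would issue a certificate for the worker-3 address listed in /etc/hosts.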

- Create the kubelet kubeconfig (on the gunwoo-auth-basic instance)

root@gunwoo-auth-basic:~/hardway/cert# export KUBE_API_SERVER_ADDRESS=172.31.1.65

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# {

> kubectl config set-cluster gunwoo-k8s-hardway \

> --certificate-authority=ca.crt --embed-certs=true \

> --server=https://${KUBE_API_SERVER_ADDRESS}:6443 --kubeconfig=gunwoo-k8s-hardway-worker-1.kubeconfig

>

> kubectl config set-credentials system:node:gunwoo-k8s-hardway-worker-1 \

> --client-certificate=gunwoo-k8s-hardway-worker-1.crt --client-key=gunwoo-k8s-hardway-worker-1.key \

> --embed-certs=true --kubeconfig=gunwoo-k8s-hardway-worker-1.kubeconfig

>

> kubectl config set-context default \

> --cluster=gunwoo-k8s-hardway --user=system:node:gunwoo-k8s-hardway-worker-1 --kubeconfig=gunwoo-k8s-hardway-worker-1.kubeconfig

>

> kubectl config use-context default --kubeconfig=gunwoo-k8s-hardway-worker-1.kubeconfig

> }

Cluster "gunwoo-k8s-hardway" set.

User "system:node:gunwoo-k8s-hardway-worker-1" set.

Context "default" created.

Switched to context "default".

KUBE_API_SERVER_ADDRESS=172.31.1.65 is the IP address of master node 1.

Distribute the kubelet server/client certificate and key

root@gunwoo-auth-basic:~/hardway/cert# for instance in 172.31.3.25; do

> scp -i gunwoo.pem gunwoo-k8s-hardway-worker-1.crt gunwoo-k8s-hardway-worker-1.key gunwoo-k8s-hardway-worker-1.kubeconfig \

> ubuntu@${instance}:~/

> done

gunwoo-k8s-hardway-worker-1.crt 100% 1176 1.6MB/s 00:00

gunwoo-k8s-hardway-worker-1.key 100% 1675 2.6MB/s 00:00

gunwoo-k8s-hardway-worker-1.kubeconfig 100% 5561 7.9MB/s 00:00

root@gunwoo-auth-basic:~/hardway/cert#

This sends the certificate, key and kubeconfig to worker-1.
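The for loop above iterates over IP addresses but hard-codes worker-1's file names. If a second IP were added, worker-1's files would be copied to that node too. The sketch below pairs each hostname with its own IP in a bash associative array. The WORKERS array, the distribute_worker_files name and the DRY_RUN switch are hypothetical and not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical sketch (bash 4+): map each worker hostname to its IP so every
# node receives its own cert, key and kubeconfig.
declare -A WORKERS=(
  [gunwoo-k8s-hardway-worker-1]=172.31.3.25
)

distribute_worker_files() {
  local name ip
  for name in "${!WORKERS[@]}"; do
    ip="${WORKERS[$name]}"
    local cmd=(scp -i gunwoo.pem "${name}.crt" "${name}.key" "${name}.kubeconfig" "ubuntu@${ip}:~/")
    if [[ "${DRY_RUN:-0}" == 1 ]]; then
      echo "${cmd[*]}"   # print the command instead of running it
    else
      "${cmd[@]}"
    fi
  done
}
```

Add one `[hostname]=ip` entry per worker. `DRY_RUN=1 distribute_worker_files` prints the scp commands without copying anything.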

Install Docker

- Install Docker (worker-1)

sudo apt-get update

sudo apt-get install -y docker.io

sudo swapoff -a

- Start and test the Docker service (worker-1)

root@gunwoo-k8s-hardway-worker-1:~# {

> sudo systemctl daemon-reload

> sudo systemctl enable docker

> sudo systemctl restart docker

> }
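The `sudo swapoff -a` run during the Docker install only lasts until the next reboot. By default the kubelet refuses to start while swap is enabled (failSwapOn defaults to true). The sketch below makes the change persistent by commenting out swap entries in /etc/fstab. The function name disable_swap_in_fstab is hypothetical, and it keeps a .bak copy of the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries (third field "swap") in an
# fstab-format file so swap stays off after reboot. A backup is written to FILE.bak.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E \
    '/^[[:space:]]*[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' \
    "$fstab"
}

# Usage as root on the worker (not run here):
# swapoff -a && disable_swap_in_fstab /etc/fstab
```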

root@gunwoo-k8s-hardway-worker-1:~#

root@gunwoo-k8s-hardway-worker-1:~# sudo docker run --rm -it nicolaka/netshoot

Unable to find image 'nicolaka/netshoot:latest' locally

latest: Pulling from nicolaka/netshoot

2408cc74d12b: Pull complete

3cf03e9fd7e4: Pull complete

d3299a042ee2: Pull complete

e00dbf0b44c3: Pull complete

1cb6452f30ec: Pull complete

cc37e878581f: Pull complete

4f4fb700ef54: Pull complete

b01a5ec78db0: Pull complete

123e924da2d1: Pull complete

367cf0ba0067: Pull complete

5a3733011111: Pull complete

6adf3d98c1c3: Pull complete

77c79836780d: Pull complete

4aafb6d72b2b: Pull complete

Digest: sha256:aeafd567d7f7f1edb5127ec311599bb2b8a9c0fb31d7a53e9cff26af6d29fd4e

Status: Downloaded newer image for nicolaka/netshoot:latest

dP dP dP

88 88 88

88d888b. .d8888b. d8888P .d8888b. 88d888b. .d8888b. .d8888b. d8888P

88' `88 88ooood8 88 Y8ooooo. 88' `88 88' `88 88' `88 88

88 88 88. ... 88 88 88 88 88. .88 88. .88 88

dP dP `88888P' dP `88888P' dP dP `88888P' `88888P' dP

Welcome to Netshoot! (github.com/nicolaka/netshoot)

360c61f9da2c ~

Download the binaries

- kubectl, kube-proxy, kubelet (worker-1)

wget -q --show-progress --https-only --timestamping \
  https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubectl \
  https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kube-proxy \
  https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubelet
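The version string v1.19.16 is repeated in every URL, and the same download is repeated on the other workers. The sketch below keeps the version and architecture in one place. K8S_VERSION, ARCH and k8s_binary_urls are hypothetical names, and the URL layout is the one used in the wget command above.

```shell
#!/usr/bin/env bash
# Hypothetical variables: change them once to download a different release.
K8S_VERSION="${K8S_VERSION:-v1.19.16}"
ARCH="${ARCH:-amd64}"

# Print one download URL per binary name, using the URL layout from the wget command above.
k8s_binary_urls() {
  local bin
  for bin in "$@"; do
    printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/linux/%s/%s\n' \
      "$K8S_VERSION" "$ARCH" "$bin"
  done
}

# Usage (performs the actual download, not run here):
# k8s_binary_urls kubectl kube-proxy kubelet | xargs wget -q --show-progress --https-only --timestamping
```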

sudo mkdir -p /etc/cni/net.d /opt/cni/bin /var/lib/kubelet /var/lib/kube-proxy /etc/kubernetes/ssl /var/run/kubernetes

{

chmod +x kubectl kube-proxy kubelet

sudo mv kubectl kube-proxy kubelet /usr/local/bin/

}

{

sudo mkdir -p /opt/cni/bin

wget https://github.com/containernetworking/plugins/releases/download/v0.8.6/cni-plugins-linux-amd64-v0.8.6.tgz

sudo tar -xzvf cni-plugins-linux-amd64-v0.8.6.tgz --directory /opt/cni/bin/

}

- Move the certificates into place

root@gunwoo-k8s-hardway-worker-1:~# export HOSTNAME=$(hostname)

root@gunwoo-k8s-hardway-worker-1:~# {

> mkdir -p /etc/kubernetes/ssl

> sudo cp -rf /home/ubuntu/${HOSTNAME}.key /home/ubuntu/${HOSTNAME}.crt /home/ubuntu/ca.crt /etc/kubernetes/ssl

> sudo cp -rf /home/ubuntu/${HOSTNAME}.kubeconfig /etc/kubernetes

> }

- kubelet-config.yaml

root@gunwoo-k8s-hardway-worker-1:~# cat << EOF | sudo tee /etc/kubernetes/kubelet-config.yaml
> kind: KubeletConfiguration
> apiVersion: kubelet.config.k8s.io/v1beta1
> authentication:
>   anonymous:
>     enabled: false
>   webhook:
>     enabled: true
>   x509:
>     clientCAFile: "/etc/kubernetes/ssl/ca.crt"
> authorization:
>   mode: Webhook
> clusterDomain: "cluster.local"
> clusterDNS:
>   - 10.231.0.10
> resolvConf: "/run/systemd/resolve/resolv.conf"
> runtimeRequestTimeout: "15m"
> EOF

- kubelet.service

root@gunwoo-k8s-hardway-worker-1:~# cat <<EOF | sudo tee /etc/systemd/system/kubelet.service
> [Unit]
> Description=Kubernetes Kubelet
> Documentation=https://github.com/kubernetes/kubernetes
> After=docker.service
> Requires=docker.service
>
> [Service]
> ExecStart=/usr/local/bin/kubelet \\
>   --config=/etc/kubernetes/kubelet-config.yaml --image-pull-progress-deadline=2m \\
>   --kubeconfig=/etc/kubernetes/${HOSTNAME}.kubeconfig --tls-cert-file=/etc/kubernetes/ssl/${HOSTNAME}.crt \\
>   --tls-private-key-file=/etc/kubernetes/ssl/${HOSTNAME}.key --network-plugin=cni --register-node=true --v=2
> Restart=on-failure
> RestartSec=5
>
> [Install]
> WantedBy=multi-user.target
> EOF

root@gunwoo-k8s-hardway-worker-1:~# {

> sudo cp /home/ubuntu/kube-proxy.kubeconfig /etc/kubernetes

> }
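The kubelet.service unit above is written with an unquoted heredoc (<<EOF). That is why ${HOSTNAME} is expanded when the file is written, and why each \\ at the end of an ExecStart line ends up as a single \, which systemd reads as a line continuation. The sketch below contrasts an unquoted and a quoted ('EOF') heredoc. NODE_NAME is a hypothetical stand-in for the exported HOSTNAME.

```shell
#!/usr/bin/env bash
NODE_NAME="gunwoo-k8s-hardway-worker-1"   # hypothetical stand-in for $HOSTNAME

# Unquoted delimiter: ${NODE_NAME} is expanded and "\\" becomes a single "\".
render_unquoted() {
  cat <<EOF
ExecStart=/usr/local/bin/kubelet \\
  --kubeconfig=/etc/kubernetes/${NODE_NAME}.kubeconfig
EOF
}

# Quoted delimiter: the body is copied literally, with no expansion at all.
render_quoted() {
  cat <<'EOF'
ExecStart=/usr/local/bin/kubelet \\
  --kubeconfig=/etc/kubernetes/${NODE_NAME}.kubeconfig
EOF
}
```

With the quoted form, systemd would receive a literal `${NODE_NAME}` and a double backslash. The unquoted form is the one these unit files need.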

root@gunwoo-k8s-hardway-worker-1:~# cd /etc/kubernetes/

root@gunwoo-k8s-hardway-worker-1:/etc/kubernetes# ls

gunwoo-k8s-hardway-worker-1.kubeconfig kube-proxy.kubeconfig kubelet-config.yaml ssl

sudo apt-get install ipset -y

cat <<EOF | sudo tee /etc/kubernetes/kube-proxy-config.yaml
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
mode: "ipvs"
clusterCIDR: "10.240.0.0/13"
EOF

cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-proxy \\
  --config=/etc/kubernetes/kube-proxy-config.yaml
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

{

sudo systemctl daemon-reload

sudo systemctl enable kubelet kube-proxy

sudo systemctl start kubelet kube-proxy

}
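Immediately after `systemctl start`, the services may still be coming up, so a `systemctl status` run right away can be misleading. The sketch below is a small retry helper. wait_for is a hypothetical name, and `systemctl is-active --quiet` appears only in the usage line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to TRIES times, sleeping INTERVAL
# seconds between attempts; succeeds as soon as the command succeeds.
wait_for() {
  local tries="$1" interval="$2"
  shift 2
  local i
  for (( i = 1; i <= tries; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < tries )); then
      sleep "$interval"
    fi
  done
  return 1
}

# Usage on the worker (not run here):
# wait_for 12 5 systemctl is-active --quiet kubelet && wait_for 12 5 systemctl is-active --quiet kube-proxy
```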

systemctl status kubelet

systemctl status kube-proxy

sudo journalctl -xef -u kubelet

sudo journalctl -xef -u kube-proxy

- Check the worker node from auth-basic

root@gunwoo-auth-basic:~/hardway/cert# kubectl get nodes

NAME STATUS ROLES AGE VERSION

gunwoo-k8s-hardway-worker-1 NotReady <none> 50s v1.19.16

The node is NotReady because no overlay network (CNI plugin) has been installed yet.
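Once a CNI plugin is installed, you can wait for the node to become Ready by filtering the STATUS column of `kubectl get nodes`. The sketch below uses a hypothetical not_ready_nodes helper that reads that table on stdin; it treats a status such as Ready,SchedulingDisabled as Ready. `kubectl wait --for=condition=Ready node --all` is a built-in alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of nodes whose STATUS is not Ready.
# Input is the plain-text output of `kubectl get nodes` (header line first).
not_ready_nodes() {
  awk 'NR > 1 && $2 !~ /^Ready(,|$)/ { print $1 }'
}

# Usage (not run here):
# until [ -z "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done
```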

- Install the CNI plugin (Cilium) from auth-basic

{

wget https://raw.githubusercontent.com/cilium/cilium/v1.7/install/kubernetes/quick-install.yaml

kubectl apply -f quick-install.yaml

}

- No permission to view logs yet

root@gunwoo-auth-basic:~/hardway/cert# kubectl logs -f cilium-mtx66 -n kube-system

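Error from server (Forbidden): Forbidden (user=kube-apiserver, verb=get, resource=nodes, subresource=proxy) ( pods/log cilium-mtx66)

- Bind a ClusterRole

The API server calls the kubelet API as the user kube-apiserver, which is not yet allowed to access nodes/proxy. The ClusterRole and ClusterRoleBinding below grant that access.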

cat << EOF > kubelet-clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
EOF

kubectl apply -f kubelet-clusterrole.yaml
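The earlier error says subresource=proxy even though `kubectl logs` was run. With authorization mode Webhook, the kubelet maps each request path to a nodes subresource before asking the API server. Per the kubelet authentication/authorization documentation:

- /stats, /metrics, /logs and /spec map to nodes/stats, nodes/metrics, nodes/log and nodes/spec.
- Every other path maps to nodes/proxy, including /containerLogs, which serves `kubectl logs`.

The sketch below reproduces that mapping. kubelet_subresource is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a kubelet API request path to the nodes subresource
# that the kubelet's Webhook authorization checks, per the documented mapping.
kubelet_subresource() {
  case "$1" in
    /stats|/stats/*)     echo nodes/stats ;;
    /metrics|/metrics/*) echo nodes/metrics ;;
    /logs|/logs/*)       echo nodes/log ;;
    /spec|/spec/*)       echo nodes/spec ;;
    *)                   echo nodes/proxy ;;   # e.g. /containerLogs, /exec, /run
  esac
}

# Usage (not run here): once the ClusterRoleBinding is applied, this should print "yes".
# kubectl auth can-i get nodes --subresource=proxy --as=kube-apiserver
```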

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# cat << EOF > kubelet-clusterrolebinding.yaml
> apiVersion: rbac.authorization.k8s.io/v1beta1
> kind: ClusterRoleBinding
> metadata:
>   name: system:kube-apiserver
>   namespace: ""
> roleRef:
>   apiGroup: rbac.authorization.k8s.io
>   kind: ClusterRole
>   name: system:kube-apiserver-to-kubelet
> subjects:
>   - apiGroup: rbac.authorization.k8s.io
>     kind: User
>     name: kube-apiserver
> EOF

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f kubelet-clusterrolebinding.yaml

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

- The logs can now be viewed

root@gunwoo-auth-basic:~/hardway/cert# kubectl logs -f cilium-mtx66 -n kube-system

CoreDNS

vi coredns.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            prefer_udp
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
  - kind: ServiceAccount
    name: coredns
    namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
  - apiGroups:
      - ""
    resources:
      - endpoints
      - services
      - pods
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: "coredns"
  namespace: kube-system
  labels:
    k8s-app: "kube-dns"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "coredns"
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0
      maxSurge: 10%
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
    spec:
      priorityClassName: system-cluster-critical
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: coredns
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - topologyKey: "kubernetes.io/hostname"
              labelSelector:
                matchLabels:
                  k8s-app: kube-dns
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              preference:
                matchExpressions:
                  - key: node-role.kubernetes.io/master
                    operator: In
                    values:
                      - ""
      containers:
        - name: coredns
          image: "coredns/coredns:1.6.5"
          imagePullPolicy: IfNotPresent
          resources:
            # TODO: Set memory limits when we've profiled the container for large
            # clusters, then set request = limit to keep this container in
            # guaranteed class. Currently, this container falls into the
            # "burstable" category so the kubelet doesn't backoff from restarting it.
            limits:
              memory: 170Mi
            requests:
              cpu: 100m
              memory: 70Mi
          args: [ "-conf", "/etc/coredns/Corefile" ]
          volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
            - name: tz-config
              mountPath: /etc/localtime
              readOnly: true
          ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              add:
                - NET_BIND_SERVICE
              drop:
                - all
            readOnlyRootFilesystem: true
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
              scheme: HTTP
            timeoutSeconds: 5
            successThreshold: 1
            failureThreshold: 10
          readinessProbe:
            httpGet:
              path: /ready
              port: 8181
              scheme: HTTP
            timeoutSeconds: 5
            successThreshold: 1
            failureThreshold: 10
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
              - key: Corefile
                path: Corefile
        - name: tz-config
          hostPath:
            path: /etc/localtime
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "coredns"
    addonmanager.kubernetes.io/mode: Reconcile
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.231.0.10
  ports:
    - name: dns
      port: 53
      protocol: UDP
    - name: dns-tcp
      port: 53
      protocol: TCP
    - name: metrics
      port: 9153
      protocol: TCP

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f coredns.yaml

configmap/coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

deployment.apps/coredns created

serviceaccount/coredns created

service/coredns created

netshoot

root@gunwoo-auth-basic:~/hardway/cert# vi netshoot.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: netshoot-cluster
  labels:
    app: netshoot-cluster
spec:
  selector:
    matchLabels:
      name: netshoot-cluster
  template:
    metadata:
      labels:
        name: netshoot-cluster
    spec:
      tolerations:
        - operator: Exists
      containers:
        - name: netshoot-cluster
          tty: true
          stdin: true
          args:
            - bash
          image: nicolaka/netshoot

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f netshoot.yaml

daemonset.apps/netshoot-cluster created

- Using netshoot

root@gunwoo-auth-basic:~/hardway/cert# kubectl get pod

NAME READY STATUS RESTARTS AGE

netshoot-cluster-jj48b 1/1 Running 0 39s

root@gunwoo-auth-basic:~/hardway/cert# kubectl exec -it netshoot-cluster-jj48b /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-5.1# hostname

netshoot-cluster-jj48b

bash-5.1# nslookup kubernetes

Server: 10.231.0.10

Address: 10.231.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.231.0.1

bash-5.1# nslookup naver.com

Server: 10.231.0.10

Address: 10.231.0.10#53

Non-authoritative answer:

Name: naver.com

Address: 223.130.200.107

Name: naver.com

Address: 223.130.195.200

Name: naver.com

Address: 223.130.200.104

Name: naver.com

Address: 223.130.195.95

- echo-server

root@gunwoo-auth-basic:~/hardway/cert# vi echo-server.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: service-test
spec:
  replicas: 2
  selector:
    matchLabels:
      app: service_test_pod
  template:
    metadata:
      labels:
        app: service_test_pod
    spec:
      containers:
        - name: simple-http
          image: python:2.7
          imagePullPolicy: IfNotPresent
          command: ["/bin/bash"]
          args: ["-c", "echo \"<p>Hello from $(hostname)</p>\" > index.html; python -m SimpleHTTPServer 8080"]
          ports:
            - name: http
              containerPort: 8080

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f echo-server.yaml

deployment.apps/service-test created

root@gunwoo-auth-basic:~/hardway/cert# vi echo-service.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: service_test_pod
  name: echo
spec:
  ports:
    - name: 8080-8080
      port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    app: service_test_pod
  type: ClusterIP

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f echo-service.yaml

service/echo created

root@gunwoo-auth-basic:~/hardway/cert# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

netshoot-cluster-jj48b 1/1 Running 0 7m2s 10.240.0.165 gunwoo-k8s-hardway-worker-1 <none> <none>

service-test-db6d56f8f-8sqv2 1/1 Running 0 119s 10.240.0.179 gunwoo-k8s-hardway-worker-1 <none> <none>

service-test-db6d56f8f-jwwpn 1/1 Running 0 119s 10.240.0.208 gunwoo-k8s-hardway-worker-1 <none> <none>

10.240.0.179
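All three pod IPs above (10.240.0.165, 10.240.0.179, 10.240.0.208) fall inside the clusterCIDR 10.240.0.0/13 set in kube-proxy-config.yaml. Service IPs such as 10.231.0.10 do not. The sketch below checks this in pure bash. ip_to_int and ip_in_cidr are hypothetical helpers and handle IPv4 only.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10 so octets such as "08" are not read as octal
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR $2 (e.g. 10.240.0.0/13).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

A /13 leaves 19 host bits, so 10.240.0.0/13 spans 10.240.0.0 through 10.247.255.255.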

root@gunwoo-auth-basic:~/hardway/cert# kubectl exec -it netshoot-cluster-jj48b /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-5.1# curl 10.240.0.179:8080

<p>Hello from service-test-db6d56f8f-8sqv2</p>

bash-5.1# curl 10.240.0.208:8080

<p>Hello from service-test-db6d56f8f-jwwpn</p>

bash-5.1# nslookup echo

Server: 10.231.0.10

Address: 10.231.0.10#53

Name: echo.default.svc.cluster.local

Address: 10.231.31.28

bash-5.1# curl echo:8080

<p>Hello from service-test-db6d56f8f-8sqv2</p>

bash-5.1# curl echo:8080

<p>Hello from service-test-db6d56f8f-jwwpn</p>

bash-5.1# curl echo:8080

<p>Hello from service-test-db6d56f8f-8sqv2</p>

bash-5.1# curl echo:8080

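<p>Hello from service-test-db6d56f8f-jwwpn</p>

The echo Service acts as a load balancer: successive requests alternate between the two pods (round-robin). This matches the rr scheduler shown for 10.231.31.28:8080 in the IPVS table on worker-1: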
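To see the distribution over more than four requests, you can count responses per pod. The sketch below assumes the `<p>Hello from NAME</p>` response format of the echo server above, and that sed, sort, uniq and awk are available in the netshoot image. tally_backends is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count how many responses each pod served.
# Reads lines like "<p>Hello from POD</p>" on stdin, prints "POD COUNT".
tally_backends() {
  sed -n 's|.*Hello from \([^<]*\)</p>.*|\1|p' | LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage inside the netshoot pod (not run here):
# for i in $(seq 1 20); do curl -s echo:8080; done | tally_backends
```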

root@gunwoo-k8s-hardway-worker-1:~# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.231.0.1:443 rr

-> 172.31.1.65:6443 Masq 1 2 0

-> 172.31.7.230:6443 Masq 1 2 0

-> 172.31.15.197:6443 Masq 1 1 0

TCP 10.231.0.10:53 rr

-> 10.240.0.77:53 Masq 1 0 0

TCP 10.231.0.10:9153 rr

-> 10.240.0.77:9153 Masq 1 0 0

TCP 10.231.31.28:8080 rr

-> 10.240.0.179:8080 Masq 1 0 0

-> 10.240.0.208:8080 Masq 1 0 0

UDP 10.231.0.10:53 rr

-> 10.240.0.77:53 Masq 1 0 0

TLS Bootstrapping

root@gunwoo-auth-basic:~/hardway/cert# cat > bootstrap-token-07401b.yaml <<EOF
> apiVersion: v1
> kind: Secret
> metadata:
>   # Name MUST be of form "bootstrap-token-<token id>"
>   name: bootstrap-token-07401b
>   namespace: kube-system
>
> # Type MUST be 'bootstrap.kubernetes.io/token'
> type: bootstrap.kubernetes.io/token
> stringData:
>   # Human readable description. Optional.
>   description: "The bootstrap token generated by kdt kakao"
>
>   # Token ID and secret. Required.
>   token-id: 07401b
>   token-secret: f395accd246ae52d
>
>   # Expiration. Optional.
>   expiration: 2121-09-01T00:00:00Z
>
>   # Allowed usages.
>   usage-bootstrap-authentication: "true"
>   usage-bootstrap-signing: "true"
>
>   # Extra groups to authenticate the token as. Must start with "system:bootstrappers:"
>   auth-extra-groups: system:bootstrappers:worker
> EOF

root@gunwoo-auth-basic:~/hardway/cert# kubectl apply -f bootstrap-token-07401b.yaml

secret/bootstrap-token-07401b created
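A bootstrap token has the form <token-id>.<token-secret>. The id is 6 characters and the secret is 16 characters, both from [a-z0-9], and the id must match the bootstrap-token-<token id> Secret name. The sketch below generates and checks such a value with standard tools. gen_bootstrap_token and is_valid_bootstrap_token are hypothetical names. Where kubeadm is installed, `kubeadm token generate` does the same job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a random bootstrap token "<6 chars>.<16 chars>".
gen_bootstrap_token() {
  local id secret
  id="$(LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c 6)"
  secret="$(LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c 16)"
  printf '%s.%s\n' "$id" "$secret"
}

# Hypothetical helper: succeed only if $1 has the bootstrap token format.
is_valid_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Usage: the token id becomes part of the Secret name.
# token="$(gen_bootstrap_token)"
# echo "secret name: bootstrap-token-${token%%.*}"
```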

root@gunwoo-auth-basic:~/hardway/cert# kubectl get secret -n kube-system | grep bootstrap

bootstrap-token-07401b bootstrap.kubernetes.io/token 7 17s

- csrs-for-bootstrapping.yaml

root@gunwoo-auth-basic:~/hardway/cert# cat > csrs-for-bootstrapping.yaml <<EOF
> # enable bootstrapping nodes to create CSR
> kind: ClusterRoleBinding
> apiVersion: rbac.authorization.k8s.io/v1
> metadata:
>   name: create-csrs-for-bootstrapping
> subjects:
> - kind: Group
>   name: system:bootstrappers
>   apiGroup: rbac.authorization.k8s.io
> roleRef:
>   kind: ClusterRole
>   name: system:node-bootstrapper
>   apiGroup: rbac.authorization.k8s.io
> EOF

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# kubectl create -f csrs-for-bootstrapping.yaml

- auto-approve-csrs-for-group.yaml

cat > auto-approve-csrs-for-group.yaml <<EOF
# Approve all CSRs for the group "system:bootstrappers"
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
EOF

kubectl create -f auto-approve-csrs-for-group.yaml

- Verify

root@gunwoo-auth-basic:~/hardway/cert# kubectl get clusterrolebinding | grep csr

create-csrs-for-bootstrapping ClusterRole/system:node-bootstrapper 2m52s

root@gunwoo-auth-basic:~/hardway/cert# kubectl get secret -n kube-system | grep bootstrap

bootstrap-token-07401b bootstrap.kubernetes.io/token 7 5m42s

- auto-approve-renewals-for-nodes.yaml

root@gunwoo-auth-basic:~/hardway/cert# cat > auto-approve-renewals-for-nodes.yaml <<EOF
> # Approve renewal CSRs for the group "system:nodes"
> kind: ClusterRoleBinding
> apiVersion: rbac.authorization.k8s.io/v1
> metadata:
>   name: auto-approve-renewals-for-nodes
> subjects:
> - kind: Group
>   name: system:nodes
>   apiGroup: rbac.authorization.k8s.io
> roleRef:
>   kind: ClusterRole
>   name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
>   apiGroup: rbac.authorization.k8s.io
> EOF

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# kubectl create -f auto-approve-renewals-for-nodes.yaml

clusterrolebinding.rbac.authorization.k8s.io/auto-approve-renewals-for-nodes created
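The three ClusterRoleBinding files above differ only in the binding name, the group and the ClusterRole. The sketch below uses a hypothetical group_crb helper to render the same manifest shape from those three values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a ClusterRoleBinding that binds one Group to one ClusterRole,
# in the same shape as the three YAML files above.
group_crb() {
  local name="$1" group="$2" role="$3"
  cat <<EOF
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ${name}
subjects:
- kind: Group
  name: ${group}
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: ${role}
  apiGroup: rbac.authorization.k8s.io
EOF
}

# Usage (not run here): render all three bindings and apply them in one go.
# {
#   group_crb create-csrs-for-bootstrapping system:bootstrappers system:node-bootstrapper
#   echo '---'
#   group_crb auto-approve-csrs-for-group system:bootstrappers system:certificates.k8s.io:certificatesigningrequests:nodeclient
#   echo '---'
#   group_crb auto-approve-renewals-for-nodes system:nodes system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
# } | kubectl apply -f -
```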

root@gunwoo-auth-basic:~/hardway/cert#

worker 2

root@gunwoo-auth-basic:~/hardway/cert#

root@gunwoo-auth-basic:~/hardway/cert# for instance in 172.31.2.183; do

> scp -i gunwoo.pem ca.crt kube-proxy.kubeconfig ubuntu@${instance}:~/

> done

ca.crt 100% 1001 1.4MB/s 00:00

kube-proxy.kubeconfig 100% 5297 6.7MB/s 00:00

root@gunwoo-auth-basic:~/hardway/cert#

- Install Docker

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# {

> sudo apt-get update

> sudo apt-get install -y docker.io

> sudo swapoff -a

> }

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# {

> sudo systemctl daemon-reload

> sudo systemctl enable docker

> sudo systemctl restart docker

> }

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# sudo docker run --rm -it nicolaka/netshoot

Unable to find image 'nicolaka/netshoot:latest' locally

latest: Pulling from nicolaka/netshoot

2408cc74d12b: Pull complete

3cf03e9fd7e4: Pull complete

d3299a042ee2: Pull complete

e00dbf0b44c3: Pull complete

1cb6452f30ec: Pull complete

cc37e878581f: Pull complete

4f4fb700ef54: Pull complete

b01a5ec78db0: Pull complete

123e924da2d1: Pull complete

367cf0ba0067: Pull complete

5a3733011111: Pull complete

6adf3d98c1c3: Pull complete

77c79836780d: Pull complete

4aafb6d72b2b: Pull complete

Digest: sha256:aeafd567d7f7f1edb5127ec311599bb2b8a9c0fb31d7a53e9cff26af6d29fd4e

Status: Downloaded newer image for nicolaka/netshoot:latest

dP dP dP

88 88 88

88d888b. .d8888b. d8888P .d8888b. 88d888b. .d8888b. .d8888b. d8888P

88' `88 88ooood8 88 Y8ooooo. 88' `88 88' `88 88' `88 88

88 88 88. ... 88 88 88 88 88. .88 88. .88 88

dP dP `88888P' dP `88888P' dP dP `88888P' `88888P' dP

Welcome to Netshoot! (github.com/nicolaka/netshoot)

e3b01919af15 ~

root@gunwoo-k8s-hardway-worker2:~# wget -q --show-progress --https-only --timestamping \

> https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubectl \

> https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kube-proxy \

> https://storage.googleapis.com/kubernetes-release/release/v1.19.16/bin/linux/amd64/kubelet

kubectl 100%[=====================================================================================>] 40.96M 47.1MB/s in 0.9s

kube-proxy 100%[=====================================================================================>] 36.96M 43.4MB/s in 0.9s

kubelet 100%[=====================================================================================>] 105.01M 49.4MB/s in 2.1s

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# sudo mkdir -p /etc/cni/net.d /opt/cni/bin /var/lib/kubelet /var/lib/kube-proxy /etc/kubernetes/ssl /var/run/kubernetes

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# {

> chmod +x kubectl kube-proxy kubelet

> sudo mv kubectl kube-proxy kubelet /usr/local/bin/

> }

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# {

> sudo cp -rf /home/ubuntu/ca.crt /etc/kubernetes/ssl

> }

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# sudo mkdir -p /var/lib/kubelet/ssl

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# cat <<EOF | sudo tee /etc/kubernetes/bootstrap-kubeconfig
> apiVersion: v1
> kind: Config
> clusters:
> - cluster:
>     certificate-authority: /etc/kubernetes/ssl/ca.crt
>     server: https://172.31.1.65:6443
>   name: bootstrap
> contexts:
> - context:
>     cluster: bootstrap
>     user: kubelet-bootstrap
>   name: bootstrap
> current-context: bootstrap
> users:
> - name: kubelet-bootstrap
>   user:
>     token: 07401b.f395accd246ae52d
> preferences: {}
> EOF

For server, enter the IP address of master node 1.
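Above, the API server address and the token are typed into the heredoc by hand. The sketch below renders the same bootstrap kubeconfig from variables instead. render_bootstrap_kubeconfig and BOOTSTRAP_TOKEN are hypothetical names. KUBE_API_SERVER_ADDRESS is reused from the kubelet kubeconfig step on auth-basic. The token must match the bootstrap-token-07401b Secret.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the bootstrap kubeconfig shown above.
#   $1 = API server URL, $2 = bootstrap token, $3 = CA path (optional)
render_bootstrap_kubeconfig() {
  local server="$1" token="$2" ca="${3:-/etc/kubernetes/ssl/ca.crt}"
  cat <<EOF
apiVersion: v1
kind: Config
clusters:
- cluster:
    certificate-authority: ${ca}
    server: ${server}
  name: bootstrap
contexts:
- context:
    cluster: bootstrap
    user: kubelet-bootstrap
  name: bootstrap
current-context: bootstrap
users:
- name: kubelet-bootstrap
  user:
    token: ${token}
preferences: {}
EOF
}

# Usage on the worker (values from this walkthrough, not run here):
# KUBE_API_SERVER_ADDRESS=172.31.1.65
# BOOTSTRAP_TOKEN=07401b.f395accd246ae52d
# render_bootstrap_kubeconfig "https://${KUBE_API_SERVER_ADDRESS}:6443" "$BOOTSTRAP_TOKEN" \
#   | sudo tee /etc/kubernetes/bootstrap-kubeconfig
```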

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# cat << EOF | sudo tee /etc/kubernetes/kubelet-config.yaml
> kind: KubeletConfiguration
> apiVersion: kubelet.config.k8s.io/v1beta1
> authentication:
>   anonymous:
>     enabled: false
>   webhook:
>     enabled: true
>   x509:
>     clientCAFile: "/etc/kubernetes/ssl/ca.crt"
> authorization:
>   mode: Webhook
> clusterDomain: "cluster.local"
> clusterDNS:
>   - "10.231.0.10"
> resolvConf: "/run/systemd/resolve/resolv.conf"
> runtimeRequestTimeout: "15m"
> rotateCertificates: true
> EOF

root@gunwoo-k8s-hardway-worker2:~# ls /etc/kubernetes/

bootstrap-kubeconfig kubelet-config.yaml ssl

root@gunwoo-k8s-hardway-worker2:~# ls /etc/kubernetes/ssl/

ca.crt

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# cat <<EOF | sudo tee /etc/systemd/system/kubelet.service
> [Unit]
> Description=Kubernetes Kubelet
> Documentation=https://github.com/kubernetes/kubernetes
> After=docker.service
> Requires=docker.service
>
> [Service]
> ExecStart=/usr/local/bin/kubelet \\
>   --config=/etc/kubernetes/kubelet-config.yaml --image-pull-progress-deadline=2m \\
>   --network-plugin=cni --register-node=true --v=2 \\
>   --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubeconfig --kubeconfig=/var/lib/kubelet/kubeconfig --cert-dir=/var/lib/kubelet/ssl/
> Restart=on-failure
> RestartSec=5
>
> [Install]
> WantedBy=multi-user.target
> EOF

root@gunwoo-k8s-hardway-worker2:~# {

> sudo cp /home/ubuntu/kube-proxy.kubeconfig /etc/kubernetes

> }

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-k8s-hardway-worker2:~# {
> sudo apt-get install ipset -y
>
> cat <<EOF | sudo tee /etc/kubernetes/kube-proxy-config.yaml
> kind: KubeProxyConfiguration
> apiVersion: kubeproxy.config.k8s.io/v1alpha1
> clientConnection:
>   kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
> mode: "ipvs"
> clusterCIDR: "10.240.0.0/13"
> EOF
> }

root@gunwoo-k8s-hardway-worker2:~# cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service
> [Unit]
> Description=Kubernetes Kube Proxy
> Documentation=https://github.com/kubernetes/kubernetes
>
> [Service]
> ExecStart=/usr/local/bin/kube-proxy \\
>   --config=/etc/kubernetes/kube-proxy-config.yaml
> Restart=on-failure
> RestartSec=5
>
> [Install]
> WantedBy=multi-user.target
> EOF

- Start the systemd services

root@gunwoo-k8s-hardway-worker2:~# {

> sudo systemctl daemon-reload

> sudo systemctl enable kubelet kube-proxy

> sudo systemctl start kubelet kube-proxy

> }

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /etc/systemd/system/kubelet.service.

Created symlink /etc/systemd/system/multi-user.target.wants/kube-proxy.service → /etc/systemd/system/kube-proxy.service.

root@gunwoo-k8s-hardway-worker2:~#

root@gunwoo-auth-basic:~/hardway/cert# kubectl get node

NAME STATUS ROLES AGE VERSION

gunwoo-k8s-hardway-worker-1 Ready <none> 95m v1.19.16

gunwoo-k8s-hardway-worker2 Ready <none> 2m35s v1.19.16

worker2 has joined the cluster through TLS bootstrapping and is Ready.

For further study of networking: https://ssup2.github.io