Metrics-Server

- Install metrics-server

root@master:~# wget https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

--2022-11-01 09:39:29-- https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

Resolving github.com (github.com)... 20.200.245.247

Connecting to github.com (github.com)|20.200.245.247|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml [following]

--2022-11-01 09:39:29-- https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml

Reusing existing connection to github.com:443.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/92132038/d85e100a-2404-4c5e-b6a9-f3814ad4e6e5?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20221101%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20221101T003917Z&X-Amz-Expires=300&X-Amz-Signature=00b8cee6c8ac2502d3810826c0d76391d84bb68471931f3141c091e2ca77ef62&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream [following]

--2022-11-01 09:39:29-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/92132038/d85e100a-2404-4c5e-b6a9-f3814ad4e6e5?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20221101%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20221101T003917Z&X-Amz-Expires=300&X-Amz-Signature=00b8cee6c8ac2502d3810826c0d76391d84bb68471931f3141c091e2ca77ef62&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=92132038&response-content-disposition=attachment%3B%20filename%3Dcomponents.yaml&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.109.133, 185.199.108.133, 185.199.111.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.109.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4181 (4.1K) [application/octet-stream]

Saving to: ‘components.yaml’

components.yaml 100%[==================================================================================================================>] 4.08K --.-KB/s in 0s

2022-11-01 09:39:29 (24.1 MB/s) - ‘components.yaml’ saved [4181/4181]

root@master:~# vi components.yaml

      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s

Add --kubelet-insecure-tls to the args (it tells metrics-server not to verify the kubelets' serving certificates, so it is only appropriate for test or lab clusters).
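Instead of editing the manifest by hand in vi, the same change can be scripted. A minimal sketch, assuming the downloaded components.yaml has a "- --cert-dir=..." entry in its args list as shown above; add_kubelet_insecure_tls is a hypothetical helper name, not part of metrics-server.

```shell
#!/usr/bin/env bash
# Hypothetical helper: insert "- --kubelet-insecure-tls" into a metrics-server
# manifest right after the "- --cert-dir=..." argument, reusing that line's
# indentation. Running it twice does not add the flag a second time.
add_kubelet_insecure_tls() {
  local file="$1" tmp
  # Flag already present: nothing to do.
  if grep -q -e '--kubelet-insecure-tls' "$file"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  awk '
    { print }
    /- --cert-dir=/ {
      match($0, /^[ \t]*/)            # leading whitespace of this line
      print substr($0, 1, RLENGTH) "- --kubelet-insecure-tls"
    }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Overwrite in place (keeps the original file permissions), then clean up.
  cat "$tmp" > "$file"
  rm -f "$tmp"
  # Non-zero status if no --cert-dir line was found to anchor the insert.
  grep -q -e '--kubelet-insecure-tls' "$file"
}
```

Run it as add_kubelet_insecure_tls components.yaml before kubectl apply. For a metrics-server that is already deployed, a JSON patch such as kubectl -n kube-system patch deployment metrics-server --type=json -p '[{"op":"add","path":"/spec/template/spec/containers/0/args/-","value":"--kubelet-insecure-tls"}]' should have the same effect, assuming metrics-server is the first container in the pod template.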

- Deploy

root@master:~# kubectl apply -f components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

- Verify the deployment

root@master:~# kubectl get secrets -n kube-system | grep metric

metrics-server-token-pdn55 kubernetes.io/service-account-token 3 110s

Token Secret name: metrics-server-token-pdn55
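The metrics-server-token-pdn55 Secret holds a service-account token, which is a JWT: three base64url segments joined by dots. The Secret stores it base64-encoded once more, which is why the steps below pipe it through base64 -d. The middle segment is the JSON claims, so it is easy to check which service account a token belongs to. A minimal sketch; jwt_payload is a hypothetical name. Note that on Kubernetes 1.24 and later such token Secrets are no longer generated automatically; there, kubectl create token metrics-server -n kube-system issues a short-lived token instead.

```shell
#!/usr/bin/env bash
# Print the JSON payload (claims) of a JWT such as a Kubernetes
# service-account token. The payload is the second "."-separated segment,
# encoded as base64url without "=" padding.
jwt_payload() {
  local seg
  # base64url -> standard base64 alphabet ("_" -> "/", "-" -> "+").
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # Restore the padding that base64url drops.
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Once TKN is set as in the steps below, jwt_payload "$TKN" prints the claims; for this token they end with "sub":"system:serviceaccount:kube-system:metrics-server".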

- Check the token value

root@master:~# kubectl get secrets metrics-server-token-pdn55 -n kube-system -o jsonpath={.data.token}

ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklteG1jMEZ5UkZoS1RYVXdSa010UkVwYU5HVmtXR2RCVVhCNk5EaGZVR1JOUjNWUmVubFlURUZmYjBVaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUpyZFdKbExYTjVjM1JsYlNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZqY21WMExtNWhiV1VpT2lKdFpYUnlhV056TFhObGNuWmxjaTEwYjJ0bGJpMXdaRzQxTlNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZ5ZG1salpTMWhZMk52ZFc1MExtNWhiV1VpT2lKdFpYUnlhV056TFhObGNuWmxjaUlzSW10MVltVnlibVYwWlhNdWFXOHZjMlZ5ZG1salpXRmpZMjkxYm5RdmMyVnlkbWxqWlMxaFkyTnZkVzUwTG5WcFpDSTZJak14Tm1abU5tUXlMVGhsTkRVdE5EUTJNUzA1WkdaaExXUmtZV1ZsTmpOaU1XVTVNaUlzSW5OMVlpSTZJbk41YzNSbGJUcHpaWEoyYVdObFlXTmpiM1Z1ZERwcmRXSmxMWE41YzNSbGJUcHRaWFJ5YVdOekxYTmxjblpsY2lKOS5mdWlEN0w3ajVMcTVNQ2R6cTMweW91N09KNW9rTU9nN292ZG9wWFhjWGxYdVdiTkhWVzVxNVVKbkIwT3Z0YTNpMGFjQ1NYN0tjc19BY00xWUJ0V0ZoWXZ1cEVfUThVdnpJUjdHQWhuOXJyV0s5aTFVN2lHUDlBV3gwcVVCUHU2dmFrSjF4bzFpUzBQckdwWXNIY2MtQWp1bEZzMGp6MEJGN2NDTHdUM3l5MmJONWJ1RE9Wb3RIX2FEZW85Z2gxZ0xRV0NjQmE1Y1FwWUs2dy1sUlE1aEw3WF9Xb1dTWVFvYml6aGtmOGpEZGJDNlBGcC12SG1naVpqdDJQZzIwR3p6ZTkwMmdlU0tXZWM3Uy1QZWEwNk12Ym0tZFNCRV9ramtqZy1xRFZhZ1hXX21rN1NvZVl1RWE0NUo1QzN1UW5uVlZXTGM4d090RjFEaVpRa1dJTXVpT1E=

- Decode it with base64 -d

root@master:~# kubectl get secrets metrics-server-token-pdn55 -n kube-system -o jsonpath={.data.token} | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Imxmc0FyRFhKTXUwRkMtREpaNGVkWGdBUXB6NDhfUGRNR3VRenlYTEFfb0UifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJtZXRyaWNzLXNlcnZlci10b2tlbi1wZG41NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJtZXRyaWNzLXNlcnZlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjMxNmZmNmQyLThlNDUtNDQ2MS05ZGZhLWRkYWVlNjNiMWU5MiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTptZXRyaWNzLXNlcnZlciJ9.fuiD7L7j5Lq5MCdzq30you7OJ5okMOg7ovdopXXcXlXuWbNHVW5q5UJnB0Ovta3i0acCSX7Kcs_AcM1YBtWFhYvupE_Q8UvzIR7GAhn9rrWK9i1U7iGP9AWx0qUBPu6vakJ1xo1iS0PrGpYsHcc-AjulFs0jz0BF7cCLwT3yy2bN5buDOVotH_aDeo9gh1gLQWCcBa5cQpYK6w-lRQ5hL7X_WoWSYQobizhkf8jDdbC6PFp-vHmgiZjt2Pg20Gzze902geSKWec7S-Pea06Mvbm-dSBE_kjkjg-qDVagXW_mk7SoeYuEa45J5C3uQnnVVWLc8wOtF1DiZQkWIMuiOQ

- Save it to a variable

root@master:~# TKN=$(kubectl get secrets metrics-server-token-pdn55 -n kube-system -o jsonpath={.data.token} | base64 -d)

- Access the kubelet API with the token

root@master:~# curl -Ssk --header "Authorization: Bearer ${TKN}" https://192.168.8.100:10250/metrics/cadvisor

...

# HELP machine_nvm_avg_power_budget_watts NVM power budget.

# TYPE machine_nvm_avg_power_budget_watts gauge

machine_nvm_avg_power_budget_watts{boot_id="1d10544f-c81f-4e28-a4b1-a4a3cb02e02c",machine_id="4fd9e3209828436888f0cb339de09edf",system_uuid="90394d56-6661-789e-16af-928e53cacb31"} 0

# HELP machine_nvm_capacity NVM capacity value labeled by NVM mode (memory mode or app direct mode).

# TYPE machine_nvm_capacity gauge

machine_nvm_capacity{boot_id="1d10544f-c81f-4e28-a4b1-a4a3cb02e02c",machine_id="4fd9e3209828436888f0cb339de09edf",mode="app_direct_mode",system_uuid="90394d56-6661-789e-16af-928e53cacb31"} 0

machine_nvm_capacity{boot_id="1d10544f-c81f-4e28-a4b1-a4a3cb02e02c",machine_id="4fd9e3209828436888f0cb339de09edf",mode="memory_mode",system_uuid="90394d56-6661-789e-16af-928e53cacb31"} 0

# HELP machine_scrape_error 1 if there was an error while getting machine metrics, 0 otherwise.

# TYPE machine_scrape_error gauge

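machine_scrape_error 0

-s: silent mode (no progress meter)

-S: still show error messages when -s is set

-k: insecure connection (do not verify the server's TLS certificate)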
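The kubelet endpoint returns Prometheus text exposition format: # HELP and # TYPE comment lines, then one sample per line in the form name{labels} value, optionally followed by a timestamp. A minimal sketch for pulling out the values of a single metric; metric_value is a hypothetical helper, and it assumes label values do not contain a closing brace.

```shell
#!/usr/bin/env bash
# Print the sample value(s) of one metric from Prometheus text format on stdin.
# Handles samples with or without a {label="..."} set and ignores the
# optional trailing timestamp.
metric_value() {
  awk -v want="$1" '
    /^#/ { next }                      # skip HELP / TYPE comment lines
    {
      pos = match($0, /[{ \t]/)        # metric name ends at "{" or whitespace
      if (pos == 0 || substr($0, 1, pos - 1) != want) next
      rest = substr($0, pos)
      sub(/^[{].*[}]/, "", rest)       # drop the label set, up to the last "}"
      split(rest, f)                   # f[1] = value, f[2] = optional timestamp
      print f[1]
    }
  '
}
```

For example: curl -Ssk --header "Authorization: Bearer ${TKN}" https://192.168.8.100:10250/metrics/cadvisor | metric_value machine_scrape_error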

- prometheus-server-preconfig.sh

root@master:~/lab2/cicd_samplecode# vi prometheus-server-preconfig.sh

#!/usr/bin/env bash

# check helm command

echo "[Step 1/4] Task [Check helm status]"

if [ ! -e "/usr/local/bin/helm" ]; then

echo "[Step 1/4] helm not found"

exit 1

fi

echo "[Step 1/4] ok"

# check metallb

echo "[Step 2/4] Task [Check MetalLB status]"

namespace=$(kubectl get namespace metallb-system -o jsonpath={.metadata.name} 2> /dev/null)

if [ "$namespace" == "" ]; then

echo "[Step 2/4] metallb not found"

exit 1

fi

echo "[Step 2/4] ok"

# create nfs directory & change owner

nfsdir=/nfs_shared/prometheus/server

echo "[Step 3/4] Task [Create NFS directory for prometheus-server]"

if [ ! -e "$nfsdir" ]; then

./nfs-exporter.sh prometheus/server

chown 1000:1000 "$nfsdir"

echo "$nfsdir created"

echo "[Step 3/4] Successfully completed"

else

echo "[Step 3/4] failed: $nfsdir already exists"

exit 1

fi

# create pv,pvc

echo "[Step 4/4] Task [Create PV,PVC for prometheus-server]"

pvc=$(kubectl get pvc prometheus-server -o jsonpath={.metadata.name} 2> /dev/null)

if [ "$pvc" == "" ]; then

kubectl apply -f prometheus-server-volume.yaml

echo "[Step 4/4] Successfully completed"

else

echo "[Step 4/4] failed: prometheus-server pv,pvc already exist"

exit 1

fi

- prometheus-server-volume.yaml

root@master:~/lab2/cicd_samplecode# vi prometheus-server-volume.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-server
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.8.100
    path: /nfs_shared/prometheus/server

---

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus-server
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

- Deploy

root@master:~/lab2/cicd_samplecode# ./prometheus-server-preconfig.sh

[Step 1/4] Task [Check helm status]

[Step 1/4] ok

[Step 2/4] Task [Check MetalLB status]

[Step 2/4] ok

[Step 3/4] Task [Create NFS directory for prometheus-server]

Failed to get unit file state for nfs.service: No such file or directory

/nfs_shared/prometheus/server created

[Step 3/4] Successfully completed

[Step 4/4] Task [Create PV,PVC for prometheus-server]

persistentvolume/prometheus-server created

persistentvolumeclaim/prometheus-server created

[Step 4/4] Successfully completed

root@master:~/lab2/cicd_samplecode# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/jenkins 5Gi RWX Retain Bound default/jenkins 4d18h

persistentvolume/prometheus-server 5Gi RWX Retain Bound default/prometheus-server 54s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/jenkins Bound jenkins 5Gi RWX 4d18h

persistentvolumeclaim/prometheus-server Bound prometheus-server 5Gi RWX 54s

- prometheus-install.sh

root@master:~/lab2/cicd_samplecode# vi prometheus-install.sh

#!/usr/bin/env bash
# Quote the keys that contain [0] so the shell cannot treat them as glob patterns.
helm install prometheus edu/prometheus \
  --set pushgateway.enabled=false \
  --set alertmanager.enabled=false \
  --set 'nodeExporter.tolerations[0].key=node-role.kubernetes.io/master' \
  --set 'nodeExporter.tolerations[0].effect=NoSchedule' \
  --set 'nodeExporter.tolerations[0].operator=Exists' \
  --set server.persistentVolume.existingClaim="prometheus-server" \
  --set server.securityContext.runAsGroup=1000 \
  --set server.securityContext.runAsUser=1000 \
  --set server.service.type="LoadBalancer" \
  --set 'server.extraFlags[0]=storage.tsdb.no-lockfile'

- Install Prometheus

root@master:~/lab2/cicd_samplecode# ./prometheus-install.sh

NAME: prometheus

LAST DEPLOYED: Tue Nov 1 10:37:32 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The Prometheus server can be accessed via port 80 on the following DNS name from within your cluster:

prometheus-server.default.svc.cluster.local

Get the Prometheus server URL by running these commands in the same shell:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc --namespace default -w prometheus-server'

export SERVICE_IP=$(kubectl get svc --namespace default prometheus-server -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

echo http://$SERVICE_IP:80

#################################################################################

###### WARNING: Pod Security Policy has been moved to a global property. #####

###### use .Values.podSecurityPolicy.enabled with pod-based #####

###### annotations #####

###### (e.g. .Values.nodeExporter.podSecurityPolicy.annotations) #####

#################################################################################

For more information on running Prometheus, visit:

https://prometheus.io/

- Verify the deployment

root@master:~/lab2/cicd_samplecode# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

jenkins LoadBalancer 10.101.100.100 192.168.8.201 80:30782/TCP 4d18h

jenkins-agent ClusterIP 10.106.40.101 <none> 50000/TCP 4d18h

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5d

prometheus-kube-state-metrics ClusterIP 10.101.169.195 <none> 8080/TCP 64s

prometheus-node-exporter ClusterIP None <none> 9100/TCP 64s

prometheus-server LoadBalancer 10.109.75.161 192.168.8.203 80:30710/TCP 64s

testweb-svc LoadBalancer 10.98.39.107 192.168.8.202 80:31059/TCP 3d18h

Prometheus is reachable at http://192.168.8.203 (the EXTERNAL-IP of the prometheus-server Service).

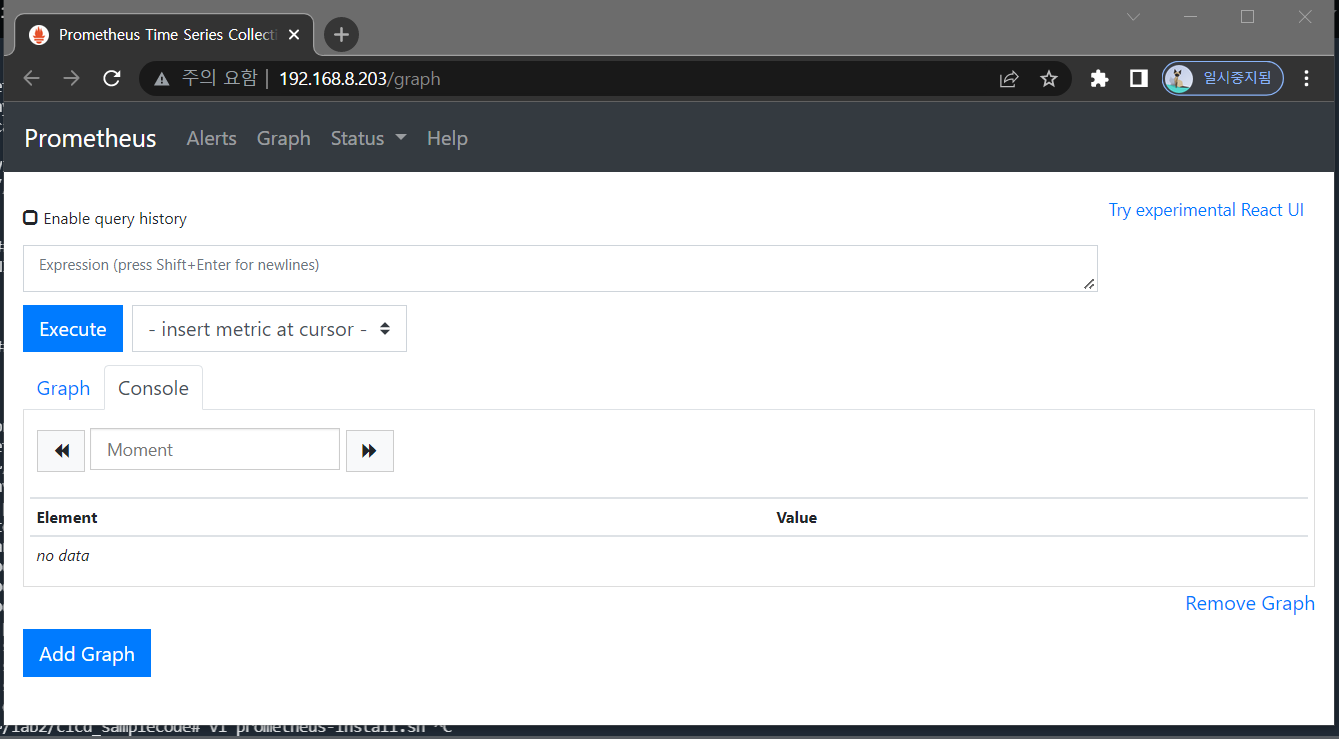

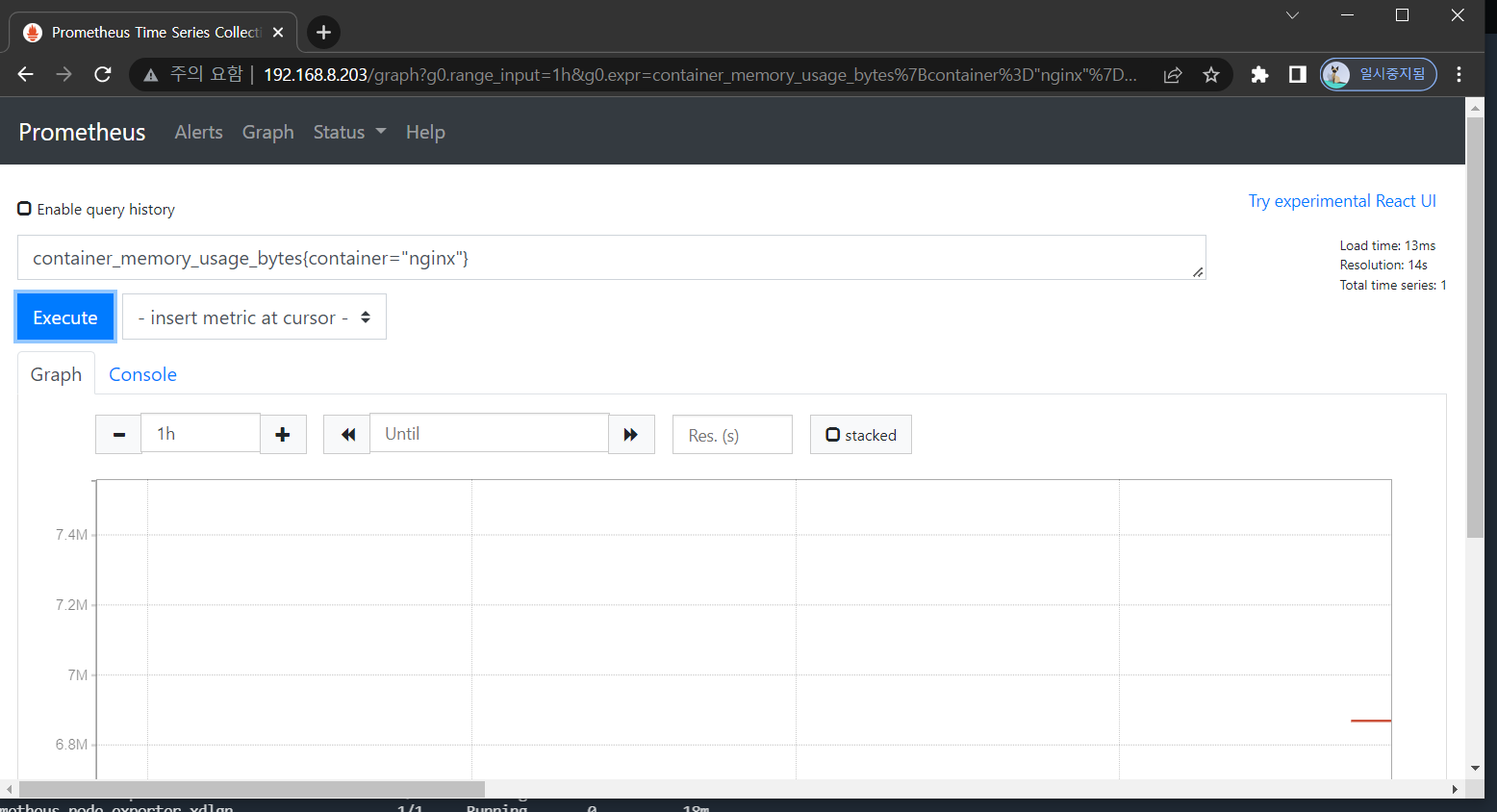
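The Service list reflects the --set flags in prometheus-install.sh: no alertmanager or pushgateway Service was created, and prometheus-server got a LoadBalancer address. The same settings can be kept in a Helm values file, where the nested keys are easier to read than dotted --set keys (each dotted key becomes a nested mapping, and [0] becomes the first list item). A sketch; prometheus_values and prometheus-values.yaml are hypothetical names, and the YAML only restates the flags from prometheus-install.sh.

```shell
#!/usr/bin/env bash
# Print a values file equivalent to the --set flags in prometheus-install.sh.
prometheus_values() {
  cat <<'EOF'
pushgateway:
  enabled: false
alertmanager:
  enabled: false
nodeExporter:
  tolerations:
    - key: node-role.kubernetes.io/master
      effect: NoSchedule
      operator: Exists
server:
  persistentVolume:
    existingClaim: prometheus-server
  securityContext:
    runAsGroup: 1000
    runAsUser: 1000
  service:
    type: LoadBalancer
  extraFlags:
    - storage.tsdb.no-lockfile
EOF
}
```

With prometheus_values > prometheus-values.yaml, running helm install prometheus edu/prometheus -f prometheus-values.yaml should be equivalent to prometheus-install.sh.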

- Access Prometheus

- Verify that metric data is being written to the NFS volume

root@master:~/lab2/cicd_samplecode# ls /nfs_shared/prometheus/server/

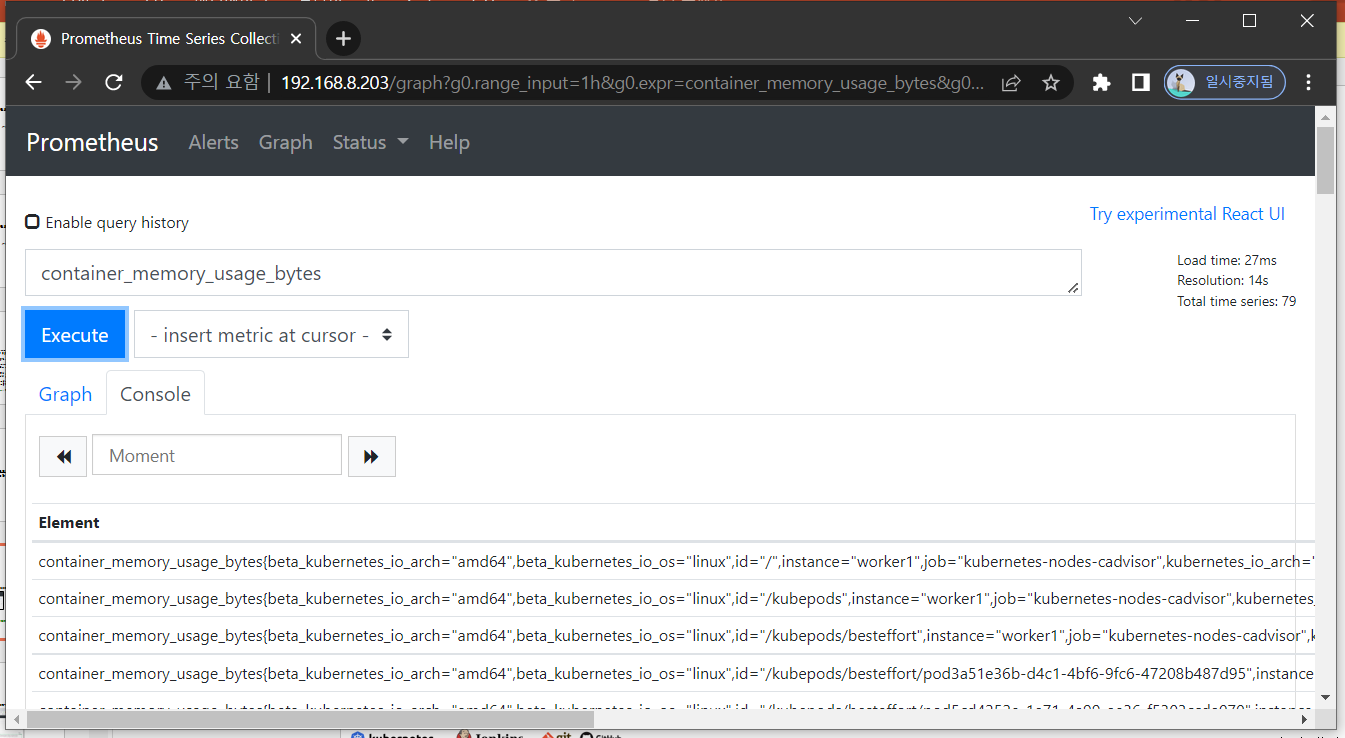

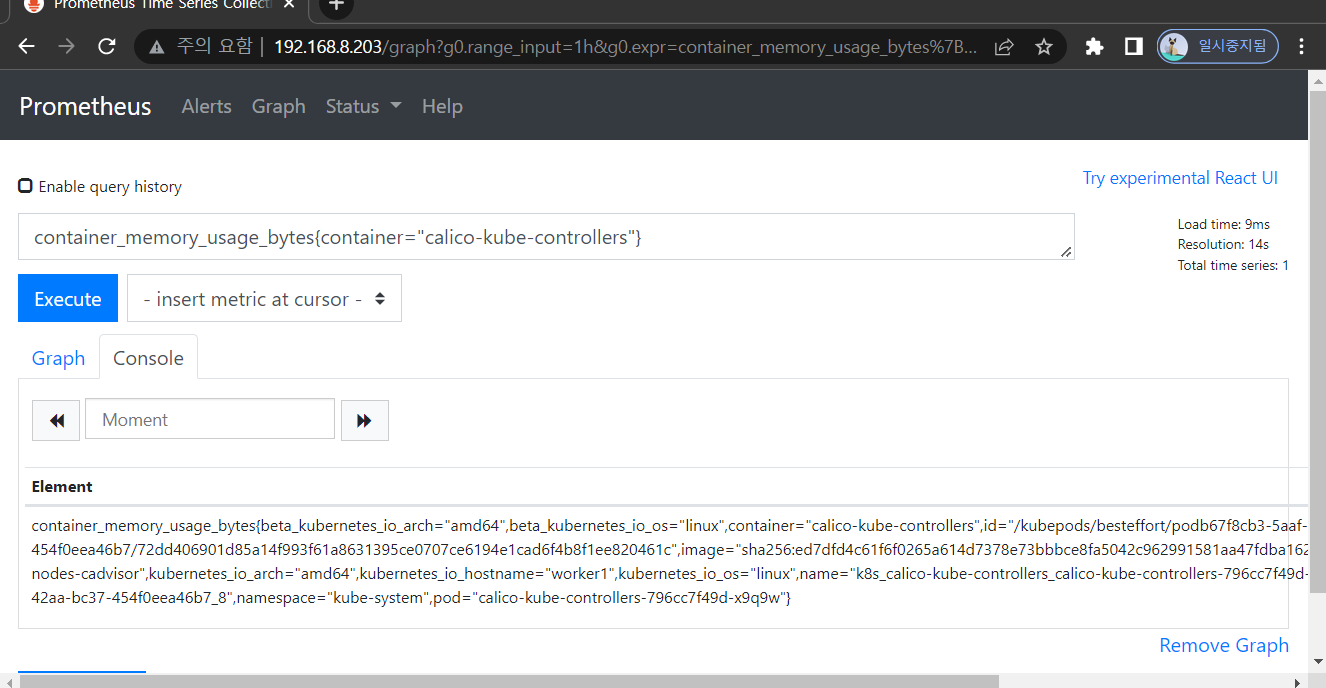

chunks_head queries.active wal

- Query metrics

- Deploy nginx

root@master:~/lab2/cicd_samplecode# kubectl create deploy nginx --image=nginx

- Check the nginx container's metrics

- Delete the nginx deployment

root@master:~/lab2/cicd_samplecode# kubectl delete deploy nginx

deployment.apps "nginx" deleted

- Check the nginx container's metrics again 10 minutes later

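- Container-level information (kubelet, via cAdvisor)

- Node-level information (node-exporter)

- Cluster-level information (kube-state-metrics, which gets object state from the API server, which in turn reads it from etcd)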
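Metric names usually show which of these sources they came from: cAdvisor series (served by the kubelet) start with container_, with host-level series starting with machine_ as in the curl output above; node-exporter series start with node_; kube-state-metrics series start with kube_. A rough classifier sketch; metric_source is a hypothetical name, and the prefixes are a naming convention, not a guarantee.

```shell
#!/usr/bin/env bash
# Guess which exporter produced a metric from its name prefix (rule of thumb).
metric_source() {
  case "$1" in
    container_*|machine_*) echo "kubelet (cAdvisor)" ;;
    kubelet_*)             echo "kubelet" ;;
    node_*)                echo "node-exporter" ;;
    kube_*)                echo "kube-state-metrics" ;;  # "kubelet_" does not match "kube_"
    *)                     echo "unknown" ;;
  esac
}
```

To try it on real names, the Prometheus HTTP API lists every metric name it has stored at /api/v1/label/__name__/values, e.g. curl -s http://192.168.8.203/api/v1/label/__name__/values.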

- grafana-preconfig.sh

root@master:~/lab2/cicd_samplecode# vi grafana-preconfig.sh

#!/usr/bin/env bash

# check helm command

echo "[Step 1/4] Task [Check helm status]"

if [ ! -e "/usr/local/bin/helm" ]; then

echo "[Step 1/4] helm not found"

exit 1

fi

echo "[Step 1/4] ok"

# check metallb

echo "[Step 2/4] Task [Check MetalLB status]"

namespace=$(kubectl get namespace metallb-system -o jsonpath={.metadata.name} 2> /dev/null)

if [ "$namespace" == "" ]; then

echo "[Step 2/4] metallb not found"

exit 1

fi

echo "[Step 2/4] ok"

# create nfs directory & change owner

nfsdir=/nfs_shared/grafana

echo "[Step 3/4] Task [Create NFS directory for grafana]"

if [ ! -e "$nfsdir" ]; then

./nfs-exporter.sh grafana

chown 1000:1000 "$nfsdir"

ls /nfs_shared

echo "$nfsdir created"

echo "[Step 3/4] Successfully completed"

else

echo "[Step 3/4] failed: $nfsdir already exists"

exit 1

fi

# create pv,pvc

echo "[Step 4/4] Task [Create PV,PVC for grafana]"

pvc=$(kubectl get pvc grafana -o jsonpath={.metadata.name} 2> /dev/null)

if [ "$pvc" == "" ]; then

kubectl apply -f grafana-volume.yaml

echo "[Step 4/4] Successfully completed"

kubectl get pv,pvc

else

echo "[Step 4/4] failed: grafana pv,pvc already exist"

exit 1

fi

- Run the script

root@master:~/lab2/cicd_samplecode# ./grafana-preconfig.sh

[Step 1/4] Task [Check helm status]

[Step 1/4] ok

[Step 2/4] Task [Check MetalLB status]

[Step 2/4] ok

[Step 3/4] Task [Create NFS directory for grafana]

Failed to get unit file state for nfs.service: No such file or directory

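ls: cannot access '/nfs-shared': No such file or directory

(This ls error comes from the /nfs-shared typo in grafana-preconfig.sh; the NFS directory is /nfs_shared, and the rest of the step still succeeded.)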

/nfs_shared/grafana created

[Step 3/4] Successfully completed

[Step 4/4] Task [Create PV,PVC for grafana]

persistentvolume/grafana created

persistentvolumeclaim/grafana created

[Step 4/4] Successfully completed

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/grafana 5Gi RWX Retain Available 1s

persistentvolume/jenkins 5Gi RWX Retain Bound default/jenkins 4d18h

persistentvolume/prometheus-server 5Gi RWX Retain Bound default/prometheus-server 48m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana Pending 1s

persistentvolumeclaim/jenkins Bound jenkins 5Gi RWX 4d18h

persistentvolumeclaim/prometheus-server Bound prometheus-server 5Gi RWX 48m

The grafana PVC is still Pending.
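PVC binding happens asynchronously, so a claim can show Pending for a short while right after kubectl apply. Rather than re-running kubectl get by hand, the phase can be polled. A minimal sketch; wait_for_output is a hypothetical helper that runs any command until its output matches the wanted value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command repeatedly until its output equals WANT.
# Usage: wait_for_output WANT TIMEOUT_SECONDS INTERVAL_SECONDS CMD [ARGS...]
# Returns 0 once the output matches, 1 if TIMEOUT_SECONDS pass first.
wait_for_output() {
  local want="$1" timeout="$2" interval="$3" got deadline
  shift 3
  deadline=$(( $(date +%s) + timeout ))
  while true; do
    got=$("$@" 2>/dev/null || true)   # a failing command just means "not yet"
    if [ "$got" = "$want" ]; then
      return 0
    fi
    if [ "$(date +%s)" -ge "$deadline" ]; then
      echo "timed out; last output: '$got'" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

For example, wait_for_output Bound 60 2 kubectl get pvc grafana -o jsonpath='{.status.phase}' waits up to about 60 seconds, checking every 2 seconds.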

- Check again a moment later

root@master:~/lab2/cicd_samplecode# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/grafana 5Gi RWX Retain Bound default/grafana 42s

persistentvolume/jenkins 5Gi RWX Retain Bound default/jenkins 4d18h

persistentvolume/prometheus-server 5Gi RWX Retain Bound default/prometheus-server 48m

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana Bound grafana 5Gi RWX 42s

persistentvolumeclaim/jenkins Bound jenkins 5Gi RWX 4d18h

persistentvolumeclaim/prometheus-server Bound prometheus-server 5Gi RWX 48m

The grafana PVC is now Bound.
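grafana-volume.yaml itself is not shown in these notes. Judging by the output (a 5Gi, RWX, Retain PV named grafana), it presumably mirrors prometheus-server-volume.yaml with a different name and NFS path. Under that assumption, both manifests can come from one generator function. A sketch; nfs_pv_pvc is hypothetical, and its defaults (5Gi, NFS server 192.168.8.100) are taken from prometheus-server-volume.yaml.

```shell
#!/usr/bin/env bash
# Print a PersistentVolume + PersistentVolumeClaim pair backed by an NFS export,
# using the same layout as prometheus-server-volume.yaml.
# Usage: nfs_pv_pvc NAME NFS_PATH [SIZE] [NFS_SERVER]
nfs_pv_pvc() {
  local name="$1" nfs_path="$2" size="${3:-5Gi}" server="${4:-192.168.8.100}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: ${server}
    path: ${nfs_path}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}
```

nfs_pv_pvc grafana /nfs_shared/grafana | kubectl apply -f - would then stand in for kubectl apply -f grafana-volume.yaml.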

- grafana-install.sh

root@master:~/lab2/cicd_samplecode# vi grafana-install.sh

root@master:~/lab2/cicd_samplecode# cat grafana-install.sh

#!/usr/bin/env bash
helm install grafana edu/grafana \
  --set persistence.enabled=true \
  --set persistence.existingClaim=grafana \
  --set service.type=LoadBalancer \
  --set securityContext.runAsUser=1000 \
  --set securityContext.runAsGroup=1000 \
  --set adminPassword="admin"

- Install Grafana

root@master:~/lab2/cicd_samplecode# ./grafana-install.sh

NAME: grafana

LAST DEPLOYED: Tue Nov 1 11:24:11 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get your 'admin' user password by running:

kubectl get secret --namespace default grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

2. The Grafana server can be accessed via port 80 on the following DNS name from within your cluster:

grafana.default.svc.cluster.local

Get the Grafana URL to visit by running these commands in the same shell:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc --namespace default -w grafana'

export SERVICE_IP=$(kubectl get svc --namespace default grafana -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

http://$SERVICE_IP:80

3. Login with the password from step 1 and the username: admin

- Verify the Grafana deployment

root@master:~/lab2/cicd_samplecode# kubectl get svc | grep grafana

grafana LoadBalancer 10.98.136.118 192.168.8.204 80:32011/TCP 6m49s

Grafana is reachable at http://192.168.8.204 (the EXTERNAL-IP of the grafana Service).

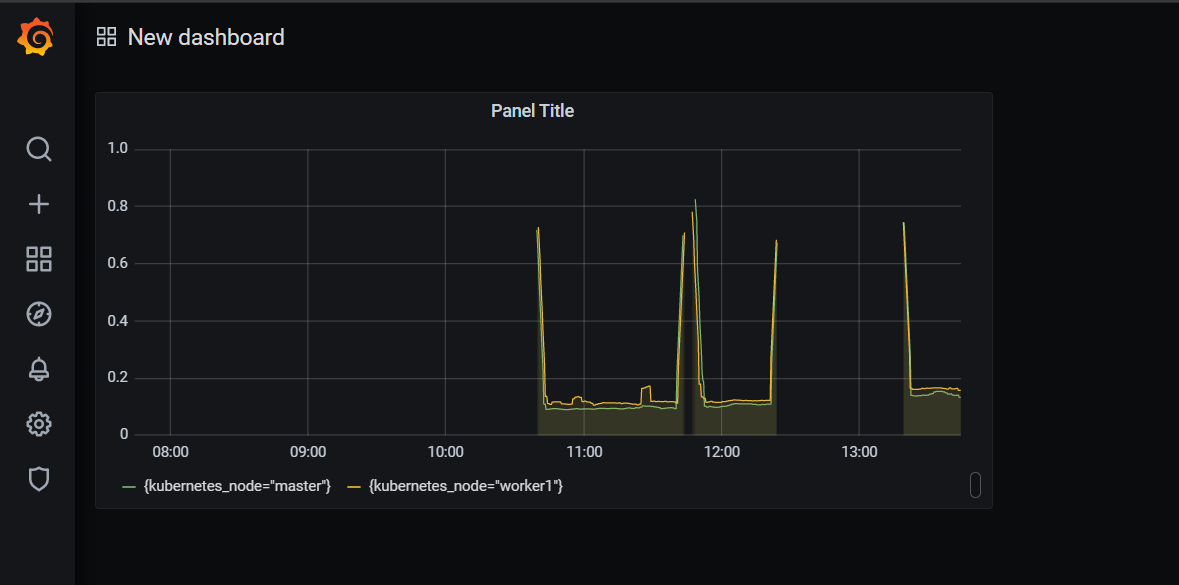

- Access Grafana

The initial username and password are both admin (the password is set by adminPassword in grafana-install.sh).

-

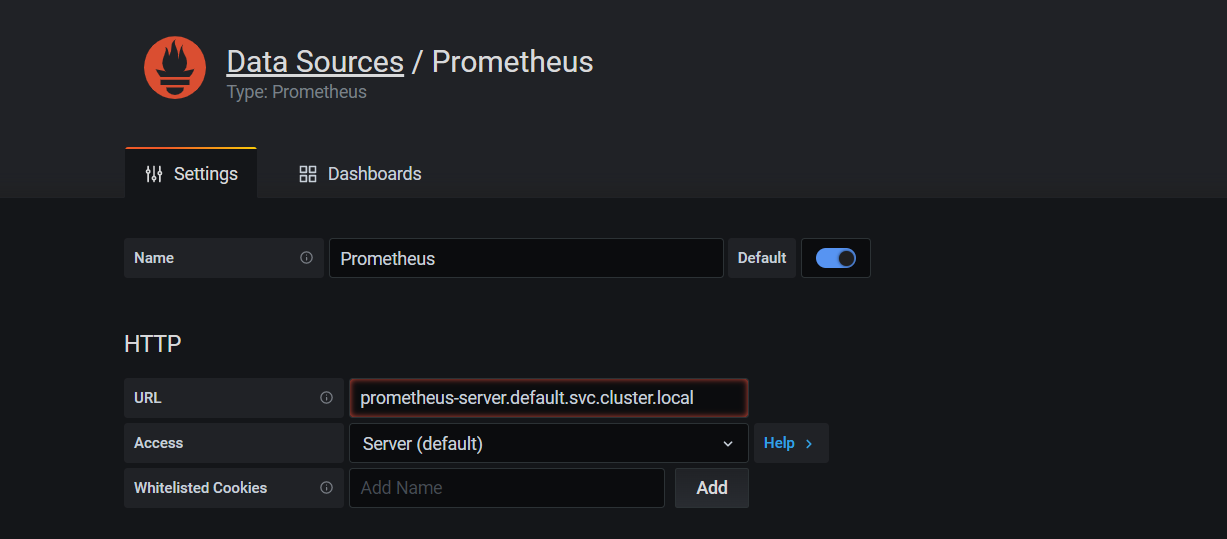

datasource 추가

prometheus-server.default.svc.cluster.local
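The same data source can also be created through Grafana's HTTP API (POST /api/datasources) instead of the UI. With "access": "proxy", the Grafana server itself sends the queries, which is why an in-cluster DNS name works as the URL. A sketch assuming the admin/admin credentials from grafana-install.sh and the LoadBalancer address 192.168.8.204; the function names and the data source name Prometheus are hypothetical choices, and the JSON builder does no escaping.

```shell
#!/usr/bin/env bash
# Build the JSON body for a Prometheus data source (no escaping of NAME/URL).
prom_datasource_json() {
  printf '{"name":"%s","type":"prometheus","url":"%s","access":"proxy","isDefault":true}\n' \
    "$1" "$2"
}

# POST the data source to Grafana's HTTP API with basic auth.
# Usage: create_prom_datasource GRAFANA_URL USER:PASSWORD NAME PROMETHEUS_URL
create_prom_datasource() {
  curl -sS -u "$2" -H 'Content-Type: application/json' \
    -d "$(prom_datasource_json "$3" "$4")" \
    "$1/api/datasources"
}
```

For this cluster that would be create_prom_datasource http://192.168.8.204 admin:admin Prometheus http://prometheus-server.default.svc.cluster.local.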

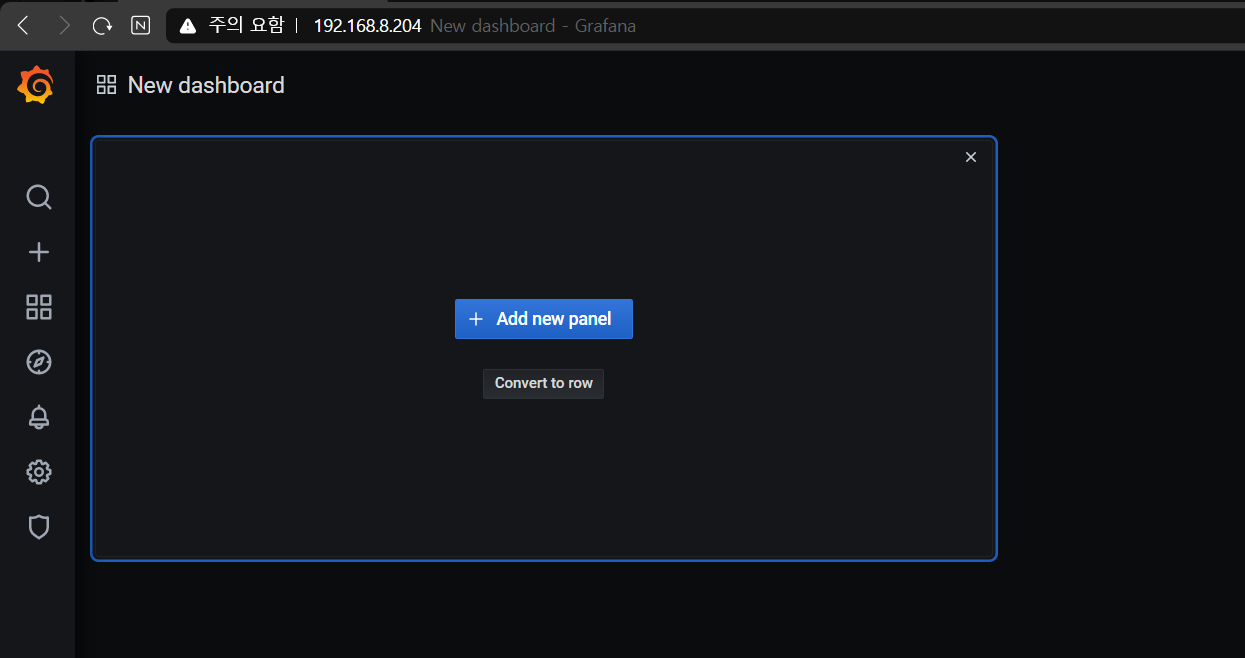

- Add a new panel

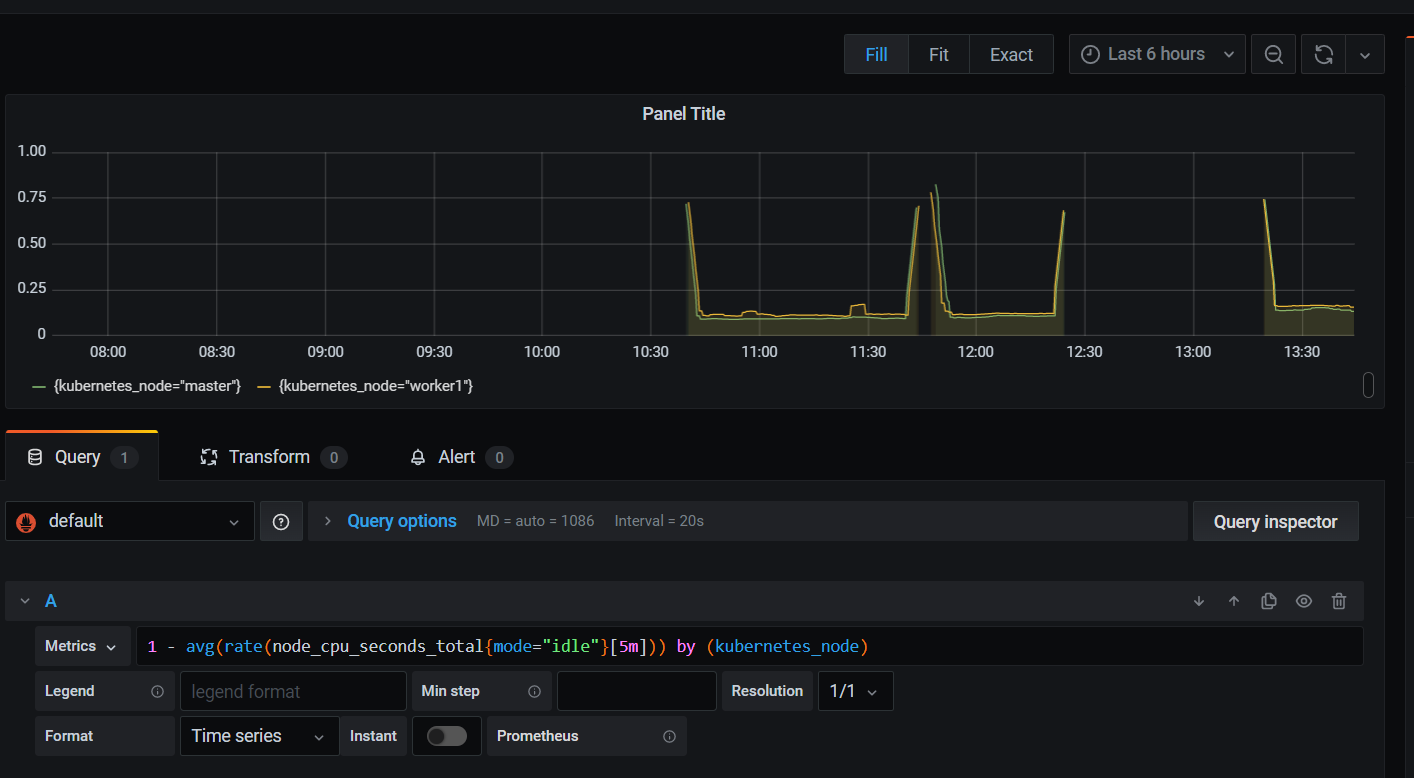

- Add a query

1 - avg(rate(node_cpu_seconds_total{mode="idle"}[5m])) by (kubernetes_node)
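How the query works: rate(node_cpu_seconds_total{mode="idle"}[5m]) gives, for each CPU core, the fraction of each second spent idle over the last 5 minutes; avg(...) by (kubernetes_node) averages that across each node's cores; 1 minus the result is the fraction of time the node's CPUs were busy. The arithmetic, sketched with made-up counter increases (the real rate() also handles counter resets and extrapolates to the window edges, which this ignores); cpu_utilization is a hypothetical name.

```shell
#!/usr/bin/env bash
# cpu_utilization WINDOW_SECONDS IDLE_DELTA [IDLE_DELTA...]
# Each IDLE_DELTA is how much one core's idle-seconds counter grew during the
# window. Per-core idle rate = delta / window; utilization = 1 - mean(rate).
cpu_utilization() {
  local window="$1"
  shift
  printf '%s\n' "$@" | LC_ALL=C awk -v w="$window" '
    { sum += $1 / w; n++ }
    END { if (n > 0) printf "%.4f\n", 1 - sum / n }
  '
}
```

cpu_utilization 300 240 270 models two cores that were idle for 240 s and 270 s of a 300 s window: idle fractions 0.8 and 0.9, mean 0.85, so it prints 0.1500 (15% busy).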

- Check the dashboard