Using Volumes

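- hostPath, local: hard to keep data permanently, because the data stays on a single node

- Sharing storage between pods and containers -> one pod can be dedicated to storage, and pods on other hosts can reach it through the cluster.

- So the approach to choose is to keep the storage outside the cluster and attach it.

- file storage: server -> NFS (shared storage)

- block storage: server -> iSCSI (volume)

- object storage: provides a fixed amount of space per user (not suitable here)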

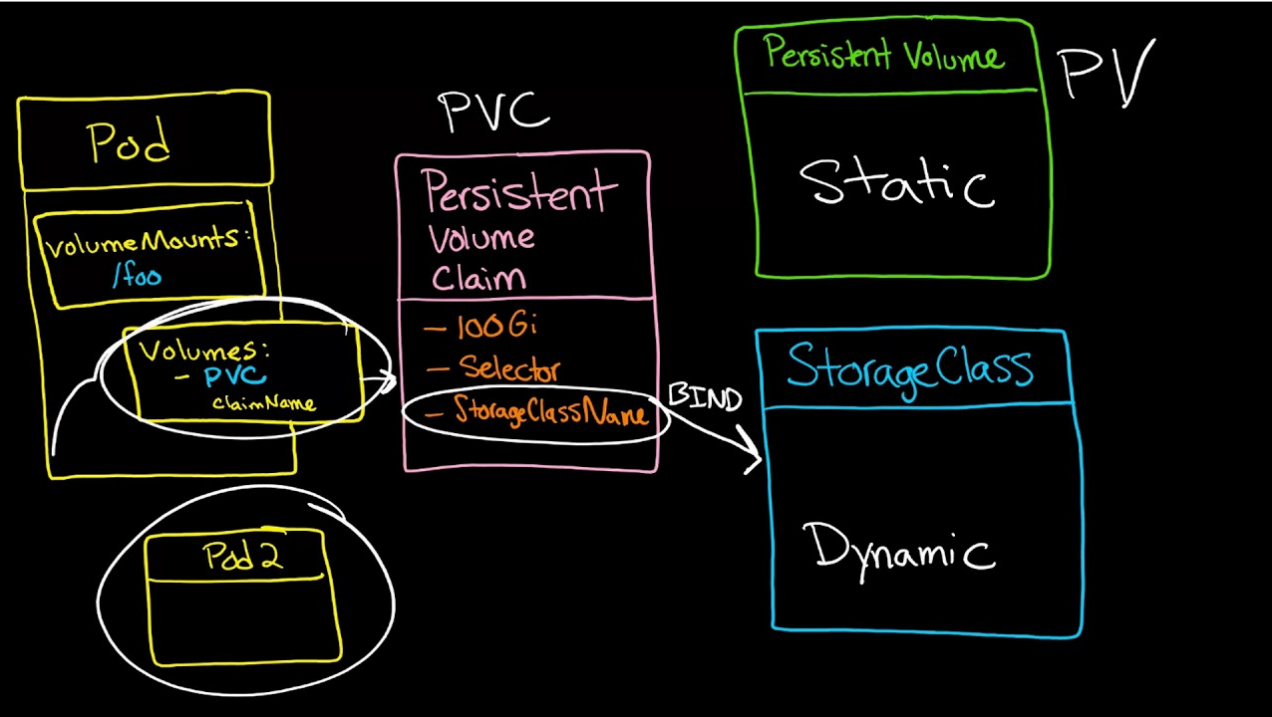

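Usage split between operators and developers

A volume is defined in the YAML file. The definition lists NFS, the server address, the directory name, and so on, and the volume is then attached to a pod. When operators and developers are separated, the operator creates volumes and keeps them in a pool. The developer requests only what they need: the volume size, the access mode (1:1 or 1:N), and whether the volume may be reused once its PVC is gone. Kubernetes then automatically binds a volume from the pool that matches the request.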
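The pool matching can be illustrated with a toy model. It assumes a PV and a PVC reduce to a size in Mi and a set of access modes. It ignores the other fields the real PV controller checks (storageClassName, selectors, volume mode). Among the PVs that fit, it picks the smallest one, which mirrors the preference Kubernetes applies when several PVs match. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PV:
    name: str
    capacity_mi: int              # size offered by the operator
    access_modes: frozenset[str]  # e.g. {"ReadWriteMany"}
    bound: bool = False


@dataclass
class PVC:
    name: str
    request_mi: int               # size requested by the developer
    access_modes: frozenset[str]


def pick_pv(pool: list[PV], claim: PVC) -> Optional[PV]:
    """Return the smallest unbound PV that satisfies the claim, or None (claim stays Pending)."""
    candidates = [
        pv for pv in pool
        if not pv.bound
        and pv.capacity_mi >= claim.request_mi
        and claim.access_modes <= pv.access_modes  # every requested mode must be offered
    ]
    return min(candidates, key=lambda pv: pv.capacity_mi, default=None)


pool = [
    PV("pv-1gi", 1024, frozenset({"ReadWriteOnce"})),
    PV("pv-100mi", 100, frozenset({"ReadWriteMany"})),
    PV("pv-500mi", 500, frozenset({"ReadWriteOnce", "ReadWriteMany"})),
]
print(pick_pv(pool, PVC("pvc", 100, frozenset({"ReadWriteMany"}))).name)  # pv-100mi
```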

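Dynamic provisioning

- A provisioner sends commands to backends such as NFS, iSCSI, GlusterFS, or EBS so that volumes are created on demand. In public clouds such a provisioner already exists, so a volume can be created and provided as soon as a developer requests it, with no extra setup.

- For example, AWS provides a dynamic provisioner for EKS and GCP provides one for GKE.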
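On the developer side, "just requesting" a volume means creating a PVC that names a StorageClass. Below is a minimal sketch that assumes a StorageClass called `slow`, like the EKS example that follows. The claim name `ebs-claim` and the 1Gi size are made up. The sketch emits JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str,
                 access_modes: tuple[str, ...] = ("ReadWriteOnce",)) -> str:
    """Build a PersistentVolumeClaim manifest as a JSON string."""
    manifest = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # The StorageClass decides which provisioner creates the volume.
            "storageClassName": storage_class,
            "accessModes": list(access_modes),
            "resources": {"requests": {"storage": size}},
        },
    }
    return json.dumps(manifest, indent=2)


if __name__ == "__main__":
    print(pvc_manifest("ebs-claim", "slow", "1Gi"))
```

The default access mode is ReadWriteOnce because an EBS volume can be attached to only one node at a time. Redirecting the output to a file (for example `claim.json`) and running `kubectl apply -f claim.json` would submit the claim.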

Example for EKS

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: slow
provisioner: kubernetes.io/aws-ebs
parameters:
  type: st1               # type of disk you want to be given
  fsType: ext4
  zones: ap-northeast-2a  # availability zone where EKS is located
```

Lab 1 - Connecting a PV and a PVC

Method 1) storageClassName

- Write the PV YAML

```yaml
# ~/k8slab/pvpvclab/pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  storageClassName: testsc
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 211.183.3.100
    path: /shared
```

Key setting: `spec.storageClassName: testsc`

- Deploy

```shell
root@manager:~/k8slab/pvpvclab# k apply -f pv.yaml
persistentvolume/nfs-pv created
```

- Check the deployment

```shell
root@manager:~/k8slab/pvpvclab# k get pv
NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
nfs-pv   100Mi      RWX            Retain           Available           testsc                  22s
```

- Write the PVC YAML

```yaml
# ~/k8slab/pvpvclab/pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```

- Deploy the PVC

```shell
root@manager:~/k8slab/pvpvclab# k apply -f pvc.yaml
persistentvolumeclaim/pvc created
```

- Check the PVC

```shell
root@manager:~/k8slab/pvpvclab# k get pv,pvc
NAME                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE
persistentvolume/nfs-pv   100Mi      RWX            Retain           Available           testsc                  2m32s

NAME                        STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/pvc   Pending
```

-> The PVC stays Pending (not bound) because its storageClassName differs from the PV's: the PVC sets none, while the PV uses `testsc`.

- Add storageClassName to the PVC

```yaml
# ~/k8slab/pvpvclab/pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: testsc
  resources:
    requests:
      storage: 100Mi
```

Key setting: `spec.storageClassName: testsc`

- Redeploy the PVC

```shell
root@manager:~/k8slab/pvpvclab# k delete -f pvc.yaml
persistentvolumeclaim "pvc" deleted
root@manager:~/k8slab/pvpvclab# k apply -f pvc.yaml
persistentvolumeclaim/pvc created
```

- Check the PVC

```shell
root@manager:~/k8slab/pvpvclab# k get pv,pvc
NAME                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM         STORAGECLASS   REASON   AGE
persistentvolume/nfs-pv   100Mi      RWX            Retain           Bound    default/pvc   testsc                  4m48s

NAME                        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/pvc   Bound    nfs-pv   100Mi      RWX            testsc         44s
```

-> The storageClassName values now match, so the PVC is Bound.

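In a manual (static) environment, the following methods can be used to filter PVs and PVCs so that each developer gets exactly the volume they requested.

1. storageClassName: works like a label. Give the PV and the PVC a class name. When the names match, capacity, access modes, and similar settings are compared and the two are bound. => Dynamic provisioning depends on it: the PVC must name a StorageClass, unless a default StorageClass is configured.

2. Use a selector, the same mechanism we used to route a LoadBalancer Service's traffic to specific pods. The PV must be labeled in advance.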
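Both filters can be sketched as a single predicate. This is a simplified model with two assumptions. First, the cluster has no default StorageClass, so a PVC without `storageClassName` can only bind to a PV without one. Second, the capacity and access-mode checks are left out. The dict layout is made up for the example. The calls replay the Method 1 scenario.

```python
from typing import Optional


def class_matches(pv_class: Optional[str], pvc_class: Optional[str]) -> bool:
    # An unset class is treated as "", so a PVC without storageClassName
    # never binds to a PV whose storageClassName is "testsc" (it stays Pending).
    return (pv_class or "") == (pvc_class or "")


def selector_matches(pv_labels: dict[str, str], match_labels: dict[str, str]) -> bool:
    # matchLabels: every key/value pair must be present on the PV.
    # An empty selector places no constraint.
    return all(pv_labels.get(key) == value for key, value in match_labels.items())


def can_bind(pv: dict, pvc: dict) -> bool:
    return (class_matches(pv.get("storageClassName"), pvc.get("storageClassName"))
            and selector_matches(pv.get("labels", {}), pvc.get("matchLabels", {})))


# Method 1: the PV has storageClassName "testsc".
pv_testsc = {"storageClassName": "testsc"}
print(can_bind(pv_testsc, {}))                              # False: PVC without a class
print(can_bind(pv_testsc, {"storageClassName": "testsc"}))  # True: same class
```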

Method 2) Using a selector and labels

- Write the PV YAML

```yaml
# ~/k8slab/pvpvclab/pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
  labels:
    dev: goyangyee # attach a label
spec:
  capacity:
    storage: 100Mi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 211.183.3.100
    path: /shared
```

Key setting: `metadata.labels.dev: goyangyee`

- Write the PVC YAML

```yaml
# ~/k8slab/pvpvclab/pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc
spec:
  selector:
    matchLabels:
      dev: goyangyee # label the PV must carry
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi
```

Key setting: `spec.selector.matchLabels.dev: goyangyee`

- Deploy the PV and the PVC

```shell
root@manager:~/k8slab/pvpvclab# k delete -f .
root@manager:~/k8slab/pvpvclab# k apply -f pv.yaml
persistentvolume/nfs-pv created
root@manager:~/k8slab/pvpvclab# k apply -f pvc.yaml
persistentvolumeclaim/pvc created
```

- Check the deployment

```shell
root@manager:~/k8slab/pvpvclab# k get pv,pvc
NAME                      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM         STORAGECLASS   REASON   AGE
persistentvolume/nfs-pv   100Mi      RWX            Retain           Bound    default/pvc                           19s

NAME                        STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/pvc   Bound    nfs-pv   100Mi      RWX                           13s
```

-> The labels match, so the PVC is Bound.

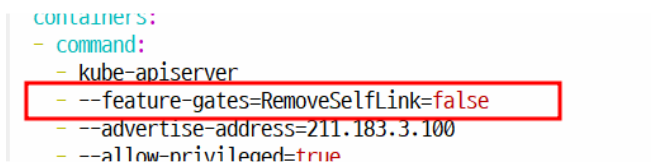

Lab 2 - Dynamic provisioning

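On AWS, GCP, and Azure, each CSP already provides a dynamic provisioner that controls its block storage. Volumes can therefore be obtained easily through the CSP's managed Kubernetes engine. If you build and operate your own cloud environment on-premises, however, you have to prepare the provisioner yourself. Because we provide volumes (directories) over NFS, we need to create an nfs-provisioner, which runs as a pod.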
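What the NFS provisioner does for each new PVC can be simulated without a cluster. The sketch assumes the naming scheme visible in step 4 of this lab. The PV is called `pvc-<suffix>`, and the suffix is assumed to be the claim's UID. The provisioner creates a sub-directory named `<namespace>-<pvc name>-<pv name>` under the export (`/shared`). The function is hypothetical: the real provisioner also creates the directory and submits the PV to the API server.

```python
import uuid
from typing import Optional


def provision_nfs_volume(namespace: str, pvc_name: str, size: str,
                         access_modes: list[str], server: str, export_path: str,
                         pvc_uid: Optional[str] = None) -> tuple[str, dict]:
    """Return (directory on the NFS export, PV manifest) for one PVC."""
    pv_name = f"pvc-{pvc_uid or uuid.uuid4()}"  # PV named after the claim's UID
    directory = f"{export_path.rstrip('/')}/{namespace}-{pvc_name}-{pv_name}"
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": list(access_modes),          # copied from the claim
            "persistentVolumeReclaimPolicy": "Delete",  # StorageClass default
            "nfs": {"server": server, "path": directory},
        },
    }
    return directory, pv


directory, pv = provision_nfs_volume(
    "default", "nfs-pvc-test", "50Mi", ["ReadWriteMany"],
    "211.183.3.100", "/shared", pvc_uid="827ca925-7560-4699-ac0a-a4e43cc56dc8")
print(directory)  # /shared/default-nfs-pvc-test-pvc-827ca925-7560-4699-ac0a-a4e43cc56dc8
```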

- Check the service accounts

```shell
root@manager:~/k8slab/pvpvclab# k get sa
NAME      SECRETS   AGE
default   1         22h
```

- Prepare for dynamic provisioning

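Add a feature gate to the kube-apiserver static Pod manifest:

```shell
root@manager:~/k8slab/pvpvclab# vi /etc/kubernetes/manifests/kube-apiserver.yaml
```

```yaml
spec:
  containers:
  - command:
    - kube-apiserver
    - --feature-gates=RemoveSelfLink=false   # add this flag to the existing list
```

The legacy `nfs-client-provisioner` image used below relies on the `selfLink` field, which the API server no longer populates by default from v1.20. This flag turns it back on. This cluster runs v1.21; from v1.24 the gate can no longer be disabled. The kubelet restarts the API server automatically after the static Pod manifest is saved.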

step 1) Create the ServiceAccount and roles

```yaml
# ~/k8slab/pvpvclab/dynamic/sa.yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-pod-provisioner-sa
---
kind: ClusterRole # Role of kubernetes
apiVersion: rbac.authorization.k8s.io/v1 # auth API
metadata:
  name: nfs-provisioner-clusterRole
rules:
  - apiGroups: [""] # rules on persistentvolumes
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-rolebinding
subjects:
  - kind: ServiceAccount
    name: nfs-pod-provisioner-sa # defined on top of file
    namespace: default
roleRef: # binding cluster role to service account
  kind: ClusterRole
  name: nfs-provisioner-clusterRole # name defined in clusterRole
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-pod-provisioner-otherRoles
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-pod-provisioner-otherRoles
subjects:
  - kind: ServiceAccount
    name: nfs-pod-provisioner-sa # same as top of the file
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: nfs-pod-provisioner-otherRoles
  apiGroup: rbac.authorization.k8s.io
```

- Deploy the ServiceAccount and roles

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k apply -f sa.yaml
serviceaccount/nfs-pod-provisioner-sa created
clusterrole.rbac.authorization.k8s.io/nfs-provisioner-clusterRole created
clusterrolebinding.rbac.authorization.k8s.io/nfs-provisioner-rolebinding created
role.rbac.authorization.k8s.io/nfs-pod-provisioner-otherRoles created
rolebinding.rbac.authorization.k8s.io/nfs-pod-provisioner-otherRoles created
```

- Check the ServiceAccount

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k get sa
NAME                     SECRETS   AGE
default                  1         24h
nfs-pod-provisioner-sa   1         2m21s
```

step 2) Create the provisioner pod

- Provisioner pod: a tool that lets the NFS server be used for dynamic provisioning

```yaml
# ~/k8slab/pvpvclab/dynamic/provisioner.yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-pod-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-pod-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-pod-provisioner
    spec:
      serviceAccountName: nfs-pod-provisioner-sa # ServiceAccount created in sa.yaml
      containers:
        - name: nfs-pod-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-provisioner-v
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME # do not change
              value: nfs-test # SAME AS THE PROVISIONER VALUE IN THE STORAGECLASS
            - name: NFS_SERVER # do not change
              value: 211.183.3.100 # IP of the NFS server
            - name: NFS_PATH # do not change
              value: /shared # path to the NFS export
      volumes:
        - name: nfs-provisioner-v # same as the volumeMounts name
          nfs:
            server: 211.183.3.100
            path: /shared
```

Key setting: `containers[].volumeMounts[].name` and `volumes[].name` are both `nfs-provisioner-v`.

- Deploy the provisioner

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k apply -f provisioner.yaml
deployment.apps/nfs-pod-provisioner created
```

- Check the deployment

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k get deploy
NAME                  READY   UP-TO-DATE   AVAILABLE   AGE
nfs-pod-provisioner   1/1     1            1           22s
```

step 3) Create the StorageClass

```yaml
# ~/k8slab/pvpvclab/dynamic/storageClass.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storageclass # IMPORTANT: the PVC must reference this name
provisioner: nfs-test # any name, but it must match PROVISIONER_NAME in provisioner.yaml
parameters:
  archiveOnDelete: "false" # "false": delete the directory with the PVC instead of archiving it
```

- Deploy the StorageClass

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k apply -f storageClass.yaml
storageclass.storage.k8s.io/nfs-storageclass created
```

- Check the deployment

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k get StorageClass
NAME               PROVISIONER   RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
nfs-storageclass   nfs-test      Delete          Immediate           false                  5s
```

step 4) Create the PVC

```yaml
# ~/k8slab/pvpvclab/dynamic/pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-test
spec:
  storageClassName: nfs-storageclass # SAME NAME AS THE STORAGECLASS
  accessModes:
    - ReadWriteMany # must be the same as PersistentVolume
  resources:
    requests:
      storage: 50Mi
```

- Deploy the PVC

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k apply -f pvc.yaml
persistentvolumeclaim/nfs-pvc-test created
```

- Check the PVC

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k get pv,pvc
NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS       REASON   AGE
persistentvolume/nfs-pv                                     100Mi      RWX            Retain           Bound    default/pvc                                        112m
persistentvolume/pvc-827ca925-7560-4699-ac0a-a4e43cc56dc8   50Mi       RWX            Delete           Bound    default/nfs-pvc-test   nfs-storageclass            39s

NAME                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
persistentvolumeclaim/nfs-pvc-test   Bound    pvc-827ca925-7560-4699-ac0a-a4e43cc56dc8   50Mi       RWX            nfs-storageclass   39s
persistentvolumeclaim/pvc            Bound    nfs-pv                                     100Mi      RWX                               112m
```

-> nfs-pvc-test is Bound; the provisioner created its PV automatically.

- Check the directory created under /shared on the NFS server

```shell
root@manager:~/k8slab/pvpvclab/dynamic# ls /shared/
default-nfs-pvc-test-pvc-827ca925-7560-4699-ac0a-a4e43cc56dc8
```

step 5) Deploy a pod that uses the PV

```yaml
# ~/k8slab/pvpvclab/dynamic/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nfs-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      volumes:
        - name: nfs-test # volume name, referenced by volumeMounts below
          persistentVolumeClaim:
            claimName: nfs-pvc-test # name of the PVC created in step 4
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: nfs-test # must match the volume name above
              mountPath: /mydata2 # mount point inside the container
```

Key setting: `volumes[].name` and `volumeMounts[].name` are both `nfs-test`.

- Deploy the pod

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k apply -f deployment.yaml
deployment.apps/nfs-nginx created
```

- Check the pod

```shell
root@manager:~/k8slab/pvpvclab/dynamic# k get deploy | grep nfs-nginx
nfs-nginx   1/1     1            1           42s
```

step 6) Check whether the pod can use the attached volume

```shell
root@manager:/shared/default-nfs-pvc-test-pvc-827ca925-7560-4699-ac0a-a4e43cc56dc8# touch test.txt
```

-> Create a file on the NFS server.

```shell
root@manager:~# k exec nfs-nginx-d9bbf65b5-jnktw -- ls /mydata2
test.txt
```

-> The file created on the NFS server is visible inside the pod.

Lab 3 - ServiceAccount

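- UserAccount vs. ServiceAccount: the objects inside k8s are run and controlled by the k8s system itself, so the identities used for that are ServiceAccounts. The familiar notion of a user account also exists, but we mainly use ServiceAccounts. Kubernetes has no UserAccount object, so using user accounts requires connecting an external authentication server.

- Check the ServiceAccounts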

```shell
root@manager:~# k get sa
NAME      SECRETS   AGE
default   1         26h
```

- Check the secrets

```shell
root@manager:~# k get secret
NAME                  TYPE                                  DATA   AGE
default-token-5k4gt   kubernetes.io/service-account-token   3      26h
```

- Inspect the token in detail

```shell
root@manager:~# k describe secret default-token-5k4gt
Name:         default-token-5k4gt
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: default
              kubernetes.io/service-account.uid: 24009692-63b2-4726-9f7b-8793b2e304cd

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1066 bytes
namespace:  7 bytes
token:      [token]
```

- Check the kubeconfig

```shell
root@manager:~# cat ~/.kube/config
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: [CA certificate]
    server: https://211.183.3.100:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubernetes-admin
  name: kubernetes-admin@kubernetes
current-context: kubernetes-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kubernetes-admin
  user:
    client-certificate-data: [client certificate]
    client-key-data: [private key]
```

Key setting: `server: https://211.183.3.100:6443` (the API server address)

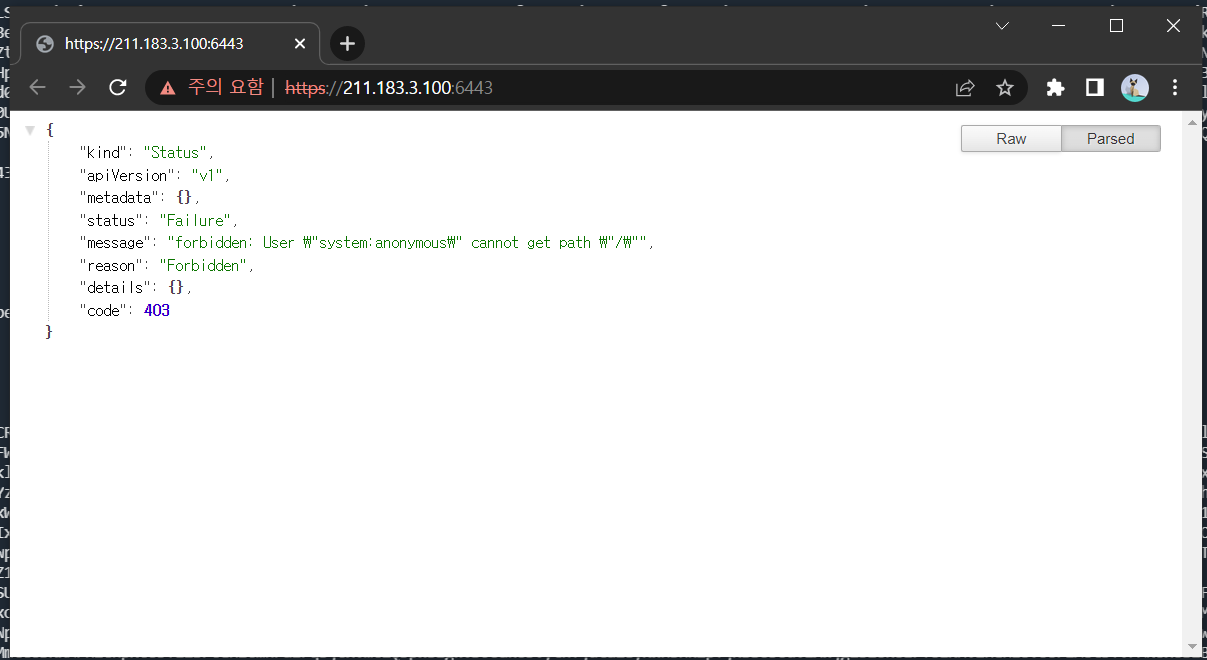

- Open that address

When you access the API this way, you cannot see the result, because the server cannot tell which SA you are (default? user1?).

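Encrypted communication procedure

1. PC: the user enters a password

2. PC: sends its certificate

3. Server: requests verification of the certificate

4. Certificate authority: verifies it with the issuer's digital signature

5. Server: extracts A's public key

6. Server: generates a symmetric key

7. Server: creates a file containing the symmetric key, encrypted with A's public key

8. Server: sends the file

9. PC: obtains the symmetric key by decrypting the file with its private key

10. PC, server: encrypt files with the symmetric key

11. PC, server: files can now be exchanged securely

Image source: https://brunch.co.kr/@ka3211/13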

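```shell
export KUBECONFIG=/etc/kubernetes/admin.conf
```

Setting this variable makes kubectl use admin.conf, which gives the current account (root) access to the API server as kubernetes-admin.

In a real environment, create separate SAs with appropriate permissions and operate with those. If you manage customers, you should be able to issue each customer an SA that can run `get` and similar commands only within that customer's project (namespace).

Every namespace has a ServiceAccount named `default` by default.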

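Create a ServiceAccount

```shell
root@manager:~# k create sa user1
serviceaccount/user1 created
```

- Check the secrets

```shell
root@manager:~# k get secret
NAME                  TYPE                                  DATA   AGE
default-token-5k4gt   kubernetes.io/service-account-token   3      26h
user1-token-xnlgc     kubernetes.io/service-account-token   3      39s
```

-> A token has been issued for user1. This cluster runs v1.21; from v1.24, Kubernetes no longer creates these token Secrets automatically.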

- Use the API with user1's permissions

```shell
root@manager:~# k get svc --as system:serviceaccount:default:user1
Error from server (Forbidden): services is forbidden: User "system:serviceaccount:default:user1" cannot list resource "services" in API group "" in the namespace "default"
```

-> user1 has no permissions yet.
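The `--as` value follows the fixed pattern the API server uses for ServiceAccount usernames: `system:serviceaccount:<namespace>:<name>`. Below is a small pair of helpers that build and parse it. The helper names are hypothetical.

```python
def sa_username(namespace: str, name: str) -> str:
    """Username the API server assigns to a ServiceAccount (the value --as expects)."""
    return f"system:serviceaccount:{namespace}:{name}"


def parse_sa_username(username: str) -> tuple[str, str]:
    """Split a ServiceAccount username back into (namespace, name)."""
    prefix = "system:serviceaccount:"
    if not username.startswith(prefix):
        raise ValueError(f"not a ServiceAccount username: {username!r}")
    namespace, sep, name = username[len(prefix):].partition(":")
    if not (sep and namespace and name):
        raise ValueError(f"malformed ServiceAccount username: {username!r}")
    return namespace, name


print(sa_username("default", "user1"))  # system:serviceaccount:default:user1
```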

- Access the API

```shell
root@manager:~# curl https://localhost:6443
curl: (60) SSL certificate problem: unable to get local issuer certificate
More details here: https://curl.haxx.se/docs/sslcerts.html

curl failed to verify the legitimacy of the server and therefore could not
establish a secure connection to it. To learn more about this situation and
how to fix it, please visit the web page mentioned above.
```

-> The connection fails because curl cannot verify the API server's certificate: it does not have the cluster's CA certificate.

- Try connecting without verifying the certificate (`-k`)

```shell
root@manager:~# curl https://localhost:6443 -k
{
  "kind": "Status",
  "apiVersion": "v1",
  "metadata": {

  },
  "status": "Failure",
  "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",
  "reason": "Forbidden",
  "details": {

  },
  "code": 403
}
```

-> When the request carries no token, the result cannot be seen. The server cannot tell which SA (default? user1?) is calling, so it treats the request as `system:anonymous`.

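Lab 4 - Configuring RBAC (Role-Based Access Control)

Role

- Runs commands on objects that belong to a namespace, such as pods, deployments, and services. It applies only within that namespace.

- Requires a RoleBinding.

ClusterRole

- Used for objects that do not belong to any namespace, such as namespaces, nodes, and PVs. Also used when you want to grant the same permissions repeatedly across several namespaces in the cluster.

- Bound with a ClusterRoleBinding for cluster-wide access. A RoleBinding may also reference a ClusterRole to grant it within one namespace.

Default (current user): kubernetes-admin (can manage everything)

user1: no role mapped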

- Check the role API version

```shell
root@manager:~/k8slab/0908# k api-resources | grep -e ^roles
roles   rbac.authorization.k8s.io/v1   true   Role
```

-> apiVersion: rbac.authorization.k8s.io/v1

step 1) Deploy the role

- Distinguishing API groups

APIVERSION: v1 => apiGroups: "" (core group)

APIVERSION: apps/v1 => apiGroups: "apps"

- apiVersion: v1

```shell
root@manager:~/k8slab/0908# k api-resources | grep services
NAME       SHORTNAMES   APIVERSION   NAMESPACED   KIND
services   svc          v1           true         Service
```

-> APIVERSION: v1 => apiGroups: ""

- apiVersion: apps/v1

```shell
root@manager:~# k api-resources | grep deployments
NAME          SHORTNAMES   APIVERSION   NAMESPACED   KIND
deployments   deploy       apps/v1      true         Deployment
```

-> APIVERSION: apps/v1 => apiGroups: "apps"
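The mapping rule is mechanical. The part of `apiVersion` before the last `/` is the API group, and a bare version means the core group `""`. Below is a minimal helper that applies this rule; the helper name is hypothetical.

```python
def api_group_and_version(api_version: str) -> tuple[str, str]:
    """Split an apiVersion into (apiGroup, version); "v1" belongs to the core group ""."""
    # rpartition returns ("", "", "v1") when there is no "/", i.e. the core group.
    group, _, version = api_version.rpartition("/")
    return group, version


for av in ("v1", "apps/v1", "rbac.authorization.k8s.io/v1"):
    group, version = api_group_and_version(av)
    print(av, "-> apiGroups:", repr(group))
```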

- If you plan to run commands on services and only want to view them

```shell
root@manager:~/k8slab/0908# k api-resources | grep services
NAME       SHORTNAMES   APIVERSION   NAMESPACED   KIND
services   svc          v1           true         Service
```

Add the following rule:

```yaml
rules:
  - apiGroups: [""]          # APIVERSION: v1
    resources: ["services"]  # NAME
    verbs: ["get", "list"]   # list: view all objects, get: view a specific object
```

- Create the role

```yaml
# ~/k8slab/0908/user1-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: user1role
rules:
  - apiGroups: [""] # APIVERSION: v1
    resources: ["services"] # NAME
    verbs: ["get", "list"] # list: view all objects, get: view a specific object
```

Key setting: `metadata.name: user1role`

-> This grants permission to view services.
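How the API server evaluates such a role can be modeled in a few lines. This is a simplified sketch, not the real authorizer. It covers only `apiGroups`, `resources`, and `verbs` (including the `*` wildcard), and it ignores `resourceNames`, subresources, and non-resource URLs. RBAC is purely additive, so a request is allowed as soon as any rule matches. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    api_groups: tuple[str, ...]
    resources: tuple[str, ...]
    verbs: tuple[str, ...]


def _matches(allowed: tuple[str, ...], value: str) -> bool:
    return "*" in allowed or value in allowed


def is_allowed(rules: list[PolicyRule], api_group: str, resource: str, verb: str) -> bool:
    # There are no deny rules in RBAC: one matching rule is enough.
    return any(
        _matches(rule.api_groups, api_group)
        and _matches(rule.resources, resource)
        and _matches(rule.verbs, verb)
        for rule in rules
    )


# user1role from user1-role.yaml
user1role = [PolicyRule(api_groups=("",), resources=("services",), verbs=("get", "list"))]

print(is_allowed(user1role, "", "services", "list"))  # True: k get svc works
print(is_allowed(user1role, "", "pods", "list"))      # False: k get pod is Forbidden
```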

- Deploy the role

```shell
root@manager:~/k8slab/0908# k apply -f user1-role.yaml
role.rbac.authorization.k8s.io/user1role created
```

- Check the role

```shell
root@manager:~/k8slab/0908# k get role
NAME        CREATED AT
user1role   2022-09-08T06:34:29Z
```

step 2) Deploy the RoleBinding

- Create the RoleBinding

```yaml
# ~/k8slab/0908/user1-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  namespace: default
  name: user1-rolebinding
subjects:
  - kind: ServiceAccount
    name: user1
    namespace: default
roleRef:
  kind: Role
  name: user1role
  apiGroup: rbac.authorization.k8s.io
```

Key setting: `roleRef.name: user1role`

- Deploy the RoleBinding

```shell
root@manager:~/k8slab/0908# k apply -f user1-rolebinding.yaml
rolebinding.rbac.authorization.k8s.io/user1-rolebinding created
```

step 3) Use the API

- Check services

```shell
root@manager:~/k8slab/0908# k get svc --as system:serviceaccount:default:user1
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   27h
```

-> Services can now be viewed.

- Check pods

```shell
root@manager:~/k8slab/0908# k get pod --as system:serviceaccount:default:user1
Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:default:user1" cannot list resource "pods" in API group "" in the namespace "default"
```

-> Only permission to view services was granted, so pods cannot be viewed.

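Quiz. Which of the following works?

1. k get deployment --as list-only -> works

2. k get deployment --as get-only -> error

3. k get deployment --as watch-only -> error

`kubectl get deployment` without an object name sends a list request, so only the user allowed the `list` verb succeeds.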
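The quiz hinges on which verb `kubectl get` sends. Below is a simplified sketch, assuming each quiz user is allowed only the verb in its name. Without an object name, `kubectl get` lists the collection (`list`); with a name, it fetches one object (`get`). Watch mode (`-w`) is left out because it combines verbs. The function name is hypothetical.

```python
from typing import Optional


def verb_for_kubectl_get(object_name: Optional[str] = None) -> str:
    """Verb needed by `kubectl get <resource> [name]` (without -w)."""
    return "get" if object_name else "list"


# Hypothetical users from the quiz, each allowed exactly one verb on deployments.
quiz_users = {"list-only": {"list"}, "get-only": {"get"}, "watch-only": {"watch"}}

needed = verb_for_kubectl_get()  # `k get deployment` names no object
for user, verbs in quiz_users.items():
    print(user, "OK" if needed in verbs else "Forbidden")
```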

Quiz.

Starting from the current state, make user1 able to run get on deployments.

- Check the deployment API

```shell
root@manager:~/k8slab/0908# k api-resources | grep deployment
NAME          SHORTNAMES   APIVERSION   NAMESPACED   KIND
deployments   deploy       apps/v1      true         Deployment
```

-> APIVERSION: apps/v1 => apiGroups: "apps"

The following rule needs to be added:

```yaml
rules:
  - apiGroups: ["apps"]        # APIVERSION: apps/v1
    resources: ["deployments"] # NAME
    verbs: ["get"]
```

- Add to user1's role (get deployments)

```yaml
# ~/k8slab/0908/user1-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: user1role
rules: # API groups, resources, and verbs that may be used
  - apiGroups: ["", "apps"]
    resources: ["services", "deployments"]
    verbs: ["get", "list"] # list: view all objects, get: view a specific object
```

- Redeploy the role

```shell
root@manager:~/k8slab/0908# k apply -f user1-role.yaml
role.rbac.authorization.k8s.io/user1role configured
```

- Check get deployment

```shell
root@manager:~/k8slab/0908# k get deploy --as system:serviceaccount:default:user1
No resources found in default namespace.
```

-> user1 can now run `get deployment`: the request is no longer Forbidden, and there are simply no deployments to show. Because `k get deploy` sends a list request, the `list` verb in this role is what makes it work.

Lab 5 - Accessing the API with an SA token (RBAC)

Useful reference post: https://bryan.wiki/291

step 1) Set the APIs to use in user1's role

```yaml
# ~/k8slab/0908/user1-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: default
  name: user1role
rules: # API groups, resources, and verbs that may be used
  - apiGroups: ["", "apps"]
    resources: ["pods", "services", "deployments"]
    verbs: ["get", "list", "watch"] # list: view all, get: view one, watch: follow changes
```

-> We will use these APIs: `pods`, `services`, and `deployments` in the `""` and `apps` groups, with the verbs `get`, `list`, and `watch`.

- Redeploy user1's role

```shell
root@manager:~/k8slab/0908# k apply -f .
role.rbac.authorization.k8s.io/user1role unchanged
rolebinding.rbac.authorization.k8s.io/user1-rolebinding unchanged
```

step 2) Check the token

- Check the secrets

```shell
root@manager:~/k8slab/0908# k get secret
NAME                  TYPE                                  DATA   AGE
default-token-5k4gt   kubernetes.io/service-account-token   3      28h
user1-token-xnlgc     kubernetes.io/service-account-token   3      107m
```

- Check user1's token

```shell
root@manager:~/k8slab/0908# k describe secret user1-token-xnlgc
Name:         user1-token-xnlgc
Namespace:    default
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: user1
              kubernetes.io/service-account.uid: 3814bb7c-bb1a-46b7-8372-ba480e0bd2b5

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1066 bytes
namespace:  7 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6ImQyQjgxZW9nUlhJRWxILTdEbEZqY3FFRVBhV1oxUzRZc19reXExYXg3THcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InVzZXIxLXRva2VuLXhubGdjIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InVzZXIxIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzgxNGJiN2MtYmIxYS00NmI3LTgzNzItYmE0ODBlMGJkMmI1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6dXNlcjEifQ.Tkcja7I3lJa1UXO0srs61EYSFKyLSSQ26lfJCvBJrAPIAK270ulVfVvnwp6QwVZOuAtn7RIfTsFjQcp4rpWdUMzWAGN9kv122E3hO8MnR_q5MVuRQbKR6GUNWhHJJ5HCUGS6ajnCVLlmi505QA7fFwbIPSEchZ8TK5rd7jSAtezo7VDFJu3NrKllM4O7geMNy2gFrirMKuoSq2k8BRyCdmw0uEX0--_TZKFs826Luh83AvM3GnhHiyVmAalpr0xZ2dqcTH7tNl1Dme_egD9ubI8Dcw6DI_YXyx0hB6qz5Lk2ZA_o03MS3aQ4e-jpqHJZw0UXK1NSQndorx8AwKfflw
```

- Store the token Secret's name in a variable

```shell
root@manager:~/k8slab/0908# export SECNAME=user1-token-xnlgc
```

- Check the variable

```shell
root@manager:~/k8slab/0908# echo $SECNAME
user1-token-xnlgc
```

- Check user1's token Secret

```shell
root@manager:~/k8slab/0908# k get secret $SECNAME
NAME                TYPE                                  DATA   AGE
user1-token-xnlgc   kubernetes.io/service-account-token   3      111m
root@manager:~/k8slab/0908# k get secret $SECNAME -o jsonpath='{.data.token}'
ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNkltUXlRamd4Wlc5blVsaEpSV3hJTFRkRWJFWnFZM0ZGUlZCaFYxb3hVelJaYzE5cmVYRXhZWGczVEhjaWZRLmV5SnBjM01pT2lKcmRXSmxjbTVsZEdWekwzTmxjblpwWTJWaFkyTnZkVzUwSWl3aWEzVmlaWEp1WlhSbGN5NXBieTl6WlhKMmFXTmxZV05qYjNWdWRDOXVZVzFsYzNCaFkyVWlPaUprWldaaGRXeDBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5elpXTnlaWFF1Ym1GdFpTSTZJblZ6WlhJeExYUnZhMlZ1TFhodWJHZGpJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5elpYSjJhV05sTFdGalkyOTFiblF1Ym1GdFpTSTZJblZ6WlhJeElpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WlhKMmFXTmxMV0ZqWTI5MWJuUXVkV2xrSWpvaU16Z3hOR0ppTjJNdFltSXhZUzAwTm1JM0xUZ3pOekl0WW1FME9EQmxNR0prTW1JMUlpd2ljM1ZpSWpvaWMzbHpkR1Z0T25ObGNuWnBZMlZoWTJOdmRXNTBPbVJsWm1GMWJIUTZkWE5sY2pFaWZRLlRrY2phN0kzbEphMVVYTzBzcnM2MUVZU0ZLeUxTU1EyNmxmSkN2QkpyQVBJQUsyNzB1bFZmVnZud3A2UXdWWk91QXRuN1JJZlRzRmpRY3A0cnBXZFVNeldBR045a3YxMjJFM2hPOE1uUl9xNU1WdVJRYktSNkdVTldoSEpKNUhDVUdTNmFqbkNWTGxtaTUwNVFBN2ZGd2JJUFNFY2haOFRLNXJkN2pTQXRlem83VkRGSnUzTnJLbGxNNE83Z2VNTnkyZ0ZyaXJNS3VvU3EyazhCUnlDZG13MHVFWDAtLV9UWktGczgyNkx1aDgzQXZNM0duaEhpeVZtQWFscHIweFoyZHFjVEg3dE5sMURtZV9lZ0Q5dWJJOERjdzZESV9ZWHl4MGhCNnF6NUxrMlpBX28wM01TM2FRNGUtanBxSEpadzBVWEsxTlNRbmRvcng4QXdLZmZsdw==
```

-> In the Secret's data, the token is stored base64-encoded.

- Decode user1's token (base64)

```shell
root@manager:~/k8slab/0908# k get secret $SECNAME -o jsonpath='{.data.token}' | base64 -d
eyJhbGciOiJSUzI1NiIsImtpZCI6ImQyQjgxZW9nUlhJRWxILTdEbEZqY3FFRVBhV1oxUzRZc19reXExYXg3THcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6InVzZXIxLXRva2VuLXhubGdjIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6InVzZXIxIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzgxNGJiN2MtYmIxYS00NmI3LTgzNzItYmE0ODBlMGJkMmI1Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6dXNlcjEifQ.Tkcja7I3lJa1UXO0srs61EYSFKyLSSQ26lfJCvBJrAPIAK270ulVfVvnwp6QwVZOuAtn7RIfTsFjQcp4rpWdUMzWAGN9kv122E3hO8MnR_q5MVuRQbKR6GUNWhHJJ5HCUGS6ajnCVLlmi505QA7fFwbIPSEchZ8TK5rd7jSAtezo7VDFJu3NrKllM4O7geMNy2gFrirMKuoSq2k8BRyCdmw0uEX0--_TZKFs826Luh83AvM3GnhHiyVmAalpr0xZ2dqcTH7tNl1Dme_egD9ubI8Dcw6DI_YXyx0hB6qz5Lk2ZA_o03MS3aQ4e-jpqHJZw0UXK1NSQndorx8AwKfflw
```

- Store the decoded token in a variable

```shell
root@manager:~/k8slab/0908# export DTKN=$(k get secret $SECNAME -o jsonpath='{.data.token}' | base64 -d)
```

step 3) Access the API with the token (skipping certificate verification with `-k`)
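In this step, the API server works out who is calling from the token itself. A ServiceAccount token is a JWT whose payload carries a `sub` claim; for user1's token above, it is `system:serviceaccount:default:user1`. Below is a minimal standard-library sketch for inspecting such a token. It decodes but does not verify the signature, so it is only for reading claims. The function names are hypothetical, and reading the token from the `DTKN` environment variable assumes the export above was done in the same shell.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    # JWT segments are unpadded base64url; restore the padding before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def jwt_claims(token: str) -> dict:
    """Decode (but do NOT verify) the payload of a JWT such as a ServiceAccount token."""
    _header, payload, _signature = token.split(".")
    return json.loads(b64url_decode(payload))


def token_from_secret_data(data_token: str) -> str:
    # Secret .data values are standard base64; this is the `base64 -d` step above.
    return base64.b64decode(data_token).decode("ascii")


if __name__ == "__main__":
    import os
    token = os.environ.get("DTKN")  # exported in step 2
    if token:
        print(jwt_claims(token)["sub"])
```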

- Check the APIGroupList

```shell
root@manager:~/k8slab/0908# curl https://localhost:6443/apis --header "Authorization: Bearer $DTKN" -k
{
  "kind": "APIGroupList",
  "apiVersion": "v1",
  "groups": [
    {
      "name": "apiregistration.k8s.io",
      "versions": [
        {
          "groupVersion": "apiregistration.k8s.io/v1",
          "version": "v1"
...
```

Endpoint: `/apis`

- Check the DeploymentList

```shell
root@manager:~/k8slab/0908# curl https://211.183.3.100:6443/apis/apps/v1/namespaces/default/deployments --header "Authorization: Bearer $DTKN" -k
{
  "kind": "DeploymentList",
  "apiVersion": "apps/v1",
  "metadata": {
    "selfLink": "/apis/apps/v1/namespaces/default/deployments",
    "resourceVersion": "116827"
  },
  "items": []
}
```

Endpoint: `/apis/apps/v1/namespaces/{namespace}/deployments`

- Check the ServiceList

```shell
root@manager:~/k8slab/0908# curl https://localhost:6443/api/v1/namespaces/default/services -k --header "Authorization: Bearer $DTKN"
{
  "kind": "ServiceList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/namespaces/default/services",
    "resourceVersion": "119773"
  },
  "items": [
    {
      "metadata": {
...
```

Endpoint: `/api/v1/namespaces/{namespace}/services`
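These endpoints follow one pattern, and the PodList request below uses the same core-group form. Core-group resources live under `/api/<version>`, named groups live under `/apis/<group>/<version>`, and namespaced resources add `/namespaces/<namespace>`. Below is a sketch that builds the same requests curl sent, with the bearer token in the `Authorization` header. The helper names are hypothetical, and the code only constructs the request without sending it.

```python
import urllib.request
from typing import Optional


def resource_path(api_version: str, resource: str, namespace: Optional[str] = None) -> str:
    group, _, version = api_version.rpartition("/")
    prefix = f"/apis/{group}/{version}" if group else f"/api/{version}"
    scope = f"/namespaces/{namespace}" if namespace else ""
    return f"{prefix}{scope}/{resource}"


def build_request(server: str, token: str, api_version: str, resource: str,
                  namespace: Optional[str] = None) -> urllib.request.Request:
    url = server.rstrip("/") + resource_path(api_version, resource, namespace)
    # Equivalent to curl --header "Authorization: Bearer $DTKN"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


req = build_request("https://localhost:6443", "<token>", "apps/v1", "deployments", "default")
print(req.full_url)  # https://localhost:6443/apis/apps/v1/namespaces/default/deployments
```

To actually send the request, pass `urllib.request.urlopen(req, context=...)` an `ssl` context that trusts the cluster CA, for example `ssl.create_default_context(cafile="ca.crt")` with the `ca.crt` from the token Secret. This keeps certificate verification on, unlike `-k`.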

- Check the PodList

```shell
root@manager:~/k8slab/0908# curl https://localhost:6443/api/v1/namespaces/default/pods -k --header "Authorization: Bearer $DTKN"
{
  "kind": "PodList",
  "apiVersion": "v1",
  "metadata": {
    "selfLink": "/api/v1/namespaces/default/pods",
    "resourceVersion": "126943"
  },
  "items": [
    {
      "metadata": {
...
```

Endpoint: `/api/v1/namespaces/{namespace}/pods`

Lab 6 - nodeSelector

Reference: https://kubernetes.io/ko/docs/concepts/scheduling-eviction/assign-pod-node/

- View the node labels

```shell
root@manager:~/k8slab/0908# k get node --show-labels
NAME      STATUS   ROLES                  AGE   VERSION   LABELS
manager   Ready    control-plane,master   29h   v1.21.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=manager,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
worker1   Ready    <none>                 29h   v1.21.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker1,kubernetes.io/os=linux
worker2   Ready    <none>                 29h   v1.21.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker2,kubernetes.io/os=linux
worker3   Ready    <none>                 29h   v1.21.0   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=worker3,kubernetes.io/os=linux
```

- Attach a label to each node

```shell
root@manager:~/k8slab/0908# k label nodes worker1 \
> zone=seoul
node/worker1 labeled
root@manager:~/k8slab/0908# k label nodes worker2 \
> zone=busan
node/worker2 labeled
root@manager:~/k8slab/0908# k label nodes worker3 \
> zone=jeju
node/worker3 labeled
```

- Create the pod

```yaml
# ~/k8slab/0908/labelpod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: testpod
spec:
  nodeSelector:
    zone: jeju # schedule only onto a node labeled zone=jeju
  containers:
    - name: testctn
      image: nginx
```

-> The pod is created on a node that has the jeju label.

Key setting: `spec.nodeSelector.zone: jeju`

- Deploy the pod

```shell
root@manager:~/k8slab/0908# k apply -f labelpod.yaml
pod/testpod created
```

- Check the pod

```shell
root@manager:~/k8slab/0908# k get pod -o wide
NAME      READY   STATUS    RESTARTS   AGE   IP               NODE      NOMINATED NODE   READINESS GATES
testpod   1/1     Running   0          13s   192.168.182.46   worker3   <none>           <none>
```

-> The pod was created on worker3, the node with the jeju label.
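The nodeSelector check that led to worker3 can be modeled as a label filter. Below is a simplified sketch using the labels attached above; the function name is hypothetical. The real scheduler also weighs taints, resource requests, and other constraints, and a pod whose nodeSelector matches no node stays Pending.

```python
def eligible_nodes(nodes: dict[str, dict[str, str]], node_selector: dict[str, str]) -> list[str]:
    """Names of nodes whose labels contain every key/value pair of the nodeSelector."""
    return [
        name for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in node_selector.items())
    ]


nodes = {
    "worker1": {"kubernetes.io/hostname": "worker1", "zone": "seoul"},
    "worker2": {"kubernetes.io/hostname": "worker2", "zone": "busan"},
    "worker3": {"kubernetes.io/hostname": "worker3", "zone": "jeju"},
}
print(eligible_nodes(nodes, {"zone": "jeju"}))  # ['worker3']
```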