Lab 1 - NFS and LoadBalancer

step 0) NFS configuration

- Configure exports

root@manager:~/k8slab/lab3_volume# vi /etc/exports

    /shared 211.183.3.0/24(rw,no_root_squash,sync)

- Check that nfs-server is running

root@manager:~/k8slab/lab3_volume# systemctl status nfs-server | grep Active

Active: active (exited) since Tue 2022-09-06 12:10:58 KST; 22h ago

step 1) Write the yaml - an nginx Deployment with an NFS volume and a LoadBalancer Service

root@manager:~/k8slab/lab3_volume# vi nfs-ip.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-ip
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nfs-ip        # label the replicas are selected by
      template:              # pod definition
        metadata:
          name: my-nfs-ip
          labels:
            app: nfs-ip      # 3 pods are created with this label
        spec:
          containers:
          - name: webserver
            image: nginx
            ports:
            - containerPort: 80
            volumeMounts:
            - name: nfs-vol
              mountPath: /usr/share/nginx/html/lab3   # path inside the pod
          volumes:
          - name: nfs-vol
            nfs:
              server: 211.183.3.100
              path: /shared
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nfslb
    spec:
      ports:
      - name: nfslb-port
        port: 80             # Service port
        targetPort: 80       # pod port
      selector:
        app: nfs-ip          # pod label
      type: LoadBalancer

step 2) Deploy the LoadBalancer ConfigMap - define the address pools

root@manager:~/k8slab/lab1# vi lb-config.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system   # written in MetalLB's namespace so that MetalLB picks it up as its config
      name: config
    data:
      config: |                   # content handed to MetalLB
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 211.183.3.201-211.183.3.210
        - name: kia
          protocol: layer2
          addresses:
          - 211.183.3.211-211.183.3.220
        - name: sk
          protocol: layer2
          addresses:
          - 211.183.3.221-211.183.3.230

root@manager:~/k8slab/lab1# k apply -f lb-config.yaml

configmap/config created

step 3) Deploy nfs-ip

root@manager:~/k8slab/lab3_volume# k apply -f nfs-ip.yaml

deployment.apps/nfs-ip created

service/nfslb created

- Verify the deployment

root@manager:~/k8slab/lab3_volume# k get pod,svc

NAME READY STATUS RESTARTS AGE

pod/nfs-ip-cf4bb796-8cj6h 1/1 Running 0 71s

pod/nfs-ip-cf4bb796-f6jhf 1/1 Running 0 71s

pod/nfs-ip-cf4bb796-wr9z6 1/1 Running 0 71s

pod/private-reg 1/1 Running 0 17h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d1h

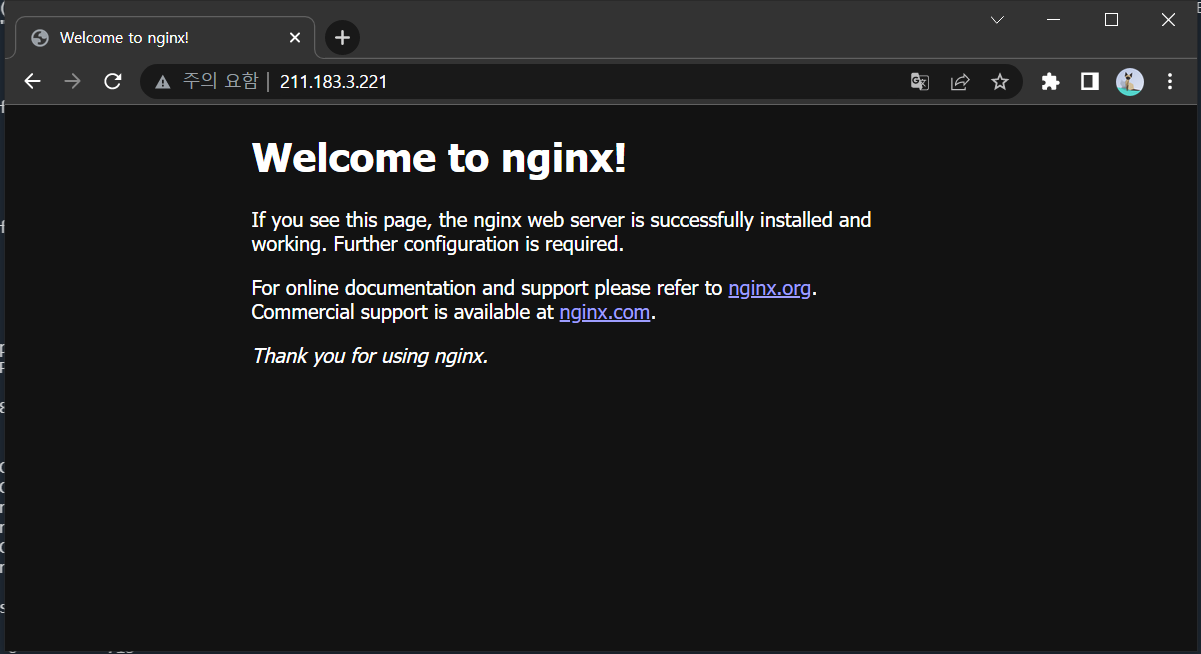

service/nfslb LoadBalancer 10.100.248.33 211.183.3.221 80:30609/TCP 71s-> LoadBalancer의 ip: 211.183.3.221
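To see which pool an assigned address belongs to, the ranges from lb-config.yaml can be compared against the EXTERNAL-IP. The following is a minimal Python sketch and not part of the lab itself: the `POOLS` dictionary just restates the three ranges from the ConfigMap, and `pool_of` and `pool_size` are hypothetical helper names.

```python
import ipaddress

# Address ranges copied from lb-config.yaml (pool name -> first/last address).
POOLS = {
    "default": ("211.183.3.201", "211.183.3.210"),
    "kia": ("211.183.3.211", "211.183.3.220"),
    "sk": ("211.183.3.221", "211.183.3.230"),
}


def pool_of(ip: str):
    """Return the name of the pool whose range contains ip, or None."""
    addr = ipaddress.ip_address(ip)
    for name, (first, last) in POOLS.items():
        if ipaddress.ip_address(first) <= addr <= ipaddress.ip_address(last):
            return name
    return None


def pool_size(name: str) -> int:
    """Number of addresses in a pool, counting both ends of the range."""
    first, last = POOLS[name]
    return int(ipaddress.ip_address(last)) - int(ipaddress.ip_address(first)) + 1


# The EXTERNAL-IP assigned to service/nfslb in the output above.
print(pool_of("211.183.3.221"))
```

For the address assigned to nfslb this reports the sk pool. The sketch only checks range membership; it says nothing about why MetalLB picked that pool.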

- Create index.html in /shared (the volume is mounted at /usr/share/nginx/html/lab3, so the page is served under /lab3/)

root@manager:~/k8slab/lab3_volume# curl -L http://www.nginx.com > /shared/index.html

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 162 0 162 0 0 294 0 --:--:-- --:--:-- --:--:-- 294

100 399k 0 399k 0 0 170k 0 --:--:-- 0:00:02 --:--:-- 256k

실습 2 - 종합 정리

- 모든 노드를 실행하고 master에서 토큰을 발행하여 각 노드에서 해당 토큰을 이용한 뒤, 클러스터에 조인한다.

- manager는 calico를 이용하여 네트워크 애드온을 설치한다. 이는 pod 간 통신에 오버레이 네트워크를 제공한다. 또한 BGP를 이용하는 대규모 라우팅이 제공되는 환경이라면 이 역시 이용 가능한 플로그인으로 동작한다. 단, 우리 실습에서는 L2로 이용한다.

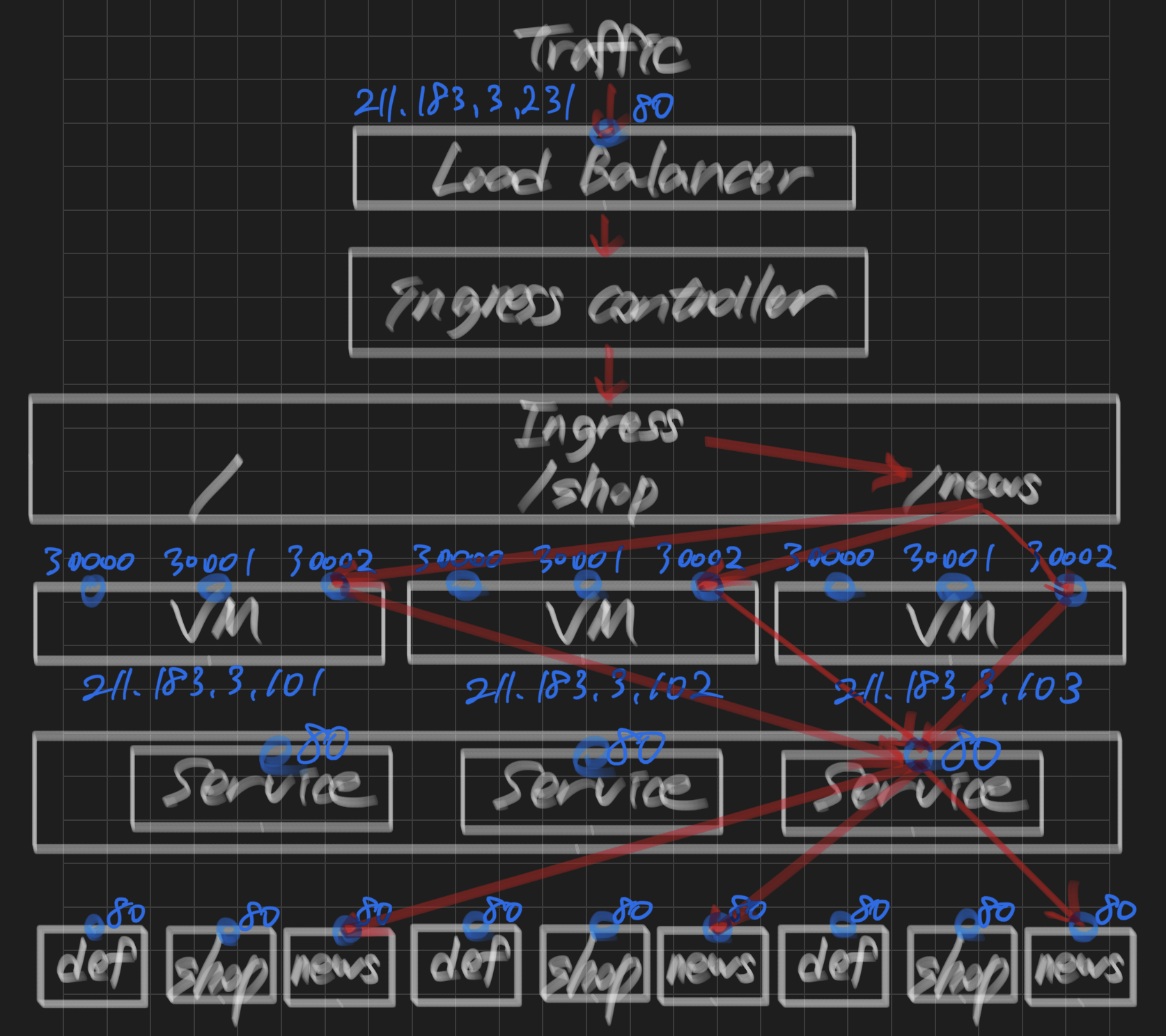

- 다음의 조건을 만족하는 웹서비스를 제공해주세요.

- 외부로의 접속은 LB를 이용한다. (metalLB 사용)

- LB에서 제공하는 주소 대역은 211.183.3.231~211.183.3.249 까지이다.

- deployment

- 3개의 웹서비스를 제공(nginx)

- pod는 각각 0.5개의 CPU, 32MB 메모리가 기본적으로 제공된다.

- 만약 각 pod의 CPU 사용량이 80%를 넘어서게 되면 최대 10개까지 확장 가능해야 한다.

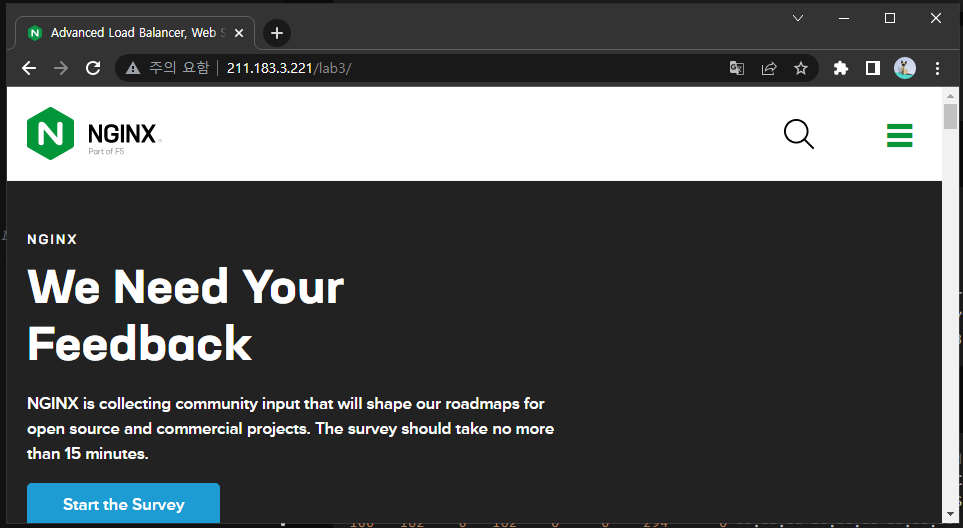

- nginx의 페이지는 ingress를 통해 라우팅되어야 한다. 이는 nginx ingress controller를 이용하여 다음과 같이 구성한다.

vm port pod

211.183.3.20X --> LB --> IC --> Ingress --> / -> default 서비스 --> 30001 3개

/shop -> shop 서비스 --> 30002 3개

/news -> news 서비스 --> 30003 3개 -

nfs를 구성하여 각 pod에 공간을 제공할 수 있어야 한다.

default 서비스: nfs 서버의 /default/index.html

shop 서비스: nfs 서버의 /shop/index.html

news 서비스: nfs 서버의 /news/index.html -

ingress controller는 LB와 연계하여 211.183.3.20X를 이용하고 있다.

www.goyangyee.com을 manager의 /etc/hosts에 등록하고

manager에서는 curl을 이용하여 확인해본다. 그 외의 곳에서는 브라우저에서 확인해본다.

step 1) 클러스터 구성

root@manager:~# kubeadm init --apiserver-advertise-address 211.183.3.100

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 211.183.3.100:6443 --token lmktk6.hfr4jr33wzycb3kf \

--discovery-token-ca-cert-hash sha256:6530d35ff4fdadfd734be7860d3fbcec1323fdac06c5a6a2cd0384d13ee9bf8b

root@manager:~# mkdir -p $HOME/.kube

root@manager:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@manager:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@manager:~# export KUBECONFIG=/etc/kubernetes/admin.conf

[worker1, 2, 3]

root@worker1:~# kubeadm join 211.183.3.100:6443 --token lmktk6.hfr4jr33wzycb3kf \

> --discovery-token-ca-cert-hash sha256:6530d35ff4fdadfd734be7860d3fbcec1323fdac06c5a6a2cd0384d13ee9bf8b

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

- Check the nodes

root@manager:~# k get node

NAME STATUS ROLES AGE VERSION

manager NotReady control-plane,master 2m33s v1.21.0

worker1 NotReady <none> 117s v1.21.0

worker2 NotReady <none> 115s v1.21.0

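worker3 NotReady <none> 111s v1.21.0

-> The nodes stay NotReady until a pod network add-on is installed.

step 2) Add the Calico add-on (overlay network between pods)

Reference: https://velog.io/@ptah0414/쿠버네티스-volume#step-2-calico-애드온-추가pod간-오버레이-네트워크-구성

- Log in to Docker on the manager node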

root@manager:~# docker login

Login with your Docker ID to push and pull images from Docker Hub. If you don't have a Docker ID, head over to https://hub.docker.com to create one.

Username: ptah0414

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

- Add the Calico add-on

root@manager:~# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

poddisruptionbudget.policy/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

serviceaccount/calico-node created

configmap/calico-config created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

deployment.apps/calico-kube-controllers created

- Check the pods

root@manager:~# k get pod -n kube-system -w

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-867d8d6bd8-r4hb8 1/1 Running 0 4m1s

calico-node-2sz4c 1/1 Running 0 4m1s

calico-node-m2h6w 1/1 Running 0 4m1s

calico-node-rt277 1/1 Running 0 4m1s

calico-node-v7fmd 1/1 Running 0 4m1s

coredns-558bd4d5db-9xj7b 1/1 Running 0 7m19s

coredns-558bd4d5db-rlfm5 1/1 Running 0 7m19s

etcd-manager 1/1 Running 0 7m27s

kube-apiserver-manager 1/1 Running 0 7m27s

kube-controller-manager-manager 1/1 Running 0 7m27s

kube-proxy-6qrbn 1/1 Running 0 7m1s

kube-proxy-gsz29 1/1 Running 0 6m55s

kube-proxy-hfn5f 1/1 Running 0 7m19s

kube-proxy-vrm66 1/1 Running 0 6m59s

kube-scheduler-manager 1/1 Running 0 7m27s

step 3) NFS configuration

- Install the nfs packages

[manager]

root@manager:~/k8slab# apt install -y nfs-server

[worker 1, 2, 3]

root@worker1:~# apt install -y nfs-common

- Create the shared directory

root@manager:~/k8slab# mkdir /shared

root@manager:~/k8slab# chmod 777 /shared

- Export /shared to the network

root@manager:~/k8slab# vi /etc/exports

    /shared 211.183.3.0/24(rw,no_root_squash,sync)

- Disable the firewall and restart nfs-server

root@manager:~/k8slab# ufw disable

Firewall stopped and disabled on system startup

root@manager:~/k8slab# systemctl restart nfs-server

- Create the directories

root@manager:/shared# mkdir default shop news

- Create the index.html files

root@manager:/shared# curl -L https://news.naver.com/ > /shared/news/index.html

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 717k 0 717k 0 0 1807k 0 --:--:-- --:--:-- --:--:-- 1812k

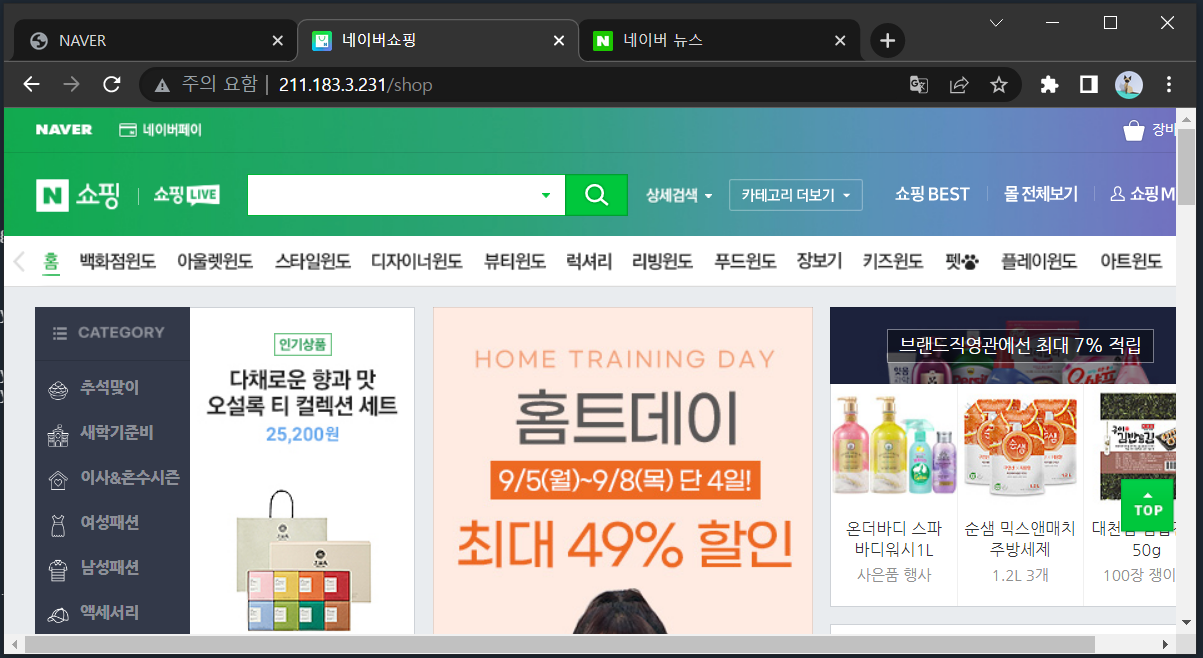

root@manager:/shared# curl -L https://shopping.naver.com/ > /shared/shop/index.html

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 178 100 178 0 0 1271 0 --:--:-- --:--:-- --:--:-- 1271

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 664k 0 664k 0 0 2231k 0 --:--:-- --:--:-- --:--:-- 2231k

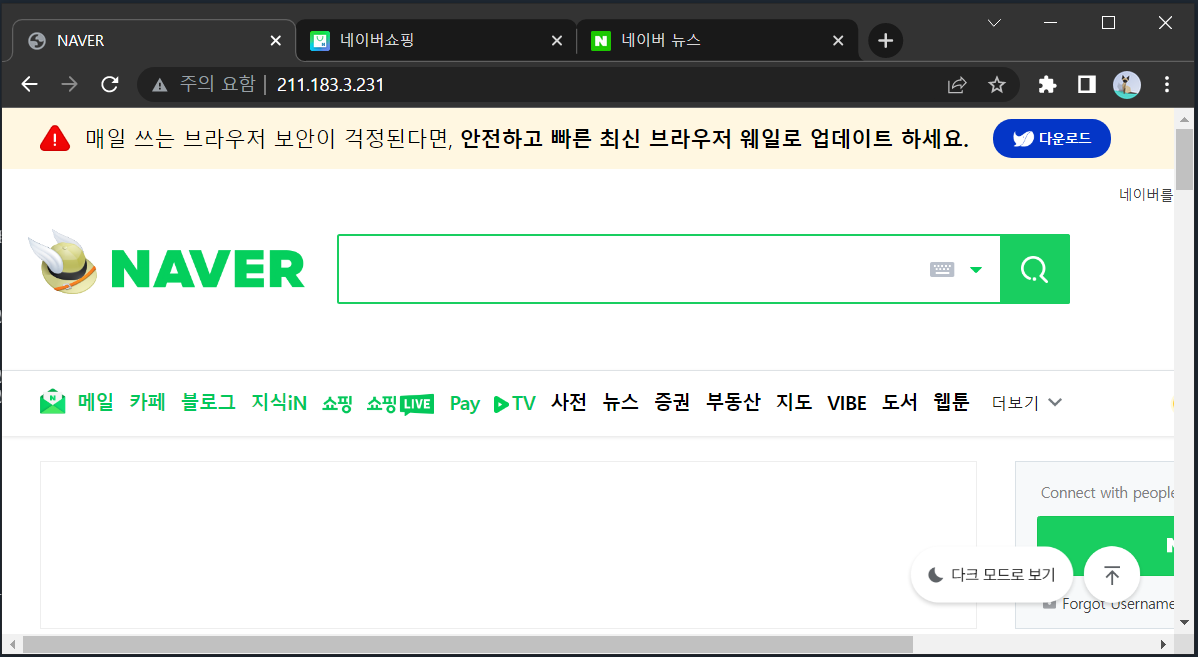

root@manager:/shared# curl -L https://naver.com/ > /shared/default/index.html

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 162 0 162 0 0 1572 0 --:--:-- --:--:-- --:--:-- 1572

0 0 0 0 0 0 0 0 --:--:-- 0:00:05 --:--:-- 0

100 206k 0 206k 0 0 39090 0 --:--:-- 0:00:05 --:--:-- 39090

step 4) Create the namespace

Reference:

https://velog.io/@ptah0414/쿠버네티스-Volume#step-2-namespace-생성

- Write the namespace yaml

root@manager:~/k8slab# vi rapa-ns.yaml

    apiVersion: v1
    kind: Namespace
    metadata:
      name: rapa

- Deploy the namespace

root@manager:~/k8slab# k apply -f rapa-ns.yaml

namespace/rapa created

step 5) MetalLB configuration

- Create the metallb-system Namespace

root@manager:~# k create ns metallb-system

namespace/metallb-system created

- Deploy the controller and speaker

root@manager:~# kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/controller created

podsecuritypolicy.policy/speaker created

serviceaccount/controller created

serviceaccount/speaker created

clusterrole.rbac.authorization.k8s.io/metallb-system:controller created

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created

role.rbac.authorization.k8s.io/config-watcher created

role.rbac.authorization.k8s.io/pod-lister created

role.rbac.authorization.k8s.io/controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created

rolebinding.rbac.authorization.k8s.io/config-watcher created

rolebinding.rbac.authorization.k8s.io/pod-lister created

rolebinding.rbac.authorization.k8s.io/controller created

daemonset.apps/speaker created

deployment.apps/controller created

root@manager:~/k8slab/lab/deploy# k get pod -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-66445f859d-wr9gf 1/1 Running 0 41m

speaker-62xkb 1/1 Running 0 41m

speaker-hmd4g 1/1 Running 0 41m

speaker-j7z2r 1/1 Running 0 41m

speaker-wvhkb 1/1 Running 0 41m

- MetalLB ConfigMap configuration

- Set the range of IP addresses that LoadBalancer Services can be assigned

root@manager:~/k8slab# vi metallb.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 211.183.3.231-211.183.3.249

- Deploy the MetalLB ConfigMap

root@manager:~/k8slab# k apply -f metallb.yaml

configmap/config created

step 6) Ingress configuration

- Write the ingress yaml

- Domain (path) settings

- /

- /shop

- /news

root@manager:~/k8slab# vi ingress-config.yaml

Note the namespace: rapa setting.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      namespace: rapa
      name: ingress-nginx
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /
        kubernetes.io/ingress.class: "nginx"
    spec:
      rules:
      - http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: default    # Service name
                port:
                  number: 80     # port of the NodePort Service
          - path: /shop
            pathType: Prefix
            backend:
              service:
                name: shop       # Service name
                port:
                  number: 80     # port of the NodePort Service
          - path: /news
            pathType: Prefix
            backend:
              service:
                name: news       # Service name
                port:
                  number: 80     # port of the NodePort Service

step 7) Deploy the ingress controller

root@manager:~/k8slab# k apply -f \

> https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.2/deploy/static/provider/cloud/deploy.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

step 8) Deploy the nginx Deployments and NodePort Services

Reference:

https://velog.io/@ptah0414/쿠버네티스-Volume#step-3-shop-blog-default-페이지의-yaml-작성

- default.yaml

root@manager:~/k8slab/lab/deploy# vi default.yaml

Note the namespace: rapa setting on both the nginx Deployment and the NodePort Service.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: rapa
      name: default
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: default       # label the replicas are selected by
      template:              # pod definition
        metadata:
          name: default      # pod name
          labels:
            app: default     # 3 pods are created with this label
        spec:
          containers:
          - name: default
            image: nginx
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: 500m
                memory: 32Mi
            volumeMounts:
            - name: default-vol
              mountPath: /usr/share/nginx/html
          volumes:
          - name: default-vol
            nfs:
              server: 211.183.3.100
              path: /shared/default
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: rapa
      name: default
    spec:
      ports:
      - name: default-port
        port: 80             # Service port
        targetPort: 80       # pod port
        nodePort: 30001
      selector:
        app: default         # pod label
      type: NodePort

-> nodePort: 30001

- shop.yaml

root@manager:~/k8slab/lab/deploy# vi shop.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: rapa
      name: shop
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: shop          # label the replicas are selected by
      template:              # pod definition
        metadata:
          name: shop         # pod name
          labels:
            app: shop        # 3 pods are created with this label
        spec:
          containers:
          - name: shop
            image: nginx
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: 500m
                memory: 32Mi
            volumeMounts:
            - name: shop-vol
              mountPath: /usr/share/nginx/html
          volumes:
          - name: shop-vol
            nfs:
              server: 211.183.3.100
              path: /shared/shop
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: rapa
      name: shop
    spec:
      ports:
      - name: shop-port
        port: 80             # Service port
        targetPort: 80       # pod port
        nodePort: 30002
      selector:
        app: shop            # pod label
      type: NodePort

-> nodePort: 30002

- news.yaml

root@manager:~/k8slab/lab/deploy# vi news.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: rapa
      name: news
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: news          # label the replicas are selected by
      template:              # pod definition
        metadata:
          name: news         # pod name
          labels:
            app: news        # 3 pods are created with this label
        spec:
          containers:
          - name: news
            image: nginx
            ports:
            - containerPort: 80
            resources:
              requests:
                cpu: 500m
                memory: 32Mi
            volumeMounts:
            - name: news-vol
              mountPath: /usr/share/nginx/html
          volumes:
          - name: news-vol
            nfs:
              server: 211.183.3.100
              path: /shared/news
    ---
    apiVersion: v1
    kind: Service
    metadata:
      namespace: rapa
      name: news
    spec:
      ports:
      - name: news-port
        port: 80             # Service port
        targetPort: 80       # pod port
        nodePort: 30003
      selector:
        app: news            # pod label
      type: NodePort

-> nodePort: 30003

- Deploy

root@manager:~/k8slab/lab/deploy# k apply -f .

deployment.apps/default created

service/default created

deployment.apps/news created

service/news created

deployment.apps/shop created

service/shop created

root@manager:~# k get deploy,svc -n rapa

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/default 3/3 3 3 47m

deployment.apps/news 3/3 3 3 47m

deployment.apps/shop 3/3 3 3 47m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/default NodePort 10.105.181.185 <none> 80:30001/TCP 47m

service/news NodePort 10.111.35.174 <none> 80:30003/TCP 47m

service/shop NodePort 10.108.228.189 <none> 80:30002/TCP 47m

step 9) Deploy the ingress

root@manager:~/k8slab/lab# k apply -f ingress-config.yaml

ingress.networking.k8s.io/ingress-nginx created

root@manager:~/k8slab/lab/deploy# k get ing -n rapa

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-nginx <none> * 211.183.3.231 80 40m

step 10) HPA configuration

- Deploy the metrics server

Reference: https://velog.io/@ptah0414/K8S-쿠버네티스-구성하기#step-1-깃허브에서-metric-server-소스코드-다운로드

- Configure the HPA

root@manager:~/k8slab/lab/deploy# k autoscale deploy default -n rapa \

> --cpu-percent=80 \

> --min=3 \

> --max=10

horizontalpodautoscaler.autoscaling/default autoscaled

root@manager:~/k8slab/lab/deploy# k autoscale deploy shop -n rapa \

> --cpu-percent=80 \

> --min=3 \

> --max=10

horizontalpodautoscaler.autoscaling/shop autoscaled

root@manager:~/k8slab/lab/deploy# k autoscale deploy news -n rapa \

> --cpu-percent=80 \

> --min=3 \

> --max=10

horizontalpodautoscaler.autoscaling/news autoscaled

root@manager:~/k8slab/lab/deploy# k get hpa -n rapa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

default Deployment/default 0%/80% 3 10 3 38s

news Deployment/news 0%/80% 3 10 3 28s

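shop Deployment/shop 0%/80% 3 10 3 32s

- Generate load on default from outside (http://211.183.3.231:80/)

Note: the TARGETS column in the watch output shows 10%, not the 80% configured above, so the HPAs were presumably recreated with a lower threshold to make scaling easy to trigger.

root@manager:~/k8slab/lab/deploy# ab -c 1000 -n 200 -t 60 http://211.183.3.231:80/

root@manager:~/k8slab/lab# kubectl get hpa -n rapa -w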

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

default Deployment/default 2%/10% 3 10 3 3m43s

news Deployment/news 0%/10% 3 10 3 3m49s

shop Deployment/shop 0%/10% 3 10 3 3m37s

default Deployment/default 14%/10% 3 10 3 3m46s

default Deployment/default 12%/10% 3 10 5 4m1s

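-> The replicas of default increase

- Generate load on shop from outside (intended URL: http://211.183.3.231:80/shop/; the command is reproduced as it was run, with the port placed after the path)

root@manager:~/k8slab/lab/deploy# ab -c 1000 -n 200 -t 60 http://211.183.3.231/shop:80/

root@manager:~/k8slab/lab# kubectl get hpa -n rapa -w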

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

default Deployment/default 0%/10% 3 10 3 11m

news Deployment/news 0%/10% 3 10 3 11m

shop Deployment/shop 7%/10% 3 10 3 11m

shop Deployment/shop 9%/10% 3 10 3 11m

shop Deployment/shop 13%/10% 3 10 3 11m

shop Deployment/shop 9%/10% 3 10 4 11m

-> The replicas of shop increase

- Generate load on news from outside (intended URL: http://211.183.3.231:80/news/; the command is reproduced as it was run, with the port placed after the path)

root@manager:~/k8slab/lab/deploy# ab -c 1000 -n 200 -t 60 http://211.183.3.231/news:80/

root@manager:~/k8slab/lab# kubectl get hpa -n rapa -w

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

default Deployment/default 0%/10% 3 10 3 21m

news Deployment/news 0%/10% 3 10 3 21m

shop Deployment/shop 0%/10% 3 10 3 20m

news Deployment/news 2%/10% 3 10 3 21m

news Deployment/news 11%/10% 3 10 3 21m

news Deployment/news 9%/10% 3 10 4 21m

-> The replicas of news increase
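The replica counts in the watch output are consistent with the scaling rule documented for the HPA, desired = ceil(current replicas x current usage / target usage), where CPU usage is measured as a percentage of the pod's CPU request (500m here). Below is a minimal Python sketch of that rule only. The function name `desired_replicas` is hypothetical, and the sketch deliberately ignores details of the real controller such as the tolerance band, stabilization windows and handling of unready pods.

```python
import math


def desired_replicas(current: int, usage_pct: float, target_pct: float,
                     min_pods: int, max_pods: int) -> int:
    """desired = ceil(current * usage / target), clamped to [min_pods, max_pods]."""
    desired = math.ceil(current * usage_pct / target_pct)
    return max(min_pods, min(max_pods, desired))


# Peak values read from the watch output (target shown there as 10%).
print(desired_replicas(3, 14, 10, 3, 10))  # default
print(desired_replicas(3, 13, 10, 3, 10))  # shop
print(desired_replicas(3, 11, 10, 3, 10))  # news
```

With 3 replicas and a 10% target, the peaks of 14%, 13% and 11% give 5, 4 and 4 replicas, which matches the REPLICAS column after each load test.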

step 11) hosts 등록

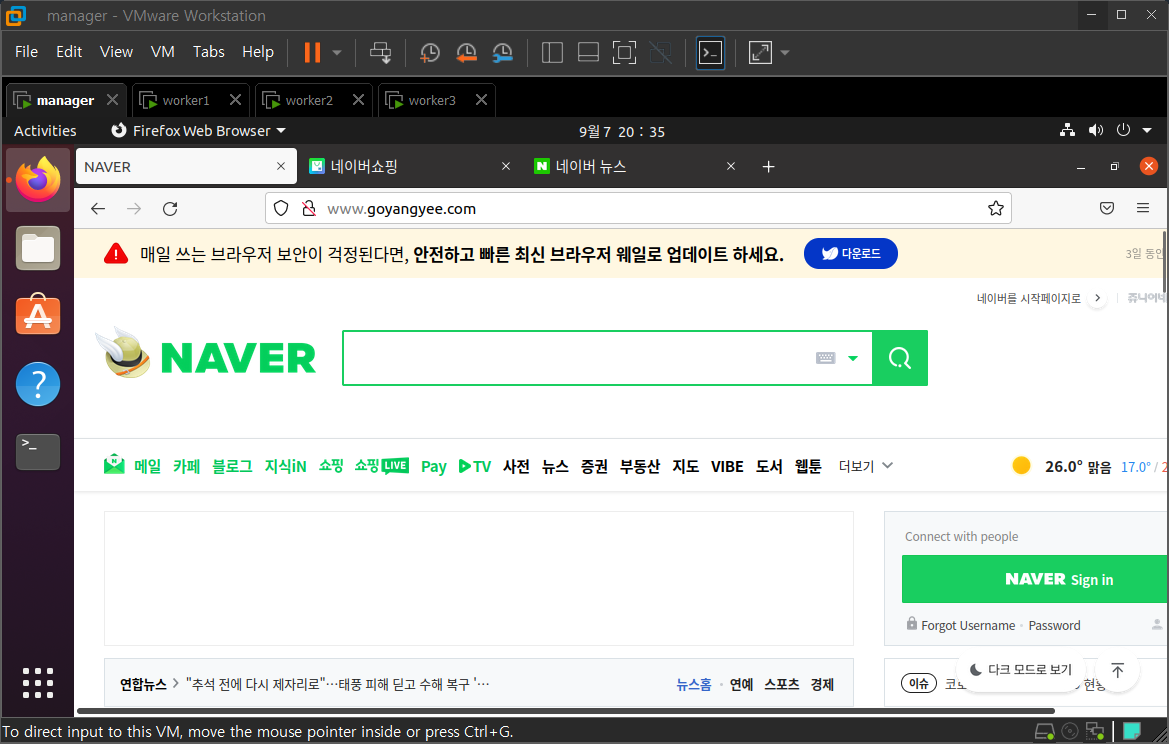

root@manager:~/k8slab/lab/deploy# vi /etc/hosts211.183.3.231 www.goyangyee.com- www.goyangyee.com 접속

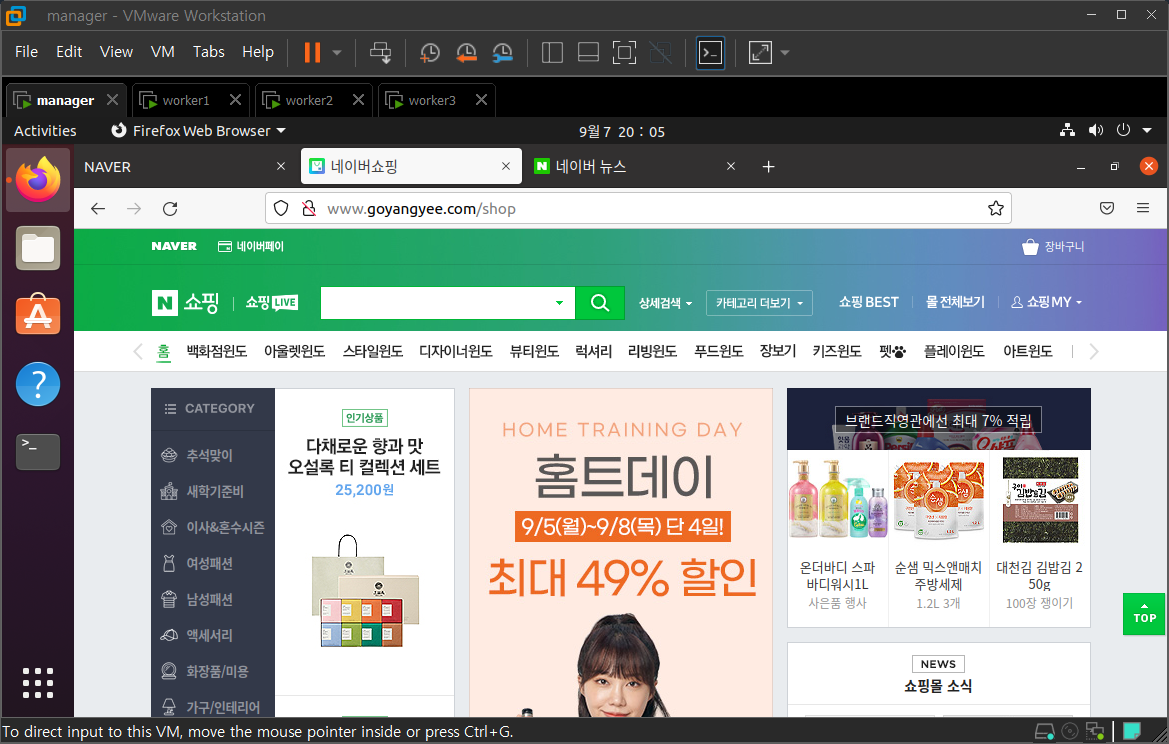

- www.goyangyee.com/shop 접속

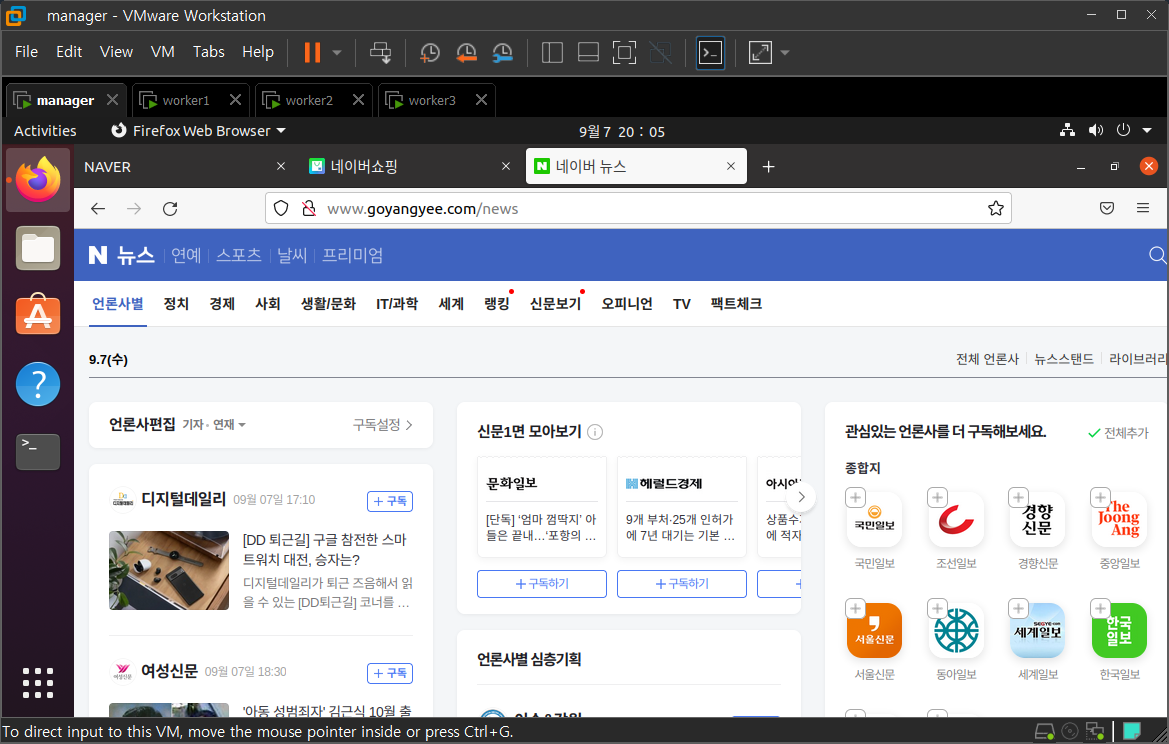

- www.goyangyee.com/news 접속
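The three URLs are sent to different Services by the path rules in ingress-config.yaml. As a rough illustration, here is a Python sketch of `pathType: Prefix` matching as the Kubernetes API describes it: paths are compared element by element, and the longest matching rule wins. The `RULES` table restates the ingress rules; `segments` and `route` are hypothetical names, and an actual controller (ingress-nginx with `rewrite-target`, as used here) may behave differently in edge cases.

```python
# Path rules from ingress-config.yaml (path -> Service name).
RULES = {"/": "default", "/shop": "shop", "/news": "news"}


def segments(path: str):
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def route(path: str) -> str:
    """Pick the Service whose rule is the longest element-wise prefix of path."""
    wanted = segments(path)
    best_rule = "/"  # "/" has no elements, so it matches every path
    for rule in RULES:
        parts = segments(rule)
        is_prefix = wanted[: len(parts)] == parts
        if is_prefix and len(parts) > len(segments(best_rule)):
            best_rule = rule
    return RULES[best_rule]


for url_path in ("/", "/shop", "/news/", "/shopping"):
    print(url_path, "->", route(url_path))
```

Under this rule `/shopping` falls through to the default Service, because `shopping` and `shop` are different path elements even though one string starts with the other.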

Lab 3 - PV, PVC

PV: provided by ops

PVC: requested by dev

- Create the yaml files

root@manager:~/k8slab/pvpvc# touch pv.yaml pvc.yaml deploy.yaml

root@manager:~/k8slab/pvpvc# tree

.

├── deploy.yaml

├── pvc.yaml

└── pv.yaml

step 0) NFS configuration

- Set up the /shared directory

root@manager:~/k8slab/pvpvc# mkdir /shared ; chmod 777 /shared

root@manager:~/k8slab/pvpvc# echo "HELLO ALL" > /shared/index.html

- Configure exports

root@manager:~/k8slab/pvpvc# vi /etc/exports

    /shared 211.183.3.0/24(rw,no_root_squash,sync)

- Restart nfs

root@manager:~/k8slab/pvpvc# systemctl restart nfs-server

step 1) PV and PVC configuration

- Write the pv yaml

root@manager:~/k8slab/pvpvc# vi pv.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-pv
    spec:
      capacity:
        storage: 100Mi
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      nfs:
        server: 211.183.3.100
        path: /shared

PV: offers 100MB

- Write the pvc yaml

root@manager:~/k8slab/pvpvc# vi pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 50Mi

PVC: asks for 50MB

- Deploy the pv and pvc

root@manager:~/k8slab/pvpvc# k apply -f .

persistentvolume/nfs-pv created

persistentvolumeclaim/pvc created

- Verify the pv and pvc

root@manager:~/k8slab/pvpvc# k get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/nfs-pv 100Mi RWX Retain Bound default/pvc 10s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

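persistentvolumeclaim/pvc Bound nfs-pv 100Mi RWX 10s

The pv is Bound to default/pvc. The claim asked for 50Mi but reports the full 100Mi capacity of the PV it is bound to.

- Delete the resources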

root@manager:~/k8slab/pvpvc# k delete -f .

persistentvolume "nfs-pv" deleted

persistentvolumeclaim "pvc" deleted

- Edit the pvc yaml

root@manager:~/k8slab/pvpvc# vi pvc.yaml

    storage: 200Mi

PVC: asks for 200MB

- Deploy

root@manager:~/k8slab/pvpvc# k apply -f .

persistentvolume/nfs-pv created

persistentvolumeclaim/pvc created

- Verify the deployment

root@manager:~/k8slab/pvpvc# k get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/nfs-pv 100Mi RWX Retain Available 19s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

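persistentvolumeclaim/pvc Pending 19s

PV: offers 100MB

PVC: asks for 200MB

=> If the PVC (requested size) is larger than the PV (offered size), they are not bound

PV phases

: Available (not bound to any claim) -> Bound (bound to a claim) -> Released (was bound, and the claim has since been deleted)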
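The two outcomes seen so far (Bound for a 50Mi claim, Pending for a 200Mi claim) follow from a simple size and access-mode check. The Python sketch below illustrates that check only; `to_bytes` and `can_bind` are hypothetical helper names, and the real binding logic also takes storageClassName, label selectors and volume mode into account.

```python
# Binary suffixes that appear in this lab's manifests.
UNITS = {"Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '100Mi' to bytes (Ki/Mi/Gi suffixes only)."""
    for suffix, factor in UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: treat as a plain byte count


def can_bind(pv_capacity, pv_modes, pvc_request, pvc_modes) -> bool:
    """True if the PV is large enough and offers every requested access mode."""
    big_enough = to_bytes(pv_capacity) >= to_bytes(pvc_request)
    modes_ok = set(pvc_modes) <= set(pv_modes)
    return big_enough and modes_ok


rwx = ["ReadWriteMany"]
print(can_bind("100Mi", rwx, "50Mi", rwx))   # first attempt in this lab
print(can_bind("100Mi", rwx, "200Mi", rwx))  # second attempt in this lab
```

The first call corresponds to the Bound result and the second to the Pending claim; a 100Mi request against the same PV passes the check as well.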

- Change the pvc to 100MB and redeploy

root@manager:~/k8slab/pvpvc# k delete -f .

persistentvolume "nfs-pv" deleted

persistentvolumeclaim "pvc" deleted

root@manager:~/k8slab/pvpvc# vi pvc.yaml

    storage: 100Mi

PVC: asks for 100MB

root@manager:~/k8slab/pvpvc# k apply -f .

persistentvolume/nfs-pv created

persistentvolumeclaim/pvc created

step 2) Deploy the Deployment

- Write the deploy yaml

root@manager:~/k8slab/pvpvc# vi deploy.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: testdeploy
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: testnginx
            image: nginx
            volumeMounts:
            - name: pvpvc
              mountPath: /usr/share/nginx/html
          volumes:
          - name: pvpvc
            persistentVolumeClaim:
              claimName: pvc

- Deploy the Deployment

root@manager:~/k8slab/pvpvc# k apply -f deploy.yaml

deployment.apps/testdeploy created

step 3) Verify the mount

- Verify the deployment

root@manager:~/k8slab/pvpvc# k get pod

NAME READY STATUS RESTARTS AGE

testdeploy-7694f559b4-f9hzt 1/1 Running 0 61s

testdeploy-7694f559b4-lsxrt 1/1 Running 0 61s

testdeploy-7694f559b4-nck8t 1/1 Running 0 61s

- Check the files

root@manager:~/k8slab/pvpvc# k exec testdeploy-7694f559b4-f9hzt -- ls /usr/share/nginx/html

index.html

root@manager:~/k8slab/pvpvc# k exec testdeploy-7694f559b4-f9hzt -- cat /usr/share/nginx/html/index.html

HELLO ALL

- Check the pod's disk

root@manager:~/k8slab/pvpvc# k exec testdeploy-7694f559b4-f9hzt -- df -h | grep shared

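211.183.3.100:/shared 20G 9.7G 8.4G 54% /usr/share/nginx/html

-> 100MB was requested, but the full 20GB is usable: the PV capacity is used to match claims, and the NFS volume itself does not enforce it

- Check the local disk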

root@manager:~/k8slab/pvpvc# df -h | grep /dev/sda5

/dev/sda5 20G 9.7G 8.4G 54% /

-> The pod's volume is backed by /dev/sda5 on the manager, the disk that holds /shared

Lab 4 - Quota (limiting PVCs)

root@manager:~/k8slab/pvpvc# vi quota.yaml

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: testquota
    spec:
      hard:
        persistentvolumeclaims: "5"
        requests.storage: "250Mi"

Storage limit: 250MB

- Deploy

root@manager:~/k8slab/pvpvc# k apply -f quota.yaml

resourcequota/testquota created

- Create PVC manifests up to pvc4 (4 in total)

root@manager:~/k8slab/pvpvc# cp pvc.yaml pvc2.yaml

root@manager:~/k8slab/pvpvc# cp pvc.yaml pvc3.yaml

root@manager:~/k8slab/pvpvc# cp pvc.yaml pvc4.yaml

- pvc2 yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc2
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 100Mi

- pvc3 yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc3
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 100Mi

- pvc4 yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc4
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 100Mi

- Deploy pvc2

root@manager:~/k8slab/pvpvc# k apply -f pvc2.yaml

persistentvolumeclaim/pvc2 created

- Deploy pvc3

root@manager:~/k8slab/pvpvc# k apply -f pvc3.yaml

Error from server (Forbidden): error when creating "pvc3.yaml": persistentvolumeclaims "pvc3" is forbidden: exceeded quota: testquota, requested: requests.storage=100Mi, used: requests.storage=200Mi, limited: requests.storage=250Mi

-> Creation is refused because it would exceed the 250MB limit (200Mi already used by pvc and pvc2, plus the 100Mi requested)
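The arithmetic behind that error can be replayed with a short Python sketch. It is a simplified model of the two limits in quota.yaml (total requested storage and number of claims), not the actual admission code; `apply_claims` is a hypothetical name, and sizes are given in Mi for readability.

```python
def apply_claims(requests_mi, storage_limit_mi, count_limit):
    """Apply claims in order; return (admitted, rejected) lists of claim names."""
    used = 0
    admitted, rejected = [], []
    for name, size in requests_mi:
        within_count = len(admitted) < count_limit
        within_storage = used + size <= storage_limit_mi
        if within_count and within_storage:
            used += size
            admitted.append(name)
        else:
            rejected.append(name)
    return admitted, rejected


# pvc from Lab 3 plus the copies made above, each requesting 100Mi.
claims = [("pvc", 100), ("pvc2", 100), ("pvc3", 100)]
print(apply_claims(claims, storage_limit_mi=250, count_limit=5))
```

pvc and pvc2 fit (200Mi used), while pvc3 would bring the total to 300Mi and is rejected, even though the limit of 5 claims has not been reached.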

A StorageClass acts as a kind of group for PVs and PVCs: a claim only binds to a volume of the same class.

It is mainly used for dynamic provisioning.