Batch Normalization

Batch Normalization

Definition

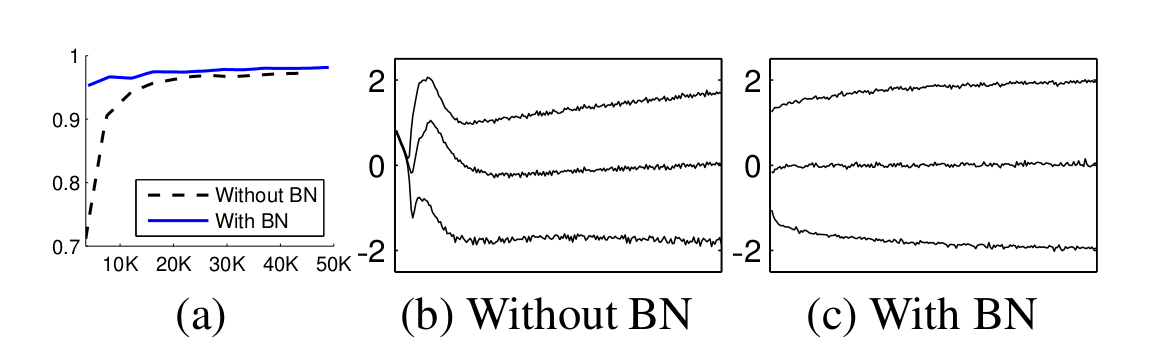

- 인공신경망을 re-centering과 re-scaling으로 layer의 input 정규화를 통해 더 빠르고 안정화시키는 방법

Motivation

Internal covariate shift

- Covariate shift : 이전 레이어의 파라미터 변화로 현재 레이어 입력 분포가 바뀌는 현상

- Internal covariate shift : 레이어 통과시 마다 covariate shift가 발생해 입력 분포가 약간씩 변하는 현상

- 망이 깊어짐에 따라 작은 변화가 뒷단에 큰 영향을 미침

- Covariate Shift 줄이는 방법

- layer's input 을 whitening 시킴(입력 평균:0, 분산:1)

- whitening이 backpropagation과 무관하게 진행되기 때문에 특정 파라미터가 계속 커질 수 있음(loss가 변하지 않으면 최적화 과정동안 특정 변수 계속 커지는 현상 발생 가능)

- BN(Batch Normalization)을 통해 조절(평균, 분산도 학습시에 같이 조절됨)

Gradient Vanishing / Exploding problem

- 간접적 방법

- change activation function : Sigmoid 대신 ReLU 사용

- careful initialization : 가중치 초기화를 잘 함

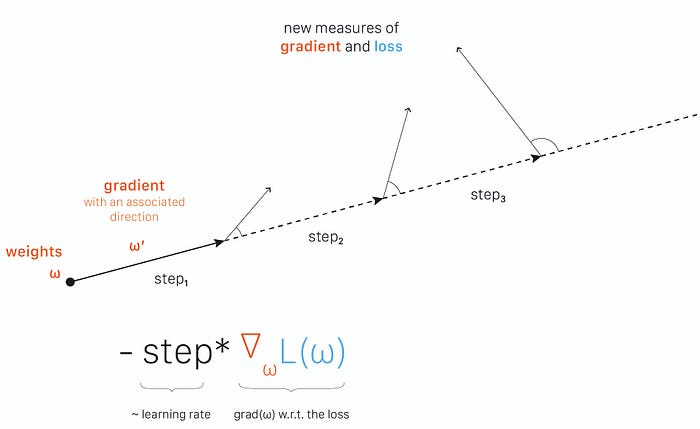

- small learning rate : gradient exploding 방지를 위해 작은 learning rate

- 배치 정규화

- 학습 과정 전체를 안정화 해서 학습속도 가속

Normalization

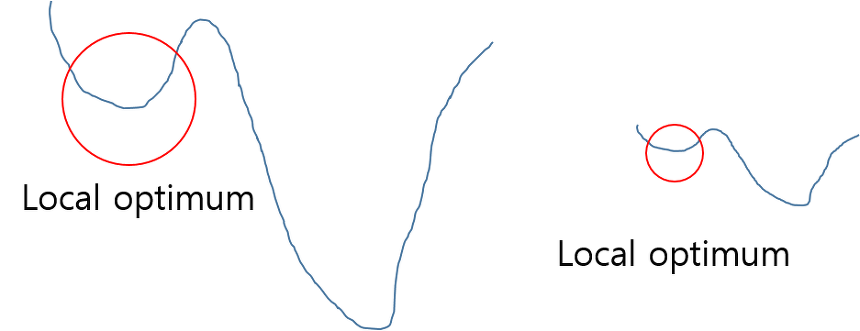

- local optimum(minima or maxima) 방지

Training

- Input: Values of x over a mini-batch: Parameters to be learned:

- Output:

- // mini-batch mean

- // mini-batch variance

- // normalize

- // scale and shift

- whitening과의 차이점 : 평균, 분산 구한 후 정규화 시키고 scale과 shift연산을 위해 가 추가되고 정규화 시킨 부분을 원래대로 돌리는 identity mapping도 가능하고 학습을 통해 를 정할 수 있어 단순하게 정규화만 할 때 보다 강력해짐

- 보통 non-linear activation function 앞에 배치

- BN은 신경망에 포함돼 역전파를 통해 학습 가능하고, 이 때 chain rule 적용

Training vs Test

Training

- mini-batch 마다 를 구하고 그 값을 저장

Test

- 학습 시 mini-batch마다 구했던 의 평균을 사용

- 분산 : 분산의 평균에 을 곱함(통계학적으로 unbiased variance에는 Bessel's correction을 통해 보정)

- 학습 전체 데이터에 대한 분산이 아니라 mini-batch 들의 분산을 통해 전체 분산 추정 시 통계학적 보정을 위해 베셀 보정값을 곱해주는 방식으로 추정

pseudo code

- Input : Network N with trainable parameters ; subset of activations

- Output : Batch-normalized network for inference,

-

1: // Training BN network

-

2:

-

3: Add transformation to (Alg. 1)

-

4: Modify each layer in with input to take instead

-

5: end for

-

6: Train to optimize the parameters

-

7: // Inference BN network with frozen parameters

-

8:

-

9: // For clarity,

-

10: Process multiple training mini-batches , each of size m, and average over them:

$$E[x] \gets E_{\mathcal{B}}[\mu_{\mathcal{B}}]$$ -

11: In , replace the transform with

-

12: end for

-

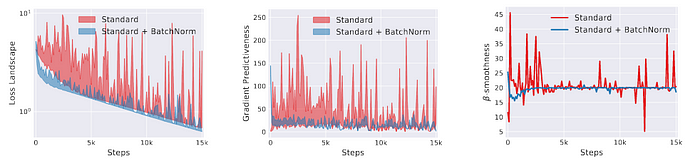

Optimization landscape smoother

- 최적화 문제를 reparametrize함 ⇒ 학습을 빠르고 쉽게함

Side Effect

- orthogonality matter 때문에 오버피팅을 피하기 위해 BN에 의존하면 안 됨(한 가지 문제를 다루는데 여러가지 module이 필요하면 더 어려움)

- 배치 사이즈가 커질 수록 regularization이 적게된다.(noise impact를 줄임)

Conclusion

- 단순하게 평균, 분산을 구하는 것이 아닌, 를 통한 변환으로 유용하게 되고, 신경망의 중간에 BN이 위치하게 되어 학습으로 를 구할 수 있게 됨

- Covariate shift 문제를 줄여줌 ⇒ 성능 향상, 빠른 학습

Reference

https://en.wikipedia.org/wiki/Batch_normalization

https://arxiv.org/pdf/1502.03167.pdf

https://eehoeskrap.tistory.com/430

https://m.blog.naver.com/laonple/220808903260

https://towardsdatascience.com/batch-normalization-in-3-levels-of-understanding-14c2da90a338

https://www.analyticsvidhya.com/blog/2021/03/introduction-to-batch-normalization/