Exercise is on a separate piece of paper

Cache

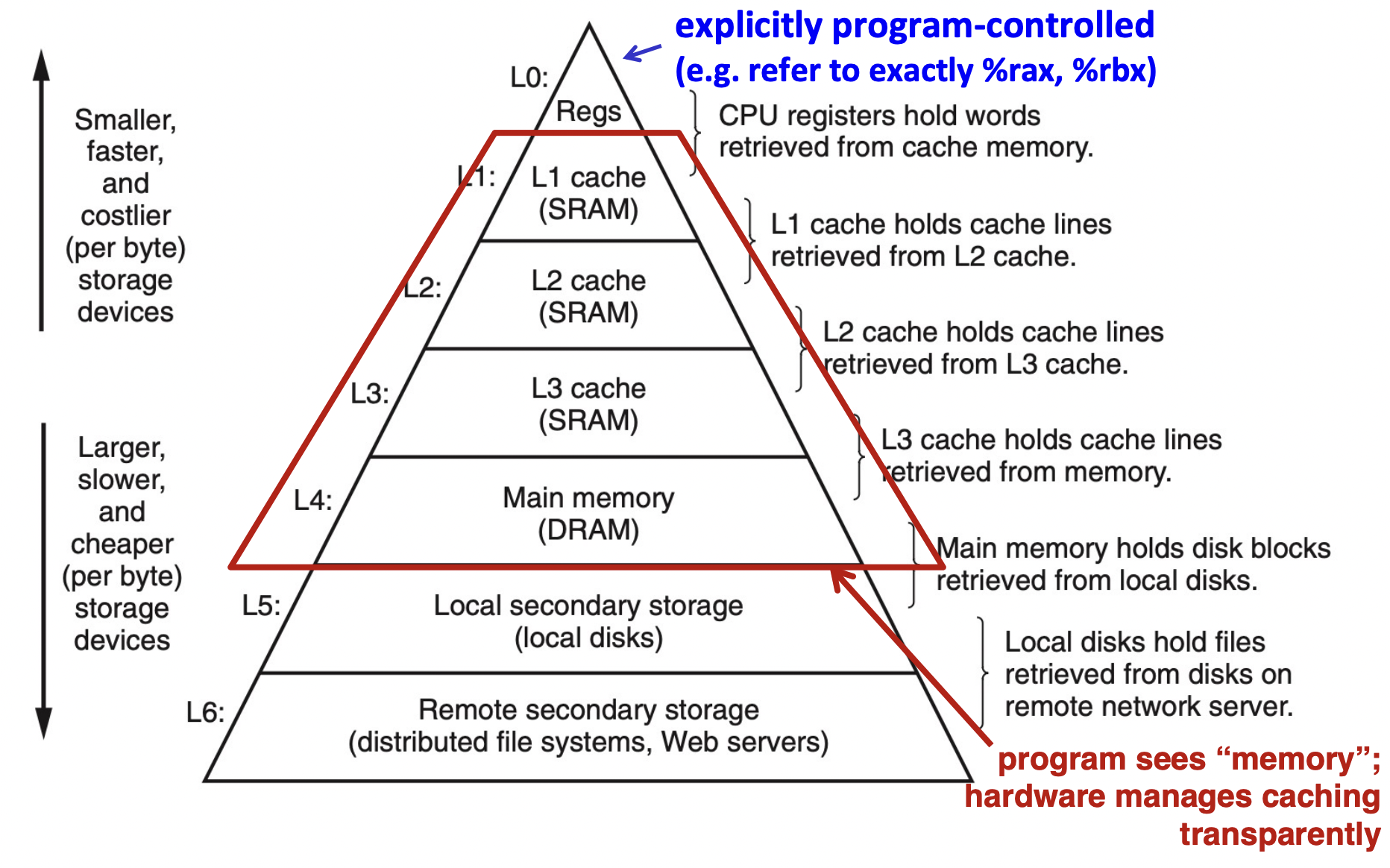

- Memory with short access time used for the storage of frequently of recently used instruction or data

- Cache memories are small, fast SRAM-based memories managed automatically in hardware

- Hold frequently accessed blocks of main memory

- CPU looks first for data in L1, then in L2, then in main memory

- Cache

- Smaller, faster, more expensive memory

- Subset of the blocks

- Memory

- Larger, slower, cheaper memory

- Viewed as partitioned into blocks

Locality

- Programs tend to use data and instructions with addresses

near or equal to those they have used recently

- Temporal Locality

- Recently referenced items are likely

to be referenced again in the near future

- Recently referenced items are likely

- Spatial Locality

- Items with nearby addresses tend

to be referenced close together in time

- Items with nearby addresses tend

sum = 0;

for (i = 0; i < n; i++) {

sum += a[i];

}

return sum;- Data

- Temporal :

sum - Spatial : consecutive elements of array

a[]

- Temporal :

- Instructions

- Temporal : cycle through loop repeatedly

- Spatial : reference instruction in sequence

Memory Hierarchies

Cache performance Metrices

- Hit Rate

- cache hits / number of references

- Miss Rate

- cache misses / number of references

- Hit time

- time to deliver a line(block) in the cache to the processor

- include time to determine whether the line is in the cache

- time to deliver a line(block) in the cache to the processor

- Miss Penalty

- additional time required because of a miss

Set Associative Cache

- Drawback

- Time to determine a hit

- What if the cache is full? -> eviction policy

Fully Associative Cache

- Drawback

- More complex and expensive logic

- Increased controller logic may slow low-level cache access time

- Increased scan lengths may decrease feasible cache size

- Preserving access time may decrease feasible cache size

- Totally unnecessary for many common access pattern

Increase hit ratio,

but also increase accessing time, hit time

Trade-off

| Direct mapped | n-way set associative mapped | fully associative mapped |

|---|---|---|

| high capacity | less capacity | |

| cheap | expensive | |

| fast | slow | |

| thrashiing | ||

| high conflict misses | no conflict misses |

Cache size

- Cache size

- C = B x E x S bytes

- Cache implementation size

- = (1 + t + 8B + e) x E x S bits

- valid bit

- tag bit

- block size (bits)

- eviction bit

- = (1 + t + 8B + e) x E x S bits

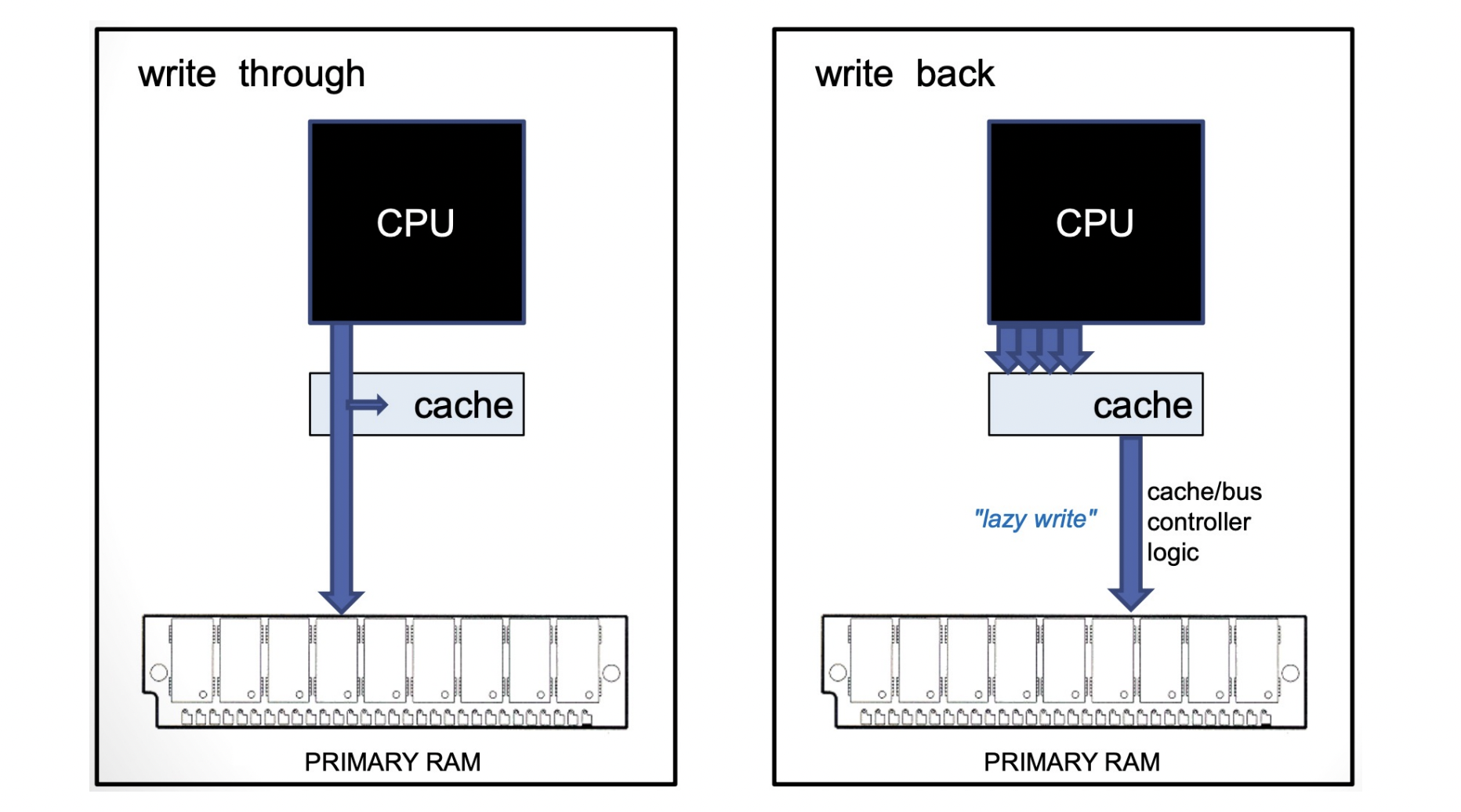

Cache Write

Write-hit

- Write-through

- write immediately to next level (main memory)

- stored in cache and memory simultaneously (same data updated)

- Write-back

- defer write to next level until line is evicted(replaced)

- using dirty bit to keep track which line has been modified

- defer write to next level until line is evicted(replaced)

Write-miss

- Write-allocate

- ("fetch on write") load into cache, then execute the write-hit policy

- No-write allocate

- ("write around") just write immediately to next level

Typical caches :

- Write-back + Write allocate, usually

- Write-through + No-write allocate, occasionally

Effect of cache size parameter

Increase overal cache size

- imporved hit rate

- slower (increased hit time)

Increase block size

- better spatial locality

- fewer lines

- larger miss penalty

Increase associativity

- decreased thrashing

- expensive

- slower

- more complex

- increased penalty

Cache performance metric

AMAT(Average Memory Access Time)

- t_hit (hit time)

- cycles to complete detection and transfer of a cache hit

- when hit data from cache, we need time to access/transfer/detect data

- prob_miss (miss probability)

- % time for cache miss

- penalty_miss (miss penalty)

- additional cycles incurred to process a cache miss

- when cache miss, need to go to main memory

AMAT = t_hit + prob_miss * penalty_miss

- Reduce hit time

- larger size

- Reduce miss rate

- better eviction policy

- Reduce miss penalty

- larger block

- asoociative with main memory

Cache-Friendly Code

- Programmer can optimize for cache performance

- How data structures are optimized

- How data are accessed

- Nexted loop structure

- Blocking is a general technique

- All systems favor "cache-freiendly code"

- Getting absolute optimum performance is very platform specific

- cache size, cache block size, associativity, etc.

- Can get most of the advantage with generic code

- Keep working set reasonably small (temporal locality)

- Use small strides (spatial locality)

- Focus on inner loop code

- Getting absolute optimum performance is very platform specific

-

Writing cache-friendly code

-

Maximize spatial locality in data organization

-

Maximize spatial locality in data access

- row-major

-

Maximize temporal locality

-

Engineer acecss strides

- array allocated successively and adjacent

-

Padding

-

Blocking / tiling

sizeof(float) = 8 bytes C = 64K m = 32 B = 32 S = 64 E = 1 /*(1)*/ float A[1024][1024], R[1024], C[1024]; for (int i = 0; i < 1024; i++) { for (int j = 0; j < 1024; j++) { R[i] += A[i][j]; C[i] -= A[i][j]; } } /*(2)*/ for (int i = 0; i < 1024; i++) { for (int jb = 0; jb < 1024; jb+= 4) { for (int j = 0; j < 4; j++) { R[i] += A[i][jb+j]; C[i] -= A[i][jb+j]; } } } -

Computational structure (ordered fetching)

-