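With Terraform you can just drop in a Helm module and install Karpenter right away, but this place doesn't use Terraform, so I have to install it with Helm directly.

There is more to learn beyond what I already know, so let's keep at it.

Why didn't I take notes back when I studied this? Always write things down.

Cluster Autoscaler (CA) seems convenient when you want to manage nodes freely through Auto Scaling groups.

Karpenter seems like a good fit when you connect KEDA: KEDA scales the pods and Karpenter follows along with nodes.

Of course, Karpenter is much faster!

CA has to wait for the Auto Scaling group to adjust, so it can't help being slower than Karpenter.

When using Karpenter, create a dedicated infra node group and pin every infra-related pod to it with affinity or nodeSelector. That is how you avoid a disaster later.

The more I think about it, the better running it on Fargate looks.

Currently updating this post for the changes in 0.36.2.

Installation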

export CLUSTER_NAME=goyo-eks
export AWS_DEFAULT_REGION=ap-northeast-2
AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
echo ${AWS_ACCOUNT_ID}

Create the Karpenter Node Role

Create a role with the permissions below.

- role-eks-node-goyo-karpenter

Trust policy

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "sts:AssumeRole"
      ],
      "Principal": {
        "Service": [
          "ec2.amazonaws.com"
        ]
      }
    }
  ]
}

Policies

- AmazonEKSWorkerNodePolicy
- AmazonEKS_CNI_Policy
- AmazonEC2ContainerRegistryReadOnly
- AmazonSSMManagedInstanceCore

Create the Karpenter Controller Role

- role-ekssa-goyo-karpenter

Create one policy and attach it to the role.

{
  "Statement": [
    {
      "Action": [
        "ssm:GetParameter",
        "ec2:DescribeImages",
        "ec2:RunInstances",
        "ec2:DescribeSubnets",
        "ec2:DescribeSecurityGroups",
        "ec2:DescribeLaunchTemplates",
        "ec2:DescribeInstances",
        "ec2:DescribeInstanceTypes",
        "ec2:DescribeInstanceTypeOfferings",
        "ec2:DescribeAvailabilityZones",
        "ec2:DeleteLaunchTemplate",
        "ec2:CreateTags",
        "ec2:CreateLaunchTemplate",
        "ec2:CreateFleet",
        "ec2:DescribeSpotPriceHistory",
        "pricing:GetProducts"
      ],
      "Effect": "Allow",
      "Resource": "*",
      "Sid": "Karpenter"
    },
    {
      "Action": "ec2:TerminateInstances",
      "Condition": {
        "StringLike": {
          "ec2:ResourceTag/karpenter.sh/nodepool": "*"
        }
      },
      "Effect": "Allow",
      "Resource": "*",
      "Sid": "ConditionalEC2Termination"
    },
    {
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "arn:aws:iam::${AWS_ACCOUNT_ID}:role/role-eks-node-goyo-karpenter",
      "Sid": "PassNodeIAMRole"
    },
    {
      "Effect": "Allow",
      "Action": "eks:DescribeCluster",
      "Resource": "arn:aws:eks:ap-northeast-2:${AWS_ACCOUNT_ID}:cluster/${CLUSTER_NAME}",
      "Sid": "EKSClusterEndpointLookup"
    },
    {
      "Sid": "AllowScopedInstanceProfileCreationActions",
      "Effect": "Allow",
      "Resource": "*",
      "Action": [
        "iam:CreateInstanceProfile"
      ],
      "Condition": {
        "StringEquals": {
          "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
          "aws:RequestTag/topology.kubernetes.io/region": "ap-northeast-2"
        },
        "StringLike": {
          "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
        }
      }
    },
    {
      "Sid": "AllowScopedInstanceProfileTagActions",
      "Effect": "Allow",
      "Resource": "*",
      "Action": [
        "iam:TagInstanceProfile"
      ],
      "Condition": {
        "StringEquals": {
          "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
          "aws:ResourceTag/topology.kubernetes.io/region": "ap-northeast-2",
          "aws:RequestTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
          "aws:RequestTag/topology.kubernetes.io/region": "ap-northeast-2"
        },
        "StringLike": {
          "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*",
          "aws:RequestTag/karpenter.k8s.aws/ec2nodeclass": "*"
        }
      }
    },
    {
      "Sid": "AllowScopedInstanceProfileActions",
      "Effect": "Allow",
      "Resource": "*",
      "Action": [
        "iam:AddRoleToInstanceProfile",
        "iam:RemoveRoleFromInstanceProfile",
        "iam:DeleteInstanceProfile"
      ],
      "Condition": {
        "StringEquals": {
          "aws:ResourceTag/kubernetes.io/cluster/${CLUSTER_NAME}": "owned",
          "aws:ResourceTag/topology.kubernetes.io/region": "ap-northeast-2"
        },
        "StringLike": {
          "aws:ResourceTag/karpenter.k8s.aws/ec2nodeclass": "*"
        }
      }
    },
    {
      "Sid": "AllowInstanceProfileReadActions",
      "Effect": "Allow",
      "Resource": "*",
      "Action": "iam:GetInstanceProfile"
    }
  ],
  "Version": "2012-10-17"
}

- Trust relationship

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/oidc.eks.ap-northeast-2.amazonaws.com/id/${oidc_endpoint}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "oidc.eks.ap-northeast-2.amazonaws.com/id/${oidc_endpoint}:aud": "sts.amazonaws.com",
          "oidc.eks.ap-northeast-2.amazonaws.com/id/${oidc_endpoint}:sub": "system:serviceaccount:karpenter:karpenter"
        }
      }
    }
  ]
}

Add subnet tags

$ aws eks list-nodegroups --cluster-name literary-goyo-eks --query 'nodegroups' --output text
$ aws eks describe-nodegroup --cluster-name literary-goyo-eks --nodegroup-name literary-goyo-eks-ng --query "nodegroup.subnets" --output text

Add the following tag to those subnets.

key : karpenter.sh/discovery
value : literary-goyo-eks (any value works as long as it matches the selector in the EC2NodeClass; using the EKS cluster name is convenient)

Add the EKS SG tag

key : karpenter.sh/discovery
value : literary-goyo-eks (any value works as long as it matches the selector in the EC2NodeClass; using the EKS cluster name is convenient)
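The two tagging steps can also be done from the CLI with `aws ec2 create-tags`. The following is only a minimal sketch: the subnet and security group IDs are placeholders, and `run` and `tag_for_discovery` are hypothetical helpers introduced here. By default it runs in dry-run mode and only prints the commands.

```shell
# Sketch: tag subnets and the cluster security group for Karpenter discovery.
# Assumptions: the resource IDs at the bottom are placeholders, not real resources.
DISCOVERY_VALUE="${DISCOVERY_VALUE:-literary-goyo-eks}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Add the karpenter.sh/discovery tag to every resource ID passed in.
tag_for_discovery() {
  for id in "$@"; do
    run aws ec2 create-tags \
      --resources "$id" \
      --tags "Key=karpenter.sh/discovery,Value=${DISCOVERY_VALUE}"
  done
}

tag_for_discovery subnet-0123456789abcdef0 sg-0123456789abcdef0
```

Set `DRY_RUN=0` only after checking that the printed commands target the right subnets and security group.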

Edit aws-auth

kubectl edit configmap aws-auth -n kube-system

- rolearn: arn:aws:iam::${AWS_ACCOUNT_ID}:role/${karpenternoderolename}
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes

Install with Helm

helm template karpenter oci://public.ecr.aws/karpenter/karpenter --version="0.36.2" --namespace "karpenter" \
  --set "settings.clusterName=literary-goyo-eks" \
  --set "serviceAccount.annotations.eks\.amazonaws\.com/role-arn"="arn:aws:iam::${myaccountnumber}:role/role-ekssa-goyo-karpenter" \
  --set "settings.isolatedVPC=true" \
  --set controller.resources.requests.cpu=1 \
  --set controller.resources.requests.memory=1Gi \
  --set controller.resources.limits.cpu=1 \
  --set controller.resources.limits.memory=1Gi > karpenter.yaml
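Before touching the rendered file, it can help to confirm that the values passed with `--set` actually ended up in it. The sketch below is an assumption-laden convenience, not part of Helm or Karpenter: `check_rendered` is a hypothetical helper that simply greps the manifest for a few expected strings.

```shell
# Sketch: sanity-check a manifest rendered by `helm template` before applying it.
# Assumption: check_rendered is a hypothetical helper, not part of Helm or Karpenter.

# Usage: check_rendered <manifest-file> <cluster-name> <role-arn>
# Returns 0 when every expected string is present, 1 otherwise.
check_rendered() {
  manifest="$1"
  cluster="$2"
  role_arn="$3"
  status=0
  for needle in "kind: Deployment" "$cluster" "eks.amazonaws.com/role-arn" "$role_arn"; do
    # -F treats the needle as a fixed string, so dots and slashes are literal.
    if ! grep -qF -- "$needle" "$manifest"; then
      echo "missing in $manifest: $needle" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_rendered karpenter.yaml literary-goyo-eks "arn:aws:iam::${myaccountnumber}:role/role-ekssa-goyo-karpenter"` should exit with 0 if the render picked up both settings.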

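Add affinity to the Deployment manifest

If Karpenter itself runs on a node that Karpenter created, you are in for serious trouble.

I plan to run everything on the infra nodes, so I put that node group's name here.

- matchExpressions:
  - key: eks.amazonaws.com/nodegroup
    operator: In
    values:
    - infra-nodegroup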

kubectl create namespace karpenter

kubectl create -f \
  https://raw.githubusercontent.com/aws/karpenter-provider-aws/v0.36.2/pkg/apis/crds/karpenter.sh_nodepools.yaml

kubectl create -f \
  https://raw.githubusercontent.com/aws/karpenter-provider-aws/v0.36.2/pkg/apis/crds/karpenter.k8s.aws_ec2nodeclasses.yaml

kubectl create -f \
  https://raw.githubusercontent.com/aws/karpenter-provider-aws/v0.36.2/pkg/apis/crds/karpenter.sh_nodeclaims.yaml

kubectl apply -f karpenter.yaml

kubectl api-resources \
  --categories karpenter \
  -o wide

With the move to 0.36.2, Provisioner and AWSNodeTemplate have been replaced by NodePool and EC2NodeClass, so the old manifests have to be rewritten.
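As a quick reminder of the rename, here is a small sketch that maps the old kinds to their v1beta1 replacements. `new_kind_for` is a hypothetical helper written only for this note.

```shell
# Sketch: map pre-v1beta1 Karpenter kinds to their v1beta1 replacements.
# Assumption: new_kind_for is a hypothetical helper for illustration only.
new_kind_for() {
  case "$1" in
    Provisioner)     echo "NodePool" ;;
    AWSNodeTemplate) echo "EC2NodeClass" ;;
    Machine)         echo "NodeClaim" ;;
    *)               echo "unknown kind: $1" >&2; return 1 ;;
  esac
}

# Print the mapping as a small table.
for old in Provisioner AWSNodeTemplate Machine; do
  printf '%-16s -> %s\n' "$old" "$(new_kind_for "$old")"
done
```

Any manifest that still uses one of the kinds on the left needs to be converted before it can be applied to a 0.36.2 installation.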

---
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: default
spec:
  template:
    spec:
      requirements:
        - key: kubernetes.io/arch
          operator: In
          values: ["amd64"]
        - key: kubernetes.io/os
          operator: In
          values: ["linux"]
        - key: karpenter.sh/capacity-type
          operator: In
          values: ["on-demand"]
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values: ["t"]
        - key: node.kubernetes.io/instance-type
          operator: In
          values: ["t3.medium"]
        - key: karpenter.k8s.aws/instance-generation
          operator: Gt
          values: ["2"]
      nodeClassRef:
        apiVersion: karpenter.k8s.aws/v1beta1
        kind: EC2NodeClass
        name: default
      # taints:
      #   - key: karpenter
      #     value: "applied"
      #     effect: NoSchedule
  limits:
    cpu: 1000
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 720h # 30 * 24h = 720h
---
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: default
spec:
  amiFamily: AL2023
  role: role-eks-node-goyo-karpenter
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: literary-goyo-eks
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: literary-goyo-eks
  amiSelectorTerms:
    - id: ami-0f6b269f5b7aac774
  tags:
    project: goyo
    Env: test

Test

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx-test
  name: nginx-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-test
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-test
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            cpu: 20m
          limits:
            cpu: 100m
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: karpenter.sh/nodepool
                operator: In
                values:
                - default
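To see how long provisioning takes end to end, the test can be wrapped in a small script. This is a rough sketch under a few assumptions: the Deployment above is saved as `nginx-test.yaml` (a file name chosen here), and `run` and `provision_test` are hypothetical helpers. It defaults to dry-run mode and only prints the commands.

```shell
# Sketch: apply the test Deployment and time how long the pod takes to become ready.
# Assumptions: the manifest is saved as nginx-test.yaml (hypothetical file name),
# and `run` is a hypothetical dry-run helper.
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

provision_test() {
  start=$(date +%s)
  run kubectl apply -f nginx-test.yaml
  # Blocks until the pod is scheduled on the new node and ready (or times out).
  run kubectl rollout status deployment/nginx-test --timeout=180s
  run kubectl get nodeclaim
  echo "elapsed: $(( $(date +%s) - start ))s"
}

provision_test
```

With `DRY_RUN=0` the elapsed time covers node launch plus image pull, so it will be somewhat longer than the time the nodeclaim itself takes to turn Ready.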

kubectl logs karpenter-56fc488d76-5f96b -n karpenter

{"level":"INFO","time":"2024-05-31T05:15:05.839Z","logger":"controller.nodeclaim.lifecycle","message":"launched nodeclaim","commit":"e719109","nodeclaim":"default-7hw6r","provider-id":"aws:///ap-northeast-2a/i-00f498c84b028bead","instance-type":"t3.medium","zone":"ap-northeast-2a","capacity-type":"on-demand","allocatable":{"cpu":"1930m","ephemeral-storage":"17Gi","memory":"3246Mi","pods":"17"}}

{"level":"INFO","time":"2024-05-31T05:15:13.825Z","logger":"controller.provisioner","message":"found provisionable pod(s)","commit":"e719109","pods":"default/nginx-test-7f87977dc4-255w4","duration":"11.441124ms"}

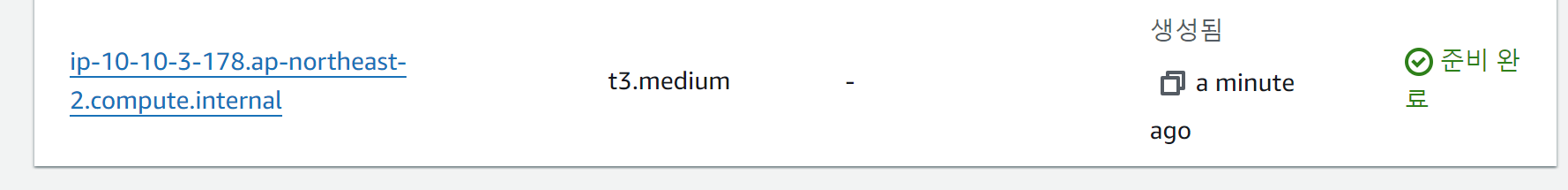

kubectl get nodeclaim -w

NAME            TYPE        ZONE              NODE                                             READY   AGE
default-7hw6r   t3.medium   ap-northeast-2a   ip-10-10-3-178.ap-northeast-2.compute.internal   False   35s
default-7hw6r   t3.medium   ap-northeast-2a   ip-10-10-3-178.ap-northeast-2.compute.internal   False   40s
default-7hw6r   t3.medium   ap-northeast-2a   ip-10-10-3-178.ap-northeast-2.compute.internal   True    41s

kubectl get nodes

NAME                                              STATUS   ROLES    AGE   VERSION
ip-10-10-0-127.ap-northeast-2.compute.internal    Ready    <none>   41m   v1.29.3-eks-ae9a62a
ip-10-10-15-149.ap-northeast-2.compute.internal   Ready    <none>   41m   v1.29.3-eks-ae9a62a
ip-10-10-3-178.ap-northeast-2.compute.internal    Ready    <none>   54s   v1.29.0-eks-5e0fdde

You can see the node come up in well under a minute (the nodeclaim turned Ready at 41s here)!

When the pod is deleted, Karpenter removes the node.

kubectl logs karpenter-56fc488d76-5f96b -n karpenter

{"level":"INFO","time":"2024-05-31T05:17:23.379Z","logger":"controller.node.termination","message":"tainted node","commit":"e719109","node":"ip-10-10-3-178.ap-northeast-2.compute.internal"}

{"level":"INFO","time":"2024-05-31T05:17:23.397Z","logger":"controller.node.termination","message":"deleted node","commit":"e719109","node":"ip-10-10-3-178.ap-northeast-2.compute.internal"}

{"level":"INFO","time":"2024-05-31T05:17:23.814Z","logger":"controller.nodeclaim.termination","message":"deleted nodeclaim","commit":"e719109","nodeclaim":"default-7hw6r","node":"ip-10-10-3-178.ap-northeast-2.compute.internal","provider-id":"aws:///ap-northeast-2a/i-00f498c84b028bead"}

kubectl get nodeclaim

No resources found
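The JSON log lines are hard to scan by eye, so here is a small sketch that condenses them to time, logger, and message. `summarize_logs` is a hypothetical helper, and it assumes the field order seen in the log lines in this post ("time", then "logger", then "message").

```shell
# Sketch: condense Karpenter's JSON log lines to "time logger message".
# Assumption: summarize_logs is a hypothetical helper; it relies on the field
# order "time", "logger", "message" and silently skips lines that do not match.
summarize_logs() {
  sed -n 's/.*"time":"\([^"]*\)","logger":"\([^"]*\)","message":"\([^"]*\)".*/\1 \2 \3/p'
}

# Demo with a shortened copy of one of the log lines from this post.
printf '%s\n' '{"level":"INFO","time":"2024-05-31T05:17:23.379Z","logger":"controller.node.termination","message":"tainted node","commit":"e719109"}' \
  | summarize_logs
# prints: 2024-05-31T05:17:23.379Z controller.node.termination tainted node
```

Against a live cluster it could be used as `kubectl logs -n karpenter deployment/karpenter | summarize_logs`.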

Reference: Terraform

# Allow terraform to access k8s, helm
provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
  token                  = data.aws_eks_cluster_auth.eks.token
}

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
    token                  = data.aws_eks_cluster_auth.eks.token
  }
}

data "aws_eks_cluster_auth" "eks" {
  name = module.eks.cluster_name
}

resource "helm_release" "karpenter" {
  depends_on       = [module.eks.kubeconfig]
  namespace        = "karpenter"
  create_namespace = true

  name       = "karpenter"
  repository = "https://charts.karpenter.sh"
  chart      = "karpenter"
  version    = "v0.16.0"

  set {
    name  = "replicas"
    value = 2
  }

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = module.iam_assumable_role_karpenter.iam_role_arn
  }

  set {
    name  = "clusterName"
    value = local.cluster_name
  }

  set {
    name  = "clusterEndpoint"
    value = module.eks.cluster_endpoint
  }

  set {
    name  = "aws.defaultInstanceProfile"
    value = aws_iam_instance_profile.karpenter.name
  }
}

Old version

### provisioner yaml

These settings are important!

- ttlSecondsAfterEmpty
- ttlSecondsUntilExpired
- consolidation

apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: application-nodetemplate
spec:
  subnetSelector:
    karpenter.sh/discovery: goyo-eks
  securityGroupSelector:
    karpenter.sh/discovery: goyo-eks
  instanceProfile: role-eks-node-goyo-karpenter
  amiFamily: AL2
  blockDeviceMappings:
    - deviceName: /dev/xvda
      ebs:
        volumeSize: 25G
        volumeType: gp3
        iops: 10000
        throughput: 125
        deleteOnTermination: true
  tags:
    Name: goyo-karpenter-test

### nodetemplate yaml

apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: application-nodetemplate
spec:
  subnetSelector:
    karpenter.sh/discovery: goyo-eks
  securityGroupSelector:
    karpenter.sh/discovery: goyo-eks
  instanceProfile: role-ekssa-goyo-karpenter
  amiFamily: AL2
  blockDeviceMappings:
    - deviceName: /dev/xvda
      ebs:
        volumeSize: 25G
        volumeType: gp3
        iops: 10000
        throughput: 125
        deleteOnTermination: true
  tags:
    Name: goyo-karpenter-test

References

https://karpenter.sh/docs/getting-started/getting-started-with-karpenter/

https://devblog.kakaostyle.com/ko/2022-10-13-1-karpenter-on-eks/

https://aws-ia.github.io/terraform-aws-eks-blueprints/patterns/karpenter-mng/

https://techblog.gccompany.co.kr/karpenter-7170ae9fb677

https://younsl.github.io/blog/k8s/karpenter/