https://keda.sh/docs/2.14/scalers/

https://keda.sh/

https://keda.sh/docs/2.14/concepts/

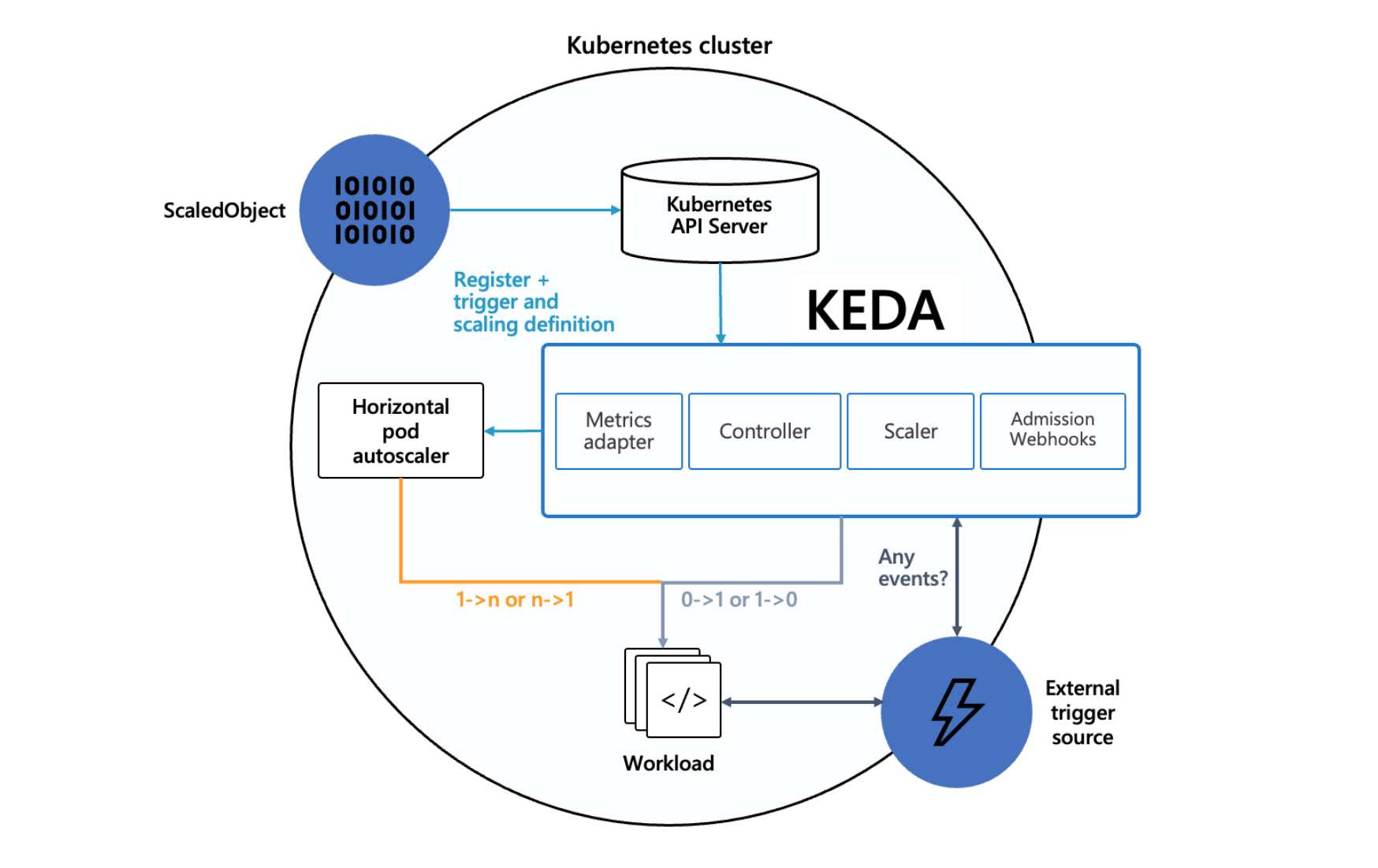

Agent — KEDA activates and deactivates Kubernetes Deployments to scale to and from zero on no events. This is one of the primary roles of the keda-operator container that runs when you install KEDA.

Metrics — KEDA acts as a Kubernetes metrics server that exposes rich event data like queue length or stream lag to the Horizontal Pod Autoscaler to drive scale out. It is up to the Deployment to consume the events directly from the source. This preserves rich event integration and enables gestures like completing or abandoning queue messages to work out of the box. The metric serving is the primary role of the keda-operator-metrics-apiserver container that runs when you install KEDA.

Admission Webhooks - Automatically validate resource changes to prevent misconfiguration and enforce best practices by using an admission controller. For example, the webhook prevents multiple ScaledObjects from targeting the same scale target.

helm repo add kedacore https://kedacore.github.io/charts

helm repo update

helm pull kedacore/keda --version=2.14.0

docker pull ghcr.io/kedacore/keda-admission-webhooks:2.14.0

docker pull ghcr.io/kedacore/keda-metrics-apiserver:2.14.0

docker pull ghcr.io/kedacore/keda:2.14.0

docker save -o keda-admission-webhooks-2140.tar 5e7a029c7981

docker save -o keda-metrics-apiserver-2140.tar 789f6a8835d9

docker save -o keda-2140.tar 90f874bd572a
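The pull and save steps above can also be sketched as one loop that saves each image by tag instead of by local image ID. Image IDs differ from machine to machine, and an archive saved by ID loads without a repository tag, so saving by tag is usually easier to reuse. This is only a sketch: the `run` and `save_keda_images` helpers and the `DRY_RUN` variable are hypothetical names introduced here, and by default the script only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# Sketch: pull and save the three KEDA images used above, by tag.
# DRY_RUN, run and save_keda_images are illustrative names, not part of KEDA.
VERSION="2.14.0"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

save_keda_images() {
  for name in keda-admission-webhooks keda-metrics-apiserver keda; do
    image="ghcr.io/kedacore/${name}:${VERSION}"
    # Archive name follows the pattern used above, e.g. keda-2140.tar
    archive="${name}-$(echo "$VERSION" | tr -d '.').tar"
    run docker pull "$image"
    run docker save -o "$archive" "$image"
  done
}

save_keda_images
```

Running it with `DRY_RUN=0` executes the commands instead of printing them. On the target host, each archive can then be loaded with `docker load -i <archive>`.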

helm install keda kedacore/keda -n keda --create-namespace

Create the ScaledObject. Several metrics are available, but start with a simple CPU check (connecting to SQS requires an IAM OIDC connection).

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

cat <<EOF | kubectl apply -f -
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: nginx-cpu-scaler
spec:
  scaleTargetRef:
    name: nginx-test
    kind: Deployment
    apiVersion: apps/v1
  fallback:
    failureThreshold: 4
    replicas: 5
  minReplicaCount: 1
  maxReplicaCount: 10
  triggers:
  - type: cpu
    metricType: Utilization
    metadata:
      value: "50" # create new pods when CPU utilization exceeds 50%
EOF

cat <<EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: nginx-test
  name: nginx-test
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-test
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx-test
    spec:
      containers:
      - image: nginx
        name: nginx
        resources:
          requests:
            cpu: 20m
          limits:
            cpu: 100m
EOF

kubectl get hpa

NAME                        REFERENCE               TARGETS   MINPODS   MAXPODS   REPLICAS   AGE
keda-hpa-nginx-cpu-scaler   Deployment/nginx-test   0%/50%    1         10        1          10m
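To read the TARGETS column: the HPA that KEDA creates compares average CPU utilization (measured against the container's CPU request, 20m here) with the 50% target and computes roughly ceil(currentReplicas * currentUtilization / targetUtilization), clamped between MINPODS and MAXPODS. The arithmetic can be sketched as below. `hpa_desired_replicas` is a hypothetical helper written for these notes, and it ignores the HPA's tolerance band and scale-down stabilization window, so treat it as an approximation of the real controller.

```shell
#!/bin/sh
# Sketch of the HPA replica calculation (tolerance and stabilization ignored).
# Usage: hpa_desired_replicas CURRENT UTILIZATION_PCT TARGET_PCT MIN MAX
hpa_desired_replicas() {
  current="$1"
  utilization="$2"
  target="$3"
  min="$4"
  max="$5"
  # Integer ceiling of current * utilization / target
  desired=$(( (current * utilization + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then
    desired="$min"
  fi
  if [ "$desired" -gt "$max" ]; then
    desired="$max"
  fi
  echo "$desired"
}

# The idle case from the output above: 0% of a 50% target keeps 1 replica.
hpa_desired_replicas 1 0 50 1 10
# One pod at 120% utilization asks for ceil(2.4) = 3 replicas.
hpa_desired_replicas 1 120 50 1 10
```

Because utilization is relative to the 20m request, the 50% target corresponds to an average of about 10m of CPU per pod, so even light load on nginx can trigger a scale-out.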

Connecting KEDA to Prometheus

https://keda.sh/docs/2.14/operate/prometheus/

keda cron
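The cron manifest that follows asks for 10 replicas between 06:00 and 20:00 in the Asia/Kolkata timezone and falls back to minReplicaCount (0) outside that window. A rough way to check what replica count to expect at a given hour is sketched here. `cron_expected_replicas` is a hypothetical helper, it works at whole-hour granularity only, and the actual scale-down can lag by several minutes because of cooldownPeriod.

```shell
#!/bin/sh
# Sketch: expected replicas for a cron window of 06:00-20:00 with desiredReplicas 10.
# Takes an hour (0-23) already expressed in the trigger's timezone.
cron_expected_replicas() {
  hour="$1"
  if [ "$hour" -ge 6 ] && [ "$hour" -lt 20 ]; then
    echo 10
  else
    echo 0
  fi
}

# Current hour in the trigger's timezone; strip one leading zero ("08" -> "8").
current_hour="$(TZ=Asia/Kolkata date +%H)"
cron_expected_replicas "${current_hour#0}"
```

Comparing this value with the REPLICAS column of `kubectl get hpa` (or with the Deployment's replica count when it is scaled to zero) is a quick sanity check that the timezone in the trigger is the one intended.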

apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: cron-scaledobject
  namespace: default
spec:
  scaleTargetRef:
    name: my-deployment
  minReplicaCount: 0
  cooldownPeriod: 300
  triggers:
  - type: cron
    metadata:
      timezone: Asia/Kolkata
      start: 0 6 * * *
      end: 0 20 * * *
      desiredReplicas: "10"

HTTP object references

https://medium.com/@carlocolumna/how-to-scale-your-pods-based-on-http-traffic-d58221d5e7f1

https://github.com/kedacore/http-add-on/blob/main/docs/ref/v0.2.0/http_scaled_object.md