This post summarizes what I learned and practiced in the 3rd cohort of KANS (Kubernetes Advanced Networking Study), run by gasida. Week 1 covered container isolation, Docker networking, and security.

The material is extensive and difficult in places, so I focus on the parts I found most useful while working through the labs myself.

VirtualBox cannot run on a MacBook Air M1 (Arm), so I also describe how to use QEMU to run Ubuntu 22.04 LTS (amd64) or Rocky 9. I hope it helps.

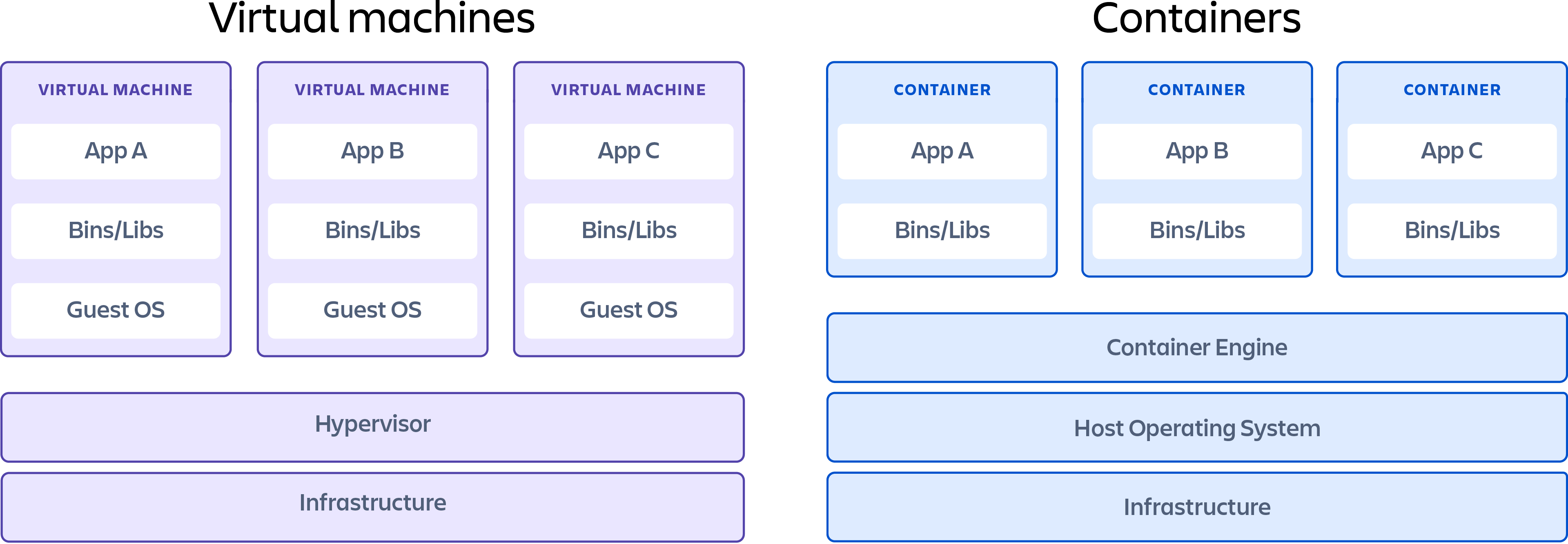

1. Virtual machines vs. containers

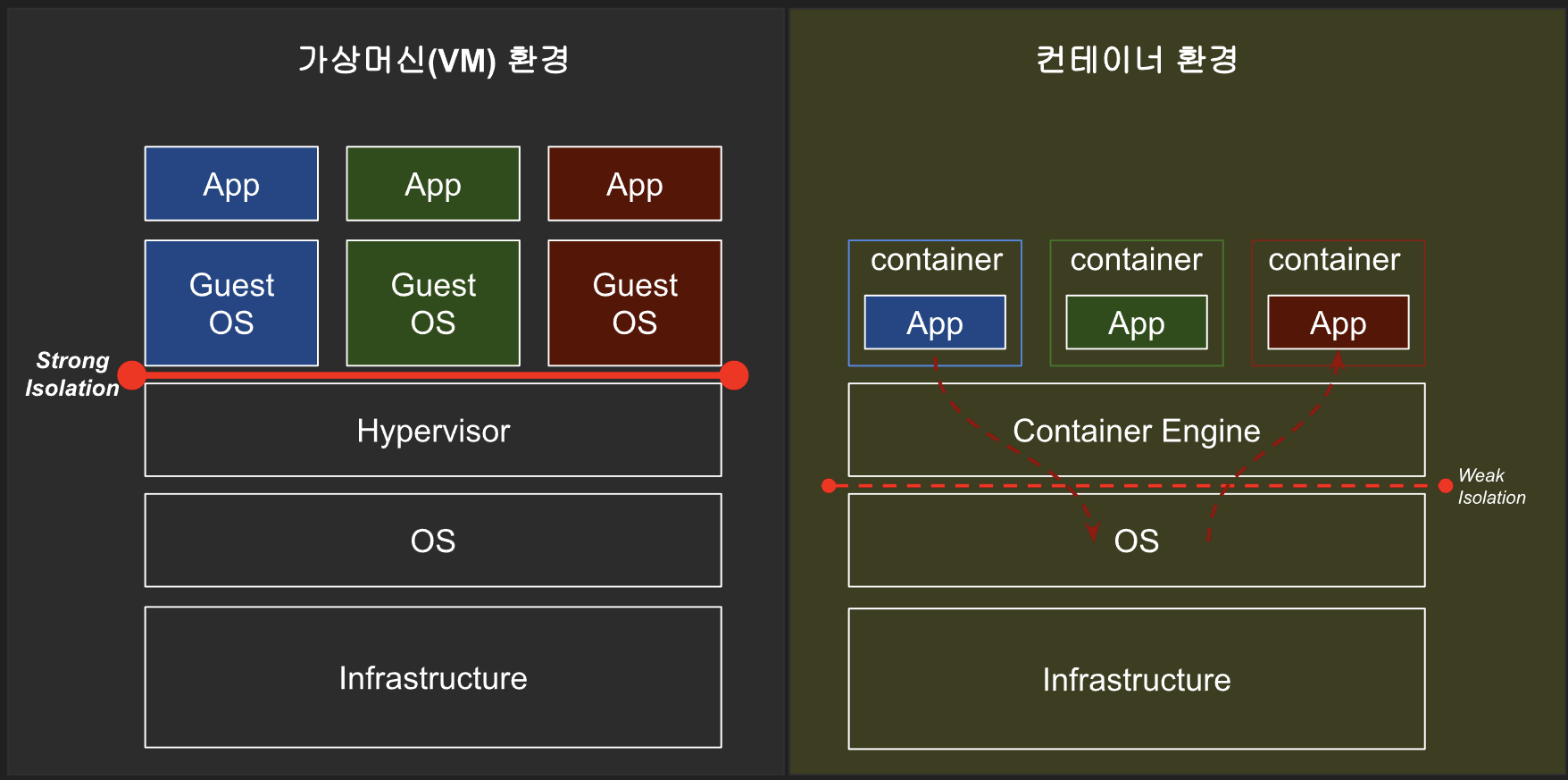

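Virtual machines and containers are closely related resource virtualization technologies. Virtualization is the process by which a single system resource such as RAM, CPU, disk, or networking is 'virtualized' and presented as multiple resources. The key difference is that a virtual machine virtualizes an entire machine down to the hardware layer, whereas a container virtualizes only the software layers above the operating system level.

What is a container?

A container is a lightweight software package that contains all the dependencies needed to run the software application it holds. Dependencies include system libraries, external third-party code packages, and other operating-system-level applications. The dependencies in a container sit at a stack level above the operating system.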

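Advantages

- Iteration speed

Containers are lightweight and include only high-level software, so they are very fast to modify and iterate on.

- Robust ecosystem

Most container runtime systems offer a hosted public repository of pre-made containers. These repositories contain many popular software applications, such as databases and messaging systems, that can be downloaded and run immediately, saving development teams time.

Disadvantages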

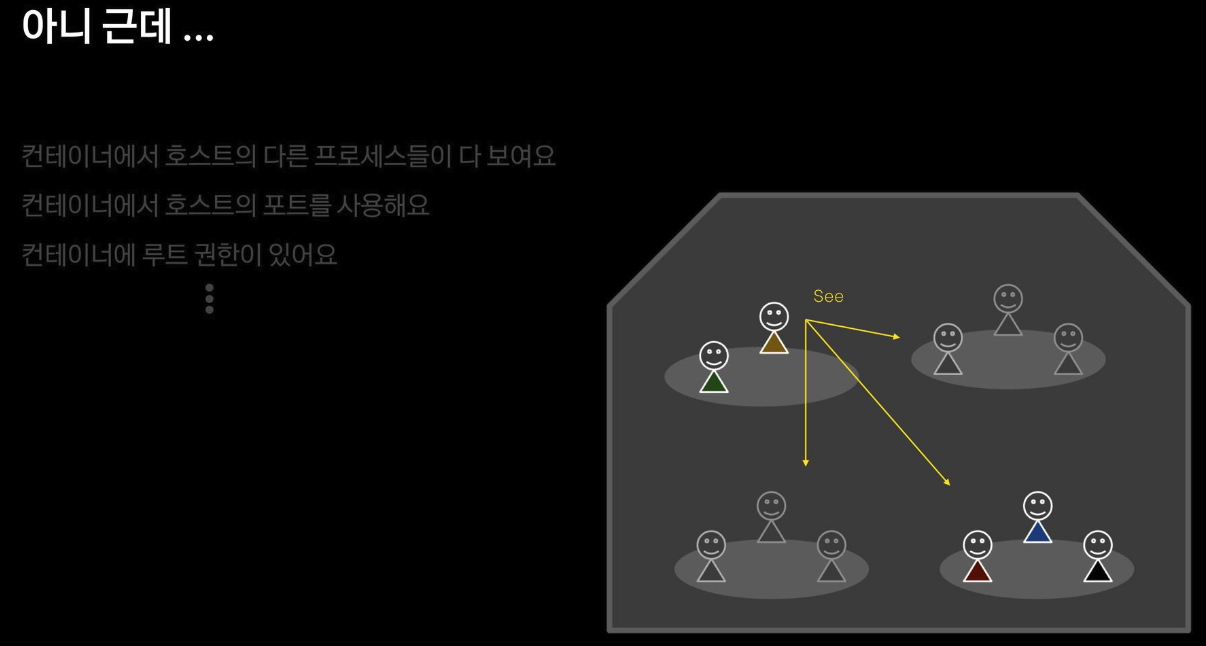

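- Shared host exploits

Containers all share the same underlying hardware system below the operating system layer, so an exploit in one container could break out of that container and affect the shared hardware. The most popular container runtimes also have public repositories of pre-made containers. Using one of these public images carries a security risk, because the image may contain an exploit or be vulnerable to hijacking by a malicious actor.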

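2. Lab environment

Setting up the lab environment

I use a MacBook Air M1 (Arm) and a ThinkPad L13 Yoga (dual-booting Windows 11 and Ubuntu 22.04 LTS). I could run the labs in VirtualBox on the ThinkPad or on an EC2 instance in AWS, but here I build the lab environment by running Ubuntu 22.04 LTS under QEMU on the MacBook Air M1.

QEMU is one of the most capable hardware-emulating virtual machine options and supports the common hardware architectures. It is a command-line utility and does not ship a graphical user interface for configuration or execution, which keeps the tool itself lightweight. Note, however, that an x86_64 guest on an Arm host runs under software emulation (the lscpu output later in this post reports "QEMU TCG CPU"), so it is slower than native virtualization.

Installing Vagrant & QEMU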

# Install vagrant

> brew install vagrant --cask

# Install qemu

> brew install qemu

# Install the plugin

> vagrant plugin install vagrant-qemu

Creating the Vagrantfile

Set the following in the Vagrant configuration:

- vm.box image: generic/ubuntu2204 (use generic/rocky9 to run Rocky 9)

- architecture: x86_64, machine: q35

- net_device: virtio-net-pci
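The Vagrantfile below is written with `cat` and a shell heredoc, and its contents include a Ruby global named `$pre_install`. One thing to watch with this pattern is whether the heredoc delimiter is quoted, because an unquoted delimiter lets the shell expand `$pre_install` before the file is written. The following is a small sketch of that behavior only; the `unquoted` and `quoted` variable names are illustrative and not part of the lab.

```shell
# Illustrative demo: how heredoc quoting affects a "$pre_install" reference.
unset pre_install   # make sure the shell variable is not set

# Unquoted delimiter: the shell expands $pre_install (to an empty string here).
unquoted="$(cat <<EOF
inline: $pre_install
EOF
)"

# Quoted delimiter: the text is written out literally.
quoted="$(cat <<'EOF'
inline: $pre_install
EOF
)"

printf 'unquoted -> [%s]\n' "$unquoted"
printf 'quoted   -> [%s]\n' "$quoted"
```

With the quoted form, the `$pre_install` reference reaches the Vagrantfile intact, which is what the inline shell provisioner needs.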

> cat > Vagrantfile <<'EOF'

BOX_IMAGE = "generic/ubuntu2204"

HOST_NAME = "ubuntu2204"

$pre_install = <<-SCRIPT

#!/bin/bash

echo ">>>> pre-install <<<<<<"

hostnamectl --static set-hostname MyServer

# Config convenience

echo 'alias vi=vim' >> /etc/profile

systemctl stop ufw && systemctl disable ufw

systemctl stop apparmor && systemctl disable apparmor

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Install packages

apt update -qq && apt install tree jq bridge-utils net-tools conntrack gcc make pkg-config libseccomp-dev -y

SCRIPT

Vagrant.configure("2") do |config|

config.vm.define HOST_NAME do |subconfig|

subconfig.vm.box = BOX_IMAGE

subconfig.vm.hostname = HOST_NAME

subconfig.vm.network :private_network, ip: "192.168.50.10"

subconfig.vm.provider "qemu" do |qe|

qe.arch = "x86_64"

qe.machine = "q35"

qe.cpu = "max"

qe.net_device = "virtio-net-pci"

end

subconfig.vm.provision "shell", inline: $pre_install

end

end

EOF

> ls

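Vagrantfile

Running Ubuntu 22.04 & checking the environment

One limitation of the QEMU provider is that it does not support Vagrant's high-level network configuration (`config.vm.network`), so the private_network setting in the Vagrantfile is ignored, as the warning in the output below shows.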

# starts and provisions the vagrant environment

> vagrant up

Bringing machine 'ubuntu2204' up with 'qemu' provider...

==> ubuntu2204: Checking if box 'generic/ubuntu2204' version '4.3.2' is up to date...

==> ubuntu2204: Importing a QEMU instance

ubuntu2204: Creating and registering the VM...

ubuntu2204: Successfully imported VM

==> ubuntu2204: Warning! The QEMU provider doesn't support any of the Vagrant

==> ubuntu2204: high-level network configurations (`config.vm.network`). They

==> ubuntu2204: will be silently ignored.

==> ubuntu2204: Starting the instance...

==> ubuntu2204: Waiting for machine to boot. This may take a few minutes...

ubuntu2204: SSH address: 127.0.0.1:50022

ubuntu2204: SSH username: vagrant

ubuntu2204: SSH auth method: private key

ubuntu2204: Warning: Connection reset. Retrying...

...

ubuntu2204:

ubuntu2204: Vagrant insecure key detected. Vagrant will automatically replace

ubuntu2204: this with a newly generated keypair for better security.

ubuntu2204:

ubuntu2204: Inserting generated public key within guest...

ubuntu2204: Removing insecure key from the guest if it's present...

ubuntu2204: Key inserted! Disconnecting and reconnecting using new SSH key...

==> ubuntu2204: Machine booted and ready!

==> ubuntu2204: Setting hostname...

==> ubuntu2204: Running provisioner: shell...

ubuntu2204: Running: inline script

ubuntu2204: >>>> pre-install <<<<<<

ubuntu2204: Synchronizing state of ufw.service with SysV service script with /lib/systemd/systemd-sysv-install.

ubuntu2204: Executing: /lib/systemd/systemd-sysv-install disable ufw

ubuntu2204: Removed /etc/systemd/system/multi-user.target.wants/ufw.service.

ubuntu2204: Synchronizing state of apparmor.service with SysV service script with /lib/systemd/systemd-sysv-install.

ubuntu2204: Executing: /lib/systemd/systemd-sysv-install disable apparmor

ubuntu2204: Removed /etc/systemd/system/sysinit.target.wants/apparmor.service.

ubuntu2204:

ubuntu2204: WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

ubuntu2204:

ubuntu2204: 183 packages can be upgraded. Run 'apt list --upgradable' to see them.

ubuntu2204:

ubuntu2204: WARNING: apt does not have a stable CLI interface. Use with caution in scripts.

ubuntu2204:

ubuntu2204: Reading package lists...

ubuntu2204: Building dependency tree...

ubuntu2204: Reading state information...

ubuntu2204: The following additional packages will be installed:

ubuntu2204: bzip2 cpp cpp-11 fontconfig-config fonts-dejavu-core gcc-11 gcc-11-base

...

ubuntu2204:

ubuntu2204: Running kernel seems to be up-to-date.

ubuntu2204:

ubuntu2204: Services to be restarted:

ubuntu2204: systemctl restart cron.service

...

ubuntu2204: No containers need to be restarted.

ubuntu2204:

ubuntu2204: No user sessions are running outdated binaries.

ubuntu2204:

ubuntu2204: No VM guests are running outdated hypervisor (qemu) binaries on this host.

# Check the machine status

> vagrant status

Current machine states:

ubuntu2204 running (qemu)

# Connect to the VM over SSH

> vagrant ssh

# The host is a MacBook Air M1 (Arm), but the guest boots as x86_64

vagrant@ubuntu2204:~$ uname -a

Linux ubuntu2204.localdomain 5.15.0-84-generic #93-Ubuntu SMP Tue Sep 5 17:16:10 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux

# eth0 comes up with 10.0.2.15, the default guest address of QEMU user-mode networking

vagrant@ubuntu2204:~$ ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:12:34:56 brd ff:ff:ff:ff:ff:ff

altname enp0s1

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 86218sec preferred_lft 86218sec

inet6 fec0::5054:ff:fe12:3456/64 scope site dynamic mngtmpaddr noprefixroute

valid_lft 86220sec preferred_lft 14220sec

inet6 fe80::5054:ff:fe12:3456/64 scope link

valid_lft forever preferred_lft forever

# To stop the VM, run shutdown inside the guest or vagrant halt on the host

vagrant@ubuntu2204:~$ sudo shutdown -h now

Connection to 127.0.0.1 closed by remote host.

# Check the machine status

> vagrant status

Current machine states:

default stopped (qemu)

# To start the machine again

> vagrant up

- Additional system checks

# Check account information

root@MyServer:~# whoami

root

root@MyServer:~# id

uid=0(root) gid=0(root) groups=0(root)

# Check the OS version

root@MyServer:~# lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 22.04.3 LTS

Release: 22.04

Codename: jammy

# Check basic CPU, memory, and disk information

root@MyServer:~# hostnamectl

Static hostname: MyServer

Icon name: computer-vm

Chassis: vm

Machine ID: 5d8e52b5f38248d490636210c5cc4696

Boot ID: 85a634b206ad440e93bcd39a7924e5ed

Virtualization: qemu

Operating System: Ubuntu 22.04.3 LTS

Kernel: Linux 5.15.0-84-generic

Architecture: x86-64

Hardware Vendor: QEMU

Hardware Model: Standard PC _Q35 + ICH9, 2009_

root@MyServer:~# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 40 bits physical, 57 bits virtual

Byte Order: Little Endian

CPU(s): 2

On-line CPU(s) list: 0,1

Vendor ID: AuthenticAMD

Model name: QEMU TCG CPU version 2.5+

CPU family: 6

Model: 6

Thread(s) per core: 1

Core(s) per socket: 2

Socket(s): 1

Stepping: 3

BogoMIPS: 2000.34

Flags: fpu de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush acpi mmx fxsr sse sse2 ss ht syscall nx mmxex

t pdpe1gb rdtscp lm 3dnowext 3dnow nopl cpuid pni pclmulqdq monitor ssse3 fma cx16 sse4_1 sse4_2 x2apic movbe popcnt aes

xsave avx f16c rdrand hypervisor lahf_lm cmp_legacy svm cr8_legacy abm sse4a 3dnowprefetch vmmcall fsgsbase bmi1 avx2 sme

p bmi2 erms mpx rdseed adx smap clflushopt clwb sha_ni xsaveopt xgetbv1 xsaveerptr wbnoinvd arat npt vgif umip pku ospke

vaes la57 rdpid fsrm

Virtualization features:

Virtualization: AMD-V

Caches (sum of all):

L1d: 128 KiB (2 instances)

L1i: 128 KiB (2 instances)

L2: 1 MiB (2 instances)

L3: 32 MiB (2 instances)

NUMA:

NUMA node(s): 1

NUMA node0 CPU(s): 0,1

Vulnerabilities:

Gather data sampling: Not affected

Itlb multihit: Not affected

L1tf: Not affected

Mds: Not affected

Meltdown: Not affected

Mmio stale data: Not affected

Retbleed: Not affected

Spec store bypass: Vulnerable

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines, STIBP disabled, RSB filling, PBRSB-eIBRS Not affected

Srbds: Not affected

Tsx async abort: Not affected

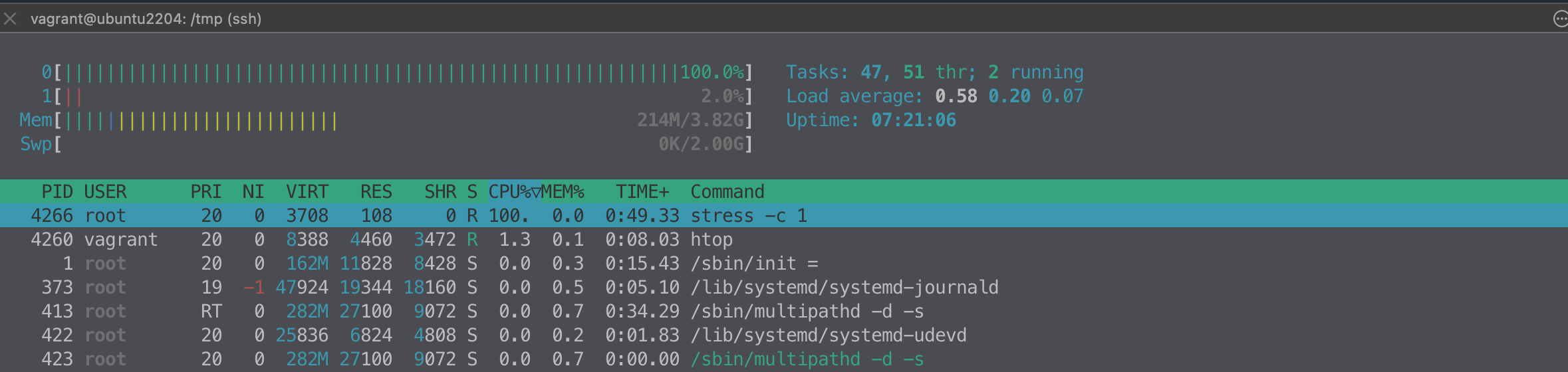

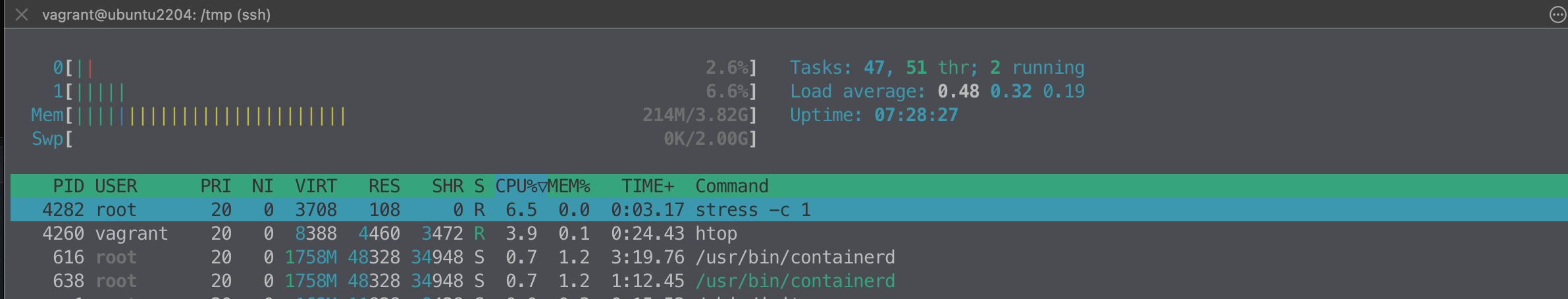

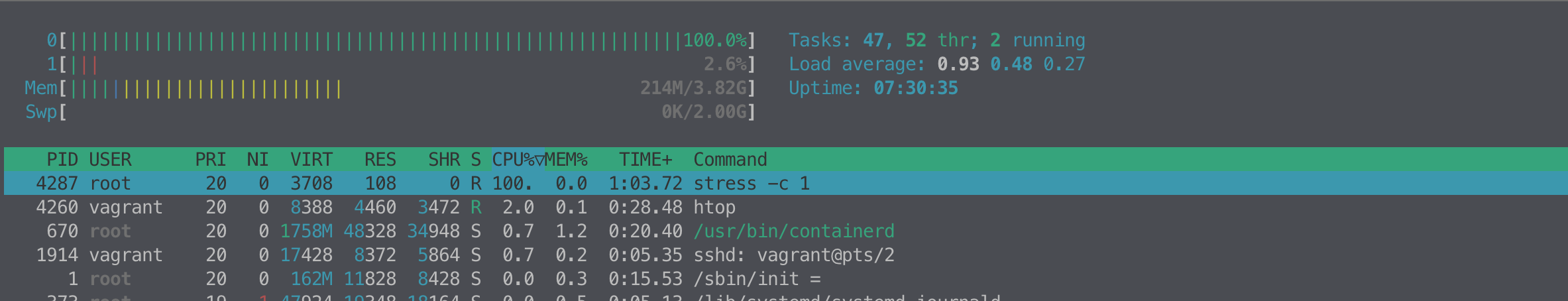

root@MyServer:~# htop

root@MyServer:~# free -h

total used free shared buff/cache available

Mem: 3.8Gi 211Mi 2.7Gi 0.0Ki 885Mi 3.4Gi

Swap: 2.0Gi 0B 2.0Gi

root@MyServer:~# df -hT /

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/ubuntu--vg-ubuntu--lv ext4 62G 5.2G 54G 9% /

root@MyServer:~#

root@MyServer:~# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

udev on /dev type devtmpfs (rw,nosuid,relatime,size=1944500k,nr_inodes=486125,mode=755,inode64)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,noexec,relatime,size=400492k,mode=755,inode64)

/dev/mapper/ubuntu--vg-ubuntu--lv on / type ext4 (rw,relatime)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

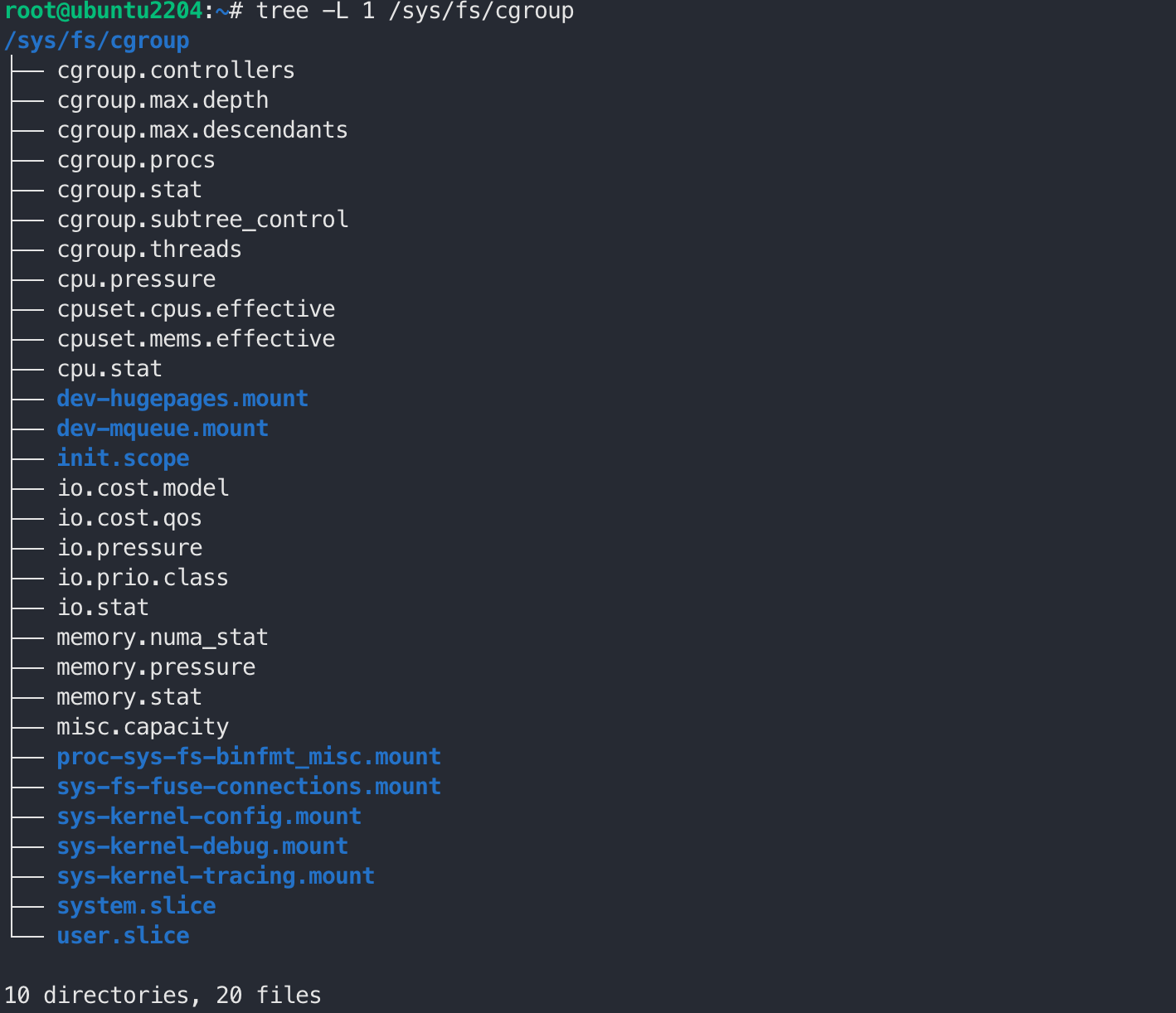

cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime,nsdelegate,memory_recursiveprot)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

bpf on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=29,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=19093)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)

tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)

none on /run/credentials/systemd-sysusers.service type ramfs (ro,nosuid,nodev,noexec,relatime,mode=700)

/var/lib/snapd/snaps/snapd_19457.snap on /snap/snapd/19457 type squashfs (ro,nodev,relatime,errors=continue,x-gdu.hide)

/var/lib/snapd/snaps/lxd_24322.snap on /snap/lxd/24322 type squashfs (ro,nodev,relatime,errors=continue,x-gdu.hide)

/var/lib/snapd/snaps/core20_1974.snap on /snap/core20/1974 type squashfs (ro,nodev,relatime,errors=continue,x-gdu.hide)

/dev/vda2 on /boot type ext4 (rw,relatime)

binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,nosuid,nodev,noexec,relatime)

tmpfs on /run/snapd/ns type tmpfs (rw,nosuid,nodev,noexec,relatime,size=400492k,mode=755,inode64)

nsfs on /run/snapd/ns/lxd.mnt type nsfs (rw)

tmpfs on /run/user/1000 type tmpfs (rw,nosuid,nodev,relatime,size=400488k,nr_inodes=100122,mode=700,uid=1000,gid=1000,inode64)

root@MyServer:~# findmnt -A

TARGET SOURCE FSTYPE OPTIONS

/ /dev/mapper/ubuntu--vg-ubuntu--lv

│ ext4 rw,relatime

├─/sys sysfs sysfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/security securityfs securityfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/cgroup cgroup2 cgroup2 rw,nosuid,nodev,noexec,relatime,nsdelegate,memory_recursiveprot

│ ├─/sys/fs/pstore pstore pstore rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/bpf bpf bpf rw,nosuid,nodev,noexec,relatime,mode=700

│ ├─/sys/kernel/debug debugfs debugfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/tracing tracefs tracefs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/fuse/connections fusectl fusectl rw,nosuid,nodev,noexec,relatime

│ └─/sys/kernel/config configfs configfs rw,nosuid,nodev,noexec,relatime

├─/proc proc proc rw,nosuid,nodev,noexec,relatime

│ └─/proc/sys/fs/binfmt_misc systemd-1 autofs rw,relatime,fd=29,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=19093

│ └─/proc/sys/fs/binfmt_misc binfmt_misc binfmt_misc rw,nosuid,nodev,noexec,relatime

├─/dev udev devtmpfs rw,nosuid,relatime,size=1944500k,nr_inodes=486125,mode=755,inode64

│ ├─/dev/pts devpts devpts rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000

│ ├─/dev/shm tmpfs tmpfs rw,nosuid,nodev,inode64

│ ├─/dev/mqueue mqueue mqueue rw,nosuid,nodev,noexec,relatime

│ └─/dev/hugepages hugetlbfs hugetlbfs rw,relatime,pagesize=2M

├─/run tmpfs tmpfs rw,nosuid,nodev,noexec,relatime,size=400492k,mode=755,inode64

│ ├─/run/lock tmpfs tmpfs rw,nosuid,nodev,noexec,relatime,size=5120k,inode64

│ ├─/run/credentials/systemd-sysusers.service

│ │ none ramfs ro,nosuid,nodev,noexec,relatime,mode=700

│ ├─/run/user/1000 tmpfs tmpfs rw,nosuid,nodev,relatime,size=400488k,nr_inodes=100122,mode=700,uid=1000,gid=1000,inod

│ └─/run/snapd/ns tmpfs[/snapd/ns] tmpfs rw,nosuid,nodev,noexec,relatime,size=400492k,mode=755,inode64

│ └─/run/snapd/ns/lxd.mnt nsfs[mnt:[4026532290]]

│ nsfs rw

├─/snap/snapd/19457 /dev/loop1 squashfs ro,nodev,relatime,errors=continue

├─/snap/lxd/24322 /dev/loop2 squashfs ro,nodev,relatime,errors=continue

├─/snap/core20/1974 /dev/loop0 squashfs ro,nodev,relatime,errors=continue

└─/boot /dev/vda2 ext4 rw,relatime

root@MyServer:~# cat /etc/hosts

127.0.0.1 localhost

127.0.1.1 ubuntu2204.localdomain

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.0.1 ubuntu2204.localdomain

# Check the iptables policies

root@MyServer:~# sudo iptables -t filter -L

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

root@MyServer:~# sudo iptables -t nat -L

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

root@MyServer:~# ip -br -c addr show

lo UNKNOWN 127.0.0.1/8

eth0 UP 10.0.2.15/24 metric 100 fec0::5054:ff:fe12:3456/64 fe80::5054:ff:fe12:3456/64

root@MyServer:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 52:54:00:12:34:56 brd ff:ff:ff:ff:ff:ff

altname enp0s1

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic eth0

valid_lft 83664sec preferred_lft 83664sec

inet6 fec0::5054:ff:fe12:3456/64 scope site dynamic mngtmpaddr noprefixroute

valid_lft 86100sec preferred_lft 14100sec

inet6 fe80::5054:ff:fe12:3456/64 scope link

valid_lft forever preferred_lft forever

root@MyServer:~# ip route

default via 10.0.2.2 dev eth0 proto dhcp src 10.0.2.15 metric 100

10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.15 metric 100

10.0.2.2 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

10.0.2.3 dev eth0 proto dhcp scope link src 10.0.2.15 metric 100

# Check processes - shell variable

root@MyServer:~# ps auf

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

vagrant 3079 0.0 0.1 8836 5340 pts/0 Ss 08:14 0:00 -bash

root 3091 0.0 0.1 11496 5612 pts/0 S+ 08:15 0:00 \_ sudo -Es

root 3092 0.0 0.0 11496 880 pts/1 Ss 08:15 0:00 \_ sudo -Es

root 3093 0.0 0.1 8804 5304 pts/1 S 08:15 0:00 \_ /bin/bash

root 3173 0.0 0.0 10072 3440 pts/1 R+ 08:49 0:00 \_ ps auf

root 973 0.0 0.0 6172 1060 tty1 Ss+ 08:02 0:00 /sbin/agetty -o -p -- \u --noclear tty1 linux

root@MyServer:~# pstree -p

systemd(1)─┬─ModemManager(708)─┬─{ModemManager}(713)

│ └─{ModemManager}(719)

├─agetty(973)

├─cron(952)

├─dbus-daemon(665)

├─haveged(580)

├─ifplugd(980)

├─irqbalance(671)───{irqbalance}(698)

├─multipathd(411)─┬─{multipathd}(421)

│ ├─{multipathd}(422)

│ ├─{multipathd}(423)

│ ├─{multipathd}(424)

│ ├─{multipathd}(425)

│ └─{multipathd}(426)

├─networkd-dispat(672)

├─packagekitd(2214)─┬─{packagekitd}(2215)

│ └─{packagekitd}(2216)

├─polkitd(673)─┬─{polkitd}(682)

│ └─{polkitd}(707)

├─rsyslogd(674)─┬─{rsyslogd}(690)

│ ├─{rsyslogd}(691)

│ └─{rsyslogd}(693)

├─snapd(676)─┬─{snapd}(833)

│ ├─{snapd}(834)

│ ├─{snapd}(835)

│ ├─{snapd}(836)

│ ├─{snapd}(837)

│ ├─{snapd}(849)

│ ├─{snapd}(850)

│ ├─{snapd}(872)

│ ├─{snapd}(884)

│ ├─{snapd}(945)

│ └─{snapd}(947)

├─sshd(1259)───sshd(3016)───sshd(3078)───bash(3079)───sudo(3091)───sudo(3092)───bash(3093)───pstree(3174)

├─systemd(3019)───(sd-pam)(3021)

├─systemd-journal(374)

├─systemd-logind(678)

├─systemd-network(1668)

├─systemd-resolve(630)

├─systemd-timesyn(586)───{systemd-timesyn}(652)

├─systemd-udevd(418)

└─udisksd(679)─┬─{udisksd}(687)

├─{udisksd}(706)

├─{udisksd}(711)

└─{udisksd}(726)

root@MyServer:~# echo $$

3093
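The `$$` variable used here expands to the PID of the current shell, which is why the value matches the bash(3093) entry in the pstree output and why `kill -9 $$` terminates the shell itself. The sketch below is an illustration of how `$$` behaves in a child shell versus a command substitution; the `child_pid` and `subshell_view` names are hypothetical.

```shell
# $$ is the PID of the current shell.
echo "current shell PID: $$"

# A child shell started with `sh -c` is a separate process with its own PID.
child_pid="$(sh -c 'echo $$')"
echo "child shell PID:   $child_pid"

# Inside a command substitution, $$ still reports the parent shell's PID.
subshell_view="$(echo $$)"
echo "PID seen in \$( ): $subshell_view"
```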

root@MyServer:~# kill -9 $$

Killed

3. Containers

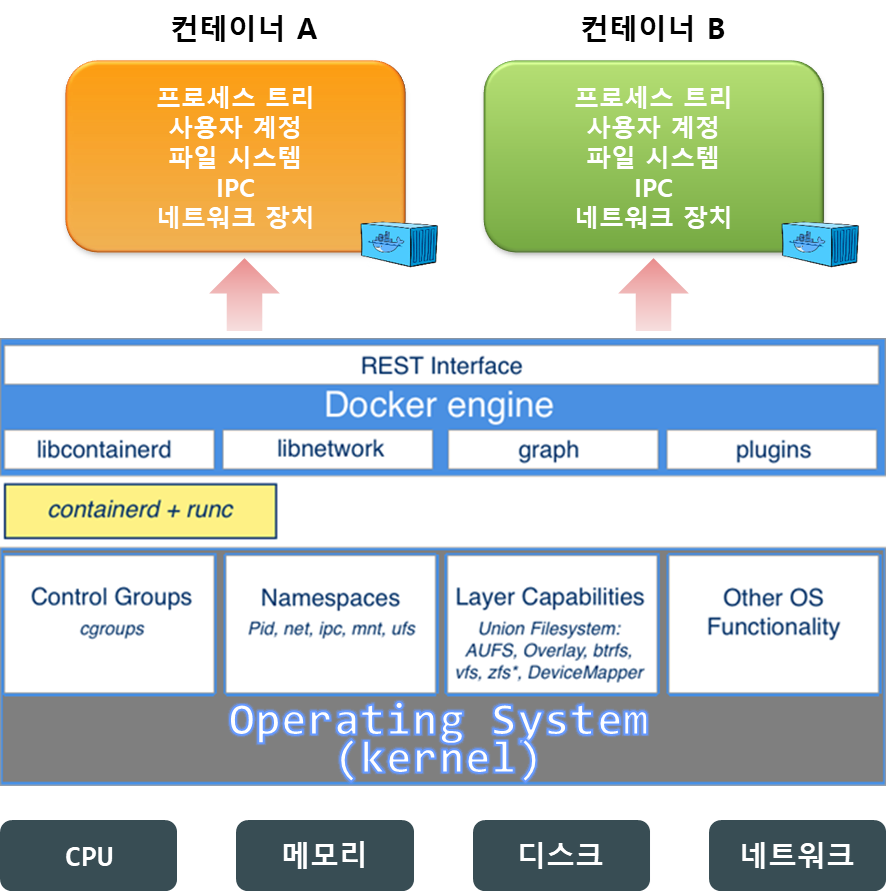

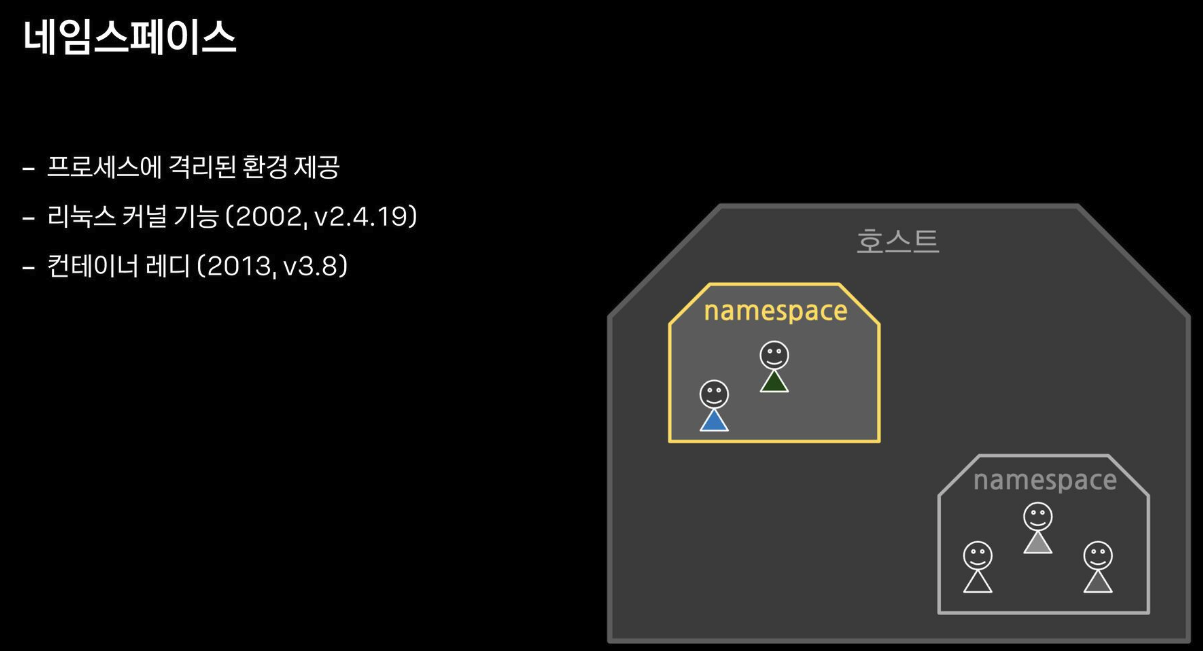

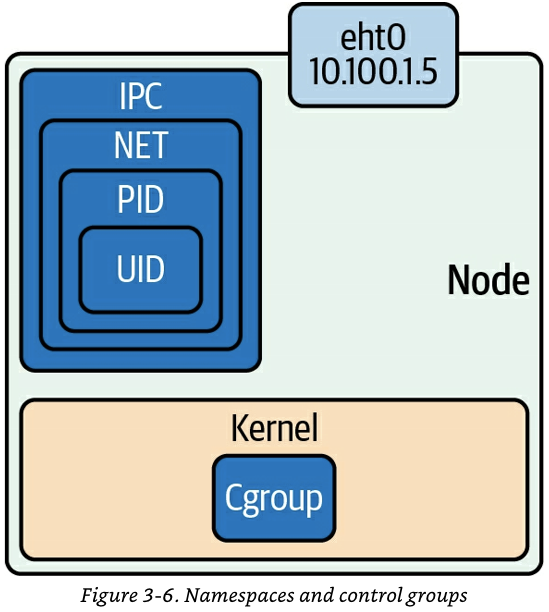

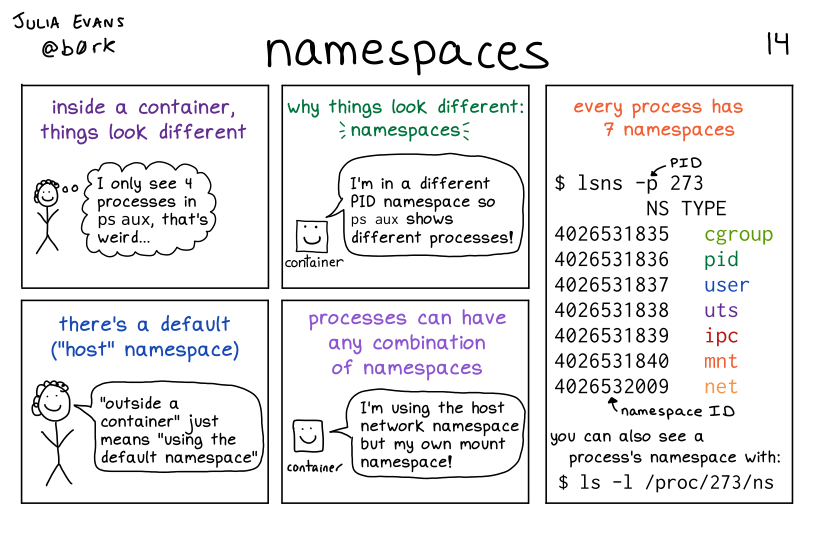

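A container is a process that is guaranteed an isolated Linux environment (pivot_root, namespaces, overlay filesystem, cgroups).

A container runs from an image that packages only the files the application (process) needs.

A container can run anywhere a container environment is available, whether on-premises or in the cloud.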
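To make the "isolated process" idea a little more concrete, here is a minimal sketch that prints the namespace identifiers of the current shell on a Linux host. It only reads `/proc`; the `same_ns` helper is a hypothetical name introduced for illustration.

```shell
# Print the namespace identifiers of the current shell process.
# Each entry under /proc/<pid>/ns is a symlink such as "pid:[4026531836]".
for ns in mnt uts ipc pid net; do
  printf '%-4s %s\n' "$ns" "$(readlink "/proc/$$/ns/$ns")"
done

# Two processes are in the same namespace when these identifiers match.
same_ns() {  # usage: same_ns <pid1> <pid2> <type>
  [ "$(readlink "/proc/$1/ns/$3")" = "$(readlink "/proc/$2/ns/$3")" ]
}

same_ns "$$" "$$" pid && echo "same pid namespace"
```

Running the same loop against the PID of a containerized process (as root) would typically show different identifiers for most of these namespaces.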

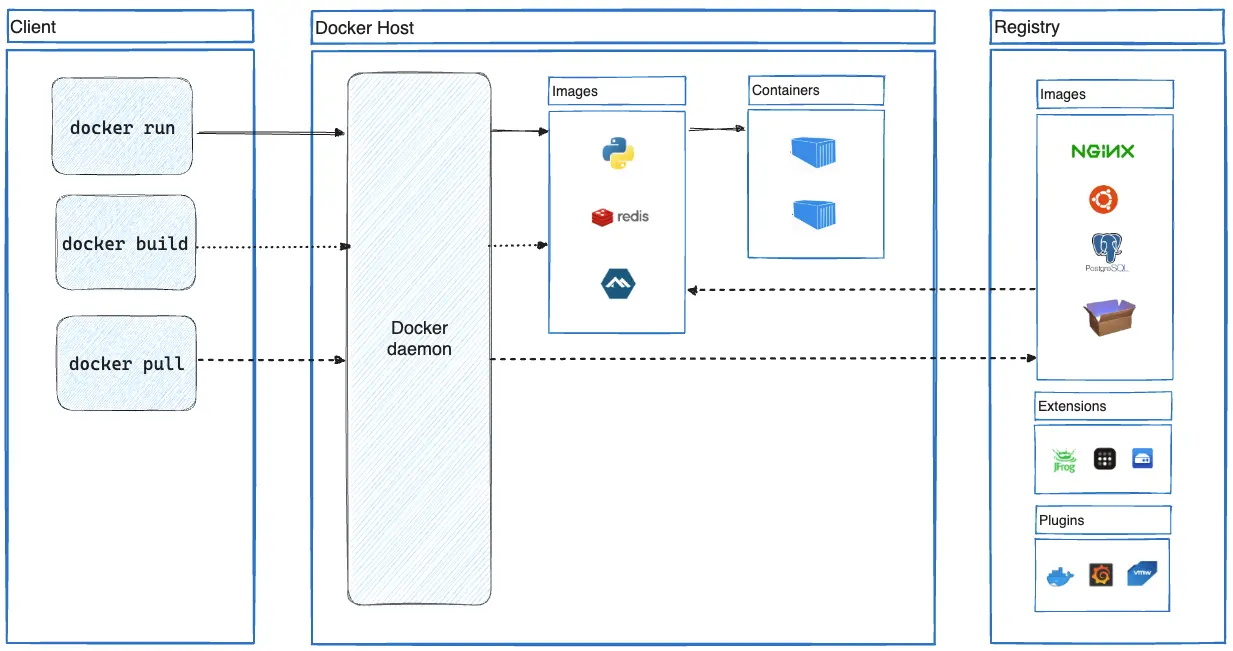

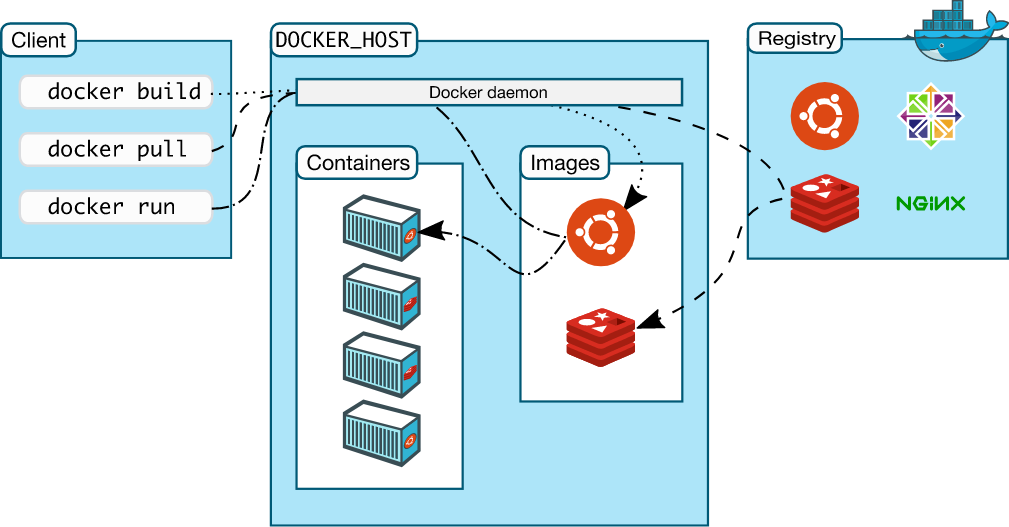

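What is Docker?

- Docker is an open-source platform that provides a virtual execution environment. Docker calls this environment a 'container'.

- A more precise term is 'containerized process'.

- Wherever the Docker platform is installed, an application packaged as a container can run.

- A virtual machine emulates hardware on top of an operating system, boots a guest operating system on it, and runs processes there, whereas a Docker container shares the Linux kernel and runs the process directly, with no hardware emulation.

From a security standpoint, a virtual machine consumes more resources and is heavier, but because it provides hardware-level virtualization its isolation is much stronger than that of OS-level virtualization.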
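The kernel-sharing point can be checked directly on a Linux host. The sketch below assumes Docker is already installed (installation is covered later in this post) and uses the `ubuntu:latest` image that appears in the DooD example; if no Docker daemon is reachable it simply skips the container part.

```shell
host_kernel="$(uname -r)"
echo "host kernel:      $host_kernel"

# Only attempt the container check when a Docker daemon is reachable.
if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
  container_kernel="$(docker run --rm ubuntu:latest uname -r)"
  echo "container kernel: $container_kernel"
  if [ "$host_kernel" = "$container_kernel" ]; then
    echo "same kernel: the container is a process on the host kernel"
  fi
else
  echo "docker not available; skipping the container check"
fi
```

A virtual machine, by contrast, would report whatever kernel its own guest operating system booted.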

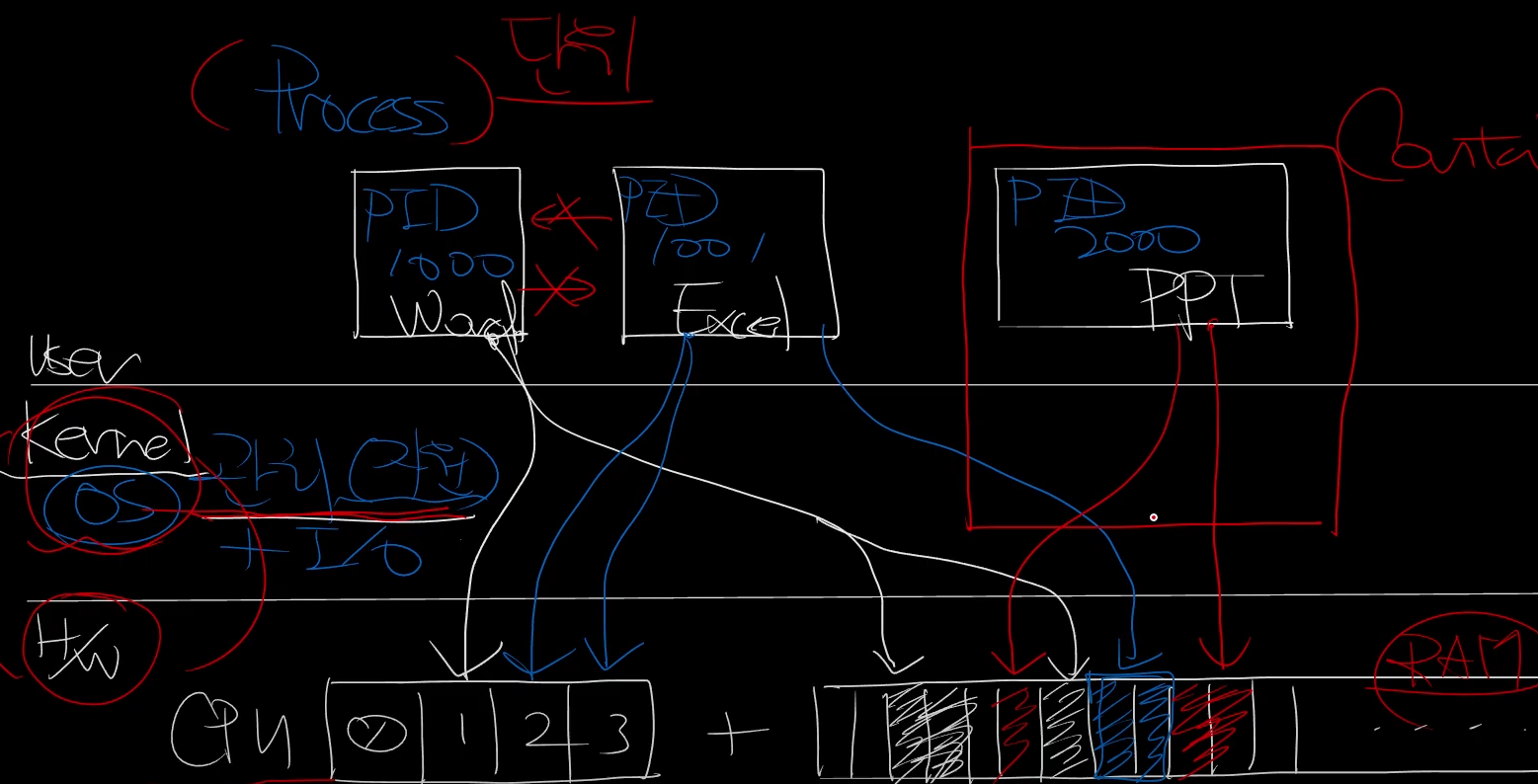

Processes & threads

- A process is a program in execution. The term is used interchangeably with a task, the unit that is scheduled. Every process contains at least one thread, and scheduling actually happens per thread. When a program on disk is launched, memory is allocated for it and its binary code is loaded into that memory; from that moment on it is called a process.

- A process is really more of a container for resources; the unit that actually changes state and that the system treats as a task is the thread. Put simply, a thread is a flow of execution inside a process, and every process has at least one. Running several threads in one process is called multithreading. Threads in the same process share part of the process's memory, so a context switch between threads of the same process is faster than a context switch between processes, because there is less memory state to switch.
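On Linux the process/thread relationship is visible under `/proc`. As a rough sketch, the hypothetical `thread_count` helper below counts the entries in `/proc/<pid>/task`, where the kernel exposes one directory per thread.

```shell
# Count the threads of a process by listing /proc/<pid>/task (one entry per thread).
thread_count() {  # usage: thread_count <pid>
  ls "/proc/$1/task" | wc -l | tr -d ' '
}

echo "this shell (PID $$) has $(thread_count "$$") thread(s)"

# Tgid is the process ID shared by all threads; Threads is the thread count.
grep -E '^(Tgid|Pid|Threads):' "/proc/$$/status"
```

Pointing `thread_count` at a multithreaded daemon such as snapd (PID 676 in the pstree output above) would report more than one thread.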

Docker architecture

Installing Docker

# [Terminal 1] Switch to root

sudo su -

whoami

id

# Install Docker

curl -fsSL https://get.docker.com | sh

# Check Docker info: Client and Server, Storage Driver (overlay2), Cgroup Version (2), Default Runtime (runc)

root@ubuntu2204:~# docker info

Client: Docker Engine - Community

Version: 27.2.0

Context: default

Debug Mode: false

Plugins:

buildx: Docker Buildx (Docker Inc.)

Version: v0.16.2

Path: /usr/libexec/docker/cli-plugins/docker-buildx

compose: Docker Compose (Docker Inc.)

Version: v2.29.2

Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 27.2.0

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 472731909fa34bd7bc9c087e4c27943f9835f111

runc version: v1.1.13-0-g58aa920

init version: de40ad0

Security Options:

apparmor

seccomp

Profile: builtin

cgroupns

Kernel Version: 5.15.0-84-generic

Operating System: Ubuntu 22.04.3 LTS

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.819GiB

Name: ubuntu2204

ID: db912212-f5f4-4ced-a37d-cb6ac0b9f964

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false
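Since only a few fields of this long output matter here, it can help to pull them out by name. The sketch below parses a handful of lines copied from the output above; `info_field` is a hypothetical helper, and the sample text is embedded so the snippet runs without a Docker daemon.

```shell
# Sample lines copied from the `docker info` output above.
docker_info_sample='Storage Driver: overlay2
Cgroup Driver: systemd
Cgroup Version: 2
Default Runtime: runc
Docker Root Dir: /var/lib/docker'

# Print the value for one "Key: value" line, ignoring leading indentation.
info_field() {  # usage: info_field <key>   (reads docker info text on stdin)
  sed 's/^[[:space:]]*//' | awk -F': ' -v key="$1" '$1 == key { print $2 }'
}

for key in 'Storage Driver' 'Cgroup Version' 'Default Runtime'; do
  printf '%s = %s\n' "$key" "$(printf '%s\n' "$docker_info_sample" | info_field "$key")"
done
```

On a live host the same helper can read the real output, for example `docker info | info_field 'Storage Driver'`.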

root@ubuntu2204:~# docker version

Client: Docker Engine - Community

Version: 27.2.0

API version: 1.47

Go version: go1.21.13

Git commit: 3ab4256

Built: Tue Aug 27 14:15:13 2024

OS/Arch: linux/amd64

Context: default

Server: Docker Engine - Community

Engine:

Version: 27.2.0

API version: 1.47 (minimum version 1.24)

Go version: go1.21.13

Git commit: 3ab5c7d

Built: Tue Aug 27 14:15:13 2024

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.7.21

GitCommit: 472731909fa34bd7bc9c087e4c27943f9835f111

runc:

Version: 1.1.13

GitCommit: v1.1.13-0-g58aa920

docker-init:

Version: 0.19.0

GitCommit: de40ad0

# Check the Docker service status

root@ubuntu2204:~# systemctl status docker -l --no-pager

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2024-08-31 15:51:30 KST; 6min ago

TriggeredBy: ● docker.socket

Docs: https://docs.docker.com

Main PID: 3301 (dockerd)

Tasks: 9

Memory: 22.3M

CPU: 3.587s

CGroup: /system.slice/docker.service

└─3301 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Aug 31 15:51:27 ubuntu2204 systemd[1]: Starting Docker Application Container Engine...

Aug 31 15:51:27 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:27.576576762+09:00" level=info msg="Starting up"

Aug 31 15:51:27 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:27.600562805+09:00" level=info msg="detected 127.0.0.53 nameserver, assuming systemd-resolved, so using resolv.conf: /run/systemd/resolve/resolv.conf"

Aug 31 15:51:28 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:28.333185630+09:00" level=info msg="Loading containers: start."

Aug 31 15:51:30 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:30.168270010+09:00" level=info msg="Loading containers: done."

Aug 31 15:51:30 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:30.267884301+09:00" level=info msg="Docker daemon" commit=3ab5c7d containerd-snapshotter=false storage-driver=overlay2 version=27.2.0

Aug 31 15:51:30 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:30.271734467+09:00" level=info msg="Daemon has completed initialization"

Aug 31 15:51:30 ubuntu2204 dockerd[3301]: time="2024-08-31T15:51:30.520036162+09:00" level=info msg="API listen on /run/docker.sock"

Aug 31 15:51:30 ubuntu2204 systemd[1]: Started Docker Application Container Engine.

# Show the status of all services

systemctl list-units --type=service

# Check the Docker root directory: Docker Root Dir (/var/lib/docker)

root@ubuntu2204:~# tree -L 3 /var/lib/docker

/var/lib/docker

├── buildkit

│ ├── cache.db

│ ├── containerdmeta.db

│ ├── content

│ │ └── ingest

│ ├── executor

│ ├── history.db

│ ├── metadata_v2.db

│ └── snapshots.db

├── containers

├── engine-id

├── image

│ └── overlay2

│ ├── distribution

│ ├── imagedb

│ ├── layerdb

│ └── repositories.json

├── network

│ └── files

│ └── local-kv.db

├── overlay2

│ └── l

├── plugins

│ ├── storage

│ │ └── ingest

│ └── tmp

├── runtimes

├── swarm

├── tmp

└── volumes

├── backingFsBlockDev

└── metadata.db

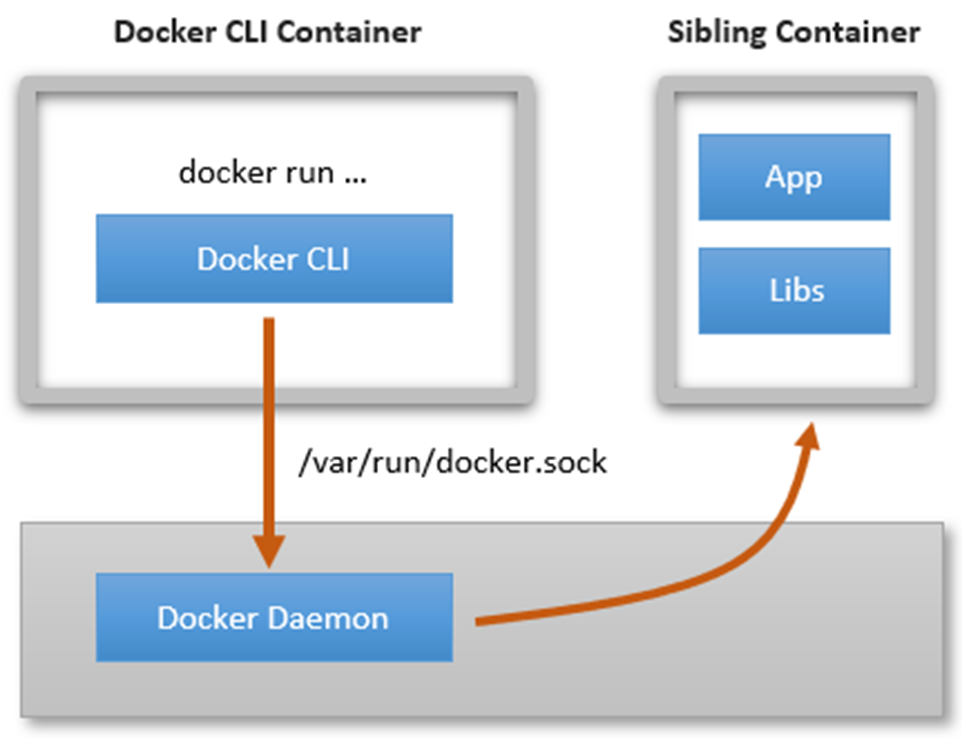

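22 directories, 10 files

Running with DooD (Docker out of Docker)

- The host's Docker daemon socket is bind-mounted into the Jenkins container so that Jenkins can use the host's Docker daemon. The example below first tries the same mounts with a plain ubuntu container.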
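DooD works because the Docker CLI talks to the daemon through a Unix socket, so whoever can reach that socket can drive the host's daemon. A minimal sketch of checking the socket follows; `sock_status` is a hypothetical helper, and the `curl --unix-socket` call against the Engine API `/version` endpoint runs only if the socket and curl are both present.

```shell
# Report whether a path is a Unix socket, which is what DooD bind-mounts into the container.
sock_status() {  # usage: sock_status <path>
  if [ -S "$1" ]; then echo "socket"; else echo "missing"; fi
}

# Path used in this post; /var/run is usually a symlink to /run on modern Linux.
sock_path="/run/docker.sock"
echo "$sock_path: $(sock_status "$sock_path")"

# If the socket is present and curl is installed, ask the daemon for its version.
if [ "$(sock_status "$sock_path")" = "socket" ] && command -v curl >/dev/null 2>&1; then
  curl --silent --unix-socket "$sock_path" http://localhost/version
  echo
fi
```

Because access to this socket is effectively root-level access to the host, mounting it into a container is best limited to trusted workloads.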

vagrant@ubuntu2204:~$ docker run --rm -it -v /run/docker.sock:/run/docker.sock -v /usr/bin/docker:/usr/bin/docker ubuntu:latest bash

root@56037f7d497b:/# docker info

Client: Docker Engine - Community

Version: 27.2.0

Context: default

Debug Mode: false

Server:

Containers: 1

Running: 1

Paused: 0

Stopped: 0

Images: 2

Server Version: 27.2.0

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 472731909fa34bd7bc9c087e4c27943f9835f111

runc version: v1.1.13-0-g58aa920

init version: de40ad0

Security Options:

apparmor

seccomp

Profile: builtin

cgroupns

Kernel Version: 5.15.0-84-generic

Operating System: Ubuntu 22.04.3 LTS

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.819GiB

Name: ubuntu2204

ID: db912212-f5f4-4ced-a37d-cb6ac0b9f964

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

root@56037f7d497b:/# docker run -d --rm --name webserver nginx:alpine

65944f58ad1337f5f3dd275486ea2788e0a9db845d5589630b4dd1170c08a284

root@56037f7d497b:/# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

65944f58ad13 nginx:alpine "/docker-entrypoint.…" 7 seconds ago Up 7 seconds 80/tcp webserver

56037f7d497b ubuntu:latest "bash" 41 seconds ago Up 40 seconds stoic_curran

root@56037f7d497b:/# docker rm -f webserver

webserver

root@56037f7d497b:/# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

56037f7d497b ubuntu:latest "bash" 58 seconds ago Up 57 seconds stoic_curran

root@56037f7d497b:/# exit

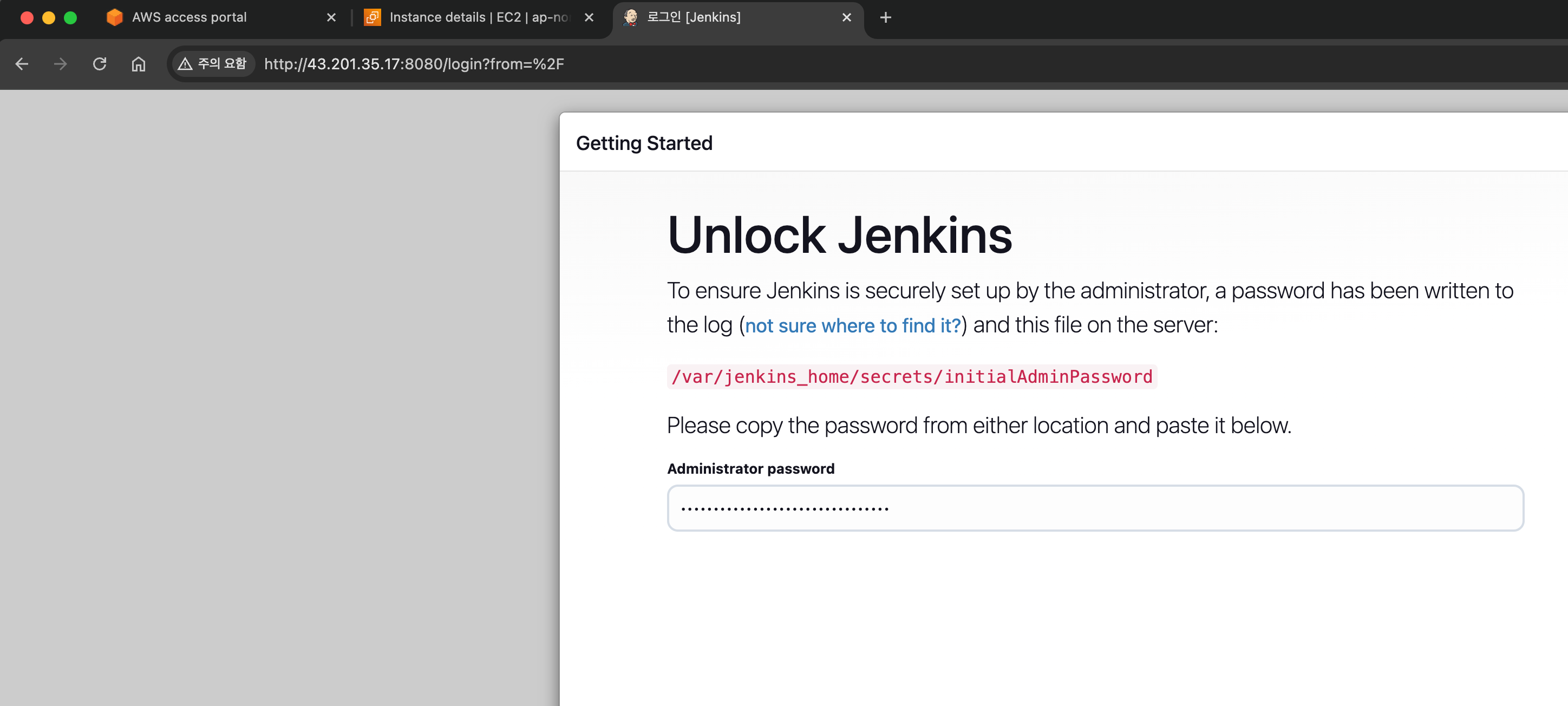

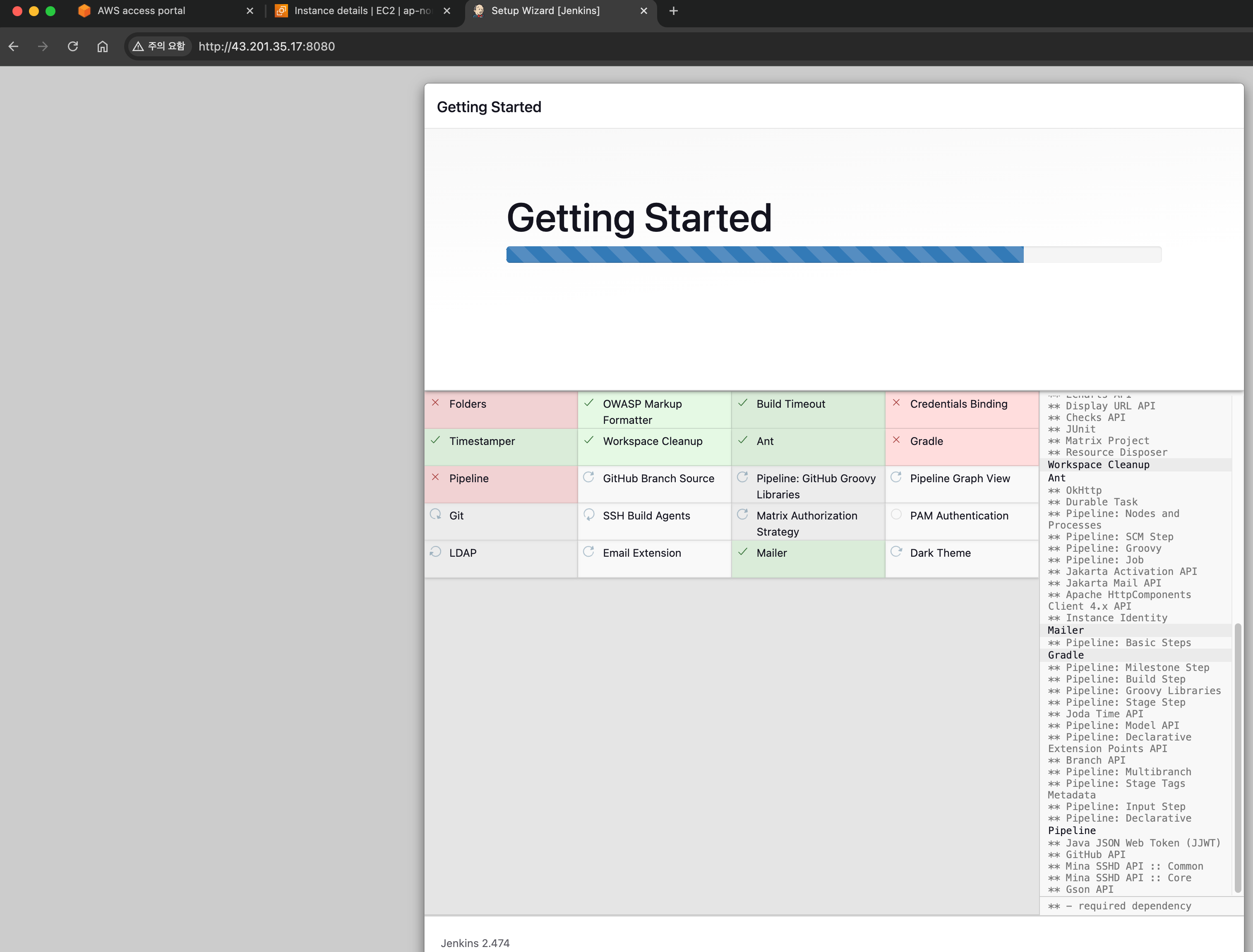

exit

Running the Jenkins container

- In the QEMU environment this failed with the error OCI runtime exec failed: exec failed: unable to start container process: exec: "kins-server": executable file not found in $PATH: unknown, so I ran this part on EC2.

# Prerequisite: the devops credential profile and a key pair must already exist

> export AWS_PROFILE=devops

# Download the YAML file

> curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/kans/kans-1w.yaml

# Create the default VPC and an Ubuntu 22.04 LTS EC2 instance with CloudFormation

> aws cloudformation deploy --template-file kans-1w.yaml --stack-name mylab --parameter-overrides MyInstanceType=t3.medium KeyName=martha SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 --region ap-northeast-2

Waiting for changeset to be created..

Waiting for stack create/update to complete

Successfully created/updated stack - mylab

# After the CloudFormation stack is deployed, print the IP of the working EC2 instance

> aws cloudformation describe-stacks --stack-name mylab --query 'Stacks[*].Outputs[0].OutputValue' --output text --region ap-northeast-2

43.201.35.17

> ssh -i ~/keypair/martha.pem ubuntu@$(aws cloudformation describe-stacks --stack-name mylab --query 'Stacks[*].Outputs[0].OutputValue' --output text --region ap-northeast-2)

# Run the Jenkins container

ubuntu@MyServer:~$ docker run -d -p 8080:8080 -p 50000:50000 --name jenkins-server --restart=on-failure -v jenkins_home:/var/jenkins_home -v /var/run/docker.sock:/var/run/docker.sock -v /usr/bin/docker:/usr/bin/docker jenkins/jenkins

Unable to find image 'jenkins/jenkins:latest' locally

latest: Pulling from jenkins/jenkins

903681d87777: Pull complete

...

d3d97238a393: Pull complete

Digest: sha256:0adbdcdf66aafb9851e685634431797f78ec83208c9147435325dd1019cef832

Status: Downloaded newer image for jenkins/jenkins:latest

b73ce6c9b79e1d14d8bec72fe98829259b879747a14e6acd2241f97ee8fe72c8

# Verify

ubuntu@MyServer:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b73ce6c9b79e jenkins/jenkins "/usr/bin/tini -- /u…" 15 seconds ago Up 13 seconds 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp, 0.0.0.0:50000->50000/tcp, :::50000->50000/tcp jenkins-server

ubuntu@MyServer:~$ docker volume ls

DRIVER VOLUME NAME

local jenkins_home

# Check the initial admin password

ubuntu@MyServer:~$ docker exec -it jenkins-server cat /var/jenkins_home/secrets/initialAdminPassword

1e21da25f1b94711ac24cbabcd0e4185

# Jenkins web address: enter the initial password here

http://43.201.35.17:8080

# Check the JDK

ubuntu@MyServer:~$ docker exec -it jenkins-server java --version

openjdk 17.0.12 2024-07-16

OpenJDK Runtime Environment Temurin-17.0.12+7 (build 17.0.12+7)

OpenJDK 64-Bit Server VM Temurin-17.0.12+7 (build 17.0.12+7, mixed mode)

# Check JAVA_HOME

ubuntu@MyServer:~$ docker exec -it jenkins-server sh -c 'echo $JAVA_HOME'

/opt/java/openjdk

# Check Git

ubuntu@MyServer:~$ docker exec -it jenkins-server git -v

git version 2.39.2

# Check the default user

ubuntu@MyServer:~$ docker exec -it jenkins-server whoami

jenkins

# Run Docker commands from inside the Jenkins container

ubuntu@MyServer:~$ docker exec -it --user 0 jenkins-server whoami

root

ubuntu@MyServer:~$ docker exec -it --user 0 jenkins-server docker info

Client: Docker Engine - Community

Version: 27.2.0

Context: default

Debug Mode: false

Server:

Containers: 1

Running: 1

Paused: 0

Stopped: 0

Images: 1

Server Version: 27.2.0

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Using metacopy: false

Native Overlay Diff: true

userxattr: false

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog

Swarm: inactive

Runtimes: io.containerd.runc.v2 runc

Default Runtime: runc

Init Binary: docker-init

containerd version: 472731909fa34bd7bc9c087e4c27943f9835f111

runc version: v1.1.13-0-g58aa920

init version: de40ad0

Security Options:

apparmor

seccomp

Profile: builtin

cgroupns

Kernel Version: 6.5.0-1024-aws

Operating System: Ubuntu 22.04.4 LTS

OSType: linux

Architecture: x86_64

CPUs: 2

Total Memory: 3.738GiB

Name: MyServer

ID: 50abd24b-255c-4d03-8023-196fcb023274

Docker Root Dir: /var/lib/docker

Debug Mode: false

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

ubuntu@MyServer:~$ docker exec -it --user 0 jenkins-server docker run --rm hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:53cc4d415d839c98be39331c948609b659ed725170ad2ca8eb36951288f81b75

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

ubuntu@MyServer:~$ docker exec -it --user 0 jenkins-server docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

b73ce6c9b79e jenkins/jenkins "/usr/bin/tini -- /u…" 6 minutes ago Up 6 minutes 0.0.0.0:8080->8080/tcp, :::8080->8080/tcp, 0.0.0.0:50000->50000/tcp, :::50000->50000/tcp jenkins-server

# Remove the Jenkins container

docker rm -f jenkins-server

docker volume rm jenkins_home

- Check basic information after installing Docker

# [Terminal 1] As root

# Check processes

ps -ef

pstree -p

# Check free disk space on mounted filesystems (df = disk free)

root@MyServer:~# df -hT

Filesystem Type Size Used Avail Use% Mounted on

/dev/root ext4 29G 3.2G 26G 11% /

tmpfs tmpfs 1.9G 0 1.9G 0% /dev/shm

tmpfs tmpfs 766M 964K 765M 1% /run

tmpfs tmpfs 5.0M 0 5.0M 0% /run/lock

efivarfs efivarfs 128K 3.6K 120K 3% /sys/firmware/efi/efivars

/dev/nvme0n1p15 vfat 105M 6.1M 99M 6% /boot/efi

tmpfs tmpfs 383M 4.0K 383M 1% /run/user/1000

overlay overlay 29G 3.2G 26G 11% /var/lib/docker/overlay2/47e9d96966a6f1aab0abf50489355c443e7c90a139ba4c6264168af29a9a902b/merged

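# Check network information >> the docker0 interface has been added; it is DOWN while no container is attached, and UP in the output below because a container is running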

root@MyServer:~# ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

ens5 UP 192.168.50.10/24 metric 100 fe80::2f:d7ff:fe92:3bb/64

docker0 UP 172.17.0.1/16 fe80::42:21ff:fe34:843f/64

veth26ad758@if4 UP fe80::3826:1aff:fec6:12bc/64

root@MyServer:~# ip -c addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP group default qlen 1000

link/ether 02:2f:d7:92:03:bb brd ff:ff:ff:ff:ff:ff

altname enp0s5

inet 192.168.50.10/24 metric 100 brd 192.168.50.255 scope global dynamic ens5

valid_lft 3463sec preferred_lft 3463sec

inet6 fe80::2f:d7ff:fe92:3bb/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:21:34:84:3f brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:21ff:fe34:843f/64 scope link

valid_lft forever preferred_lft forever

5: veth26ad758@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 3a:26:1a:c6:12:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::3826:1aff:fec6:12bc/64 scope link

valid_lft forever preferred_lft forever

root@MyServer:~# ip -c link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens5: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9001 qdisc mq state UP mode DEFAULT group default qlen 1000

link/ether 02:2f:d7:92:03:bb brd ff:ff:ff:ff:ff:ff

altname enp0s5

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 02:42:21:34:84:3f brd ff:ff:ff:ff:ff:ff

5: veth26ad758@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether 3a:26:1a:c6:12:bc brd ff:ff:ff:ff:ff:ff link-netnsid 0

root@MyServer:~#

root@MyServer:~# ip -br -c link

lo UNKNOWN 00:00:00:00:00:00 <LOOPBACK,UP,LOWER_UP>

ens5 UP 02:2f:d7:92:03:bb <BROADCAST,MULTICAST,UP,LOWER_UP>

docker0 UP 02:42:21:34:84:3f <BROADCAST,MULTICAST,UP,LOWER_UP>

veth26ad758@if4 UP 3a:26:1a:c6:12:bc <BROADCAST,MULTICAST,UP,LOWER_UP>

root@MyServer:~# ip -c route

default via 192.168.50.1 dev ens5 proto dhcp src 192.168.50.10 metric 100

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.0.2 via 192.168.50.1 dev ens5 proto dhcp src 192.168.50.10 metric 100

192.168.50.0/24 dev ens5 proto kernel scope link src 192.168.50.10 metric 100

192.168.50.1 dev ens5 proto dhcp scope link src 192.168.50.10 metric 100

# Check Ethernet bridge information

root@MyServer:~# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.02422134843f no veth26ad758
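
The same bridge membership can also be read straight from sysfs, which helps on hosts where `brctl` (bridge-utils) is not installed. The sketch below is only an illustration: `bridge_ports` is a helper name I made up, and it assumes the kernel exposes bridge ports under `/sys/class/net/<bridge>/brif`.

```shell
# List the interfaces attached to a Linux bridge by reading sysfs.
# bridge_ports is a hypothetical helper name, not part of any tool.
bridge_ports() {
    bridge=$1
    brif="/sys/class/net/$bridge/brif"
    if [ ! -d "$brif" ]; then
        echo "not a bridge (or missing): $bridge" >&2
        return 1
    fi
    ls "$brif"
}

# docker0 is the bridge from the output above; on a host without Docker
# this simply reports that the bridge is missing.
bridge_ports docker0 || true
```

On the host shown above this should list the veth interface that `brctl show` reports for docker0.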

# Check iptables policies

## In the filter table, the FORWARD policy has changed from ACCEPT to DROP

## Rules were added to FORWARD that allow traffic from docker0 to docker0 or to the outside

root@MyServer:~# iptables -t filter -S

-P INPUT ACCEPT

-P FORWARD DROP

-P OUTPUT ACCEPT

-N DOCKER

-N DOCKER-ISOLATION-STAGE-1

-N DOCKER-ISOLATION-STAGE-2

-N DOCKER-USER

-A FORWARD -j DOCKER-USER

-A FORWARD -j DOCKER-ISOLATION-STAGE-1

-A FORWARD -o docker0 -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

-A FORWARD -o docker0 -j DOCKER

-A FORWARD -i docker0 ! -o docker0 -j ACCEPT

-A FORWARD -i docker0 -o docker0 -j ACCEPT

-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 8080 -j ACCEPT

-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 50000 -j ACCEPT

-A DOCKER-ISOLATION-STAGE-1 -i docker0 ! -o docker0 -j DOCKER-ISOLATION-STAGE-2

-A DOCKER-ISOLATION-STAGE-1 -j RETURN

-A DOCKER-ISOLATION-STAGE-2 -o docker0 -j DROP

-A DOCKER-ISOLATION-STAGE-2 -j RETURN

-A DOCKER-USER -j RETURN
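
To make the DOCKER chain easier to read, the rules can be reduced to "which container IP and port is allowed". The following is a rough sketch that parses a saved copy of the rules above. `docker_allowed` is a name I chose for illustration, and the parsing assumes the `-S` output format shown here.

```shell
# Rules copied from the `iptables -t filter -S` output above.
rules='-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 8080 -j ACCEPT
-A DOCKER -d 172.17.0.2/32 ! -i docker0 -o docker0 -p tcp -m tcp --dport 50000 -j ACCEPT
-A DOCKER-USER -j RETURN'

# Print "container-ip proto/port" for every ACCEPT rule in the DOCKER chain.
# docker_allowed is a hypothetical helper, not an iptables or Docker command.
docker_allowed() {
    awk '$1 == "-A" && $2 == "DOCKER" && $NF == "ACCEPT" {
        ip = ""; proto = ""; port = ""
        for (i = 3; i < NF; i++) {
            if ($i == "-d")      ip = $(i + 1)
            if ($i == "-p")      proto = $(i + 1)
            if ($i == "--dport") port = $(i + 1)
        }
        print ip, proto "/" port
    }'
}

printf '%s\n' "$rules" | docker_allowed
```

For the two rules above this prints `172.17.0.2/32 tcp/8080` and `172.17.0.2/32 tcp/50000`. On a live host the same function could be fed with `iptables -t filter -S` run as root.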

## In nat POSTROUTING, a masquerading (SNAT) rule was added for traffic leaving 172.17.0.0/16 for the outside

root@MyServer:~# iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N DOCKER

-A PREROUTING -m addrtype --dst-type LOCAL -j DOCKER

-A OUTPUT ! -d 127.0.0.0/8 -m addrtype --dst-type LOCAL -j DOCKER

-A POSTROUTING -s 172.17.0.0/16 ! -o docker0 -j MASQUERADE

-A POSTROUTING -s 172.17.0.2/32 -d 172.17.0.2/32 -p tcp -m tcp --dport 8080 -j MASQUERADE

-A POSTROUTING -s 172.17.0.2/32 -d 172.17.0.2/32 -p tcp -m tcp --dport 50000 -j MASQUERADE

-A DOCKER -i docker0 -j RETURN

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 8080 -j DNAT --to-destination 172.17.0.2:8080

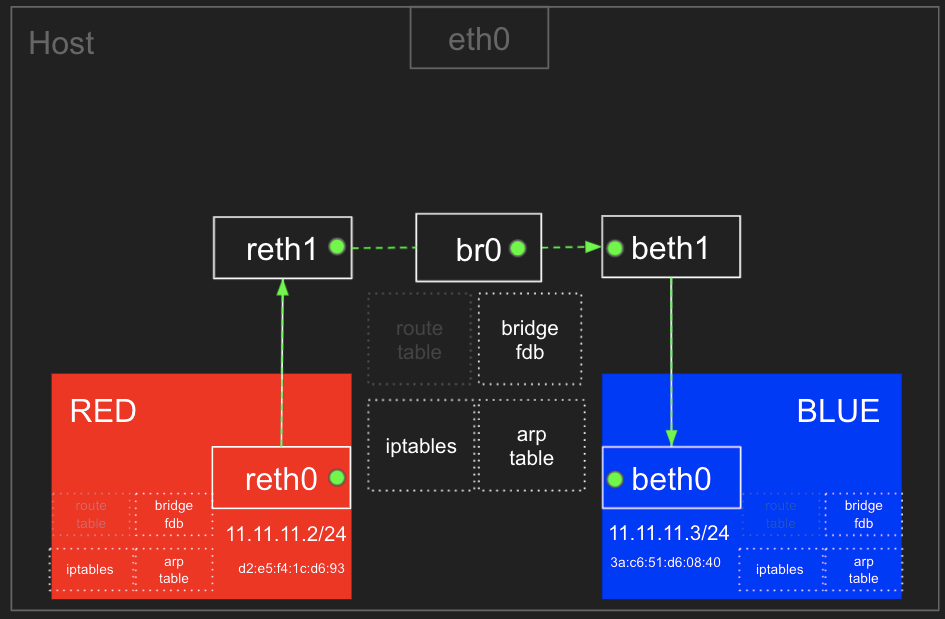

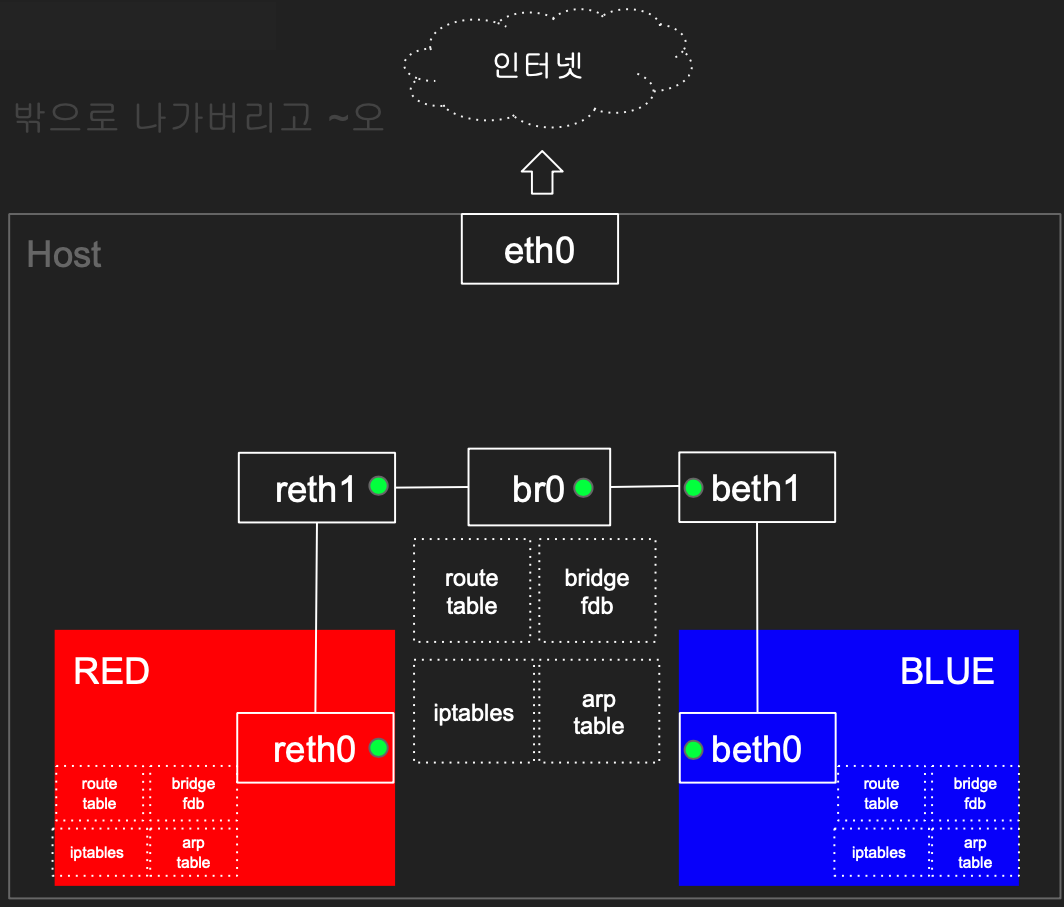

-A DOCKER ! -i docker0 -p tcp -m tcp --dport 50000 -j DNAT --to-destination 172.17.0.2:50000본 수업을 받기전에는 Docker 또는 Containerd 등 으로만 컨테이너를 실행가능한 것으로 알고 있었으나, chroot, pivot-root, namespace, Overlay filesystem, cgroup을 효율적으로 활용하여 컨테이너를 기동할 수 있음을 알게되었고 직접 실습을 하였습니다.이게돼요? 도커없이 컨테이너 만들기

Process

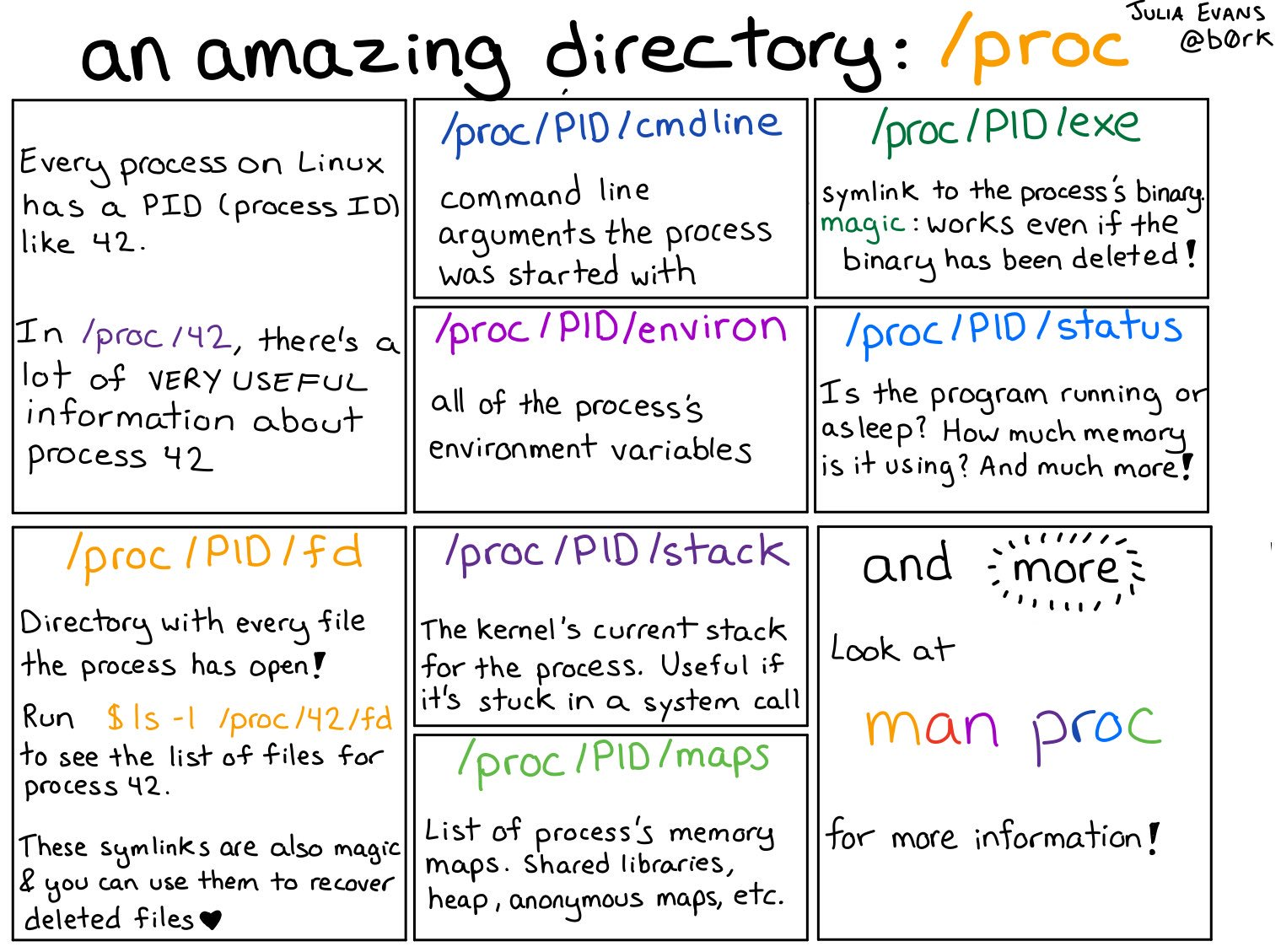

Understanding Linux processes: /proc

- A process is a running instance of a program. The OS manages processes, and each process has a unique ID (PID).

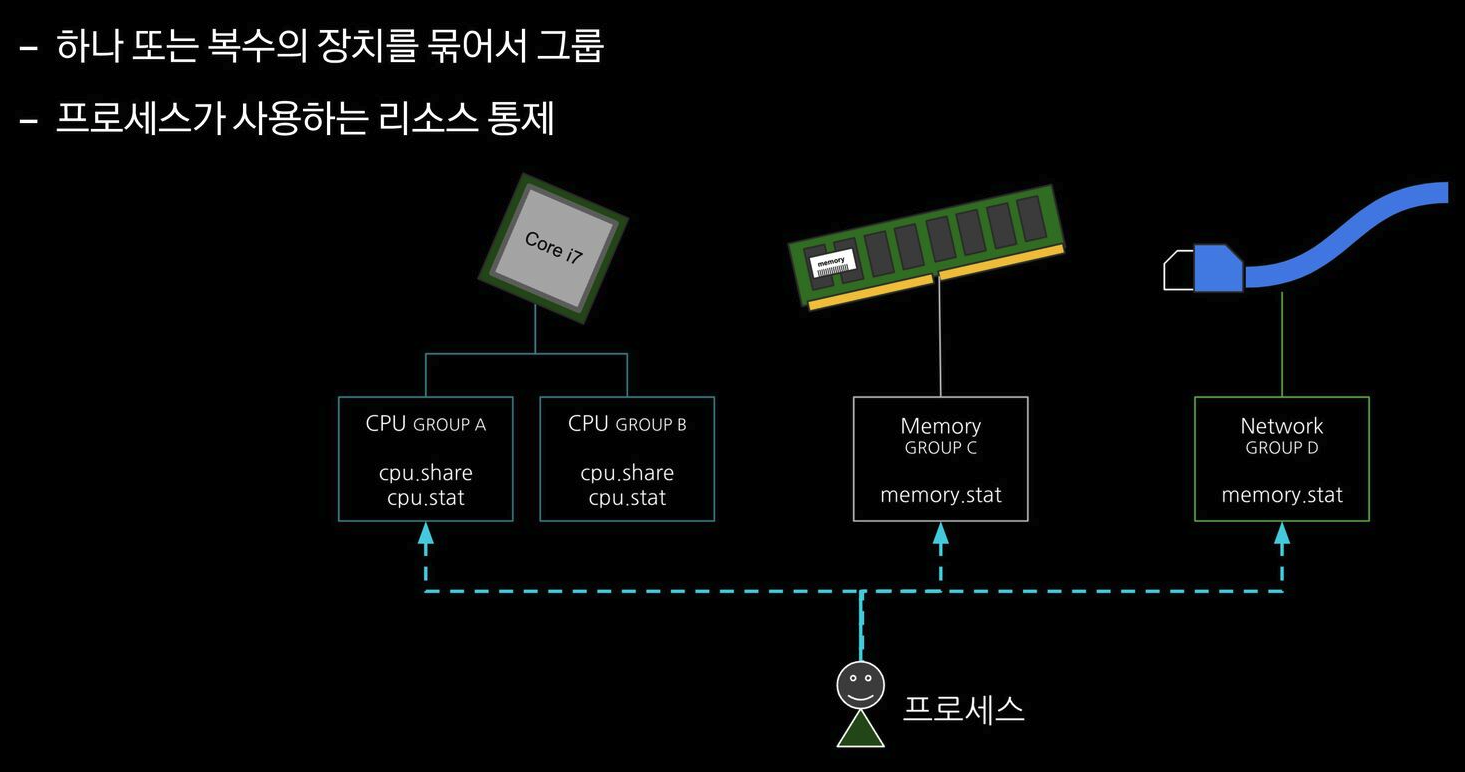

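- A process is the basic unit that uses CPU and memory; the OS kernel manages each process's resources (cgroups).

Video source

# Check process information

ps

# Check that /sbin/init is PID 1

# Check per-process CPU usage, memory share, actual memory usage, and so on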

ps aux

ps -ef

# Check the process tree

root@ubuntu2204:~# pstree --help

pstree: unrecognized option '--help'

Usage: pstree [-acglpsStTuZ] [ -h | -H PID ] [ -n | -N type ]

[ -A | -G | -U ] [ PID | USER ]

or: pstree -V

Display a tree of processes.

-a, --arguments show command line arguments

-A, --ascii use ASCII line drawing characters

-c, --compact-not don't compact identical subtrees

-C, --color=TYPE color process by attribute

(age)

-g, --show-pgids show process group ids; implies -c

-G, --vt100 use VT100 line drawing characters

-h, --highlight-all highlight current process and its ancestors

-H PID, --highlight-pid=PID

highlight this process and its ancestors

-l, --long don't truncate long lines

-n, --numeric-sort sort output by PID

-N TYPE, --ns-sort=TYPE

sort output by this namespace type

(cgroup, ipc, mnt, net, pid, time, user, uts)

-p, --show-pids show PIDs; implies -c

-s, --show-parents show parents of the selected process

-S, --ns-changes show namespace transitions

-t, --thread-names show full thread names

-T, --hide-threads hide threads, show only processes

-u, --uid-changes show uid transitions

-U, --unicode use UTF-8 (Unicode) line drawing characters

-V, --version display version information

-Z, --security-context

show security attributes

PID start at this PID; default is 1 (init)

USER show only trees rooted at processes of this user

pstree

pstree -a

pstree -p

pstree -apn

pstree -apnT

pstree -apnTZ

pstree -apnTZ | grep -v unconfined
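
The parent/child chain that `pstree` draws can also be followed by hand through `/proc`. This is a minimal sketch, assuming a Linux `/proc` with the `comm` and `status` files; `ancestry` is a hypothetical helper name.

```shell
# Walk from a PID up through its parents, using only /proc.
# ancestry is a hypothetical helper; it prints one "name(pid)" per line.
ancestry() {
    pid=${1:-$$}
    while [ "$pid" -gt 0 ] 2>/dev/null; do
        name=$(cat "/proc/$pid/comm" 2>/dev/null) || break
        printf '%s(%s)\n' "$name" "$pid"
        # PPid in /proc/<pid>/status is the parent PID; it is 0 for PID 1.
        pid=$(awk '/^PPid:/ {print $2}' "/proc/$pid/status" 2>/dev/null)
    done
}

ancestry "$$"
```

The first line is the current shell and the last line is usually PID 1, which matches the root of the `pstree -p` output.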

# Show live process information

top -d 1

htop

# Find a specific process

pgrep -h

Usage:

pgrep [options] <pattern>

Options:

-d, --delimiter <string> specify output delimiter

-l, --list-name list PID and process name

-a, --list-full list PID and full command line

-v, --inverse negates the matching

-w, --lightweight list all TID

-c, --count count of matching processes

-f, --full use full process name to match

-g, --pgroup <PGID,...> match listed process group IDs

-G, --group <GID,...> match real group IDs

-i, --ignore-case match case insensitively

-n, --newest select most recently started

-o, --oldest select least recently started

-O, --older <seconds> select where older than seconds

-P, --parent <PPID,...> match only child processes of the given parent

-s, --session <SID,...> match session IDs

-t, --terminal <tty,...> match by controlling terminal

-u, --euid <ID,...> match by effective IDs

-U, --uid <ID,...> match by real IDs

-x, --exact match exactly with the command name

-F, --pidfile <file> read PIDs from file

-L, --logpidfile fail if PID file is not locked

-r, --runstates <state> match runstates [D,S,Z,...]

--ns <PID> match the processes that belong to the same

namespace as <pid>

--nslist <ns,...> list which namespaces will be considered for

the --ns option.

Available namespaces: ipc, mnt, net, pid, user, uts

-h, --help display this help and exit

-V, --version output version information and exit

For more details see pgrep(1).

# [Terminal 1]

sleep 10000

# [Terminal 2]

pgrep sleep

pgrep sleep -u root

pgrep sleep -u ubuntu

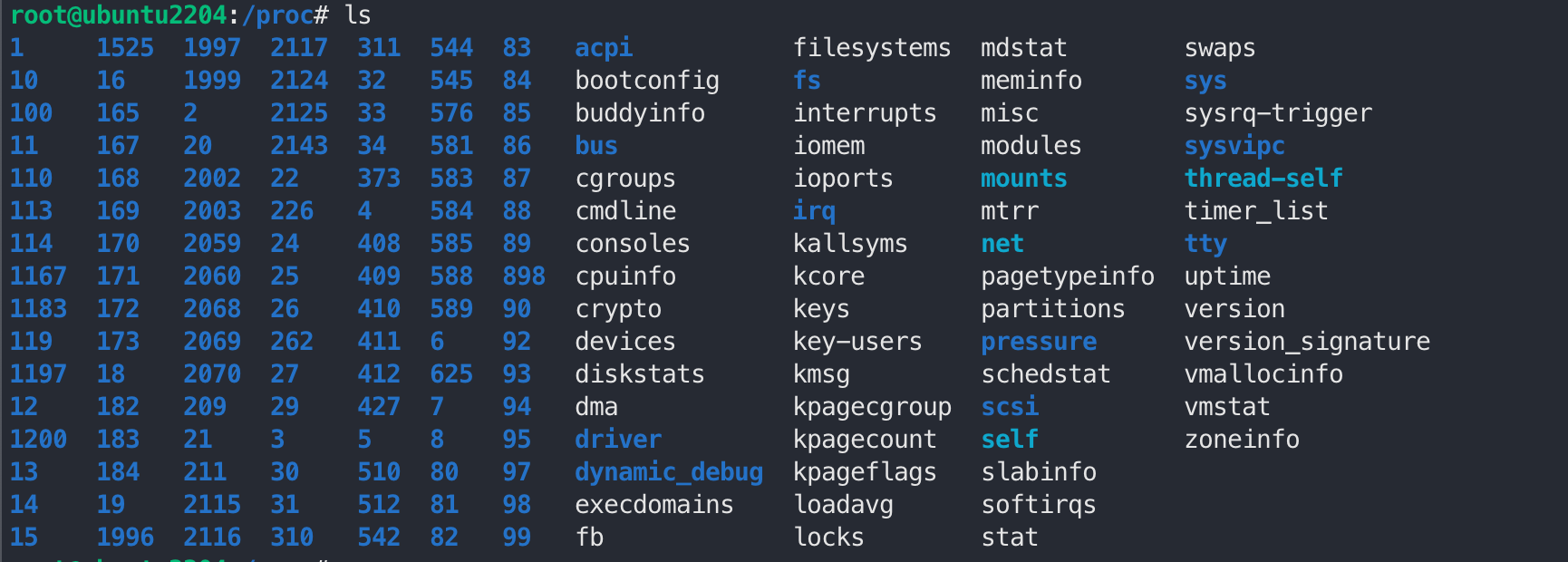

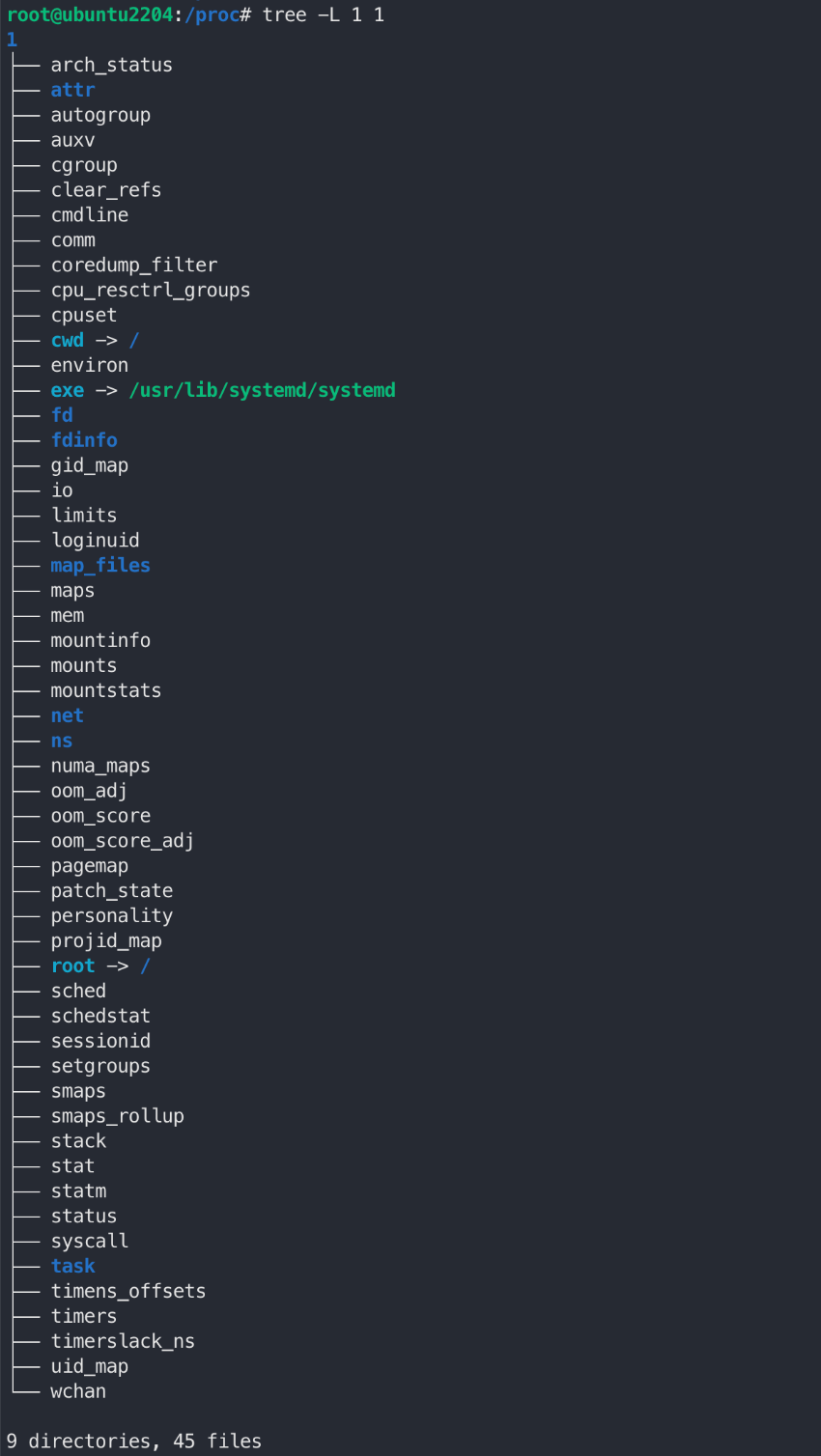

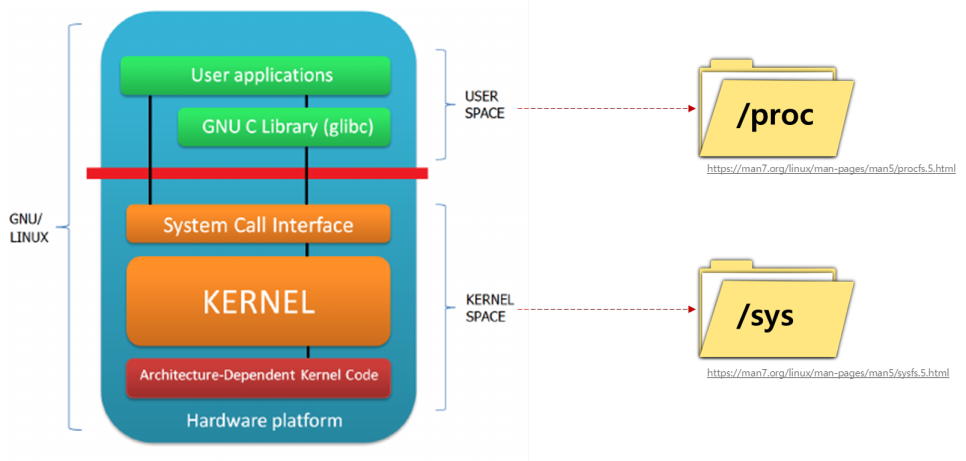

The /proc directory in Linux exposes information that the kernel generates dynamically, in real time: system state, per-process data (/proc/[PID]), and hardware information.

- /proc/cpuinfo: Information about the CPU, such as the model, number of cores, and clock speed.

- /proc/meminfo: Memory usage, including total, used, available, and cached memory.

- /proc/uptime: Time elapsed since boot, in seconds. The first number is the total uptime and the second is the system's idle time.

- /proc/loadavg: The current system load. The first three numbers are the load averages over 1, 5, and 15 minutes, the fourth field is the number of currently running processes over the total number of processes, and the last number is the PID of the most recently created process.

- /proc/version: Kernel build information such as the kernel version, GCC version, and build date.

- /proc/filesystems: The list of filesystems the kernel recognizes.

- /proc/partitions: The partitions the system has detected, with the disk devices and partition sizes.

- Per-process information (/proc/[PID])

  - /proc/[PID]/cmdline: The command and arguments used to start the process.

  - /proc/[PID]/cwd: A symbolic link to the process's current working directory. `ls -l` shows which directory the process is working in.

  - /proc/[PID]/environ: The process's environment variables, separated by NULL characters.

  - /proc/[PID]/exe: A symbolic link to the executable file the process is running.

  - /proc/[PID]/fd: A directory of symbolic links, one per open file descriptor, each pointing to the actual file, socket, or other object.

  - /proc/[PID]/maps: The process's memory map: start and end addresses of each region, access permissions, and mapped files.

  - /proc/[PID]/stat: Process status in a single machine-readable line, including state, CPU time, memory usage, parent PID, and priority.

  - /proc/[PID]/status: The process status in a human-readable form, including PID, PPID (parent PID), memory usage, and thread count.
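
Because these files are plain text, a shell `read` is enough to split them into fields. The sketch below only illustrates the field layout described above; the variable names are my own.

```shell
# /proc/uptime: "<uptime seconds> <idle seconds>"
read -r up idle < /proc/uptime

# /proc/loadavg: "<1m> <5m> <15m> <running>/<total> <last pid>"
read -r load1 load5 load15 tasks last_pid < /proc/loadavg

echo "uptime: ${up}s (idle ${idle}s)"
echo "load:   $load1 $load5 $load15"
echo "tasks:  ${tasks%/*} running of ${tasks#*/}, last PID $last_pid"
```

Running it twice a few seconds apart shows the uptime value increasing, which is a quick way to see that the kernel generates the content at read time.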

#

mount -t proc

findmnt /proc

TARGET SOURCE FSTYPE OPTIONS

/proc proc proc rw,nosuid,nodev,noexec,relatime

#

ls /proc

tree /proc -L 1

tree /proc -L 1 | more

# Information generated dynamically by the kernel

cat /proc/cpuinfo

cat /proc/meminfo

cat /proc/uptime

cat /proc/loadavg

cat /proc/version

cat /proc/filesystems

cat /proc/partitions

# Real-time (continuously updated) information

cat /proc/uptime

cat /proc/uptime

cat /proc/uptime

# Per-process information

ls /proc > 1.txt

# [Terminal 1]

sleep 10000

# [Terminal 2]

## Per-process information

ls /proc > 2.txt

ls /proc

diff 1.txt 2.txt

pstree -p

ps -C sleep

pgrep sleep

## Inspect the sleep process's directory

tree /proc/$(pgrep sleep) -L 1

tree /proc/$(pgrep sleep) -L 2 | more

## Check the command that started the process

cat /proc/$(pgrep sleep)/cmdline ; echo

## Check the process's current working directory

ls -l /proc/$(pgrep sleep)/cwd

## List the file descriptors the process has open

ls -l /proc/$(pgrep sleep)/fd

## Check the process's environment variables

cat /proc/$(pgrep sleep)/environ ; echo

cat /proc/$(pgrep sleep)/environ | tr '\000' '\n'

## Check the process's memory map

cat /proc/$(pgrep sleep)/maps

## Check the process's resource usage

cat /proc/$(pgrep sleep)/status
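
The checks above can be collected into one short script. This is a sketch that starts its own `sleep` in the background so the PID is known through `$!` rather than `pgrep`; the variable names are illustrative only.

```shell
# Start a throwaway process so its PID is known without pgrep.
sleep 300 &
pid=$!

# cmdline is NUL-separated; turn the separators into spaces for display.
cmd=$(tr '\000' ' ' < "/proc/$pid/cmdline")
# cwd is a symbolic link, so readlink resolves it.
cwd=$(readlink "/proc/$pid/cwd")
# status is "Key:<tab>value" text, which awk can pick apart.
ppid=$(awk '/^PPid:/ {print $2}' "/proc/$pid/status")

echo "pid=$pid ppid=$ppid cwd=$cwd cmd=$cmd"

# Clean up the background process.
kill "$pid"
wait "$pid" 2>/dev/null || true
```

The working directory reported for the child is the directory the script was started from, since a child process inherits it from its parent.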

## Other information

ls -l /proc/$(pgrep sleep)/exe

cat /proc/$(pgrep sleep)/stat

4. Process isolation

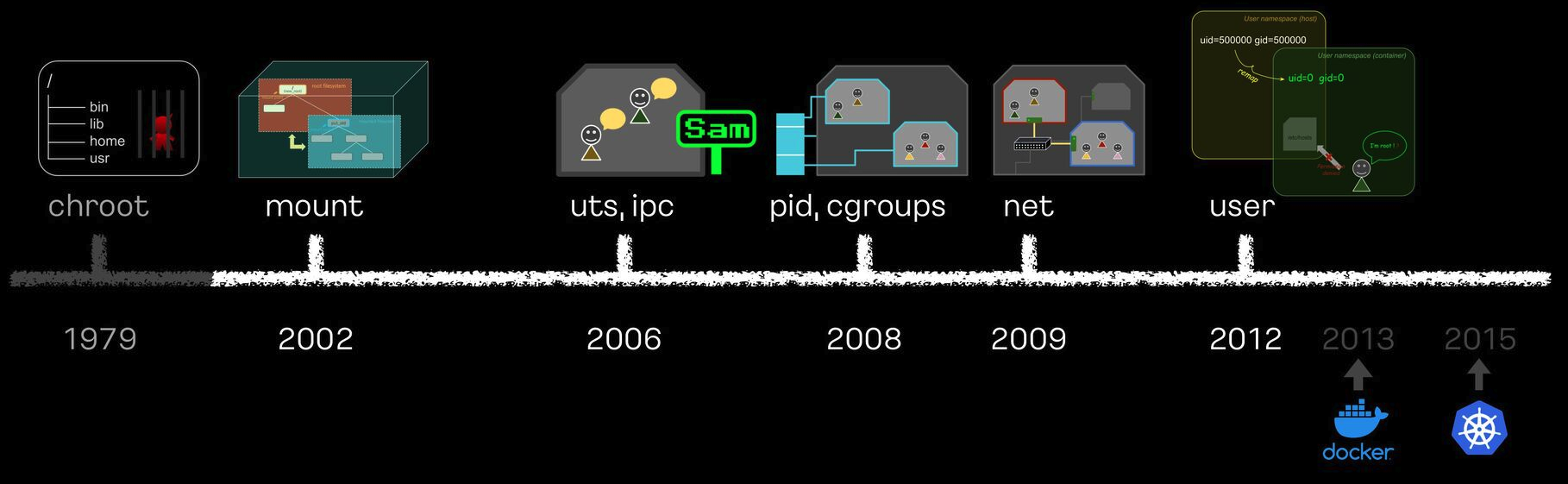

Evolution of Linux process isolation techniques

chroot

chroot: change root directory

- Presents a user directory to a user process as if it were the root directory

# [Terminal 1] Switch to root

vagrant ssh

Last login: Sat Aug 31 16:14:21 2024 from 10.0.2.2

vagrant@ubuntu2204:~$ sudo su -

root@ubuntu2204:~# whoami

root

root@ubuntu2204:~# cd /tmp

root@ubuntu2204:/tmp# mkdir myroot

# chroot usage: [OPTION] NEWROOT [COMMAND]

root@ubuntu2204:/tmp# chroot myroot /bin/sh

chroot: failed to run command ‘/bin/sh’: No such file or directory

root@ubuntu2204:/tmp# tree myroot

myroot

root@ubuntu2204:/tmp# which sh

/usr/bin/sh

root@ubuntu2204:/tmp# ldd /bin/sh

linux-vdso.so.1 (0x00007ffe37b9d000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f41d2ed9000)

/lib64/ld-linux-x86-64.so.2 (0x00007f41d312c000)

# Copy the binary and its library files

root@ubuntu2204:/tmp# mkdir -p myroot/bin

root@ubuntu2204:/tmp# cp /usr/bin/sh myroot/bin/

root@ubuntu2204:/tmp# mkdir -p myroot/{lib64,lib/x86_64-linux-gnu}

root@ubuntu2204:/tmp# tree myroot

myroot

├── bin

│ └── sh

├── lib

│ └── x86_64-linux-gnu

└── lib64

root@ubuntu2204:/tmp# cp /lib/x86_64-linux-gnu/libc.so.6 myroot/lib/x86_64-linux-gnu/

root@ubuntu2204:/tmp# cp /lib64/ld-linux-x86-64.so.2 myroot/lib64

root@ubuntu2204:/tmp# tree myroot/

myroot/

├── bin

│ └── sh

├── lib

│ └── x86_64-linux-gnu

│ └── libc.so.6

└── lib64

└── ld-linux-x86-64.so.2

root@ubuntu2204:/tmp# chroot myroot /bin/sh

# ls

/bin/sh: 4: ls: not found

# exit

--------------------

root@ubuntu2204:/tmp# which ls

/usr/bin/ls

root@ubuntu2204:/tmp# ldd /usr/bin/ls

linux-vdso.so.1 (0x00007ffd55df5000)

libselinux.so.1 => /lib/x86_64-linux-gnu/libselinux.so.1 (0x00007f2ca0d4e000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f2ca0b25000)

libpcre2-8.so.0 => /lib/x86_64-linux-gnu/libpcre2-8.so.0 (0x00007f2ca0a8e000)

/lib64/ld-linux-x86-64.so.2 (0x00007f2ca0da6000)

root@ubuntu2204:/tmp# cp /usr/bin/ls myroot/bin/

root@ubuntu2204:/tmp# mkdir -p myroot/bin/

root@ubuntu2204:/tmp# cp /lib/x86_64-linux-gnu/{libselinux.so.1,libc.so.6,libpcre2-8.so.0} myroot/lib/x86_64-linux-gnu/

root@ubuntu2204:/tmp# cp /lib64/ld-linux-x86-64.so.2 myroot/lib64

root@ubuntu2204:/tmp# tree myroot

myroot

├── bin

│ ├── ls

│ └── sh

├── lib

│ └── x86_64-linux-gnu

│ ├── libc.so.6

│ ├── libpcre2-8.so.0

│ └── libselinux.so.1

└── lib64

└── ld-linux-x86-64.so.2

4 directories, 6 files

root@ubuntu2204:/tmp# chroot myroot /bin/sh

# ls /

bin lib lib64

## Try to escape

# cd ../../../

# ls /

bin lib lib64

# Compare with terminal 2 below, then exit

exit

--------------------

# chroot in short: gather the files under one path (packaging), then run confined to that path (isolation)
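
The "packaging" half of this summary, finding a binary's libraries with `ldd` and copying them to the same paths under the new root, is repetitive enough to script. The following is a sketch under a few assumptions: `copy_with_libs` is a hypothetical helper, the binary is passed as an absolute path, and `ldd` prints library paths in the form seen above.

```shell
# Copy a binary plus every shared library ldd reports into NEWROOT,
# keeping the original absolute paths. copy_with_libs is a hypothetical helper.
copy_with_libs() {
    bin=$1
    newroot=$2
    mkdir -p "$newroot$(dirname "$bin")"
    cp "$bin" "$newroot$bin"
    # Library locations are the absolute paths in ldd's output;
    # linux-vdso has no path and is skipped automatically.
    ldd "$bin" 2>/dev/null | grep -o '/[^ ]*' | while read -r lib; do
        mkdir -p "$newroot$(dirname "$lib")"
        cp "$lib" "$newroot$lib"
    done
}

demo_root=$(mktemp -d)
copy_with_libs /bin/sh "$demo_root"
find "$demo_root" -type f

# As root, the result could then be entered with: chroot "$demo_root" /bin/sh
```

Copying the files needs no special privileges; only the final `chroot` call requires root, which is why it is left as a comment here.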

# [Terminal 2]

# Compare the host directories with terminal 1, where chroot is running

vagrant@ubuntu2204:~$ ls /

bin dev home lib32 libx32 media opt root sbin srv sys usr

boot etc lib lib64 lost+found mnt proc run snap swap.img tmp var

Running ps inside the chroot

# copy ps

root@ubuntu2204:/tmp# ldd /usr/bin/ps;

linux-vdso.so.1 (0x00007ffe4c149000)

libprocps.so.8 => /lib/x86_64-linux-gnu/libprocps.so.8 (0x00007fd316125000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007fd315efc000)

libsystemd.so.0 => /lib/x86_64-linux-gnu/libsystemd.so.0 (0x00007fd315e35000)

/lib64/ld-linux-x86-64.so.2 (0x00007fd3161aa000)

liblzma.so.5 => /lib/x86_64-linux-gnu/liblzma.so.5 (0x00007fd315e0a000)

libzstd.so.1 => /lib/x86_64-linux-gnu/libzstd.so.1 (0x00007fd315d3b000)

liblz4.so.1 => /lib/x86_64-linux-gnu/liblz4.so.1 (0x00007fd315d19000)

libcap.so.2 => /lib/x86_64-linux-gnu/libcap.so.2 (0x00007fd315d0e000)

libgcrypt.so.20 => /lib/x86_64-linux-gnu/libgcrypt.so.20 (0x00007fd315bd0000)

libgpg-error.so.0 => /lib/x86_64-linux-gnu/libgpg-error.so.0 (0x00007fd315baa000)

root@ubuntu2204:/tmp# cp /usr/bin/ps /tmp/myroot/bin/;

root@ubuntu2204:/tmp# cp /lib/x86_64-linux-gnu/{libprocps.so.8,libc.so.6,libsystemd.so.0,liblzma.so.5,libgcrypt.so.20,libgpg-error.so.0,libzstd.so.1,libcap.so.2} /tmp/myroot/lib/x86_64-linux-gnu/;

root@ubuntu2204:/tmp# mkdir -p /tmp/myroot/usr/lib/x86_64-linux-gnu;

root@ubuntu2204:/tmp# cp /usr/lib/x86_64-linux-gnu/liblz4.so.1 /tmp/myroot/usr/lib/x86_64-linux-gnu/;

root@ubuntu2204:/tmp# cp /lib64/ld-linux-x86-64.so.2 /tmp/myroot/lib64/;

# copy mount

root@ubuntu2204:/tmp# ldd /usr/bin/mount;

linux-vdso.so.1 (0x00007ffefbfb2000)

libmount.so.1 => /lib/x86_64-linux-gnu/libmount.so.1 (0x00007f211e361000)

libselinux.so.1 => /lib/x86_64-linux-gnu/libselinux.so.1 (0x00007f211e335000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007f211e10c000)

libblkid.so.1 => /lib/x86_64-linux-gnu/libblkid.so.1 (0x00007f211e0d5000)

libpcre2-8.so.0 => /lib/x86_64-linux-gnu/libpcre2-8.so.0 (0x00007f211e03e000)

/lib64/ld-linux-x86-64.so.2 (0x00007f211e3ba000)

root@ubuntu2204:/tmp# cp /usr/bin/mount /tmp/myroot/bin/;

root@ubuntu2204:/tmp# cp /lib/x86_64-linux-gnu/{libmount.so.1,libc.so.6,libblkid.so.1,libselinux.so.1,libpcre2-8.so.0} /tmp/myroot/lib/x86_64-linux-gnu/;

root@ubuntu2204:/tmp# cp /lib64/ld-linux-x86-64.so.2 /tmp/myroot/lib64/;

# copy mkdir

root@ubuntu2204:/tmp# ldd /usr/bin/mkdir;

linux-vdso.so.1 (0x00007ffc68700000)

libselinux.so.1 => /lib/x86_64-linux-gnu/libselinux.so.1 (0x00007fb5f2f5a000)

libc.so.6 => /lib/x86_64-linux-gnu/libc.so.6 (0x00007fb5f2d31000)

libpcre2-8.so.0 => /lib/x86_64-linux-gnu/libpcre2-8.so.0 (0x00007fb5f2c9a000)

/lib64/ld-linux-x86-64.so.2 (0x00007fb5f2fa0000)

root@ubuntu2204:/tmp# cp /usr/bin/mkdir /tmp/myroot/bin/;

root@ubuntu2204:/tmp# cp /lib/x86_64-linux-gnu/{libselinux.so.1,libc.so.6,libpcre2-8.so.0} /tmp/myroot/lib/x86_64-linux-gnu/;

root@ubuntu2204:/tmp# cp /lib64/ld-linux-x86-64.so.2 /tmp/myroot/lib64/;

# Check with tree

myroot

├── bin

│ ├── ls

│ ├── mkdir

│ ├── mount

│ ├── ps

│ └── sh

├── lib

│ └── x86_64-linux-gnu

│ ├── libblkid.so.1

│ ├── libcap.so.2

│ ├── libc.so.6

│ ├── libgcrypt.so.20

│ ├── libgpg-error.so.0

│ ├── liblzma.so.5

│ ├── libmount.so.1

│ ├── libpcre2-8.so.0

│ ├── libprocps.so.8

│ ├── libselinux.so.1

│ ├── libsystemd.so.0

│ └── libzstd.so.1

├── lib64

│ └── ld-linux-x86-64.so.2

└── usr

└── lib

└── x86_64-linux-gnu

└── liblz4.so.1

7 directories, 19 files

root@ubuntu2204:/tmp# chroot myroot /bin/sh

# ps

/bin/sh: 1: ps: not found

---------------------

# Why doesn't ps work?

Error, do this: mount -t proc proc /proc

# mount -t proc proc /proc

mount: /proc: mount point does not exist.

# mkdir /proc

# mount -t proc proc /proc

# mount -t proc

proc on /proc type proc (rw,relatime)

# ps relies on the real-time information in /proc

# ps

PID TTY TIME CMD

2110 ? 00:00:00 sudo

2111 ? 00:00:00 bash

2149 ? 00:00:00 sh

2159 ? 00:00:00 ps
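
To see why `ps` depends on `/proc`, here is a toy version that does nothing except walk the numeric directories there. It is a sketch for illustration only: `mini_ps` is a made-up name, and real `ps` reads many more fields.

```shell
# A toy ps: every numeric directory under /proc is a PID,
# and its comm file holds the command name.
mini_ps() {
    printf '%5s %s\n' PID CMD
    for d in /proc/[0-9]*; do
        [ -r "$d/comm" ] || continue
        printf '%5s %s\n' "${d#/proc/}" "$(cat "$d/comm" 2>/dev/null)"
    done
}

mini_ps
```

Without a mounted `/proc` the glob matches nothing and only the header is printed, which mirrors the situation `ps` complained about inside the chroot.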

# ps auf

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

1000 1915 0.0 0.1 8792 5400 ? Ss+ 11:01 0:00 -bash

1000 1827 0.0 0.1 8792 5452 ? Ss+ 11:01 0:00 -bash

1000 1826 0.0 0.1 8792 5520 ? Ss 11:01 0:00 -bash

0 2109 0.1 0.1 11496 5624 ? S+ 11:29 0:00 \_ sudo -Es

0 2110 0.0 0.0 11496 880 ? Ss 11:29 0:00 \_ sudo -Es

0 2111 0.0 0.1 8796 5436 ? S 11:29 0:00 \_ /bin/bash

0 2149 0.0 0.0 2892 288 ? S 11:34 0:00 \_ /bin/sh

0 2166 0.0 0.0 7064 2464 ? R+ 11:40 0:00 \_ ps auf

0 801 0.0 0.0 6176 1124 ? Ss+ 10:27 0:00 /sbin/agetty -o -p -- \u --nocl

# ps aux

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

0 1 0.1 0.2 100908 11812 ? Ss 10:25 0:08 /sbin/init =

0 2 0.0 0.0 0 0 ? S 10:25 0:00 [kthreadd]

0 3 0.0 0.0 0 0 ? I< 10:25 0:00 [rcu_gp]

0 4 0.0 0.0 0 0 ? I< 10:25 0:00 [rcu_par_gp]

0 5 0.0 0.0 0 0 ? I< 10:25 0:00 [slub_flushwq]

0 6 0.0 0.0 0 0 ? I< 10:25 0:00 [netns]

0 8 0.0 0.0 0 0 ? I< 10:25 0:00 [kworker/0:0H-events_highpri]

...

...

0 2149 0.0 0.0 2892 288 ? S 11:34 0:00 /bin/sh

0 2160 0.0 0.0 7064 2460 ? R+ 11:37 0:00 ps aux

# ls -l /proc

total 0

dr-xr-xr-x 9 0 0 0 Aug 31 11:37 1

...

dr-xr-xr-x 9 0 0 0 Aug 31 11:37 99

dr-xr-xr-x 3 0 0 0 Aug 31 11:38 acpi

-r--r--r-- 1 0 0 0 Aug 31 11:38 bootconfig

-r--r--r-- 1 0 0 0 Aug 31 11:38 buddyinfo

dr-xr-xr-x 4 0 0 0 Aug 31 11:38 bus

-r--r--r-- 1 0 0 0 Aug 31 11:38 cgroups

-r--r--r-- 1 0 0 0 Aug 31 11:38 cmdline

-r--r--r-- 1 0 0 0 Aug 31 11:38 consoles

-r--r--r-- 1 0 0 0 Aug 31 11:38 cpuinfo

-r--r--r-- 1 0 0 0 Aug 31 11:38 crypto

-r--r--r-- 1 0 0 0 Aug 31 11:38 devices

-r--r--r-- 1 0 0 0 Aug 31 11:38 diskstats

-r--r--r-- 1 0 0 0 Aug 31 11:38 dma

dr-xr-xr-x 3 0 0 0 Aug 31 11:38 driver

dr-xr-xr-x 3 0 0 0 Aug 31 11:38 dynamic_debug

-r--r--r-- 1 0 0 0 Aug 31 11:38 execdomains

-r--r--r-- 1 0 0 0 Aug 31 11:38 fb

-r--r--r-- 1 0 0 0 Aug 31 11:37 filesystems

dr-xr-xr-x 5 0 0 0 Aug 31 11:38 fs

-r--r--r-- 1 0 0 0 Aug 31 11:38 interrupts

-r--r--r-- 1 0 0 0 Aug 31 11:38 iomem

-r--r--r-- 1 0 0 0 Aug 31 11:38 ioports

dr-xr-xr-x 27 0 0 0 Aug 31 11:38 irq

-r--r--r-- 1 0 0 0 Aug 31 11:38 kallsyms

-r-------- 1 0 0 72057594021167104 Aug 31 11:38 kcore

-r--r--r-- 1 0 0 0 Aug 31 11:38 key-users

-r--r--r-- 1 0 0 0 Aug 31 11:38 keys

-r-------- 1 0 0 0 Aug 31 11:38 kmsg

-r-------- 1 0 0 0 Aug 31 11:38 kpagecgroup

-r-------- 1 0 0 0 Aug 31 11:38 kpagecount

-r-------- 1 0 0 0 Aug 31 11:38 kpageflags

-r--r--r-- 1 0 0 0 Aug 31 11:38 loadavg

-r--r--r-- 1 0 0 0 Aug 31 11:38 locks

-r--r--r-- 1 0 0 0 Aug 31 11:38 mdstat

-r--r--r-- 1 0 0 0 Aug 31 11:37 meminfo

-r--r--r-- 1 0 0 0 Aug 31 11:38 misc

-r--r--r-- 1 0 0 0 Aug 31 11:38 modules

lrwxrwxrwx 1 0 0 11 Aug 31 11:38 mounts -> self/mounts

-rw-r--r-- 1 0 0 0 Aug 31 11:38 mtrr

lrwxrwxrwx 1 0 0 8 Aug 31 11:38 net -> self/net

-r-------- 1 0 0 0 Aug 31 11:38 pagetypeinfo

-r--r--r-- 1 0 0 0 Aug 31 11:38 partitions

dr-xr-xr-x 5 0 0 0 Aug 31 11:38 pressure

-r--r--r-- 1 0 0 0 Aug 31 11:38 schedstat

dr-xr-xr-x 5 0 0 0 Aug 31 11:38 scsi

lrwxrwxrwx 1 0 0 0 Aug 31 11:37 self -> 2161

-r-------- 1 0 0 0 Aug 31 11:38 slabinfo

-r--r--r-- 1 0 0 0 Aug 31 11:38 softirqs

-r--r--r-- 1 0 0 0 Aug 31 11:37 stat

-r--r--r-- 1 0 0 0 Aug 31 11:38 swaps

dr-xr-xr-x 1 0 0 0 Aug 31 11:37 sys

--w------- 1 0 0 0 Aug 31 11:38 sysrq-trigger

dr-xr-xr-x 5 0 0 0 Aug 31 11:38 sysvipc

lrwxrwxrwx 1 0 0 0 Aug 31 11:37 thread-self -> 2161/task/2161

-r-------- 1 0 0 0 Aug 31 11:38 timer_list

dr-xr-xr-x 6 0 0 0 Aug 31 11:37 tty

-r--r--r-- 1 0 0 0 Aug 31 11:37 uptime

-r--r--r-- 1 0 0 0 Aug 31 11:38 version

-r--r--r-- 1 0 0 0 Aug 31 11:38 version_signature

-r-------- 1 0 0 0 Aug 31 11:38 vmallocinfo

-r--r--r-- 1 0 0 0 Aug 31 11:38 vmstat

-r--r--r-- 1 0 0 0 Aug 31 11:38 zoneinfo

exit

---------------------

# Remove the proc mount used in this exercise

root@ubuntu2204:/tmp# mount -t proc

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

proc on /tmp/myroot/proc type proc (rw,relatime)

root@ubuntu2204:/tmp#

root@ubuntu2204:/tmp# umount /tmp/myroot/proc

root@ubuntu2204:/tmp# mount -t proc

proc on /proc type proc (rw,nosuid,nodev,noexec,relatime)

Trying chroot with an image someone else built

- A container image packages together all the files the process needs in order to run

root@ubuntu2204:/tmp# mkdir nginx-root

root@ubuntu2204:/tmp# tree nginx-root

nginx-root

0 directories, 0 files

# Download the nginx container image as an archive and extract it

root@ubuntu2204:/tmp# docker export $(docker create nginx) | tar -C nginx-root -xvf -;

Unable to find image 'nginx:latest' locally

latest: Pulling from library/nginx

e4fff0779e6d: Pull complete

2a0cb278fd9f: Pull complete

7045d6c32ae2: Pull complete

03de31afb035: Pull complete

0f17be8dcff2: Pull complete

14b7e5e8f394: Pull complete

23fa5a7b99a6: Pull complete

Digest: sha256:447a8665cc1dab95b1ca778e162215839ccbb9189104c79d7ec3a81e14577add

Status: Downloaded newer image for nginx:latest

.dockerenv

bin

boot/

dev/

dev/console

dev/pts/

dev/shm/

docker-entrypoint.d/

docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

docker-entrypoint.d/15-local-resolvers.envsh

...

var/mail/

var/opt/

var/run

var/spool/

var/spool/mail

var/tmp/

root@ubuntu2204:/tmp# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx alpine 0f0eda053dc5 2 weeks ago 43.3MB

nginx latest 5ef79149e0ec 2 weeks ago 188MB

#

root@ubuntu2204:/tmp# tree -L 1 nginx-root

nginx-root

├── bin -> usr/bin

├── boot

├── dev

├── docker-entrypoint.d

├── docker-entrypoint.sh

├── etc

├── home

├── lib -> usr/lib

├── lib64 -> usr/lib64

├── media

├── mnt

├── opt

├── proc

├── root

├── run

├── sbin -> usr/sbin

├── srv

├── sys

├── tmp

├── usr

└── var

20 directories, 1 file

root@ubuntu2204:/tmp# tree -L 2 nginx-root

nginx-root

├── bin -> usr/bin

├── boot

├── dev

│ ├── console

│ ├── pts

│ └── shm

├── docker-entrypoint.d

│ ├── 10-listen-on-ipv6-by-default.sh

│ ├── 15-local-resolvers.envsh

│ ├── 20-envsubst-on-templates.sh

│ └── 30-tune-worker-processes.sh

├── docker-entrypoint.sh

├── etc

│ ├── adduser.conf

│ ├── alternatives

│ ├── apt

│ ├── bash.bashrc

│ ├── bindresvport.blacklist

│ ├── ca-certificates

│ ├── ca-certificates.conf

│ ├── cron.d

│ ├── cron.daily

│ ├── debconf.conf

│ ├── debian_version

│ ├── default

│ ├── deluser.conf

│ ├── dpkg

│ ├── e2scrub.conf

│ ├── environment

│ ├── fonts

│ ├── fstab

│ ├── gai.conf

│ ├── group

│ ├── group-

│ ├── gshadow

│ ├── gshadow-

│ ├── gss

│ ├── host.conf

│ ├── hostname

│ ├── hosts

│ ├── init.d

│ ├── issue

│ ├── issue.net

│ ├── kernel

│ ├── ld.so.cache

│ ├── ld.so.conf

│ ├── ld.so.conf.d

│ ├── libaudit.conf

│ ├── localtime -> /usr/share/zoneinfo/Etc/UTC

│ ├── login.defs

│ ├── logrotate.d

│ ├── mke2fs.conf

│ ├── motd

│ ├── mtab -> /proc/mounts

│ ├── nginx

│ ├── nsswitch.conf

│ ├── opt

│ ├── os-release -> ../usr/lib/os-release

│ ├── pam.conf

│ ├── pam.d

│ ├── passwd

│ ├── passwd-

│ ├── profile

│ ├── profile.d

│ ├── rc0.d

│ ├── rc1.d

│ ├── rc2.d

│ ├── rc3.d

│ ├── rc4.d

│ ├── rc5.d

│ ├── rc6.d

│ ├── rcS.d

│ ├── resolv.conf

│ ├── rmt -> /usr/sbin/rmt

│ ├── security

│ ├── selinux

│ ├── shadow

│ ├── shadow-

│ ├── shells

│ ├── skel

│ ├── ssl

│ ├── subgid

│ ├── subuid

│ ├── systemd

│ ├── terminfo

│ ├── timezone

│ ├── update-motd.d

│ └── xattr.conf

├── home

├── lib -> usr/lib

├── lib64 -> usr/lib64

├── media

├── mnt

├── opt

├── proc

├── root

├── run

│ └── lock

├── sbin -> usr/sbin

├── srv

├── sys

├── tmp

├── usr

│ ├── bin

│ ├── games

│ ├── include

│ ├── lib

│ ├── lib64

│ ├── libexec

│ ├── local

│ ├── sbin

│ ├── share

│ └── src

└── var

├── backups

├── cache

├── lib

├── local

├── lock -> /run/lock

├── log

├── mail

├── opt

├── run -> /run

├── spool

└── tmp

76 directories, 49 files

root@ubuntu2204:/tmp# chroot nginx-root /bin/sh

#---------------------

# ls

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

boot docker-entrypoint.d etc lib media opt root sbin sys usr

# nginx -g "daemon off;"

# After checking from terminal 2 (below), stop nginx here in terminal 1

CTRL +C # stop nginx

exit

---------------------

# [Terminal 2]

## Compare and check the root directory

vagrant@ubuntu2204:~$ ls /

bin dev home lib32 libx32 media opt root sbin srv sys usr

boot etc lib lib64 lost+found mnt proc run snap swap.img tmp var

vagrant@ubuntu2204:~$ ps -ef | grep nginx

root 2243 2238 0 20:50 pts/3 00:00:00 nginx: master process nginx -g daemon off;

systemd+ 2244 2243 0 20:50 pts/3 00:00:00 nginx: worker process

systemd+ 2245 2243 0 20:50 pts/3 00:00:00 nginx: worker process

vagrant 2252 1915 0 20:50 pts/2 00:00:00 grep --color=auto nginx

vagrant@ubuntu2204:~$ curl localhost:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

vagrant@ubuntu2204:~$ sudo ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 511 0.0.0.0:80 0.0.0.0:* users:(("nginx",pid=2245,fd=6),("nginx",pid=2244,fd=6),("nginx",pid=2243,fd=6))

LISTEN 0 4096 0.0.0.0:50000 0.0.0.0:* users:(("docker-proxy",pid=1147,fd=4))

LISTEN 0 4096 0.0.0.0:8080 0.0.0.0:* users:(("docker-proxy",pid=1105,fd=4))

LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=591,fd=14))

LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=811,fd=3))

LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=811,fd=4))
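
One more check that fits here: every process has a `/proc/<pid>/root` symbolic link that points at the root directory it sees, so a chrooted process can be spotted from the host. This is a small sketch; `proc_root` is a hypothetical helper name.

```shell
# Print the root directory a process sees by resolving /proc/<pid>/root.
# proc_root is a hypothetical helper name.
proc_root() {
    readlink "/proc/$1/root"
}

# For the current process this is normally "/".
proc_root self

# For the chrooted nginx master above (PID 2243 in that session),
# reading the link requires root:
#   sudo readlink /proc/2243/root
```

For the nginx processes started inside the chroot, the link is expected to point at the nginx-root directory instead of `/`.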

vagrant@ubuntu2204:~$

Breaking out of the chroot environment

root@ubuntu2204:/tmp# cat > escape_chroot.c <<-EOF

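#include <sys/stat.h>

#include <unistd.h>

int main(void)

{

    /* chroot into a new subdirectory; the working directory is left outside the new root */

    mkdir(".out", 0755);

    chroot(".out");

    /* walk up past the original jail to the real root, then make it the root */

    chdir("../../../../../");

    chroot(".");

    /* argv[0] is "sh"; "-i" requests an interactive shell */

    return execl("/bin/sh", "sh", "-i", (char *)NULL);

}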

EOF

# Compile the escape code and place the binary in the new root (myroot)

gcc -o myroot/escape_chroot escape_chroot.c

# Compile

root@ubuntu2204:/tmp# gcc -o myroot/escape_chroot escape_chroot.c

root@ubuntu2204:/tmp# tree -L 1 myroot

myroot

├── bin

├── escape_chroot

├── lib

├── lib64

├── proc

└── usr

5 directories, 1 file

root@ubuntu2204:/tmp# file myroot/escape_chroot

myroot/escape_chroot: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=db08d2c6b8345c74424c2e0b0cc711501ac4e440, for GNU/Linux 3.2.0, not stripped

# Run chroot

root@ubuntu2204:/tmp# chroot myroot /bin/sh

# ls /

bin escape_chroot lib lib64 proc usr

# cd ../../

# cd ../../

# ls /

bin escape_chroot lib lib64 proc usr

# Escape!

# ./escape_chroot

# ls /

bin dev home lib32 libx32 media opt root sbin srv sys usr

boot etc lib lib64 lost+found mnt proc run snap swap.img tmp var

# Exit

exit

exit

-----------------------

# [Terminal 2]

## Compare and check the root directory

vagrant@ubuntu2204:~$ ls /

bin dev home lib32 libx32 media opt root sbin srv sys usr

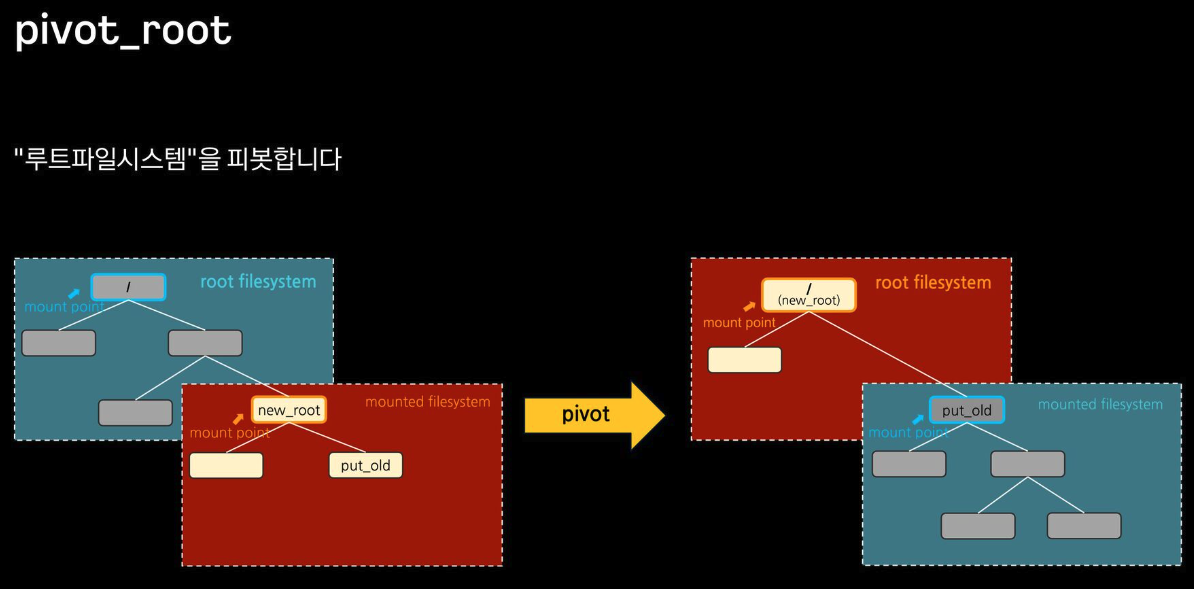

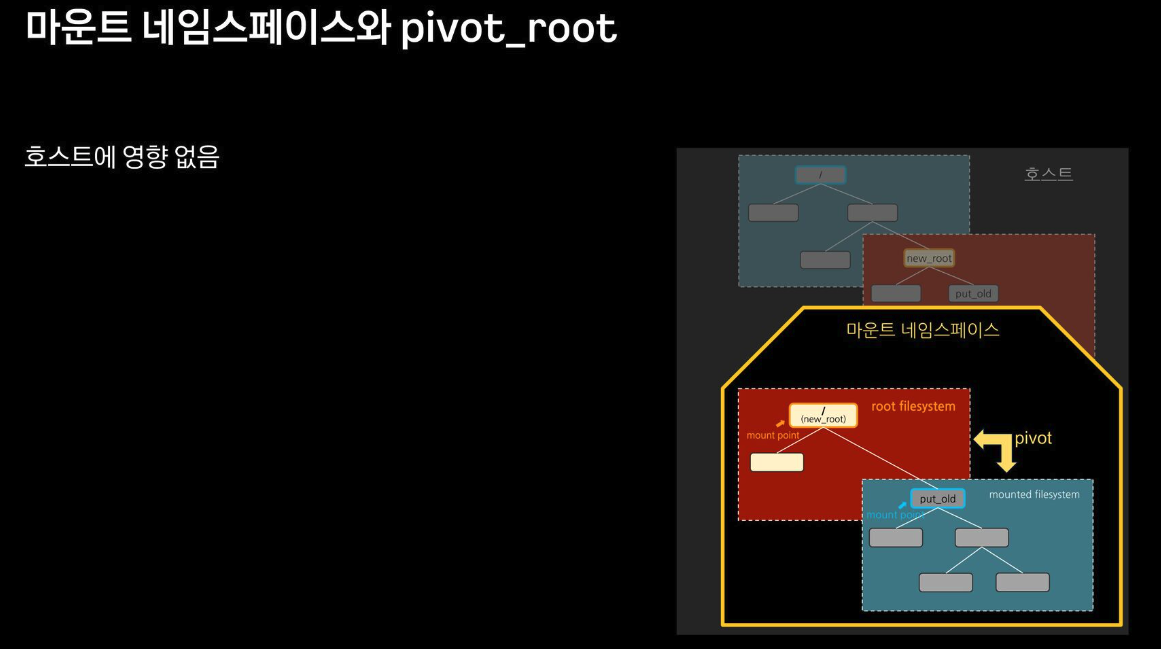

boot etc lib lib64 lost+found mnt proc run snap swap.img tmp varpivot_root

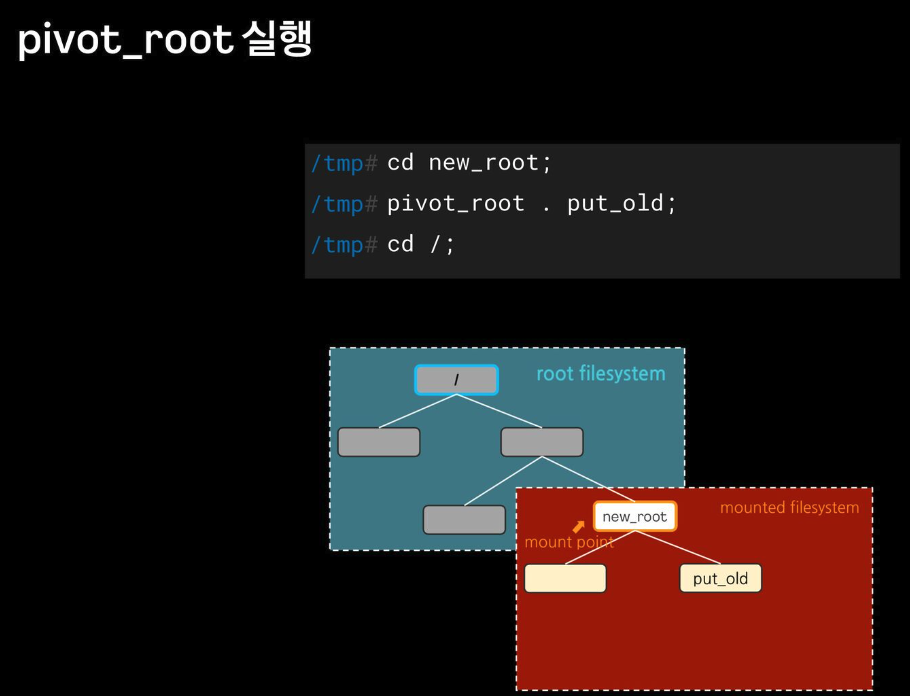

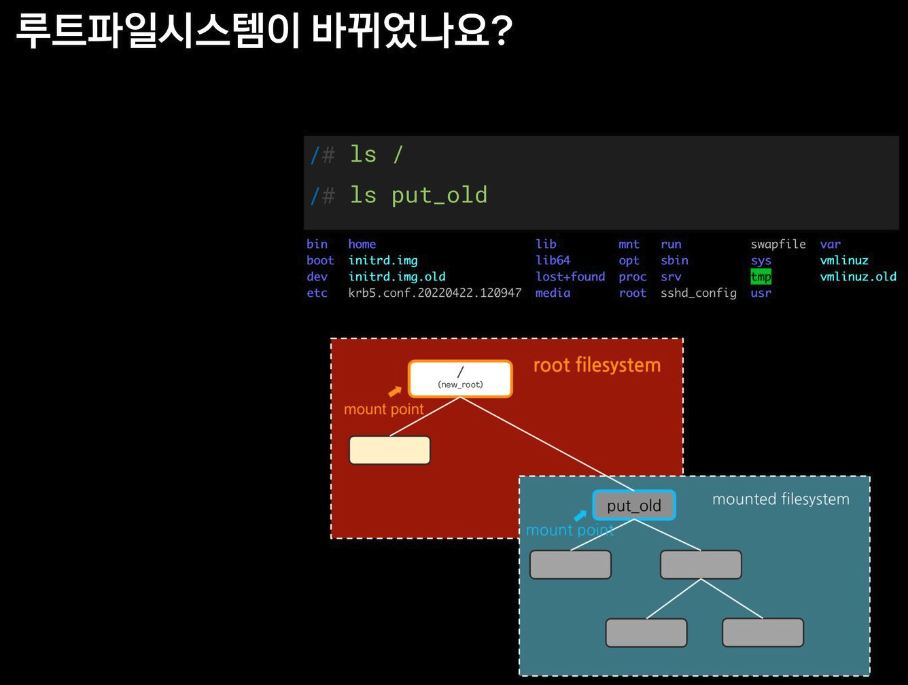

마운트 네임스페이스 + Pivot_root

-

chroot 차단을 위해서, pivot_root + mount ns(호스트 영향 격리) 를 사용 : 루트 파일 시스템을 변경(부착 mount) + 프로세스 환경 격리

-

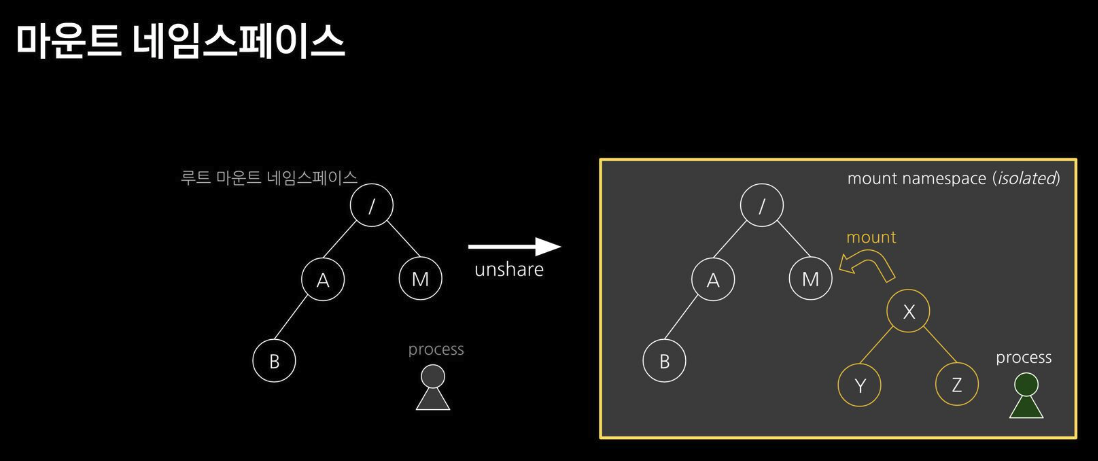

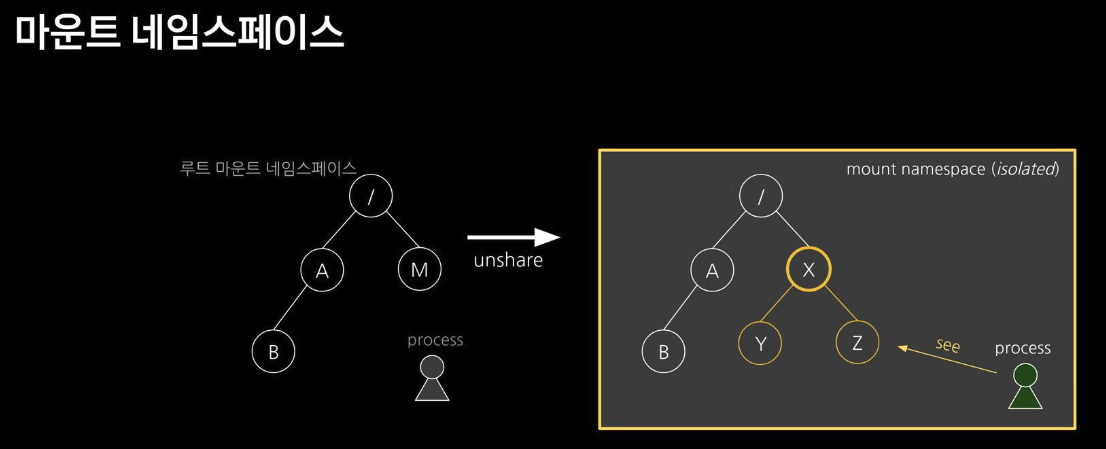

마운트 네임스페이스 : 마운트 포인트를 격리(unshare)

Mount namespace

Key commands

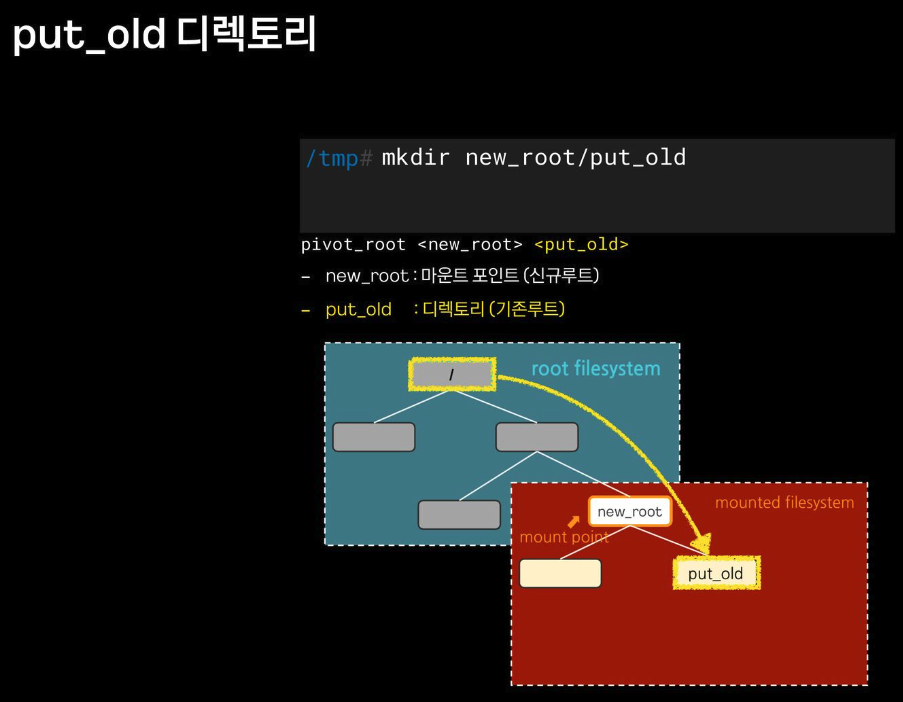

pivot_root

# pivot_root [new-root] [old-root]

## Usage is simple: pass the new-root and old-root paths

mount

# mount -t [filesystem type] [device_name] [directory - mount point]

## Attaches another filesystem to the root filesystem tree

## -t : filesystem type, e.g. -t tmpfs (temporary filesystem, created in memory)

## -o : options, e.g. -o size=1m (size limit and so on)

## Note: the supported filesystem types can be looked up in /proc/filesystems
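
As a small illustration of that last note, the check against `/proc/filesystems` can be wrapped in a function before calling `mount -t`. This is a sketch; `fs_supported` is a name I made up.

```shell
# Return success if the running kernel lists the given filesystem type.
# fs_supported is a hypothetical helper name.
fs_supported() {
    # Each line is "[nodev]<tab>name"; the type name is always the last field.
    awk -v want="$1" '$NF == want { found = 1 } END { exit !found }' /proc/filesystems
}

for fs in tmpfs proc overlay; do
    if fs_supported "$fs"; then
        echo "$fs: supported"
    else
        echo "$fs: not listed"
    fi
done
```

A type that is built as a kernel module may only appear in the list after the module has been loaded, so "not listed" does not always mean "unavailable".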

unshare

# unshare [options] [program [arguments]]

## Runs a program after creating new namespaces

# [Terminal 1]

root@ubuntu2204:/tmp# unshare --mount /bin/sh

-----------------------

# Compare with df -h on the host in terminal 2 below: unshare --mount creates the child namespace from a copy of the parent's mount information, so the two start out identical

# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ubuntu--vg-ubuntu--lv 62G 7.0G 52G 12% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 392M 1.1M 391M 1% /run

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 392M 4.0K 392M 1% /run/user/1000

/dev/vda2 2.0G 129M 1.7G 8% /boot

overlay 62G 7.0G 52G 12% /var/lib/docker/overlay2/5294914e81e79503daa0e028cac52d1ef07dfed61c2b29f09f34e5ba20ceb443/merged

overlay 62G 7.0G 52G 12% /var/lib/docker/overlay2/540f582b0d9ee797014308b777870f6fe87e6730bcff85793dd20ebf6adc462a/merged

-----------------------

# [Terminal 2]

vagrant@ubuntu2204:~$ df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 392M 1.1M 391M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 62G 7.0G 52G 12% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/vda2 2.0G 129M 1.7G 8% /boot

tmpfs 392M 4.0K 392M 1% /run/user/1000
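
Since the `df -h` output starts out the same on both sides, a more direct way to tell the namespaces apart is the identifier behind `/proc/<pid>/ns/mnt`. The sketch below uses two hypothetical helpers, `mnt_ns` and `same_mnt_ns`, and an ordinary child process that never calls unshare.

```shell
# Print the mount namespace identifier of a process (default: the caller).
# mnt_ns and same_mnt_ns are hypothetical helper names.
mnt_ns() {
    readlink "/proc/${1:-self}/ns/mnt"
}

# Succeed if two PIDs are in the same mount namespace.
same_mnt_ns() {
    [ "$(mnt_ns "$1")" = "$(mnt_ns "$2")" ]
}

echo "this shell: $(mnt_ns "$$")"

# An ordinary child process inherits the parent's mount namespace.
sleep 300 &
child=$!
if same_mnt_ns "$$" "$child"; then
    echo "child $child shares the shell's mount namespace"
else
    echo "child $child is in a different mount namespace"
fi
kill "$child"
wait "$child" 2>/dev/null || true
```

The value has the form `mnt:[<number>]`. Running `mnt_ns` inside the shell started by `unshare --mount` and again on the host should give two different numbers.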

# [Terminal 1]

-----------------------

#

# mkdir new_root

# mount -t tmpfs none new_root

# ls -l

total 48

-rw-r--r-- 1 root root 186 Aug 31 20:55 escape_chroot.c

drwxr-xr-x 3 root root 4096 Aug 31 20:04 lib

drwxr-xr-x 8 root root 4096 Aug 31 20:59 myroot

drwxrwxrwt 2 root root 40 Aug 31 21:13 new_root

drwxr-xr-x 18 root root 4096 Aug 31 20:45 nginx-root

drwx------ 3 root root 4096 Aug 31 19:20 snap-private-tmp

drwx------ 3 root root 4096 Aug 31 20:00 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-fwupd.service-2nw0lO

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-haveged.service-SoQmvN

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-ModemManager.service-8udLhn

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-logind.service-gha9Az

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-resolved.service-oftVjb

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-timesyncd.service-CaXq7d

drwx------ 3 root root 4096 Aug 31 20:00 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-upower.service-VFd7Bf

# tree new_root

new_root

0 directories, 0 files

## Compare mount information: the mount namespace has been unshared, so the new mount is visible only here

# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/ubuntu--vg-ubuntu--lv 62G 7.0G 52G 12% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 392M 1.1M 391M 1% /run

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 392M 4.0K 392M 1% /run/user/1000

/dev/vda2 2.0G 129M 1.7G 8% /boot

overlay 62G 7.0G 52G 12% /var/lib/docker/overlay2/5294914e81e79503daa0e028cac52d1ef07dfed61c2b29f09f34e5ba20ceb443/merged

overlay 62G 7.0G 52G 12% /var/lib/docker/overlay2/540f582b0d9ee797014308b777870f6fe87e6730bcff85793dd20ebf6adc462a/merged

none 2.0G 0 2.0G 0% /tmp/new_root

# mount | grep new_root

none on /tmp/new_root type tmpfs (rw,relatime,inode64)

# findmnt -A

TARGET SOURCE FSTYPE OPTIONS

/ /dev/mapper/ubuntu--vg-ubuntu--lv

│ ext4 rw,relatime

├─/dev udev devtmpfs rw,nosuid,relatime,size=1944500k,nr_i

│ ├─/dev/pts devpts devpts rw,nosuid,noexec,relatime,gid=5,mode=

│ ├─/dev/shm tmpfs tmpfs rw,nosuid,nodev,inode64

│ ├─/dev/hugepages hugetlbfs hugetlbf rw,relatime,pagesize=2M

│ └─/dev/mqueue mqueue mqueue rw,nosuid,nodev,noexec,relatime

├─/run tmpfs tmpfs rw,nosuid,nodev,noexec,relatime,size=

│ ├─/run/lock tmpfs tmpfs rw,nosuid,nodev,noexec,relatime,size=

│ ├─/run/credentials/systemd-sysusers.service

│ │ none ramfs ro,nosuid,nodev,noexec,relatime,mode=

│ ├─/run/snapd/ns tmpfs[/snapd/ns] tmpfs rw,nosuid,nodev,noexec,relatime,size=

│ ├─/run/docker/netns/d713514cb82d nsfs[net:[4026532299]]

│ │ nsfs rw

│ └─/run/user/1000 tmpfs tmpfs rw,nosuid,nodev,relatime,size=400488k

├─/sys sysfs sysfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/security securityfs security rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/cgroup cgroup2 cgroup2 rw,nosuid,nodev,noexec,relatime,nsdel

│ ├─/sys/fs/pstore pstore pstore rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/bpf bpf bpf rw,nosuid,nodev,noexec,relatime,mode=

│ ├─/sys/kernel/debug debugfs debugfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/tracing tracefs tracefs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/config configfs configfs rw,nosuid,nodev,noexec,relatime

│ └─/sys/fs/fuse/connections fusectl fusectl rw,nosuid,nodev,noexec,relatime

├─/proc proc proc rw,nosuid,nodev,noexec,relatime

│ └─/proc/sys/fs/binfmt_misc systemd-1 autofs rw,relatime,fd=29,pgrp=1,timeout=0,mi

│ └─/proc/sys/fs/binfmt_misc binfmt_misc binfmt_m rw,nosuid,nodev,noexec,relatime

├─/snap/core20/1974 /dev/loop0 squashfs ro,nodev,relatime,errors=continue

├─/snap/core20/2318 /dev/loop1 squashfs ro,nodev,relatime,errors=continue

├─/snap/lxd/24322 /dev/loop3 squashfs ro,nodev,relatime,errors=continue

├─/snap/lxd/29351 /dev/loop4 squashfs ro,nodev,relatime,errors=continue

├─/snap/snapd/19457 /dev/loop5 squashfs ro,nodev,relatime,errors=continue

├─/snap/snapd/21759 /dev/loop2 squashfs ro,nodev,relatime,errors=continue

├─/boot /dev/vda2 ext4 rw,relatime

├─/var/lib/docker/overlay2/5294914e81e79503daa0e028cac52d1ef07dfed61c2b29f09f34e5ba20ceb443/merged

│ overlay overlay rw,relatime,lowerdir=/var/lib/docker/

├─/var/lib/docker/overlay2/540f582b0d9ee797014308b777870f6fe87e6730bcff85793dd20ebf6adc462a/merged

│ overlay overlay rw,relatime,lowerdir=/var/lib/docker/

└─/tmp/new_root none tmpfs rw,relatime,inode64

## Copy the files, then compare with the host in Terminal 2

# cp -r myroot/* new_root/

# tree new_root/

new_root/

├── bin

│ ├── ls

│ ├── mkdir

│ ├── mount

│ ├── ps

│ └── sh

├── escape_chroot

├── lib

│ └── x86_64-linux-gnu

│ ├── libblkid.so.1

│ ├── libcap.so.2

│ ├── libc.so.6

│ ├── libgcrypt.so.20

│ ├── libgpg-error.so.0

│ ├── liblzma.so.5

│ ├── libmount.so.1

│ ├── libpcre2-8.so.0

│ ├── libprocps.so.8

│ ├── libselinux.so.1

│ ├── libsystemd.so.0

│ └── libzstd.so.1

├── lib64

│ └── ld-linux-x86-64.so.2

├── proc

└── usr

└── lib

└── x86_64-linux-gnu

└── liblz4.so.1

8 directories, 20 files

-----------------------

# [Terminal 2]

vagrant@ubuntu2204:~$ cd /tmp

vagrant@ubuntu2204:/tmp$ ls -l

total 52

-rw-r--r-- 1 root root 186 Aug 31 20:55 escape_chroot.c

drwxr-xr-x 3 root root 4096 Aug 31 20:04 lib

drwxr-xr-x 8 root root 4096 Aug 31 20:59 myroot

drwxr-xr-x 2 root root 4096 Aug 31 21:13 new_root

drwxr-xr-x 18 root root 4096 Aug 31 20:45 nginx-root

drwx------ 3 root root 4096 Aug 31 19:20 snap-private-tmp

drwx------ 3 root root 4096 Aug 31 20:00 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-fwupd.service-2nw0lO

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-haveged.service-SoQmvN

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-ModemManager.service-8udLhn

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-logind.service-gha9Az

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-resolved.service-oftVjb

drwx------ 3 root root 4096 Aug 31 19:20 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-systemd-timesyncd.service-CaXq7d

drwx------ 3 root root 4096 Aug 31 20:00 systemd-private-81e10695661b42f8a9d63bd4bcf4141f-upower.service-VFd7Bf

vagrant@ubuntu2204:/tmp$ tree new_root

new_root

0 directories, 0 files

vagrant@ubuntu2204:/tmp$ df -h

Filesystem Size Used Avail Use% Mounted on

tmpfs 392M 1.1M 391M 1% /run

/dev/mapper/ubuntu--vg-ubuntu--lv 62G 7.0G 52G 12% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/vda2 2.0G 129M 1.7G 8% /boot

tmpfs 392M 4.0K 392M 1% /run/user/1000

vagrant@ubuntu2204:/tmp$ mount | grep new_root

vagrant@ubuntu2204:/tmp$ findmnt -A

TARGET SOURCE FSTYPE OPTIONS

/ /dev/mapper/ubuntu--vg-ubuntu--lv

│ ext4 rw,relatime

├─/sys sysfs sysfs rw,nosuid,nodev,noexec,relatime

│ ├─/sys/kernel/security securityfs security rw,nosuid,nodev,noexec,relatime

│ ├─/sys/fs/cgroup cgroup2 cgroup2 rw,nosuid,nodev,noexec,relatime,nsdel

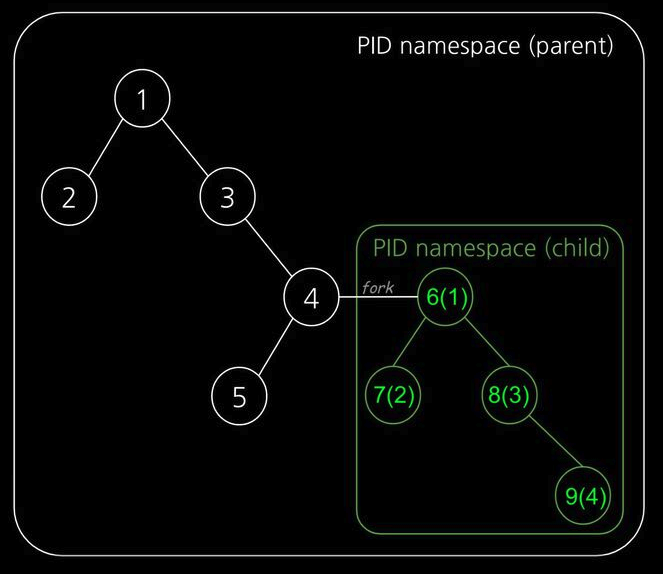

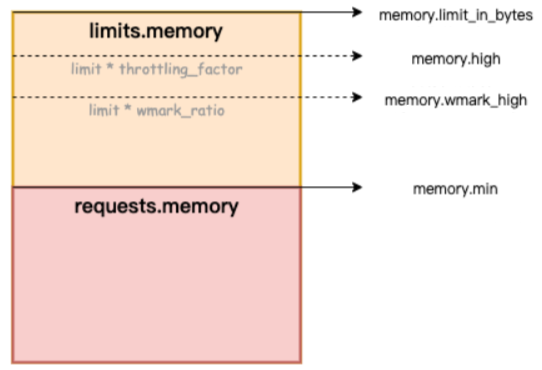

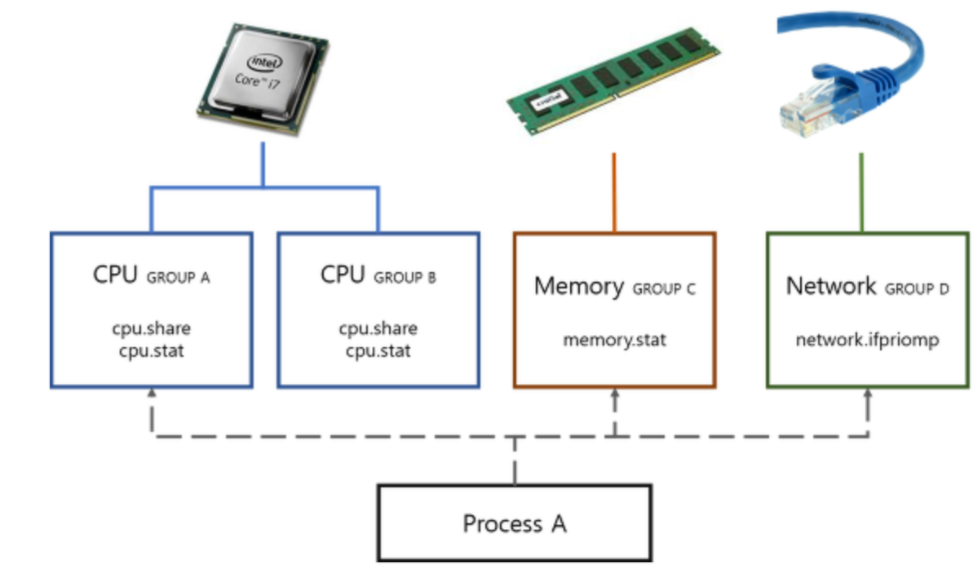

│ ├─/sys/fs/pstore pstore pstore rw,nosuid,nodev,noexec,relatime