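This post summarizes what I studied and practiced in the 3rd cohort of KANS (Kubernetes Advanced Networking Study). Week 2 covered Kind, the K8S Flannel CNI, and PAUSE, and this post focuses on hands-on practice. For reference, the lab environment is a MacBook Air (M1). At the end, I summarize precautions for running kube-ops-view in production, based on an outage case.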

1. Introduction to Kubernetes

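What is Kubernetes?

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services. It facilitates both declarative configuration and automation. Kubernetes has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.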

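The name Kubernetes originates from Greek, meaning helmsman or pilot. "K8s" is an abbreviation formed by counting the eight letters between the "K" and the "s". Google open-sourced the Kubernetes project in 2014. Kubernetes combines over 15 years of Google's experience running production workloads at scale with the best ideas and practices from the community.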

The evolution toward containers

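Traditional deployment era: Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications on a physical server, and this caused resource allocation issues. For example, if multiple applications run on one physical server, one application instance can take up most of the resources, and as a result the other applications underperform. One solution is to run each application on a different physical server. But this did not scale, because resources were underutilized, and it was expensive for organizations to maintain many physical servers.

Virtualized deployment era: As a solution, virtualization was introduced. It lets you run multiple virtual machines (VMs) on a single physical server's CPU. Virtualization isolates applications between VMs and provides a level of security, because one application's information cannot be freely accessed by another application.

Virtualization allows better utilization of resources on a physical server and better scalability, because applications can be added or updated easily and hardware costs are reduced. With virtualization, you can present a set of physical resources as a cluster of disposable virtual machines.

Each VM is a full machine running all the components, including its own operating system, on top of the virtualized hardware.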

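Container deployment era: Containers are similar to VMs, but they have relaxed isolation properties so that applications share the operating system (OS). Therefore, containers are considered lightweight. Like a VM, a container has its own filesystem, share of CPU, memory, process space, and more. Because they are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.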

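Containers have become popular because they provide extra benefits, such as:

- Agile application creation and deployment: container images are easier and more efficient to create than VM images.

- Continuous development, integration, and deployment: container images can be built and deployed reliably and frequently, with quick and efficient rollbacks (thanks to image immutability).

- Separation of Dev and Ops concerns: application container images are created at build/release time rather than at deployment time, which decouples applications from infrastructure.

- Observability: surfaces not only OS-level information and metrics, but also application health and other signals.

- Environmental consistency across development, testing, and production: runs the same on a laptop as it does in the cloud.

- Cloud and OS distribution portability: runs on Ubuntu, RHEL, CoreOS, on-premises, on major public clouds, and anywhere else.

- Application-centric management: raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

- Loosely coupled, distributed, elastic, liberated micro-services: applications are broken into smaller, independent pieces that can be deployed and managed dynamically, rather than running as a monolithic stack on one big single-purpose machine.

- Resource isolation: predictable application performance.

- Resource utilization: high efficiency and density.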

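Why you need Kubernetes and what it can do

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. Wouldn't it be easier if this behavior were handled by a system?

That's where Kubernetes comes in! Kubernetes provides a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, and more. For example, Kubernetes can easily manage a canary deployment for your system.

Kubernetes provides:

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration: Kubernetes lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

- Automated rollouts and rollbacks: you describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate. For example, you can have Kubernetes create new containers for your deployment, remove existing containers, and adopt all their resources into the new containers.

- Automatic bin packing: you provide Kubernetes with a cluster of nodes it can use to run containerized tasks, and tell it how much CPU and memory (RAM) each container needs. Kubernetes fits containers onto your nodes to make the best use of your resources.

- Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don't respond to your user-defined health check, and doesn't advertise them to clients until they are ready to serve.

- Secret and configuration management: Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images and without exposing secrets in your stack configuration.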

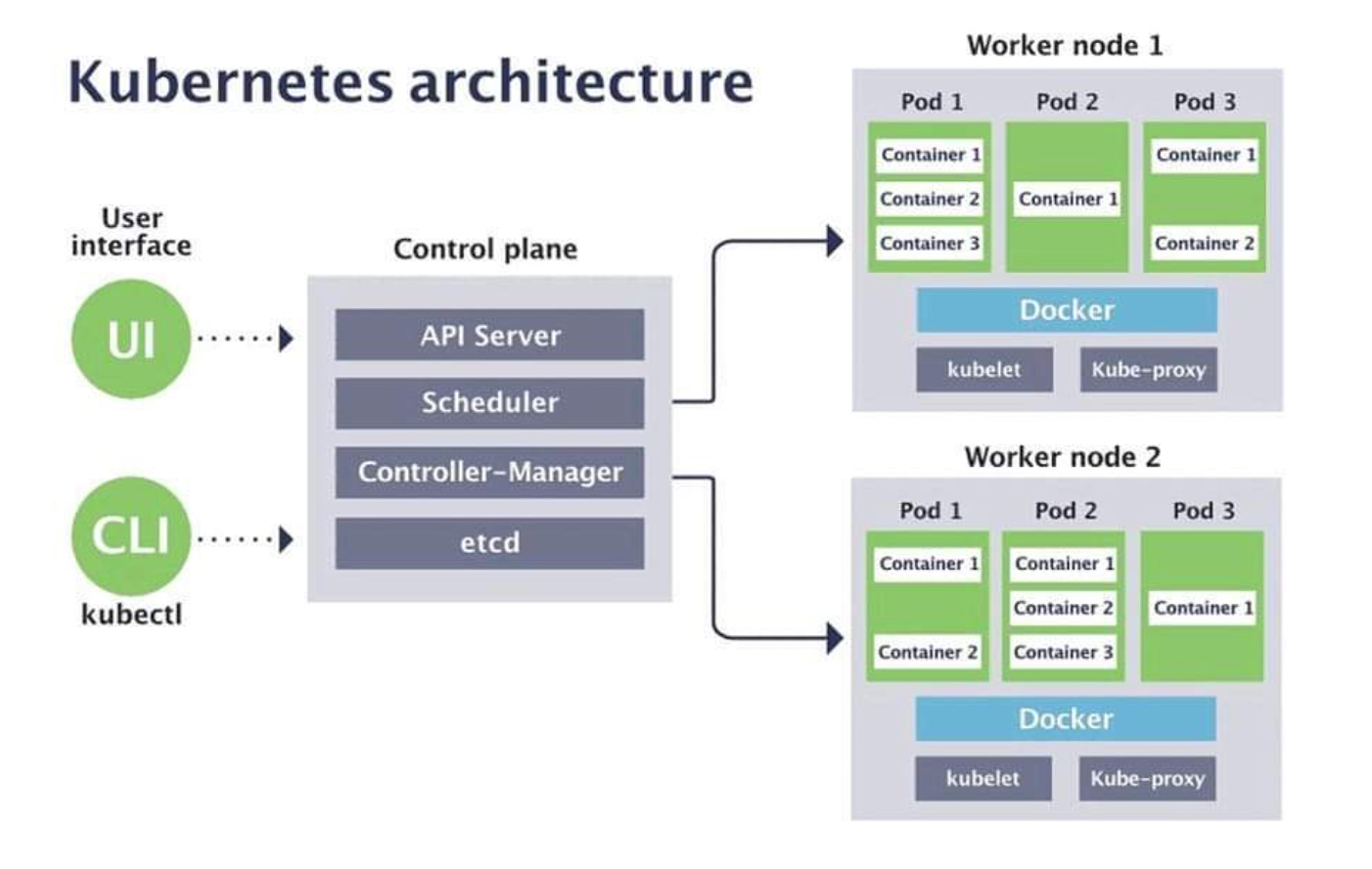

Kubernetes Components

- A K8S cluster consists of a Control Plane and Worker Nodes

https://blog.naver.com/love_tolty/222167051615

- Control Plane: the master is built as a single server or as a clustered master for high availability

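- api server: the API server that receives every request sent to the master

- kube-controller-manager: runs controllers that continuously compare the current state with the desired state and take specific actions in response to specific events

- cloud-controller-manager: the cloud controller that manages resources specific to a cloud platform (AWS, GCP, Azure, etc.)

- etcd: the key-value store that holds all of the cluster's metadata

- scheduler: assigns Pods (containers) to suitable worker nodes based on their requirements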

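- Worker Node: the servers where service Pods run

- kubelet: the node agent that manages the container lifecycle according to instructions from the control plane

- kube-proxy: the network proxy responsible for Service networking; it maintains the network rules (such as iptables rules) on each node

- Container Runtime: the environment that actually runs containers (containerd & CRI-O)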

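- Add-on software

- dns: provides DNS records for Kubernetes Services and thus Service Discovery; CoreDNS is the most common implementation

- cni: the Container Network Interface sets up the k8s network environment; many plugins are available

- ebs-csi-driver (aws): lets EBS volumes be used as PVs

- efs-csi-driver (aws): lets EFS file systems be used as PVs

- Container Runtime: the environment that actually runs containers (Docker via dockershim, containerd & CRI-O; dockershim was removed in Kubernetes v1.24)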

- Client

- kubectl: the command-line tool used to communicate with the Kubernetes API server

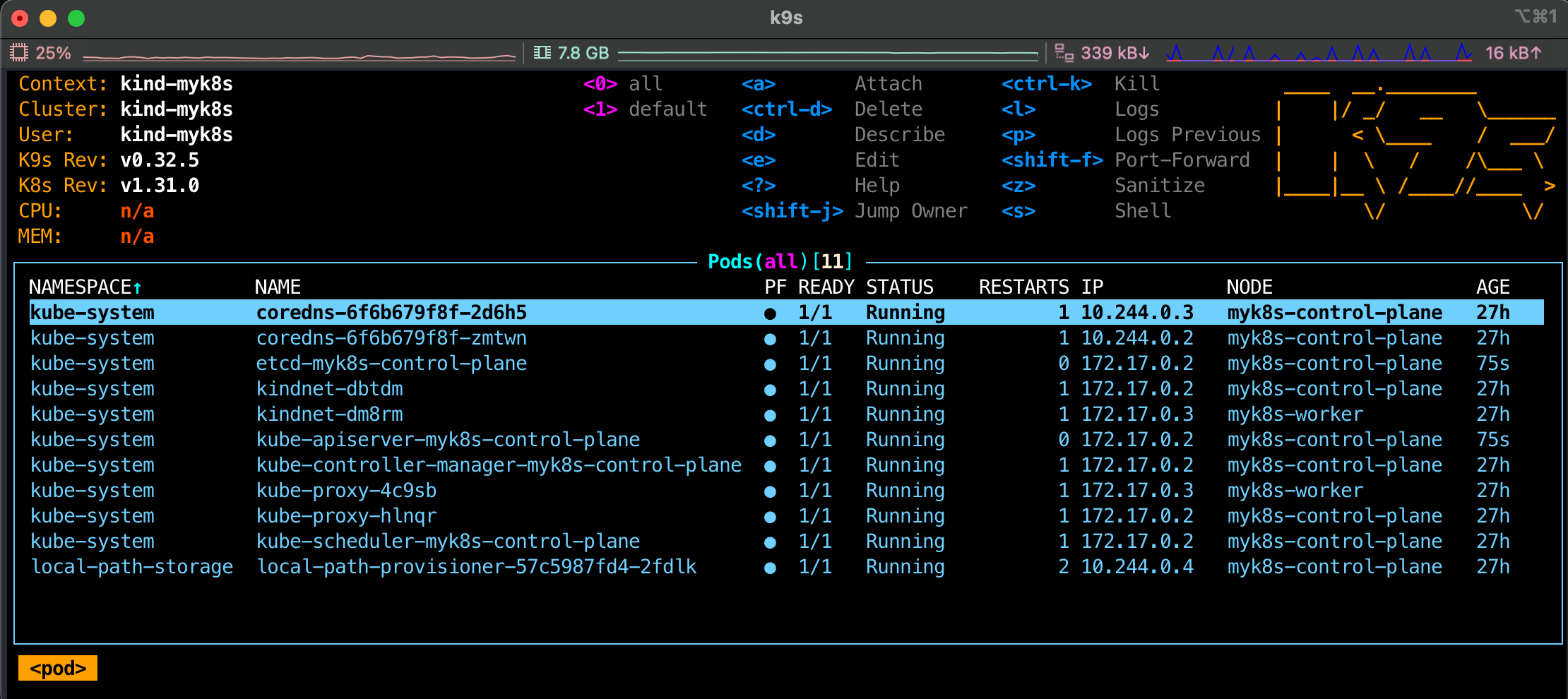

- k9s: manage k8s through a terminal UI (https://github.com/derailed/k9s)

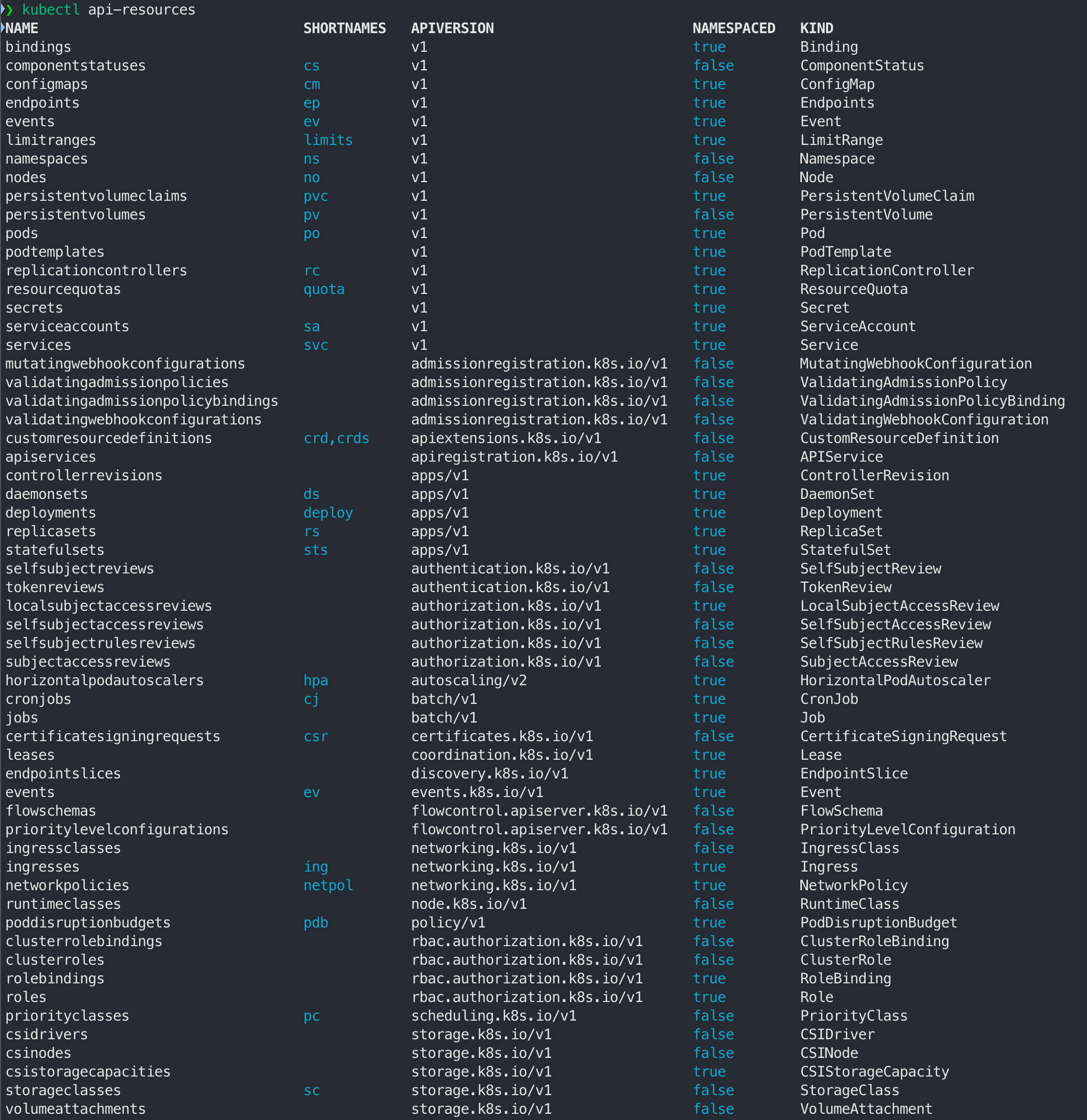

Objects provided by Kubernetes

- api-resources

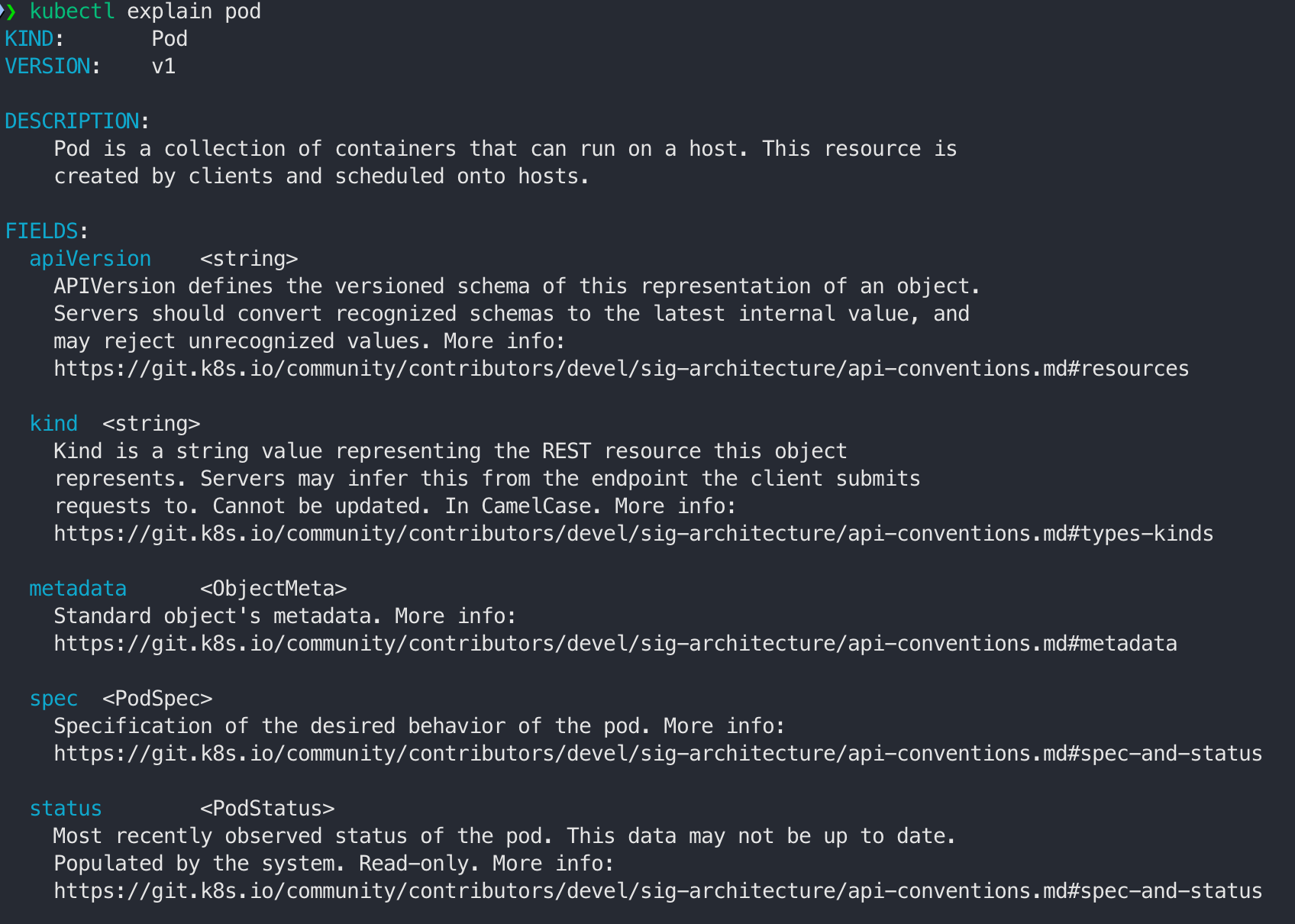

- Describe a specific object (e.g., pod)

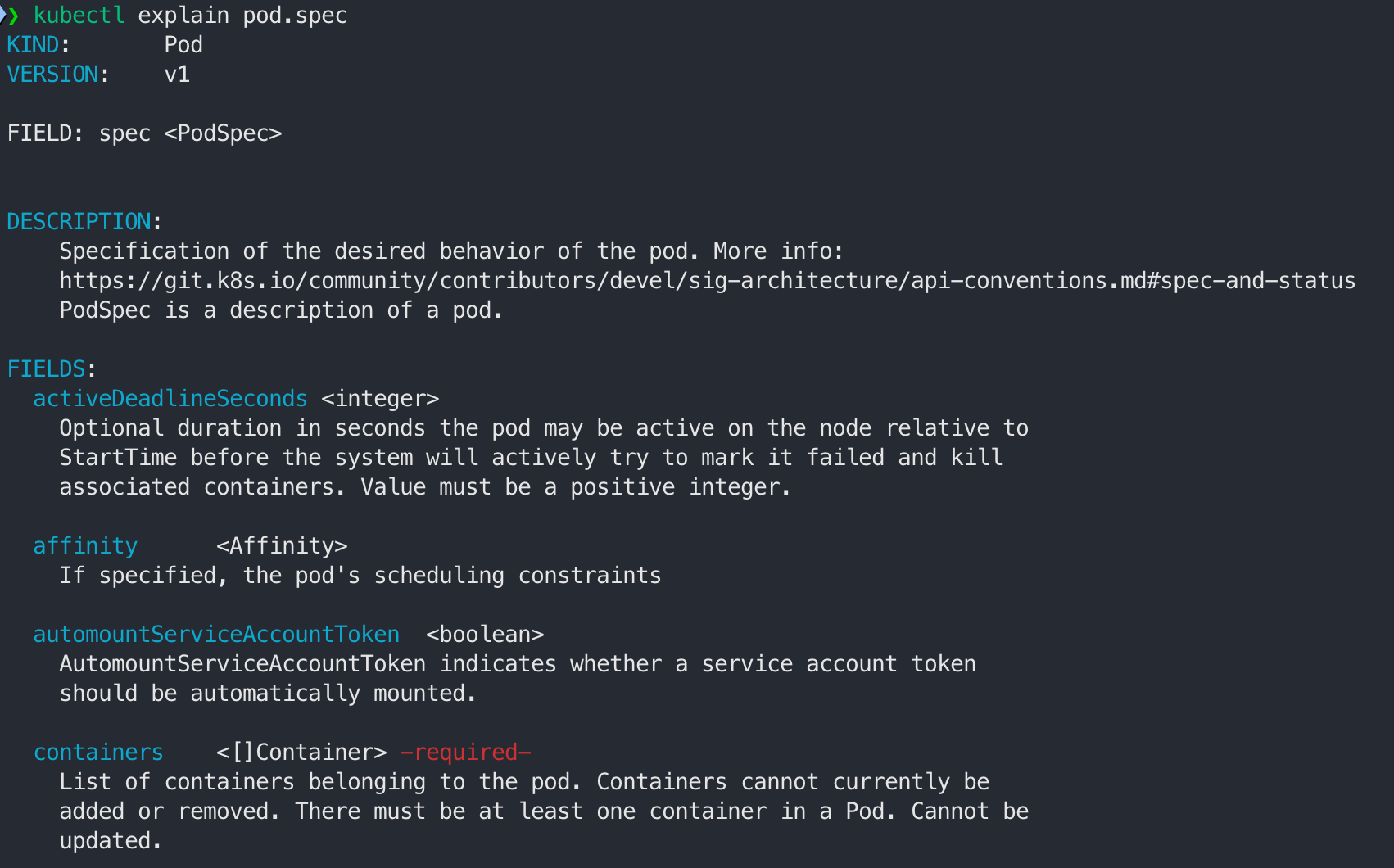

- View information on a specific object's sub-fields

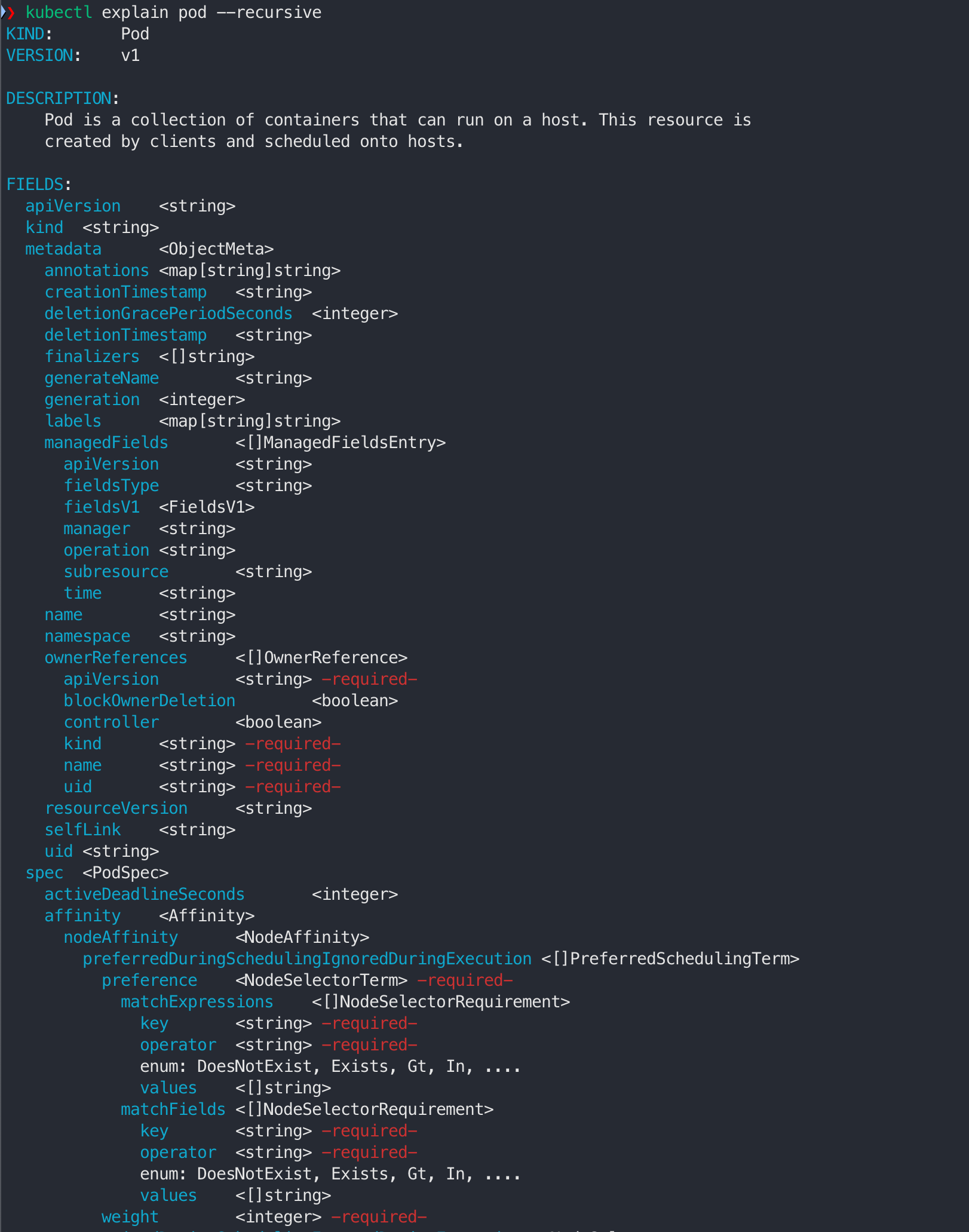

- View information on all sub-fields of a specific object
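The screenshots above do not show the commands themselves. As a sketch, assuming the standard `kubectl api-resources` and `kubectl explain` subcommands (with `explain`'s `--recursive` flag for the full field tree), the four steps can be grouped into one helper; `explore_object` is a hypothetical name.

```shell
# Hypothetical helper: walk through the object-discovery steps for one resource.
# $1 = object kind (e.g. pod), $2 = sub-field path (e.g. spec)
explore_object() {
  obj="$1"
  field="$2"
  kubectl api-resources               # list every object type the API server serves
  kubectl explain "$obj"              # describe the object itself
  kubectl explain "$obj.$field"       # describe one sub-field
  kubectl explain "$obj" --recursive  # print the full field tree
}
```

For example, `explore_object pod spec` covers all four bullets for Pods; the last call is long, so piping it through `less` helps.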

2. Introducing and installing kind

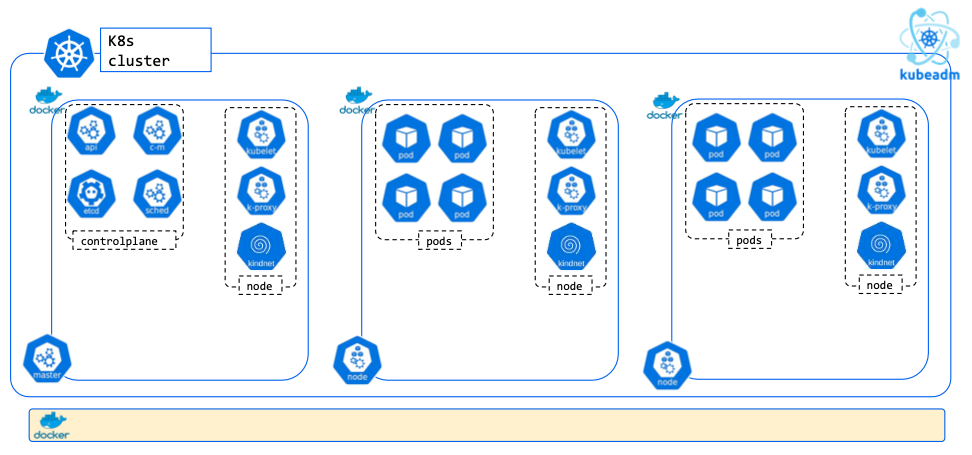

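kind

- Builds a Kubernetes cluster environment with "docker in docker"

- A tool for running local Kubernetes clusters using Docker container "nodes" (https://kind.sigs.k8s.io/)

- kind was primarily designed for testing Kubernetes itself, but it can also be used for local development or CI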

Installation

- Install Docker Desktop (https://www.docker.com/products/docker-desktop/) or OrbStack (https://orbstack.dev)

# Install OrbStack (https://mokpolar.tistory.com/61)

brew install orbstack

- Install kind

# Install Kind

brew install kind

kind --version

# Install kubectl

brew install kubernetes-cli

kubectl version --client=true

# Install Helm

brew install helm

helm version

# Install Wireshark: inspect captured packets

brew install --cask wireshark

- (Optional) Install useful tools

brew install krew

brew install kube-ps1

brew install kubectx

brew install wireshark

# Highlight kubectl output

brew install kubecolor

echo "alias kubectl=kubecolor" >> ~/.zshrc

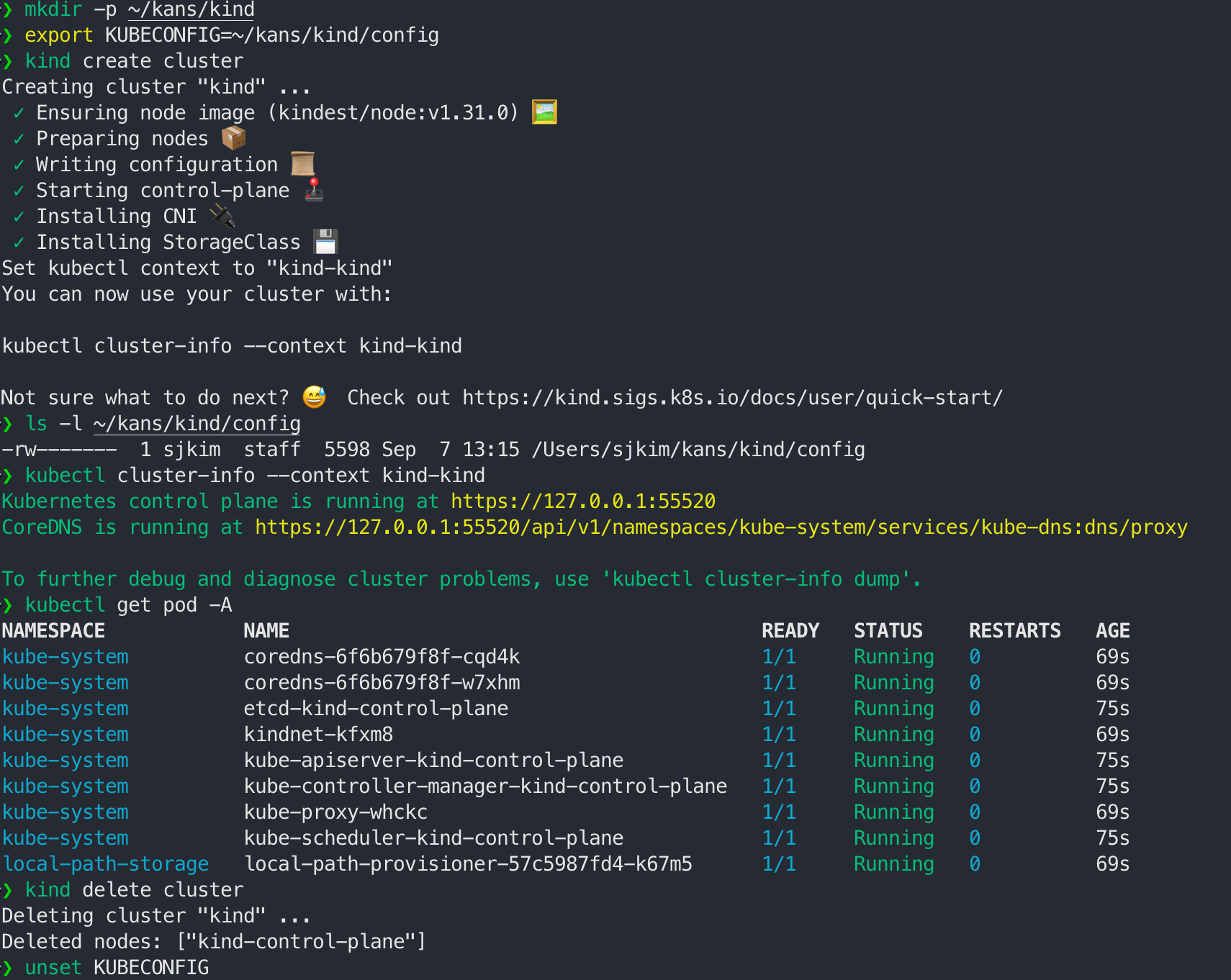

echo "compdef kubecolor=kubectl" >> ~/.zshrc

kind basic usage

If you already have a kubeconfig you use at work, back it up before the kind lab, or (recommended) point kind at a separate kubeconfig with the variable below.

File location: ~/.kube/config

- (Optional) Use a separate kubeconfig

# Option 1: set the environment variable

export KUBECONFIG=/Users/<Username>/Downloads/kind/config

# Option 2: or pass --kubeconfig ./config

# Create the cluster

kind create cluster

# Check the kubeconfig file

ls -l /Users/<Username>/Downloads/kind/config

-rw------- 1 <Username> staff 5608 4 24 09:05 /Users/<Username>/Downloads/kind/config

# Check Pod information

kubectl get pod -A

# Delete the cluster

kind delete cluster

unset KUBECONFIG
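The two options above can be wrapped so that the work kubeconfig is never touched by accident. A minimal sketch, assuming the same `~/Downloads/kind/config` location used above; `KIND_KUBECONFIG`, `backup_kubeconfig` and `with_kind_kubeconfig` are hypothetical names, and only the standard `KUBECONFIG` variable is read by kind and kubectl.

```shell
# Hypothetical lab kubeconfig path; override KIND_KUBECONFIG to change it.
KIND_KUBECONFIG="${KIND_KUBECONFIG:-$HOME/Downloads/kind/config}"

# Copy ~/.kube/config to a timestamped backup if it exists; print the backup path.
backup_kubeconfig() {
  src="$HOME/.kube/config"
  [ -f "$src" ] || return 0            # nothing to back up
  dst="$src.bak.$(date +%Y%m%d%H%M%S)"
  cp -p "$src" "$dst" && echo "$dst"
}

# Run any command (kind, kubectl, ...) with KUBECONFIG set only for that command.
with_kind_kubeconfig() {
  KUBECONFIG="$KIND_KUBECONFIG" "$@"
}
```

Usage: `backup_kubeconfig`, then `with_kind_kubeconfig kind create cluster` and `with_kind_kubeconfig kubectl get pod -A`. Scoping the variable per command avoids having to remember the final `unset KUBECONFIG`.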

- Basic cluster deployment and verification

# Check before deploying the cluster

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

# Create a 1-node cluster with kind

❯ kind create cluster

Creating cluster "kind" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

Set kubectl context to "kind-kind"

You can now use your cluster with:

kubectl cluster-info --context kind-kind

Thanks for using kind! 😊

# Verify the cluster deployment

❯ kind get clusters

kind

❯ kind get nodes

kind-control-plane

❯ kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:55635

CoreDNS is running at https://127.0.0.1:55635/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check node information

❯ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kind-control-plane Ready control-plane 34s v1.31.0 172.17.0.2 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

# Check Pod information

❯ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6f6b679f8f-lg2c8 1/1 Running 0 33s

kube-system coredns-6f6b679f8f-zgn82 1/1 Running 0 33s

kube-system etcd-kind-control-plane 1/1 Running 0 41s

kube-system kindnet-4fpf2 1/1 Running 0 34s

kube-system kube-apiserver-kind-control-plane 1/1 Running 0 41s

kube-system kube-controller-manager-kind-control-plane 1/1 Running 0 41s

kube-system kube-proxy-rf52v 1/1 Running 0 34s

kube-system kube-scheduler-kind-control-plane 1/1 Running 0 41s

local-path-storage local-path-provisioner-57c5987fd4-st5bz 1/1 Running 0 33s

❯ kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy ok

# One control-plane node (a container) is running

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

178872ec319c kindest/node:v1.31.0 "/usr/local/bin/entr…" About a minute ago Up About a minute 127.0.0.1:55575->6443/tcp kind-control-plane

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kindest/node <none> 9d05f134f12f 3 weeks ago 1.04GB

# Check the kubeconfig file

❯ cat ~/.kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJZEN...0K

server: https://127.0.0.1:55635

name: kind-kind

contexts:

- context:

cluster: kind-kind

user: kind-kind

name: kind-kind

current-context: kind-kind

kind: Config

preferences: {}

users:

- name: kind-kind

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLVENDQWhHZ0F3SUJBZ0lJVFk...ZDSVZ4WUJMT2RpcnJzMnhXZVdlWFp1Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBMjV...QUklWQVRFIEtFWS0tLS0tCg==

# If you set the KUBECONFIG variable: ❯ cat $KUBECONFIG

# Deploy and check an nginx Pod: will it be scheduled even though the only node is a control-plane node?

❯ kubectl run nginx --image=nginx:alpine

pod/nginx created

❯ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 9s 10.244.0.5 kind-control-plane <none> <none>

# Check the node's Taints; if Taints are set, Pods need matching Tolerations

❯ kubectl describe node | grep Taints

Taints: <none>
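The grep above leaves the yes/no decision to the reader. A small sketch that turns the describe text into an exit status, assuming the `Taints:` line format shown above (`<none>` when the node is untainted); `node_has_taints` is a hypothetical helper that reads the output of `kubectl describe node <name>` for a single node on stdin.

```shell
# Hypothetical helper: read `kubectl describe node <name>` output on stdin and
# succeed (exit 0) only if the node carries at least one taint.
node_has_taints() {
  awk '/^Taints:/ { found = 1; tainted = ($2 != "<none>") }
       END { exit !(found && tainted) }'
}
```

Usage: `kubectl describe node kind-control-plane | node_has_taints && echo "Pods need tolerations"`. Describing one node at a time keeps the answer unambiguous, because several `Taints:` lines would otherwise overwrite each other.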

# Delete the cluster

❯ kind delete cluster

Deleting cluster "kind" ...

Deleted nodes: ["kind-control-plane"]

# Verify that the kubeconfig entries were removed

❯ cat ~/.kube/config

apiVersion: v1

kind: Config

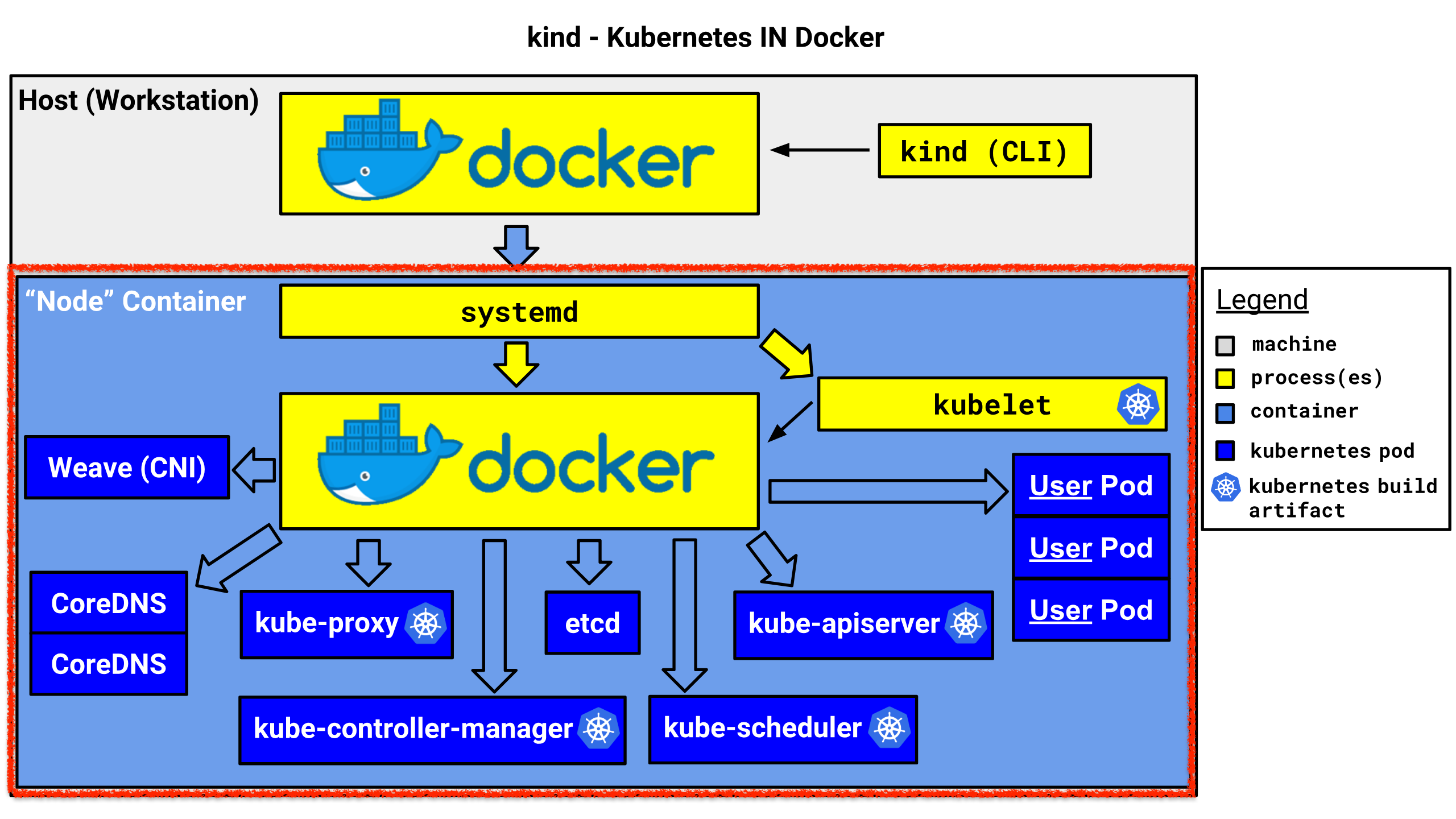

preferences: {}kind 동작원리(Docker in Docker) 확인

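- Each node is itself a Docker container, and containerd inside it runs the Pod containers, so those containers are isolated from the host's container view

- Check basic information

# Check before deploying the cluster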

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

❯ docker network ls

NETWORK ID NAME DRIVER SCOPE

cb9fdeb47317 bridge bridge local

07357a770572 host host local

b7b45198a43c kind bridge local

34ffd7bbaefd none null local

❯ docker inspect kind | jq

[

{

"Name": "kind",

"Id": "b7b45198a43c00cf25348444656cb96a88fc5dcc46bd2e02047aa3f4c1d41bc3",

"Created": "2024-09-05T23:18:10.746168376+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

},

{

"Subnet": "fc00:f853:ccd:e793::/64",

"Gateway": "fc00:f853:ccd:e793::1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

# Create a 2-node cluster with kind

❯ cat << EOT > kind-2node.yaml

# two node (one worker) cluster config

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

- role: worker

EOT

❯ kind create cluster --config kind-2node.yaml --name myk8s

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Thanks for using kind! 😊
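The `kind-2node.yaml` above hard-codes a single worker. As a sketch, a small shell function can print the same `kind.x-k8s.io/v1alpha4` Cluster config for any number of workers; `kind_config` is a hypothetical name, and only the `kind`, `apiVersion`, `nodes` and `role` fields used above are emitted.

```shell
# Hypothetical helper: print a kind Cluster config with one control-plane
# node and $1 worker nodes (default 1, matching kind-2node.yaml).
kind_config() {
  workers="${1:-1}"
  printf 'kind: Cluster\napiVersion: kind.x-k8s.io/v1alpha4\nnodes:\n'
  printf -- '- role: control-plane\n'
  i=0
  while [ "$i" -lt "$workers" ]; do
    printf -- '- role: worker\n'
    i=$((i + 1))
  done
}
```

Usage: `kind_config 2 > kind-3node.yaml && kind create cluster --config kind-3node.yaml --name myk8s` would create one control-plane and two workers.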

# Verify

❯ kind get nodes --name myk8s

myk8s-control-plane

myk8s-worker

# Check the k8s API address: how is it reachable from the local machine?

❯ kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:56534

CoreDNS is running at https://127.0.0.1:56534/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check port-forwarding information

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c043e18c6b86 kindest/node:v1.31.0 "/usr/local/bin/entr…" About an hour ago Up About an hour 127.0.0.1:56534->6443/tcp myk8s-control-plane

11c1b7d67e2f kindest/node:v1.31.0 "/usr/local/bin/entr…" About an hour ago Up About an hour myk8s-worker

❯ docker exec -it myk8s-control-plane ss -tnlp | grep 6443

LISTEN 0 4096 *:6443 *:* users:(("kube-apiserver",pid=560,fd=3))
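This answers the question above: kind publishes the API server's container port 6443 on a host port bound to 127.0.0.1 (`127.0.0.1:56534->6443/tcp`), and the kubeconfig's `server:` field points at that host port. `docker port myk8s-control-plane 6443` prints the mapping directly; the sketch below instead parses the PORTS column text shown above, and `apiserver_host_port` is a hypothetical helper name.

```shell
# Hypothetical helper: given a `docker ps` PORTS value such as
# "127.0.0.1:56534->6443/tcp", print the host address:port mapped to 6443.
# Multiple comma-separated mappings are split onto separate lines first.
apiserver_host_port() {
  printf '%s\n' "$1" | tr ',' '\n' | sed -n 's#^ *\(.*\)->6443/tcp$#\1#p'
}
```

To confirm the match, compare its output with `kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}'`, which should print the same address with an `https://` prefix.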

# Check the Pod IP

❯ kubectl get pod -n kube-system -l component=kube-apiserver -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-apiserver-myk8s-control-plane 1/1 Running 0 164m 172.17.0.3 myk8s-control-plane <none> <none>

❯ kubectl describe pod -n kube-system -l component=kube-apiserver

Name: kube-apiserver-myk8s-control-plane

Namespace: kube-system

Priority: 2000001000

Priority Class Name: system-node-critical

Node: myk8s-control-plane/172.17.0.3

Start Time: Sat, 07 Sep 2024 16:25:21 +0900

Labels: component=kube-apiserver

tier=control-plane

Annotations: kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 172.17.0.3:6443

kubernetes.io/config.hash: a990ec62fa605076d1a2cd3310f22e3a

kubernetes.io/config.mirror: a990ec62fa605076d1a2cd3310f22e3a

kubernetes.io/config.seen: 2024-09-07T07:25:15.724062008Z

kubernetes.io/config.source: file

Status: Running

SeccompProfile: RuntimeDefault

IP: 172.17.0.3

IPs:

IP: 172.17.0.3

Controlled By: Node/myk8s-control-plane

Containers:

kube-apiserver:

Container ID: containerd://aca940217b86f00d9d41cb4655d7e282b9caa6967d00d552fbf6183eb569d95a

Image: registry.k8s.io/kube-apiserver:v1.31.0

Image ID: docker.io/library/import-2024-08-13@sha256:12503b5bb710c8e2834bfc7d31f831a4fdbcca675085a6fd0f8d9b6966074df6

Port: <none>

Host Port: <none>

Command:

kube-apiserver

--advertise-address=172.17.0.3

--allow-privileged=true

--authorization-mode=Node,RBAC

--client-ca-file=/etc/kubernetes/pki/ca.crt

--enable-admission-plugins=NodeRestriction

--enable-bootstrap-token-auth=true

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

--etcd-servers=https://127.0.0.1:2379

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt

--kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key

--requestheader-allowed-names=front-proxy-client

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt

--requestheader-extra-headers-prefix=X-Remote-Extra-

--requestheader-group-headers=X-Remote-Group

--requestheader-username-headers=X-Remote-User

--runtime-config=

--secure-port=6443

--service-account-issuer=https://kubernetes.default.svc.cluster.local

--service-account-key-file=/etc/kubernetes/pki/sa.pub

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key

--service-cluster-ip-range=10.96.0.0/16

--tls-cert-file=/etc/kubernetes/pki/apiserver.crt

--tls-private-key-file=/etc/kubernetes/pki/apiserver.key

State: Running

Started: Sat, 07 Sep 2024 16:25:17 +0900

Ready: True

Restart Count: 0

Requests:

cpu: 250m

Liveness: http-get https://172.17.0.3:6443/livez delay=10s timeout=15s period=10s #success=1 #failure=8

Readiness: http-get https://172.17.0.3:6443/readyz delay=0s timeout=15s period=1s #success=1 #failure=3

Startup: http-get https://172.17.0.3:6443/livez delay=10s timeout=15s period=10s #success=1 #failure=24

Environment: <none>

Mounts:

/etc/ca-certificates from etc-ca-certificates (ro)

/etc/kubernetes/pki from k8s-certs (ro)

/etc/ssl/certs from ca-certs (ro)

/usr/local/share/ca-certificates from usr-local-share-ca-certificates (ro)

/usr/share/ca-certificates from usr-share-ca-certificates (ro)

Conditions:

Type Status

PodReadyToStartContainers True

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

ca-certs:

Type: HostPath (bare host directory volume)

Path: /etc/ssl/certs

HostPathType: DirectoryOrCreate

etc-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /etc/ca-certificates

HostPathType: DirectoryOrCreate

k8s-certs:

Type: HostPath (bare host directory volume)

Path: /etc/kubernetes/pki

HostPathType: DirectoryOrCreate

usr-local-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/local/share/ca-certificates

HostPathType: DirectoryOrCreate

usr-share-ca-certificates:

Type: HostPath (bare host directory volume)

Path: /usr/share/ca-certificates

HostPathType: DirectoryOrCreate

QoS Class: Burstable

Node-Selectors: <none>

Tolerations: :NoExecute op=Exists

Events: <none>

# Liveness, Readiness Health Check

❯ docker exec -it myk8s-control-plane curl -k https://localhost:6443/livez ;echo

ok


❯ docker exec -it myk8s-control-plane curl -k https://localhost:6443/readyz ;echo

ok
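The two probes above can be run in one go. A sketch that mirrors the same `docker exec ... curl -k` calls, assuming the default node name `myk8s-control-plane`; `apiserver_health` is a hypothetical helper, `-s` only silences curl's progress output, and `-it` is dropped because no interactive terminal is needed.

```shell
# Hypothetical helper: query the API server's health endpoints from inside a
# kind node container, mirroring the two docker exec/curl calls above.
# -k skips TLS verification because the lab cluster uses its own CA.
apiserver_health() {
  node="${1:-myk8s-control-plane}"
  for ep in livez readyz; do
    printf '%s: %s\n' "$ep" "$(docker exec "$node" curl -sk "https://localhost:6443/$ep")"
  done
}
```

On a healthy cluster this should print `livez: ok` and `readyz: ok`, matching the outputs above.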


# Check node information: the CRI is containerd

❯ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

myk8s-control-plane Ready control-plane 4h26m v1.31.0 172.17.0.3 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

myk8s-worker Ready <none> 4h26m v1.31.0 172.17.0.2 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

# Check Pod information: the CNI is kindnet

❯ kubectl get pod -A -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-6f6b679f8f-jl2hc 1/1 Running 0 4h32m 10.244.0.2 myk8s-control-plane <none> <none>

kube-system coredns-6f6b679f8f-qmtvt 1/1 Running 0 4h32m 10.244.0.4 myk8s-control-plane <none> <none>

kube-system etcd-myk8s-control-plane 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

kube-system kindnet-24ncv 1/1 Running 0 4h32m 172.17.0.2 myk8s-worker <none> <none>

kube-system kindnet-mtscs 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

kube-system kube-apiserver-myk8s-control-plane 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

kube-system kube-controller-manager-myk8s-control-plane 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

kube-system kube-proxy-fw8cw 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

kube-system kube-proxy-pk7tm 1/1 Running 0 4h32m 172.17.0.2 myk8s-worker <none> <none>

kube-system kube-scheduler-myk8s-control-plane 1/1 Running 0 4h32m 172.17.0.3 myk8s-control-plane <none> <none>

local-path-storage local-path-provisioner-57c5987fd4-cn6zt 1/1 Running 0 4h32m 10.244.0.3 myk8s-control-plane <none> <none>

# Check namespaces: these are Kubernetes namespaces, not the Linux namespaces we saw with Docker containers!

❯ kubectl get namespaces

NAME STATUS AGE

default Active 4h27m

kube-node-lease Active 4h27m

kube-public Active 4h27m

kube-system Active 4h27m

local-path-storage Active 4h27m

# One control-plane container and one worker container are running: the Docker container names are myk8s-control-plane and myk8s-worker

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c043e18c6b86 kindest/node:v1.31.0 "/usr/local/bin/entr…" 4 hours ago Up 4 hours 127.0.0.1:56534->6443/tcp myk8s-control-plane

11c1b7d67e2f kindest/node:v1.31.0 "/usr/local/bin/entr…" 4 hours ago Up 4 hours myk8s-worker

❯ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kindest/node <none> 9d05f134f12f 3 weeks ago 1.04GB

# Print debug output (-v6): shows the credentials being loaded from ~/.kube/config

❯ kubectl get pod -v6

I0907 20:53:14.079457 25333 loader.go:395] Config loaded from file: /Users/sjkim/.kube/config

I0907 20:53:14.092874 25333 round_trippers.go:553] GET https://127.0.0.1:56534/api/v1/namespaces/default/pods?limit=500 200 OK in 10 milliseconds

No resources found in default namespace.

# Check the kubeconfig file

❯ cat ~/.kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJYWZ...0K

server: https://127.0.0.1:56534

name: kind-myk8s

contexts:

- context:

cluster: kind-myk8s

user: kind-myk8s

name: kind-myk8s

current-context: kind-myk8s

kind: Config

preferences: {}

users:

- name: kind-myk8s

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLVENDQWhHZ0F3SUJBZ0lJWnl...lzek5lUWJ4ZmI4Y3dYR2xua2lwZncrCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBdGd...SU0EgUFJJVkFURSBLRVktLS0tLQo=

# A StorageClass (named standard) backed by the local-path provisioner is installed; it uses the node's local storage

# The local-path provisioner manages the volumes, so you do not need to specify a path on the host

❯ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

standard (default) rancher.io/local-path Delete WaitForFirstConsumer false 4h28m

❯ kubectl get deploy -n local-path-storage

NAME READY UP-TO-DATE AVAILABLE AGE

local-path-provisioner 1/1 1 1 4h28m
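To see the provisioner and `WaitForFirstConsumer` in action: a PVC on the `standard` StorageClass stays `Pending` until a Pod that mounts it is scheduled, and only then is a volume created on that Pod's node. A sketch under these assumptions; the names `demo-pvc` and `demo-pod`, the 128Mi size, and the helper `local_path_demo` are all hypothetical.

```shell
# Hypothetical helper: print a PVC on the default "standard" StorageClass plus a
# Pod that mounts it, as one multi-document YAML stream.
local_path_demo() {
  cat <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  storageClassName: standard
  accessModes: ["ReadWriteOnce"]
  resources:
    requests:
      storage: 128Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
spec:
  containers:
  - name: app
    image: nginx:alpine
    volumeMounts:
    - name: data
      mountPath: /data
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: demo-pvc
EOF
}
```

Usage: `local_path_demo | kubectl apply -f -`, then watch `kubectl get pvc,pv` move from `Pending` to `Bound` once the Pod is scheduled.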

# Install tools

❯ docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree jq psmisc lsof wget bridge-utils tcpdump htop git nano -y'

Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]

...Setting up git (1:2.39.2-1.1) ...

Processing triggers for libc-bin (2.36-9+deb12u7) ...


❯ docker exec -it myk8s-worker sh -c 'apt update && apt install tree jq psmisc lsof wget bridge-utils tcpdump htop git nano -y'

Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]

...Setting up git (1:2.39.2-1.1) ...

Processing triggers for libc-bin (2.36-9+deb12u7) ...
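The same install command is repeated per node above. A sketch that loops over whatever nodes kind reports, assuming `kind get nodes --name <cluster>` as used earlier; `install_tools` and `TOOLS` are hypothetical names, and `apt-get` replaces `apt` because `apt` warns that its CLI is not stable for scripts.

```shell
# Hypothetical helper: install the same debugging tools on every node of a
# kind cluster instead of repeating docker exec per node.
TOOLS="tree jq psmisc lsof wget bridge-utils tcpdump htop git nano"

install_tools() {
  cluster="${1:-myk8s}"
  for node in $(kind get nodes --name "$cluster"); do
    echo "== $node"
    docker exec "$node" sh -c "apt-get update && apt-get install -y $TOOLS"
  done
}
```

Usage: `install_tools myk8s` prepares both `myk8s-control-plane` and `myk8s-worker`, and any extra workers added later.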

- Investigate Kubernetes-related information

# Find the static pod manifest location

❯ docker exec -it myk8s-control-plane grep staticPodPath /var/lib/kubelet/config.yaml

staticPodPath: /etc/kubernetes/manifests

# Check static pod information: these are not managed through kubectl or the control plane; the kubelet itself deploys the containers defined in the manifests

❯ docker exec -it myk8s-control-plane tree /etc/kubernetes/manifests/

/etc/kubernetes/manifests/

|-- etcd.yaml

|-- kube-apiserver.yaml

|-- kube-controller-manager.yaml

`-- kube-scheduler.yaml

1 directory, 4 files

# The worker node has no static Pods

❯ docker exec -it myk8s-worker tree /etc/kubernetes/manifests/

/etc/kubernetes/manifests/

0 directories, 0 files
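Rather than hard-coding `/etc/kubernetes/manifests`, the directory can be read from each node's kubelet config, since that is the directory the kubelet watches for static Pod manifests. A sketch, assuming the `staticPodPath: <dir>` line format shown above; `static_pod_path` is a hypothetical helper that reads the config on stdin.

```shell
# Hypothetical helper: read a kubelet config (YAML) on stdin and print the
# staticPodPath value, i.e. the directory the kubelet watches for static Pods.
static_pod_path() {
  awk '$1 == "staticPodPath:" { print $2; exit }'
}
```

Usage: `dir="$(docker exec myk8s-worker cat /var/lib/kubelet/config.yaml | static_pod_path)"` followed by `docker exec myk8s-worker ls "$dir"`, which should come back empty on the worker as shown above.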

# Open a bash shell on the worker node (container)

❯ docker exec -it myk8s-worker bash

root@myk8s-worker:/# whoami

root

# Check kubelet status

root@myk8s-worker:/# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf, 11-kind.conf

Active: active (running) since Sat 2024-09-07 07:25:34 UTC; 4h 44min ago

Docs: http://kubernetes.io/docs/

Main PID: 225 (kubelet)

Tasks: 16 (limit: 6375)

Memory: 53.1M

CPU: 3min 6.985s

CGroup: /kubelet.slice/kubelet.service

└─225 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --config=/var/lib/kubelet/config>

Sep 07 07:25:35 myk8s-worker kubelet[225]: I0907 07:25:35.570899 225 reconciler_common.go:245] "operationExecutor.VerifyControllerAttachedVolume started for volume \"xt>

...

Sep 07 07:25:47 myk8s-worker kubelet[225]: I0907 07:25:47.252986 225 kubelet_node_status.go:488] "Fast updating node status as it just became ready"


# Check containers: the worker node runs containerd and has no docker CLI, so use crictl

root@myk8s-worker:/# docker ps

bash: docker: command not found

root@myk8s-worker:/# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

1453741c81209 6a23fa8fd2b78 5 hours ago Running kindnet-cni 0 5393d4d2a8cf6 kindnet-24ncv

5e2343e69263b c573e1357a14e 5 hours ago Running kube-proxy 0 3504f3077d975 kube-proxy-pk7tm

# Check kube-proxy

root@myk8s-worker:/# pstree

systemd-+-containerd---15*[{containerd}]

|-containerd-shim-+-kindnetd---12*[{kindnetd}]

| |-pause

| `-13*[{containerd-shim}]

|-containerd-shim-+-kube-proxy---9*[{kube-proxy}]

| |-pause

| `-12*[{containerd-shim}]

|-kubelet---15*[{kubelet}]

`-systemd-journal

root@myk8s-worker:/# pstree -p

systemd(1)-+-containerd(102)-+-{containerd}(103)

| |-{containerd}(104)

...

| |-{containerd}(351)

| `-{containerd}(352)

|-containerd-shim(271)-+-kindnetd(408)-+-{kindnetd}(499)

| | |-{kindnetd}(500)

...

| | |-{kindnetd}(595)

| | `-{kindnetd}(596)

| |-pause(318)

| |-{containerd-shim}(272)

...

| |-{containerd-shim}(645)

| `-{containerd-shim}(2107)

|-containerd-shim(289)-+-kube-proxy(382)-+-{kube-proxy}(423)

...

| | |-{kube-proxy}(624)

| | `-{kube-proxy}(625)

| |-pause(325)

| |-{containerd-shim}(296)

...

| |-{containerd-shim}(608)

| `-{containerd-shim}(609)

|-kubelet(225)-+-{kubelet}(226)

| |-{kubelet}(227)

...

| |-{kubelet}(634)

| `-{kubelet}(972)

`-systemd-journal(88)

root@myk8s-worker:/# ps afxuwww |grep proxy

root 4253 0.0 0.0 3076 1360 pts/1 S+ 12:12 0:00 \_ grep proxy

root 382 0.0 0.4 1290144 24212 ? Ssl 07:25 0:10 \_ /usr/local/bin/kube-proxy --config=/var/lib/kube-proxy/config.conf --hostname-override=myk8s-worker

root@myk8s-worker:/# iptables -t filter -S

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N KUBE-EXTERNAL-SERVICES

-N KUBE-FIREWALL

-N KUBE-FORWARD

-N KUBE-KUBELET-CANARY

-N KUBE-NODEPORTS

-N KUBE-PROXY-CANARY

-N KUBE-PROXY-FIREWALL

-N KUBE-SERVICES

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
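Two kinds of numbers recur in kube-proxy's rules: the 0x4000 packet-mark bit (here in KUBE-FORWARD, and in KUBE-MARK-MASQ and KUBE-POSTROUTING in the nat table listed next) and the `--probability` values on the KUBE-SVC-* chains. The arithmetic can be sketched in shell: an iptables `--mark value/mask` match tests `(mark & mask) == value`, `--set-xmark value/mask` computes `(mark & ~mask) ^ value`, and for n endpoints rule i (0-based) fires with probability 1/(n-i) while the last rule is unconditional. The helper names are hypothetical, and the 11-decimal format only imitates the output above.

```shell
# Hypothetical helpers that mirror the arithmetic behind kube-proxy's rules.

# iptables "-m mark --mark value/mask": matches when (mark & mask) == value
mark_matches() {    # $1=packet mark, $2=value, $3=mask
  [ $(( $1 & $3 )) -eq $(( $2 )) ]
}

# iptables "-j MARK --set-xmark value/mask": zero the bits in mask, then XOR value
set_xmark() {       # $1=packet mark, $2=value, $3=mask; prints the new mark
  printf '0x%x\n' $(( ($1 & ~$3) ^ $2 ))
}

# --probability per endpoint rule: rule i (0-based) of n fires with 1/(n-i),
# and the last rule is unconditional, so each endpoint gets 1/n overall.
kube_proxy_probabilities() {  # $1=number of endpoints
  awk -v n="$1" 'BEGIN {
    for (i = 0; i < n - 1; i++) printf "rule %d: %.11f\n", i + 1, 1 / (n - i)
    printf "rule %d: (unconditional)\n", n
  }'
}
```

Read this way, KUBE-MARK-MASQ sets bit 0x4000, KUBE-POSTROUTING returns early for packets without it, clears it with `--set-xmark 0x4000/0x0` (an XOR on a bit that is already set), and MASQUERADEs the rest. With two CoreDNS endpoints, `kube_proxy_probabilities 2` gives 0.5 for the first rule and leaves the second unconditional, which is the pattern on the kube-dns chains.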

root@myk8s-worker:/# iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N DOCKER_OUTPUT

-N DOCKER_POSTROUTING

-N KIND-MASQ-AGENT

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-IT2ZTR26TO4XFPTO

-N KUBE-SEP-KYAKWNPZMT7TXG57

-N KUBE-SEP-N4G2XR5TDX7PQE7P

-N KUBE-SEP-PUHFDAMRBZWCPADU

-N KUBE-SEP-SF3LG62VAE5ALYDV

-N KUBE-SEP-WXWGHGKZOCNYRYI7

-N KUBE-SEP-YIL6JZP7A3QYXJU2

-N KUBE-SERVICES

-N KUBE-SVC-ERIFXISQEP7F7OF4

-N KUBE-SVC-JD5MR3NA4I4DYORP

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-N KUBE-SVC-TCOU7JCQXEZGVUNU

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -d 198.19.248.254/32 -j DOCKER_OUTPUT

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -d 198.19.248.254/32 -j DOCKER_OUTPUT

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -d 198.19.248.254/32 -j DOCKER_POSTROUTING

-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT

-A DOCKER_OUTPUT -d 198.19.248.254/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:40825

-A DOCKER_OUTPUT -d 198.19.248.254/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:36395

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 40825 -j SNAT --to-source 198.19.248.254:53

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 36395 -j SNAT --to-source 198.19.248.254:53

-A KIND-MASQ-AGENT -d 10.244.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN

-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-IT2ZTR26TO4XFPTO -s 10.244.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-IT2ZTR26TO4XFPTO -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.244.0.2:53

-A KUBE-SEP-KYAKWNPZMT7TXG57 -s 172.17.0.3/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-KYAKWNPZMT7TXG57 -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.17.0.3:6443

-A KUBE-SEP-N4G2XR5TDX7PQE7P -s 10.244.0.2/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-N4G2XR5TDX7PQE7P -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.244.0.2:9153

-A KUBE-SEP-PUHFDAMRBZWCPADU -s 10.244.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-PUHFDAMRBZWCPADU -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.244.0.4:9153

-A KUBE-SEP-SF3LG62VAE5ALYDV -s 10.244.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-SF3LG62VAE5ALYDV -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.244.0.4:53

-A KUBE-SEP-WXWGHGKZOCNYRYI7 -s 10.244.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-WXWGHGKZOCNYRYI7 -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.244.0.4:53

-A KUBE-SEP-YIL6JZP7A3QYXJU2 -s 10.244.0.2/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-YIL6JZP7A3QYXJU2 -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.244.0.2:53

-A KUBE-SERVICES -d 10.96.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.244.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-IT2ZTR26TO4XFPTO

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.244.0.4:53" -j KUBE-SEP-SF3LG62VAE5ALYDV

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.0.2:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-N4G2XR5TDX7PQE7P

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.244.0.4:9153" -j KUBE-SEP-PUHFDAMRBZWCPADU

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.244.0.0/16 -d 10.96.0.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.17.0.3:6443" -j KUBE-SEP-KYAKWNPZMT7TXG57

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.244.0.0/16 -d 10.96.0.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.244.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-YIL6JZP7A3QYXJU2

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.244.0.4:53" -j KUBE-SEP-WXWGHGKZOCNYRYI7
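The `KUBE-SVC-*` chains above load-balance with the iptables `statistic` match. With two CoreDNS endpoints, the first `KUBE-SEP` jump fires with probability 0.5 and the second rule catches the rest. The bash sketch below reproduces that arithmetic for n endpoints. It assumes kube-proxy's iptables mode gives the i-th of n rules the probability 1/(n-i+1), which matches the two-endpoint dump above. `sep_probabilities` is a hypothetical helper, not a kube-proxy tool.

```bash
#!/usr/bin/env bash
# For a Service with n endpoints, print the --probability placed on each
# KUBE-SEP jump. Rule i (1-based) matches 1/(n-i+1) of the traffic that
# reaches it. The last rule has no statistic match and takes whatever falls
# through, so every endpoint ends up with 1/n of the total.
sep_probabilities() {
  local n=$1 i
  for ((i = 1; i <= n; i++)); do
    if ((i < n)); then
      awk -v r="$((n - i + 1))" -v i="$i" \
        'BEGIN { printf "rule %d: %.11f\n", i, 1 / r }'
    else
      echo "rule $i: fallthrough"
    fi
  done
}

sep_probabilities 2   # same shape as the kube-dns chains above
sep_probabilities 3
```

With three endpoints the chain would carry 0.33333333333, then 0.50000000000, then an unconditional jump. Each endpoint still receives one third of the traffic, because the second rule sees 2/3 of it and keeps half.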

root@myk8s-worker:/# iptables -t mangle -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N KUBE-IPTABLES-HINT

-N KUBE-KUBELET-CANARY

-N KUBE-PROXY-CANARY

root@myk8s-worker:/# iptables -t raw -S

-P PREROUTING ACCEPT

-P OUTPUT ACCEPT

root@myk8s-worker:/# iptables -t security -S

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

# Check TCP listening ports

root@myk8s-worker:/# ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 127.0.0.11:40825 0.0.0.0:*

LISTEN 0 4096 127.0.0.1:10248 0.0.0.0:* users:(("kubelet",pid=225,fd=19))

LISTEN 0 4096 127.0.0.1:10249 0.0.0.0:* users:(("kube-proxy",pid=382,fd=19))

LISTEN 0 4096 127.0.0.1:41875 0.0.0.0:* users:(("containerd",pid=102,fd=10))

LISTEN 0 4096 *:10250 *:* users:(("kubelet",pid=225,fd=20))

LISTEN 0 4096 *:10256 *:* users:(("kube-proxy",pid=382,fd=18))
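The listening sockets above belong to well-known node components. The lookup sketch below assumes the upstream default ports: 10248 is kubelet healthz, 10249 is kube-proxy metrics, 10250 is the kubelet API, and 10256 is kube-proxy healthz. 127.0.0.11 is Docker's embedded DNS resolver for user-defined networks such as `kind`. Its port is chosen at random, so the sketch reports it as unknown. `port_role` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Map a node-local listening port to the component that normally owns it.
# The table reflects upstream defaults. Anything else is "unknown".
port_role() {
  case "$1" in
    10248) echo "kubelet healthz" ;;
    10249) echo "kube-proxy metrics" ;;
    10250) echo "kubelet API (exec/logs/metrics)" ;;
    10256) echo "kube-proxy healthz" ;;
    *)     echo "unknown" ;;
  esac
}

# Annotate "Local Address:Port" values taken from the ss output above.
for addr in 127.0.0.1:10248 127.0.0.1:10249 '*:10250' '*:10256' 127.0.0.11:40825; do
  printf '%-18s %s\n' "$addr" "$(port_role "${addr##*:}")"
done
```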

# Exit the node shell

root@myk8s-worker:/# exit

exit

- Create and check pods

# Create the pods

❯ cat <<EOF | kubectl create -f -
apiVersion: v1
kind: Pod
metadata:
  name: netpod
spec:
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  containers:
  - name: nginx-pod
    image: nginx:alpine
  terminationGracePeriodSeconds: 0
EOF

pod/netpod created

pod/nginx created

# Check the pods

❯ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

netpod 0/1 ContainerCreating 0 11s <none> myk8s-worker <none> <none>

nginx 0/1 ContainerCreating 0 11s <none> myk8s-worker <none> <none>

❯ kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

netpod 1/1 Running 0 106s 10.244.1.3 myk8s-worker <none> <none>

nginx 1/1 Running 0 106s 10.244.1.2 myk8s-worker <none> <none>

# Access the nginx web server from the netpod pod

❯ kubectl exec -it netpod -- curl -s $(kubectl get pod nginx -o jsonpath={.status.podIP}) | grep -o "<title>.*</title>"

<title>Welcome to nginx!</title>
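In the output above, the pods stayed in `ContainerCreating` for a while before the curl test could succeed. `kubectl wait --for=condition=Ready pod/netpod pod/nginx --timeout=120s` blocks until both pods are Ready. For checks that have no built-in wait, such as the curl itself, a generic retry loop is enough. The following is a minimal sketch. `retry` is a hypothetical helper and is not part of kubectl.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds or the attempts run out.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  local attempts=$1 delay=$2 n
  shift 2
  for ((n = 1; n <= attempts; n++)); do
    "$@" && return 0
    if ((n < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}

# Example against a live cluster (left commented out here):
# kubectl wait --for=condition=Ready pod/nginx --timeout=120s &&
#   retry 5 2 kubectl exec netpod -- curl -sf "$(kubectl get pod nginx -o jsonpath='{.status.podIP}')"
```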

- Inspect the control-plane container: the “Node” container below is the ‘myk8s-control-plane’ container (shown in red in the figure)

- The Kubernetes pods (containers) run inside that “Node” container → Docker in Docker (DinD)

# Check Docker containers

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c043e18c6b86 kindest/node:v1.31.0 "/usr/local/bin/entr…" 5 hours ago Up 5 hours 127.0.0.1:56534->6443/tcp myk8s-control-plane

11c1b7d67e2f kindest/node:v1.31.0 "/usr/local/bin/entr…" 5 hours ago Up 5 hours myk8s-worker

❯ docker inspect myk8s-control-plane | jq

[

{

"Id": "c043e18c6b866d576312432ea9c6e506fd105595f4bf6cd8f754330523f6a923",

"Created": "2024-09-07T07:25:08.894072479Z",

"Path": "/usr/local/bin/entrypoint",

"Args": [

"/sbin/init"

],

"State": {

"Status": "running",

...

4bf6cd8f754330523f6a923-json.log",

"Name": "/myk8s-control-plane",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "",

"ExecIDs": null,

...

"NetworkMode": "kind",

"PortBindings": {

"6443/tcp": [

{

"HostIp": "127.0.0.1",

"HostPort": "56534"

}

]

},

"RestartPolicy": {

"Name": "on-failure",

...

"Networks": {

"kind": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"MacAddress": "02:42:ac:11:00:03",

"DriverOpts": null,

"NetworkID": "b7b45198a43c00cf25348444656cb96a88fc5dcc46bd2e02047aa3f4c1d41bc3",

"EndpointID": "a73eb964d30f390197da6d41a452694477820dced70866be5ac730fa7d45758c",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.3",

"IPPrefixLen": 16,

"IPv6Gateway": "fc00:f853:ccd:e793::1",

"GlobalIPv6Address": "fc00:f853:ccd:e793::3",

"GlobalIPv6PrefixLen": 64,

"DNSNames": [

"myk8s-control-plane",

"c043e18c6b86"

]

}

}

}

}

]

# Open a bash shell in the control-plane container and inspect it

❯ docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/# arch

aarch64

root@myk8s-control-plane:/# whoami

root

root@myk8s-control-plane:/# ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

veth8650b37d@if4 UP 10.244.0.1/32

veth138774b0@if4 UP 10.244.0.1/32

vethaa1136ce@if4 UP 10.244.0.1/32

eth0@if31 UP 172.17.0.3/16

root@myk8s-control-plane:/# ip -c route

default via 172.17.0.1 dev eth0

10.244.0.2 dev veth8650b37d scope host

10.244.0.3 dev veth138774b0 scope host

10.244.0.4 dev vethaa1136ce scope host

10.244.1.0/24 via 172.17.0.2 dev eth0

172.17.0.0/16 dev eth0 proto kernel scope link src 172.17.0.3
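The routing table shows how kindnet wires up pods. Local pods get /32 host routes on their veth. The worker's pod subnet, 10.244.1.0/24, goes via the worker's address, 172.17.0.2. Everything else uses the default gateway. The bash sketch below imitates the longest-prefix-match lookup that the kernel performs. It is simplified: it ignores metrics, scopes, and policy routing. The route list is copied from the output above, and `lookup` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IP $1 falls inside CIDR $2 (e.g. 10.244.0.0/16).
ip_in_cidr() {
  local net=${2%/*} len=${2#*/} mask
  mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip2int "$1") & mask) == ($(ip2int "$net") & mask) ))
}

# Routes from the control-plane node above, written as "<prefix> <next hop>".
ROUTES=(
  "0.0.0.0/0 via 172.17.0.1 dev eth0"
  "10.244.0.2/32 dev veth8650b37d"
  "10.244.0.3/32 dev veth138774b0"
  "10.244.0.4/32 dev vethaa1136ce"
  "10.244.1.0/24 via 172.17.0.2 dev eth0"
  "172.17.0.0/16 dev eth0"
)

# Print the next hop of the matching route with the longest prefix.
lookup() {
  local best="" best_len=-1 r prefix len
  for r in "${ROUTES[@]}"; do
    prefix=${r%% *}
    len=${prefix#*/}
    if ip_in_cidr "$1" "$prefix" && (( len > best_len )); then
      best=$r best_len=$len
    fi
  done
  echo "${best#* }"
}

lookup 10.244.1.3   # a pod on myk8s-worker
lookup 10.244.0.3   # a local pod
lookup 8.8.8.8      # anything else
```

`ip_in_cidr` is also the test behind the `! -s 10.244.0.0/16` rules in the KUBE-SVC chains. Traffic whose source lies outside the pod CIDR is sent to `KUBE-MARK-MASQ`.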

# Check the entrypoint script

root@myk8s-control-plane:/# cat /usr/local/bin/entrypoint

# Check processes: PID 1 is /sbin/init

root@myk8s-control-plane:/# ps -ef

UID PID PPID C STIME TTY TIME CMD

root 1 0 0 07:25 ? 00:00:01 /sbin/init

root 88 1 0 07:25 ? 00:00:00 /lib/systemd/systemd-journald

...

root 5560 0 0 12:42 pts/1 00:00:00 bash

root 5591 5560 50 12:44 pts/1 00:00:00 ps -ef

# Check the container runtime

root@myk8s-control-plane:/# systemctl status containerd

● containerd.service - containerd container runtime

Loaded: loaded (/etc/systemd/system/containerd.service; enabled; preset: enabled)

Active: active (running) since Sat 2024-09-07 07:25:11 UTC; 5h 19min ago

Docs: https://containerd.io

Main PID: 102 (containerd)

Tasks: 144

Memory: 134.4M

CPU: 3min 28ms

CGroup: /system.slice/containerd.service

├─ 102 /usr/local/bin/containerd

├─ 298 /usr/local/bin/containerd-shim-runc-v2 -namespace k8s.io -id d8f7fa33b18e5862728f8b9823f319d2ccb2d3e172999dcfdabb7395a630af34 -address /run/containerd/c>

├─ 299 /usr/local/bin/containerd-shim-runc-v2 -namespace k8s.io -id ...

65c975d5a19205b92fddb544eb6236493beb6a56b137559751bd89576aa2c5f8 -address /run/containerd/c>

Sep 07 07:25:41 myk8s-control-plane containerd[102]: time="2024-09-07T07:25:41.751732233Z" level=info msg="CreateContainer within sandbox \"65c975d5a19205b92fddb544eb623649>

...

Sep 07 07:25:42 myk8s-control-plane containerd[102]: time="2024-09-07T07:25:42.314646235Z" level=info msg="StartContainer for \"126924df60099ddae0e5c8eed46d8d3c0646192ccfd1>

# Check the DinD containers with crictl

root@myk8s-control-plane:/# crictl version

Version: 0.1.0

RuntimeName: containerd

RuntimeVersion: v1.7.18

RuntimeApiVersion: v1

root@myk8s-control-plane:/# crictl info

{

"status": {

"conditions": [

{

"type": "RuntimeReady",

"status": true,

"reason": "",

"message": ""

},

...

"golang": "go1.22.6",

"lastCNILoadStatus": "OK",

"lastCNILoadStatus.default": "OK"

}

root@myk8s-control-plane:/# crictl ps -o json | jq -r '.containers[] | {NAME: .metadata.name, POD: .labels["io.kubernetes.pod.name"]}'

{

"NAME": "local-path-provisioner",

"POD": "local-path-provisioner-57c5987fd4-cn6zt"

}

{

"NAME": "coredns",

"POD": "coredns-6f6b679f8f-jl2hc"

}

{

"NAME": "coredns",

"POD": "coredns-6f6b679f8f-qmtvt"

}

{

"NAME": "kindnet-cni",

"POD": "kindnet-mtscs"

}

{

"NAME": "kube-proxy",

"POD": "kube-proxy-fw8cw"

}

{

"NAME": "etcd",

"POD": "etcd-myk8s-control-plane"

}

{

"NAME": "kube-controller-manager",

"POD": "kube-controller-manager-myk8s-control-plane"

}

{

"NAME": "kube-apiserver",

"POD": "kube-apiserver-myk8s-control-plane"

}

{

"NAME": "kube-scheduler",

"POD": "kube-scheduler-myk8s-control-plane"

}

root@myk8s-control-plane:/# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

126924df60099 282f619d10d4d 5 hours ago Running local-path-provisioner 0 0f761f8318aa3 local-path-provisioner-57c5987fd4-cn6zt

f6acac6622025 2437cf7621777 5 hours ago Running coredns 0 21014e22cab75 coredns-6f6b679f8f-jl2hc

59f768e0885f7 2437cf7621777 5 hours ago Running coredns 0 65c975d5a1920 coredns-6f6b679f8f-qmtvt

7c810bffa8140 6a23fa8fd2b78 5 hours ago Running kindnet-cni 0 f8380ae5d115b kindnet-mtscs

6897b8b706b3e c573e1357a14e 5 hours ago Running kube-proxy 0 7dfa2554e758f kube-proxy-fw8cw

dafd4778c3ad6 27e3830e14027 5 hours ago Running etcd 0 a4a835a3c148c etcd-myk8s-control-plane

e7f26637d852c c50d473e11f63 5 hours ago Running kube-controller-manager 0 126655ba39e59 kube-controller-manager-myk8s-control-plane

aca940217b86f add78c37da6e2 5 hours ago Running kube-apiserver 0 d8f7fa33b18e5 kube-apiserver-myk8s-control-plane

4df2fd30169ab 8377f1e14db4c 5 hours ago Running kube-scheduler 0 635850d6b40c6 kube-scheduler-myk8s-control-plane

# Check the pod images

root@myk8s-control-plane:/# crictl images

IMAGE TAG IMAGE ID SIZE

docker.io/kindest/kindnetd v20240813-c6f155d6 6a23fa8fd2b78 33.3MB

docker.io/kindest/local-path-helper v20230510-486859a6 d022557af8b63 2.92MB

docker.io/kindest/local-path-provisioner v20240813-c6f155d6 282f619d10d4d 17.4MB

registry.k8s.io/coredns/coredns v1.11.1 2437cf7621777 16.5MB

registry.k8s.io/etcd 3.5.15-0 27e3830e14027 66.5MB

registry.k8s.io/kube-apiserver-arm64 v1.31.0 add78c37da6e2 92.6MB

registry.k8s.io/kube-apiserver v1.31.0 add78c37da6e2 92.6MB

registry.k8s.io/kube-controller-manager-arm64 v1.31.0 c50d473e11f63 86.9MB

registry.k8s.io/kube-controller-manager v1.31.0 c50d473e11f63 86.9MB

registry.k8s.io/kube-proxy-arm64 v1.31.0 c573e1357a14e 95.9MB

registry.k8s.io/kube-proxy v1.31.0 c573e1357a14e 95.9MB

registry.k8s.io/kube-scheduler-arm64 v1.31.0 8377f1e14db4c 67MB

registry.k8s.io/kube-scheduler v1.31.0 8377f1e14db4c 67MB

registry.k8s.io/pause 3.10 afb61768ce381 268kB

# Check kubectl

root@myk8s-control-plane:/# kubectl get node -v6

I0907 12:45:31.869157 5664 loader.go:395] Config loaded from file: /etc/kubernetes/admin.conf

I0907 12:45:31.882545 5664 round_trippers.go:553] GET https://myk8s-control-plane:6443/api/v1/nodes?limit=500 200 OK in 8 milliseconds

NAME STATUS ROLES AGE VERSION

myk8s-control-plane Ready control-plane 5h20m v1.31.0

myk8s-worker Ready <none> 5h19m v1.31.0

root@myk8s-control-plane:/# cat /etc/kubernetes/admin.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJYWZ...

0K

server: https://myk8s-control-plane:6443

name: myk8s

contexts:

- context:

cluster: myk8s

user: kubernetes-admin

name: kubernetes-admin@myk8s

current-context: kubernetes-admin@myk8s

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURLVENDQWhHZ0F3SUJBZ0lJWnl...

lzek5lUWJ4ZmI4Y3dYR2xua2lwZncrCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBdGd

...

SU0EgUFJJVkFURSBLRVktLS0tLQo=

root@myk8s-control-plane:/# exit

exit

# Check the Docker containers again from your own host PC: the DinD containers (the Kubernetes pods) are not visible from the host

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c043e18c6b86 kindest/node:v1.31.0 "/usr/local/bin/entr…" 5 hours ago Up 5 hours 127.0.0.1:56534->6443/tcp myk8s-control-plane

11c1b7d67e2f kindest/node:v1.31.0 "/usr/local/bin/entr…" 5 hours ago Up 5 hours myk8s-worker

❯ docker port myk8s-control-plane

6443/tcp -> 127.0.0.1:56534
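Inside the node, admin.conf points at `https://myk8s-control-plane:6443`. From the host, the same API server is reached through the port that Docker publishes on 127.0.0.1 (56534 here), and that is the address `kubectl get node -v6` shows next. The following is a tiny illustration of that mapping. `api_url_from_docker_port` is a hypothetical helper that parses one line of `docker port` output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn the 6443/tcp line of `docker port <control-plane>`
# into the API server URL that a host-side kubeconfig would use.
api_url_from_docker_port() {
  local line=$1
  [[ $line == "6443/tcp -> "* ]] || return 1   # only the API server port
  echo "https://${line#*"-> "}"
}

api_url_from_docker_port "6443/tcp -> 127.0.0.1:56534"
```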

# Check kubectl: see which address the k8s API calls go to

❯ kubectl get node -v6

I0907 21:46:35.553893 27176 loader.go:395] Config loaded from file: /Users/sjkim/.kube/config

I0907 21:46:35.578673 27176 round_trippers.go:553] GET https://127.0.0.1:56534/api/v1/nodes?limit=500 200 OK in 16 milliseconds

NAME STATUS ROLES AGE VERSION

myk8s-control-plane Ready control-plane 5h21m v1.31.0

myk8s-worker Ready <none> 5h21m v1.31.0

- Delete the cluster

❯ kind delete cluster --name myk8s

Deleting cluster "myk8s" ...

Deleted nodes: ["myk8s-control-plane" "myk8s-worker"]

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

- Multi-Node Cluster (Control-plane, Nodes)

# Deploy a cluster with one control plane and one worker node, with port mappings for reaching pods from the host

❯ cat <<EOT> kind-2node.yaml
# two-node (one worker) cluster config
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
- role: worker
  extraPortMappings:
  - containerPort: 31000
    hostPort: 31000
    listenAddress: "0.0.0.0" # Optional, defaults to "0.0.0.0"
    protocol: tcp # Optional, defaults to tcp
  - containerPort: 31001
    hostPort: 31001
EOT

❯ CLUSTERNAME=myk8s

❯ kind create cluster --config kind-2node.yaml --name $CLUSTERNAME

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

# Verify the deployment

❯ kind get clusters

myk8s

❯ kind get nodes --name $CLUSTERNAME

myk8s-control-plane

myk8s-worker

# Check the nodes

❯ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

myk8s-control-plane Ready control-plane 107s v1.31.0 172.17.0.2 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

myk8s-worker Ready <none> 91s v1.31.0 172.17.0.3 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

# Check the node taints

❯ kubectl describe node $CLUSTERNAME-control-plane | grep Taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

❯ kubectl describe node $CLUSTERNAME-worker | grep Taints

Taints: <none>

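# Check the containers: how many there are and their names

# As set in the kind YAML port mappings, connecting to port 31000 on your host PC forwards to TCP 31000 on the worker node (actually a container)

# In other words, a NodePort of TCP 31000 on the worker node is reachable from your host PC!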

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

996b61b5731a kindest/node:v1.31.0 "/usr/local/bin/entr…" 4 minutes ago Up 4 minutes 127.0.0.1:58755->6443/tcp myk8s-control-plane

f883a97d444f kindest/node:v1.31.0 "/usr/local/bin/entr…" 4 minutes ago Up 4 minutes 0.0.0.0:31000-31001->31000-31001/tcp myk8s-worker

❯ docker port $CLUSTERNAME-worker

31000/tcp -> 0.0.0.0:31000

31001/tcp -> 0.0.0.0:31001
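In this setup, a NodePort Service is reachable from the host only if two conditions hold. Its port must be one the worker container publishes (31000 and 31001 above), and it must lie inside the API server's NodePort range, which is 30000-32767 by default (`--service-node-port-range`). The sketch below checks both conditions. It assumes hostPort equals containerPort equals nodePort, as in kind-2node.yaml. `reachable_from_host` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Default NodePort range of kube-apiserver (--service-node-port-range).
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# Ports published by the worker container (from `docker port` above).
MAPPED_PORTS=(31000 31001)

# Succeed only if $1 is a valid NodePort that kind forwards from the host.
reachable_from_host() {
  local port=$1 p
  (( port >= NODEPORT_MIN && port <= NODEPORT_MAX )) || return 1
  for p in "${MAPPED_PORTS[@]}"; do
    [[ $p == "$port" ]] && return 0
  done
  return 1
}

for port in 31000 31001 31002 8080; do
  if reachable_from_host "$port"; then
    echo "$port: reachable via localhost:$port"
  else
    echo "$port: not mapped or outside the NodePort range"
  fi
done
```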

# Inspect inside the containers: if needed, you can also open a bash shell on each node (container)

❯ docker exec -it $CLUSTERNAME-control-plane ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

vethc1cd7461@if4 UP 10.244.0.1/32

vethe4b964b4@if4 UP 10.244.0.1/32

veth32884c20@if4 UP 10.244.0.1/32

eth0@if34 UP 172.17.0.2/16

❯ docker exec -it $CLUSTERNAME-worker ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

eth0@if36 UP 172.17.0.3/16

- Mapping ports to the host machine

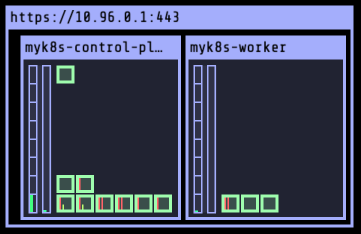

- kube-ops-view : NodePort 31000

# kube-ops-view

# helm show values geek-cookbook/kube-ops-view

❯ helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

"geek-cookbook" already exists with the same configuration, skipping

❯ helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=31000 --set env.TZ="Asia/Seoul" --namespace kube-system

NAME: kube-ops-view

LAST DEPLOYED: Sat Sep 7 22:33:02 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace kube-system -o jsonpath="{.spec.ports[0].nodePort}" services kube-ops-view)

export NODE_IP=$(kubectl get nodes --namespace kube-system -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

# Verify the installation

❯ kubectl get deploy,pod,svc,ep -n kube-system -l app.kubernetes.io/instance=kube-ops-view

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/kube-ops-view 1/1 1 1 2m7s

NAME READY STATUS RESTARTS AGE

pod/kube-ops-view-657dbc6cd8-59gx4 1/1 Running 0 2m7s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-ops-view NodePort 10.96.139.124 <none> 8080:31000/TCP 2m7s

NAME ENDPOINTS AGE

endpoints/kube-ops-view 10.244.1.2:8080 2m7s

# kube-ops-view URLs (1.5x and 2x scale)

echo -e "KUBE-OPS-VIEW URL = http://localhost:31000/#scale=1.5"

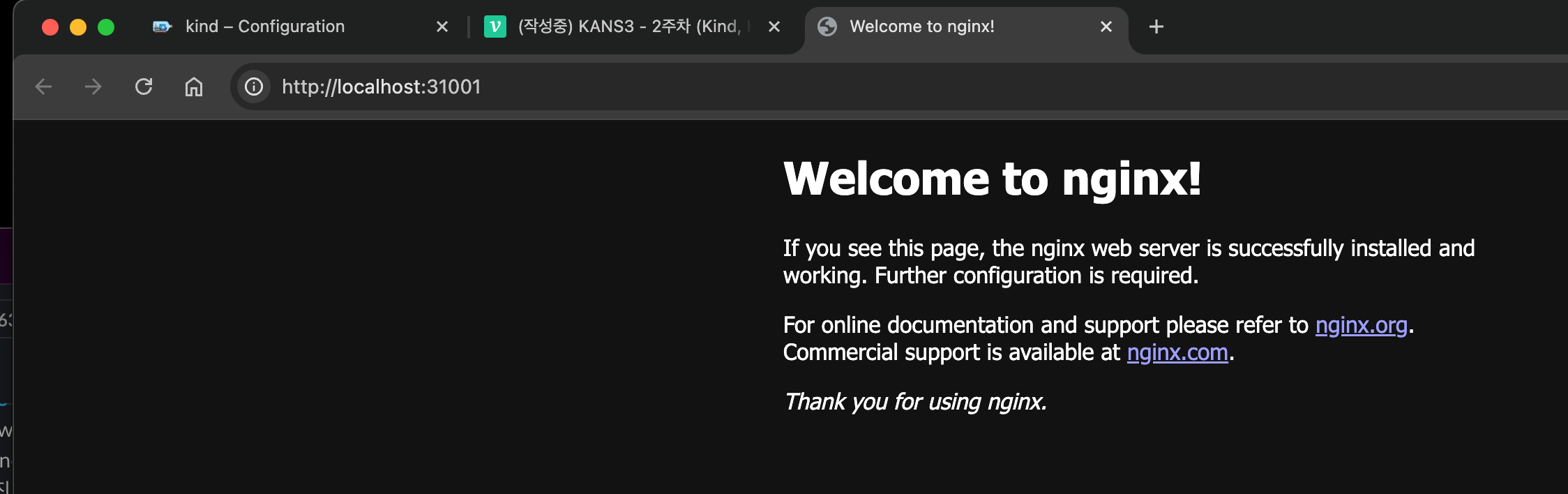

echo -e "KUBE-OPS-VIEW URL = http://localhost:31000/#scale=2"

- nginx: NodePort 31001

# Deploy a Deployment and a Service

❯ cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-websrv
spec:
  replicas: 2
  selector:
    matchLabels:
      app: deploy-websrv
  template:
    metadata:
      labels:
        app: deploy-websrv
    spec:
      terminationGracePeriodSeconds: 0
      containers:
      - name: deploy-websrv
        image: nginx:alpine
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: deploy-websrv
spec:
  ports:
    - name: svc-webport
      port: 80
      targetPort: 80
      nodePort: 31001
  selector:
    app: deploy-websrv
  type: NodePort
EOF

deployment.apps/deploy-websrv created

service/deploy-websrv created

# Verify

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

996b61b5731a kindest/node:v1.31.0 "/usr/local/bin/entr…" 18 minutes ago Up 18 minutes 127.0.0.1:58755->6443/tcp myk8s-control-plane

f883a97d444f kindest/node:v1.31.0 "/usr/local/bin/entr…" 18 minutes ago Up 18 minutes 0.0.0.0:31000-31001->31000-31001/tcp myk8s-worker

❯ kubectl get deploy,svc,ep deploy-websrv

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-websrv 2/2 2 2 91s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/deploy-websrv NodePort 10.96.254.67 <none> 80:31001/TCP 91s

NAME ENDPOINTS AGE

endpoints/deploy-websrv 10.244.1.3:80,10.244.1.4:80 91s

# Confirm that host port 31001 on your PC reaches the Kubernetes Service

❯ open http://localhost:31001

❯ curl -s localhost:31001 | grep -o "<title>.*</title>"

<title>Welcome to nginx!</title>

# Delete the Deployment and the Service

❯ kubectl delete deploy,svc deploy-websrv

deployment.apps "deploy-websrv" deleted

service "deploy-websrv" deleted

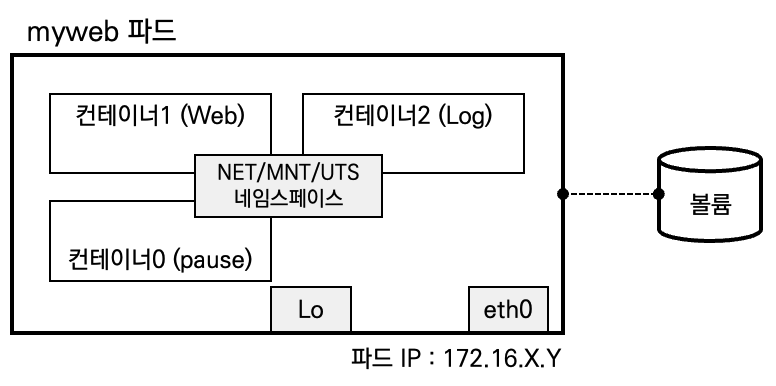

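3. Pods & PAUSE Container

Pod

- A Pod is the smallest deployable unit of computing that you can create and manage in Kubernetes.

- A Pod (as in a pod of whales or a pea pod) is a group of one or more containers with shared storage and network resources, plus a specification for how to run those containers. A Pod's contents are always co-located and co-scheduled, and they run in a shared context. A Pod models an application-specific "logical host": it contains one or more application containers that are relatively tightly coupled. In non-cloud contexts, applications running on the same physical or virtual machine are analogous to cloud applications running on the same logical host.

- In addition to application containers, a Pod can contain init containers that run during Pod startup. If your cluster supports it, you can also inject ephemeral containers for debugging.

What is a Pod?

- The shared context of a Pod is a set of Linux namespaces, cgroups, and potentially other facets of isolation, the same things that isolate a container. Within a Pod's context, the individual applications may have further sub-isolations applied.

- A Pod is similar to a set of containers with shared namespaces and shared filesystem volumes.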

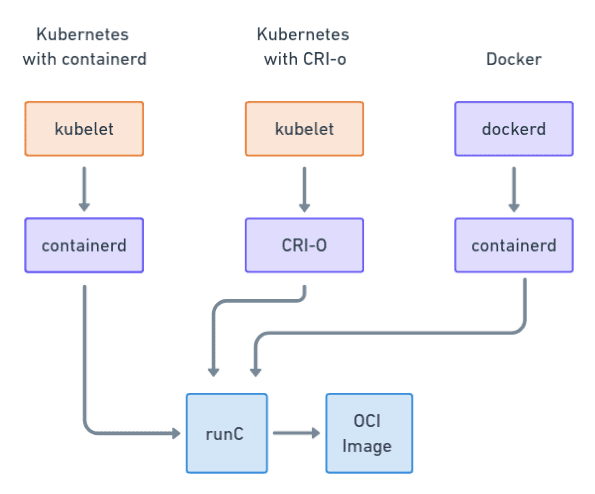

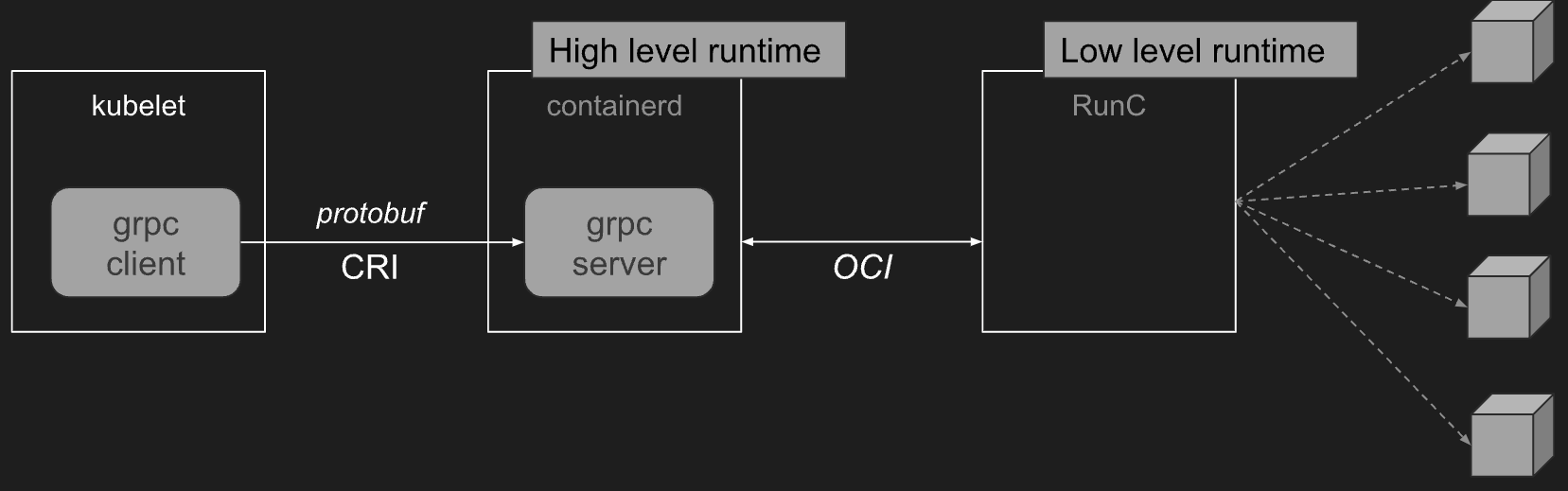

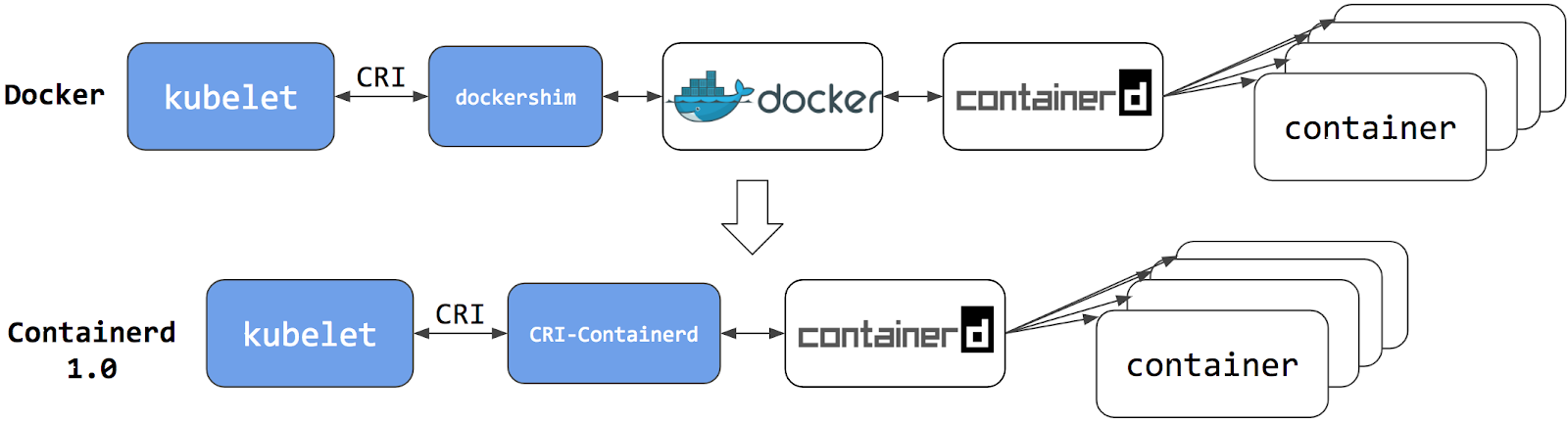

Container Runtime Interface

- Container Runtime : kubelet → CRI → High Level Runtime (containerd) ← OCI → Low Level Runtime (Runc)

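- How CRI has evolved

PAUSE container

- The PAUSE container creates and holds the Network/IPC/UTS namespaces and shares them with the Pod's other containers.

- A Pod can have one or more containers ⇒ e.g., the sidecar pattern

- Containers in a Pod are always assigned to the same node and share the same lifecycle → deleting a Pod deletes every container in it

- Pod IP - each Pod gets an IP that is separate from the node IP and reachable within the cluster; Pods on other nodes can reach it by its Pod IP without NAT ⇒ this is what the CNI provides!

- IP sharing - containers in a Pod share one IP; they reach each other over localhost and are told apart by port

- The pause container acts as the 'parent' that creates the network namespace, and the containers inside share that net ns ← the most widely used container in the world!

- In Kubernetes, the pause container serves as the "parent container" for all containers in a Pod - Link

- The pause container has two core responsibilities:

- First, it serves as the basis for Linux namespace sharing in the Pod.

- Second, when PID (process ID) namespace sharing is enabled, it acts as PID 1 for each Pod and reaps zombie processes.

- Volume sharing - containers in a Pod can mount the same volumes, so they can exchange files through the filesystem

- A Pod is a group of application containers running in an isolated environment with resource constraints. In CRI, this environment is called a PodSandbox.

- Before starting a Pod, the kubelet calls RuntimeService.RunPodSandbox to create that environment (including Pod networking setup, i.e. IP allocation).

- The kubelet manages container lifecycles over RPC, runs container lifecycle hooks and liveness/readiness probes, and enforces the Pod's restart policy.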
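The namespace sharing described above can be observed through `/proc/<pid>/ns/*`. Two processes are in the same namespace exactly when those links point to the same `type:[inode]` identifier. The following is a hedged, Linux-only sketch. On a kind node, you would compare the host PIDs of a Pod's pause process and one of its app containers. Here a shell and its forked subshell stand in for them. `ns_id` and `same_ns` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Print a process's namespace identifier, e.g. "net:[4026531840]".
ns_id() {
  readlink "/proc/$1/ns/$2"
}

# Succeed if PIDs $2 and $3 share the namespace of type $1
# (net, ipc, uts, pid, ...). Fail if either link cannot be read.
same_ns() {
  local kind=$1 x y
  x=$(ns_id "$2" "$kind") && y=$(ns_id "$3" "$kind") && [[ -n $x && $x == "$y" ]]
}

# Stand-ins: the top-level shell ($$) plays the pause process, and the forked
# subshell below ($BASHPID) plays an app container started in its namespaces.
(
  for kind in net ipc uts; do
    if same_ns "$kind" "$$" "$BASHPID"; then
      echo "$kind: shared $(ns_id "$$" "$kind")"
    else
      echo "$kind: separate"
    fi
  done
)
```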

4. Flannel CNI

Flannel

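Flannel is a project started by CoreOS and is an easy way to set up a layer 3 overlay network designed for Kubernetes. Packets are forwarded using VXLAN or one of several other backend mechanisms.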

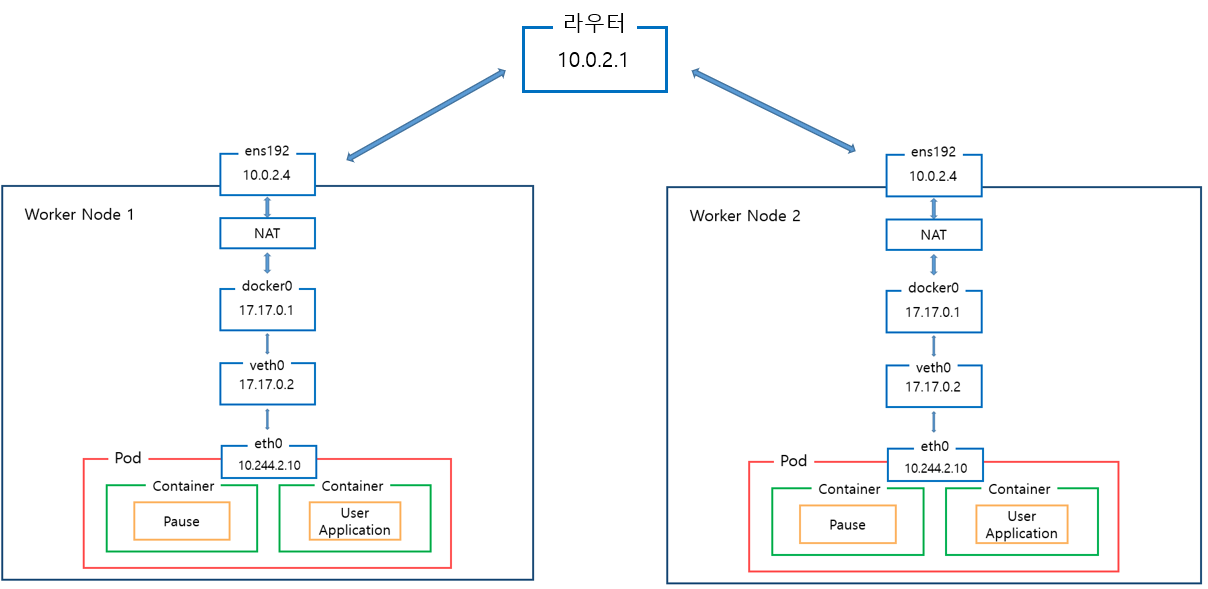

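Why Flannel is needed

With the default Kubernetes network structure, when two Worker Nodes are created as in the figure below, the Pods on each node receive IPs from the same range. A Pod on Worker Node 1 and a Pod on Worker Node 2 can therefore end up with the same IP, and they cannot communicate with each other.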

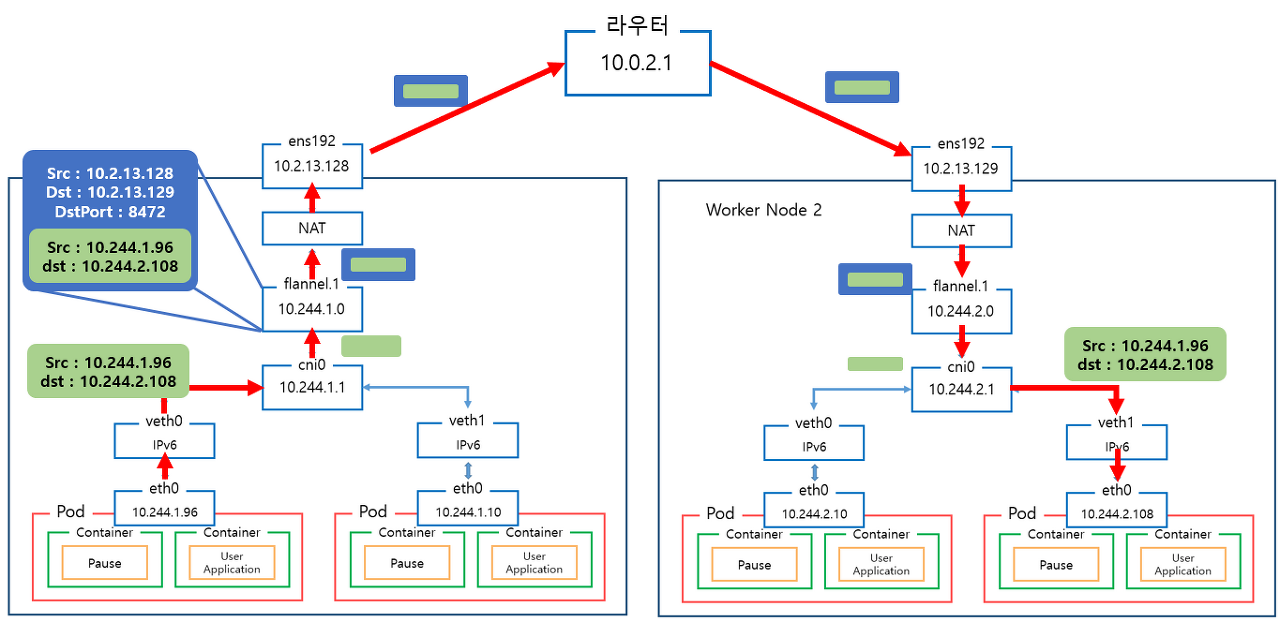

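With Flannel, as in the figure below, each Worker Node gets its own Pod subnet. Pod IPs no longer collide across nodes, so Pod-to-Pod traffic can be routed between nodes.

- On each node, Flannel creates flannel.1, which acts as the VXLAN VTEP, and cni0, which acts as the bridge.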
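Because flannel.1 wraps every pod frame in VXLAN, its MTU has to be smaller than the node's. The worked sketch below computes the usual 50-byte overhead on an IPv4 underlay: inner Ethernet 14 + VXLAN 8 + UDP 8 + outer IPv4 20. The outer Ethernet header does not count against the MTU. The sketch assumes a 1500-byte node MTU. Flannel derives the real value from the node's interface, so the actual number may differ on other underlays.

```bash
#!/usr/bin/env bash
# Bytes that VXLAN adds inside the node's interface MTU on an IPv4 underlay.
INNER_ETH=14   # the pod's own Ethernet header, carried as VXLAN payload
VXLAN_HDR=8    # VXLAN header
UDP_HDR=8      # outer UDP header
OUTER_IPV4=20  # outer IPv4 header (no options)

# MTU left for pod traffic on flannel.1, given the node's MTU.
vxlan_mtu() {
  echo $(( $1 - INNER_ETH - VXLAN_HDR - UDP_HDR - OUTER_IPV4 ))
}

echo "VXLAN overhead: $(( INNER_ETH + VXLAN_HDR + UDP_HDR + OUTER_IPV4 )) bytes"
echo "flannel.1 MTU on a 1500-byte node MTU: $(vxlan_mtu 1500)"
```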

Flannel installation lab

# kind manifest

❯ cat <<EOF> kind-cni.yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
- role: control-plane
  labels:
    mynode: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    controllerManager:
      extraArgs:
        bind-address: 0.0.0.0
    etcd:
      local:
        extraArgs:
          listen-metrics-urls: http://0.0.0.0:2381
    scheduler:
      extraArgs:
        bind-address: 0.0.0.0
  - |
    kind: KubeProxyConfiguration
    metricsBindAddress: 0.0.0.0
- role: worker
  labels:
    mynode: worker
- role: worker
  labels:
    mynode: worker2
networking:
  disableDefaultCNI: true
EOF

❯ kind create cluster --config kind-cni.yaml --name myk8s --image kindest/node:v1.30.4

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.30.4) 🖼

✓ Preparing nodes 📦 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Not sure what to do next? 😅 Check out https://kind.sigs.k8s.io/docs/user/quick-start/

# Verify the deployment

❯ kind get clusters

myk8s

❯ kind get nodes --name myk8s

myk8s-worker2

myk8s-control-plane

myk8s-worker

❯ kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:60071

CoreDNS is running at https://127.0.0.1:60071/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Check the network settings

❯ kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

"--service-cluster-ip-range=10.96.0.0/16",

"--cluster-cidr=10.244.0.0/16",
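`--cluster-cidr=10.244.0.0/16` is the pool from which kube-controller-manager gives each node its own PodCIDR. For IPv4 this is a /24 per node by default, set by `--node-cidr-mask-size`, which is consistent with the 10.244.1.0/24 worker route seen earlier. Flannel then carries each node's subnet over VXLAN. The sketch below enumerates those subnets, assuming the default /24 mask. The controller decides which node receives which index. `node_subnet` and `node_count` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Dotted-quad <-> 32-bit integer helpers.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Number of /node_len subnets that fit in a cluster CIDR.
# Usage: node_count <cluster-cidr> <node-mask-len>
node_count() {
  echo $(( 1 << ($2 - ${1#*/}) ))
}

# The i-th (0-based) /node_len subnet of the cluster CIDR (no bounds check).
# Usage: node_subnet <cluster-cidr> <node-mask-len> <index>
node_subnet() {
  local base=${1%/*} len=$2 i=$3 start
  start=$(( $(ip2int "$base") + (i << (32 - len)) ))
  echo "$(int2ip "$start")/$len"
}

CLUSTER_CIDR=10.244.0.0/16
echo "node subnets available: $(node_count "$CLUSTER_CIDR" 24)"
for i in 0 1 2; do
  echo "subnet $i: $(node_subnet "$CLUSTER_CIDR" 24 "$i")"
done
```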

# Check the nodes: CRI

❯ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

myk8s-control-plane NotReady control-plane 4m35s v1.30.4 172.17.0.3 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

myk8s-worker NotReady <none> 4m9s v1.30.4 172.17.0.4 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

myk8s-worker2 NotReady <none> 4m9s v1.30.4 172.17.0.2 <none> Debian GNU/Linux 12 (bookworm) 6.10.7-orbstack-00280-gd3b7ec68d3d4 containerd://1.7.18

# Check the node labels

❯ kubectl get nodes myk8s-control-plane -o jsonpath={.metadata.labels} | jq

{

"beta.kubernetes.io/arch": "arm64",

"beta.kubernetes.io/os": "linux",

"kubernetes.io/arch": "arm64",

"kubernetes.io/hostname": "myk8s-control-plane",

"kubernetes.io/os": "linux",

"mynode": "control-plane",

"node-role.kubernetes.io/control-plane": "",

"node.kubernetes.io/exclude-from-external-load-balancers": ""

}

❯ kubectl get nodes myk8s-worker -o jsonpath={.metadata.labels} | jq

{

"beta.kubernetes.io/arch": "arm64",

"beta.kubernetes.io/os": "linux",

"kubernetes.io/arch": "arm64",

"kubernetes.io/hostname": "myk8s-worker",

"kubernetes.io/os": "linux",

"mynode": "worker"

}

❯ kubectl get nodes myk8s-worker2 -o jsonpath={.metadata.labels} | jq

{

"beta.kubernetes.io/arch": "arm64",

"beta.kubernetes.io/os": "linux",

"kubernetes.io/arch": "arm64",

"kubernetes.io/hostname": "myk8s-worker2",

"kubernetes.io/os": "linux",

"mynode": "worker2"

}

# Check the containers: how many there are and their names

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f59b6f3e664a kindest/node:v1.30.4 "/usr/local/bin/entr…" 7 minutes ago Up 7 minutes myk8s-worker2

dafd80a081ca kindest/node:v1.30.4 "/usr/local/bin/entr…" 7 minutes ago Up 7 minutes 0.0.0.0:30000-30002->30000-30002/tcp, 127.0.0.1:60071->6443/tcp myk8s-control-plane

47e3a4ab04e0 kindest/node:v1.30.4 "/usr/local/bin/entr…" 7 minutes ago Up 7 minutes myk8s-worker

❯ docker port myk8s-control-plane

6443/tcp -> 127.0.0.1:60071

30000/tcp -> 0.0.0.0:30000

30001/tcp -> 0.0.0.0:30001

30002/tcp -> 0.0.0.0:30002

❯ docker port myk8s-worker

❯ docker port myk8s-worker2

# Inspect inside the containers

❯ docker exec -it myk8s-control-plane ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

eth0@if47 UP 172.17.0.3/16

❯ docker exec -it myk8s-worker ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

eth0@if49 UP 172.17.0.4/16

❯ docker exec -it myk8s-worker2 ip -br -c -4 addr

lo UNKNOWN 127.0.0.1/8

eth0@if45 UP 172.17.0.2/16

# Install tools on each node

❯ docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree jq psmisc lsof wget bridge-utils tcpdump iputils-ping htop git nano -y'

❯ docker exec -it myk8s-worker sh -c 'apt update && apt install tree jq psmisc lsof wget bridge-utils tcpdump iputils-ping -y'

❯ docker exec -it myk8s-worker2 sh -c 'apt update && apt install tree jq psmisc lsof wget bridge-utils tcpdump iputils-ping -y'

- Build the bridge binary and copy it locally

# Watch pod status across all namespaces

❯ watch -d kubectl get pod -A -owide

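# CoreDNS stays Pending: with no CNI installed, every node carries the node.kubernetes.io/not-ready taint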

❯ kubectl describe pod -n kube-system -l k8s-app=kube-dns

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 18m default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.

Warning FailedScheduling 2m41s (x3 over 13m) default-scheduler 0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.

# Install the Flannel CNI

❯ kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

# Check the pod-security.kubernetes.io/enforce=privileged label on the namespace

❯ kubectl get ns --show-labels

NAME STATUS AGE LABELS

app=flannel,kubernetes.io/metadata.name=kube-flannel,pod-security.kubernetes.io/enforce=privileged

❯ kubectl get ds,pod,cm -n kube-flannel

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-flannel-ds 3 3 3 3 3 <none> 4m54s

NAME READY STATUS RESTARTS AGE

pod/kube-flannel-ds-cf6n4 1/1 Running 0 4m53s

pod/kube-flannel-ds-hlrnh 1/1 Running 0 4m53s

pod/kube-flannel-ds-wwcq2 1/1 Running 0 4m53s

NAME DATA AGE

configmap/kube-flannel-cfg 2 4m54s

configmap/kube-root-ca.crt 1 4m54s

❯ kubectl describe cm -n kube-flannel kube-flannel-cfg

Name: kube-flannel-cfg

Namespace: kube-flannel

Labels: app=flannel

k8s-app=flannel

tier=node

Annotations: <none>

Data

====

cni-conf.json:

----

{

"name": "cbr0",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json:

----

{

"Network": "10.244.0.0/16",

"EnableNFTables": false,

"Backend": {

"Type": "vxlan"

}

}

BinaryData

====

Events: <none>

❯ kubectl describe ds -n kube-flannel kube-flannel-ds

Name: kube-flannel-ds

Selector: app=flannel

Node-Selector: <none>

Labels: app=flannel

k8s-app=flannel

tier=node

Annotations: deprecated.daemonset.template.generation: 1

Desired Number of Nodes Scheduled: 3

Current Number of Nodes Scheduled: 3

Number of Nodes Scheduled with Up-to-date Pods: 3
Number of Nodes Scheduled with Available Pods: 3
Number of Nodes Misscheduled: 0
Pods Status:  3 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:           app=flannel
                    tier=node
  Service Account:  flannel
  Init Containers:
   install-cni-plugin:
    Image:      docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel2
    Port:       <none>
    Host Port:  <none>
    Command:
      cp
    Args:
      -f
      /flannel
      /opt/cni/bin/flannel
    Environment:  <none>
    Mounts:
      /opt/cni/bin from cni-plugin (rw)
   install-cni:
    Image:      docker.io/flannel/flannel:v0.25.6
    Port:       <none>
    Host Port:  <none>
    Command:
      cp
    Args:
      -f
      /etc/kube-flannel/cni-conf.json
      /etc/cni/net.d/10-flannel.conflist
    Environment:  <none>
    Mounts:
      /etc/cni/net.d from cni (rw)
      /etc/kube-flannel/ from flannel-cfg (rw)
  Containers:
   kube-flannel:
    Image:      docker.io/flannel/flannel:v0.25.6
    Port:       <none>
    Host Port:  <none>
    Command:
      /opt/bin/flanneld
    Args:
      --ip-masq
      --kube-subnet-mgr
    Requests:
      cpu:     100m
      memory:  50Mi
    Environment:
      POD_NAME:           (v1:metadata.name)
      POD_NAMESPACE:      (v1:metadata.namespace)
      EVENT_QUEUE_DEPTH:  5000
    Mounts:
      /etc/kube-flannel/ from flannel-cfg (rw)
      /run/flannel from run (rw)
      /run/xtables.lock from xtables-lock (rw)
  Volumes:
   run:
    Type:          HostPath (bare host directory volume)
    Path:          /run/flannel
    HostPathType:
   cni-plugin:
    Type:          HostPath (bare host directory volume)
    Path:          /opt/cni/bin
    HostPathType:
   cni:
    Type:          HostPath (bare host directory volume)
    Path:          /etc/cni/net.d
    HostPathType:
   flannel-cfg:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      kube-flannel-cfg
    Optional:  false
   xtables-lock:
    Type:          HostPath (bare host directory volume)
    Path:          /run/xtables.lock
    HostPathType:  FileOrCreate
  Priority Class Name:  system-node-critical
  Node-Selectors:       <none>
  Tolerations:          :NoSchedule op=Exists
Events:
  Type    Reason            Age    From                  Message
  ----    ------            ----   ----                  -------
  Normal  SuccessfulCreate  7m35s  daemonset-controller  Created pod: kube-flannel-ds-hlrnh
  Normal  SuccessfulCreate  7m35s  daemonset-controller  Created pod: kube-flannel-ds-cf6n4
  Normal  SuccessfulCreate  7m35s  daemonset-controller  Created pod: kube-flannel-ds-wwcq2

❯ kubectl exec -it ds/kube-flannel-ds -n kube-flannel -c kube-flannel -- ls -l /etc/kube-flannel
total 8
lrwxrwxrwx 1 root root 20 Sep  7 16:04 cni-conf.json -> ..data/cni-conf.json
lrwxrwxrwx 1 root root 20 Sep  7 16:04 net-conf.json -> ..data/net-conf.json

# failed to find plugin "bridge" in path [/opt/cni/bin]

❯ kubectl get pod -A -owide
NAMESPACE            NAME                                          READY   STATUS              RESTARTS   AGE   IP           NODE                  NOMINATED NODE   READINESS GATES
kube-flannel         kube-flannel-ds-cf6n4                         1/1     Running             0          10m   172.17.0.4   myk8s-worker          <none>           <none>
kube-flannel         kube-flannel-ds-hlrnh                         1/1     Running             0          10m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-flannel         kube-flannel-ds-wwcq2                         1/1     Running             0          10m   172.17.0.2   myk8s-worker2         <none>           <none>
kube-system          coredns-7db6d8ff4d-qtnj6                      0/1     ContainerCreating   0          30m   <none>       myk8s-control-plane   <none>           <none>
kube-system          coredns-7db6d8ff4d-zvdhr                      0/1     ContainerCreating   0          30m   <none>       myk8s-control-plane   <none>           <none>
kube-system          etcd-myk8s-control-plane                      1/1     Running             0          30m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-apiserver-myk8s-control-plane            1/1     Running             0          30m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-controller-manager-myk8s-control-plane   1/1     Running             0          30m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-proxy-9fsn8                              1/1     Running             0          30m   172.17.0.2   myk8s-worker2         <none>           <none>
kube-system          kube-proxy-lrzbv                              1/1     Running             0          30m   172.17.0.4   myk8s-worker          <none>           <none>
kube-system          kube-proxy-sxvvt                              1/1     Running             0          30m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-scheduler-myk8s-control-plane            1/1     Running             0          30m   172.17.0.3   myk8s-control-plane   <none>           <none>
local-path-storage   local-path-provisioner-7d4d9bdcc5-tmpvk       0/1     ContainerCreating   0          30m   <none>       myk8s-control-plane   <none>           <none>

Type     Reason                  Age                  From               Message
----     ------                  ----                 ----               -------
Warning  FailedScheduling        30m                  default-scheduler  0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.
Warning  FailedScheduling        15m (x3 over 25m)    default-scheduler  0/3 nodes are available: 3 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/3 nodes are available: 3 Preemption is not helpful for scheduling.
Normal   Scheduled               10m                  default-scheduler  Successfully assigned kube-system/coredns-7db6d8ff4d-zvdhr to myk8s-control-plane
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "f478888696b89c7ebbd02e68d435d67740e7c05612173533c56e8d0168b96ee6": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "7c374bf0817b4979fddfa8adc76a1ba37fe769cc725b058489946aa07a1fdc84": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "bd362464a61801341b43f88296a0513e70907e0ab7decbf6e5a0d99856f1619a": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "14d2117b56f27ad1c1f0591d185361a2faaafc2e4a7d438adcfb3285aba1491d": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "4f097dafe49e26d143647d6cf71c05774b2aad52d977f0db4f5d2428da114c9f": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "2c3913a3dbe6a3ea3d7018dbf0ed749f122a8d11ec1e6f2d9da323745e9cbdb3": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "b2bfdc8b0c659e9e0af840caac3e9aec1b8251544fa8ded4d77d6be49b705d87": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "497c2d577d631178b90d7ffc602be9b6d3cddb542b9e08e6cc9b6b8441edff0b": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m                  kubelet            Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "6148c6019e7754cbe231d66a18518d65b999ba2c187c939974ea8271cf7a0511": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Warning  FailedCreatePodSandBox  10m (x4 over 10m)    kubelet            (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "971e91efe756380106a777b94dbec74e166e996b2ad4fee50e12596cce152c26": plugin type="flannel" failed (add): failed to delegate add: failed to find plugin "bridge" in path [/opt/cni/bin]
Normal   SandboxChanged          56s (x576 over 10m)  kubelet            Pod sandbox changed, it will be killed and re-created.
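The events narrow the cause down: the `flannel` CNI plugin itself runs (`plugin type="flannel"`), but its "delegate add" step fails because flannel hands the actual interface setup to the `bridge` plugin, and there is no `bridge` binary in `/opt/cni/bin` on the node. The DaemonSet's init containers only copy the `flannel` binary and the conflist, so the other plugins must come from the node image or be installed separately. Below is a minimal sketch, not part of the original walkthrough, that reports which plugin binaries are missing from a CNI bin directory. The helper name `check_cni_plugins` is hypothetical, and the plugin list (`flannel`, `bridge`, `host-local`, `portmap`) is an assumption based on flannel's default delegate (`bridge` with `host-local` IPAM) and its default conflist.

```shell
# Minimal sketch (hypothetical helper): report CNI plugin binaries that are
# missing or not executable in a CNI bin directory.
# Usage: check_cni_plugins DIR PLUGIN...
# Prints "missing: <plugin>" for each problem; returns 1 if anything is missing.
check_cni_plugins() {
  local bin_dir="$1"
  shift
  local missing=0 plugin
  for plugin in "$@"; do
    # The CNI runtime looks a plugin up by its "type" name in the bin dir,
    # so e.g. type "bridge" needs an executable file <bin_dir>/bridge.
    if [ ! -x "$bin_dir/$plugin" ]; then
      echo "missing: $plugin"
      missing=1
    fi
  done
  return "$missing"
}

# Assumed plugin set for flannel's default setup:
# check_cni_plugins /opt/cni/bin flannel bridge host-local portmap
```

To see what each kind node actually ships, `docker exec myk8s-worker ls /opt/cni/bin` lists the directory directly; the helper is mainly useful when the same check has to run from a script.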


❯ kubectl get pod -A -owide
NAMESPACE            NAME                                          READY   STATUS              RESTARTS   AGE   IP           NODE                  NOMINATED NODE   READINESS GATES
kube-flannel         kube-flannel-ds-cf6n4                         1/1     Running             0          12m   172.17.0.4   myk8s-worker          <none>           <none>
kube-flannel         kube-flannel-ds-hlrnh                         1/1     Running             0          12m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-flannel         kube-flannel-ds-wwcq2                         1/1     Running             0          12m   172.17.0.2   myk8s-worker2         <none>           <none>
kube-system          coredns-7db6d8ff4d-qtnj6                      0/1     ContainerCreating   0          31m   <none>       myk8s-control-plane   <none>           <none>
kube-system          coredns-7db6d8ff4d-zvdhr                      0/1     ContainerCreating   0          31m   <none>       myk8s-control-plane   <none>           <none>
kube-system          etcd-myk8s-control-plane                      1/1     Running             0          31m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-apiserver-myk8s-control-plane            1/1     Running             0          31m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-controller-manager-myk8s-control-plane   1/1     Running             0          31m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-proxy-9fsn8                              1/1     Running             0          31m   172.17.0.2   myk8s-worker2         <none>           <none>
kube-system          kube-proxy-lrzbv                              1/1     Running             0          31m   172.17.0.4   myk8s-worker          <none>           <none>
kube-system          kube-proxy-sxvvt                              1/1     Running             0          31m   172.17.0.3   myk8s-control-plane   <none>           <none>
kube-system          kube-scheduler-myk8s-control-plane            1/1     Running             0          31m   172.17.0.3   myk8s-control-plane   <none>           <none>
local-path-storage   local-path-provisioner-7d4d9bdcc5-tmpvk       0/1     ContainerCreating   0          31m   <none>       myk8s-control-plane   <none>           <none>
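Both listings show the same pattern: pods that run with `hostNetwork` (etcd, kube-apiserver, kube-proxy, the flannel DaemonSet) are Running with the node's own 172.17.0.x address, while pods that need a pod-network IP from the CNI (coredns, local-path-provisioner) stay in `ContainerCreating` with no IP. A small filter makes such pods easy to spot in a long listing. This is a sketch assuming the input is `kubectl get pod -A` output with its header line (STATUS is then the fourth column); the function name `not_running_pods` is hypothetical.

```shell
# Minimal sketch (hypothetical helper): read `kubectl get pod -A` output on
# stdin and print "namespace/name STATUS" for every pod that is not Running.
# Assumes the -A column layout (NAMESPACE NAME READY STATUS ...) and that the
# first line is the header, which NR > 1 skips.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" { print $1 "/" $2 " " $4 }'
}

# Usage against the cluster above:
# kubectl get pod -A -owide | not_running_pods
```

kubectl can also filter server-side with `kubectl get pod -A --field-selector=status.phase!=Running`, since a pod in `ContainerCreating` is still in the `Pending` phase; the awk version additionally keeps the human-readable STATUS reason.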

# Copy the bridge plugin binary to each node
❯ docker cp bridge myk8s-control-plane:/opt/cni/bin/bridge
Successfully copied 4.37MB to myk8s-control-plane:/opt/cni/bin/bridge
❯ docker cp bridge myk8s-worker:/opt/cni/bin/bridge
Successfully copied 4.37MB to myk8s-worker:/opt/cni/bin/bridge
❯ docker cp bridge myk8s-worker2:/opt/cni/bin/bridge
Successfully copied 4.37MB to myk8s-worker2:/opt/cni/bin/bridge
❯ docker exec -it myk8s-control-plane chmod 755 /opt/cni/bin/bridge
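Every node needs the plugin binary, because the kubelet on each node invokes the CNI plugins for the pods scheduled there, so the same `docker cp` plus `chmod 755` pair has to be repeated per node. A minimal loop sketch, assuming a `bridge` binary built for the node architecture (arm64 on this M1 setup) sits in the current directory and the three kind node names above; the helper name `install_cni_plugin` is hypothetical.

```shell
# Minimal sketch (hypothetical helper): copy one CNI plugin binary into
# /opt/cni/bin on each kind node container and make it executable.
# Usage: install_cni_plugin LOCAL_BINARY NODE...
# Stops and returns 1 at the first failing docker command.
install_cni_plugin() {
  local src="$1"
  shift
  local name node
  name="$(basename "$src")"
  for node in "$@"; do
    docker cp "$src" "$node:/opt/cni/bin/$name" || return 1
    # No -it: chmod needs no interactive terminal, so this also works in scripts
    docker exec "$node" chmod 755 "/opt/cni/bin/$name" || return 1
  done
}

# Assumes ./bridge matches the node architecture (arm64 on an M1 Mac):
# install_cni_plugin ./bridge myk8s-control-plane myk8s-worker myk8s-worker2
```

Files copied into the node containers this way are lost when the kind cluster is deleted and recreated, so the step has to be repeated for a new cluster.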