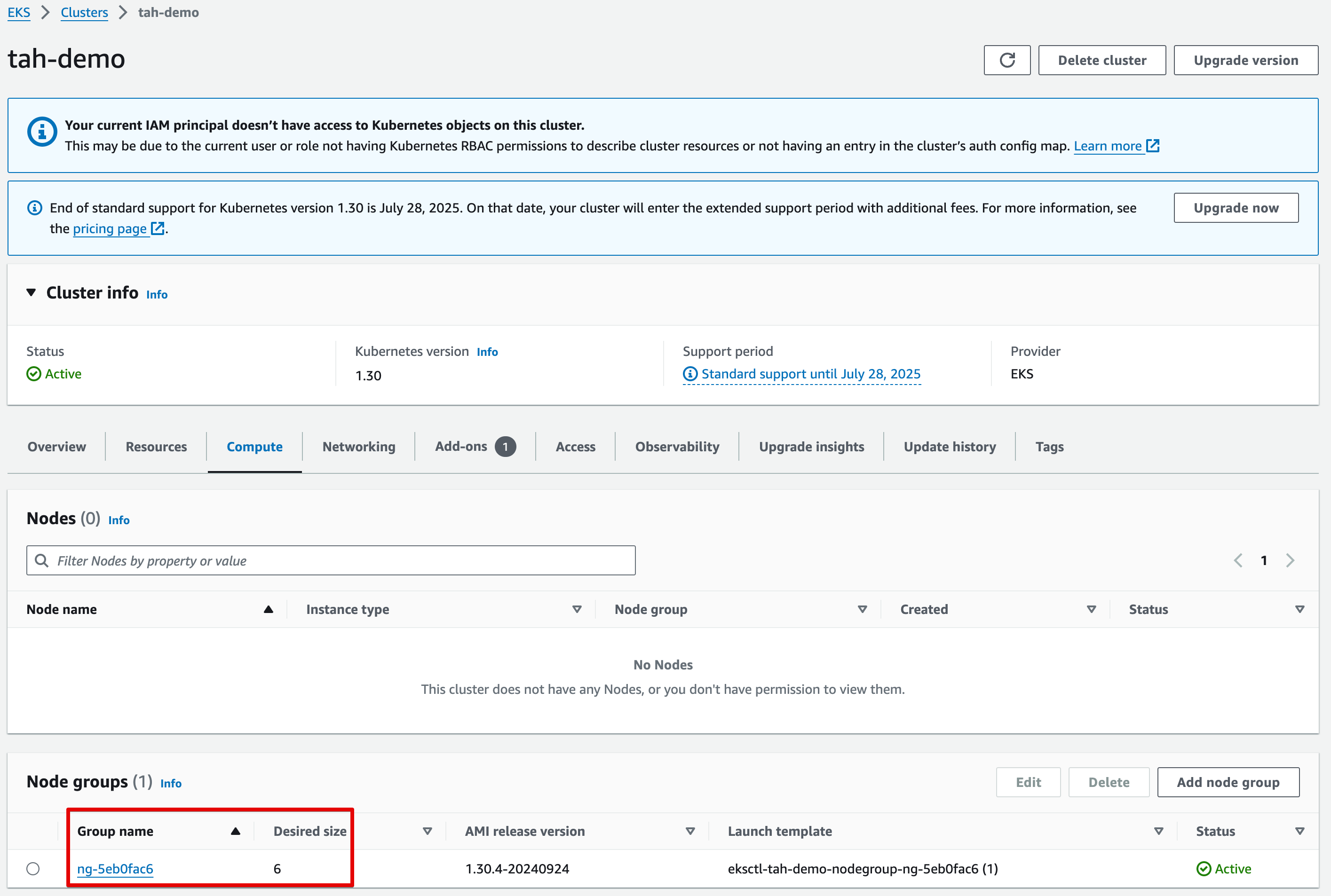

This post summarizes what I learned and practiced in the third cohort of KANS (Kubernetes Advanced Networking Study). Week 4 covered K8S Service: ClusterIP and NodePort. The post covers that material, plus Topology Aware Routing (formerly Topology Aware Hints), which reduces cross-AZ traffic costs in an EKS environment.

1. K8S Service

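- A Service is a Kubernetes object that lets clients inside or outside the cluster flexibly reach applications running in Kubernetes.

- It exposes an application running in the cluster behind a single outward-facing endpoint, even when the workload is split across multiple backends.

- It is an abstraction that exposes an application running on a set of Pods as a network service.

- With Kubernetes, you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives each Pod its own IP address and gives a set of Pods a single DNS name, and it can load-balance across them.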
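As a small illustration of the "single DNS name" point above, the in-cluster name of a Service normally follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below only builds that string; the helper name `svc_fqdn` is hypothetical, and it assumes the default cluster domain `cluster.local`.

```shell
# Build the in-cluster DNS name of a Service.
# Assumptions: svc_fqdn is a hypothetical helper, and the cluster domain is
# the default "cluster.local" unless a third argument overrides it.
svc_fqdn() {
  fqdn_name="$1"
  fqdn_namespace="${2:-default}"
  fqdn_domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$fqdn_name" "$fqdn_namespace" "$fqdn_domain"
}

# Example: the kube-dns Service in the kube-system namespace.
svc_fqdn kube-dns kube-system
```

Pods in the same namespace can usually use just the short Service name, because the resolver search path fills in the rest.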

ServiceTypes

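Some parts of an application (for example, a frontend) may need to expose a Service on an external IP address that is reachable from outside the cluster.

Kubernetes ServiceTypes let you specify what kind of Service you want.

The Type values and their behaviors are as follows.

- ClusterIP: Exposes the Service on a cluster-internal IP. With this value the Service is reachable only from within the cluster. This is the default when you do not explicitly set a type for the Service.

- NodePort: Exposes the Service on each node's IP at a static port (the NodePort). To make the node port available, Kubernetes sets up a cluster IP address, just as if you had requested a Service of type: ClusterIP.

- LoadBalancer: Exposes the Service externally using a cloud provider's load balancer.

- ExternalName: Maps the Service to the contents of the externalName field (for example, foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up.

Note:

To use the ExternalName type, you need kube-dns version 1.7 or CoreDNS version 0.0.8 or higher.

The type field is designed as nested functionality: each level adds to the previous one. This is not strictly required on every cloud provider (for example, Google Compute Engine does not need to allocate a node port for type: LoadBalancer to work, but other cloud provider integrations may). Although strict nesting is not required, the Kubernetes API design for Service assumes it regardless.

You can also expose a Service using Ingress. Ingress is not a Service type, but it acts as the entry point to the cluster. Because it can expose multiple Services under the same IP address, it lets you consolidate routing rules into a single resource.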
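To make the ClusterIP and NodePort types described above more concrete, here is a minimal sketch that writes one manifest of each type to a temporary directory. The Service names, the `app: webpod` selector, and the port numbers are illustrative assumptions and do not come from the study material. The value 30000 was chosen only because it sits inside the default NodePort range (30000-32767).

```shell
# Write two hypothetical Service manifests to a temporary directory.
# Assumptions: names, selector label, and ports are illustrative only.
workdir="$(mktemp -d)"

cat > "$workdir/svc-clusterip.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: svc-clusterip
spec:
  type: ClusterIP        # the default when type is omitted
  selector:
    app: webpod
  ports:
  - port: 9000           # port exposed on the cluster IP
    targetPort: 80       # container port on the backing Pods
EOF

cat > "$workdir/svc-nodeport.yaml" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: svc-nodeport
spec:
  type: NodePort         # a cluster IP is allocated as well
  selector:
    app: webpod
  ports:
  - port: 9000
    targetPort: 80
    nodePort: 30000      # must be inside the NodePort range (default 30000-32767)
EOF

# Show which type each manifest requests.
grep 'type:' "$workdir"/svc-*.yaml

# With a running cluster, the manifests could then be applied with:
#   kubectl apply -f "$workdir"
```

The two manifests differ only in `type` and `nodePort`, which mirrors the nesting idea: a NodePort Service is a ClusterIP Service with one more layer on top.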

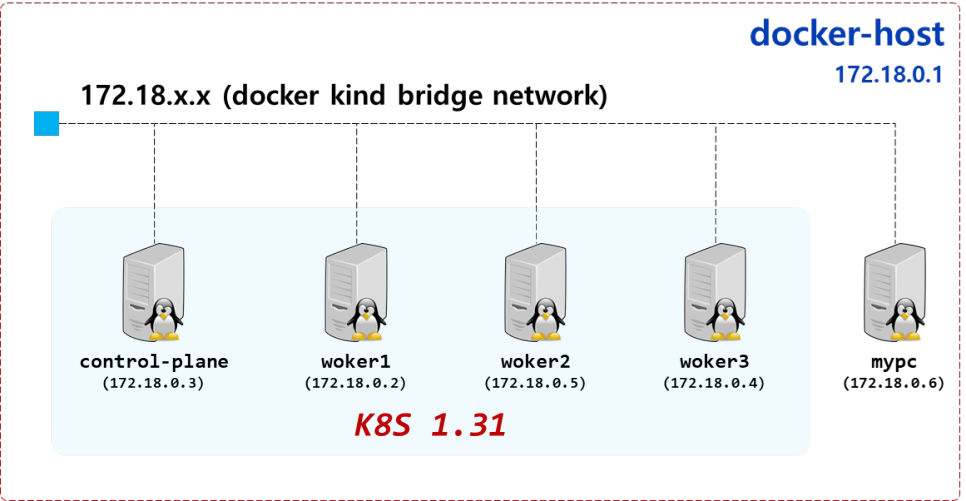

Installing K8S for the lab

Environment

- I will install kind using Vagrant on top of Docker on macOS.

- K8S v1.31.0, CNI (Kindnet, Direct Routing mode), IPTABLES proxy mode

- Node (actually container) network range: 172.18.0.0/16

- Pod network range: 10.10.0.0/16 ⇒ one /24 per node (10.10.0.0/24, 10.10.1.0/24, 10.10.2.0/24, 10.10.3.0/24 in the output further down)

- Service network range: 10.200.1.0/24

- K8S architecture diagram

- Docker-Host

- Check the Docker network environment

      ❯ docker network ls
      NETWORK ID     NAME      DRIVER    SCOPE
      8ac0d5c72eb0   bridge    bridge    local
      88153fefe41f   host      host      local
      69ae0c31875a   kind      bridge    local
      6e2fe0aabee3   none      null      local

      ❯ docker network inspect kind | grep -i -A2 subnet
                          "Subnet": "172.18.0.0/16",
                          "Gateway": "172.18.0.1"
                      },
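The pod range listed in the environment is handed out to nodes as one /24 block each. The sketch below shows only the arithmetic, assuming consecutive /24 blocks are carved from 10.10.0.0/16; the helper name `node_pod_cidr` is hypothetical, and which node actually receives which block is decided by the cluster, so the order need not follow the node names.

```shell
# Print the n-th /24 block (0-based) carved out of a /16 pod range.
# Assumptions: node_pod_cidr is a hypothetical helper, and the base is
# passed as its first two octets, e.g. "10.10" for 10.10.0.0/16.
node_pod_cidr() {
  cidr_base="$1"
  cidr_index="$2"
  # A /16 contains exactly 256 /24 blocks, indexed 0..255.
  if [ "$cidr_index" -lt 0 ] || [ "$cidr_index" -gt 255 ]; then
    echo "index out of range: $cidr_index" >&2
    return 1
  fi
  printf '%s.%s.0/24\n' "$cidr_base" "$cidr_index"
}

# Four nodes (1 control-plane + 3 workers) need four /24 blocks.
for n in 0 1 2 3; do
  node_pod_cidr 10.10 "$n"
done
```

Each /24 block leaves room for roughly 250 pod addresses per node, and the /16 range could serve up to 256 nodes at this block size.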

Installation

- Kind manifest for installing k8s

      ❯ cat <<EOF > kind-svc.yaml
      kind: Cluster
      apiVersion: kind.x-k8s.io/v1alpha4
      featureGates:
        "InPlacePodVerticalScaling": true
        #"MultiCIDRServiceAllocator": true
      nodes:
      - role: control-plane
        labels:
          mynode: control-plane
        extraPortMappings:
        - containerPort: 30000
          hostPort: 30000
        - containerPort: 30001
          hostPort: 30001
        - containerPort: 30002
          hostPort: 30002
      - role: worker
        labels:
          mynode: worker1
      - role: worker
        labels:
          mynode: worker2
      - role: worker
        labels:
          mynode: worker3
      networking:
        podSubnet: 10.10.0.0/16
        serviceSubnet: 10.200.1.0/24
      EOF

- Install the k8s cluster

      ❯ kind create cluster --config kind-svc.yaml --name myk8s --image kindest/node:v1.31.0
      Creating cluster "myk8s" ...
       ✓ Ensuring node image (kindest/node:v1.31.0) 🖼
       ✓ Preparing nodes 📦 📦 📦 📦
       ✓ Writing configuration 📜
       ✓ Starting control-plane 🕹️
       ✓ Installing CNI 🔌
       ✓ Installing StorageClass 💾
       ✓ Joining worker nodes 🚜
      Set kubectl context to "kind-myk8s"
      You can now use your cluster with:

      kubectl cluster-info --context kind-myk8s

      Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

      ❯ kind get nodes --name myk8s
      myk8s-worker3
      myk8s-control-plane
      myk8s-worker
      myk8s-worker2

      ❯ kubectl get nodes -o wide
      NAME                  STATUS   ROLES           AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                         KERNEL-VERSION    CONTAINER-RUNTIME
      myk8s-control-plane   Ready    control-plane   45s   v1.31.0   172.18.0.2    <none>        Debian GNU/Linux 12 (bookworm)   6.10.4-linuxkit   containerd://1.7.18
      myk8s-worker          Ready    <none>          34s   v1.31.0   172.18.0.5    <none>        Debian GNU/Linux 12 (bookworm)   6.10.4-linuxkit   containerd://1.7.18
      myk8s-worker2         Ready    <none>          34s   v1.31.0   172.18.0.3    <none>        Debian GNU/Linux 12 (bookworm)   6.10.4-linuxkit   containerd://1.7.18
      myk8s-worker3         Ready    <none>          34s   v1.31.0   172.18.0.4    <none>        Debian GNU/Linux 12 (bookworm)   6.10.4-linuxkit   containerd://1.7.18

      ❯ docker ps
      CONTAINER ID   IMAGE                  COMMAND                  CREATED              STATUS          PORTS                                                             NAMES
      8f8066ce74cd   kindest/node:v1.31.0   "/usr/local/bin/entr…"   About a minute ago   Up 58 seconds                                                                     myk8s-worker3
      a1310c7beb13   kindest/node:v1.31.0   "/usr/local/bin/entr…"   About a minute ago   Up 58 seconds   0.0.0.0:30000-30002->30000-30002/tcp, 127.0.0.1:60493->6443/tcp   myk8s-control-plane
      72cadc9cd7e1   kindest/node:v1.31.0   "/usr/local/bin/entr…"   About a minute ago   Up 58 seconds                                                                     myk8s-worker
      022767aae678   kindest/node:v1.31.0   "/usr/local/bin/entr…"   About a minute ago   Up 58 seconds                                                                     myk8s-worker2

- Install basic tools on the nodes

- Command

      docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'
      for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done

- Command output

      ❯ docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'
      for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done
      Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
      Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]
      ...
      >> node myk8s-worker <<
      Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
      ...
      >> node myk8s-worker2 <<
      Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
      ...
      >> node myk8s-worker3 <<
      Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
      ...
      Processing triggers for libc-bin (2.36-9+deb12u7) ...

      What's next:
          Try Docker Debug for seamless, persistent debugging tools in any container or image → docker debug myk8s-worker3
          Learn more at https://docs.docker.com/go/debug-cli/

- Verify the installation

      # Check the k8s version (v1.31.0)
      ❯ kubectl get nodes
      NAME                  STATUS   ROLES           AGE   VERSION
      myk8s-control-plane   Ready    control-plane   17m   v1.31.0
      myk8s-worker          Ready    <none>          16m   v1.31.0
      myk8s-worker2         Ready    <none>          16m   v1.31.0
      myk8s-worker3         Ready    <none>          16m   v1.31.0

      # Check node labels
      ❯ kubectl get nodes -o jsonpath="{.items[*].metadata.labels}" | grep mynode
      {"beta.kubernetes.io/arch":"arm64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"arm64","kubernetes.io/hostname":"myk8s-control-plane","kubernetes.io/os":"linux","mynode":"control-plane","node-role.kubernetes.io/control-plane":"","node.kubernetes.io/exclude-from-external-load-balancers":""} {"beta.kubernetes.io/arch":"arm64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"arm64","kubernetes.io/hostname":"myk8s-worker","kubernetes.io/os":"linux","mynode":"worker1"} {"beta.kubernetes.io/arch":"arm64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"arm64","kubernetes.io/hostname":"myk8s-worker2","kubernetes.io/os":"linux","mynode":"worker2"} {"beta.kubernetes.io/arch":"arm64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"arm64","kubernetes.io/hostname":"myk8s-worker3","kubernetes.io/os":"linux","mynode":"worker3"}

      ❯ kubectl get nodes -o jsonpath="{.items[*].metadata.labels}" | jq | grep mynode
        "mynode": "control-plane",
        "mynode": "worker1"
        "mynode": "worker2"
        "mynode": "worker3"

      # Check the container (node) IPs on the kind network: addresses are assigned from 172.18.0.2 upward, and the control-plane is not guaranteed to get 172.18.0.2
      ❯ docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'
      /myk8s-control-plane 172.18.0.2
      /myk8s-worker 172.18.0.5
      /myk8s-worker2 172.18.0.3
      /myk8s-worker3 172.18.0.4

      # Check the pod CIDR and the Service range: the CNI is kindnet
      ❯ kubectl get cm -n kube-system kubeadm-config -oyaml | grep -i subnet
            podSubnet: 10.10.0.0/16
            serviceSubnet: 10.200.1.0/24
      ❯ kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"
                                  "--service-cluster-ip-range=10.200.1.0/24",
                                  "--cluster-cidr=10.10.0.0/16",

      # Check feature gates: https://kubernetes.io/docs/reference/command-line-tools-reference/feature-gates/
      ❯ kubectl describe pod -n kube-system | grep feature-gates
            --feature-gates=InPlacePodVerticalScaling=true
            --feature-gates=InPlacePodVerticalScaling=true
            --feature-gates=InPlacePodVerticalScaling=true
      ❯ kubectl describe pod -n kube-system | grep runtime-config
            --runtime-config=

      # MultiCIDRServiceAllocator : https://kubernetes.io/docs/tasks/network/extend-service-ip-ranges/
      kubectl get servicecidr
      NAME         CIDRS           AGE
      kubernetes   10.200.1.0/24   2m13s

      ❯ kubectl get servicecidr    <-- "MultiCIDRServiceAllocator": false
      error: the server doesn't have a resource type "servicecidr"

      # With "MultiCIDRServiceAllocator": true, kind fails while creating the cluster
       ✗ Starting control-plane 🕹️
      Deleted nodes: ["myk8s-worker2" "myk8s-control-plane" "myk8s-worker" "myk8s-worker3"]
      ERROR: failed to create cluster: failed to init node with kubeadm: command "docker exec --privileged myk8s-control-plane kubeadm init --config=/kind/kubeadm.conf --skip-token-print --v=6" failed with error: exit status 1
      Command Output: I0927 23:54:18.245920 137 initconfiguration.go:261] loading configuration from "/kind/kubeadm.conf"
      W0927 23:54:18.246329 137 common.go:101] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta3" (kind: "ClusterConfiguration"). Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.
      W0927 23:54:18.246713 137 common.go:101] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta3" (kind: "InitConfiguration"). Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.
      W0927 23:54:18.246922 137 common.go:101] your configuration file uses a deprecated API spec: "kubeadm.k8s.io/v1beta3" (kind: "JoinConfiguration"). Please use 'kubeadm config migrate --old-config old.yaml --new-config new.yaml', which will write the new, similar spec using a newer API version.
      W0927 23:54:18.247068 137 initconfiguration.go:361] [config] WARNING: Ignored YAML document with GroupVersionKind kubeadm.k8s.io/v1beta3, Kind=JoinConfiguration

      # Check the dedicated subnet (podCIDR) assigned to each node
      ❯ kubectl get nodes -o jsonpath="{.items[*].spec.podCIDR}"
      10.10.0.0/24 10.10.1.0/24 10.10.3.0/24 10.10.2.0/24

      # Check the kube-proxy configmap
      ❯ kubectl describe cm -n kube-system kube-proxy
      Name:         kube-proxy
      Namespace:    kube-system
      Labels:       app=kube-proxy
      Annotations:  <none>

      Data
      ====
      config.conf:
      ----
      apiVersion: kubeproxy.config.k8s.io/v1alpha1
      bindAddress: 0.0.0.0
      bindAddressHardFail: false
      clientConnection:
        acceptContentTypes: ""
        burst: 0
        contentType: ""
        kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
        qps: 0
      clusterCIDR: 10.10.0.0/16
      configSyncPeriod: 0s
      conntrack:
        maxPerCore: 0
        min: null
        tcpBeLiberal: false
        tcpCloseWaitTimeout: null
        tcpEstablishedTimeout: null
        udpStreamTimeout: 0s
        udpTimeout: 0s
      detectLocal:
        bridgeInterface: ""
        interfaceNamePrefix: ""
      detectLocalMode: ""
      enableProfiling: false
      featureGates:
        InPlacePodVerticalScaling: true
        MultiCIDRServiceAllocator: false
      healthzBindAddress: ""
      hostnameOverride: ""
      iptables:
        localhostNodePorts: null
        masqueradeAll: false
        masqueradeBit: null
        minSyncPeriod: 1s
        syncPeriod: 0s
      ipvs:
        excludeCIDRs: null
        minSyncPeriod: 0s
        scheduler: ""
        strictARP: false
        syncPeriod: 0s
        tcpFinTimeout: 0s
        tcpTimeout: 0s
        udpTimeout: 0s
      kind: KubeProxyConfiguration
      logging:
        flushFrequency: 0
        options:
          json:
            infoBufferSize: "0"
          text:
            infoBufferSize: "0"
        verbosity: 0
      metricsBindAddress: ""
      mode: iptables
      nftables:
        masqueradeAll: false
        masqueradeBit: null
        minSyncPeriod: 0s
        syncPeriod: 0s
      nodePortAddresses: null
      oomScoreAdj: null
      portRange: ""
      showHiddenMetricsForVersion: ""
      winkernel:
        enableDSR: false
        forwardHealthCheckVip: false
        networkName: ""
        rootHnsEndpointName: ""
        sourceVip: ""
      kubeconfig.conf:
      ----
      apiVersion: v1
      kind: Config
      clusters:
      - cluster:
          certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
          server: https://myk8s-control-plane:6443
        name: default
      contexts:
      - context:
          cluster: default
          namespace: default
          user: default
        name: default
      current-context: default
      users:
      - name: default
        user:
          tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token

      BinaryData
      ====

      Events:  <none>

      # Check per-node network information: the CNI is kindnet
      ❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ls /opt/cni/bin/; echo; done
      >> node myk8s-control-plane <<
      host-local  loopback  portmap  ptp

      >> node myk8s-worker <<
      host-local  loopback  portmap  ptp

      >> node myk8s-worker2 <<
      host-local  loopback  portmap  ptp

      >> node myk8s-worker3 <<
      host-local  loopback  portmap  ptp

      ❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i cat /etc/cni/net.d/10-kindnet.conflist; echo; done
      >> node myk8s-control-plane <<
      {
        "cniVersion": "0.3.1",
        "name": "kindnet",
        "plugins": [
          {
            "type": "ptp",
            "ipMasq": false,
            "ipam": {
              "type": "host-local",
              "dataDir": "/run/cni-ipam-state",
              "routes": [
                { "dst": "0.0.0.0/0" }
              ],
              "ranges": [
                [ { "subnet": "10.10.0.0/24" } ]
              ]
            },
            "mtu": 65535
          },
          {
            "type": "portmap",
            "capabilities": {
              "portMappings": true
            }
          }
        ]
      }

>> node myk8s-worker <<

...

[ { "subnet": "10.10.1.0/24" } ]

...

>> node myk8s-worker2 <<

...

[ { "subnet": "10.10.3.0/24" } ]

...

>> node myk8s-worker3 <<

...

[ { "subnet": "10.10.2.0/24" } ]

...

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c route; echo; done

>> node myk8s-control-plane <<

default via 172.18.0.1 dev eth0

10.10.0.2 dev veth14667454 scope host

10.10.0.3 dev vetha871d686 scope host

10.10.0.4 dev vethaa8cc47e scope host

10.10.1.0/24 via 172.18.0.3 dev eth0

10.10.2.0/24 via 172.18.0.4 dev eth0

10.10.3.0/24 via 172.18.0.5 dev eth0

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.2

>> node myk8s-worker <<

default via 172.18.0.1 dev eth0

10.10.0.0/24 via 172.18.0.2 dev eth0

10.10.2.0/24 via 172.18.0.4 dev eth0

10.10.3.0/24 via 172.18.0.5 dev eth0

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3

>> node myk8s-worker2 <<

default via 172.18.0.1 dev eth0

10.10.0.0/24 via 172.18.0.2 dev eth0

10.10.1.0/24 via 172.18.0.3 dev eth0

10.10.2.0/24 via 172.18.0.4 dev eth0

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.5

>> node myk8s-worker3 <<

default via 172.18.0.1 dev eth0

10.10.0.0/24 via 172.18.0.2 dev eth0

10.10.1.0/24 via 172.18.0.3 dev eth0

10.10.3.0/24 via 172.18.0.5 dev eth0

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.4

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c addr; echo; done

>> node myk8s-control-plane <<

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: gre0@NONE: mtu 1476 qdisc noop state DOWN group default qlen 1000

link/gre 0.0.0.0 brd 0.0.0.0

4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000

link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

5: erspan0@NONE: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN group default qlen 1000

link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

6: ip_vti0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

7: ip6_vti0@NONE: mtu 1428 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd :: permaddr 22fb:7ab7:c942::

8: sit0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/sit 0.0.0.0 brd 0.0.0.0

9: ip6tnl0@NONE: mtu 1452 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd :: permaddr fae8:88aa:ef2::

10: ip6gre0@NONE: mtu 1448 qdisc noop state DOWN group default qlen 1000

link/gre6 :: brd :: permaddr f643:226:d004::

11: veth14667454@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether 8e:ea:9e:2d:04:aa brd ff:ff:ff:ff:ff:ff link-netns cni-0068db9f-b35b-926a-4532-1fde00fa8f99

inet 10.10.0.1/32 scope global veth14667454

valid_lft forever preferred_lft forever

inet6 fe80::8cea:9eff:fe2d:4aa/64 scope link

valid_lft forever preferred_lft forever

12: vetha871d686@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether ce:cc:b6:41:34:a1 brd ff:ff:ff:ff:ff:ff link-netns cni-99ca450c-243c-9d90-1299-772eb7a74b3d

inet 10.10.0.1/32 scope global vetha871d686

valid_lft forever preferred_lft forever

inet6 fe80::cccc:b6ff:fe41:34a1/64 scope link

valid_lft forever preferred_lft forever

13: vethaa8cc47e@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether f2:ef:c8:5f:31:51 brd ff:ff:ff:ff:ff:ff link-netns cni-308fbfc8-6bd3-a43b-3d3d-b7b80ef5d640

inet 10.10.0.1/32 scope global vethaa8cc47e

valid_lft forever preferred_lft forever

inet6 fe80::f0ef:c8ff:fe5f:3151/64 scope link

valid_lft forever preferred_lft forever

34: eth0@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::2/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:2/64 scope link

valid_lft forever preferred_lft forever

>> node myk8s-worker <<

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: gre0@NONE: mtu 1476 qdisc noop state DOWN group default qlen 1000

link/gre 0.0.0.0 brd 0.0.0.0

4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000

link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

5: erspan0@NONE: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN group default qlen 1000

link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff

6: ip_vti0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

7: ip6_vti0@NONE: mtu 1428 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd :: permaddr 223e:9930:122c::

8: sit0@NONE: mtu 1480 qdisc noop state DOWN group default qlen 1000

link/sit 0.0.0.0 brd 0.0.0.0

9: ip6tnl0@NONE: mtu 1452 qdisc noop state DOWN group default qlen 1000

link/tunnel6 :: brd :: permaddr 7eca:c746:d841::

10: ip6gre0@NONE: mtu 1448 qdisc noop state DOWN group default qlen 1000

link/gre6 :: brd :: permaddr fe13:f720:253d::

36: eth0@if37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::3/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:3/64 scope link

valid_lft forever preferred_lft forever

>> node myk8s-worker2 <<

...

40: eth0@if41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.5/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::5/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:5/64 scope link

valid_lft forever preferred_lft forever

>> node myk8s-worker3 <<

...

38: eth0@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default

link/ether 02:42:ac:12:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fc00:f853:ccd:e793::4/64 scope global nodad

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe12:4/64 scope link

valid_lft forever preferred_lft forever

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c -4 addr show dev eth0; echo; done

>> node myk8s-control-plane <<

34: eth0@if35: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default link-netnsid 0

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

>> node myk8s-worker <<

36: eth0@if37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default link-netnsid 0

inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

>> node myk8s-worker2 <<

40: eth0@if41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default link-netnsid 0

inet 172.18.0.5/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

>> node myk8s-worker3 <<

38: eth0@if39: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default link-netnsid 0

inet 172.18.0.4/16 brd 172.18.255.255 scope global eth0

valid_lft forever preferred_lft forever

# Check iptables information

❯ for i in filter nat mangle raw ; do echo ">> IPTables Type : $i <<"; docker exec -t myk8s-control-plane iptables -t $i -S ; echo; done

>> IPTables Type : filter <<

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N KUBE-EXTERNAL-SERVICES

-N KUBE-FIREWALL

-N KUBE-FORWARD

-N KUBE-KUBELET-CANARY

-N KUBE-NODEPORTS

-N KUBE-PROXY-CANARY

-N KUBE-PROXY-FIREWALL

-N KUBE-SERVICES

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

>> IPTables Type : nat <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N DOCKER_OUTPUT

-N DOCKER_POSTROUTING

-N KIND-MASQ-AGENT

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-6GODNNVFRWQ66GUT

-N KUBE-SEP-F2ZDTMFKATSD3GWE

-N KUBE-SEP-OEOVYBFUDTUCKBZR

-N KUBE-SEP-P4UV4WHAETXYCYLO

-N KUBE-SEP-V2PECCYPB6X2GSCW

-N KUBE-SEP-XWEOB3JN6VI62DQQ

-N KUBE-SEP-ZEA5VGCBA2QNA7AK

-N KUBE-SERVICES

-N KUBE-SVC-ERIFXISQEP7F7OF4

-N KUBE-SVC-JD5MR3NA4I4DYORP

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-N KUBE-SVC-TCOU7JCQXEZGVUNU

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -d 192.168.65.254/32 -j DOCKER_OUTPUT

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -d 192.168.65.254/32 -j DOCKER_OUTPUT

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -d 192.168.65.254/32 -j DOCKER_POSTROUTING

-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT

-A DOCKER_OUTPUT -d 192.168.65.254/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:35503

-A DOCKER_OUTPUT -d 192.168.65.254/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:52746

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 35503 -j SNAT --to-source 192.168.65.254:53

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 52746 -j SNAT --to-source 192.168.65.254:53

-A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN

-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-6GODNNVFRWQ66GUT -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-6GODNNVFRWQ66GUT -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.3:9153

-A KUBE-SEP-F2ZDTMFKATSD3GWE -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-F2ZDTMFKATSD3GWE -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.4:53

-A KUBE-SEP-OEOVYBFUDTUCKBZR -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-OEOVYBFUDTUCKBZR -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.4:53

-A KUBE-SEP-P4UV4WHAETXYCYLO -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-P4UV4WHAETXYCYLO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.4:9153

-A KUBE-SEP-V2PECCYPB6X2GSCW -s 172.18.0.2/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-V2PECCYPB6X2GSCW -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.2:6443

-A KUBE-SEP-XWEOB3JN6VI62DQQ -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-XWEOB3JN6VI62DQQ -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-ZEA5VGCBA2QNA7AK

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.4:53" -j KUBE-SEP-F2ZDTMFKATSD3GWE

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.3:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-6GODNNVFRWQ66GUT

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.4:9153" -j KUBE-SEP-P4UV4WHAETXYCYLO

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.2:6443" -j KUBE-SEP-V2PECCYPB6X2GSCW

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XWEOB3JN6VI62DQQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.4:53" -j KUBE-SEP-OEOVYBFUDTUCKBZR

>> IPTables Type : mangle <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N KUBE-IPTABLES-HINT

-N KUBE-KUBELET-CANARY

-N KUBE-PROXY-CANARY

>> IPTables Type : raw <<

-P PREROUTING ACCEPT

-P OUTPUT ACCEPT

❯ for i in filter nat mangle raw ; do echo ">> IPTables Type : $i <<"; docker exec -t myk8s-worker iptables -t $i -S ; echo; done

>> IPTables Type : filter <<

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-N KUBE-EXTERNAL-SERVICES

-N KUBE-FIREWALL

-N KUBE-FORWARD

-N KUBE-KUBELET-CANARY

-N KUBE-NODEPORTS

-N KUBE-PROXY-CANARY

-N KUBE-PROXY-FIREWALL

-N KUBE-SERVICES

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS

-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL

-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -j KUBE-FIREWALL

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT

-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

>> IPTables Type : nat <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N DOCKER_OUTPUT

-N DOCKER_POSTROUTING

-N KIND-MASQ-AGENT

-N KUBE-KUBELET-CANARY

-N KUBE-MARK-MASQ

-N KUBE-NODEPORTS

-N KUBE-POSTROUTING

-N KUBE-PROXY-CANARY

-N KUBE-SEP-6GODNNVFRWQ66GUT

-N KUBE-SEP-F2ZDTMFKATSD3GWE

-N KUBE-SEP-OEOVYBFUDTUCKBZR

-N KUBE-SEP-P4UV4WHAETXYCYLO

-N KUBE-SEP-V2PECCYPB6X2GSCW

-N KUBE-SEP-XWEOB3JN6VI62DQQ

-N KUBE-SEP-ZEA5VGCBA2QNA7AK

-N KUBE-SERVICES

-N KUBE-SVC-ERIFXISQEP7F7OF4

-N KUBE-SVC-JD5MR3NA4I4DYORP

-N KUBE-SVC-NPX46M4PTMTKRN6Y

-N KUBE-SVC-TCOU7JCQXEZGVUNU

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A PREROUTING -d 192.168.65.254/32 -j DOCKER_OUTPUT

-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-A OUTPUT -d 192.168.65.254/32 -j DOCKER_OUTPUT

-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING

-A POSTROUTING -d 192.168.65.254/32 -j DOCKER_POSTROUTING

-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT

-A DOCKER_OUTPUT -d 192.168.65.254/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:41039

-A DOCKER_OUTPUT -d 192.168.65.254/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:60616

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 41039 -j SNAT --to-source 192.168.65.254:53

-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 60616 -j SNAT --to-source 192.168.65.254:53

-A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN

-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE

-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

-A KUBE-SEP-6GODNNVFRWQ66GUT -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-6GODNNVFRWQ66GUT -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.3:9153

-A KUBE-SEP-F2ZDTMFKATSD3GWE -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-F2ZDTMFKATSD3GWE -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.4:53

-A KUBE-SEP-OEOVYBFUDTUCKBZR -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-OEOVYBFUDTUCKBZR -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.4:53

-A KUBE-SEP-P4UV4WHAETXYCYLO -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ

-A KUBE-SEP-P4UV4WHAETXYCYLO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.4:9153

-A KUBE-SEP-V2PECCYPB6X2GSCW -s 172.18.0.2/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ

-A KUBE-SEP-V2PECCYPB6X2GSCW -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.2:6443

-A KUBE-SEP-XWEOB3JN6VI62DQQ -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ

-A KUBE-SEP-XWEOB3JN6VI62DQQ -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -s 10.10.0.3/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ

-A KUBE-SEP-ZEA5VGCBA2QNA7AK -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.3:53

-A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4

-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP

-A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU

-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS

-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-ZEA5VGCBA2QNA7AK

-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.4:53" -j KUBE-SEP-F2ZDTMFKATSD3GWE

-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.3:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-6GODNNVFRWQ66GUT

-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.4:9153" -j KUBE-SEP-P4UV4WHAETXYCYLO

-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ

-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.2:6443" -j KUBE-SEP-V2PECCYPB6X2GSCW

-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.3:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XWEOB3JN6VI62DQQ

-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.4:53" -j KUBE-SEP-OEOVYBFUDTUCKBZR

>> IPTables Type : mangle <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P FORWARD ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N KUBE-IPTABLES-HINT

-N KUBE-KUBELET-CANARY

-N KUBE-PROXY-CANARY

>> IPTables Type : raw <<

-P PREROUTING ACCEPT

-P OUTPUT ACCEPT
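The KUBE-MARK-MASQ and KUBE-POSTROUTING rules in the nat table above cooperate through a single packet-mark bit, 0x4000. The following is a minimal Python sketch of that bit logic, assuming the documented `--set-xmark value/mask` semantics (clear the mask bits, then XOR the value). The function names are illustrative and not part of kube-proxy.

```python
MASQ_BIT = 0x4000  # mark bit used by the rules in the dump above


def set_xmark(mark, value, mask):
    """Model of 'MARK --set-xmark value/mask': clear the mask bits, then XOR value."""
    return ((mark & ~mask) ^ value) & 0xFFFFFFFF


def kube_mark_masq(mark):
    # -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
    return set_xmark(mark, 0x4000, 0x4000)


def kube_postrouting(mark):
    """Return (new_mark, masquerade) following the three KUBE-POSTROUTING rules."""
    # -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
    if (mark & MASQ_BIT) != MASQ_BIT:
        return mark, False
    # -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0 (XOR flips the bit back off)
    mark = set_xmark(mark, 0x4000, 0x0)
    # -A KUBE-POSTROUTING ... -j MASQUERADE --random-fully
    return mark, True


print(kube_postrouting(0x0))                  # unmarked packet: left alone
print(kube_postrouting(kube_mark_masq(0x0)))  # marked packet: bit cleared, SNAT applied
```

Only packets that passed through KUBE-MARK-MASQ reach the MASQUERADE rule; everything else returns at the first rule.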

# Open a bash shell on each node

docker exec -it myk8s-control-plane bash

docker exec -it myk8s-worker bash

docker exec -it myk8s-worker2 bash

docker exec -it myk8s-worker3 bash

----------------------------------------

exit

----------------------------------------

# Installing kind creates a Docker bridge network named kind: 172.18.0.0/16 range

❯ docker network ls

NETWORK ID NAME DRIVER SCOPE

96debcb61538 bridge bridge local

88153fefe41f host host local

69ae0c31875a kind bridge local

6e2fe0aabee3 none null local

❯ docker inspect kind

[

{

"Name": "kind",

"Id": "69ae0c31875a22cdc2fc428961d44314d099d13a213b8b2faf2d2fe93dc15656",

"Created": "2024-09-22T13:55:28.516763084Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

},

{

"Subnet": "fc00:f853:ccd:e793::/64",

"Gateway": "fc00:f853:ccd:e793::1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"39b737c50f28c273ef540aad68b47be96861871f3559598f931f862ec3330f4c": {

"Name": "myk8s-worker3",

"EndpointID": "cb5831b437820d6e2dc4301a6224765b3885677a445bc1c3de350d92ef839d3b",

"MacAddress": "02:42:ac:12:00:04",

"IPv4Address": "172.18.0.4/16",

"IPv6Address": "fc00:f853:ccd:e793::4/64"

},

"39cf294cfa42426fe9d3bf5c796869edb9b9dc96959d78075225649c1a8b3ec7": {

"Name": "myk8s-worker",

"EndpointID": "75dab2e6384560cd87963e1df23627bd6b25e340cfee6e20c73ebf28898ad86b",

"MacAddress": "02:42:ac:12:00:03",

"IPv4Address": "172.18.0.3/16",

"IPv6Address": "fc00:f853:ccd:e793::3/64"

},

"577e0a7629bede2170017c38d0a05314ffb1f0cede7b0aa704f5c76f728ed87a": {

"Name": "myk8s-control-plane",

"EndpointID": "e571864dea4e8dce32a0b21add65ae9bf1c497b06ad1b4160fbc19c828b45212",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": "fc00:f853:ccd:e793::2/64"

},

"8a63d3a55ab5bc132e740c194fb3c56e987a2661caabdb5e8dda8dc3de5d39a8": {

"Name": "myk8s-worker2",

"EndpointID": "1d58243c51b66c5756e5ef19915c30f7aff60ade3c8caf945f27d3b26b2683d0",

"MacAddress": "02:42:ac:12:00:05",

"IPv4Address": "172.18.0.5/16",

"IPv6Address": "fc00:f853:ccd:e793::5/64"

}

},

"Options": {

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.driver.mtu": "65535"

},

"Labels": {}

}

]

# Run an ARP scan

❯ docker exec -it myk8s-control-plane arp-scan --interface=eth0 --localnet

Interface: eth0, type: EN10MB, MAC: 02:42:ac:12:00:02, IPv4: 172.18.0.2

Starting arp-scan 1.10.0 with 65536 hosts (https://github.com/royhills/arp-scan)

172.18.0.1 02:42:bc:93:af:d1 (Unknown: locally administered)

172.18.0.3 02:42:ac:12:00:03 (Unknown: locally administered)

172.18.0.4 02:42:ac:12:00:04 (Unknown: locally administered)

172.18.0.5 02:42:ac:12:00:05 (Unknown: locally administered)

# Start the mypc container: attach it to the kind Docker bridge and assign the container IP explicitly

❯ docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity

7e2b0374a97f677f3fb53fbd39f84126b6a1293908a722257f82fb2db3921a21

❯ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7e2b0374a97f nicolaka/netshoot "sleep infinity" 22 seconds ago Up 21 seconds mypc

8a63d3a55ab5 kindest/node:v1.31.0 "/usr/local/bin/entr…" 49 minutes ago Up 49 minutes myk8s-worker2

39cf294cfa42 kindest/node:v1.31.0 "/usr/local/bin/entr…" 49 minutes ago Up 49 minutes myk8s-worker

39b737c50f28 kindest/node:v1.31.0 "/usr/local/bin/entr…" 49 minutes ago Up 49 minutes myk8s-worker3

577e0a7629be kindest/node:v1.31.0 "/usr/local/bin/entr…" 49 minutes ago Up 49 minutes 0.0.0.0:30000-30002->30000-30002/tcp, 127.0.0.1:60889->6443/tcp myk8s-control-plane

## If your kind network uses a different range, the explicit IP above may fail; in that case start mypc without specifying an IP

## docker run -d --rm --name mypc --network kind nicolaka/netshoot sleep infinity

# Check connectivity

❯ docker exec -t mypc ping -c 1 172.18.0.1

PING 172.18.0.1 (172.18.0.1) 56(84) bytes of data.

64 bytes from 172.18.0.1: icmp_seq=1 ttl=64 time=0.158 ms

--- 172.18.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.158/0.158/0.158/0.000 ms

❯ for i in {1..5} ; do docker exec -t mypc ping -c 1 172.18.0.$i; done

docker exec -it mypc zsh

PING 172.18.0.1 (172.18.0.1) 56(84) bytes of data.

64 bytes from 172.18.0.1: icmp_seq=1 ttl=64 time=0.095 ms

--- 172.18.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.095/0.095/0.095/0.000 ms

PING 172.18.0.2 (172.18.0.2) 56(84) bytes of data.

64 bytes from 172.18.0.2: icmp_seq=1 ttl=64 time=0.117 ms

--- 172.18.0.2 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.117/0.117/0.117/0.000 ms

PING 172.18.0.3 (172.18.0.3) 56(84) bytes of data.

64 bytes from 172.18.0.3: icmp_seq=1 ttl=64 time=0.124 ms

--- 172.18.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.124/0.124/0.124/0.000 ms

PING 172.18.0.4 (172.18.0.4) 56(84) bytes of data.

64 bytes from 172.18.0.4: icmp_seq=1 ttl=64 time=0.076 ms

--- 172.18.0.4 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.076/0.076/0.076/0.000 ms

PING 172.18.0.5 (172.18.0.5) 56(84) bytes of data.

64 bytes from 172.18.0.5: icmp_seq=1 ttl=64 time=0.067 ms

--- 172.18.0.5 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.067/0.067/0.067/0.000 ms

dP dP dP

88 88 88

88d888b. .d8888b. d8888P .d8888b. 88d888b. .d8888b. .d8888b. d8888P

88' `88 88ooood8 88 Y8ooooo. 88' `88 88' `88 88' `88 88

88 88 88. ... 88 88 88 88 88. .88 88. .88 88

dP dP `88888P' dP `88888P' dP dP `88888P' `88888P' dP

Welcome to Netshoot! (github.com/nicolaka/netshoot)

Version: 0.13

7e2b0374a97f >

-------------

ifconfig

ping -c 1 172.18.0.2

exit

--------------

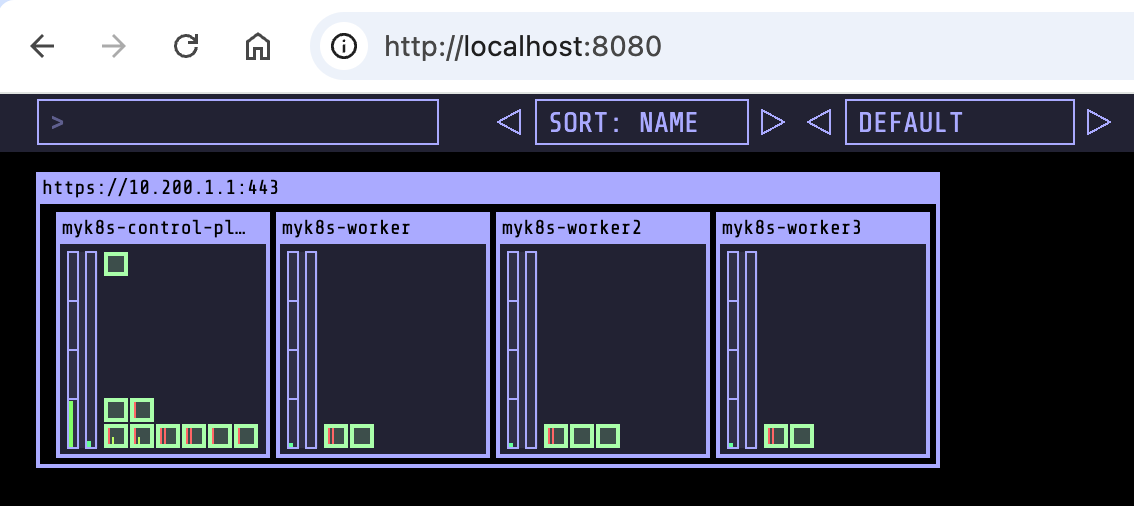

Installing kube-ops-view

# Install kube-ops-view with Helm

❯ helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30000 --set env.TZ="Asia/Seoul" --namespace kube-system

"geek-cookbook" already exists with the same configuration, skipping

NAME: kube-ops-view

LAST DEPLOYED: Sat Sep 28 09:59:33 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Get the application URL by running these commands:

export NODE_PORT=$(kubectl get --namespace kube-system -o jsonpath="{.spec.ports[0].nodePort}" services kube-ops-view)

export NODE_IP=$(kubectl get nodes --namespace kube-system -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT

http://172.18.0.2:30000

# Connect from the local PC with port-forwarding

❯ kubectl port-forward -n kube-system svc/kube-ops-view 8080:8080

Forwarding from 127.0.0.1:8080 -> 8080

Forwarding from [::1]:8080 -> 8080

Handling connection for 8080

# Place the Pod on myk8s-control-plane: use nodeSelector

kubectl -n kube-system edit deploy kube-ops-view

---
spec:
  ...
  template:
    ...
    spec:
      nodeSelector:
        mynode: control-plane
      tolerations:
      - key: "node-role.kubernetes.io/control-plane"
        operator: "Equal"
        effect: "NoSchedule"
---

# Verify the installation

kubectl -n kube-system get pod -o wide -l app.kubernetes.io/instance=kube-ops-view

# Check the kube-ops-view URL (1.5x and 2x scale): macOS users

echo -e "KUBE-OPS-VIEW URL = http://localhost:30000/#scale=1.5"

echo -e "KUBE-OPS-VIEW URL = http://localhost:30000/#scale=2"

# Check the kube-ops-view URL (1.5x and 2x scale): Windows users

echo -e "KUBE-OPS-VIEW URL = http://192.168.50.10:30000/#scale=1.5"

echo -e "KUBE-OPS-VIEW URL = http://192.168.50.10:30000/#scale=2"

Exposing the inside of the cluster to the outside

Creating a Pod

- Reachable only from inside the K8s cluster

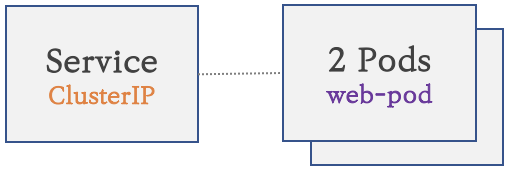

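Connecting through a Service (ClusterIP type)

- Reachable only from inside the K8s cluster

- Clients connect to the Service, which makes it easy to reach multiple Pods of the same application

- Provides a fixed way to connect (call): it creates what is commonly called a 'fixed virtual IP' and a 'domain name'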

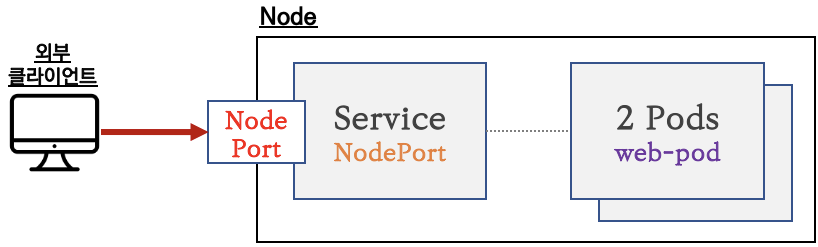

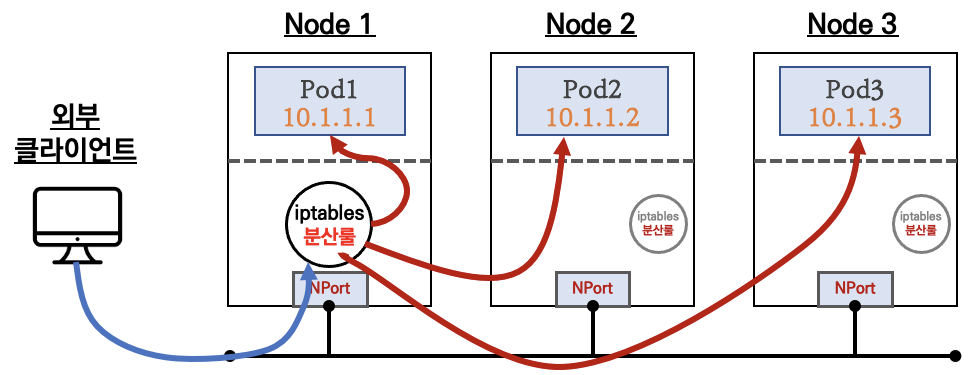

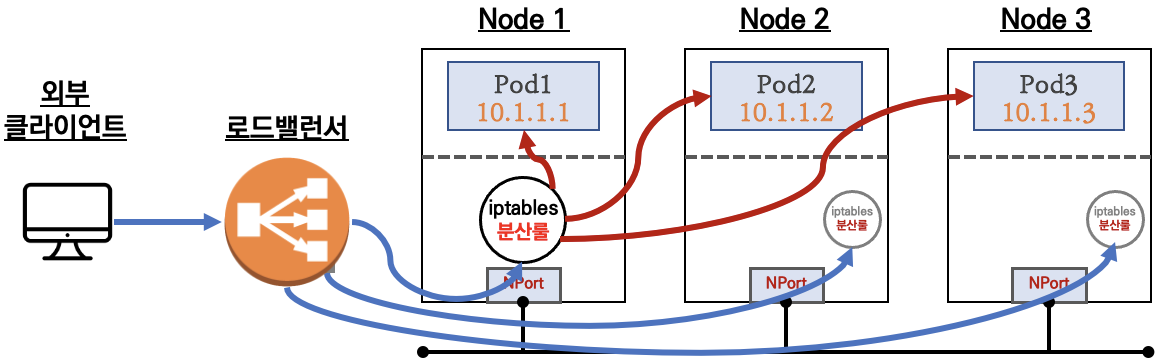

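Connecting through a Service (NodePort type)

- External clients reach Pods inside the cluster through the Service

- Includes the behavior of a ClusterIP Service

- There is also a Service of LoadBalancer type, which makes up for some shortcomings of the NodePort type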

Service types

ClusterIP type

NodePort type

LoadBalancer type

Abbreviations

- S.IP : Source IP

- D.IP : Destination IP

- S.Port : Source port

- D.Port : Destination port

- NAT : Network Address Translation

- SNAT : NAT applied to the source; typically rewrites the source IP

- DNAT : NAT applied to the destination; typically rewrites the destination IP and destination port

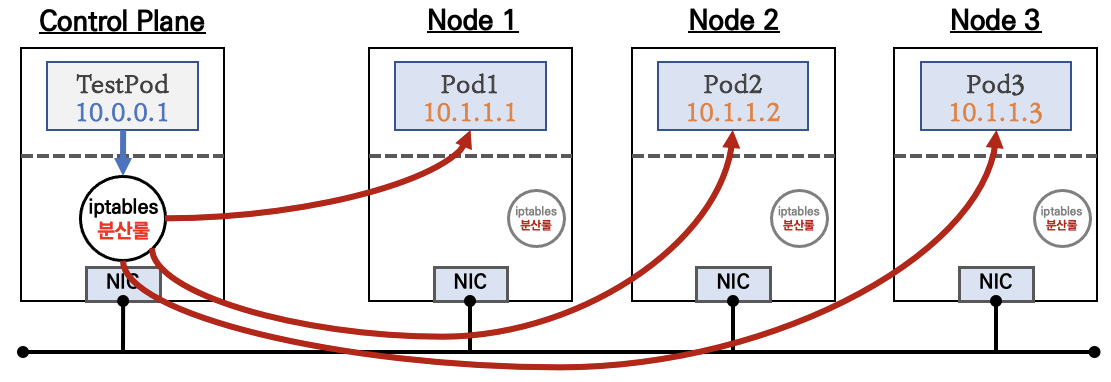

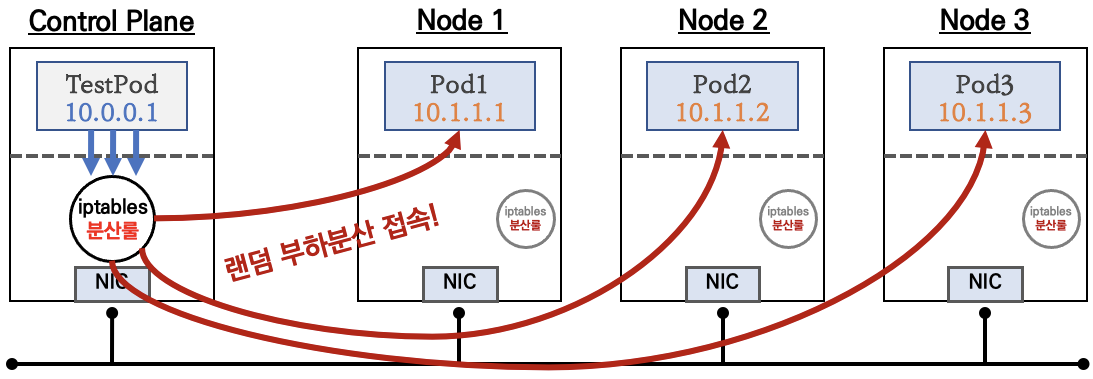

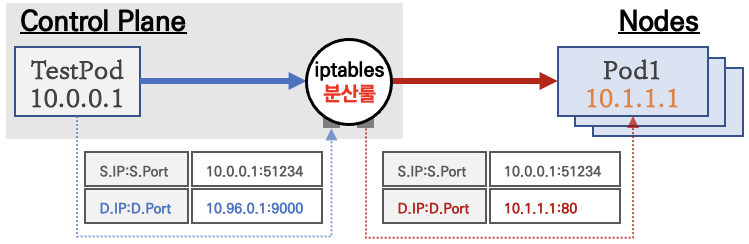

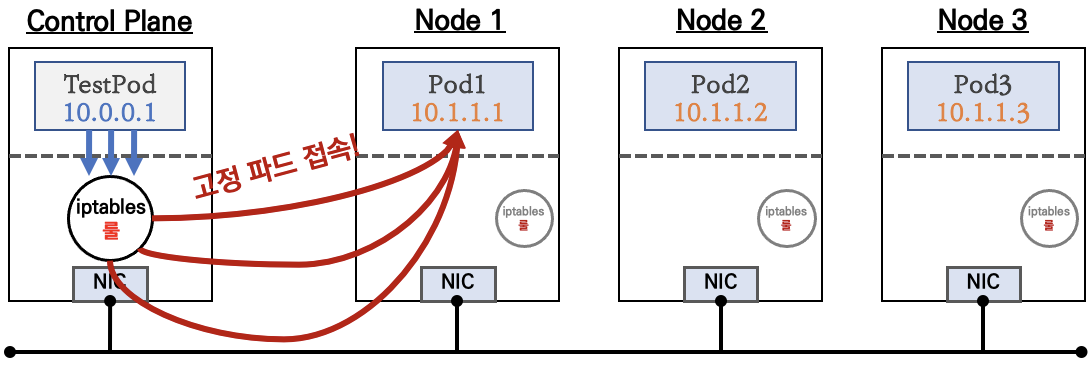

2. ClusterIP

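- When a ClusterIP Service is created, kube-proxy configures iptables rules on every node. For example, when the TestPod deployed on the master (control-plane) node sends repeated requests to the ClusterIP Service, the iptables rules on that node load-balance the connections across Pod 1, Pod 2, and Pod 3 behind the Service.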

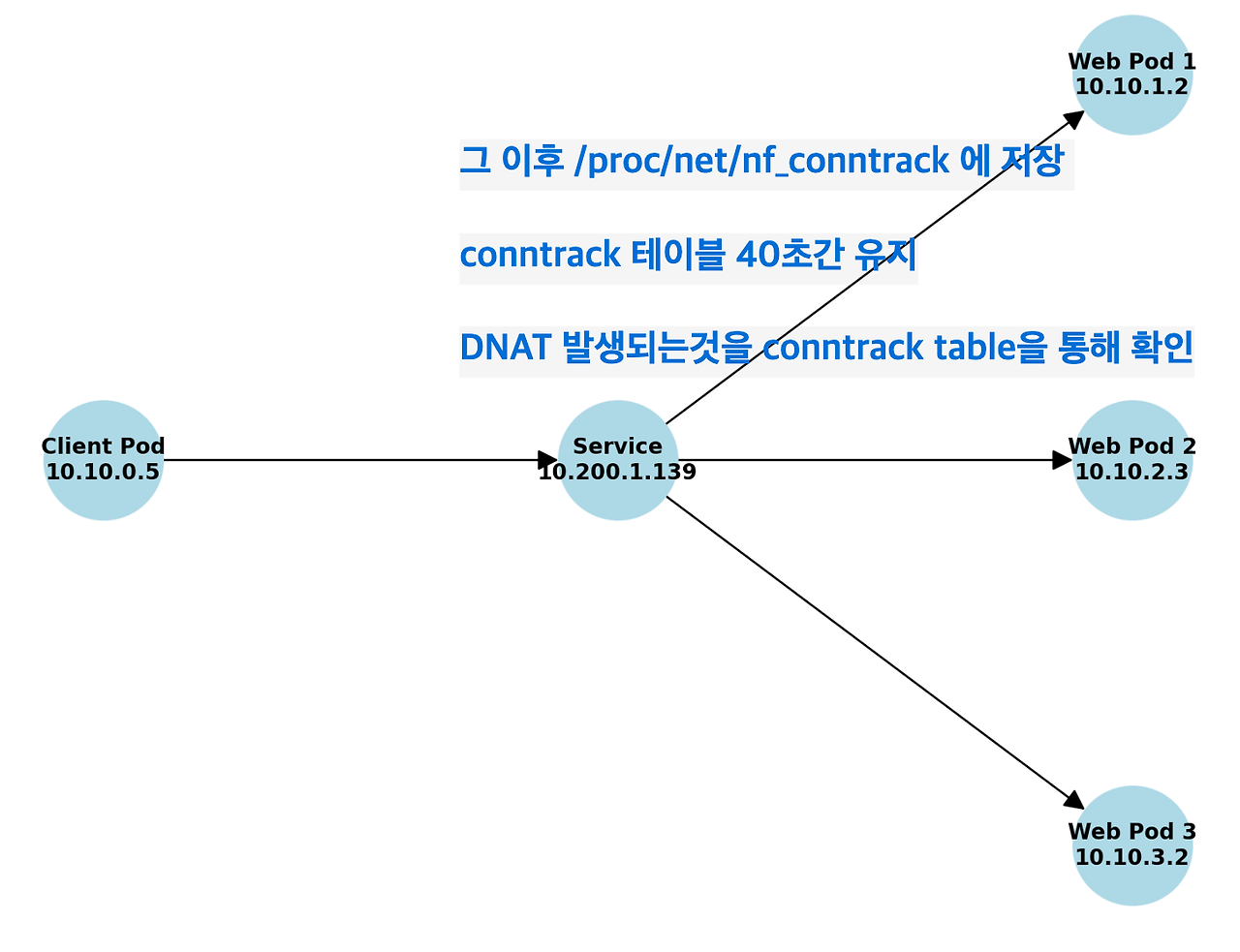

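Communication flow

- When the client (TestPod) connects to the 'CLUSTER-IP', the iptables rules on its own node (random distribution) DNAT the packet so that it reaches a destination (backend) Pod

- The iptables load-balancing rules are configured automatically on every node

- 10.96.0.1 is the ClusterIP address in this example (the lab below uses the 10.200.1.0/24 Service range)

- The 'CLUSTER-IP' is reachable only from inside the cluster ⇒ the Service can also be reached by its DNS (domain) name!

- When a Service (ClusterIP type) is created, the rules are produced along the path apiserver → kube-proxy → iptables: kube-proxy watches the API server directly for Service and endpoint changes, so kubelet is not on this path

- Because the iptables rules are set on every node (master included), a connection from a Pod is distributed by the iptables rules on the node where that Pod runs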

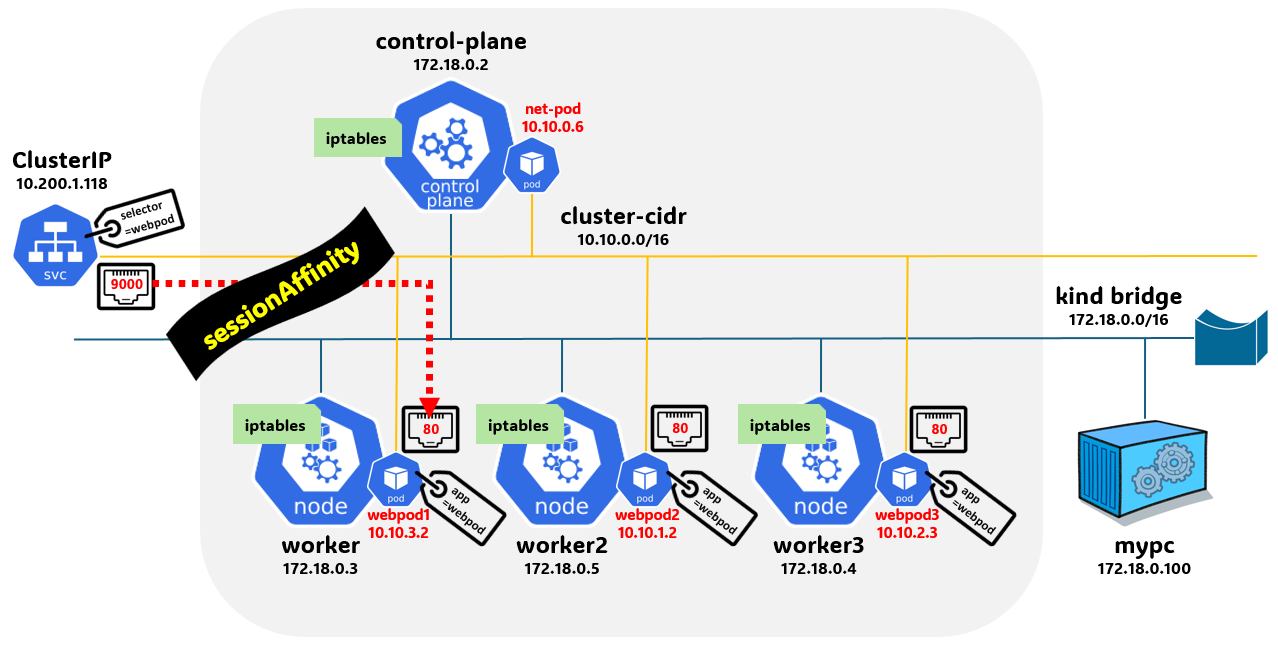

Lab setup

- Create the destination (backend) Pods: 3pod.yaml

cat <<EOT > 3pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: webpod1
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod2
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker2
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod3
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker3
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
EOT

❯ k apply -f 3pod.yaml

pod/webpod1 created

pod/webpod2 created

pod/webpod3 created

❯ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

webpod1 1/1 Running 0 12s 10.10.1.2 myk8s-worker <none> <none>

webpod2 1/1 Running 0 12s 10.10.3.2 myk8s-worker2 <none> <none>

webpod3 1/1 Running 0 12s 10.10.2.2 myk8s-worker3 <none> <none>

- Create the client (TestPod): netpod.yaml

cat <<EOT > netpod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: net-pod
spec:
  nodeName: myk8s-control-plane
  containers:
  - name: netshoot-pod
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOT

❯ k apply -f netpod.yaml

pod/net-pod created

# Because nodeName is set, net-pod runs on the control plane and the webpods are spread across worker, worker2, and worker3

❯ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

net-pod 1/1 Running 0 35s 10.10.0.6 myk8s-control-plane <none> <none>

webpod1 1/1 Running 0 2m32s 10.10.1.2 myk8s-worker <none> <none>

webpod2 1/1 Running 0 2m32s 10.10.3.2 myk8s-worker2 <none> <none>

webpod3 1/1 Running 0 2m32s 10.10.2.2 myk8s-worker3 <none> <none>

- Create the Service (ClusterIP): svc-clusterip.yaml ← make sure you understand what spec.ports.port and spec.ports.targetPort mean!

cat <<EOT > svc-clusterip.yaml
apiVersion: v1
kind: Service
metadata:
  name: svc-clusterip
spec:
  ports:
    - name: svc-webport
      port: 9000        # port: the port clients use when connecting to the Service IP
      targetPort: 80    # targetPort: the port on the destination Pod that the Service forwards to
  selector:
    app: webpod         # selector: Pods labeled app=webpod are attached to this Service
  type: ClusterIP       # Service type
EOT

❯ kubectl get svc,ep -n default

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.200.1.1 <none> 443/TCP 10h

service/svc-clusterip ClusterIP 10.200.1.206 <none> 9000/TCP 16s

NAME ENDPOINTS AGE

endpoints/kubernetes 172.18.0.4:6443 10h

endpoints/svc-clusterip 10.10.1.2:80,10.10.2.2:80,10.10.3.2:80 16s

❯ kubectl get endpointslices -l kubernetes.io/service-name=svc-clusterip

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

svc-clusterip-cxbwq IPv4 80 10.10.1.2,10.10.3.2,10.10.2.2 12m

❯ kubectl describe svc svc-clusterip

Name: svc-clusterip

Namespace: default

Labels: <none>

Annotations: <none>

Selector: app=webpod

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.200.1.206

IPs: 10.200.1.206

Port: svc-webport 9000/TCP

TargetPort: 80/TCP

Endpoints: 10.10.1.2:80,10.10.3.2:80,10.10.2.2:80

Session Affinity: None

Internal Traffic Policy: Cluster

Events: <none>
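To make the selector-to-endpoints relationship concrete, here is a small Python sketch that rebuilds the Endpoints list from the Pod labels and IPs shown in this lab. It is a simplified model only: `select_endpoints` is a hypothetical helper, and it ignores readiness, namespaces, and the special case of a Service without a selector.

```python
# Pod names, labels, and IPs copied from the kubectl output in this lab
pods = [
    {"name": "webpod1", "labels": {"app": "webpod"}, "ip": "10.10.1.2"},
    {"name": "webpod2", "labels": {"app": "webpod"}, "ip": "10.10.3.2"},
    {"name": "webpod3", "labels": {"app": "webpod"}, "ip": "10.10.2.2"},
    {"name": "net-pod", "labels": {}, "ip": "10.10.0.6"},
]


def select_endpoints(pods, selector, target_port):
    """Return 'ip:targetPort' for every Pod whose labels contain all selector pairs."""
    return [
        f"{p['ip']}:{target_port}"
        for p in pods
        if all(p["labels"].get(k) == v for k, v in selector.items())
    ]


endpoints = select_endpoints(pods, {"app": "webpod"}, 80)
print(endpoints)
```

net-pod carries no `app: webpod` label, so it never becomes a backend of svc-clusterip, and the endpoints use targetPort 80 rather than the Service port 9000.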

Test

- Create and verify

# Monitoring

watch -d 'kubectl get pod -owide ;echo; kubectl get svc,ep svc-clusterip'

# Create

kubectl apply -f 3pod.yaml,netpod.yaml,svc-clusterip.yaml

# Check the network ranges used by Pods and Services

kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

# Verify

kubectl get pod -owide

kubectl get svc svc-clusterip

# Make sure you understand what spec.ports.port and spec.ports.targetPort mean!

kubectl describe svc svc-clusterip

# Endpoints are created automatically when the Service is created; they can also be configured manually

kubectl get endpoints svc-clusterip

kubectl get endpointslices -l kubernetes.io/service-name=svc-clusterip

- Check access through the Service (ClusterIP)

- From the client (TestPod) shell, test connectivity and confirm load balancing through the Service (ClusterIP)

# Print the IPs of the webpod Pods

❯ kubectl get pod -l app=webpod -o jsonpath="{.items[*].status.podIP}"

10.10.1.2 10.10.3.2 10.10.2.2

# Store the webpod Pod IPs in variables

❯ WEBPOD1=$(kubectl get pod webpod1 -o jsonpath={.status.podIP})

WEBPOD2=$(kubectl get pod webpod2 -o jsonpath={.status.podIP})

WEBPOD3=$(kubectl get pod webpod3 -o jsonpath={.status.podIP})

echo $WEBPOD1 $WEBPOD2 $WEBPOD3

10.10.1.2 10.10.3.2 10.10.2.2

# From net-pod, curl each webpod Pod IP directly

❯ for pod in $WEBPOD1 $WEBPOD2 $WEBPOD3; do kubectl exec -it net-pod -- curl -s $pod; done

Hostname: webpod1

IP: 127.0.0.1

IP: ::1

IP: 10.10.1.2

IP: fe80::8c6f:7dff:febe:38d2

RemoteAddr: 10.10.0.6:57610

GET / HTTP/1.1

Host: 10.10.1.2

User-Agent: curl/8.7.1

Accept: */*

Hostname: webpod2

IP: 127.0.0.1

IP: ::1

IP: 10.10.3.2

IP: fe80::785a:afff:febe:a4e6

RemoteAddr: 10.10.0.6:57708

GET / HTTP/1.1

Host: 10.10.3.2

User-Agent: curl/8.7.1

Accept: */*

Hostname: webpod3

IP: 127.0.0.1

IP: ::1

IP: 10.10.2.2

IP: fe80::e4c9:9fff:fede:e267

RemoteAddr: 10.10.0.6:48214

GET / HTTP/1.1

Host: 10.10.2.2

User-Agent: curl/8.7.1

Accept: */*

❯ for pod in $WEBPOD1 $WEBPOD2 $WEBPOD3; do kubectl exec -it net-pod -- curl -s $pod | grep Hostname; done

Hostname: webpod1

Hostname: webpod2

Hostname: webpod3

❯ for pod in $WEBPOD1 $WEBPOD2 $WEBPOD3; do kubectl exec -it net-pod -- curl -s $pod | grep Host; done

Hostname: webpod1

Host: 10.10.1.2

Hostname: webpod2

Host: 10.10.3.2

Hostname: webpod3

Host: 10.10.2.2

❯ for pod in $WEBPOD1 $WEBPOD2 $WEBPOD3; do kubectl exec -it net-pod -- curl -s $pod | egrep 'Host|RemoteAddr'; done

Hostname: webpod1

RemoteAddr: 10.10.0.6:48036

Host: 10.10.1.2

Hostname: webpod2

RemoteAddr: 10.10.0.6:45152

Host: 10.10.3.2

Hostname: webpod3

RemoteAddr: 10.10.0.6:38062

Host: 10.10.2.2

# Store the Service IP in a variable: the ClusterIP address of svc-clusterip

❯ SVC1=$(kubectl get svc svc-clusterip -o jsonpath={.spec.clusterIP})

echo $SVC1

10.200.1.206

# When the Service above is created, kube-proxy adds iptables rules on every node

❯ docker exec -it myk8s-control-plane iptables -t nat -S | grep $SVC1

-A KUBE-SERVICES -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF

-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i iptables -t nat -S | grep $SVC1; echo; done

>> node myk8s-control-plane <<

-A KUBE-SERVICES -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF

-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ

>> node myk8s-worker <<

-A KUBE-SERVICES -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF

-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ

>> node myk8s-worker2 <<

-A KUBE-SERVICES -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF

-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ

>> node myk8s-worker3 <<

-A KUBE-SERVICES -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-SVC-KBDEBIL6IU6WL7RF

-A KUBE-SVC-KBDEBIL6IU6WL7RF ! -s 10.10.0.0/16 -d 10.200.1.206/32 -p tcp -m comment --comment "default/svc-clusterip:svc-webport cluster IP" -m tcp --dport 9000 -j KUBE-MARK-MASQ
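The second rule on each node, `! -s 10.10.0.0/16 ... -j KUBE-MARK-MASQ`, marks only traffic whose source lies outside the Pod CIDR. A minimal Python sketch of that match, using the addresses from this lab (the function name is illustrative):

```python
import ipaddress

POD_CIDR = ipaddress.ip_network("10.10.0.0/16")  # Pod range used in this lab


def marked_for_masquerade(src_ip):
    """Mirror '! -s 10.10.0.0/16 -j KUBE-MARK-MASQ': mark when the source is outside the Pod CIDR."""
    return ipaddress.ip_address(src_ip) not in POD_CIDR


# 10.10.0.6 is net-pod; 172.18.0.2 is a node address on the kind bridge
for src in ("10.10.0.6", "172.18.0.2"):
    print(src, marked_for_masquerade(src))
```

Under this model, requests from net-pod keep their source address, which is consistent with the whoami responses in this post reporting `RemoteAddr: 10.10.0.6`, while a request to the ClusterIP from a node address would be marked and later SNATed.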

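## (Note) The ClusterIP does not appear among the TCP listen sockets shown by ss, and no separate /32 host route is added for it -> in other words, the traffic is handled by the iptables rules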

❯ docker exec -t myk8s-control-plane ss -tnlp

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 172.18.0.4:2379 0.0.0.0:* users:(("etcd",pid=487,fd=9))

LISTEN 0 4096 172.18.0.4:2380 0.0.0.0:* users:(("etcd",pid=487,fd=7))

LISTEN 0 4096 127.0.0.1:10257 0.0.0.0:* users:(("kube-controller",pid=497,fd=3))

LISTEN 0 4096 127.0.0.1:10259 0.0.0.0:* users:(("kube-scheduler",pid=446,fd=3))

LISTEN 0 4096 127.0.0.1:10248 0.0.0.0:* users:(("kubelet",pid=218,fd=15))

LISTEN 0 4096 127.0.0.1:10249 0.0.0.0:* users:(("kube-proxy",pid=968,fd=12))

LISTEN 0 4096 127.0.0.1:2381 0.0.0.0:* users:(("etcd",pid=487,fd=14))

LISTEN 0 4096 127.0.0.1:2379 0.0.0.0:* users:(("etcd",pid=487,fd=8))

LISTEN 0 4096 127.0.0.11:45917 0.0.0.0:*

LISTEN 0 4096 127.0.0.1:36941 0.0.0.0:* users:(("containerd",pid=139,fd=11))

LISTEN 0 4096 *:10250 *:* users:(("kubelet",pid=218,fd=22))

LISTEN 0 4096 *:10256 *:* users:(("kube-proxy",pid=968,fd=13))

LISTEN 0 4096 *:6443 *:* users:(("kube-apiserver",pid=440,fd=3))

❯ docker exec -t myk8s-control-plane ip -c route

default via 172.18.0.1 dev eth0

10.10.0.2 dev veth3332df74 scope host

10.10.0.3 dev veth5ce7878b scope host

10.10.0.4 dev vethd9e225fc scope host

10.10.0.5 dev veth11d53a74 scope host

10.10.0.6 dev vethff436852 scope host

10.10.1.0/24 via 172.18.0.5 dev eth0

10.10.2.0/24 via 172.18.0.3 dev eth0

10.10.3.0/24 via 172.18.0.2 dev eth0

172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.4
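As a quick check of the note above, the following Python sketch runs a longest-prefix match over the routes printed by `ip route` (the per-Pod host routes are left out for brevity). `lookup` is a hypothetical helper, not a real routing API.

```python
import ipaddress

# (destination, next hop) pairs copied from the 'ip -c route' output above
ROUTES = [
    ("0.0.0.0/0", "via 172.18.0.1 dev eth0"),
    ("10.10.1.0/24", "via 172.18.0.5 dev eth0"),
    ("10.10.2.0/24", "via 172.18.0.3 dev eth0"),
    ("10.10.3.0/24", "via 172.18.0.2 dev eth0"),
    ("172.18.0.0/16", "dev eth0"),
]


def lookup(dst):
    """Return the most specific route that contains dst (longest-prefix match)."""
    addr = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(net), hop)
        for net, hop in ROUTES
        if addr in ipaddress.ip_network(net)
    ]
    net, hop = max(matches, key=lambda m: m[0].prefixlen)
    return f"{net} {hop}"


print(lookup("10.200.1.206"))  # the ClusterIP: only the default route matches
print(lookup("10.10.1.2"))     # webpod1 after DNAT: a Pod subnet route matches
```

The ClusterIP has no dedicated route, so it is the DNAT performed in the nat table before the routing decision that turns the destination into a routable Pod IP.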

# Test access on TCP ports 80 and 9000: check what each output means

❯ kubectl exec -it net-pod -- curl -s --connect-timeout 1 $SVC1

command terminated with exit code 28

❯ kubectl exec -it net-pod -- curl -s --connect-timeout 1 $SVC1:9000

Hostname: webpod1

IP: 127.0.0.1

IP: ::1

IP: 10.10.1.2

IP: fe80::8c6f:7dff:febe:38d2

RemoteAddr: 10.10.0.6:37082

GET / HTTP/1.1

Host: 10.200.1.206:9000

User-Agent: curl/8.7.1

Accept: */*

❯ kubectl exec -it net-pod -- curl -s --connect-timeout 1 $SVC1:9000 | grep Hostname

Hostname: webpod2

❯ kubectl exec -it net-pod -- curl -s --connect-timeout 1 $SVC1:9000 | grep Hostname

Hostname: webpod3
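The two curl results above follow from the rule match: only `10.200.1.206:9000` jumps to the Service chain, so the request to the default port 80 is never DNATed and curl gives up with exit code 28 (timeout). A toy Python model of that behavior, using the values from this lab; `dnat` is an illustrative function, not kube-proxy code.

```python
import random

CLUSTER_IP, PORT, TARGET_PORT = "10.200.1.206", 9000, 80  # from svc-clusterip
BACKENDS = ["10.10.1.2", "10.10.3.2", "10.10.2.2"]        # webpod1..3


def dnat(dst_ip, dst_port, rng=random):
    """Return the rewritten (ip, port), or None when no Service rule matches."""
    if (dst_ip, dst_port) != (CLUSTER_IP, PORT):
        return None  # e.g. ClusterIP:80 matches nothing
    return rng.choice(BACKENDS), TARGET_PORT


print(dnat(CLUSTER_IP, 80))    # no match for port 80
print(dnat(CLUSTER_IP, 9000))  # rewritten to one backend on port 80
```

In other words, `port` is what the client dials on the Service IP and `targetPort` is what the backend Pod actually receives.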

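# Confirm load balancing through the Service (ClusterIP)

## Use a for loop to connect to the SVC1 IP repeatedly (10 times, then 100 times) and count how often each Hostname appears

## Running it repeatedly shows that curl requests to the SVC1 IP are spread across the 3 Pods at roughly 33% each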

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..10}; do curl -s $SVC1:9000 | grep Hostname; done | sort | uniq -c | sort -nr"

4 Hostname: webpod3

3 Hostname: webpod2

3 Hostname: webpod1

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..100}; do curl -s $SVC1:9000 | grep Hostname; done | sort | uniq -c | sort -nr"

36 Hostname: webpod1

32 Hostname: webpod3

32 Hostname: webpod2
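The near-even split is what one would expect from chained `-m statistic --mode random` rules. The kube-dns chain dumped earlier in this post uses `--probability 0.5` for its first endpoint and an unconditional jump for the second; the sketch below assumes the same pattern extends to n endpoints (1/n, 1/(n-1), ..., 1) and verifies that each endpoint then receives an equal share. Function names are illustrative.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Assumed per-rule --probability values for n endpoints; the last rule always matches."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(probs):
    """Chance that each rule is the one that fires when rules are tried top to bottom."""
    shares, remaining = [], Fraction(1)
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


probs = rule_probabilities(3)
print([str(p) for p in probs])
print([str(s) for s in effective_shares(probs)])
```

With three backends the per-rule probabilities differ, yet every Pod ends up with one third of new connections, which matches the 36/32/32 counts above within normal random variation.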

Or

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..100}; do curl -s $SVC1:9000 | grep Hostname; sleep 1; done"

Hostname: webpod2

Hostname: webpod1

Hostname: webpod1

Hostname: webpod3

Hostname: webpod1

Hostname: webpod3

Hostname: webpod1

Hostname: webpod3

Hostname: webpod1

Hostname: webpod2

...

Hostname: webpod3

Hostname: webpod2

Hostname: webpod1

Hostname: webpod1

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..100}; do curl -s $SVC1:9000 | grep Hostname; sleep 0.1; done"

Hostname: webpod2

Hostname: webpod3

Hostname: webpod2

Hostname: webpod2

Hostname: webpod1

Hostname: webpod2

Hostname: webpod3

Hostname: webpod1

Hostname: webpod2

...

Hostname: webpod1

Hostname: webpod2

Hostname: webpod1

Hostname: webpod3

Hostname: webpod1

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..10000}; do curl -s $SVC1:9000 | grep Hostname; sleep 0.01; done"

# Inspect conntrack

❯ docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/#

----------------------------------------

root@myk8s-control-plane:/# conntrack -h

Command line interface for the connection tracking system. Version 1.4.7

Usage: conntrack [commands] [options]

Commands:

-L [table] [options] List conntrack or expectation table

-G [table] parameters Get conntrack or expectation

-D [table] parameters Delete conntrack or expectation

-I [table] parameters Create a conntrack or expectation

-U [table] parameters Update a conntrack

-E [table] [options] Show events

-F [table] Flush table

-C [table] Show counter

-S Show statistics

Tables: conntrack, expect, dying, unconfirmed

Conntrack parameters and options:

-n, --src-nat ip source NAT ip

-g, --dst-nat ip destination NAT ip

-j, --any-nat ip source or destination NAT ip

-m, --mark mark Set mark

-c, --secmark secmark Set selinux secmark

-e, --event-mask eventmask Event mask, eg. NEW,DESTROY

-z, --zero Zero counters while listing

-o, --output type[,...] Output format, eg. xml

-l, --label label[,...] conntrack labels

Expectation parameters and options:

--tuple-src ip Source address in expect tuple

--tuple-dst ip Destination address in expect tuple

Updating parameters and options:

--label-add label Add label

--label-del label Delete label

Common parameters and options:

-s, --src, --orig-src ip Source address from original direction

-d, --dst, --orig-dst ip Destination address from original direction

-r, --reply-src ip Source addres from reply direction

-q, --reply-dst ip Destination address from reply direction

-p, --protonum proto Layer 4 Protocol, eg. 'tcp'

-f, --family proto Layer 3 Protocol, eg. 'ipv6'

-t, --timeout timeout Set timeout

-u, --status status Set status, eg. ASSURED

-w, --zone value Set conntrack zone

--orig-zone value Set zone for original direction

--reply-zone value Set zone for reply direction

-b, --buffer-size Netlink socket buffer size

--mask-src ip Source mask address

--mask-dst ip Destination mask address

root@myk8s-control-plane:/# conntrack -E

[NEW] tcp 6 120 SYN_SENT src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 [UNREPLIED] src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056

[UPDATE] tcp 6 60 SYN_RECV src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056

[UPDATE] tcp 6 86400 ESTABLISHED src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056 [ASSURED]

[UPDATE] tcp 6 120 FIN_WAIT src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056 [ASSURED]

[UPDATE] tcp 6 30 LAST_ACK src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056 [ASSURED]

[UPDATE] tcp 6 120 TIME_WAIT src=10.10.0.1 dst=10.10.0.5 sport=60056 dport=8181 src=10.10.0.5 dst=10.10.0.1 sport=8181 dport=60056 [ASSURED]

[NEW] tcp 6 120 SYN_SENT src=172.18.0.4 dst=172.18.0.4 sport=51790 dport=6443 [UNREPLIED] src=172.18.0.4 dst=172.18.0.4 sport=6443 dport=51790

[UPDATE] tcp 6 60 SYN_RECV src=172.18.0.4 dst=172.18.0.4 sport=51790 dport=6443 src=172.18.0.4 dst=172.18.0.4 sport=6443 dport=51790

[UPDATE] tcp 6 86400 ESTABLISHED src=172.18.0.4 dst=172.18.0.4 sport=51790 dport=6443 src=172.18.0.4 dst=172.18.0.4 sport=6443 dport=51790

root@myk8s-control-plane:/# conntrack -C

463

root@myk8s-control-plane:/# conntrack -S

cpu=0 found=0 invalid=0 insert=0 insert_failed=0 drop=0 early_drop=0 error=0 search_restart=0 clash_resolve=0 chaintoolong=0

cpu=1 found=0 invalid=0 insert=0 insert_failed=0 drop=0 early_drop=0 error=0 search_restart=0 clash_resolve=0 chaintoolong=0

cpu=2 found=0 invalid=0 insert=0 insert_failed=0 drop=0 early_drop=0 error=0 search_restart=0 clash_resolve=0 chaintoolong=0

cpu=3 found=0 invalid=0 insert=0 insert_failed=0 drop=0 early_drop=0 error=0 search_restart=0 clash_resolve=0 chaintoolong=0

root@myk8s-control-plane:/# conntrack -L --src 10.10.0.6 # net-pod IP

tcp 6 112 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=45850 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=45850 [ASSURED] mark=0 use=1

tcp 6 119 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55988 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=55988 [ASSURED] mark=0 use=1

tcp 6 118 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55918 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=55918 [ASSURED] mark=0 use=1

tcp 6 117 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55852 dport=9000 src=10.10.1.2 dst=10.10.0.6 sport=80 dport=55852 [ASSURED] mark=0 use=1

tcp 6 115 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=46202 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=46202 [ASSURED] mark=0 use=1

tcp 6 118 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55910 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=55910 [ASSURED] mark=0 use=1

tcp 6 117 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=46290 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=46290 [ASSURED] mark=0 use=1

tcp 6 119 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55952 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=55952 [ASSURED] mark=0 use=1

tcp 6 119 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=56004 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=56004 [ASSURED] mark=0 use=1

tcp 6 113 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=46018 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=46018 [ASSURED] mark=0 use=1

tcp 6 118 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55878 dport=9000 src=10.10.1.2 dst=10.10.0.6 sport=80 dport=55878 [ASSURED] mark=0 use=1

root@myk8s-control-plane:/# conntrack -L --dst $SVC1 # service ClusterIP

tcp 6 38 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=45786 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=45786 [ASSURED] mark=0 use=1

tcp 6 48 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=59934 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=59934 [ASSURED] mark=0 use=1

tcp 6 47 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=59886 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=59886 [ASSURED] mark=0 use=1

tcp 6 35 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=49076 dport=9000 src=10.10.1.2 dst=10.10.0.6 sport=80 dport=49076 [ASSURED] mark=0 use=1

tcp 6 29 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=48592 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=48592 [ASSURED] mark=0 use=1

tcp 6 43 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=46176 dport=9000 src=10.10.1.2 dst=10.10.0.6 sport=80 dport=46176 [ASSURED] mark=0 use=1

tcp 6 61 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=50614 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=50614 [ASSURED] mark=0 use=1

tcp 6 31 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=48712 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=48712 [ASSURED] mark=0 use=1

tcp 6 24 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=58558 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=58558 [ASSURED] mark=0 use=1

tcp 6 44 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=46202 dport=9000 src=10.10.1.2 dst=10.10.0.6 sport=80 dport=46202 [ASSURED] mark=0 use=1

tcp 6 54 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=60374 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=60374 [ASSURED] mark=0 use=1

tcp 6 53 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=60304 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=60304 [ASSURED] mark=0 use=1

tcp 6 52 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=60176 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=60176 [ASSURED] mark=0 use=1

tcp 6 58 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=50346 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=50346 [ASSURED] mark=0 use=1

tcp 6 38 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=45758 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=45758 [ASSURED] mark=0 use=2

tcp 6 32 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=48760 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=48760 [ASSURED] mark=0 use=1

tcp

exit

----------------------------------------
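In each `conntrack -L` entry above, the first `src=`/`dst=` pair is the original direction (net-pod to the ClusterIP) and the second pair is the reply direction, so the second `src=` is the pod that actually answered after DNAT. The following is a minimal sketch for tallying those backends; the function name `count_backends` is made up for illustration, and the three sample lines are copied from the output above.

```shell
#!/bin/sh
# Tally backend pods from `conntrack -L` lines read on stdin.
# The 2nd src= field of each entry is the reply-direction source,
# i.e. the pod the ClusterIP was DNAT'ed to.
count_backends() {
  awk '{
    n = 0
    for (i = 1; i <= NF; i++) {
      if ($i ~ /^src=/) {
        n++
        if (n == 2) { hits[substr($i, 5)]++; break }
      }
    }
  }
  END { for (ip in hits) print hits[ip], ip }' | sort -k1,1nr -k2,2
}

# Sample input: three entries from the conntrack output above.
count_backends <<'EOF'
tcp 6 112 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=45850 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=45850 [ASSURED] mark=0 use=1
tcp 6 119 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55988 dport=9000 src=10.10.2.2 dst=10.10.0.6 sport=80 dport=55988 [ASSURED] mark=0 use=1
tcp 6 118 TIME_WAIT src=10.10.0.6 dst=10.200.1.206 sport=55918 dport=9000 src=10.10.3.2 dst=10.10.0.6 sport=80 dport=55918 [ASSURED] mark=0 use=1
EOF
```

Against the live cluster, the same function could be fed with something like `docker exec myk8s-control-plane conntrack -L --dst "$SVC1" | count_backends`. Lines without two `src=` fields, such as a truncated entry, are simply skipped.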

# (Note) ebtables, which operates at the link layer

root@myk8s-control-plane:/# ebtables -L

Bridge table: filter

Bridge chain: INPUT, entries: 0, policy: ACCEPT

Bridge chain: FORWARD, entries: 0, policy: ACCEPT

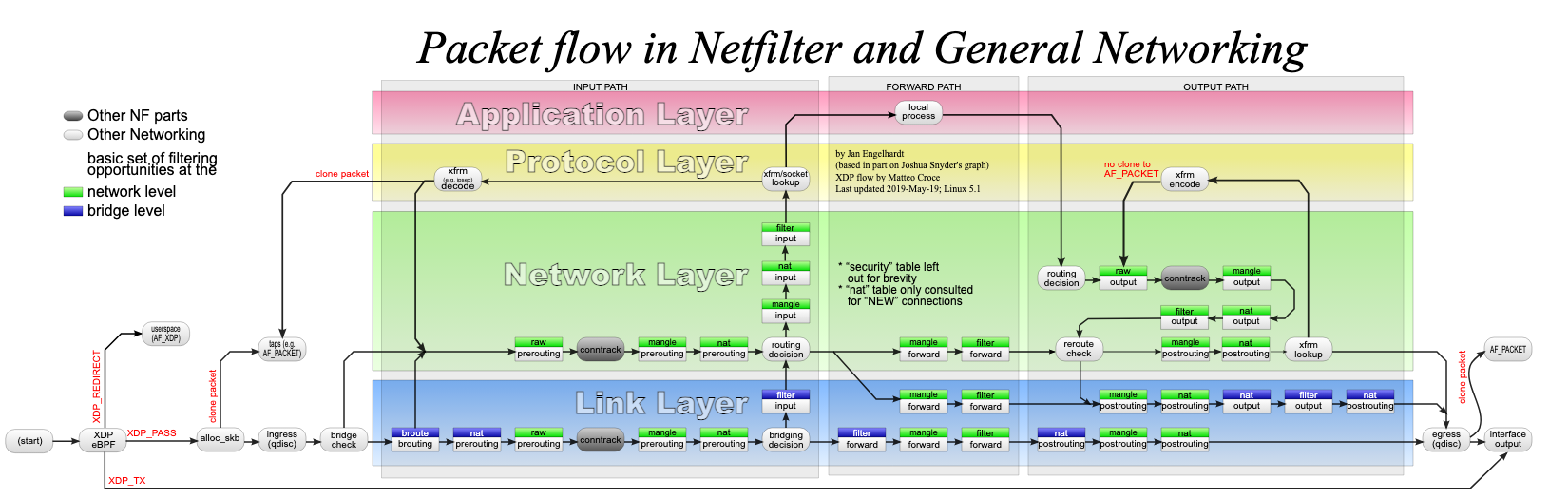

Bridge chain: OUTPUT, entries: 0, policy: ACCEPT- Packet flow in Netfilter and General Networking

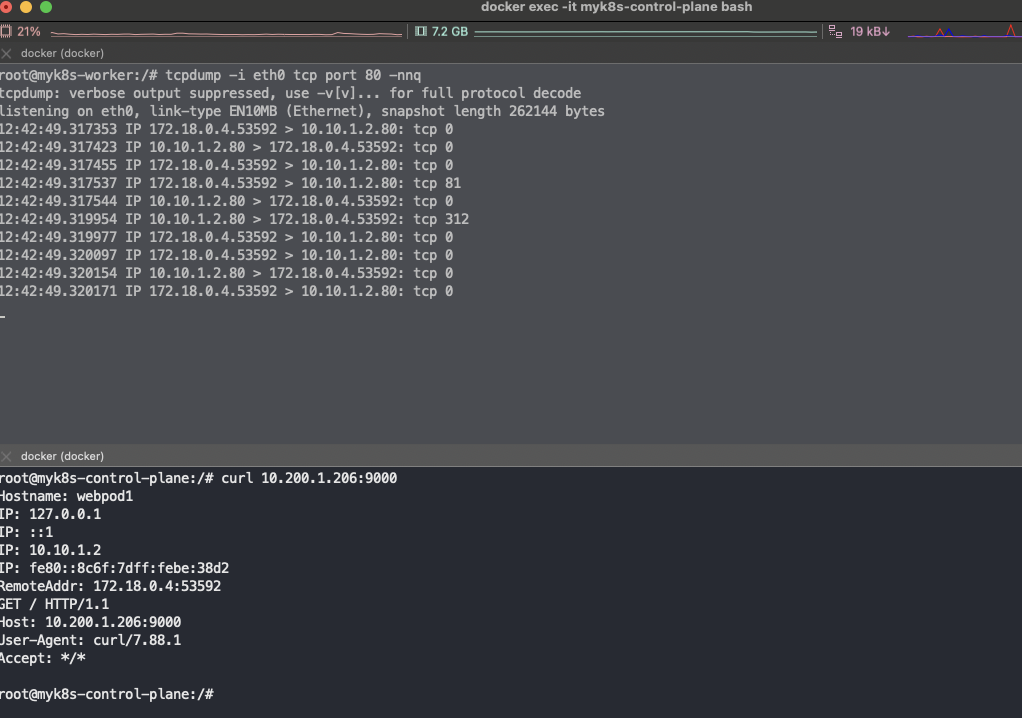

Checking packet dumps on each worker node

- Option 1: Open a bash shell on one or all three worker nodes and leave tcpdump running

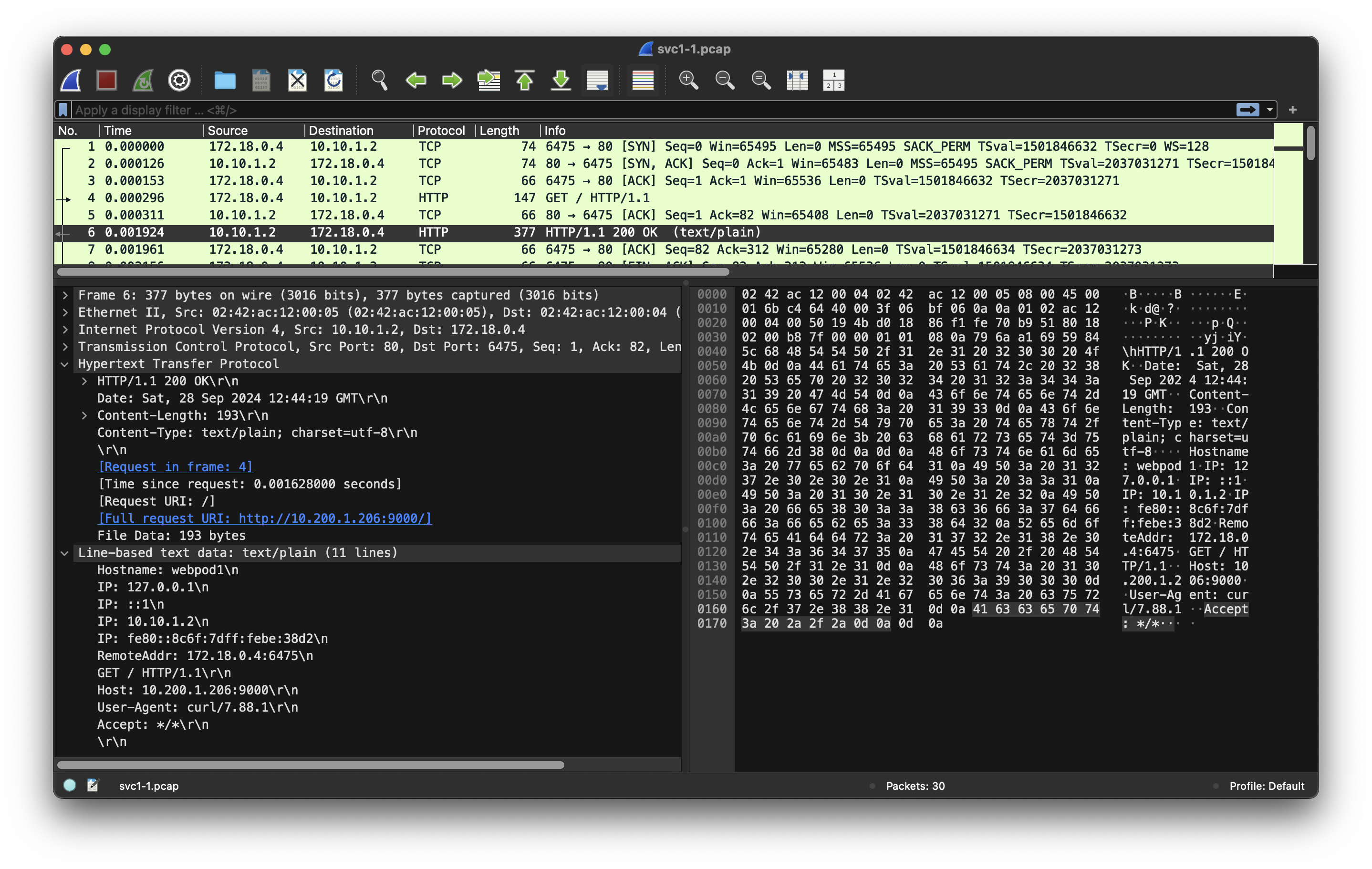

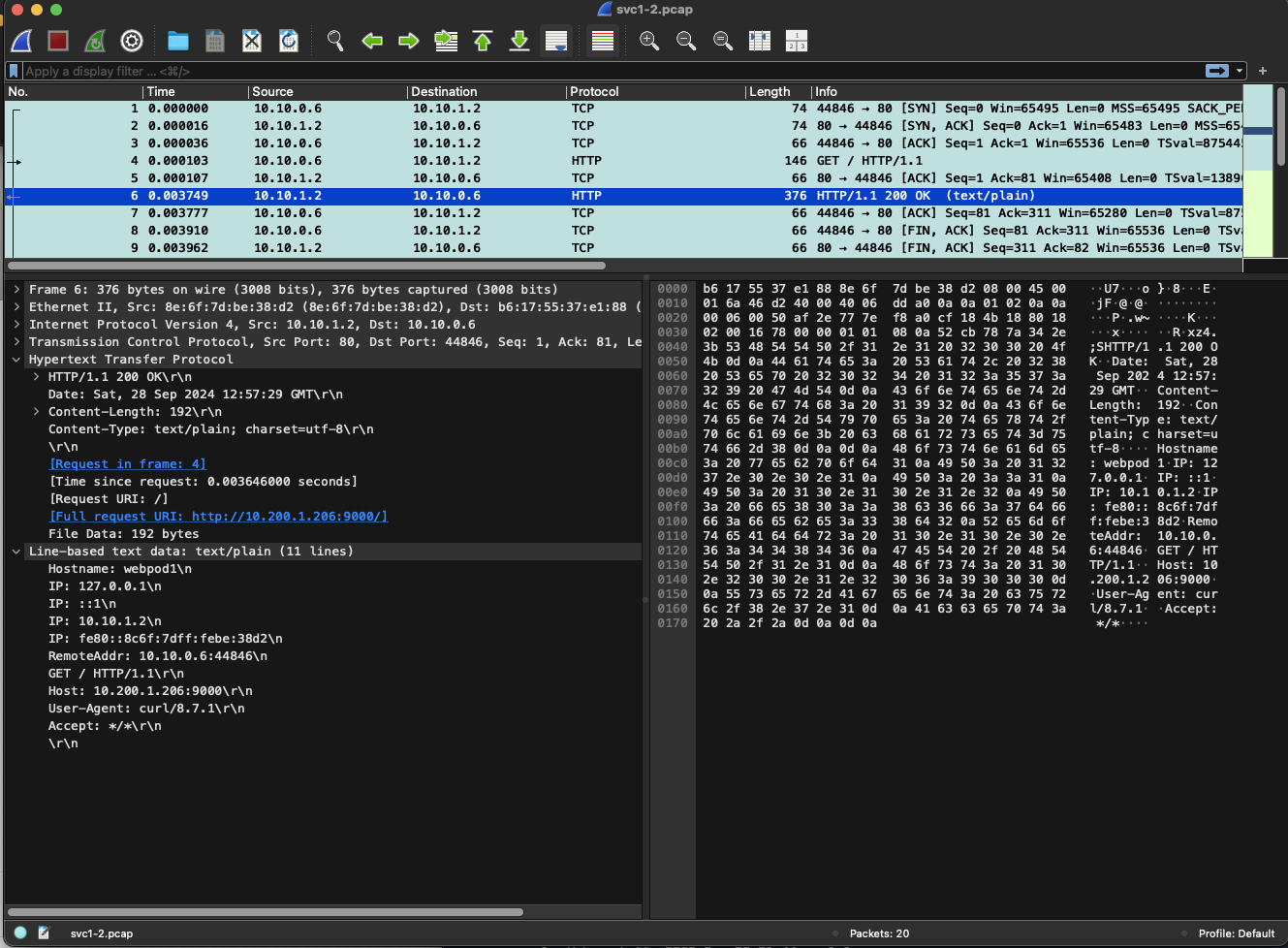

docker exec -it myk8s-worker bash

docker exec -it myk8s-worker2 bash

docker exec -it myk8s-worker3 bash

----------------------------------

# Check NIC information

ip -c link

ip -c route

ip -c addr

# tcpdump/ngrep : eth0 >> Why is there no traffic on tcp port 9000? Look at the iptables rule flow diagram once more and work it out

## Try the ngrep network packet analyzer: it gives a clean view, for example by filtering only calls to a specific URL - link

tcpdump -i eth0 tcp port 80 -nnq

tcpdump -i eth0 tcp port 80 -w /root/svc1-1.pcap

tcpdump -i eth0 tcp port 9000 -nnq

root@myk8s-worker:~# ngrep -tW byline -d eth0 '' 'tcp port 80'

interface: eth0 (172.18.0.0/255.255.0.0)

filter: ( tcp port 80 ) and ((ip || ip6) || (vlan && (ip || ip6)))

####

T 2024/09/28 12:51:51.023384 10.10.0.6:45094 -> 10.10.1.2:80 [AP] #4

GET / HTTP/1.1.

Host: 10.200.1.206:9000.

User-Agent: curl/8.7.1.

Accept: */*.

.

##

T 2024/09/28 12:51:51.024149 10.10.1.2:80 -> 10.10.0.6:45094 [AP] #6

HTTP/1.1 200 OK.

Date: Sat, 28 Sep 2024 12:51:51 GMT.

Content-Length: 192.

Content-Type: text/plain; charset=utf-8.

.

Hostname: webpod1

IP: 127.0.0.1

IP: ::1

IP: 10.10.1.2

IP: fe80::8c6f:7dff:febe:38d2

RemoteAddr: 10.10.0.6:45094

GET / HTTP/1.1.

Host: 10.200.1.206:9000.

User-Agent: curl/8.7.1.

Accept: */*.

.

####

# tcpdump/ngrep : vethX

VETH1=veth232ce77a # <your own veth name>

root@myk8s-worker:~# VETH1=veth232ce77a

root@myk8s-worker:~# tcpdump -i $VETH1 tcp port 80 -nn

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on veth232ce77a, link-type EN10MB (Ethernet), snapshot length 262144 bytes

12:55:44.539521 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [S], seq 1147449536, win 65495, options [mss 65495,sackOK,TS val 875340513 ecr 0,nop,wscale 7], length 0

12:55:44.539540 IP 10.10.1.2.80 > 10.10.0.6.57148: Flags [S.], seq 90445253, ack 1147449537, win 65483, options [mss 65495,sackOK,TS val 1388961796 ecr 875340513,nop,wscale 7], length 0

12:55:44.539558 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [.], ack 1, win 512, options [nop,nop,TS val 875340513 ecr 1388961796], length 0

12:55:44.539616 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [P.], seq 1:81, ack 1, win 512, options [nop,nop,TS val 875340513 ecr 1388961796], length 80: HTTP: GET / HTTP/1.1

12:55:44.539620 IP 10.10.1.2.80 > 10.10.0.6.57148: Flags [.], ack 81, win 511, options [nop,nop,TS val 1388961796 ecr 875340513], length 0

12:55:44.541805 IP 10.10.1.2.80 > 10.10.0.6.57148: Flags [P.], seq 1:311, ack 81, win 512, options [nop,nop,TS val 1388961799 ecr 875340513], length 310: HTTP: HTTP/1.1 200 OK

12:55:44.541858 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [.], ack 311, win 510, options [nop,nop,TS val 875340516 ecr 1388961799], length 0

12:55:44.542075 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [F.], seq 81, ack 311, win 512, options [nop,nop,TS val 875340516 ecr 1388961799], length 0

12:55:44.542791 IP 10.10.1.2.80 > 10.10.0.6.57148: Flags [F.], seq 311, ack 82, win 512, options [nop,nop,TS val 1388961800 ecr 875340516], length 0

12:55:44.542816 IP 10.10.0.6.57148 > 10.10.1.2.80: Flags [.], ack 312, win 512, options [nop,nop,TS val 875340517 ecr 1388961800], length 0

tcpdump -i $VETH1 tcp port 80 -w /root/svc1-2.pcap

tcpdump -i $VETH1 tcp port 9000 -nn

ngrep -tW byline -d $VETH1 '' 'tcp port 80'

exit

----------------------------------

- Option 2: Run tcpdump from the host without opening a bash shell in the node (?) containers

docker exec -it myk8s-worker tcpdump -i eth0 tcp port 80 -nnq

VETH1=<your own veth name> # docker exec -it myk8s-worker ip -c route

docker exec -it myk8s-worker tcpdump -i $VETH1 tcp port 80 -nnq

# Copy the pcap files to the host PC >> analyze them in wireshark

docker cp myk8s-worker:/root/svc1-1.pcap .

docker cp myk8s-worker:/root/svc1-2.pcap .
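The veth name has to be looked up per node (the comment above points at `ip -c route`). As a rough sketch, the lookup can be scripted by matching the pod IP in the routing table. The helper name `veth_for_pod_ip` is hypothetical, and the sample route lines are illustrative only: they assume the CNI installs one host route per pod in the form `<pod IP> dev <veth> scope host`, which should be verified against your own node.

```shell
#!/bin/sh
# Print the veth device that a pod IP is routed through.
# $1 = pod IP; `ip route` output (without -c, to avoid color codes) on stdin.
veth_for_pod_ip() {
  awk -v ip="$1" '
    $1 == ip {
      for (i = 2; i < NF; i++) {
        if ($i == "dev") { print $(i + 1); exit }
      }
    }'
}

# Illustrative routing table (assumed format, not captured from the lab).
veth_for_pod_ip 10.10.1.2 <<'EOF'
default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.4 dev eth0
10.10.1.2 dev veth232ce77a scope host
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.2
EOF
```

On the host this could be combined as `VETH1="$(docker exec myk8s-worker ip route | veth_for_pod_ip 10.10.1.2)"` before running the tcpdump commands above.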

Session Affinity

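- When connecting through a Service, requests are distributed randomly across the endpoint pods.

- To forward a client's requests to the same destination pod every time, session affinity must be configured.

- The pod that a client was first forwarded to through the Service is recorded as connection state. When the same client connects to the Service again, that state is looked up and the request goes to the pod it was first forwarded to, so the client effectively sees a fixed backend pod.

- Configuration and pod connection check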

# Check basic information

❯ kubectl get svc svc-clusterip -o yaml

apiVersion: v1

kind: Service

metadata:

  annotations:

    kubectl.kubernetes.io/last-applied-configuration: |

      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"svc-clusterip","namespace":"default"},"spec":{"ports":[{"name":"svc-webport","port":9000,"targetPort":80}],"selector":{"app":"webpod"},"type":"ClusterIP"}}

  creationTimestamp: "2024-09-28T10:15:27Z"

  name: svc-clusterip

  namespace: default

  resourceVersion: "31042"

  uid: dd626500-7b5c-41cc-854a-e951285c6a92

spec:

  clusterIP: 10.200.1.206

  clusterIPs:

  - 10.200.1.206

  internalTrafficPolicy: Cluster

  ipFamilies:

  - IPv4

  ipFamilyPolicy: SingleStack

  ports:

  - name: svc-webport

    port: 9000

    protocol: TCP

    targetPort: 80

  selector:

    app: webpod

  sessionAffinity: None

  type: ClusterIP

status:

  loadBalancer: {}

❯ kubectl get svc svc-clusterip -o yaml | grep sessionAffinity

  sessionAffinity: None

# Repeated access

❯ kubectl exec -it net-pod -- zsh -c "while true; do curl -s --connect-timeout 1 $SVC1:9000 | egrep 'Hostname|IP: 10|Remote'; date '+%Y-%m-%d %H:%M:%S' ; echo ; sleep 1; done"

Hostname: webpod2

IP: 10.10.3.2

RemoteAddr: 10.10.0.6:37044

2024-09-28 13:46:15

Hostname: webpod2

IP: 10.10.3.2

RemoteAddr: 10.10.0.6:37050

2024-09-28 13:46:16

Hostname: webpod2

IP: 10.10.3.2

RemoteAddr: 10.10.0.6:37056

2024-09-28 13:46:18

Hostname: webpod1

IP: 10.10.1.2

RemoteAddr: 10.10.0.6:37062

2024-09-28 13:46:19

Hostname: webpod3

IP: 10.10.2.2

RemoteAddr: 10.10.0.6:37078

2024-09-28 13:46:20

# Change the setting to sessionAffinity: ClientIP

❯ kubectl patch svc svc-clusterip -p '{"spec":{"sessionAffinity":"ClientIP"}}'

service/svc-clusterip patched

# or

kubectl get svc svc-clusterip -o yaml | sed -e "s/sessionAffinity: None/sessionAffinity: ClientIP/" | kubectl apply -f -

#

❯ kubectl get svc svc-clusterip -o yaml

...

  sessionAffinity: ClientIP

  sessionAffinityConfig:

    clientIP:

      timeoutSeconds: 10800

...
# Run from the client (TestPod) shell

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..100}; do curl -s $SVC1:9000 | grep Hostname; done | sort | uniq -c | sort -nr"

100 Hostname: webpod2

❯ kubectl exec -it net-pod -- zsh -c "for i in {1..1000}; do curl -s $SVC1:9000 | grep Hostname; done | sort | uniq -c | sort -nr"

1000 Hostname: webpod2

- Client (TestPod) → Service (ClusterIP): every connection is pinned to a single destination (backend) pod
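The `timeoutSeconds: 10800` shown in the patched Service is the default affinity window (3 hours). For lab testing a shorter window makes it easier to watch the pinning expire. Below is a small sketch that builds the patch body; the helper name `affinity_patch` and the value 60 are arbitrary choices for illustration, while `sessionAffinityConfig.clientIP.timeoutSeconds` is the field shown in the YAML above.

```shell
#!/bin/sh
# Build a JSON patch that enables ClientIP affinity with a custom timeout.
# $1 = timeout in seconds (Kubernetes accepts 1 to 86400).
affinity_patch() {
  printf '{"spec":{"sessionAffinity":"ClientIP","sessionAffinityConfig":{"clientIP":{"timeoutSeconds":%d}}}}' "$1"
}

# Print the patch body for a 60-second affinity window.
affinity_patch 60
echo
```

It would be applied with `kubectl patch svc svc-clusterip -p "$(affinity_patch 60)"`. Restoring the original behavior is the reverse of the earlier patch: `kubectl patch svc svc-clusterip -p '{"spec":{"sessionAffinity":"None"}}'`.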

- Check the applied iptables rules: rules for sticky connections are added to the existing ones