This post summarizes what I studied and practiced in the third cohort of KANS (Kubernetes Advanced Networking Study). Week 5 covers LoadBalancer (MetalLB) and IPVS. I have also added how to build a K8S lab environment on AWS with Terraform.

1. Installing K8S for the lab

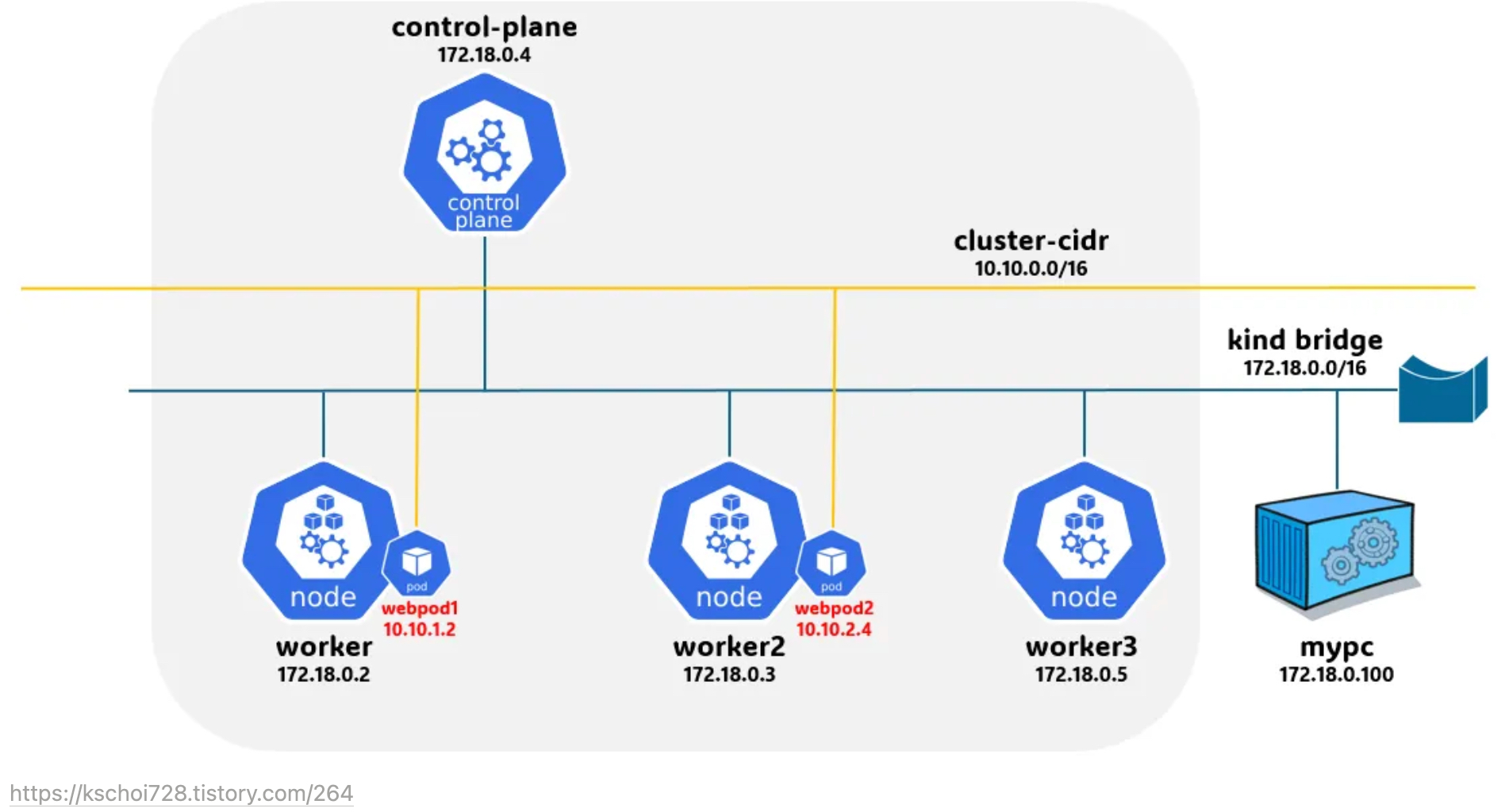

K8S cluster diagram

Lab environment

kind automatically creates the node containers on Docker and then builds the k8s cluster on top of them.

K8S v1.31.0, CNI (Kindnet, direct routing mode), iptables proxy mode

- Node (actually container) network range: 172.18.0.0/16

- Pod network range: 10.10.0.0/16 ⇒ one /24 per node: 10.10.0.0/24, 10.10.1.0/24, 10.10.2.0/24, 10.10.3.0/24

- Service network range: 10.200.1.0/24

1) Installing K8S with kind on AWS EC2 using Terraform

- Prerequisites: AWS account, IAM user, credentials, key pair

- Source: https://github.com/icebreaker70/terraform-ec2-kind-k8s.git

- AWS Credential 구성

# IAM Console에서 devops 계정 생성시 AdministratorAccess 권한부여, Access key 생성

# CLI Credential 구성

❯ aws configure --profile devops

AWS Access Key ID [*************]:

AWS Secret Access Key [****************]:

Default region name [ap-northeast-2]:

Default output format [json]:

# devops를 기본 aws profile로 지정

❯ export AWS_PROFILE=devops

❯ aws sts get-caller-identity

{

"UserId": "AIDAX2ZEYLDWQWVQ4DQVY",

"Account": "538*****3**",

"Arn": "arn:aws:iam::538*****3**:user/devops"

}-

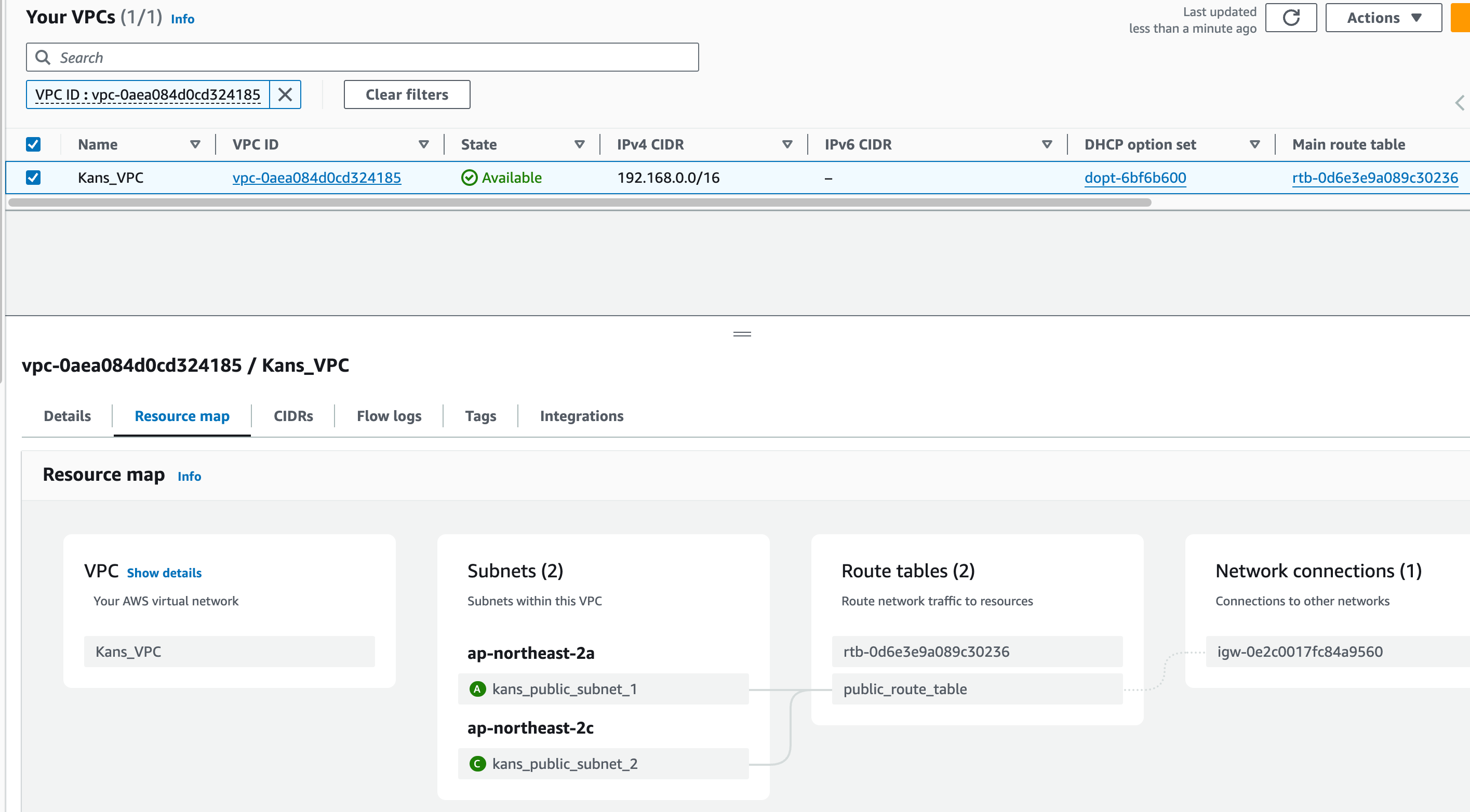

Terraform code : main.tf

#--------------
# Provider configuration
#--------------
provider "aws" {
  region  = "ap-northeast-2" # change to the AWS region you want
  profile = "devops"
}

#--------------
# Create the VPC
#--------------
resource "aws_vpc" "main" {
  cidr_block           = "192.168.0.0/16"
  enable_dns_support   = true
  enable_dns_hostnames = true

  tags = {
    Name = "Kans_VPC"
  }
}

# Internet gateway
resource "aws_internet_gateway" "gw" {
  vpc_id = aws_vpc.main.id
}

# Create the public subnets (2)
resource "aws_subnet" "public_1" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "192.168.0.0/23"
  availability_zone       = "ap-northeast-2a" # change to the availability zone you want
  map_public_ip_on_launch = true

  tags = {
    Name = "kans_public_subnet_1"
  }
}

resource "aws_subnet" "public_2" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "192.168.2.0/23"
  availability_zone       = "ap-northeast-2c" # change to the availability zone you want
  map_public_ip_on_launch = true

  tags = {
    Name = "kans_public_subnet_2"
  }
}

# Create the route table
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.gw.id
  }

  tags = {
    Name = "public_route_table"
  }
}

# Associate the route table with the subnets
resource "aws_route_table_association" "public_1_assoc" {
  subnet_id      = aws_subnet.public_1.id
  route_table_id = aws_route_table.public.id
}

resource "aws_route_table_association" "public_2_assoc" {
  subnet_id      = aws_subnet.public_2.id
  route_table_id = aws_route_table.public.id
}

#-------------------
# Create the security group
#-------------------
resource "aws_security_group" "k8s_security_group" {
  vpc_id = aws_vpc.main.id

  ingress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["120.180.150.80/32"] # register the external IP of your own PC
  }

  ingress {
    from_port   = 0
    to_port     = 65535
    protocol    = "tcp"
    cidr_blocks = ["192.168.0.0/16"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }

  tags = {
    Name = "Kans-SG"
  }
}

#-------------------
# Create the EC2 instance
#-------------------
resource "aws_instance" "k8s_node" {
  ami                    = "ami-040c33c6a51fd5d96" # Ubuntu 24.04 LTS AMI
  instance_type          = "t3.medium"
  subnet_id              = aws_subnet.public_1.id
  vpc_security_group_ids = [aws_security_group.k8s_security_group.id]
  key_name               = "kans" # change to your own key pair

  root_block_device { # added block (the default root volume is 8 GiB)
    volume_size = 50
  }

  tags = {
    Name = "MyServer"
  }

  associate_public_ip_address = true

  # Copy the file to the instance
  provisioner "file" {
    source      = "init_cfg.sh" # path of the locally written script
    destination = "/home/ubuntu/init_cfg.sh"

    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file("~/.ssh/kans.pem")
      host        = self.public_ip
    }
  }

  # Run the copied script
  provisioner "remote-exec" {
    connection {
      type        = "ssh"
      user        = "ubuntu"
      private_key = file("~/.ssh/kans.pem")
      host        = self.public_ip
    }

    inline = [
      "chmod +x /home/ubuntu/init_cfg.sh",
      "sudo /home/ubuntu/init_cfg.sh" # run the uploaded script
    ]
  }
}

#-----------------
# Output the EC2 public IP
#-----------------
output "ec2_public_ip" {
  description = "Public IP addresses of the EC2 instance"
  value       = aws_instance.k8s_node.public_ip
}

- init_cfg.sh: installs kind and the other tools needed for the k8s environment

#!/bin/bash
hostnamectl --static set-hostname MyServer

# Config convenience
echo 'alias vi=vim' >> /etc/profile
echo "sudo su -" >> /home/ubuntu/.bashrc
ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Disable ufw and AppArmor
systemctl stop ufw && systemctl disable ufw
systemctl stop apparmor && systemctl disable apparmor

# Install packages
apt update && apt-get install bridge-utils net-tools jq tree unzip kubecolor tcpdump -y

# Install Kind
curl -Lo ./kind https://kind.sigs.k8s.io/dl/v0.24.0/kind-linux-amd64
chmod +x ./kind
mv ./kind /usr/bin

# Install Docker Engine
echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
modprobe br_netfilter
sysctl -p /etc/sysctl.conf
curl -fsSL https://get.docker.com | sh

# Install kubectl
curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"
install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

# Install Helm
curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

# Alias kubectl to k
echo 'alias kc=kubecolor' >> /etc/profile
echo 'alias k=kubectl' >> /etc/profile
echo 'complete -o default -F __start_kubectl k' >> /etc/profile

# Source the kubectl completion
source <(kubectl completion bash)
echo 'source <(kubectl completion bash)' >> /etc/profile

# Install Kubectx & Kubens
git clone https://github.com/ahmetb/kubectx /opt/kubectx
ln -s /opt/kubectx/kubens /usr/local/bin/kubens
ln -s /opt/kubectx/kubectx /usr/local/bin/kubectx

# Install kube-ps1 and set PS1
git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1
cat <<"EOT" >> ~/.bash_profile
source /root/kube-ps1/kube-ps1.sh
KUBE_PS1_SYMBOL_ENABLE=true
function get_cluster_short() {
  echo "$1" | cut -d . -f1
}
KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short
KUBE_PS1_SUFFIX=') '
PS1='$(kube_ps1)'$PS1
EOT

# Raise the inotify limits that kind needs
sysctl fs.inotify.max_user_watches=524288
sysctl fs.inotify.max_user_instances=512
# The second echo appends (>>) so both settings end up in the file
echo 'fs.inotify.max_user_watches=524288' > /etc/sysctl.d/99-kind.conf
echo 'fs.inotify.max_user_instances=512' >> /etc/sysctl.d/99-kind.conf
sysctl -p
sysctl --system

- Running the Terraform code

❯ terraform init && terraform fmt && terraform validate
❯ terraform plan -out tfplan
❯ terraform apply tfplan
❯ terraform state list
aws_instance.k8s_node
aws_internet_gateway.gw
aws_route_table.public
aws_route_table_association.public_1_assoc
aws_route_table_association.public_2_assoc
aws_security_group.k8s_security_group
aws_subnet.public_1
aws_subnet.public_2
aws_vpc.main
❯ terraform output
ec2_public_ip = "13.125.83.248"

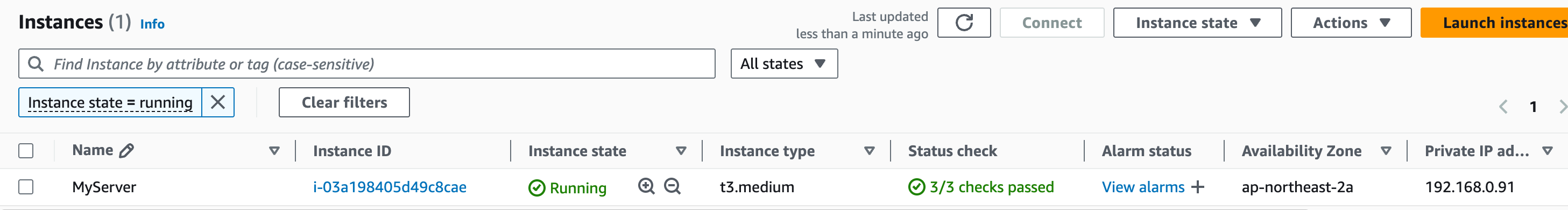

Result of creating the VPC and EC2 instance

- Connecting to EC2

❯ ssh -i ~/.ssh/kans.pem ubuntu@$(terraform output -raw ec2_public_ip)

- Server check

## Check the current directory
pwd

## Check the account information
whoami
id

## Check the Ubuntu version
# lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description:    Ubuntu 24.04.1 LTS
Release:        24.04
Codename:       noble

## Check the installed tool versions
# kind version
kind v0.24.0 go1.22.6 linux/amd64

## Docker version (a 'WARNING: bridge-nf-call-iptables' message, if any, shows up at the bottom of `docker info`; be sure it is absent)
# docker version
Client: Docker Engine - Community
 Version:           27.3.1
 API version:       1.47
 Go version:        go1.22.7
 Git commit:        ce12230
 Built:             Fri Sep 20 11:40:59 2024
 OS/Arch:           linux/amd64
 Context:           default

Server: Docker Engine - Community
 Engine:
  Version:          27.3.1
  API version:      1.47 (minimum version 1.24)
  Go version:       go1.22.7
  Git commit:       41ca978
  Built:            Fri Sep 20 11:40:59 2024
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.7.22
  GitCommit:        7f7fdf5fed64eb6a7caf99b3e12efcf9d60e311c
 runc:
  Version:          1.1.14
  GitCommit:        v1.1.14-0-g2c9f560
 docker-init:
  Version:          0.19.0
  GitCommit:        de40ad0

# kubectl version --client=true
Client Version: v1.31.1
Kustomize Version: v5.4.2

# helm version
version.BuildInfo{Version:"v3.16.1", GitCommit:"5a5449dc42be07001fd5771d56429132984ab3ab", GitTreeState:"clean", GoVersion:"go1.22.7"}
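The version checks above can also be condensed into a quick presence check. This is only a minimal sketch: `missing_tools` is a hypothetical helper name, and the tool list is my reading of what init_cfg.sh installs.

```shell
#!/usr/bin/env bash
# Sketch: report which of the expected tools are not on PATH.
# missing_tools is a hypothetical helper; the tool list mirrors init_cfg.sh.

missing_tools() {
  local tool
  for tool in "$@"; do
    # command -v succeeds only when the tool can be resolved on PATH
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing="$(missing_tools kind docker kubectl helm kubectx kubens)"
if [ -z "$missing" ]; then
  echo "all tools found"
else
  echo "missing:" $missing
fi
```

If anything is reported as missing, re-running the relevant part of init_cfg.sh is probably the quickest fix.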

- kind-svc.yaml

cat <<EOT > kind-svc.yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
featureGates:
  "InPlacePodVerticalScaling": true  # allows changing the resource requests and limits of a running pod
  "MultiCIDRServiceAllocator": true  # allows using multiple CIDR blocks for Services
nodes:
- role: control-plane
  labels:
    mynode: control-plane
    topology.kubernetes.io/zone: ap-northeast-2a
  extraPortMappings:  # maps container ports to host ports so Services can be reached from outside the cluster
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
  - containerPort: 30004
    hostPort: 30004
  kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      extraArgs:  # passes extra arguments to the API server
        runtime-config: api/all=true  # enables all API versions
    controllerManager:
      extraArgs:
        bind-address: 0.0.0.0
    etcd:
      local:
        extraArgs:
          listen-metrics-urls: http://0.0.0.0:2381
    scheduler:
      extraArgs:
        bind-address: 0.0.0.0
  - |
    kind: KubeProxyConfiguration
    metricsBindAddress: 0.0.0.0
- role: worker
  labels:
    mynode: worker1
    topology.kubernetes.io/zone: ap-northeast-2a
- role: worker
  labels:
    mynode: worker2
    topology.kubernetes.io/zone: ap-northeast-2b
- role: worker
  labels:
    mynode: worker3
    topology.kubernetes.io/zone: ap-northeast-2c
networking:
  podSubnet: 10.10.0.0/16  # CIDR range for pod IPs; pods are assigned IPs from this range
  serviceSubnet: 10.200.1.0/24  # CIDR range for Service IPs; Services are assigned IPs from this range
EOT

- Installing the K8S cluster

root@MyServer:~# kind create cluster --config kind-svc.yaml --name myk8s --image kindest/node:v1.31.0
Creating cluster "myk8s" ...
 ✓ Ensuring node image (kindest/node:v1.31.0) 🖼
 ✓ Preparing nodes 📦 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-myk8s"
You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Thanks for using kind! 😊

(⎈|kind-myk8s:N/A) root@MyServer:~# docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED         STATUS         PORTS                                                             NAMES
b588f46c96e1   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker
8b65d403f22e   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes   0.0.0.0:30000-30004->30000-30004/tcp, 127.0.0.1:44205->6443/tcp   myk8s-control-plane
e9995ef80279   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker2
5b1e8ffa85c0   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker3

- Installing basic tools on the nodes

# docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'
# for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done

- Deleting the resources after the lab

❯ terraform destroy -auto-approve

2) Installing K8S with kind on macOS Docker Desktop

Creating the K8S cluster

- kind-cluster.yaml

❯ cat <<EOT > kind-cluster.yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
featureGates:
  "InPlacePodVerticalScaling": true
  "MultiCIDRServiceAllocator": true
nodes:
- role: control-plane
  labels:
    mynode: control-plane
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      extraArgs:
        runtime-config: api/all=true
    controllerManager:
      extraArgs:
        bind-address: 0.0.0.0
    etcd:
      local:
        extraArgs:
          listen-metrics-urls: http://0.0.0.0:2381
    scheduler:
      extraArgs:
        bind-address: 0.0.0.0
  - |
    kind: KubeProxyConfiguration
    metricsBindAddress: 0.0.0.0
- role: worker
  labels:
    mynode: worker1
- role: worker
  labels:
    mynode: worker2
- role: worker
  labels:
    mynode: worker3
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24
EOT

- Creating the k8s cluster

❯ kind create cluster --config kind-cluster.yaml --name myk8s --image kindest/node:v1.31.0
Creating cluster "myk8s" ...
 ✓ Ensuring node image (kindest/node:v1.31.0) 🖼
 ✓ Preparing nodes 📦 📦 📦 📦
 ✓ Writing configuration 📜
 ✓ Starting control-plane 🕹️
 ✓ Installing CNI 🔌
 ✓ Installing StorageClass 💾
 ✓ Joining worker nodes 🚜
Set kubectl context to "kind-myk8s"
You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Thanks for using kind! 😊

❯ docker ps
CONTAINER ID   IMAGE                  COMMAND                  CREATED         STATUS         PORTS                                                             NAMES
f9101e7f5b99   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker2
66cc844875a5   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker
3771ae0b4ef0   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes   0.0.0.0:30000-30002->30000-30002/tcp, 127.0.0.1:62953->6443/tcp   myk8s-control-plane
755463634ade   kindest/node:v1.31.0   "/usr/local/bin/entr…"   2 minutes ago   Up 2 minutes                                                                     myk8s-worker3

- Installing basic tools on the nodes

# control-plane
❯ docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'
Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]
...
..
Setting up liblwp-protocol-https-perl (6.10-1) ...
Setting up libwww-perl (6.68-1) ...
Processing triggers for libc-bin (2.36-9+deb12u7) ...

# worker
❯ for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done
>> node myk8s-worker <<
Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]
...
>> node myk8s-worker2 <<
Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]
...
>> node myk8s-worker3 <<
Get:1 http://deb.debian.org/debian bookworm InRelease [151 kB]
Get:2 http://deb.debian.org/debian bookworm-updates InRelease [55.4 kB]
...

Verifying the installation

- Check the k8s v1.31.0 version

❯ kubectl get nodes
NAME                  STATUS   ROLES           AGE   VERSION
myk8s-control-plane   Ready    control-plane   15m   v1.31.0
myk8s-worker          Ready    <none>          15m   v1.31.0
myk8s-worker2         Ready    <none>          15m   v1.31.0
myk8s-worker3         Ready    <none>          15m   v1.31.0

- Check the node labels

❯ kubectl get nodes -o jsonpath="{.items[*].metadata.labels}" | jq
{
  "beta.kubernetes.io/arch": "arm64",
  "beta.kubernetes.io/os": "linux",
  "kubernetes.io/arch": "arm64",
  "kubernetes.io/hostname": "myk8s-control-plane",
  "kubernetes.io/os": "linux",
  "mynode": "control-plane",
  "node-role.kubernetes.io/control-plane": "",
  "node.kubernetes.io/exclude-from-external-load-balancers": ""
}
{
  "beta.kubernetes.io/arch": "arm64",
  "beta.kubernetes.io/os": "linux",
  "kubernetes.io/arch": "arm64",
  "kubernetes.io/hostname": "myk8s-worker",
  "kubernetes.io/os": "linux",
  "mynode": "worker1"
}
{
  "beta.kubernetes.io/arch": "arm64",
  "beta.kubernetes.io/os": "linux",
  "kubernetes.io/arch": "arm64",
  "kubernetes.io/hostname": "myk8s-worker2",
  "kubernetes.io/os": "linux",
  "mynode": "worker2"
}
{
  "beta.kubernetes.io/arch": "arm64",
  "beta.kubernetes.io/os": "linux",
  "kubernetes.io/arch": "arm64",
  "kubernetes.io/hostname": "myk8s-worker3",
  "kubernetes.io/os": "linux",
  "mynode": "worker3"
}

- Check the container (node) IPs on the kind network

❯ docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'
/myk8s-control-plane 172.18.0.5
/myk8s-worker 172.18.0.3
/myk8s-worker2 172.18.0.4
/myk8s-worker3 172.18.0.2

- Check the pod CIDR and the Service range: the CNI is kindnet

❯ kubectl get cm -n kube-system kubeadm-config -oyaml | grep -i subnet
      podSubnet: 10.10.0.0/16
      serviceSubnet: 10.200.1.0/24
❯ kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"
      "--service-cluster-ip-range=10.200.1.0/24",
      "--cluster-cidr=10.10.0.0/16",

- MultiCIDRServiceAllocator: https://kubernetes.io/docs/tasks/network/extend-service-ip-ranges/

❯ kubectl get servicecidr
NAME         CIDRS           AGE
kubernetes   10.200.1.0/24   2m13s
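With MultiCIDRServiceAllocator enabled, an extra Service range is added by creating another ServiceCIDR object next to the default `kubernetes` one. The following is only a sketch under assumptions that are not in the original lab: the object name `extra-cidr`, the range 10.200.2.0/24, and the `networking.k8s.io/v1beta1` API version (which I believe is what v1.31 serves when `api/all=true` is set). `render_servicecidr` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print a ServiceCIDR manifest that adds a second Service IP range.
# Assumptions (not from the original post): the name "extra-cidr", the range
# 10.200.2.0/24, and apiVersion networking.k8s.io/v1beta1 for k8s v1.31.

# render_servicecidr NAME CIDR -> prints the manifest on stdout
render_servicecidr() {
  local name="$1" cidr="$2"
  cat <<EOF
apiVersion: networking.k8s.io/v1beta1
kind: ServiceCIDR
metadata:
  name: ${name}
spec:
  cidrs:
  - ${cidr}
EOF
}

render_servicecidr extra-cidr 10.200.2.0/24
```

Piping the output into `kubectl apply -f -` and running `kubectl get servicecidr` again should then list both ranges, provided the API version matches your cluster.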

- Check the dedicated subnet (podCIDR) assigned to each node

❯ kubectl get nodes -o jsonpath="{.items[*].spec.podCIDR}"
10.10.0.0/24 10.10.1.0/24 10.10.3.0/24 10.10.2.0/24
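Each podCIDR above is a /24 carved out of the 10.10.0.0/16 podSubnet. A minimal sketch of that containment check in plain bash follows; `ip_to_int` and `cidr_contains` are hypothetical helper names introduced only for illustration.

```shell
#!/usr/bin/env bash
# Sketch: confirm that each node's podCIDR falls inside the cluster podSubnet.
# ip_to_int and cidr_contains are hypothetical helpers, IPv4 only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_contains OUTER INNER -> exit status 0 if INNER lies within OUTER.
cidr_contains() {
  local outer_ip="${1%/*}" outer_len="${1#*/}"
  local inner_ip="${2%/*}" inner_len="${2#*/}"
  # A shorter (less specific) inner prefix cannot fit inside the outer one.
  (( inner_len >= outer_len )) || return 1
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  # Both network addresses must agree on the outer prefix bits.
  (( ( $(ip_to_int "$inner_ip") & mask ) == ( $(ip_to_int "$outer_ip") & mask ) ))
}

# podCIDR values copied from the kubectl output above.
for cidr in 10.10.0.0/24 10.10.1.0/24 10.10.3.0/24 10.10.2.0/24; do
  if cidr_contains 10.10.0.0/16 "$cidr"; then
    echo "$cidr is inside 10.10.0.0/16"
  else
    echo "$cidr is OUTSIDE 10.10.0.0/16"
  fi
done
```

The same function reports that the Service range 10.200.1.0/24 is not part of the pod range, which is the expected separation.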

- Check the kube-proxy configmap

❯ kubectl describe cm -n kube-system kube-proxy
Name:         kube-proxy
Namespace:    kube-system
Labels:       app=kube-proxy
Annotations:  <none>

Data
====
config.conf:
----
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: 10.10.0.0/16
...
mode: iptables
iptables:
  localhostNodePorts: null
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 1s
  syncPeriod: 0s

- Check the per-node network information: the CNI is kindnet

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -t myk8s-$i cat /etc/cni/net.d/10-kindnet.conflist; echo; done
>> node myk8s-control-plane <<
{
  "cniVersion": "0.3.1",
  "name": "kindnet",
  "plugins": [
    {
      "type": "ptp",
      "ipMasq": false,
      "ipam": {
        "type": "host-local",
        "dataDir": "/run/cni-ipam-state",
        "routes": [
          { "dst": "0.0.0.0/0" }
        ],
        "ranges": [
          [ { "subnet": "10.10.0.0/24" } ]
        ]
      },
      "mtu": 65535
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
>> node myk8s-worker <<
...
[ { "subnet": "10.10.1.0/24" } ]
...
>> node myk8s-worker2 <<
...
[ { "subnet": "10.10.3.0/24" } ]
...
>> node myk8s-worker3 <<
...
[ { "subnet": "10.10.2.0/24" } ]
...

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -t myk8s-$i ip -c route; echo; done
>> node myk8s-control-plane <<
default via 172.18.0.1 dev eth0
10.10.0.2 dev veth56333629 scope host
10.10.0.3 dev veth4dd3b5cd scope host
10.10.0.4 dev veth95503b3e scope host
10.10.1.0/24 via 172.18.0.3 dev eth0
10.10.2.0/24 via 172.18.0.2 dev eth0
10.10.3.0/24 via 172.18.0.4 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.5
>> node myk8s-worker <<
default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.5 dev eth0
10.10.2.0/24 via 172.18.0.2 dev eth0
10.10.3.0/24 via 172.18.0.4 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.3
>> node myk8s-worker2 <<
default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.5 dev eth0
10.10.1.0/24 via 172.18.0.3 dev eth0
10.10.2.0/24 via 172.18.0.2 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.4
>> node myk8s-worker3 <<
default via 172.18.0.1 dev eth0
10.10.0.0/24 via 172.18.0.5 dev eth0
10.10.1.0/24 via 172.18.0.3 dev eth0
10.10.3.0/24 via 172.18.0.4 dev eth0
172.18.0.0/16 dev eth0 proto kernel scope link src 172.18.0.2

❯ for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -t myk8s-$i ip -c addr; echo; done
>> node myk8s-control-plane <<
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
3: gre0@NONE: <NOARP> mtu 1476 qdisc noop state DOWN group default qlen 1000
    link/gre 0.0.0.0 brd 0.0.0.0
4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
5: erspan0@NONE: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
6: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
7: ip6_vti0@NONE: <NOARP> mtu 1428 qdisc noop state DOWN group default qlen 1000
    link/tunnel6 :: brd :: permaddr a69c:805a:9e52::
8: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/sit 0.0.0.0 brd 0.0.0.0
9: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN group default qlen 1000
    link/tunnel6 :: brd :: permaddr 3629:7d02:2182::
10: ip6gre0@NONE: <NOARP> mtu 1448 qdisc noop state DOWN group default qlen 1000
    link/gre6 :: brd :: permaddr 46cd:3f6e:f0d8::
11: veth56333629@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default
    link/ether 4e:9f:6f:7f:93:c8 brd ff:ff:ff:ff:ff:ff link-netns cni-72eef25e-ece7-b7a2-3f25-08b3724bd6d1
    inet 10.10.0.1/32 scope global veth56333629
       valid_lft forever preferred_lft forever
    inet6 fe80::4c9f:6fff:fe7f:93c8/64 scope link
       valid_lft forever preferred_lft forever
12: veth4dd3b5cd@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default
    link/ether 3a:8c:24:56:5c:7c brd ff:ff:ff:ff:ff:ff link-netns cni-8fa55bb0-76fc-dae6-3bf1-01e0d84400a8
    inet 10.10.0.1/32 scope global veth4dd3b5cd
       valid_lft forever preferred_lft forever
    inet6 fe80::388c:24ff:fe56:5c7c/64 scope link
       valid_lft forever preferred_lft forever
13: veth95503b3e@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default
    link/ether 1a:93:47:78:64:14 brd ff:ff:ff:ff:ff:ff link-netns cni-c179b026-7d73-c1b6-d176-bdc948f1c75b
    inet 10.10.0.1/32 scope global veth95503b3e
       valid_lft forever preferred_lft forever
    inet6 fe80::1893:47ff:fe78:6414/64 scope link
       valid_lft forever preferred_lft forever
40: eth0@if41: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:05 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.5/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::5/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:5/64 scope link
       valid_lft forever preferred_lft forever
>> node myk8s-worker <<
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
3: gre0@NONE: <NOARP> mtu 1476 qdisc noop state DOWN group default qlen 1000
    link/gre 0.0.0.0 brd 0.0.0.0
4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1462 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
5: erspan0@NONE: <BROADCAST,MULTICAST> mtu 1450 qdisc noop state DOWN group default qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
6: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
7: ip6_vti0@NONE: <NOARP> mtu 1428 qdisc noop state DOWN group default qlen 1000
    link/tunnel6 :: brd :: permaddr 9ec4:7e9f:8ae2::
8: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000
    link/sit 0.0.0.0 brd 0.0.0.0
9: ip6tnl0@NONE: <NOARP> mtu 1452 qdisc noop state DOWN group default qlen 1000
    link/tunnel6 :: brd :: permaddr a6f0:a3cc:9030::
10: ip6gre0@NONE: <NOARP> mtu 1448 qdisc noop state DOWN group default qlen 1000
    link/gre6 :: brd :: permaddr 1abc:5f11:2c70::
36: eth0@if37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 65535 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fc00:f853:ccd:e793::3/64 scope global nodad
       valid_lft forever preferred_lft forever
    inet6 fe80::42:acff:fe12:3/64 scope link
       valid_lft forever preferred_lft forever
>> node myk8s-worker2 <<
...
>> node myk8s-worker3 <<
...
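The `ip route` output above is what direct routing looks like in practice: every node carries one "podCIDR via node IP" route for each of the other nodes, while its own pods are reached through per-pod veth routes. As a rough sketch, the expected remote routes can be derived from the node table; the table is copied from the outputs above and `expected_routes` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: derive the pod routes a node should carry under direct routing,
# i.e. one "podCIDR via nodeIP" entry per *other* node.
# The table is copied from the outputs above; expected_routes is hypothetical.

NODES="myk8s-control-plane 172.18.0.5 10.10.0.0/24
myk8s-worker 172.18.0.3 10.10.1.0/24
myk8s-worker2 172.18.0.4 10.10.3.0/24
myk8s-worker3 172.18.0.2 10.10.2.0/24"

# expected_routes NODE -> prints the routes NODE needs to reach remote pods
expected_routes() {
  local self="$1" name ip cidr
  while read -r name ip cidr; do
    # Skip the node itself: local pods are reached via veth host routes.
    [ "$name" = "$self" ] && continue
    echo "$cidr via $ip dev eth0"
  done <<<"$NODES" | sort -t . -k 3,3n   # order by the third octet, like ip route
}

expected_routes myk8s-worker
```

Comparing this with `docker exec -t myk8s-worker ip route` is a quick way to spot a node whose routes were not programmed.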

- Check the iptables information
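One thing worth watching for in the nat tables dumped in this part is how the KUBE-SVC-* chains spread traffic. kube-dns has two endpoints, so the first KUBE-SEP jump carries `--probability 0.50000000000` and the second jump has no statistic match at all. In general, rule i of N matches with probability 1/(N-i+1), which works out to an even 1/N share per endpoint. A minimal sketch of that arithmetic, with `endpoint_probabilities` as a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: per-rule match probabilities for a Service with N endpoints in
# iptables proxy mode. Rule i (1-based) fires with probability 1/(N-i+1)
# when it is reached; the last rule is effectively unconditional.
# endpoint_probabilities is a hypothetical helper name.

endpoint_probabilities() {
  awk -v n="$1" 'BEGIN {
    for (i = 1; i <= n; i++) {
      p = 1 / (n - i + 1)   # chance this rule matches if evaluation gets here
      printf "rule %d: probability %.4f\n", i, p
    }
  }'
}

endpoint_probabilities 2
endpoint_probabilities 3
```

For three endpoints the overall shares are 1/3, then (2/3)(1/2) = 1/3, then the remaining 1/3, so each endpoint receives the same fraction of new connections.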

- myk8s-control-plane

❯ for i in filter nat mangle raw ; do echo ">> IPTables Type : $i <<"; docker exec -t myk8s-control-plane iptables -t $i -S ; echo; done
>> IPTables Type : filter <<
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-N KUBE-EXTERNAL-SERVICES
-N KUBE-FIREWALL
-N KUBE-FORWARD
-N KUBE-KUBELET-CANARY
-N KUBE-NODEPORTS
-N KUBE-PROXY-CANARY
-N KUBE-PROXY-FIREWALL
-N KUBE-SERVICES
-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS
-A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES
-A INPUT -j KUBE-FIREWALL
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES
-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL
-A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A OUTPUT -j KUBE-FIREWALL
-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP
-A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP
-A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT
-A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

>> IPTables Type : nat <<
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N DOCKER_OUTPUT
-N DOCKER_POSTROUTING
-N KIND-MASQ-AGENT
-N KUBE-KUBELET-CANARY
-N KUBE-MARK-MASQ
-N KUBE-NODEPORTS
-N KUBE-POSTROUTING
-N KUBE-PROXY-CANARY
-N KUBE-SEP-2XZJVPRY2PQVE3B3
-N KUBE-SEP-F2ZDTMFKATSD3GWE
-N KUBE-SEP-OEOVYBFUDTUCKBZR
-N KUBE-SEP-P4UV4WHAETXYCYLO
-N KUBE-SEP-RT3F6VLY3P67FIV3
-N KUBE-SEP-V2PECCYPB6X2GSCW
-N KUBE-SEP-XVHB3NIW2NQLTFP3
-N KUBE-SERVICES
-N KUBE-SVC-ERIFXISQEP7F7OF4
-N KUBE-SVC-JD5MR3NA4I4DYORP
-N KUBE-SVC-NPX46M4PTMTKRN6Y
-N KUBE-SVC-TCOU7JCQXEZGVUNU
-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A PREROUTING -d 192.168.65.254/32 -j DOCKER_OUTPUT
-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES
-A OUTPUT -d 192.168.65.254/32 -j DOCKER_OUTPUT
-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING
-A POSTROUTING -d 192.168.65.254/32 -j DOCKER_POSTROUTING
-A POSTROUTING -m addrtype ! --dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT
-A DOCKER_OUTPUT -d 192.168.65.254/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:37513
-A DOCKER_OUTPUT -d 192.168.65.254/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:47125
-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 37513 -j SNAT --to-source 192.168.65.254:53
-A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 47125 -j SNAT --to-source 192.168.65.254:53
-A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN
-A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE
-A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000
-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN
-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0
-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully
-A KUBE-SEP-2XZJVPRY2PQVE3B3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ
-A KUBE-SEP-2XZJVPRY2PQVE3B3 -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.2:53
-A KUBE-SEP-F2ZDTMFKATSD3GWE -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ
-A KUBE-SEP-F2ZDTMFKATSD3GWE -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.4:53
-A KUBE-SEP-OEOVYBFUDTUCKBZR -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ
-A KUBE-SEP-OEOVYBFUDTUCKBZR -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.4:53
-A KUBE-SEP-P4UV4WHAETXYCYLO -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-P4UV4WHAETXYCYLO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.4:9153
-A KUBE-SEP-RT3F6VLY3P67FIV3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ
-A KUBE-SEP-RT3F6VLY3P67FIV3 -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.2:9153
-A KUBE-SEP-V2PECCYPB6X2GSCW -s 172.18.0.2/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ
-A KUBE-SEP-V2PECCYPB6X2GSCW -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.2:6443
-A KUBE-SEP-XVHB3NIW2NQLTFP3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ
-A KUBE-SEP-XVHB3NIW2NQLTFP3 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.2:53
-A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y
-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP
-A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU
-A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4
-A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS
-A KUBE-SVC-ERIFXISQEP7F7OF4 ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XVHB3NIW2NQLTFP3
-A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.4:53" -j KUBE-SEP-F2ZDTMFKATSD3GWE
-A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.2:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-RT3F6VLY3P67FIV3
-A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.4:9153" -j KUBE-SEP-P4UV4WHAETXYCYLO
-A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ
-A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.2:6443" -j KUBE-SEP-V2PECCYPB6X2GSCW
-A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ
-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-2XZJVPRY2PQVE3B3
-A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.4:53" -j KUBE-SEP-OEOVYBFUDTUCKBZR

>> IPTables Type : mangle <<
-P PREROUTING ACCEPT
-P INPUT ACCEPT
-P FORWARD ACCEPT
-P OUTPUT ACCEPT
-P POSTROUTING ACCEPT
-N KUBE-IPTABLES-HINT
-N KUBE-KUBELET-CANARY
-N KUBE-PROXY-CANARY

>> IPTables Type : raw <<
-P PREROUTING ACCEPT
-P OUTPUT ACCEPT

- myk8s-worker

❯ for i in filter nat mangle raw ; do echo ">> IPTables Type : $i <<"; docker exec -t myk8s-worker iptables -t $i -S ; echo; done >> IPTables Type : filter << -P INPUT ACCEPT -P FORWARD ACCEPT -P OUTPUT ACCEPT -N KUBE-EXTERNAL-SERVICES -N KUBE-FIREWALL -N KUBE-FORWARD -N KUBE-KUBELET-CANARY -N KUBE-NODEPORTS -N KUBE-PROXY-CANARY -N KUBE-PROXY-FIREWALL -N KUBE-SERVICES -A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL -A INPUT -m comment --comment "kubernetes health check service ports" -j KUBE-NODEPORTS -A INPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES -A INPUT -j KUBE-FIREWALL -A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL -A FORWARD -m comment --comment "kubernetes forwarding rules" -j KUBE-FORWARD -A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES -A FORWARD -m conntrack --ctstate NEW -m comment --comment "kubernetes externally-visible service portals" -j KUBE-EXTERNAL-SERVICES -A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes load balancer firewall" -j KUBE-PROXY-FIREWALL -A OUTPUT -m conntrack --ctstate NEW -m comment --comment "kubernetes service portals" -j KUBE-SERVICES -A OUTPUT -j KUBE-FIREWALL -A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! 
--ctstate RELATED,ESTABLISHED,DNAT -j DROP -A KUBE-FORWARD -m conntrack --ctstate INVALID -m nfacct --nfacct-name ct_state_invalid_dropped_pkts -j DROP -A KUBE-FORWARD -m comment --comment "kubernetes forwarding rules" -m mark --mark 0x4000/0x4000 -j ACCEPT -A KUBE-FORWARD -m comment --comment "kubernetes forwarding conntrack rule" -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT >> IPTables Type : nat << -P PREROUTING ACCEPT -P INPUT ACCEPT -P OUTPUT ACCEPT -P POSTROUTING ACCEPT -N DOCKER_OUTPUT -N DOCKER_POSTROUTING -N KIND-MASQ-AGENT -N KUBE-KUBELET-CANARY -N KUBE-MARK-MASQ -N KUBE-NODEPORTS -N KUBE-POSTROUTING -N KUBE-PROXY-CANARY -N KUBE-SEP-2XZJVPRY2PQVE3B3 -N KUBE-SEP-F2ZDTMFKATSD3GWE -N KUBE-SEP-OEOVYBFUDTUCKBZR -N KUBE-SEP-P4UV4WHAETXYCYLO -N KUBE-SEP-RT3F6VLY3P67FIV3 -N KUBE-SEP-V2PECCYPB6X2GSCW -N KUBE-SEP-XVHB3NIW2NQLTFP3 -N KUBE-SERVICES -N KUBE-SVC-ERIFXISQEP7F7OF4 -N KUBE-SVC-JD5MR3NA4I4DYORP -N KUBE-SVC-NPX46M4PTMTKRN6Y -N KUBE-SVC-TCOU7JCQXEZGVUNU -A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES -A PREROUTING -d 192.168.65.254/32 -j DOCKER_OUTPUT -A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES -A OUTPUT -d 192.168.65.254/32 -j DOCKER_OUTPUT -A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING -A POSTROUTING -d 192.168.65.254/32 -j DOCKER_POSTROUTING -A POSTROUTING -m addrtype ! 
--dst-type LOCAL -m comment --comment "kind-masq-agent: ensure nat POSTROUTING directs all non-LOCAL destination traffic to our custom KIND-MASQ-AGENT chain" -j KIND-MASQ-AGENT -A DOCKER_OUTPUT -d 192.168.65.254/32 -p tcp -m tcp --dport 53 -j DNAT --to-destination 127.0.0.11:44011 -A DOCKER_OUTPUT -d 192.168.65.254/32 -p udp -m udp --dport 53 -j DNAT --to-destination 127.0.0.11:54211 -A DOCKER_POSTROUTING -s 127.0.0.11/32 -p tcp -m tcp --sport 44011 -j SNAT --to-source 192.168.65.254:53 -A DOCKER_POSTROUTING -s 127.0.0.11/32 -p udp -m udp --sport 54211 -j SNAT --to-source 192.168.65.254:53 -A KIND-MASQ-AGENT -d 10.10.0.0/16 -m comment --comment "kind-masq-agent: local traffic is not subject to MASQUERADE" -j RETURN -A KIND-MASQ-AGENT -m comment --comment "kind-masq-agent: outbound traffic is subject to MASQUERADE (must be last in chain)" -j MASQUERADE -A KUBE-MARK-MASQ -j MARK --set-xmark 0x4000/0x4000 -A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN -A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0 -A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully -A KUBE-SEP-2XZJVPRY2PQVE3B3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ -A KUBE-SEP-2XZJVPRY2PQVE3B3 -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.2:53 -A KUBE-SEP-F2ZDTMFKATSD3GWE -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ -A KUBE-SEP-F2ZDTMFKATSD3GWE -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.4:53 -A KUBE-SEP-OEOVYBFUDTUCKBZR -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:dns" -j KUBE-MARK-MASQ -A KUBE-SEP-OEOVYBFUDTUCKBZR -p udp -m comment --comment "kube-system/kube-dns:dns" -m udp -j DNAT --to-destination 10.10.0.4:53 -A KUBE-SEP-P4UV4WHAETXYCYLO -s 10.10.0.4/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ -A 
KUBE-SEP-P4UV4WHAETXYCYLO -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.4:9153 -A KUBE-SEP-RT3F6VLY3P67FIV3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:metrics" -j KUBE-MARK-MASQ -A KUBE-SEP-RT3F6VLY3P67FIV3 -p tcp -m comment --comment "kube-system/kube-dns:metrics" -m tcp -j DNAT --to-destination 10.10.0.2:9153 -A KUBE-SEP-V2PECCYPB6X2GSCW -s 172.18.0.2/32 -m comment --comment "default/kubernetes:https" -j KUBE-MARK-MASQ -A KUBE-SEP-V2PECCYPB6X2GSCW -p tcp -m comment --comment "default/kubernetes:https" -m tcp -j DNAT --to-destination 172.18.0.2:6443 -A KUBE-SEP-XVHB3NIW2NQLTFP3 -s 10.10.0.2/32 -m comment --comment "kube-system/kube-dns:dns-tcp" -j KUBE-MARK-MASQ -A KUBE-SEP-XVHB3NIW2NQLTFP3 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp" -m tcp -j DNAT --to-destination 10.10.0.2:53 -A KUBE-SERVICES -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-SVC-NPX46M4PTMTKRN6Y -A KUBE-SERVICES -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-TCOU7JCQXEZGVUNU -A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-ERIFXISQEP7F7OF4 -A KUBE-SERVICES -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-SVC-JD5MR3NA4I4DYORP -A KUBE-SERVICES -m comment --comment "kubernetes service nodeports; NOTE: this must be the last rule in this chain" -m addrtype --dst-type LOCAL -j KUBE-NODEPORTS -A KUBE-SVC-ERIFXISQEP7F7OF4 ! 
-s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ -A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-XVHB3NIW2NQLTFP3 -A KUBE-SVC-ERIFXISQEP7F7OF4 -m comment --comment "kube-system/kube-dns:dns-tcp -> 10.10.0.4:53" -j KUBE-SEP-F2ZDTMFKATSD3GWE -A KUBE-SVC-JD5MR3NA4I4DYORP ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p tcp -m comment --comment "kube-system/kube-dns:metrics cluster IP" -m tcp --dport 9153 -j KUBE-MARK-MASQ -A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.2:9153" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-RT3F6VLY3P67FIV3 -A KUBE-SVC-JD5MR3NA4I4DYORP -m comment --comment "kube-system/kube-dns:metrics -> 10.10.0.4:9153" -j KUBE-SEP-P4UV4WHAETXYCYLO -A KUBE-SVC-NPX46M4PTMTKRN6Y ! -s 10.10.0.0/16 -d 10.200.1.1/32 -p tcp -m comment --comment "default/kubernetes:https cluster IP" -m tcp --dport 443 -j KUBE-MARK-MASQ -A KUBE-SVC-NPX46M4PTMTKRN6Y -m comment --comment "default/kubernetes:https -> 172.18.0.2:6443" -j KUBE-SEP-V2PECCYPB6X2GSCW -A KUBE-SVC-TCOU7JCQXEZGVUNU ! -s 10.10.0.0/16 -d 10.200.1.10/32 -p udp -m comment --comment "kube-system/kube-dns:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ -A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.2:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-2XZJVPRY2PQVE3B3 -A KUBE-SVC-TCOU7JCQXEZGVUNU -m comment --comment "kube-system/kube-dns:dns -> 10.10.0.4:53" -j KUBE-SEP-OEOVYBFUDTUCKBZR >> IPTables Type : mangle << -P PREROUTING ACCEPT -P INPUT ACCEPT -P FORWARD ACCEPT -P OUTPUT ACCEPT -P POSTROUTING ACCEPT -N KUBE-IPTABLES-HINT -N KUBE-KUBELET-CANARY -N KUBE-PROXY-CANARY >> IPTables Type : raw << -P PREROUTING ACCEPT -P OUTPUT ACCEPT -

Open a bash shell on each node

❯ docker exec -it myk8s-control-plane bash
root@myk8s-control-plane:/#
❯ docker exec -it myk8s-control-plane bash
❯ docker exec -it myk8s-worker bash
❯ docker exec -it myk8s-worker2 bash
❯ docker exec -it myk8s-worker3 bash
----------------------------------------
exit
----------------------------------------

Installing kind creates a Docker bridge network named kind, which uses the 172.18.0.0/16 range

❯ docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
90a6e544b75d   bridge    bridge    local
88153fefe41f   host      host      local
69ae0c31875a   kind      bridge    local
6e2fe0aabee3   none      null      local

❯ docker inspect kind

[

{

"Name": "kind",

"Id": "69ae0c31875a22cdc2fc428961d44314d099d13a213b8b2faf2d2fe93dc15656",

"Created": "2024-09-22T13:55:28.516763084Z",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

},

{

"Subnet": "fc00:f853:ccd:e793::/64",

"Gateway": "fc00:f853:ccd:e793::1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": { "189df75397becb6fe74cbd49ec70e44de1c3cc37e221670585492eecc0fb1fc8": {

"Name": "myk8s-worker2",

"EndpointID": "3456e42a8655d5cd6354b522c9087e8c024b1f24af99a06cc367584b159a86b6",

"MacAddress": "02:42:ac:12:00:03",

"IPv4Address": "172.18.0.3/16",

"IPv6Address": "fc00:f853:ccd:e793::3/64"

}, "41466b7e8cbb58ca8ca059976423a4dbf86e9dd83ed3d475ad9cadd831f0e765": {

"Name": "myk8s-control-plane",

"EndpointID": "9f067cc0a5fd1ca3e19f02db1f7b0ba5e3b8a832a1b8110e32ebfc9af257cfc7",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": "fc00:f853:ccd:e793::2/64"

}, "c7e7e408c903b0be8c1c606826a7605ab8e9d1fd09671c3c6b6717738aa7bd72": {

"Name": "myk8s-worker3",

"EndpointID": "095b5edf9b7da2f7f42ebb6ad1f51293fad7dac3f9fb2ca845c797f192c54ee3",

"MacAddress": "02:42:ac:12:00:05",

"IPv4Address": "172.18.0.5/16",

"IPv6Address": "fc00:f853:ccd:e793::5/64"

}, "dcaf34f4086d78273cd4a50c0554172cff5495ba9e54313cd9ad1d6286b8d1c6": {

"Name": "myk8s-worker",

"EndpointID": "b06c2e46586095b3ec97c2c87b216023100bd2ea4be84d8c21945e2a0ba21960",

"MacAddress": "02:42:ac:12:00:04",

"IPv4Address": "172.18.0.4/16",

"IPv6Address": "fc00:f853:ccd:e793::4/64"

}

},

"Options": {

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.driver.mtu": "65535"

},

"Labels": {}

}

]

Run an ARP scan for later reference

❯ docker exec -it myk8s-control-plane arp-scan --interface=eth0 --localnet
Interface: eth0, type: EN10MB, MAC: 02:42:ac:12:00:02, IPv4: 172.18.0.2
Starting arp-scan 1.10.0 with 65536 hosts (https://github.com/royhills/arp-scan)
172.18.0.1  02:42:5e:6a:a9:12  (Unknown: locally administered)
172.18.0.3  02:42:ac:12:00:03  (Unknown: locally administered)
172.18.0.4  02:42:ac:12:00:04  (Unknown: locally administered)
172.18.0.5  02:42:ac:12:00:05  (Unknown: locally administered)
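In the scan output above, the MAC address of each node container is 02:42 followed by the four octets of its IPv4 address in hexadecimal (172.18.0.3 → 02:42:ac:12:00:03). The bridge gateway 172.18.0.1 does not follow this pattern. The following is a minimal sketch that reproduces the mapping, assuming the pattern holds for containers on the kind bridge in this lab; `ip_to_docker_mac` is a hypothetical helper name, not a Docker command.

```shell
# Hypothetical helper: print the MAC that the scan output shows for a container IPv4.
# Assumption: the MAC is 02:42 followed by the IPv4 octets in hex, as observed in this lab.
ip_to_docker_mac() (
  IFS=.          # split the dotted quad on dots (the subshell keeps IFS local)
  set -- $1      # $1..$4 now hold the four octets
  printf '02:42:%02x:%02x:%02x:%02x\n' "$1" "$2" "$3" "$4"
)

ip_to_docker_mac 172.18.0.4
```

The call prints 02:42:ac:12:00:04, which matches the scan entry for 172.18.0.4. This makes it easier to recognize which node answered when ARP traffic is inspected later.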

Start the mypc container: attach it to the kind Docker bridge and either let Docker assign the container IP or specify one

❯ docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity # when specifying the IP
7715045879ba0911ae50384dfe40f656ced87e5ca75f5d6493f5554c4638f0fe
❯ docker ps
CONTAINER ID   IMAGE               COMMAND            CREATED          STATUS          PORTS   NAMES
7715045879ba   nicolaka/netshoot   "sleep infinity"   16 seconds ago   Up 15 seconds           mypc
...

Start the mypc2 container: attach it to the kind Docker bridge and either let Docker assign the container IP or specify one

❯ docker run -d --rm --name mypc2 --network kind --ip 172.18.0.200 nicolaka/netshoot sleep infinity # when specifying the IP
f7fddfc68ba011ffe433f173b24e6407093a9e418a0650fb3232d2dee0409399
❯ docker ps
CONTAINER ID   IMAGE               COMMAND            CREATED         STATUS         PORTS   NAMES
f7fddfc68ba0   nicolaka/netshoot   "sleep infinity"   3 seconds ago   Up 3 seconds           mypc2
...

Check the IPs of the containers (nodes) on the kind network

❯ docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'
/mypc 172.18.0.100
/mypc2 172.18.0.200
/myk8s-control-plane 172.18.0.2
/myk8s-worker 172.18.0.4
/myk8s-worker2 172.18.0.3
/myk8s-worker3 172.18.0.5
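A manually chosen container IP such as 172.18.0.100 has to fall inside the kind bridge range (172.18.0.0/16). The following is a minimal sketch of that membership check in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helper names introduced only for illustration.

```shell
# Hypothetical helpers: test whether an IPv4 address belongs to a CIDR block.
ip_to_int() (
  IFS=.          # split the dotted quad on dots (the subshell keeps IFS local)
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

ip_in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # network part before the slash
  bits=${2#*/}                   # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  # Succeeds when address and network agree on all masked bits.
  [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}

ip_in_cidr 172.18.0.100 172.18.0.0/16 && echo "inside the kind bridge range"
ip_in_cidr 10.10.1.2 172.18.0.0/16 || echo "outside the kind bridge range"
```

The same check is handy later when choosing an External-IP range, because it shows at a glance whether an address sits on the nodes' network or needs extra routing.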

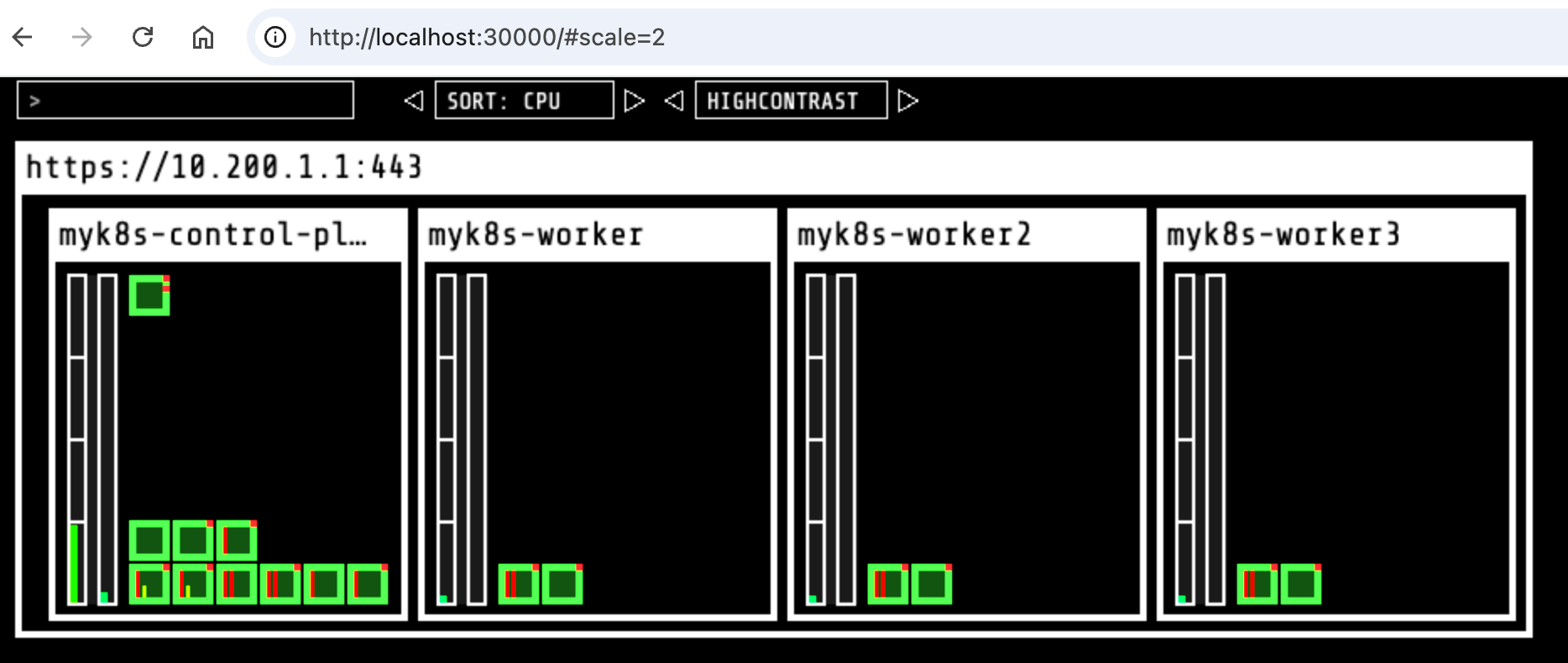

Install kube-ops-view

❯ helm repo add geek-cookbook https://geek-cookbook.github.io/charts/
"geek-cookbook" already exists with the same configuration, skipping
❯ helm repo update
Hang tight while we grab the latest from your chart repositories...
...Successfully got an update from the "geek-cookbook" chart repository
Update Complete. ⎈Happy Helming!⎈
❯ helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30000 --set env.TZ="Asia/Seoul" --namespace kube-system
NAME: kube-ops-view
LAST DEPLOYED: Thu Oct 3 18:14:02 2024
NAMESPACE: kube-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
1. Get the application URL by running these commands:
  export NODE_PORT=$(kubectl get --namespace kube-system -o jsonpath="{.spec.ports[0].nodePort}" services kube-ops-view)
  export NODE_IP=$(kubectl get nodes --namespace kube-system -o jsonpath="{.items[0].status.addresses[0].address}")
  echo http://$NODE_IP:$NODE_PORT

# Place the pod on myk8s-control-plane
❯ kubectl -n kube-system get pod -o wide -l app.kubernetes.io/instance=kube-ops-view
NAME                             READY   STATUS    RESTARTS   AGE     IP          NODE            NOMINATED NODE   READINESS GATES
kube-ops-view-657dbc6cd8-r82p6   1/1     Running   0          2m36s   10.10.3.2   myk8s-worker2   <none>           <none>
❯ kubectl -n kube-system edit deploy kube-ops-view
---
spec:
  ...
  template:
    ...
    spec:
      nodeSelector:
        mynode: control-plane
      tolerations:
      - key: "node-role.kubernetes.io/control-plane"
        operator: "Equal"
        effect: "NoSchedule"
---

# Verify the installation
❯ kubectl -n kube-system get pod -o wide -l app.kubernetes.io/instance=kube-ops-view
NAME                             READY   STATUS    RESTARTS   AGE     IP          NODE                  NOMINATED NODE   READINESS GATES
kube-ops-view-58f96c464d-rjzrd   1/1     Running   0          3m31s   10.10.0.5   myk8s-control-plane   <none>           <none>

# kube-ops-view URL (scale 1.5 or 2): macOS users
echo -e "KUBE-OPS-VIEW URL = http://localhost:30000/#scale=1.5"
echo -e "KUBE-OPS-VIEW URL = http://localhost:30000/#scale=2"

# kube-ops-view URL (scale 1.5 or 2): Windows users
echo -e "KUBE-OPS-VIEW URL = http://192.168.50.10:30000/#scale=1.5"
echo -e "KUBE-OPS-VIEW URL = http://192.168.50.10:30000/#scale=2"

# kube-ops-view URL (scale 1.5 or 2): AWS EC2 users
echo -e "KUBE-OPS-VIEW URL = http://$(curl -s ipinfo.io/ip):30000/#scale=1.5"
echo -e "KUBE-OPS-VIEW URL = http://$(curl -s ipinfo.io/ip):30000/#scale=2"

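2. Introduction to LoadBalancer

Publishing Services (ServiceTypes)

For some parts of an application (for example, frontends), you may want to expose a Service on an external IP address outside the cluster.

Kubernetes ServiceTypes let you specify which kind of Service you want.

The Type values and their behavior are as follows.

- ClusterIP: Exposes the Service on a cluster-internal IP. With this value the Service is reachable only from within the cluster. This is the default when no type is specified explicitly.

- NodePort: Exposes the Service on each node's IP at a static port (the NodePort). To make the node port available, Kubernetes sets up a cluster IP address, the same as if you had requested a Service of type: ClusterIP.

- LoadBalancer: Exposes the Service externally using a cloud provider's load balancer.

- ExternalName: Maps the Service to the contents of the externalName field (e.g. foo.bar.example.com) by returning a CNAME record with that value. No proxying of any kind is set up.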

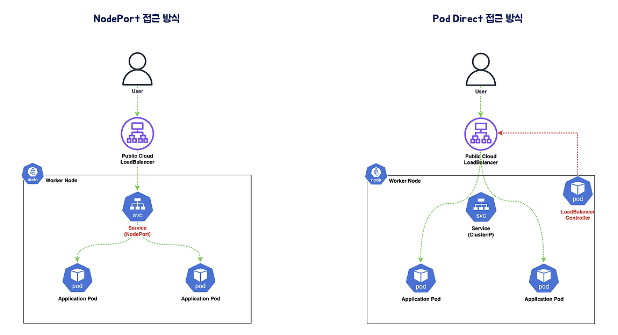

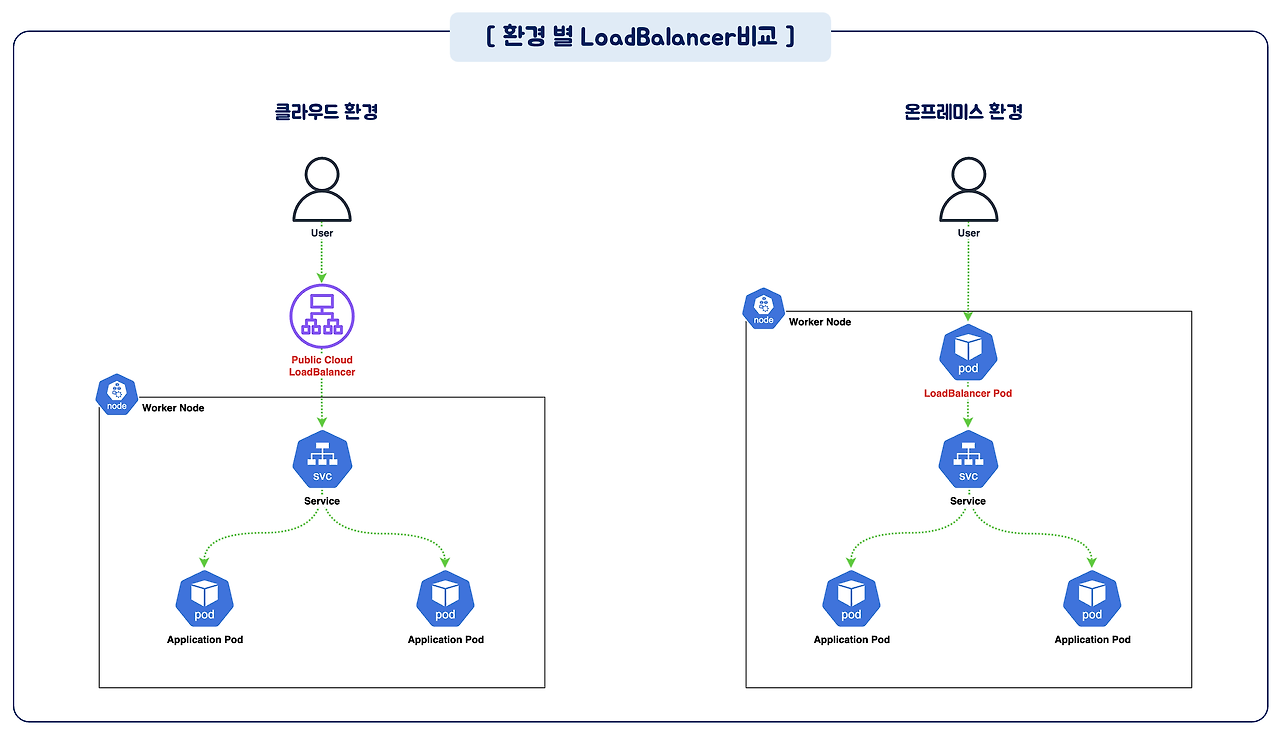

LoadBalancer comparison

LoadBalancers by environment

- AWS : ALB (Application Load Balancer), NLB (Network Load Balancer)

- GCP : Network Load Balancer

- On-Premise : LoadBalancer functionality is implemented with tools such as MetalLB

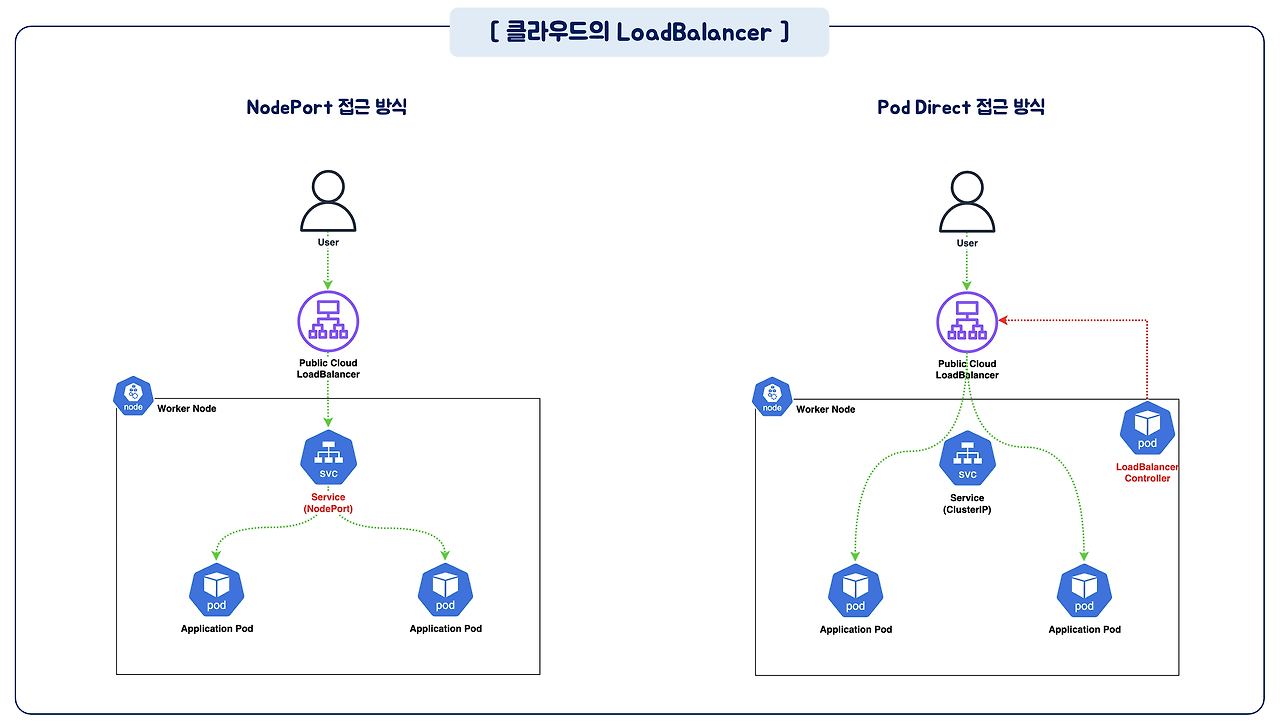

Cloud LoadBalancer

- NodePort approach: the instance IPs are registered in the TargetGroup (adds a network hop)

- Pod-direct approach: the pod IPs are registered in the TargetGroup; a LoadBalancer Controller is required

Traffic flow for Service type LoadBalancer

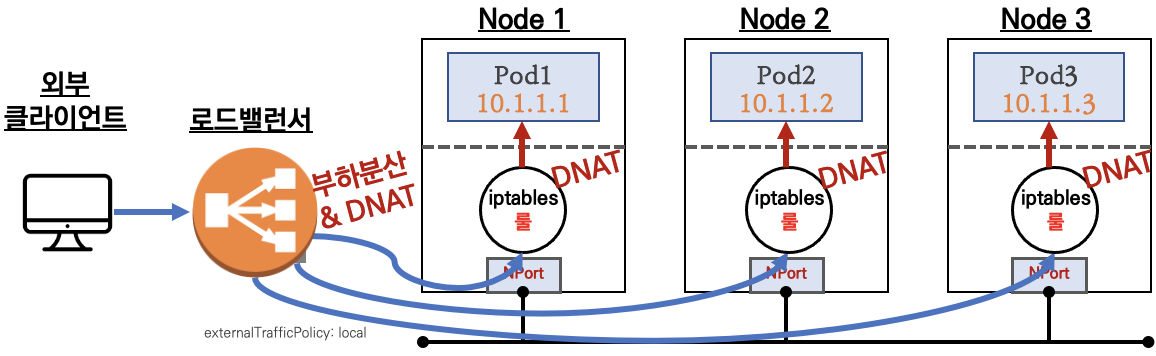

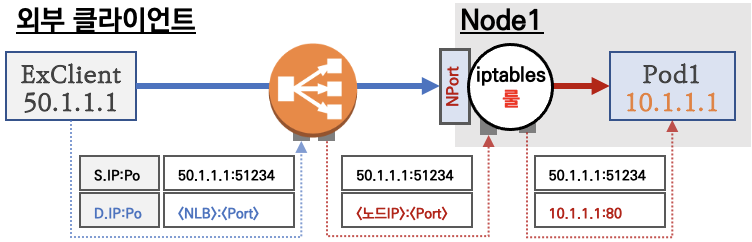

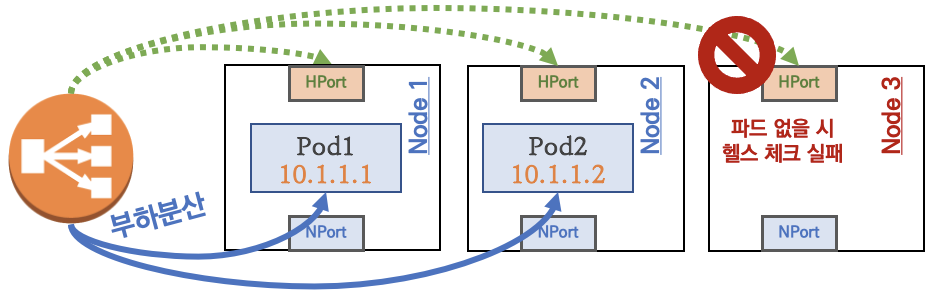

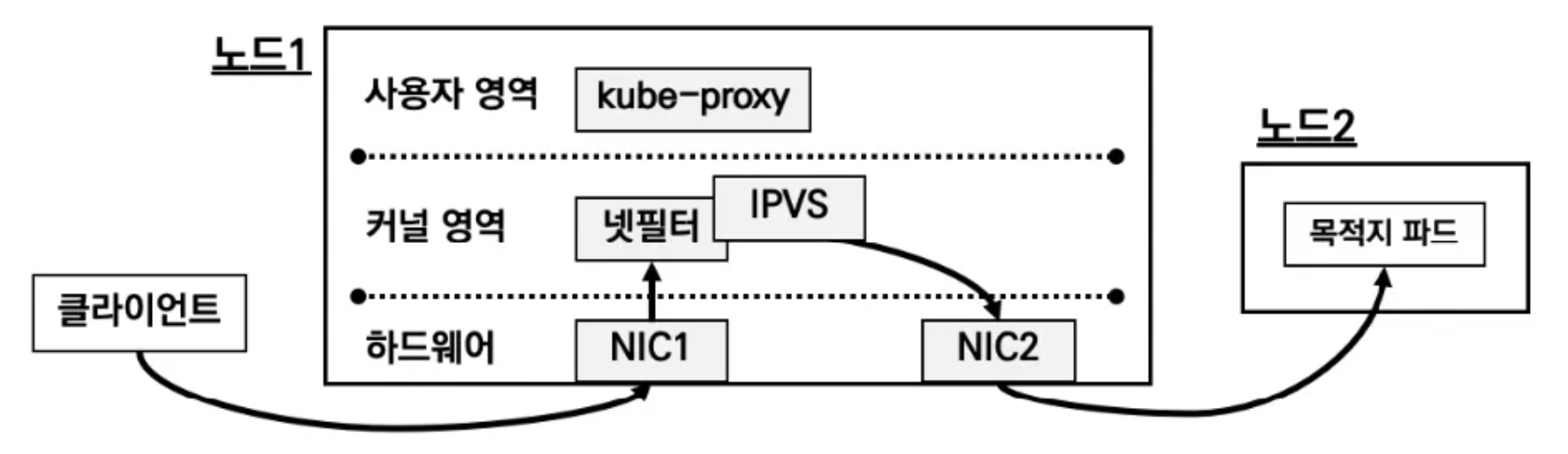

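When an external client connects to the load balancer, the traffic is distributed to a node and then reaches the destination pod through iptables rules.

- After the external load balancer distributes the traffic to a node (NodePort), the rest of the path is the same as the NodePort flow.

- The nodes are not exposed externally; only the load balancer is. External clients connect to the load balancer and cannot learn anything about the internal nodes.

- The load balancer distributes traffic to the nodes where pods exist, and the iptables rules on each node forward only to pods on that node (externalTrafficPolicy: Local).

- DNAT happens twice: first when traffic leaves the load balancer, and second when the node's iptables rules forward it to the pod IP.

- Preserving the external client IP: AWS NLB preserves the client IP when the target is an instance, and the iptables rules also preserve it through externalTrafficPolicy.

- Kubernetes defines only the Service (LB type) API and leaves the actual implementation to add-ons.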
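The node-local forwarding and client IP preservation described above depend on the Service's `externalTrafficPolicy` field. The following is a minimal sketch of building a patch that switches the policy, assuming a Service named `my-service` (a placeholder) and a working `kubectl` context; `etp_patch` is a hypothetical helper that only produces the patch document.

```shell
# Hypothetical helper: build a patch document that sets spec.externalTrafficPolicy.
# The accepted values are "Cluster" (the default) and "Local".
etp_patch() {
  case "$1" in
    Cluster|Local) printf '{"spec":{"externalTrafficPolicy":"%s"}}\n' "$1" ;;
    *) echo "unsupported policy: $1" >&2; return 1 ;;
  esac
}

etp_patch Local
# To apply it (requires a cluster; my-service is a placeholder name):
#   kubectl patch svc my-service -p "$(etp_patch Local)"
```

With `Local`, a node forwards only to its own pods and the source address is not rewritten; with `Cluster`, any node can forward to any pod at the cost of an extra hop and source NAT.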


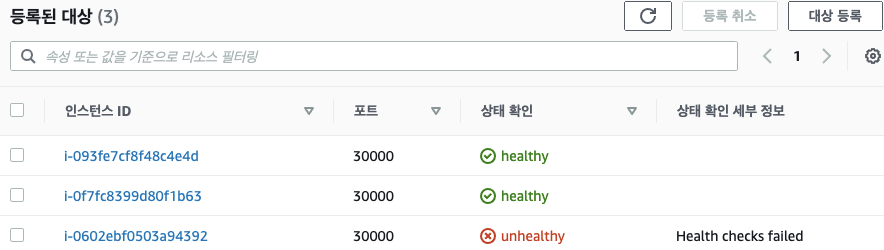

Load-balancing optimization: if a node has no pods, the load balancer's health check against that node fails, so external request traffic is not forwarded to that node.

Shortcomings of Service (LoadBalancer)

- An LB (e.g. AWS NLB) is created for every Service (LoadBalancer), which uses resources inefficiently ⇒ for HTTP, Ingress can make resource use more efficient.

- Service (LoadBalancer) falls short on some HTTP/HTTPS handling (TLS termination, domain-based routing, etc.) ⇒ Ingress provides these features.

- (Note) Is it unavailable on-premises? ⇒ MetalLB or OpenELB (formerly PorterLB) makes Service (LoadBalancer) work in on-premises environments.

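Why use Service (LoadBalancer) when Service (NodePort) or Ingress would also work

Characteristics

- A LoadBalancer Service is the standard way to expose a service to the internet, and it needs no separate controller.

- On GKE, it starts a Network Load Balancer that provides a single IP address forwarding all traffic to the service.

When to use it

- When you want to expose a service directly

- When you do not need extra features such as filtering, routing, authentication, or encryption

- When integrating with an API Gateway or similar component in a DMZ

- Note, however, that each exposed service gets its own IP, and paying for a LoadBalancer per exposed service can be a burden.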

LoadBalancer creation manifest

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app.kubernetes.io/name: MyApp
  ports:
    - protocol: TCP
      port: 80
      targetPort: 9376
  clusterIP: 10.0.171.239
  type: LoadBalancer

3. MetalLB

MetalLB 소개

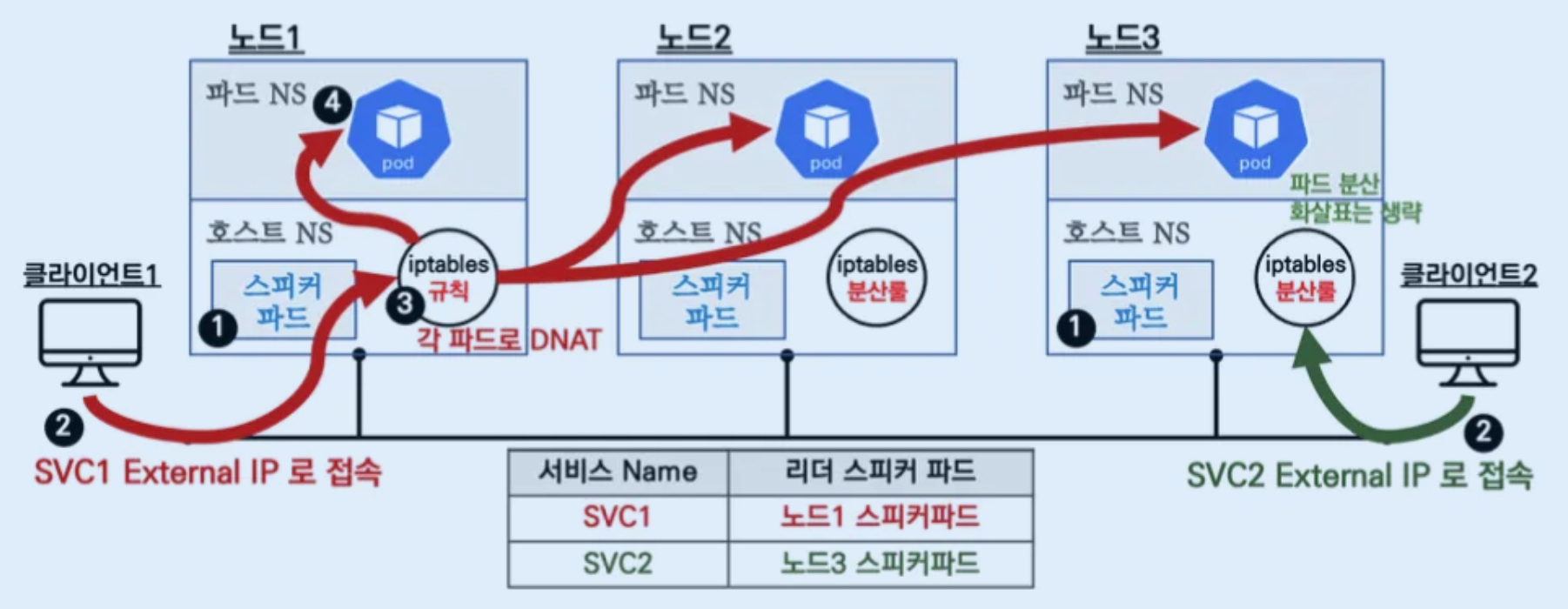

MetalLB는 BareMetal Load Balancer의 약자로, 온프레미스 환경에서 표준 프로토콜을 사용해서 LoadBalancer 서비스를 구현해주는 프로그램입니다. (클라우드 플랫폼 호환안됨, 일부 CNI와 연동불가)

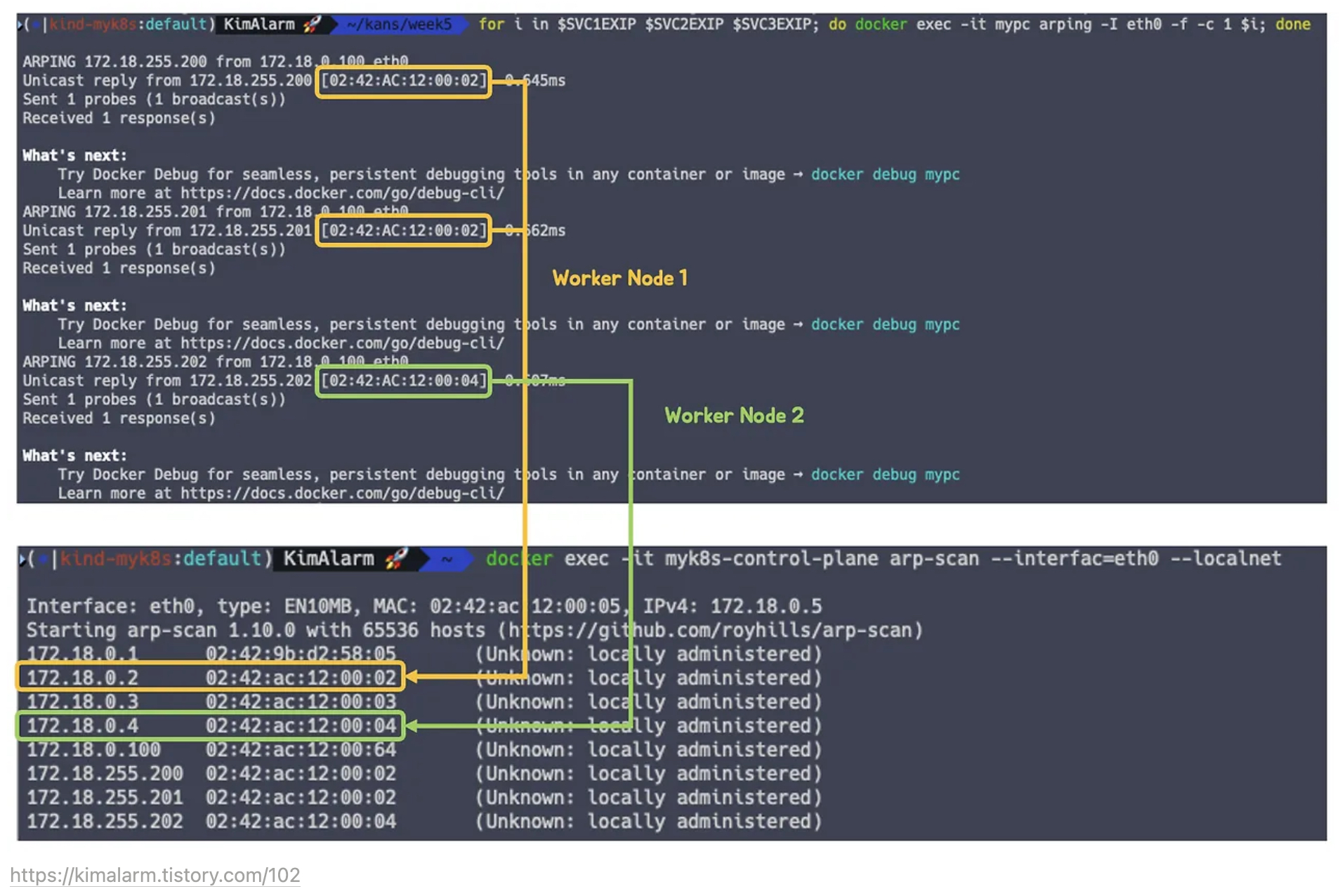

쿠버네티스의 Daemonset으로 Speaker 파드(Pod)를 생성하여, 'External IP'를 전파합니다. External IP는 노드의 IP 대신 외부에서 접속할 수 있습니다. 이를 통해 노드의 IP를 외부에 노출하지 않을 수 있어서 보안성을 높일 수 있습니다.

서비스(로드 밸런서)의 'External IP' 전파를 위해서 표준 프로토콜인 ARP(IPv4)/NDP(IPv6), BGP를 사용합니다

Speaker 파드는 External IP 전파를 위해서 표준 프로토콜링 ARP(Address Resolution Protocol) 혹은 BGP를 사용합니다. MetalLB는 ARP를 사용하는 Layer2 모드와 BGP를 사용하는 BGP 모드 중 하나를 선택하여 동작합니다.

LoadBalancer 서비스 리소스 생성 시 MetalLB Speaker 파드들 중 Leader Speaker가 선출됩니다.

클라이언트는 svc1 External IP로 접속을 시도하고, 해당 트래픽은 svc1 External IP정보를 전파하는 리더 Speaker 파드가 있는 노드1로 들어오게 되며, 마찬가지로 클라이언트2는 svc2 External IP로 접속을 시도하고 해당 트래픽은 노드3으로 들어오게 됩니다.

노드에 인입 후 IPTABLES 규칙에 의해서 ClusterIP와 동일하게 해당 서비스에 연동된 엔드포인트 파드들로 랜덤 부하분산되어 전달됩니다.
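The random distribution mentioned above shows up in the earlier iptables dump as `-m statistic --mode random --probability 0.50000000000` on the first of two kube-dns endpoint rules, followed by an unconditional rule. The following is a minimal sketch of how such per-rule probabilities work out for N endpoints, assuming the usual pattern where rule i matches with probability 1/(N-i+1) and the last rule has no probability; `svc_rule_probabilities` is a hypothetical helper name, and the values iptables actually prints may differ slightly in the last digits.

```shell
# Hypothetical helper: print the per-rule match probability for N endpoints.
# Assumption: rule i (1-based) uses probability 1/(N-i+1) and the last rule is unconditional.
svc_rule_probabilities() {
  awk -v n="$1" 'BEGIN {
    for (i = 1; i < n; i++)
      printf "rule %d: --probability %.5f\n", i, 1 / (n - i + 1)
    printf "rule %d: unconditional\n", n
  }'
}

svc_rule_probabilities 3
```

Because each rule is only evaluated when the earlier ones did not match, three endpoints each end up with an overall share of one third: 1/3, then 2/3 × 1/2, then the remainder.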


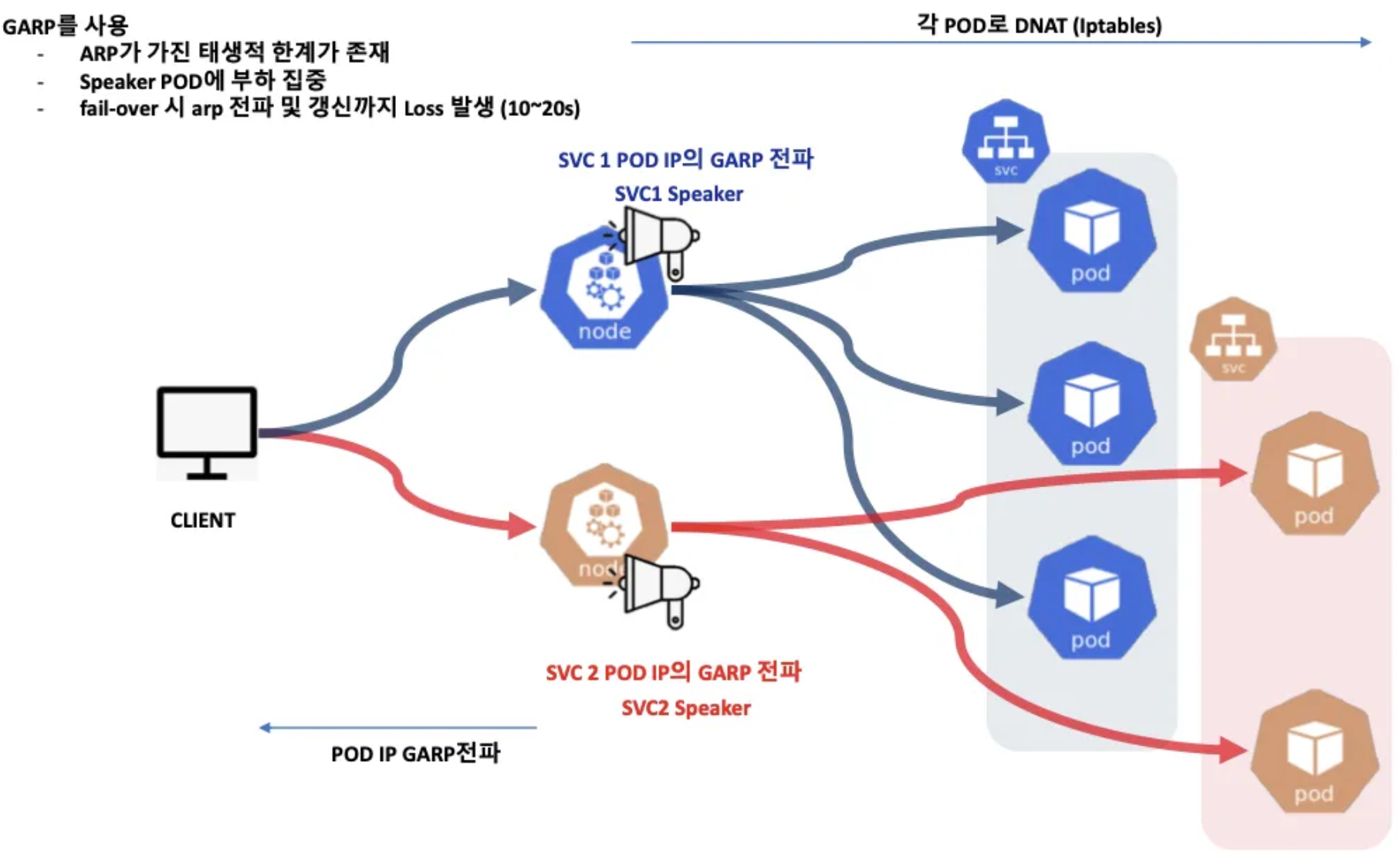

MetalLB는 Layer2 모드 동작(MetalLB 컨트롤러 파드는 그림에서 생략됨)

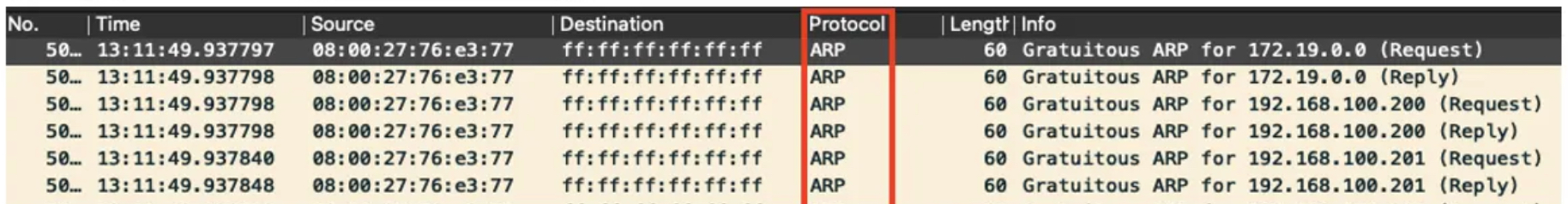

- 리더 파드가 뿌리는 GARP 패킷 (예시)

- 리더 파드가 선출되고 해당 리더 파드가 생성된 노드로만 트래픽이 인입되어 해당 노드에서 iptables 분산되어 파드로 접속

- 서비스(로드밸런서) 'External IP' 생성 시 speaker 파드 중 1개가 리더가 되고, 리더 speaker 파드가 존재하는 노드로 서비스 접속 트래픽이 인입되게 됩니다

- 데몬셋으로 배포된 speaker 파드는 호스트 네트워크를 사용합니다 ⇒ "NetworkMode": "host"

- 리더는 ARP(GARP, Gratuitous APR)로 해당 'External IP' 에 대해서 자신이 소유(?)라며 동일 네트워크에 전파를 합니다

- 만약 리더(노드)가 장애 발생 시 자동으로 나머지 speaker 파드 중 1개가 리더가 됩니다.

- 멤버 리스터 및 장애 발견은 hashicorp 의 memberlist 를 사용 - Gossip based membership and failure detection

- 만약 리더(노드)가 장애 발생 시 자동으로 나머지 speaker 파드 중 1개가 리더가 됩니다.

- Layer 2에서 멤버 발견 및 자동 절체에 Keepalived(VRRP)도 있지만 사용하지 않은 이유 - 링크

- 리더 파드가 뿌리는 GARP 패킷 (예시)

-

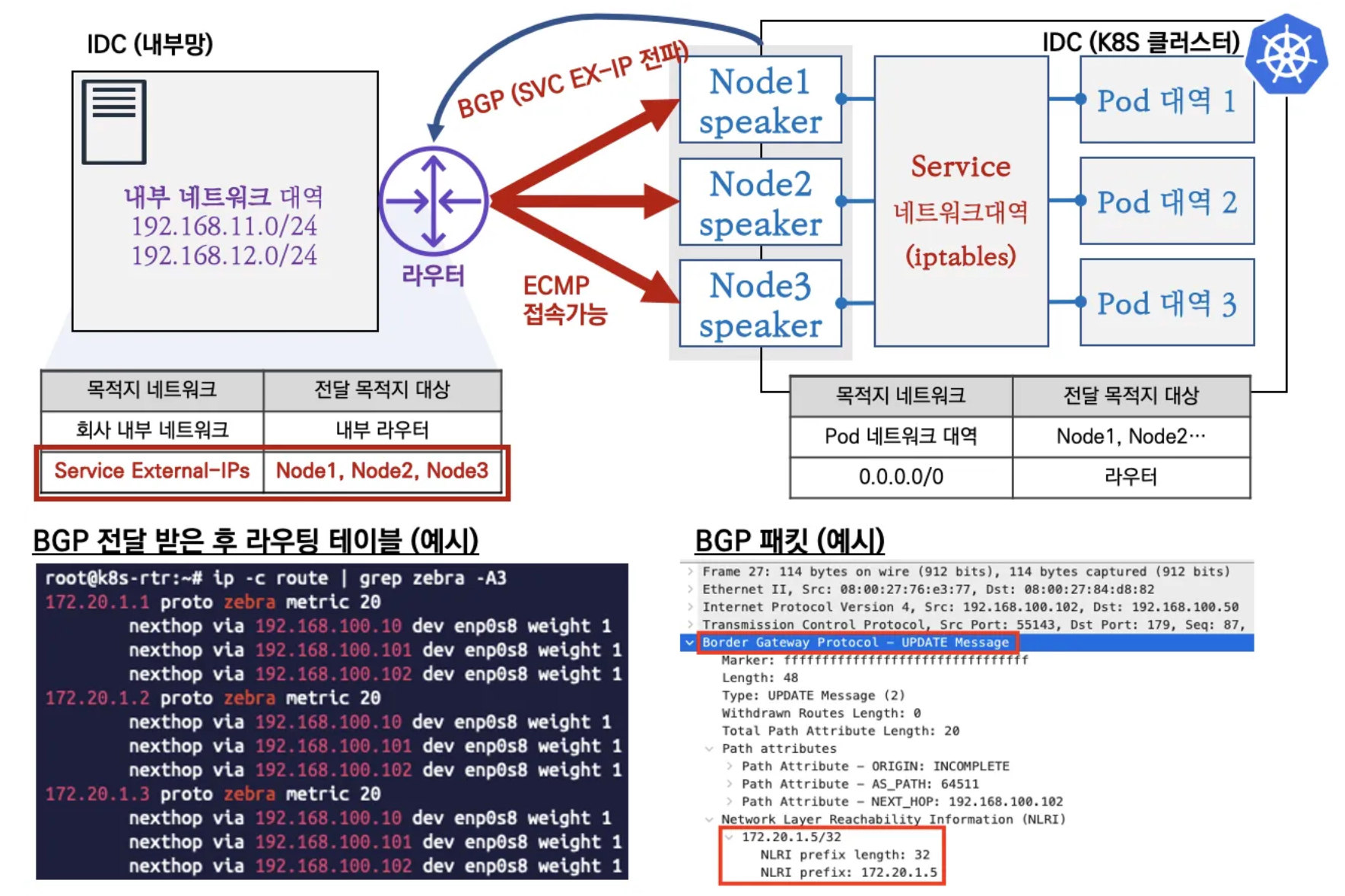

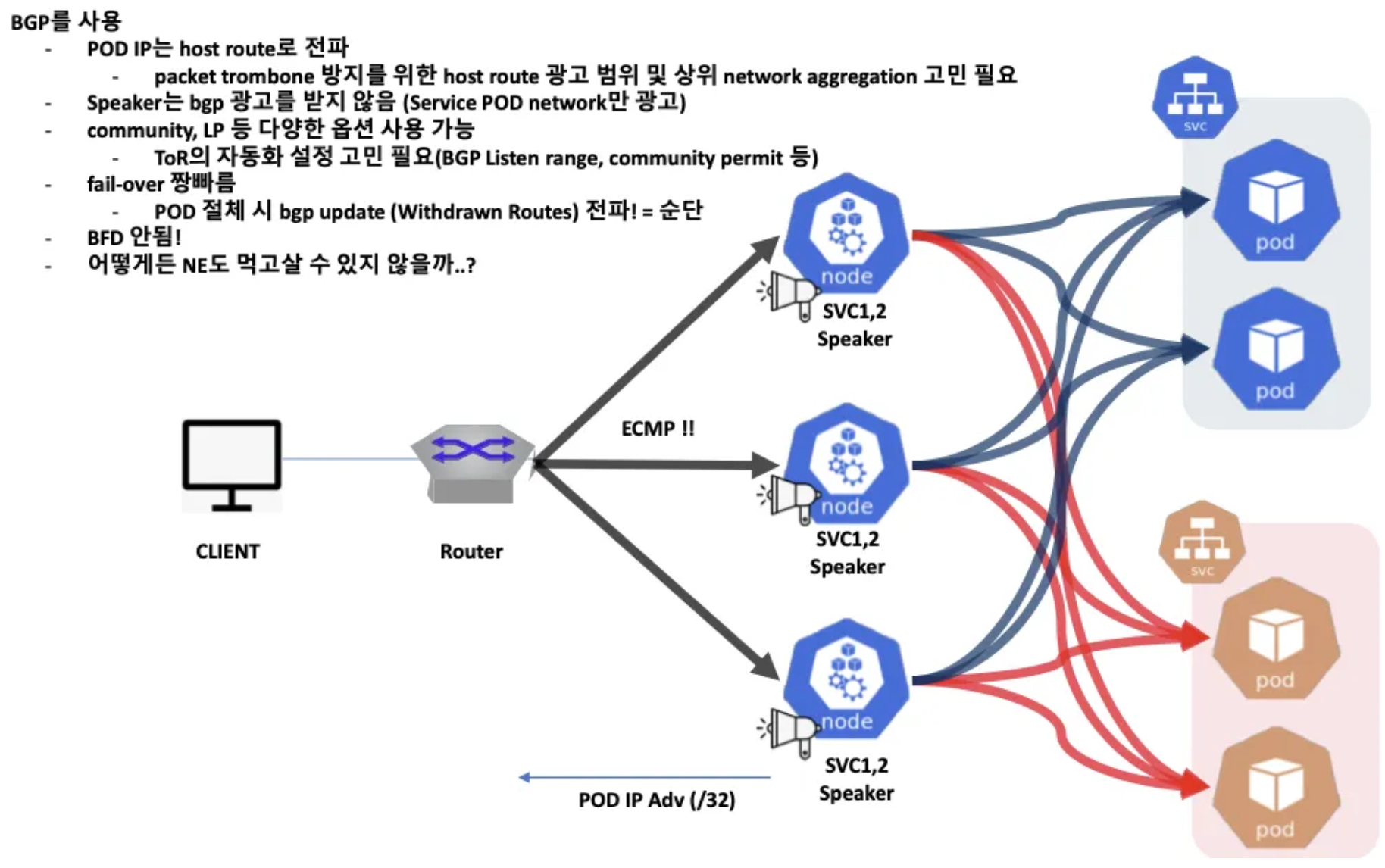

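MetalLB - BGP mode

- Speaker pods advertise the service information (EXTERNAL-IP) via BGP, and external clients then reach the service through a router that load-balances with ECMP routing.

- Unlike a typical BGP daemon, it does not accept BGP updates (network ranges advertised from outside).

- Recommended environment: larger deployments that need client IP preservation and fast failover, and where the network team can cooperate.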
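ECMP spreads connections by hashing each flow onto one of several equal-cost next hops. The following is a minimal sketch of that idea only: `cksum` stands in for whatever hash a real router uses, `ecmp_pick` is a hypothetical helper name, and the addresses in the example flow are made up for illustration.

```shell
# Hypothetical sketch: map a flow to one of N next hops with a stable hash.
# cksum (a CRC) stands in for the vendor-specific hash of a real router.
ecmp_pick() {
  flow="$1"      # e.g. "src_ip:src_port->dst_ip:dst_port/proto"
  hops="$2"      # number of equal-cost next hops (nodes advertising the IP)
  crc=$(printf '%s' "$flow" | cksum | cut -d ' ' -f 1)
  echo $(( $crc % $hops ))   # index of the chosen next hop, 0..hops-1
}

# Made-up flow for illustration; prints the index of the selected next hop.
ecmp_pick "172.18.0.100:40000->172.18.255.200:80/tcp" 3
```

The same flow always maps to the same next hop, so a connection stays on one node, while a change in the number of next hops can remap existing flows. That is one reason cooperation with the network team matters in BGP mode.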

MetalLB lab: checking the basics

Create the pods

❯ cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: webpod1
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod2
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker2
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
EOF
pod/webpod1 created
pod/webpod2 created

Verify connectivity to the pods

# Check pod information
❯ kubectl get pod -owide
NAME      READY   STATUS    RESTARTS   AGE    IP          NODE            NOMINATED NODE   READINESS GATES
webpod1   1/1     Running   0          105s   10.10.1.2   myk8s-worker    <none>           <none>
webpod2   1/1     Running   0          105s   10.10.3.2   myk8s-worker2   <none>           <none>

# Store the pod IP addresses in variables
❯ WPOD1=$(kubectl get pod webpod1 -o jsonpath="{.status.podIP}")
❯ WPOD2=$(kubectl get pod webpod2 -o jsonpath="{.status.podIP}")
❯ echo $WPOD1 $WPOD2
10.10.1.2 10.10.3.2

# Verify connectivity
❯ docker exec -t myk8s-control-plane ping -i 1 -W 1 -c 1 $WPOD1
PING 10.10.1.2 (10.10.1.2) 56(84) bytes of data.
64 bytes from 10.10.1.2: icmp_seq=1 ttl=63 time=0.063 ms
--- 10.10.1.2 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.063/0.063/0.063/0.000 ms
❯ docker exec -t myk8s-control-plane ping -i 1 -W 1 -c 1 $WPOD2
PING 10.10.3.2 (10.10.3.2) 56(84) bytes of data.
64 bytes from 10.10.3.2: icmp_seq=1 ttl=63 time=0.118 ms
--- 10.10.3.2 ping statistics ---
1 packets transmitted, 1 received, 0% packet loss, time 0ms
rtt min/avg/max/mdev = 0.118/0.118/0.118/0.000 ms
❯ docker exec -it myk8s-control-plane curl -s --connect-timeout 1 $WPOD1 | grep Hostname
Hostname: webpod1
❯ docker exec -it myk8s-control-plane curl -s --connect-timeout 1 $WPOD2 | grep Hostname
Hostname: webpod2
❯ docker exec -it myk8s-control-plane curl -s --connect-timeout 1 $WPOD1 | egrep 'Hostname|RemoteAddr|Host:'
Hostname: webpod1
RemoteAddr: 172.18.0.2:52746
Host: 10.10.1.2
❯ docker exec -it myk8s-control-plane curl -s --connect-timeout 1 $WPOD2 | egrep 'Hostname|RemoteAddr|Host:'
Hostname: webpod2
RemoteAddr: 172.18.0.2:37860
Host: 10.10.3.2

MetalLB - Layer 2 mode

Install MetalLB

- Supported installation methods : Kubernetes manifests, using Kustomize, or using Helm

- Note : when kube-proxy runs in ipvs mode, 'strictARP: true' must be set

For simplicity, install with the manifests.

# Kubernetes manifests 로 설치 ❯ kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/refs/heads/main/config/manifests/metallb-native-prometheus.yaml namespace/metallb-system created customresourcedefinition.apiextensions.k8s.io/bfdprofiles.metallb.io created customresourcedefinition.apiextensions.k8s.io/bgpadvertisements.metallb.io created customresourcedefinition.apiextensions.k8s.io/bgppeers.metallb.io created customresourcedefinition.apiextensions.k8s.io/communities.metallb.io created customresourcedefinition.apiextensions.k8s.io/ipaddresspools.metallb.io created customresourcedefinition.apiextensions.k8s.io/l2advertisements.metallb.io created customresourcedefinition.apiextensions.k8s.io/servicel2statuses.metallb.io created serviceaccount/controller created serviceaccount/speaker created role.rbac.authorization.k8s.io/controller created role.rbac.authorization.k8s.io/pod-lister created role.rbac.authorization.k8s.io/prometheus-k8s created clusterrole.rbac.authorization.k8s.io/metallb-system:controller created clusterrole.rbac.authorization.k8s.io/metallb-system:speaker created rolebinding.rbac.authorization.k8s.io/controller created rolebinding.rbac.authorization.k8s.io/pod-lister created rolebinding.rbac.authorization.k8s.io/prometheus-k8s created clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller created clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker created configmap/metallb-excludel2 created secret/metallb-webhook-cert created service/controller-monitor-service created service/metallb-webhook-service created service/speaker-monitor-service created deployment.apps/controller created daemonset.apps/speaker created validatingwebhookconfiguration.admissionregistration.k8s.io/metallb-webhook-configuration created resource mapping not found for name: "controller-monitor" namespace: "metallb-system" from 
"https://raw.githubusercontent.com/metallb/metallb/refs/heads/main/config/manifests/metallb-native-prometheus.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1" ensure CRDs are installed first resource mapping not found for name: "speaker-monitor" namespace: "metallb-system" from "https://raw.githubusercontent.com/metallb/metallb/refs/heads/main/config/manifests/metallb-native-prometheus.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1" ensure CRDs are installed first # metallb crd 확인 ❯ kubectl get crd | grep metallb bfdprofiles.metallb.io 2024-10-05T07:23:49Z bgpadvertisements.metallb.io 2024-10-05T07:23:49Z bgppeers.metallb.io 2024-10-05T07:23:49Z communities.metallb.io 2024-10-05T07:23:49Z ipaddresspools.metallb.io 2024-10-05T07:23:49Z l2advertisements.metallb.io 2024-10-05T07:23:49Z servicel2statuses.metallb.io 2024-10-05T07:23:49Z # 생성된 리소스 확인 : metallb-system 네임스페이스 생성, 파드(컨트롤러, 스피커) 생성, RBAC(서비스/파드/컨피그맵 조회 등등 권한들), SA 등 kubectl get-all -n metallb-system # kubectl krew 플러그인 get-all 설치 후 사용 가능 ❯ kubectl get-all -n metallb-system NAME NAMESPACE AGE configmap/kube-root-ca.crt metallb-system 21m configmap/metallb-excludel2 metallb-system 21m endpoints/controller-monitor-service metallb-system 21m endpoints/metallb-webhook-service metallb-system 21m endpoints/speaker-monitor-service metallb-system 21m pod/controller-679855f7d7-ntlkm metallb-system 21m pod/speaker-f2mg6 metallb-system 21m pod/speaker-hf5qv metallb-system 21m pod/speaker-vtxtd metallb-system 21m pod/speaker-w8l64 metallb-system 21m secret/memberlist metallb-system 21m secret/metallb-webhook-cert metallb-system 21m serviceaccount/controller metallb-system 21m serviceaccount/default metallb-system 21m serviceaccount/speaker metallb-system 21m service/controller-monitor-service metallb-system 21m service/metallb-webhook-service metallb-system 21m service/speaker-monitor-service metallb-system 21m controllerrevision.apps/speaker-5dc69b85f7 
metallb-system 21m daemonset.apps/speaker metallb-system 21m deployment.apps/controller metallb-system 21m replicaset.apps/controller-679855f7d7 metallb-system 21m endpointslice.discovery.k8s.io/controller-monitor-service-qlfwl metallb-system 21m endpointslice.discovery.k8s.io/metallb-webhook-service-b2rpk metallb-system 21m endpointslice.discovery.k8s.io/speaker-monitor-service-fkzwz metallb-system 21m rolebinding.rbac.authorization.k8s.io/controller metallb-system 21m rolebinding.rbac.authorization.k8s.io/pod-lister metallb-system 21m rolebinding.rbac.authorization.k8s.io/prometheus-k8s metallb-system 21m role.rbac.authorization.k8s.io/controller metallb-system 21m role.rbac.authorization.k8s.io/pod-lister metallb-system 21m role.rbac.authorization.k8s.io/prometheus-k8s metallb-system 21m ❯ kubectl get all,configmap,secret,ep -n metallb-system NAME READY STATUS RESTARTS AGE pod/controller-679855f7d7-ntlkm 2/2 Running 0 22m pod/speaker-f2mg6 2/2 Running 0 22m pod/speaker-hf5qv 2/2 Running 0 22m pod/speaker-vtxtd 2/2 Running 0 22m pod/speaker-w8l64 2/2 Running 0 22m NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE service/controller-monitor-service ClusterIP None <none> 9120/TCP 22m service/metallb-webhook-service ClusterIP 10.200.1.226 <none> 443/TCP 22m service/speaker-monitor-service ClusterIP None <none> 9120/TCP 22m NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE daemonset.apps/speaker 4 4 4 4 4 kubernetes.io/os=linux 22m NAME READY UP-TO-DATE AVAILABLE AGE deployment.apps/controller 1/1 1 1 22m NAME DESIRED CURRENT READY AGE replicaset.apps/controller-679855f7d7 1 1 1 22m NAME DATA AGE configmap/kube-root-ca.crt 1 22m configmap/metallb-excludel2 1 22m NAME TYPE DATA AGE secret/memberlist Opaque 1 22m secret/metallb-webhook-cert Opaque 4 22m NAME ENDPOINTS AGE endpoints/controller-monitor-service 10.10.2.5:9120 22m endpoints/metallb-webhook-service 10.10.2.5:9443 22m endpoints/speaker-monitor-service 
172.18.0.2:9120,172.18.0.3:9120,172.18.0.4:9120 + 1 more... 22m # 파드 내에 kube-rbac-proxy 컨테이너는 프로메테우스 익스포터 역할 제공 ❯ kubectl get pods -n metallb-system -l app=metallb -o jsonpath="{range .items[*]}{.metadata.name}{':\n'}{range .spec.containers[*]}{' '}{.name}{' -> '}{.image}{'\n'}{end}{end}" controller-679855f7d7-ntlkm: kube-rbac-proxy -> gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0 controller -> quay.io/metallb/controller:main speaker-f2mg6: kube-rbac-proxy -> gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0 speaker -> quay.io/metallb/speaker:main speaker-hf5qv: kube-rbac-proxy -> gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0 speaker -> quay.io/metallb/speaker:main speaker-vtxtd: kube-rbac-proxy -> gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0 speaker -> quay.io/metallb/speaker:main speaker-w8l64: kube-rbac-proxy -> gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0 speaker -> quay.io/metallb/speaker:main ## metallb 컨트롤러는 디플로이먼트로 배포됨 ❯ kubectl get ds,deploy -n metallb-system NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE daemonset.apps/speaker 4 4 4 4 4 kubernetes.io/os=linux 25m NAME READY UP-TO-DATE AVAILABLE AGE deployment.apps/controller 1/1 1 1 25m ## 데몬셋으로 배포되는 metallb 스피커 파드의 IP는 네트워크가 host 모드이므로 노드의 IP를 그대로 사용 ❯ kubectl get pod -n metallb-system -o wide NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES controller-679855f7d7-ntlkm 2/2 Running 0 25m 10.10.2.5 myk8s-worker3 <none> <none> speaker-f2mg6 2/2 Running 0 25m 172.18.0.5 myk8s-worker <none> <none> speaker-hf5qv 2/2 Running 0 25m 172.18.0.3 myk8s-worker2 <none> <none> speaker-vtxtd 2/2 Running 0 25m 172.18.0.2 myk8s-control-plane <none> <none> speaker-w8l64 2/2 Running 0 25m 172.18.0.4 myk8s-worker3 <none> <none> # (참고) 상세 정보 확인 ❯ kubectl get sa,cm,secret -n metallb-system NAME SECRETS AGE serviceaccount/controller 0 26m serviceaccount/default 0 26m serviceaccount/speaker 0 26m NAME DATA AGE configmap/kube-root-ca.crt 1 26m configmap/metallb-excludel2 1 26m NAME TYPE DATA AGE 
secret/memberlist             Opaque   1   26m
secret/metallb-webhook-cert   Opaque   4   26m

❯ kubectl describe role -n metallb-system
Name:         controller
Labels:       app=metallb
Annotations:  <none>
PolicyRule:
  Resources                     Non-Resource URLs  Resource Names  Verbs
  ---------                     -----------------  --------------  -----
  secrets                       []                 []              [create delete get list patch update watch]
  bfdprofiles.metallb.io        []                 []              [get list watch]
  bgpadvertisements.metallb.io  []                 []              [get list watch]
  communities.metallb.io        []                 []              [get list watch]
  ipaddresspools.metallb.io     []                 []              [get list watch]
  l2advertisements.metallb.io   []                 []              [get list watch]
  bgppeers.metallb.io           []                 []              [get list]
  deployments.apps              []                 [controller]    [get]
  secrets                       []                 [memberlist]    [list]

Name:         pod-lister
Labels:       app=metallb
Annotations:  <none>
PolicyRule:
  Resources                     Non-Resource URLs  Resource Names  Verbs
  ---------                     -----------------  --------------  -----
  configmaps                    []                 []              [get list watch]
  secrets                       []                 []              [get list watch]
  bfdprofiles.metallb.io        []                 []              [get list watch]
  bgpadvertisements.metallb.io  []                 []              [get list watch]
  bgppeers.metallb.io           []                 []              [get list watch]
  communities.metallb.io        []                 []              [get list watch]
  ipaddresspools.metallb.io     []                 []              [get list watch]
  l2advertisements.metallb.io   []                 []              [get list watch]
  pods                          []                 []              [list get]

Name:         prometheus-k8s
Labels:       <none>
Annotations:  <none>
PolicyRule:
  Resources  Non-Resource URLs  Resource Names  Verbs
  ---------  -----------------  --------------  -----
  endpoints  []                 []              [get list watch]
  pods       []                 []              [get list watch]
  services   []                 []              [get list watch]

❯ kubectl describe deploy controller -n metallb-system
Name:                   controller
Namespace:              metallb-system
CreationTimestamp:      Sat, 05 Oct 2024 16:23:49 +0900
Labels:                 app=metallb
                        component=controller
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=metallb,component=controller
Replicas:               1 desired | 1 updated | 1 total | 1 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:           app=metallb
                    component=controller
  Annotations:      prometheus.io/port: 7472
                    prometheus.io/scrape: true
  Service Account:  controller
  Containers:
   kube-rbac-proxy:
    Image:      gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0
    Port:       9120/TCP
    Host Port:  0/TCP
    Args:
      --secure-listen-address=0.0.0.0:9120
      --upstream=http://127.0.0.1:7472/
      --logtostderr=true
      --v=0
    Limits:
      cpu:     500m
      memory:  128Mi
    Requests:
      cpu:        5m
      memory:     64Mi
    Environment:  <none>
    Mounts:       <none>
   controller:
    Image:       quay.io/metallb/controller:main
    Ports:       7472/TCP, 9443/TCP
    Host Ports:  0/TCP, 0/TCP
    Args:
      --port=7472
      --log-level=info
      --tls-min-version=VersionTLS12
    Liveness:   http-get http://:monitoring/metrics delay=10s timeout=1s period=10s #success=1 #failure=3
    Readiness:  http-get http://:monitoring/metrics delay=10s timeout=1s period=10s #success=1 #failure=3
    Environment:
      METALLB_ML_SECRET_NAME:  memberlist
      METALLB_DEPLOYMENT:      controller
    Mounts:
      /tmp/k8s-webhook-server/serving-certs from cert (ro)
  Volumes:
   cert:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  metallb-webhook-cert
    Optional:    false
  Node-Selectors:  kubernetes.io/os=linux
  Tolerations:     <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   controller-679855f7d7 (1/1 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  27m   deployment-controller  Scaled up replica set controller-679855f7d7 to 1

❯ kubectl describe ds speaker -n metallb-system
Name:           speaker
Selector:       app=metallb,component=speaker
Node-Selector:  kubernetes.io/os=linux
Labels:         app=metallb
                component=speaker
Annotations:    deprecated.daemonset.template.generation: 1
Desired Number of Nodes Scheduled: 4
Current Number of Nodes Scheduled: 4
Number of Nodes Scheduled with Up-to-date Pods: 4
Number of Nodes Scheduled with Available Pods: 4
Number of Nodes Misscheduled: 0
Pods Status:  4 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:           app=metallb
                    component=speaker
  Annotations:      prometheus.io/port: 7472
                    prometheus.io/scrape: true
  Service Account:  speaker
  Containers:
   kube-rbac-proxy:
    Image:      gcr.io/kubebuilder/kube-rbac-proxy:v0.12.0
    Port:       9120/TCP
    Host Port:  0/TCP
    Args:
      --secure-listen-address=0.0.0.0:9120
      --upstream=http://$(METALLB_HOST):7472/
      --logtostderr=true
      --v=0
    Limits:
      cpu:     500m
      memory:  128Mi
    Requests:
      cpu:     5m
      memory:  64Mi
    Environment:
      METALLB_HOST:   (v1:status.hostIP)
    Mounts:           <none>
   speaker:
    Image:       quay.io/metallb/speaker:main
    Ports:       7472/TCP, 7946/TCP, 7946/UDP
    Host Ports:  0/TCP, 0/TCP, 0/UDP
    Args:
      --port=7472
      --log-level=info
    Liveness:   http-get http://:monitoring/metrics delay=10s timeout=1s period=10s #success=1 #failure=3
    Readiness:  http-get http://:monitoring/metrics delay=10s timeout=1s period=10s #success=1 #failure=3
    Environment:
      METALLB_NODE_NAME:            (v1:spec.nodeName)
      METALLB_POD_NAME:             (v1:metadata.name)
      METALLB_HOST:                 (v1:status.hostIP)
      METALLB_ML_BIND_ADDR:         (v1:status.podIP)
      METALLB_ML_LABELS:           app=metallb,component=speaker
      METALLB_ML_SECRET_KEY_PATH:  /etc/ml_secret_key
    Mounts:
      /etc/metallb from metallb-excludel2 (ro)
      /etc/ml_secret_key from memberlist (ro)
  Volumes:
   memberlist:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  memberlist
    Optional:    false
   metallb-excludel2:
    Type:          ConfigMap (a volume populated by a ConfigMap)
    Name:          metallb-excludel2
    Optional:      false
  Node-Selectors:  kubernetes.io/os=linux
  Tolerations:     node-role.kubernetes.io/control-plane:NoSchedule op=Exists
                   node-role.kubernetes.io/master:NoSchedule op=Exists
Events:
  Type    Reason            Age   From                  Message
  ----    ------            ----  ----                  -------
  Normal  SuccessfulCreate  27m   daemonset-controller  Created pod: speaker-vtxtd
  Normal  SuccessfulCreate  27m   daemonset-controller  Created pod: speaker-f2mg6
  Normal  SuccessfulCreate  27m   daemonset-controller  Created pod: speaker-hf5qv
  Normal  SuccessfulCreate  27m   daemonset-controller  Created pod: speaker-w8l64

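- Create the configuration: set the mode and the service address range (done here with the IPAddressPool and L2Advertisement custom resources rather than a ConfigMap)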

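- The service (External-IP) range does not have to be in the same subnet as the nodes' eth0 interface. In that case, however, the router acting as the gateway needs routes that send that range to the nodes.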
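Whether a candidate External-IP range falls inside the node network can be checked before applying it. The snippet below is only a minimal sketch using Python's standard `ipaddress` module; the helper names `range_in_network` and `range_size` are hypothetical, and the values are the kind network (172.18.0.0/16) and the pool range used in this section.

```python
import ipaddress

def range_in_network(start: str, end: str, network: str) -> bool:
    """Return True if the whole start-end range lies inside the network."""
    net = ipaddress.ip_network(network)
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if first > last:
        raise ValueError("range start must not be greater than range end")
    # A network is contiguous, so checking both ends covers the whole range.
    return first in net and last in net

def range_size(start: str, end: str) -> int:
    """Number of addresses in an inclusive start-end range."""
    return int(ipaddress.ip_address(end)) - int(ipaddress.ip_address(start)) + 1

# Values taken from this section: the kind Docker network and the MetalLB pool.
NODE_NETWORK = "172.18.0.0/16"
POOL = ("172.18.255.200", "172.18.255.250")

print(range_in_network(*POOL, NODE_NETWORK))  # True
print(range_size(*POOL))                      # 51
```

If the first call returned False, the range would sit outside the node subnet, and the gateway router would need the extra routes described above.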

# Installing kind creates a Docker bridge network named "kind": 172.18.0.0/16
docker network ls
docker inspect kind

# Check the container (node) IPs on the kind network: addresses are assigned from 172.18.0.2 onward, and the control-plane is not guaranteed to get 172.18.0.2
❯ docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'
/myk8s-control-plane 172.18.0.2
/myk8s-worker2 172.18.0.3
/myk8s-worker 172.18.0.5
/myk8s-worker3 172.18.0.4

# Create an IPAddressPool: the IP range to use for LoadBalancer External IPs
## MetalLB manages the external IP addresses for Services and can assign one dynamically when a Service is created.
❯ kubectl explain ipaddresspools.metallb.io
GROUP:      metallb.io
KIND:       IPAddressPool
VERSION:    v1beta1

DESCRIPTION:
    IPAddressPool represents a pool of IP addresses that can be allocated
    to LoadBalancer services.

FIELDS:
  apiVersion    <string>
    APIVersion defines the versioned schema of this representation of an
    object. Servers should convert recognized schemas to the latest internal
    value, and may reject unrecognized values. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

  kind  <string>
    Kind is a string value representing the REST resource this object
    represents. Servers may infer this from the endpoint the client submits
    requests to. Cannot be updated. In CamelCase. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

  metadata      <ObjectMeta>
    Standard object's metadata. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

  spec  <Object> -required-
    IPAddressPoolSpec defines the desired state of IPAddressPool.

  status        <Object>
    IPAddressPoolStatus defines the observed state of IPAddressPool.

❯ cat <<EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: my-ippool
  namespace: metallb-system
spec:
  addresses:
  - 172.18.255.200-172.18.255.250
EOF
ipaddresspool.metallb.io/my-ippool created

❯ kubectl get ipaddresspools -n metallb-system
NAME        AUTO ASSIGN   AVOID BUGGY IPS   ADDRESSES
my-ippool   true          false             ["172.18.255.200-172.18.255.250"]

# Create an L2Advertisement: allow LoadBalancer IPs from the configured pool to be used in Layer 2 mode
## Defines how Services in the Kubernetes cluster advertise their IP addresses to the external network
❯ kubectl explain l2advertisements.metallb.io
GROUP:      metallb.io
KIND:       L2Advertisement
VERSION:    v1beta1

DESCRIPTION:
    L2Advertisement allows to advertise the LoadBalancer IPs provided by the
    selected pools via L2.

FIELDS:
  apiVersion    <string>
    APIVersion defines the versioned schema of this representation of an
    object. Servers should convert recognized schemas to the latest internal
    value, and may reject unrecognized values. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

  kind  <string>
    Kind is a string value representing the REST resource this object
    represents. Servers may infer this from the endpoint the client submits
    requests to. Cannot be updated. In CamelCase. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

  metadata      <ObjectMeta>
    Standard object's metadata. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

  spec  <Object>
    L2AdvertisementSpec defines the desired state of L2Advertisement.

  status        <Object>
    L2AdvertisementStatus defines the observed state of L2Advertisement.

❯ cat <<EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: my-l2-advertise
  namespace: metallb-system
spec:
  ipAddressPools:
  - my-ippool
EOF
l2advertisement.metallb.io/my-l2-advertise created

❯ kubectl get l2advertisements -n metallb-system
NAME              IPADDRESSPOOLS   IPADDRESSPOOL SELECTORS   INTERFACES
my-l2-advertise   ["my-ippool"]
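The two resources are linked only by name: the L2Advertisement lists the pool under `spec.ipAddressPools`. As a rough sketch of that relationship, the Python below rebuilds both manifests as dictionaries and checks that the advertisement does not point at a missing pool. The helper names (`ip_address_pool`, `l2_advertisement`, `dangling_pool_refs`) are hypothetical, and only the fields shown in the manifests of this section are used.

```python
import json

NAMESPACE = "metallb-system"

def ip_address_pool(name, addresses, namespace=NAMESPACE):
    """Build an IPAddressPool manifest with the fields used in this section."""
    return {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"addresses": list(addresses)},
    }

def l2_advertisement(name, pool_names, namespace=NAMESPACE):
    """Build an L2Advertisement manifest that references pools by name."""
    return {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"ipAddressPools": list(pool_names)},
    }

def dangling_pool_refs(advertisement, pools):
    """Return pool names the advertisement references but no pool defines."""
    known = {pool["metadata"]["name"] for pool in pools}
    referenced = advertisement["spec"].get("ipAddressPools", [])
    return [name for name in referenced if name not in known]

pool = ip_address_pool("my-ippool", ["172.18.255.200-172.18.255.250"])
advert = l2_advertisement("my-l2-advertise", ["my-ippool"])

# An empty list means every referenced pool exists.
print(dangling_pool_refs(advert, [pool]))

# Emit both objects as one JSON list document.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [pool, advert]}, indent=2))
```

The JSON output is meant for inspection; whether to feed it to `kubectl apply -f -` instead of the heredocs above is a matter of preference.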

- (Reference) Check the logs: keep one of the -f commands below running to monitor them

# (Optional) Check the MetalLB pod logs
# Note: the "Defaulted container" lines mean only the kube-rbac-proxy container's logs are shown below;
# to see the speaker's own logs, select the container explicitly, e.g. -l component=speaker -c speaker
❯ kubectl logs -n metallb-system -l app=metallb -f
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, controller
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
I1005 07:23:50.514596 1 main.go:186] Valid token audiences:
I1005 07:23:50.514658 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.189323 1 main.go:186] Valid token audiences:
I1005 07:23:51.191028 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.505883 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.506045 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:50.806955 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:50.808503 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.119509 1 main.go:186] Valid token audiences:
I1005 07:23:51.119601 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.734195 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.734386 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.202538 1 main.go:186] Valid token audiences:
I1005 07:23:51.202701 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.493108 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.493411 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.157231 1 main.go:186] Valid token audiences:
I1005 07:23:51.157360 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.651198 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.651378 1 main.go:363] Listening securely on 0.0.0.0:9120

❯ kubectl logs -n metallb-system -l component=speaker --since 1h
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
I1005 07:23:51.202538 1 main.go:186] Valid token audiences:
I1005 07:23:51.202701 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.493108 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.493411 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.119509 1 main.go:186] Valid token audiences:
I1005 07:23:51.119601 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.734195 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.734386 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.157231 1 main.go:186] Valid token audiences:
I1005 07:23:51.157360 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.651198 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.651378 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.189323 1 main.go:186] Valid token audiences:
I1005 07:23:51.191028 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.505883 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.506045 1 main.go:363] Listening securely on 0.0.0.0:9120

❯ kubectl logs -n metallb-system -l component=speaker -f
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
Defaulted container "kube-rbac-proxy" out of: kube-rbac-proxy, speaker
I1005 07:23:51.157231 1 main.go:186] Valid token audiences:
I1005 07:23:51.157360 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.651198 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.651378 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.189323 1 main.go:186] Valid token audiences:
I1005 07:23:51.191028 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.505883 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.506045 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.119509 1 main.go:186] Valid token audiences:
I1005 07:23:51.119601 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.734195 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.734386 1 main.go:363] Listening securely on 0.0.0.0:9120
I1005 07:23:51.202538 1 main.go:186] Valid token audiences:
I1005 07:23:51.202701 1 main.go:306] Generating self signed cert as no cert is provided
I1005 07:23:51.493108 1 main.go:356] Starting TCP socket on 0.0.0.0:9120
I1005 07:23:51.493411 1 main.go:363] Listening securely on 0.0.0.0:9120

# (Optional) After installing the stern plugin with kubectl krew, the commands below are available
❯ kubectl stern -n metallb-system -l app=metallb
+ controller-679855f7d7-ntlkm › kube-rbac-proxy
+ controller-679855f7d7-ntlkm › controller
+ speaker-f2mg6 › speaker
+ speaker-f2mg6 › kube-rbac-proxy
+ speaker-hf5qv › speaker
+ speaker-hf5qv › kube-rbac-proxy
+ speaker-vtxtd › speaker
+ speaker-vtxtd › kube-rbac-proxy
+ speaker-w8l64 › speaker
+ speaker-w8l64 › kube-rbac-proxy
controller-679855f7d7-ntlkm controller {"branch":"dev","caller":"main.go:178","commit":"dev","goversion":"gc / go1.22.7 / arm64","level":"info","msg":"MetalLB controller starting (commit dev, branch dev)","ts":"2024-10-05T07:23:50Z","version":""}
controller-679855f7d7-ntlkm controller {"action":"setting up cert rotation","caller":"webhook.go:31","level":"info","op":"startup","ts":"2024-10-05T07:23:50Z"}
controller-679855f7d7-ntlkm controller {"caller":"k8s.go:400","level":"info","msg":"secret successfully created","op":"CreateMlSecret","ts":"2024-10-05T07:23:50Z"}
controller-679855f7d7-ntlkm controller {"caller":"k8s.go:423","level":"info","msg":"Starting Manager","op":"Run","ts":"2024-10-05T07:23:50Z"}
speaker-f2mg6 speaker ......

kubectl stern -n metallb-system -l component=speaker --since 1h
kubectl stern -n metallb-system -l component=speaker # follows by default
kubectl stern -n metallb-system speaker # pod name matching is also supported
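The stern output above puts the pod name, the container name, and a JSON log record on each line, which makes it easy to filter programmatically. The following is a small sketch under that assumption (the layout is taken from the sample output, not from stern's documentation); `SternLine` and `parse_stern_line` are hypothetical names, and the sample lines are copied from the output above.

```python
import json
from typing import NamedTuple, Optional

class SternLine(NamedTuple):
    pod: str
    container: str
    fields: dict

def parse_stern_line(line: str) -> Optional[SternLine]:
    """Split 'pod container {json}' into its parts; return None if it does not fit."""
    parts = line.strip().split(" ", 2)
    if len(parts) != 3:
        return None
    pod, container, payload = parts
    try:
        fields = json.loads(payload)
    except json.JSONDecodeError:
        return None  # e.g. truncated lines such as "speaker-f2mg6 speaker ......"
    if not isinstance(fields, dict):
        return None
    return SternLine(pod, container, fields)

SAMPLE = [
    'controller-679855f7d7-ntlkm controller {"caller":"k8s.go:400","level":"info",'
    '"msg":"secret successfully created","op":"CreateMlSecret","ts":"2024-10-05T07:23:50Z"}',
    'controller-679855f7d7-ntlkm controller {"caller":"k8s.go:423","level":"info",'
    '"msg":"Starting Manager","op":"Run","ts":"2024-10-05T07:23:50Z"}',
    "speaker-f2mg6 speaker ......",
]

for raw in SAMPLE:
    entry = parse_stern_line(raw)
    if entry is None:
        continue  # skip lines that are not structured JSON records
    print(entry.pod, entry.container, entry.fields.get("op"), entry.fields.get("msg"))
```

Filtering on `fields["level"]` or `fields["op"]` in the same loop would narrow the stream to the events of interest while a Service is being created.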

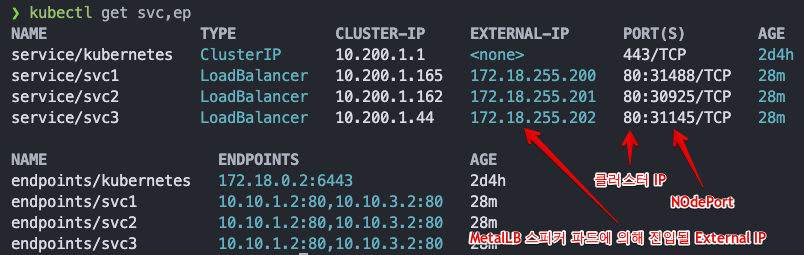

Create and verify a MetalLB service

- Create a Service (LoadBalancer type)