가시다(gasida) 님이 진행하는 Terraform T101 4기 실습 스터디 게시글입니다.

책 '테라폼으로 시작하는 IaC' 를 참고하였고, 스터디하면서 도움이 될 내용들을 정리하고자 합니다.

7주차는 테라폼으로 AWS EKS 배포 여러 방법에 대해 학습을 하였습니다.

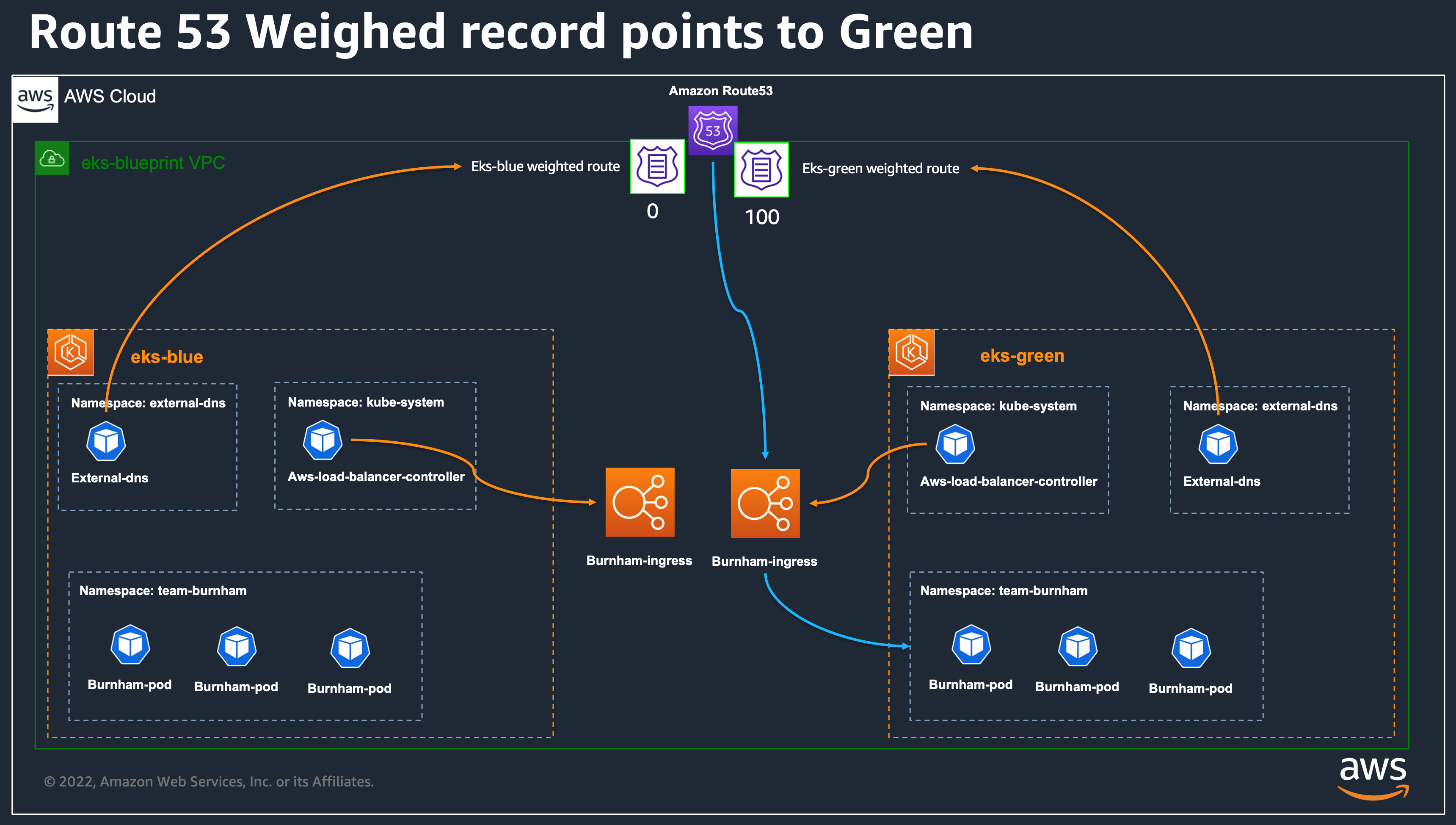

이번엔 Amazon EKS Blueprints for Terraform 사이트 (https://aws-ia.github.io/terraform-aws-eks-blueprints/)에 대한 간략한 소개와 Blue/Green Migration에서 제공하는 패턴을 활용하여 EKS 1.27을 EKS 1.30으로 트래픽을 Route 53 가중치를 조정하여 카나리 전략으로 마이크레이션 실습한 것을 정리하였습니다.

1. Kubernetes, AmazonEKS 설명

Kubnernetes(K8S)

- 쿠버네티스는 컨테이너화된 워크로드와 서비스를 관리하기 위한 이식성이 있고, 확장가능한 오픈소스 플랫폼입니다. 쿠버네티스는 선언적 구성과 자동화를 모두 용이하게 해주는 컨테이너 오케스트레이션 툴입니다.

-

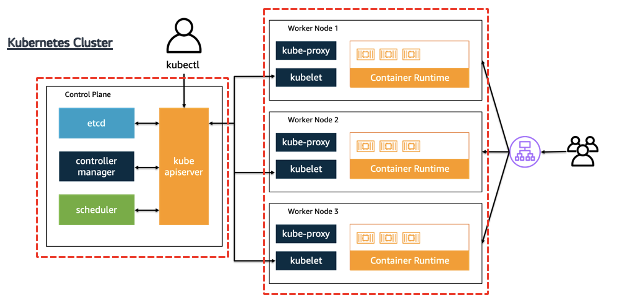

쿠버네티스를 배포하면 클러스터를 얻습니다. 그리고 이 클러스터는 노드들의 집합입니다. 노드들은 크게 두 가지 유형으로 나눠지는데, 각각이 컨트롤 플레인과 데이터 플레인입니다.

- 컨트롤 플레인(Control Plane)은 워커 노드와 클러스터 내 파드를 관리하고 제어합니다.

- 데이터 플레인(Data Plane)은 워커 노드들로 구성되어 있으며 컨테이너화된 애플리케이션의 구성 요소인 파드를 호스트합니다.

-

추가적으로 필수는 아니지만 사용할 수 있는 애드온용 컴포넌트도 있습니다.

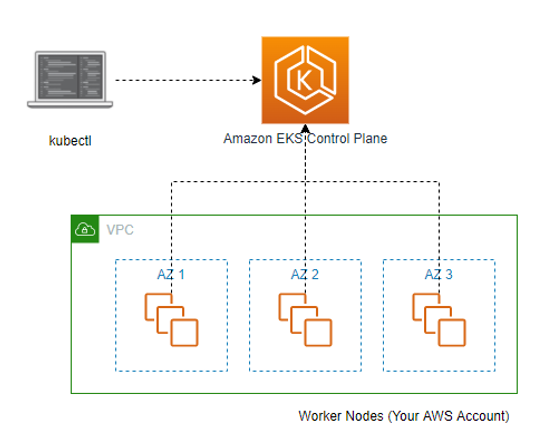

Amazon EKS

- Amazon Elastic Kubernetes Service(Amazon EKS)는 AWS 또는 On-Premises 에서 Kubernetes 애플리케이션을 실행하고 크기를 조정하는 관리형 컨테이너 서비스입니다.

Amazon EKS를 사용하면 AWS 환경에서 Kubernetes 컨트롤 플레인 또는 (노드)를 직접 설치, 운영 및 유지할 필요가 없습니다.

-

Amazon EKS는 여러 가용 영역에서 Kubernetes 컨트롤 플레인 인스턴스를 실행하여 고가용성을 보장합니다. 또한, 비정상 컨트롤 플레인 인스턴스를 자동으로 감지하고 교체하며 자동화된 버전 업그레이드 및 패치를 제공합니다.

-

Amazon EKS는 다양한 AWS 서비스들과 연동하여 애플리케이션에 대한 확장성 및 보안을 제공하는 서비스를 제공합니다.

- 컨테이너 이미지 저장소인 Amazon ECR(Elastic Container Registry)

- 로드 분산을 위한 AWS ELB(Elastic Load Balancing)

- 인증을 위한 AWS IAM

- 격리된 Amazon VPC

-

Amazon EKS는 오픈 소스 Kubernetes 소프트웨어의 최신 버전을 실행하므로 Kubernetes 커뮤니티에서 사용되는 플러그인과 툴을 모두 사용할 수 있습니다. 온프레미스 데이터 센터에서 실행 중인지, 퍼블릭 클라우드에서 실행 중인지에 상관없이 Amazon EKS에서 실행 중인 애플리케이션은 표준 Kubernetes 환경에서 실행 중인 애플리케이션과 완벽하게 호환됩니다. 즉, 코드를 수정하지 않고 표준 Kubernetes 애플리케이션을 Amazon EKS로 손쉽게 마이그레이션할 수 있습니다.

-

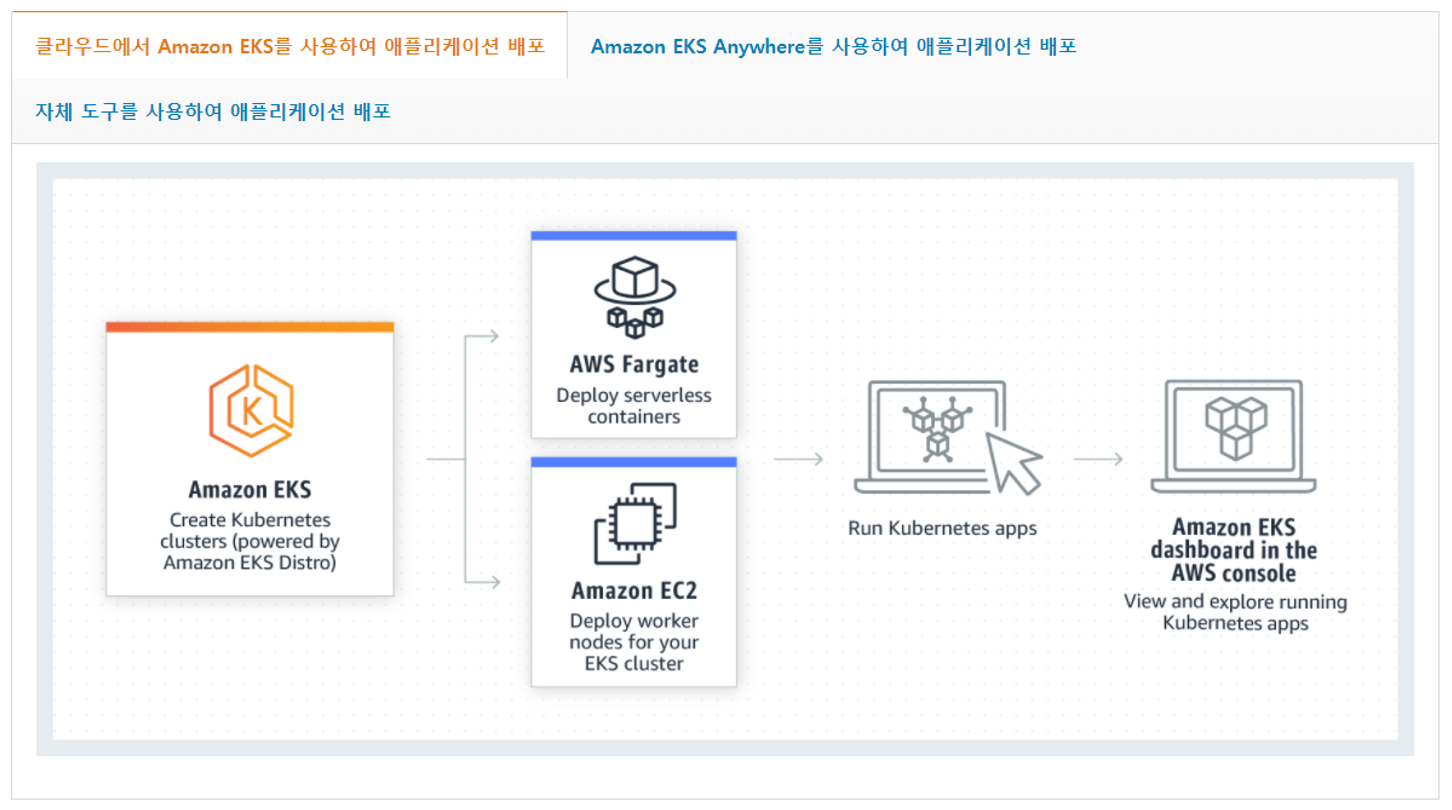

클라우드에서 실행되는 EKS는 Application인 Pod를 serverless인 Fargate 또는 EC2에 배포/실행할 수 있습니다.

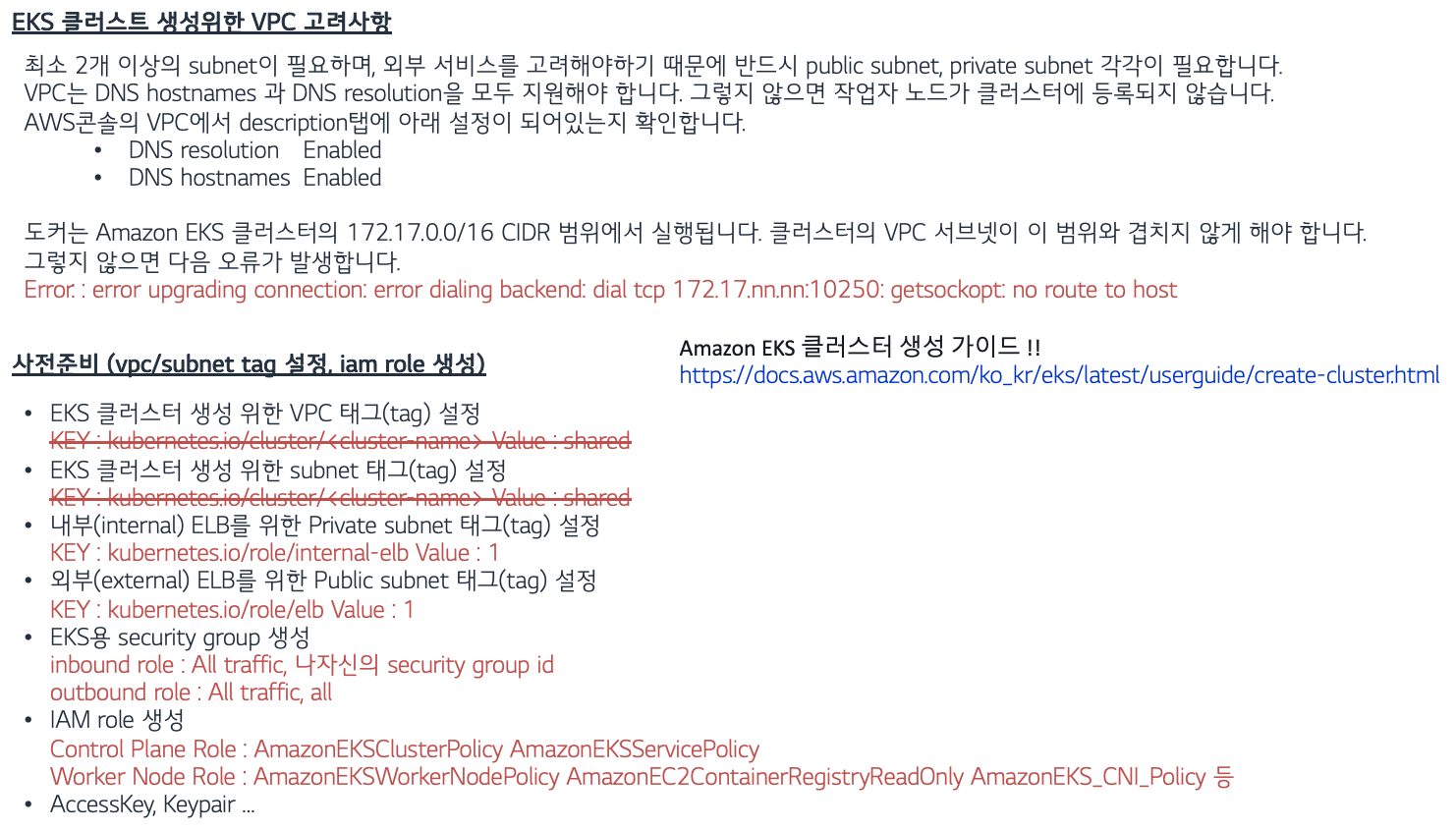

Amazon EKS 생성을 위한 준비사항

- 그렇지만 EKS Cluster를 사용하기 위해선 이해하고, 준비하고, 생성하는데 많은 Effort이 소요됩니다. https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/create-cluster.html

- IaC Tool인 Terraform을 활용하고 공개된 EKS 모듈을 활용한다면 훨씬 쉽고 자동화하여 생성/삭제 할 수 있습니다.

2. Amazon EKS Blueprints

Overview

- Site: https://aws-ia.github.io/terraform-aws-eks-blueprints/

- The Amazon EKS Blueprints project contains a collection of Amazon EKS cluster patterns implemented in Terraform that show how fast and easy it is for customers to adopt Amazon EKS. AWS customers, partners, and internal AWS teams can use the patterns to configure and manage complete EKS clusters that are fully bootstrapped with the operational software needed to deploy and operate workloads.

- Through the Amazon EKS Blueprints site, users can refer to the provided patterns and snippets to get guidance on the solution they want.

- Users can treat EKS Blueprints as a starting point for their implementation, copying patterns and snippets into their own environment and then adjusting the initial pattern to fit their specific requirements.

Considerations

- EKS Blueprints for Terraform are not intended to be consumed as-is directly from this project. → They are not meant to be used as-is.

- In "Terraform speak" - the patterns and snippets provided in this repository are not designed to be consumed as a Terraform module. → The patterns and snippets are not designed to be used as Terraform modules.

- Therefore, the patterns provided only contain `variables` when certain information is required to deploy the pattern (i.e. - a Route53 hosted zone ID, or ACM certificate ARN) and generally use local variables. If you wish to deploy the patterns into a different region or with other changes, it is recommended that you make those modifications locally before applying the pattern. → Values are generally changed through `locals` blocks, and `variables` blocks are used only when specific information (such as a Route 53 hosted zone ID) is required.

- EKS Blueprints for Terraform will not expose variables and outputs in the same manner that Terraform modules follow in order to avoid confusion around the consumption model. → To reduce confusion, variables and outputs are exposed as little as possible.

- However, we do have a number of Terraform modules that were created to support EKS Blueprints in addition to the community hosted modules. Please see the respective projects for more details on the modules constructed to support EKS Blueprints for Terraform; those projects are listed below. → The modules built to support EKS Blueprints are listed below.

- terraform-aws-eks-blueprint-addon (singular): Terraform module which can provision an addon using the Terraform `helm_release` resource in addition to an IAM role for service account (IRSA).

- terraform-aws-eks-blueprint-addons (plural): Terraform module which can provision multiple addons; both EKS addons using the `aws_eks_addon` resource as well as Helm chart based addons using the `terraform-aws-eks-blueprint-addon` module.

- terraform-aws-eks-blueprints-teams: Terraform module that creates Kubernetes multi-tenancy resources and configurations, allowing both administrators and application developers to access only the resources which they are responsible for.

3. Blue/Green or Canary Amazon EKS clusters migration for stateless ArgoCD workloads

Overview

- Site

- Source: https://github.com/aws-ia/terraform-aws-eks-blueprints/tree/main/patterns/blue-green-upgrade

- SJ's GitHub:

- Terraform code: https://github.com/icebreaker70/eks-blue-green-upgrade

- Add-ons code: https://github.com/icebreaker70/eks-blueprints-add-ons

- Application code: https://github.com/icebreaker70/eks-blueprints-workloads

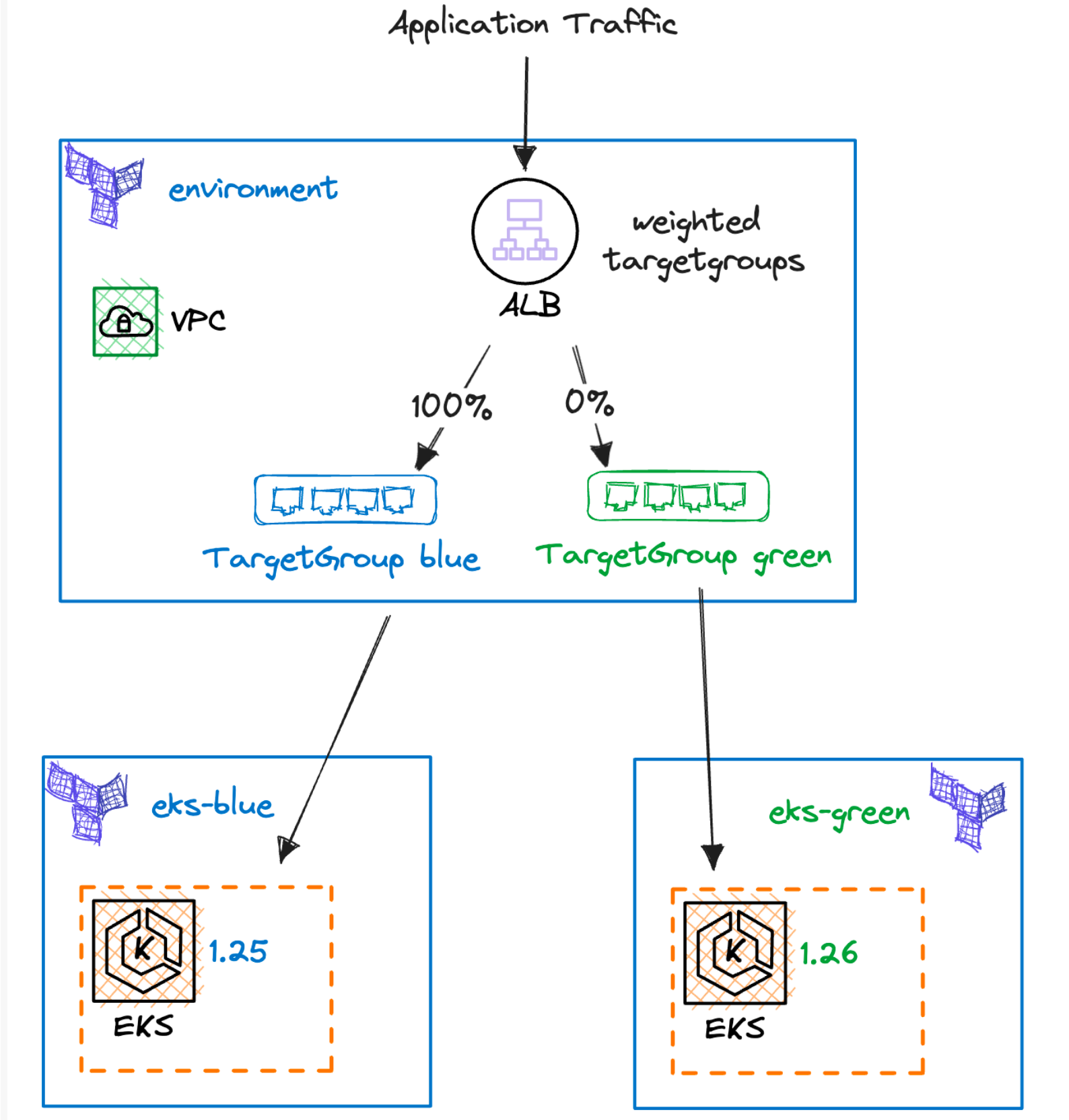

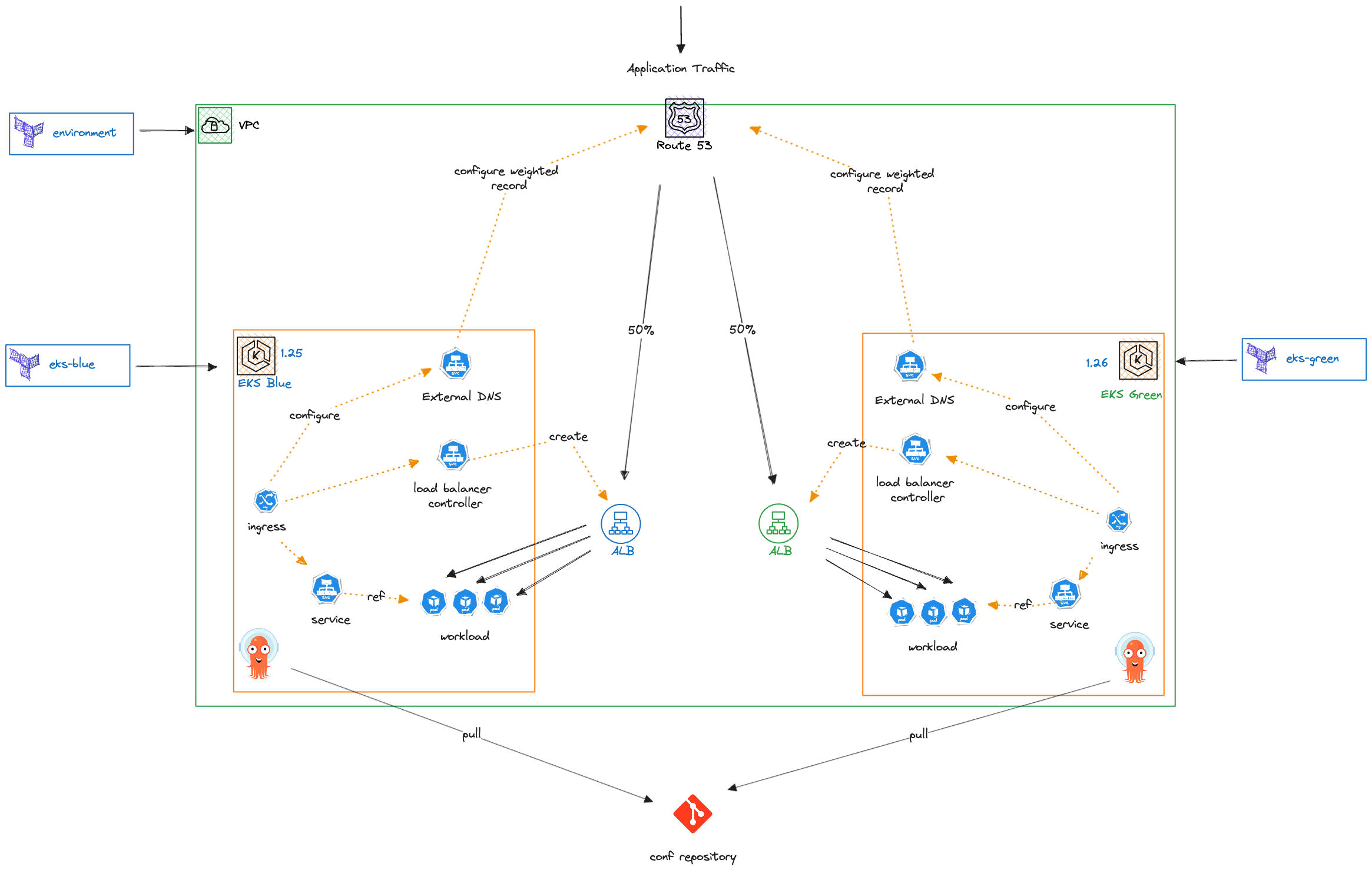

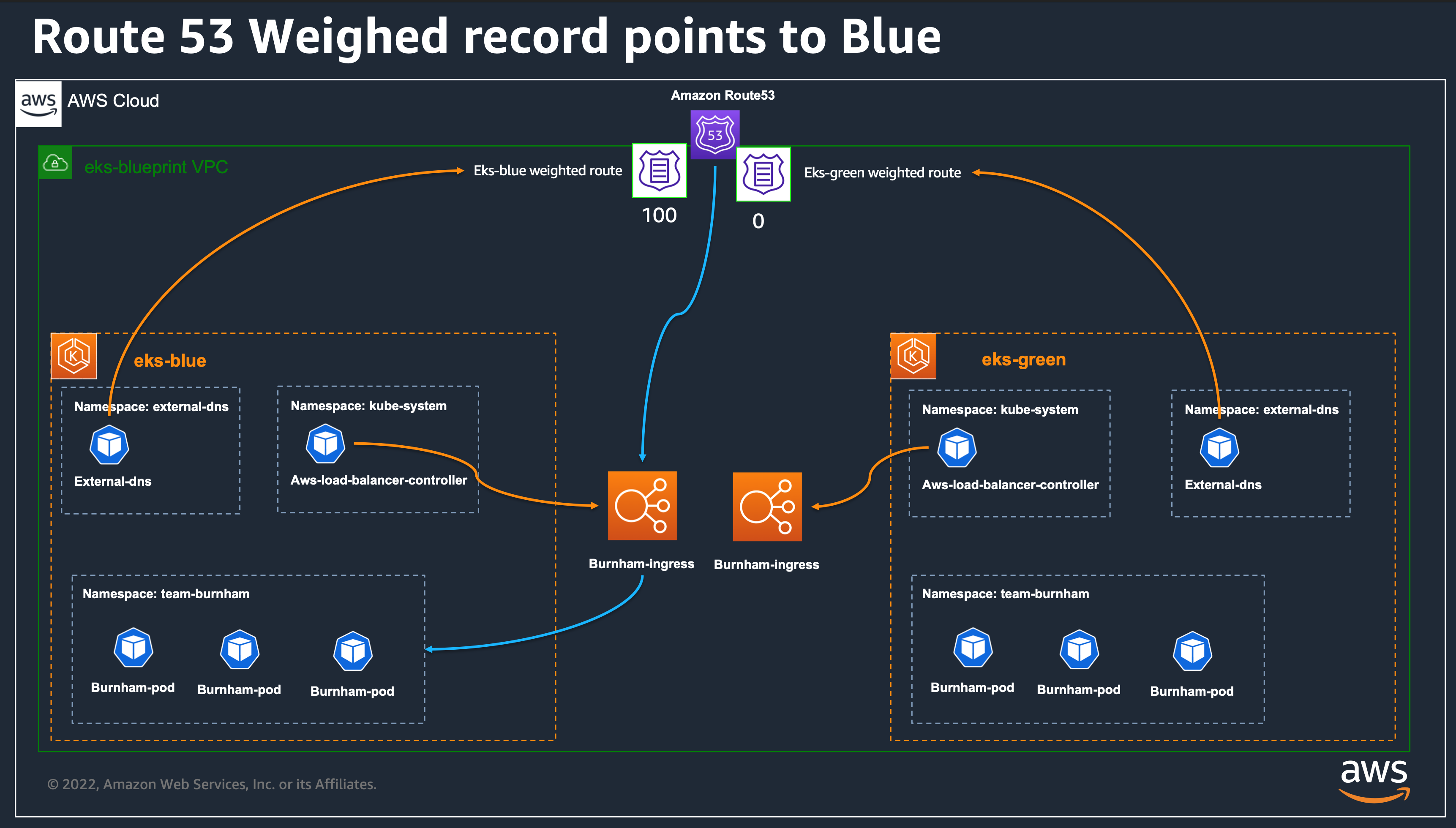

Blue/Green or Canary upgrade concept diagram

Solution Overview

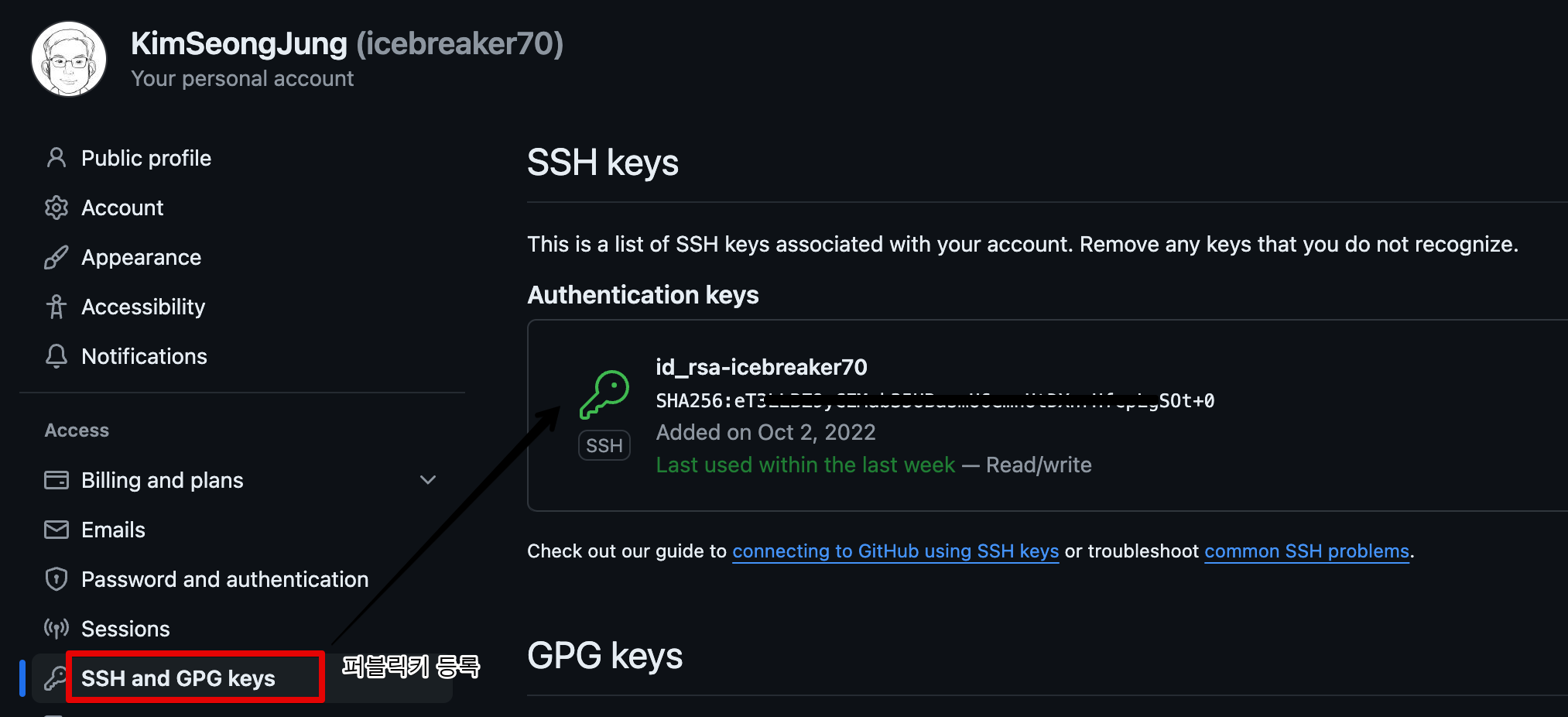

Setting up SSH communication for the GitHub and ArgoCD integration

Fork the eks-blue-green-upgrade and eks-blueprints-workloads repositories (needed to set up SSH communication between ArgoCD and GitHub)

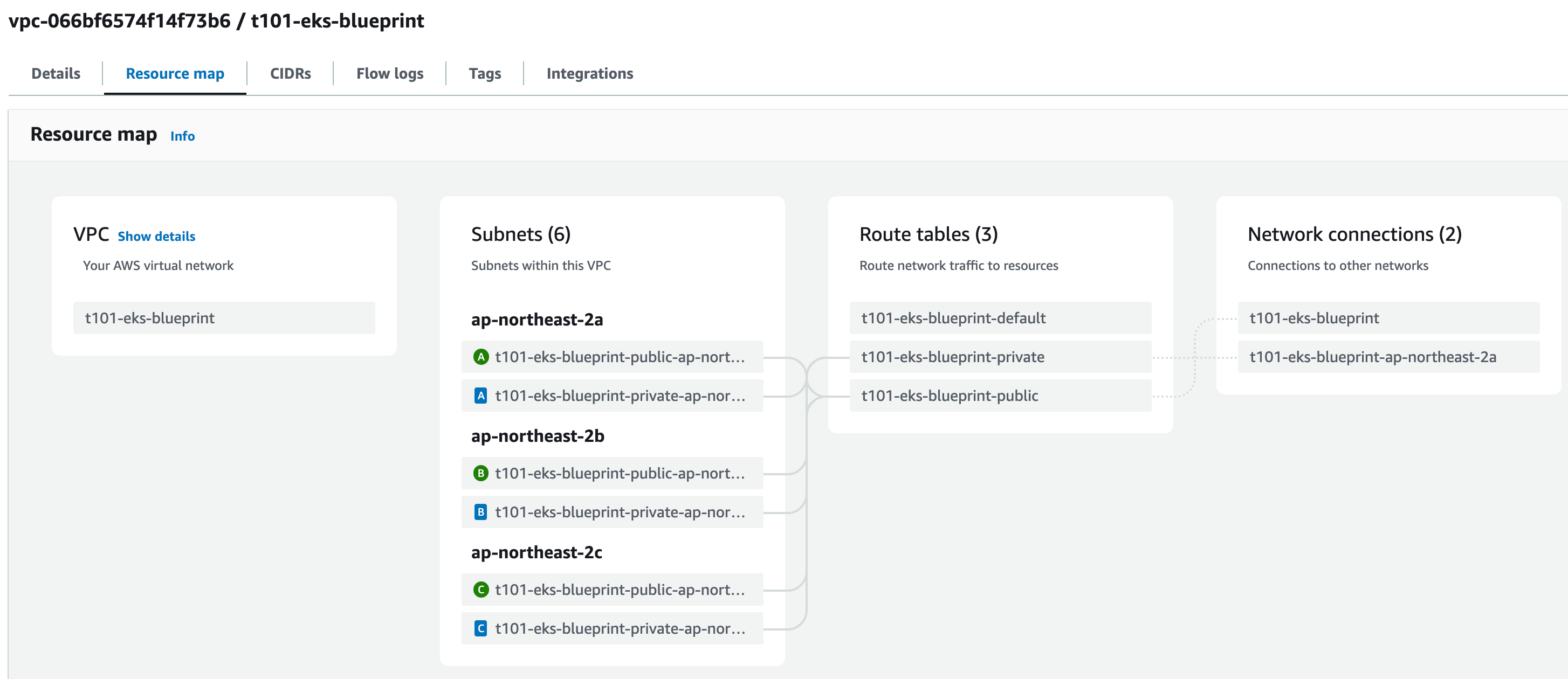

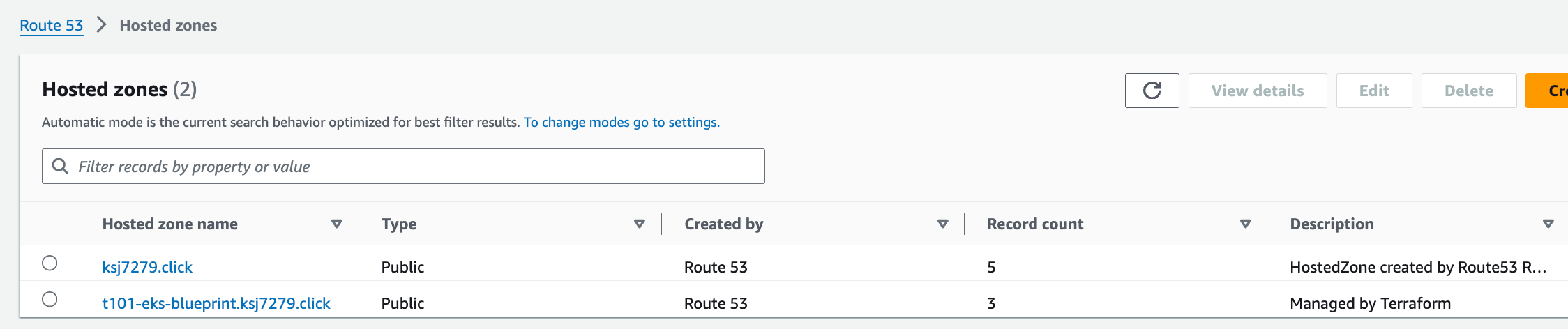

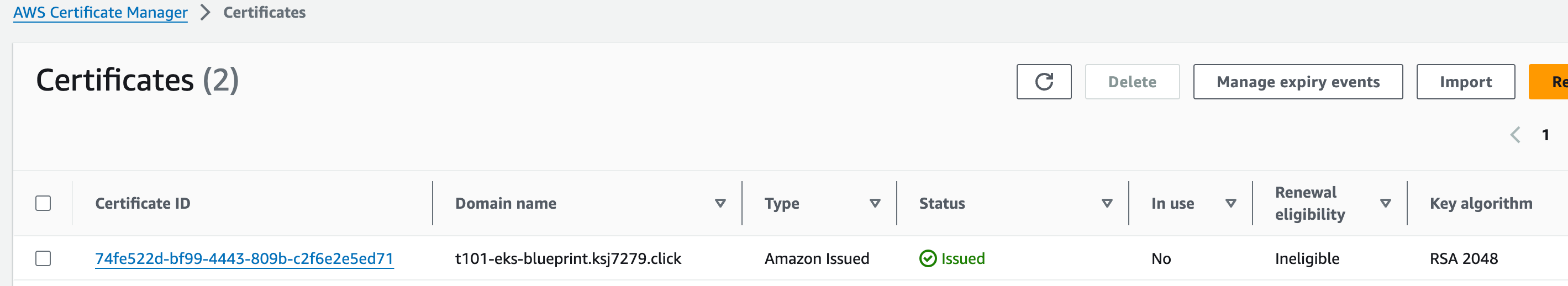

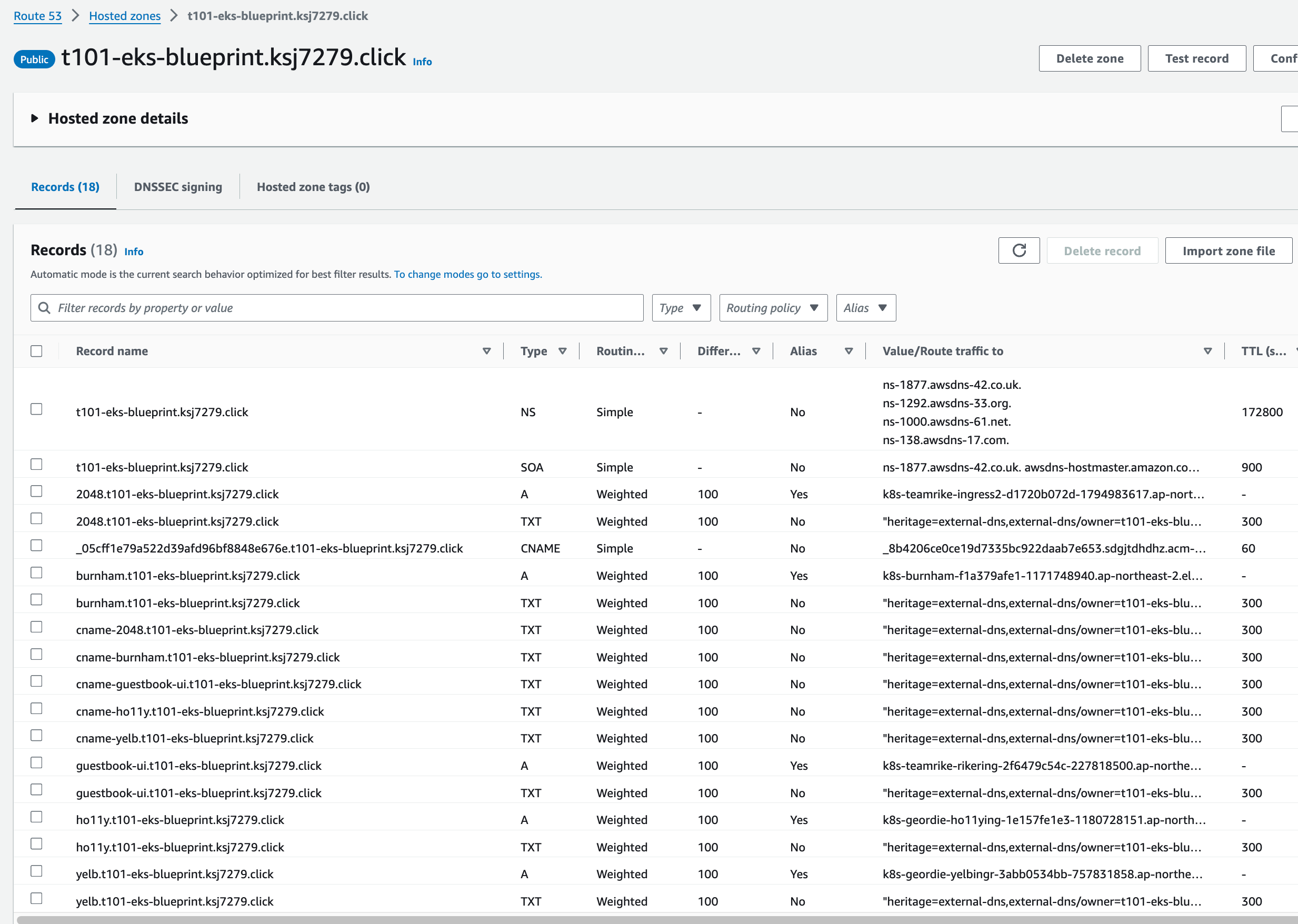

Create the environment VPC, Route 53 hosted zone, and ACM certificate

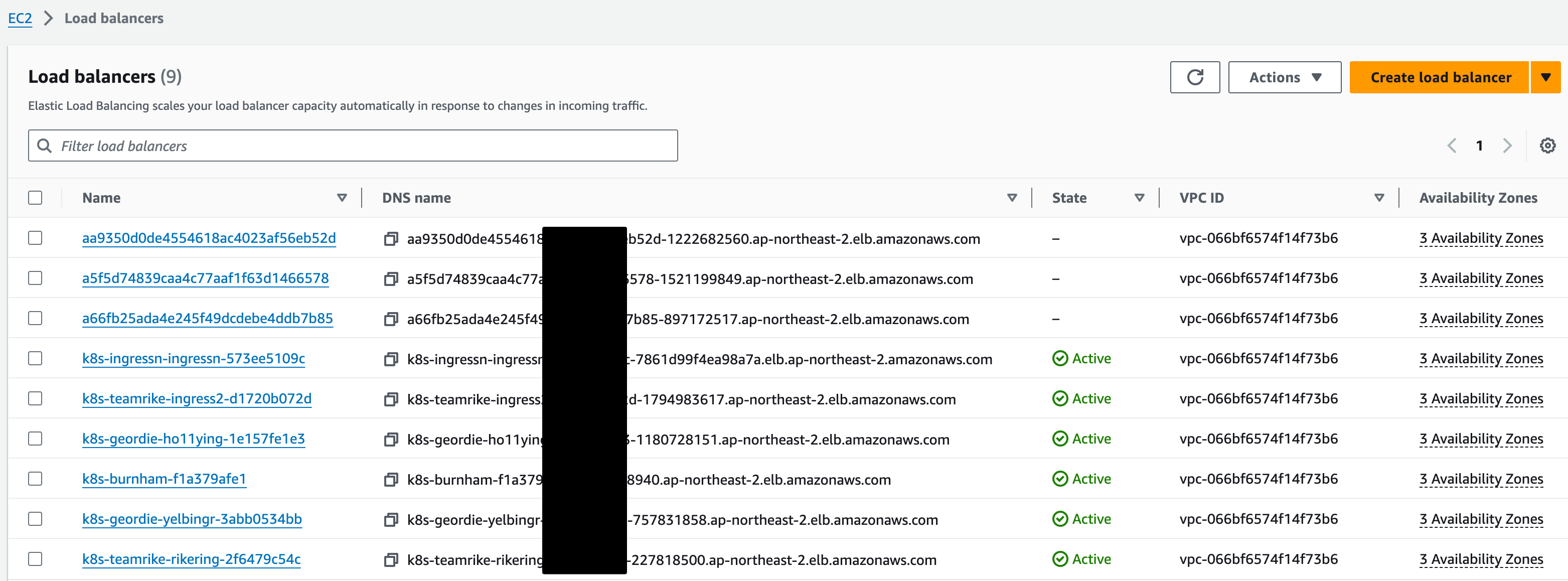

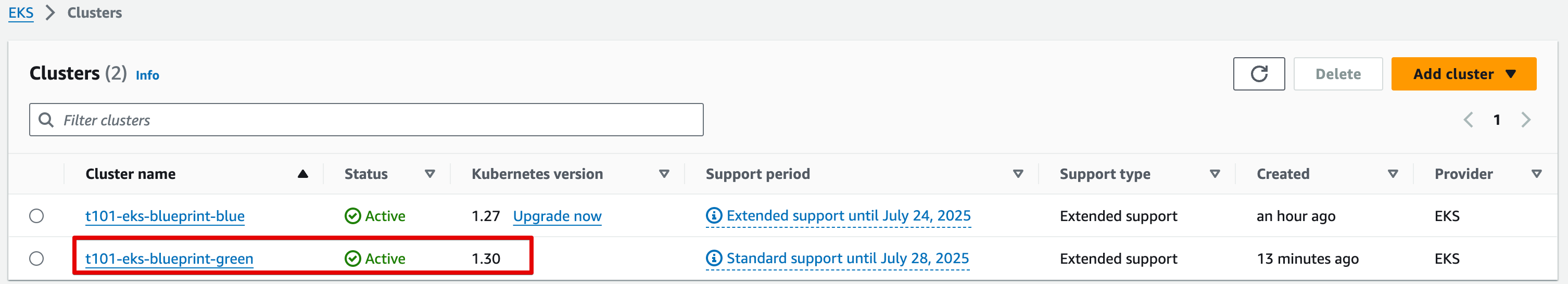

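Create EKS Blue cluster v1.27, deploy ArgoCD and the applications, and create the ALB and Route 53 records

Create EKS Green cluster v1.30, deploy ArgoCD and the applications, and create the ALB and Route 53 records

Gradually shift traffic from Blue to Green by adjusting Route 53 weights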
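The last step shifts Route 53 weight from Blue to Green in stages. As a sketch of what those stages could look like, the hypothetical helper `canary_schedule` below only prints blue/green weight pairs that always sum to 100. The step size (25% here) is an assumption, and how the weights are actually fed into Route 53 in this pattern should be checked in the eks-blue and eks-green code.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Blue/Green Route 53 weight schedule.
# Each output line is "<blue_weight> <green_weight>"; the pair sums to 100.
# The argument is the percentage moved to Green per stage (assumed, e.g. 25).
canary_schedule() {
  local step="${1:-25}"
  if (( step <= 0 || step > 100 )); then
    echo "step must be in 1..100" >&2
    return 1
  fi
  local green=0
  while (( green < 100 )); do
    echo "$((100 - green)) ${green}"
    green=$((green + step))
  done
  # Final stage: all traffic on Green, even if step does not divide 100.
  echo "0 100"
}

canary_schedule 25
```

Each stage would be applied only after the Green cluster looks healthy at the previous weight, which is what makes the rollout a canary rather than a single cut-over.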

Prerequisites

- Configure kubectl and the AWS CLI

- Install helm and the argocd CLI

- Register the Argo CD Helm repository

- $ helm repo add argo-cd https://argoproj.github.io/argo-helm

- $ helm repo update
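Before running anything, it can help to confirm that the CLIs listed above, plus terraform and git which later steps use, are on PATH. `check_tools` is a hypothetical helper, not part of the pattern:

```shell
#!/usr/bin/env bash
# Report which of the given CLIs are missing from PATH.
# Returns 0 when all are present, 1 otherwise (missing names go to stderr).
check_tools() {
  local missing=()
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      missing+=("$tool")
    fi
  done
  if (( ${#missing[@]} > 0 )); then
    echo "missing: ${missing[*]}" >&2
    return 1
  fi
  echo "all tools found"
}

# Tools used in this walkthrough.
check_tools terraform kubectl aws helm argocd git || echo "install the missing tools first"
```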

Key Blue/Green Terraform code

I downloaded the code from https://github.com/aws-ia/terraform-aws-eks-blueprints/tree/main/patterns/blue-green-upgrade, uploaded it to my GitHub, and modified it for my environment:

- Region: Seoul (ap-northeast-2)

- Route 53 domain: ksj7279.click (purchased under AWS Route 53 > Domains > Registered domains)

- hosted_zone_name: ksj7279.click

- environment_name: t101-eks-blueprint

- gitops_addons_org, gitops_workloads_org: git@github.com:icebreaker70

(Using https://github.com/aws-samples instead caused ArgoCD connection failures and Sync errors.)

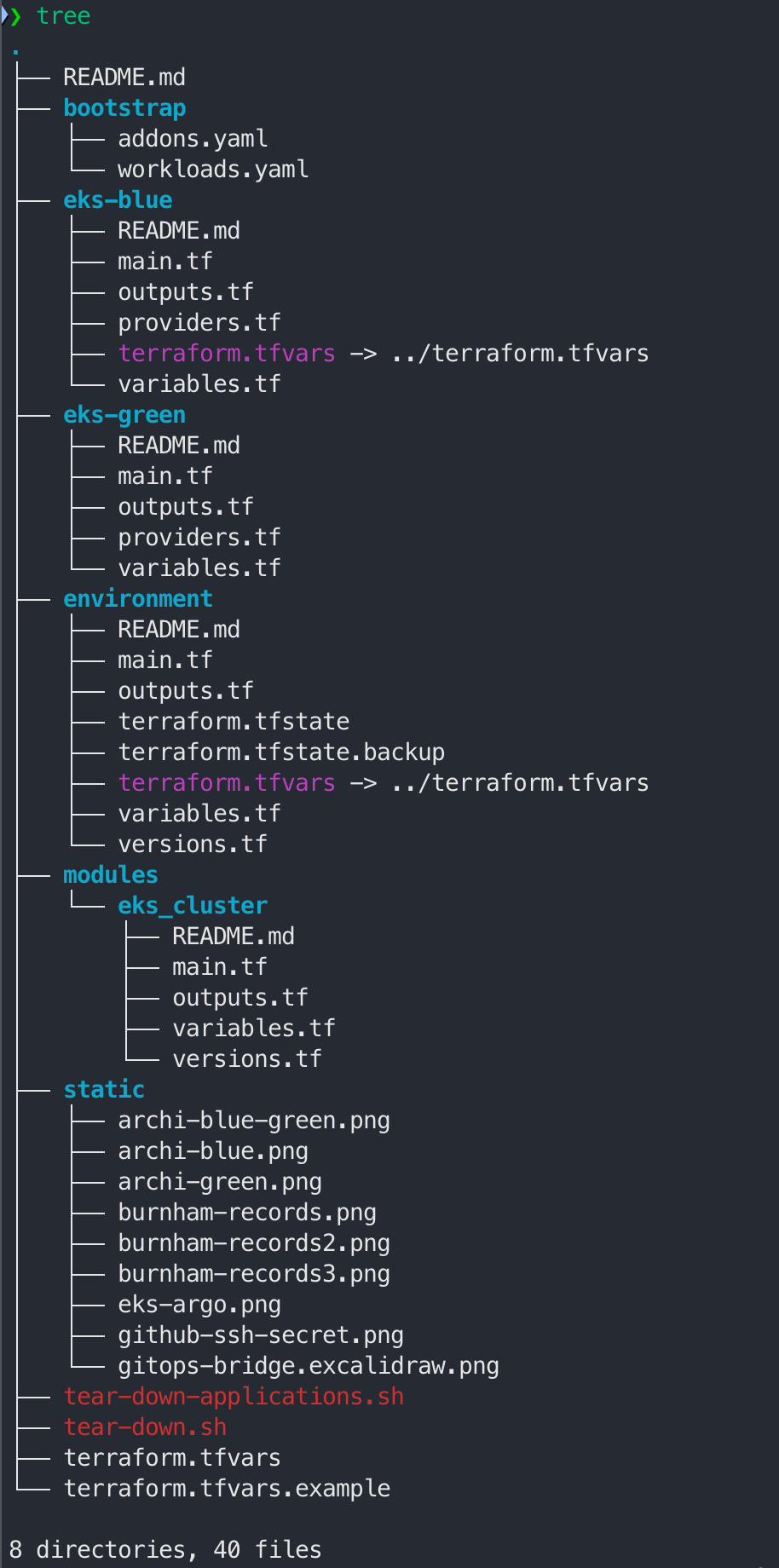

Download the Terraform code

$ git clone https://github.com/icebreaker70/eks-blue-green-upgrade.git

Directories & files

terraform.tfvars

Copy the example file, then edit it:

$ cp terraform.tfvars.example terraform.tfvars

# You should update the below variables

aws_region = "ap-northeast-2"

environment_name = "t101-eks-blueprint"

hosted_zone_name = "ksj7279.click" # your Existing Hosted Zone

eks_admin_role_name = "AWSReservedSSO_AWSAdministratorAccess_0a9b1bfd0c855752" # Additional role admin in the cluster (usually the role I use in the AWS console)

gitops_addons_org = "git@github.com:icebreaker70"

gitops_addons_repo = "eks-blueprints-add-ons"

gitops_addons_path = "argocd/bootstrap/control-plane/addons"

gitops_addons_basepath = "argocd/"

# EKS Blueprint Workloads ArgoCD App of App repository

gitops_workloads_org = "git@github.com:icebreaker70"

gitops_workloads_repo = "eks-blueprints-workloads"

gitops_workloads_revision = "main"

gitops_workloads_path = "envs/dev"

# Secrets Manager secret for the GitHub SSH key

aws_secret_manager_git_private_ssh_key_name = "github-blueprint-ssh-key"

Link the shared terraform.tfvars into each stack directory:

$ ln -s ../terraform.tfvars environment/terraform.tfvars

$ ln -s ../terraform.tfvars eks-blue/terraform.tfvars

$ ln -s ../terraform.tfvars eks-green/terraform.tfvars
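The three `ln -s` commands above can also be written as a loop. `link_tfvars` is an illustrative helper; it assumes it runs from the repository root that contains terraform.tfvars and the environment, eks-blue, and eks-green directories:

```shell
#!/usr/bin/env bash
# Link the shared terraform.tfvars into each stack directory,
# equivalent to the three `ln -s` commands above.
link_tfvars() {
  if [[ ! -f terraform.tfvars ]]; then
    echo "terraform.tfvars not found; run from the repository root" >&2
    return 1
  fi
  local dir
  for dir in environment eks-blue eks-green; do
    if [[ ! -d "$dir" ]]; then
      echo "skip: $dir not found" >&2
      continue
    fi
    # -f replaces an existing link, so rerunning is safe.
    ln -sf ../terraform.tfvars "$dir/terraform.tfvars"
  done
}

# Usage (from the repository root):
#   link_tfvars
```

Because the links are relative (`../terraform.tfvars`), they keep working if the whole repository is moved.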

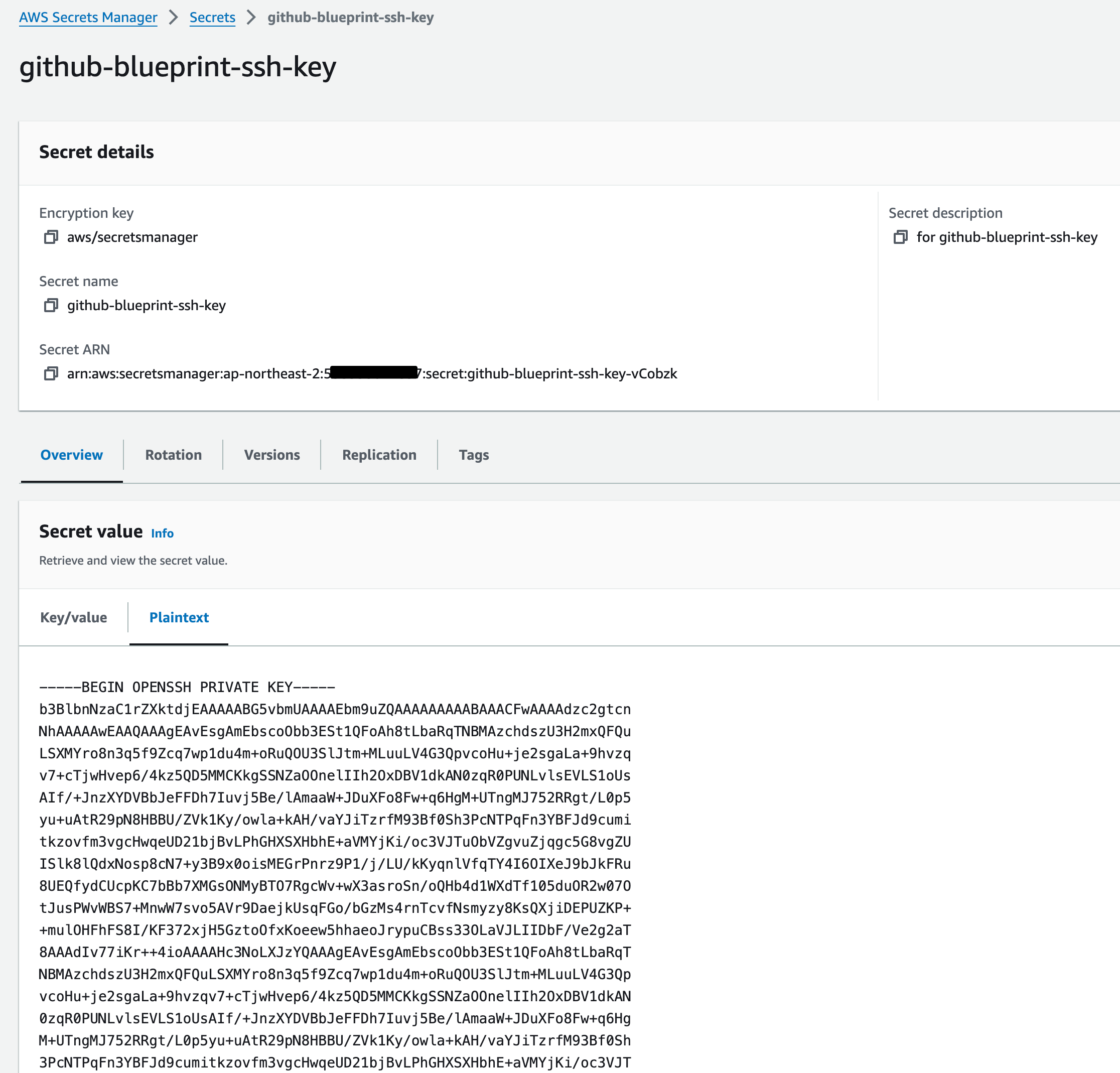

- Create an SSH key for GitHub and register it in AWS Secrets Manager

- How to create an OpenSSH key and connect it to GitHub: https://www.lainyzine.com/ko/article/creating-ssh-key-for-github/

- Store the private key in AWS Secrets Manager under the name github-blueprint-ssh-key

- Register the OpenSSH public key on GitHub
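The key steps above can be scripted roughly as follows. This is a sketch under assumptions: the key type (ed25519), the default key path, and the function name `store_git_ssh_key` are illustrative choices; only the secret name github-blueprint-ssh-key comes from terraform.tfvars. The private key is stored as a plain secret string, matching the "store the private key" step above.

```shell
#!/usr/bin/env bash
# Sketch: generate a GitHub SSH key for ArgoCD and store the private half in
# AWS Secrets Manager. With DRY_RUN=1 the commands are printed, not executed.
store_git_ssh_key() {
  local secret_name="${1:-github-blueprint-ssh-key}"
  local key_file="${2:-$HOME/.ssh/github-blueprint}"   # assumed path
  local region="${AWS_REGION:-ap-northeast-2}"
  local run=()
  [[ "${DRY_RUN:-0}" == "1" ]] && run=(echo)

  # Empty passphrase so ArgoCD can use the key non-interactively.
  "${run[@]}" ssh-keygen -t ed25519 -N "" -C "argocd" -f "$key_file" || return 1

  # file:// makes the AWS CLI read the parameter value from the file.
  "${run[@]}" aws secretsmanager create-secret \
    --region "$region" \
    --name "$secret_name" \
    --secret-string "file://$key_file" || return 1

  echo "next: register ${key_file}.pub as an SSH key on GitHub"
}
```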

Running the Blue/Green Terraform code

Create the environment resources

$ cd environment

$ terraform init

$ terraform plan -out tfplan

$ terraform apply tfplan
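The same init / plan / apply sequence is repeated for each directory, and the order matters: the clusters read the environment's VPC, hosted zone, and ArgoCD secret through data sources (`data.aws_vpc.vpc`, `data.aws_route53_zone.sub`, and `data.aws_secretsmanager_secret.argocd` in the eks-blue state list later in this post). A hypothetical wrapper using `terraform -chdir` could look like this:

```shell
#!/usr/bin/env bash
# Sketch: run init / plan / apply for each stack directory, in the order given.
# `apply tfplan` applies exactly the saved plan instead of re-planning.
# With DRY_RUN=1 the commands are printed instead of executed.
deploy_stacks() {
  local run=()
  [[ "${DRY_RUN:-0}" == "1" ]] && run=(echo)
  local stack
  for stack in "$@"; do
    # -chdir switches into the stack, so tfplan is written and read there.
    "${run[@]}" terraform -chdir="$stack" init -input=false &&
      "${run[@]}" terraform -chdir="$stack" plan -input=false -out=tfplan &&
      "${run[@]}" terraform -chdir="$stack" apply -input=false tfplan ||
      { echo "failed in $stack" >&2; return 1; }
  done
}

# Usage (from the repository root):
#   deploy_stacks environment eks-blue
#   deploy_stacks eks-green
```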

- terraform state list

data.aws_availability_zones.available

data.aws_route53_zone.root

aws_route53_record.ns

aws_route53_zone.sub

aws_secretsmanager_secret.argocd

aws_secretsmanager_secret_version.argocd

random_password.argocd

module.acm.aws_acm_certificate.this[0]

module.acm.aws_acm_certificate_validation.this[0]

module.acm.aws_route53_record.validation[0]

module.vpc.aws_default_network_acl.this[0]

module.vpc.aws_default_route_table.default[0]

module.vpc.aws_default_security_group.this[0]

module.vpc.aws_eip.nat[0]

module.vpc.aws_internet_gateway.this[0]

module.vpc.aws_nat_gateway.this[0]

module.vpc.aws_route.private_nat_gateway[0]

module.vpc.aws_route.public_internet_gateway[0]

module.vpc.aws_route_table.private[0]

module.vpc.aws_route_table.public[0]

module.vpc.aws_route_table_association.private[0]

module.vpc.aws_route_table_association.private[1]

module.vpc.aws_route_table_association.private[2]

module.vpc.aws_route_table_association.public[0]

module.vpc.aws_route_table_association.public[1]

module.vpc.aws_route_table_association.public[2]

module.vpc.aws_subnet.private[0]

module.vpc.aws_subnet.private[1]

module.vpc.aws_subnet.private[2]

module.vpc.aws_subnet.public[0]

module.vpc.aws_subnet.public[1]

module.vpc.aws_subnet.public[2]

module.vpc.aws_vpc.this[0]

- terraform output

aws_acm_certificate_status = "ISSUED"

aws_route53_zone = "t101-eks-blueprint.ksj7279.click"

vpc_id = "vpc-066bf6574f14f73b6"
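State lists like the one above get long quickly; the eks-blue list below has well over a hundred entries. A small awk filter can summarize `terraform state list` output by top-level module and by managed resource versus data source. `summarize_state` is an illustrative helper, not a Terraform feature:

```shell
#!/usr/bin/env bash
# Summarize `terraform state list` output read from stdin.
# Each output line is "<group> <kind> <count>": <group> is the first module
# segment (or "(root)"), <kind> is "data" or "resource".
summarize_state() {
  awk '
    NF == 0 { next }
    {
      if ($1 ~ /^module\./) {
        split($1, p, ".")
        group = "module." p[2]
      } else {
        group = "(root)"
      }
      kind = ($1 ~ /^data\./ || $1 ~ /\.data\./) ? "data" : "resource"
      n[group " " kind]++
    }
    END { for (k in n) print k, n[k] }
  ' | sort
}

# Usage (inside environment/, eks-blue/ or eks-green/):
#   terraform state list | summarize_state
```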

VPC Resource map

Route 53 Hosted zones

AWS Certificate Manager (ACM)

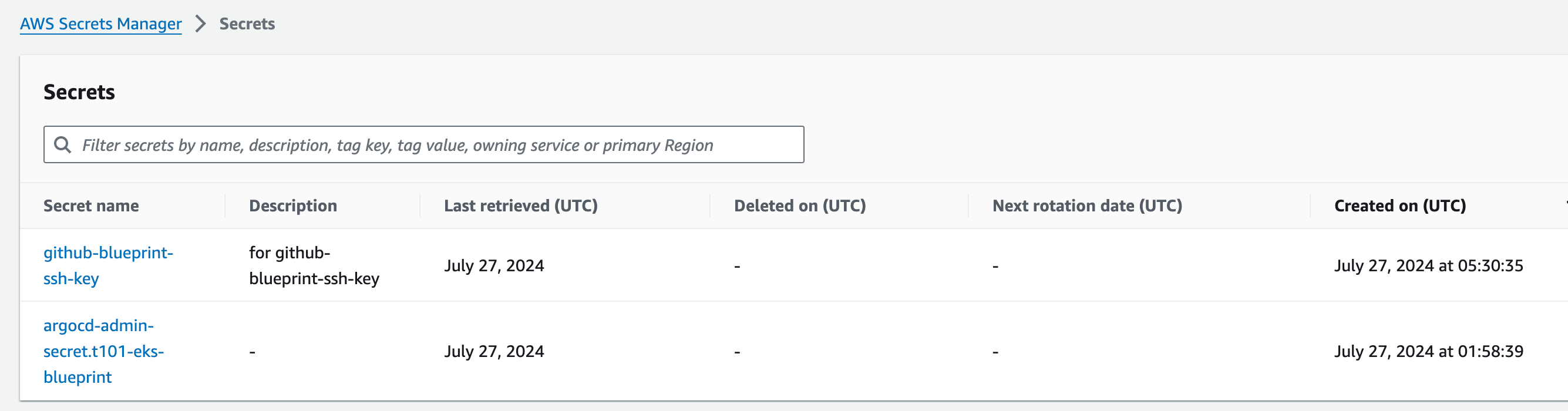

AWS Secrets Manager

- github-blueprint-ssh-key: stores the private key ArgoCD uses to connect to GitHub

- argocd-admin-secret.t101-eks-blueprint: stores the ArgoCD admin login password

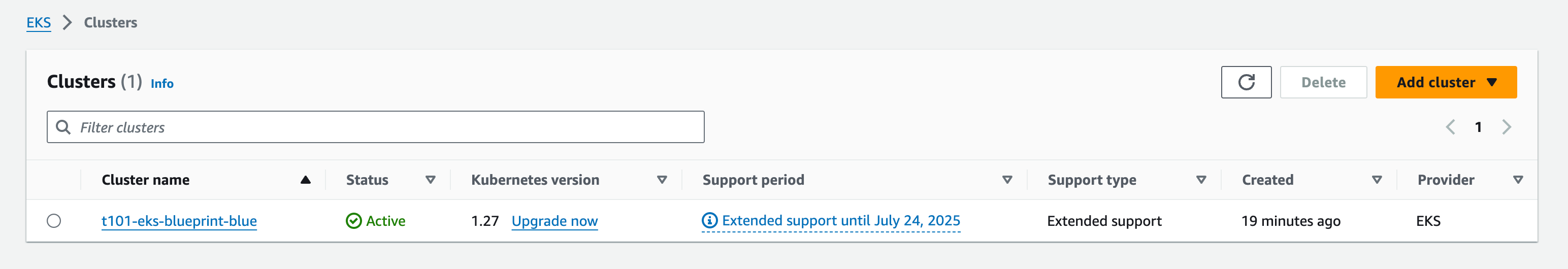

Create the eks-blue cluster

$ cd ../eks-blue

$ terraform init

$ terraform plan -out tfplan

$ terraform apply tfplan

EKS Blue cluster created (v1.27)

terraform state list

module.eks_cluster.data.aws_caller_identity.current

module.eks_cluster.data.aws_iam_role.eks_admin_role_name[0]

module.eks_cluster.data.aws_route53_zone.sub

module.eks_cluster.data.aws_secretsmanager_secret.argocd

module.eks_cluster.data.aws_secretsmanager_secret.workload_repo_secret

module.eks_cluster.data.aws_secretsmanager_secret_version.admin_password_version

module.eks_cluster.data.aws_secretsmanager_secret_version.workload_repo_secret

module.eks_cluster.data.aws_subnets.private

module.eks_cluster.data.aws_subnets.public

module.eks_cluster.data.aws_vpc.vpc

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-090394eb75fbdcf77"]

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-095d0191184343fe9"]

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-0bacf82946c91d40a"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-03917c8077029d05c"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-0690ffae4248076a8"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-082e948761a110503"]

module.eks_cluster.kubernetes_namespace.argocd

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_caller_identity.current

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.ebs_csi[0]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_partition.current

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_region.current

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_policy.ebs_csi[0]

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role.this[0]

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role_policy_attachment.ebs_csi[0]

module.eks_cluster.module.eks.data.aws_caller_identity.current

module.eks_cluster.module.eks.data.aws_iam_policy_document.assume_role_policy[0]

module.eks_cluster.module.eks.data.aws_iam_session_context.current

module.eks_cluster.module.eks.data.aws_partition.current

module.eks_cluster.module.eks.data.tls_certificate.this[0]

module.eks_cluster.module.eks.aws_cloudwatch_log_group.this[0]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["Blueprint"]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["GithubRepo"]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["karpenter.sh/discovery"]

module.eks_cluster.module.eks.aws_eks_cluster.this[0]

module.eks_cluster.module.eks.aws_iam_openid_connect_provider.oidc_provider[0]

module.eks_cluster.module.eks.aws_iam_policy.cluster_encryption[0]

module.eks_cluster.module.eks.aws_iam_role.this[0]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.cluster_encryption[0]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSClusterPolicy"]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSVPCResourceController"]

module.eks_cluster.module.eks.aws_security_group.cluster[0]

module.eks_cluster.module.eks.kubernetes_config_map_v1_data.aws_auth[0]

module.eks_cluster.module.eks.time_sleep.this[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_caller_identity.current

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["coredns"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["kube-proxy"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["vpc-cni"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.aws_load_balancer_controller[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.cert_manager[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_dns[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_secrets[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_partition.current

module.eks_cluster.module.eks_blueprints_addons.data.aws_region.current

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["kube-proxy"]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["vpc-cni"]

module.eks_cluster.module.eks_blueprints_addons.time_sleep.this

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_limit_range_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_namespace_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_resource_quota_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_role_binding_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_service_account_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_limit_range_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_namespace_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_resource_quota_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_role_binding_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_service_account_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_limit_range_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_namespace_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_resource_quota_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_role_binding_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_service_account_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_limit_range_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_namespace_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_resource_quota_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_role_binding_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_service_account_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_limit_range_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_namespace_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_resource_quota_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_role_binding_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_service_account_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_policy.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_role_policy_attachment.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_limit_range_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_namespace_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_resource_quota_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_service_account_v1.this["team-platform"]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_caller_identity.current

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.vpc_cni[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_partition.current

module.eks_cluster.module.vpc_cni_irsa.data.aws_region.current

module.eks_cluster.module.vpc_cni_irsa.aws_iam_policy.vpc_cni[0]

module.eks_cluster.module.vpc_cni_irsa.aws_iam_role.this[0]

module.eks_cluster.module.vpc_cni_irsa.aws_iam_role_policy_attachment.vpc_cni[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_caller_identity.current

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_iam_policy_document.assume_role_policy[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_partition.current

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_eks_node_group.this[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role.this[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_launch_template.this[0]

module.eks_cluster.module.eks.module.kms.data.aws_caller_identity.current

module.eks_cluster.module.eks.module.kms.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks.module.kms.data.aws_partition.current

module.eks_cluster.module.eks.module.kms.aws_kms_alias.this["cluster"]

module.eks_cluster.module.eks.module.kms.aws_kms_key.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_role_policy_attachment.this[0]

- terraform output

access_argocd = <<EOT

export KUBECONFIG="/tmp/t101-eks-blueprint-blue"

aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.t101-eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-burnham-20240727125110468500000006",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-riker-20240727125110468400000005",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-crystal-20240727125110482300000008",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-frontend-20240727125110482300000009",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-nodejs-20240727125110482300000007",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue --role-arn arn:aws:iam::538558617837:role/team-platform-20240727125110102800000002"

eks_cluster_id = "t101-eks-blueprint-blue"

gitops_metadata = <sensitive>

- Configure kubeconfig and list nodes and Pods

$ aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-blue

Updated context arn:aws:eks:ap-northeast-2:538558617837:cluster/t101-eks-blueprint-blue in /tmp/t101-eks-blueprint-blue

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-43-110.ap-northeast-2.compute.internal Ready <none> 18m v1.27.12-eks-ae9a62a

ip-10-0-47-132.ap-northeast-2.compute.internal Ready <none> 18m v1.27.12-eks-ae9a62a

ip-10-0-49-150.ap-northeast-2.compute.internal Ready <none> 18m v1.27.12-eks-ae9a62a
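The node listing confirms that the Blue cluster runs kubelet v1.27. The two hypothetical helpers below make this check, and a check of the Pod listing that follows, repeatable on both clusters. `check_node_versions` compares each node's `status.nodeInfo.kubeletVersion` with an expected minor version. `unhealthy_pods` filters `kubectl get pod -A` output down to Pods that are neither Completed nor Running with all containers ready. Both assume the current kubeconfig points at the cluster being checked.

```shell
#!/usr/bin/env bash
# Hypothetical post-deploy checks; both assume the current kubeconfig
# (e.g. /tmp/t101-eks-blueprint-blue) points at the cluster being checked.

# Fail unless every node's kubelet matches the expected minor version,
# e.g. "1.27" for eks-blue or "1.30" for eks-green.
check_node_versions() {
  local expected="$1" versions v bad=0
  versions="$(kubectl get nodes -o jsonpath='{.items[*].status.nodeInfo.kubeletVersion}')" || return 1
  if [[ -z "$versions" ]]; then
    echo "no nodes found" >&2
    return 1
  fi
  for v in $versions; do
    # kubelet versions look like v1.27.12-eks-ae9a62a
    if [[ "$v" != "v${expected}."* ]]; then
      echo "unexpected kubelet version: $v" >&2
      bad=1
    fi
  done
  return "$bad"
}

# Read `kubectl get pod -A` output on stdin and print only unhealthy Pods:
# STATUS other than Running/Completed, or Running with READY such as 1/2.
# Assumed columns: NAMESPACE NAME READY STATUS RESTARTS AGE
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, r, "/")
    if ($4 != "Running" && $4 != "Completed") print
    else if ($4 == "Running" && r[1] != r[2]) print
  }'
}

# Usage:
#   check_node_versions 1.27 && kubectl get pod -A | unhealthy_pods
```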

$ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd argo-cd-argocd-application-controller-0 1/1 Running 0 11m

argocd argo-cd-argocd-applicationset-controller-86856f4c89-m2qhf 1/1 Running 0 12m

argocd argo-cd-argocd-dex-server-b886f88f5-4zpmt 1/1 Running 0 12m

argocd argo-cd-argocd-notifications-controller-6975775ff6-2x84n 1/1 Running 0 12m

argocd argo-cd-argocd-redis-68d9d55bd7-fmf5t 1/1 Running 0 12m

argocd argo-cd-argocd-repo-server-bffbbc684-5d2gz 1/1 Running 0 10m

argocd argo-cd-argocd-repo-server-bffbbc684-gkcz5 1/1 Running 0 11m

argocd argo-cd-argocd-repo-server-bffbbc684-lqzxj 1/1 Running 0 8m32s

argocd argo-cd-argocd-server-6fdd674d8-g4rqg 1/1 Running 0 11m

cert-manager cert-manager-5468bbb5fd-9j97m 1/1 Running 0 11m

cert-manager cert-manager-cainjector-6f455799dd-f664r 1/1 Running 0 11m

cert-manager cert-manager-webhook-54bd8d56d6-6fll7 1/1 Running 0 11m

external-dns external-dns-5b45f79bbb-vsdll 1/1 Running 0 10m

external-secrets external-secrets-777fdd5cfd-b8dsd 1/1 Running 0 11m

external-secrets external-secrets-cert-controller-6cf5f89bbd-45bbb 1/1 Running 0 11m

external-secrets external-secrets-webhook-7b675c5b79-m874v 1/1 Running 0 11m

geolocationapi geolocationapi-6f4c677b78-7s2jm 2/2 Running 0 11m

geolocationapi geolocationapi-6f4c677b78-9rlpk 2/2 Running 0 11m

geordie downstream0-5977767554-k88z2 1/1 Running 0 11m

geordie downstream1-7b69c454d7-r7t9l 1/1 Running 0 11m

geordie frontend-6ccb9cb849-8574j 1/1 Running 0 11m

geordie redis-server-6d68bf9898-fzcrs 1/1 Running 0 11m

geordie yelb-appserver-5559878686-krrsn 1/1 Running 0 11m

geordie yelb-db-bb8c8545-4d22h 1/1 Running 0 11m

geordie yelb-ui-568d5f57f-9tzrg 1/1 Running 0 11m

ingress-nginx ingress-nginx-controller-576dfb4fcd-25gk4 1/1 Running 0 10m

ingress-nginx ingress-nginx-controller-576dfb4fcd-njx27 1/1 Running 0 10m

ingress-nginx ingress-nginx-controller-576dfb4fcd-z4mn7 1/1 Running 0 10m

kube-system aws-load-balancer-controller-5d4f67876c-9mfmq 1/1 Running 0 11m

kube-system aws-load-balancer-controller-5d4f67876c-fpnhz 1/1 Running 0 11m

kube-system aws-node-8bzkf 2/2 Running 0 18m

kube-system aws-node-wskbg 2/2 Running 0 18m

kube-system aws-node-z9rrp 2/2 Running 0 18m

kube-system coredns-6b7b8f567d-htgf9 1/1 Running 0 19m

kube-system coredns-6b7b8f567d-vnkdf 1/1 Running 0 19m

kube-system kube-proxy-4rmr2 1/1 Running 0 18m

kube-system kube-proxy-w75bn 1/1 Running 0 18m

kube-system kube-proxy-xb69h 1/1 Running 0 18m

kube-system metrics-server-76c55fc4fc-wgjgb 1/1 Running 0 11m

kyverno kyverno-admission-controller-79dcbc777c-s7846 1/1 Running 0 9m15s

kyverno kyverno-background-controller-67f4b647d7-rrxn7 1/1 Running 0 9m15s

kyverno kyverno-cleanup-admission-reports-28701430-n8kh4 0/1 Completed 0 71s

kyverno kyverno-cleanup-cluster-admission-reports-28701430-x87cp 0/1 Completed 0 71s

kyverno kyverno-cleanup-controller-566f7bc8c-q94mq 1/1 Running 0 9m15s

kyverno kyverno-reports-controller-6f96648477-rsb8d 1/1 Running 0 9m15s

team-burnham burnham-579d495f96-2dcx9 1/1 Running 0 11m

team-burnham burnham-579d495f96-8fj82 1/1 Running 0 11m

team-burnham burnham-579d495f96-qzz67 1/1 Running 0 11m

team-burnham nginx-68f46dfb7c-zhfkc 1/1 Running 0 11m

team-riker deployment-2048-5dd84b4c59-b7hxj 1/1 Running 0 11m

team-riker deployment-2048-5dd84b4c59-cskxd 1/1 Running 0 11m

team-riker deployment-2048-5dd84b4c59-fcg2c 1/1 Running 0 11m

team-riker guestbook-ui-dc9c64bb8-99kx2 1/1 Running 0 11m

- Check the ArgoCD URL and account

$ echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

$ echo "ArgoCD Username: admin"

$ echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.t101-eks-blueprint --query SecretString --output text --region ap-northeast-2)"

ArgoCD URL: https://aa9*******4023af56eb52d-1222682560.ap-northeast-2.elb.amazonaws.com

ArgoCD Username: admin

ArgoCD Password: Dr0NXLlBx$j$nY?W-

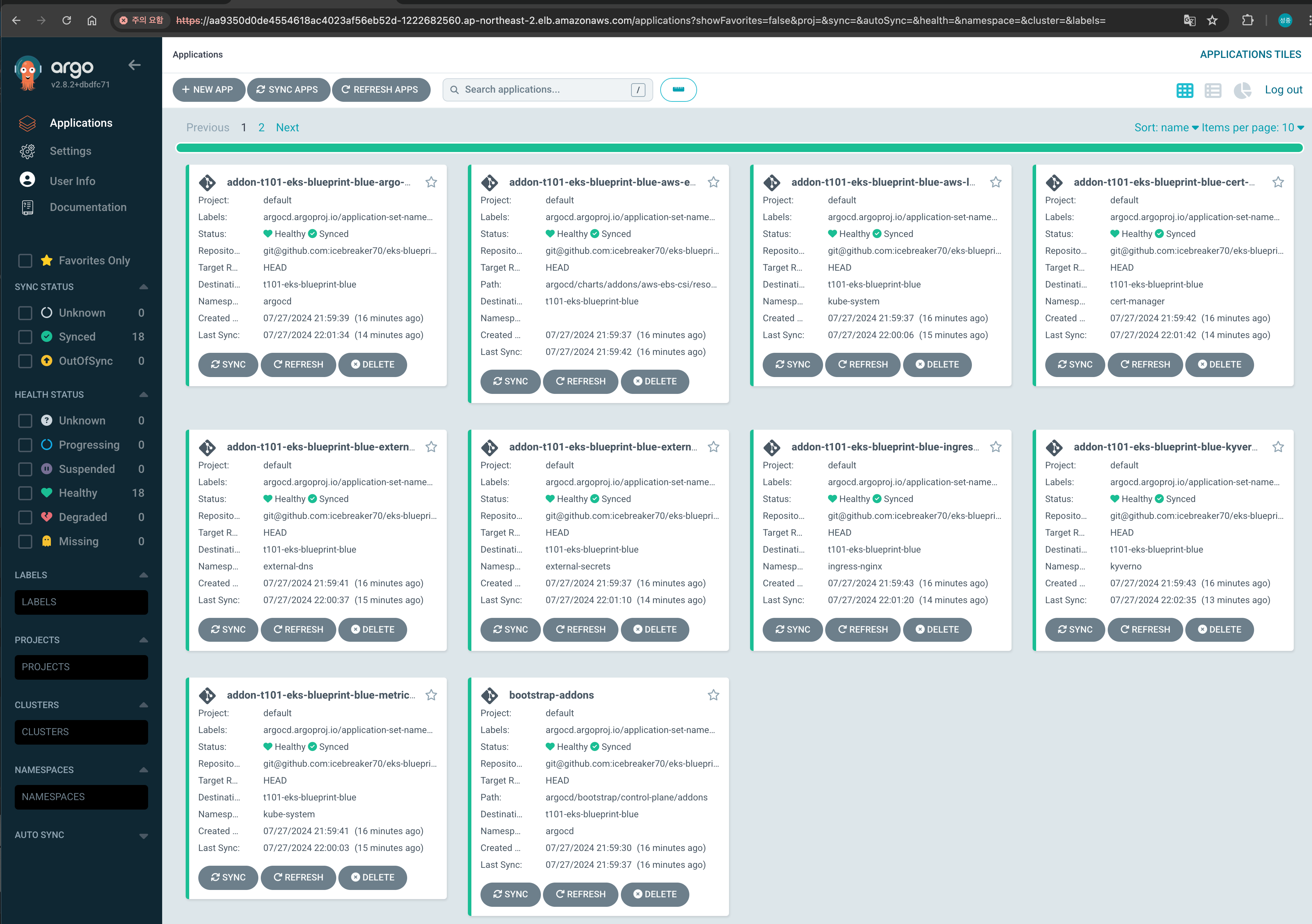

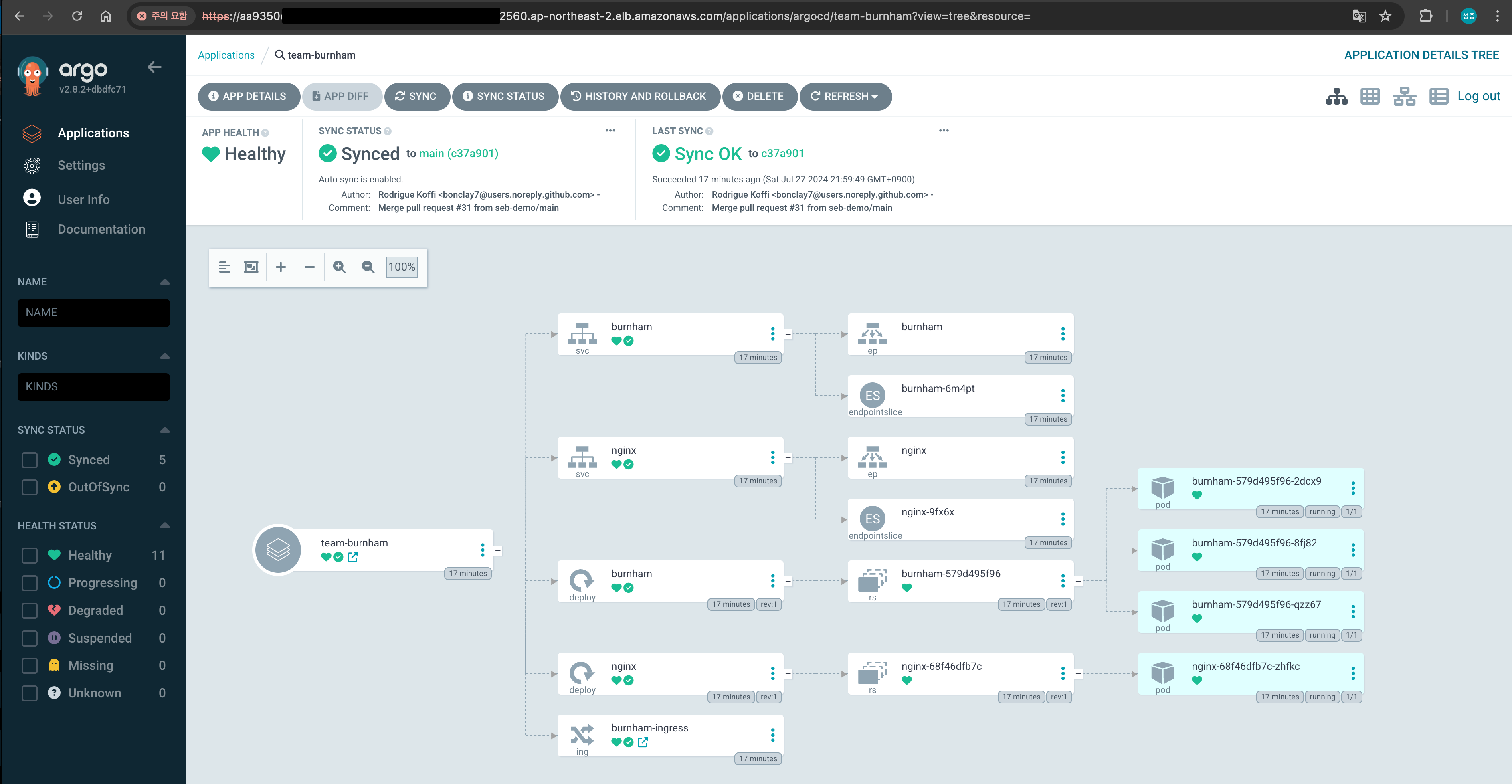

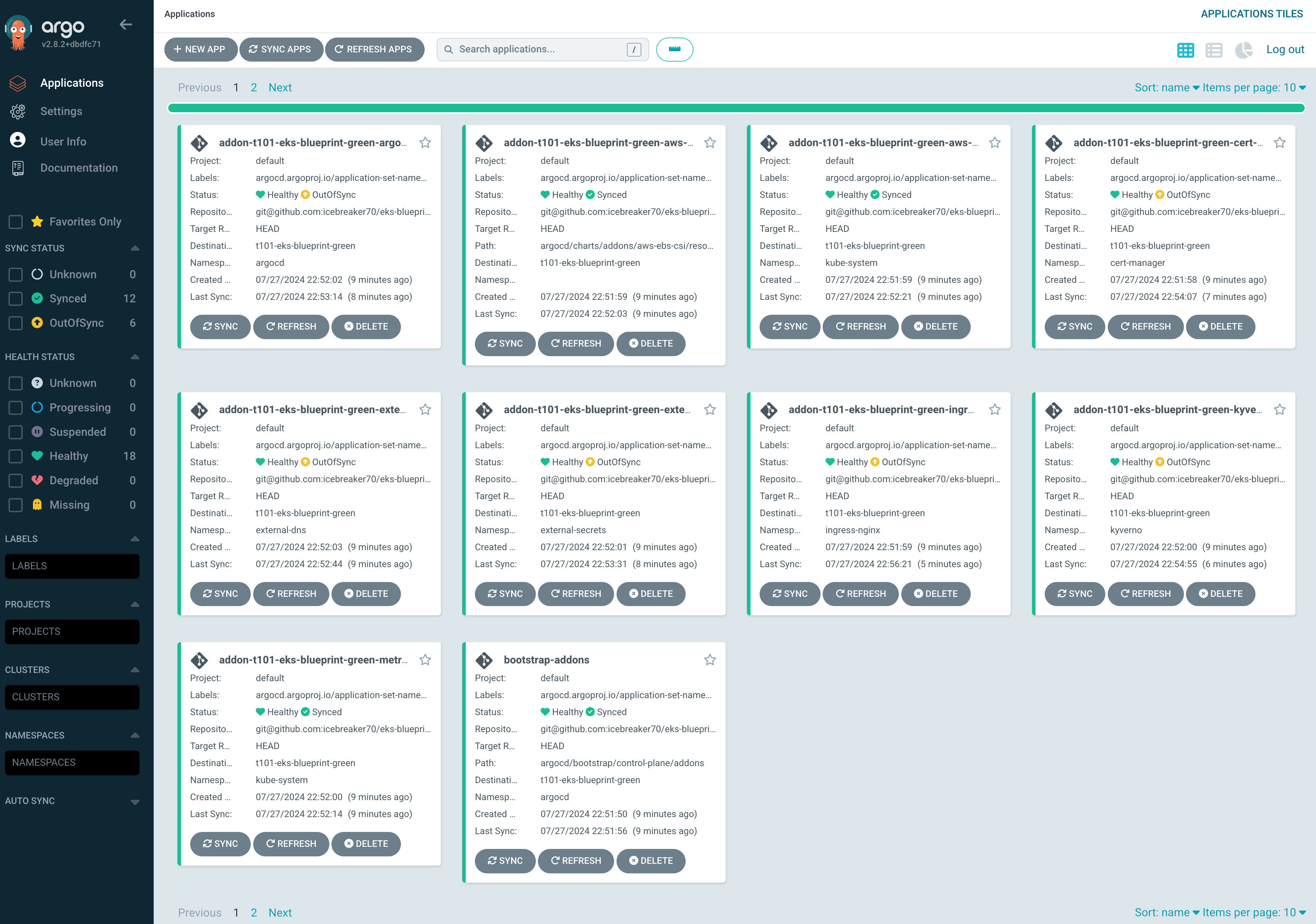

Accessing the ArgoCD UI

Creating the eks-green cluster

$ cd eks-green

$ terraform init

$ terraform plan -out tfplan

$ terraform apply tfplan
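The three commands above can be wrapped in a small function so that the apply step always uses the plan file that was just reviewed (a saved plan is applied without re-planning or prompting). This is a minimal POSIX sh sketch; `tf_deploy` is a hypothetical name, and it assumes the directory layout of this walkthrough (`environment`, `eks-blue`, `eks-green` as sibling directories).

```shell
# Sketch: init -> plan -> apply for one Terraform directory, applying exactly
# the saved plan (tfplan). tf_deploy is a hypothetical helper name.
tf_deploy() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "tf_deploy: no such directory: $dir" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is unchanged.
  (
    cd "$dir" || exit 1
    terraform init -input=false || exit 1
    terraform plan -input=false -out tfplan || exit 1
    # Passing the saved plan file applies it as reviewed, without a new plan.
    terraform apply tfplan
  )
}
```

Usage, from the directory that contains `eks-green`: `tf_deploy eks-green`.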

- EKS Green cluster (v1.30) created

- terraform state list

module.eks_cluster.data.aws_caller_identity.current

module.eks_cluster.data.aws_iam_role.eks_admin_role_name[0]

module.eks_cluster.data.aws_route53_zone.sub

module.eks_cluster.data.aws_secretsmanager_secret.argocd

module.eks_cluster.data.aws_secretsmanager_secret.workload_repo_secret

module.eks_cluster.data.aws_secretsmanager_secret_version.admin_password_version

module.eks_cluster.data.aws_secretsmanager_secret_version.workload_repo_secret

module.eks_cluster.data.aws_subnets.private

module.eks_cluster.data.aws_subnets.public

module.eks_cluster.data.aws_vpc.vpc

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-090394eb75fbdcf77"]

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-095d0191184343fe9"]

module.eks_cluster.aws_ec2_tag.private_subnets["subnet-0bacf82946c91d40a"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-03917c8077029d05c"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-0690ffae4248076a8"]

module.eks_cluster.aws_ec2_tag.public_subnets["subnet-082e948761a110503"]

module.eks_cluster.kubernetes_namespace.argocd

module.eks_cluster.kubernetes_secret.git_secrets["git-addons"]

module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_caller_identity.current

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.ebs_csi[0]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_partition.current

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_region.current

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_policy.ebs_csi[0]

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role.this[0]

module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role_policy_attachment.ebs_csi[0]

module.eks_cluster.module.eks.data.aws_caller_identity.current

module.eks_cluster.module.eks.data.aws_iam_policy_document.assume_role_policy[0]

module.eks_cluster.module.eks.data.aws_iam_session_context.current

module.eks_cluster.module.eks.data.aws_partition.current

module.eks_cluster.module.eks.data.tls_certificate.this[0]

module.eks_cluster.module.eks.aws_cloudwatch_log_group.this[0]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["Blueprint"]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["GithubRepo"]

module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["karpenter.sh/discovery"]

module.eks_cluster.module.eks.aws_eks_cluster.this[0]

module.eks_cluster.module.eks.aws_iam_openid_connect_provider.oidc_provider[0]

module.eks_cluster.module.eks.aws_iam_policy.cluster_encryption[0]

module.eks_cluster.module.eks.aws_iam_role.this[0]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.cluster_encryption[0]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSClusterPolicy"]

module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSVPCResourceController"]

module.eks_cluster.module.eks.aws_security_group.cluster[0]

module.eks_cluster.module.eks.kubernetes_config_map_v1_data.aws_auth[0]

module.eks_cluster.module.eks.time_sleep.this[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_caller_identity.current

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["coredns"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["kube-proxy"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["vpc-cni"]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.aws_load_balancer_controller[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.cert_manager[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_dns[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_secrets[0]

module.eks_cluster.module.eks_blueprints_addons.data.aws_partition.current

module.eks_cluster.module.eks_blueprints_addons.data.aws_region.current

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["kube-proxy"]

module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["vpc-cni"]

module.eks_cluster.module.eks_blueprints_addons.time_sleep.this

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_limit_range_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_namespace_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_resource_quota_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_role_binding_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_service_account_v1.this["team-burnham"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_limit_range_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_namespace_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_resource_quota_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_role_binding_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_service_account_v1.this["team-riker"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_limit_range_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_namespace_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_resource_quota_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_role_binding_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_service_account_v1.this["ecsdemo-crystal"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_limit_range_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_namespace_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_resource_quota_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_role_binding_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-frontend"].kubernetes_service_account_v1.this["ecsdemo-frontend"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_cluster_role_binding_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_cluster_role_v1.this[0]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_limit_range_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_namespace_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_resource_quota_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_role_binding_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-nodejs"].kubernetes_service_account_v1.this["ecsdemo-nodejs"]

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_policy.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_platform_teams.aws_iam_role_policy_attachment.admin[0]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_limit_range_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_namespace_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_resource_quota_v1.this["team-platform"]

module.eks_cluster.module.eks_blueprints_platform_teams.kubernetes_service_account_v1.this["team-platform"]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.argocd[0]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["addons"]

module.eks_cluster.module.gitops_bridge_bootstrap.helm_release.bootstrap["workloads"]

module.eks_cluster.module.gitops_bridge_bootstrap.kubernetes_secret_v1.cluster[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_caller_identity.current

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.vpc_cni[0]

module.eks_cluster.module.vpc_cni_irsa.data.aws_partition.current

module.eks_cluster.module.vpc_cni_irsa.data.aws_region.current

module.eks_cluster.module.vpc_cni_irsa.aws_iam_policy.vpc_cni[0]

module.eks_cluster.module.vpc_cni_irsa.aws_iam_role.this[0]

module.eks_cluster.module.vpc_cni_irsa.aws_iam_role_policy_attachment.vpc_cni[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_caller_identity.current

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_iam_policy_document.assume_role_policy[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_partition.current

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_eks_node_group.this[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role.this[0]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_iam_role_policy_attachment.this["arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].aws_launch_template.this[0]

module.eks_cluster.module.eks.module.kms.data.aws_caller_identity.current

module.eks_cluster.module.eks.module.kms.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks.module.kms.data.aws_partition.current

module.eks_cluster.module.eks.module.kms.aws_kms_alias.this["cluster"]

module.eks_cluster.module.eks.module.kms.aws_kms_key.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.aws_iam_role_policy_attachment.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_caller_identity.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.assume[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_partition.current[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_policy.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_role.this[0]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.aws_iam_role_policy_attachment.this[0]

- terraform output

access_argocd = <<EOT

export KUBECONFIG="/tmp/t101-eks-blueprint-green"

aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green

echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

echo "ArgoCD Username: admin"

echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.t101-eks-blueprint --query SecretString --output text --region ap-northeast-2)"

EOT

configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green"

eks_blueprints_dev_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-burnham-20240727134728185800000014",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-riker-20240727134728186300000017",

]

eks_blueprints_ecsdemo_teams_configure_kubectl = [

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-crystal-20240727134728186300000018",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-frontend-20240727134728186300000016",

"aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-ecsdemo-nodejs-20240727134729059600000019",

]

eks_blueprints_platform_teams_configure_kubectl = "aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green --role-arn arn:aws:iam::538558617837:role/team-platform-20240727134728149400000012"

eks_cluster_id = "t101-eks-blueprint-green"

gitops_metadata = <sensitive>

- Configure kubeconfig and list nodes and pods

$ aws eks --region ap-northeast-2 update-kubeconfig --name t101-eks-blueprint-green

Added new context arn:aws:eks:ap-northeast-2:538558617837:cluster/t101-eks-blueprint-green to /tmp/t101-eks-blueprint-blue
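Note that the green cluster's context was written to `/tmp/t101-eks-blueprint-blue`: when `KUBECONFIG` is set, `aws eks update-kubeconfig` writes to the first file it lists, and the variable apparently still pointed at the blue cluster's file. The green stack's `access_argocd` output exports `KUBECONFIG="/tmp/t101-eks-blueprint-green"` first for this reason. Below is a minimal sketch of a helper that keeps one kubeconfig file per cluster; `use_cluster` is hypothetical, and the `t101-eks-blueprint-<color>` naming and `ap-northeast-2` region are taken from the outputs above.

```shell
# Sketch: switch kubectl to the blue or green cluster, each with its own
# kubeconfig file. use_cluster is a hypothetical helper name.
use_cluster() {
  local color="$1"
  case "$color" in
    blue|green) ;;
    *) echo "usage: use_cluster blue|green" >&2; return 1 ;;
  esac
  # update-kubeconfig writes to the first file in $KUBECONFIG when it is set,
  # so point it at the per-cluster file before updating.
  export KUBECONFIG="/tmp/t101-eks-blueprint-${color}"
  aws eks --region ap-northeast-2 update-kubeconfig --name "t101-eks-blueprint-${color}"
}
```

Because the function exports a variable, it has to run in the current shell (for example after `. ./helpers.sh`), not as a separate script.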

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-40-96.ap-northeast-2.compute.internal Ready <none> 7m3s v1.30.0-eks-036c24b

ip-10-0-44-46.ap-northeast-2.compute.internal Ready <none> 7m8s v1.30.0-eks-036c24b

ip-10-0-51-217.ap-northeast-2.compute.internal Ready <none> 7m4s v1.30.0-eks-036c24b
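The node listing above and the pod listing that follows are checked by eye. A minimal sketch that flags anything unexpected instead, reading kubectl's default table output on stdin: nodes that are not `Ready` or not on the expected minor version, and pods that are neither `Completed` nor fully ready and `Running`. The function names are hypothetical, and the pod check assumes the `-A` column layout (NAMESPACE first).

```shell
# Sketch (POSIX sh + awk). Both functions print the offending rows and
# return non-zero if anything was flagged. Function names are hypothetical.

# usage: kubectl get nodes | check_nodes v1.30
check_nodes() {
  awk -v want="$1" '
    NR == 1 || NF == 0 { next }   # skip the header row and blank lines
    $2 != "Ready" || index($5, want ".") != 1 { print; bad = 1 }
    END { exit (bad ? 1 : 0) }
  '
}

# usage: kubectl get pod -A | check_pods
check_pods() {
  awk '
    NR == 1 || NF == 0 { next }
    $4 == "Completed" { next }    # finished Job pods, e.g. the kyverno cleanup jobs
    {
      split($3, r, "/")           # READY column, e.g. 2/2
      if ($4 != "Running" || r[1] != r[2]) { print; bad = 1 }
    }
    END { exit (bad ? 1 : 0) }
  '
}
```

For the green cluster, `kubectl get nodes | check_nodes v1.30` should print nothing and succeed, while `check_nodes v1.27` against the same nodes would list all three.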

$ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

argocd argo-cd-argocd-application-controller-0 1/1 Running 0 3m55s

argocd argo-cd-argocd-applicationset-controller-6cd6588d6d-l7d4c 1/1 Running 0 5m34s

argocd argo-cd-argocd-dex-server-6f64db88cf-k6gdg 1/1 Running 0 5m34s

argocd argo-cd-argocd-notifications-controller-54498dfcd9-pmpdz 1/1 Running 0 5m34s

argocd argo-cd-argocd-redis-69fb858cb6-w4ng5 1/1 Running 0 5m34s

argocd argo-cd-argocd-repo-server-5b66949f84-7l2qb 1/1 Running 0 88s

argocd argo-cd-argocd-repo-server-5b66949f84-b4cq8 1/1 Running 0 2m59s

argocd argo-cd-argocd-repo-server-5b66949f84-m8ht5 1/1 Running 0 2m59s

argocd argo-cd-argocd-repo-server-5b66949f84-p28cb 1/1 Running 0 2m44s

argocd argo-cd-argocd-repo-server-5b66949f84-ptb74 1/1 Running 0 3m59s

argocd argo-cd-argocd-server-7cdfdcfc87-bf62l 1/1 Running 0 3m59s

cert-manager cert-manager-754c68dff4-gxgmq 1/1 Running 0 4m31s

cert-manager cert-manager-cainjector-75b6657b-x6lxr 1/1 Running 0 4m31s

cert-manager cert-manager-webhook-5855d44694-frw7n 1/1 Running 0 4m31s

external-dns external-dns-6b5f6b65f9-2mqsv 1/1 Running 0 4m24s

external-secrets external-secrets-66f7f9ccdd-w2m96 1/1 Running 0 4m24s

external-secrets external-secrets-cert-controller-657cf9b7db-pwfcm 1/1 Running 0 4m24s

external-secrets external-secrets-webhook-79b599c6cc-p8k46 1/1 Running 0 4m24s

geolocationapi geolocationapi-5c64c7954c-5vrl9 2/2 Running 0 4m19s

geolocationapi geolocationapi-5c64c7954c-fz86l 2/2 Running 0 4m19s

geordie downstream0-5d575fc95c-j29p9 1/1 Running 0 4m26s

geordie downstream1-7744695958-fbv9h 1/1 Running 0 4m26s

geordie frontend-f6dcd958d-w7tbb 1/1 Running 0 4m26s

geordie redis-server-7955b49b8f-zbll9 1/1 Running 0 4m19s

geordie yelb-appserver-6d6ff6c4cc-tdvkp 1/1 Running 0 4m19s

geordie yelb-db-fd55c4fd8-wf4w7 1/1 Running 0 4m19s

geordie yelb-ui-5655446b4b-tgqnb 1/1 Running 0 4m19s

ingress-nginx ingress-nginx-controller-54f8b55b87-9d9jh 1/1 Running 0 4m33s

ingress-nginx ingress-nginx-controller-54f8b55b87-jmbjm 1/1 Running 0 4m33s

ingress-nginx ingress-nginx-controller-54f8b55b87-whhr5 1/1 Running 0 4m33s

kube-system aws-load-balancer-controller-86dd6f86c9-5mg9f 1/1 Running 0 4m29s

kube-system aws-load-balancer-controller-86dd6f86c9-mlskg 1/1 Running 0 4m28s

kube-system aws-node-k7n8g 2/2 Running 0 7m39s

kube-system aws-node-mzt9d 2/2 Running 0 7m35s

kube-system aws-node-xmptb 2/2 Running 0 7m34s

kube-system coredns-5d99cdb6c5-bw98t 1/1 Running 0 8m42s

kube-system coredns-5d99cdb6c5-vpgfj 1/1 Running 0 8m42s

kube-system kube-proxy-cpmk2 1/1 Running 0 7m34s

kube-system kube-proxy-p9vdd 1/1 Running 0 7m39s

kube-system kube-proxy-wjk4r 1/1 Running 0 7m35s

kube-system metrics-server-74cd756cb7-xhc8x 1/1 Running 0 4m34s

kyverno kyverno-admission-controller-54b8bdb86f-vmvdj 1/1 Running 0 2m29s

kyverno kyverno-background-controller-64fcf87c7b-c6l4f 1/1 Running 0 2m29s

kyverno kyverno-cleanup-controller-5b4b8f645b-ndv96 1/1 Running 0 2m29s

kyverno kyverno-reports-controller-55b9787f78-slbrj 1/1 Running 0 2m29s

team-burnham burnham-54c6f7dd6b-672n6 1/1 Running 0 4m40s

team-burnham burnham-54c6f7dd6b-f68fg 1/1 Running 0 4m40s

team-burnham burnham-54c6f7dd6b-jqdxs 1/1 Running 0 4m40s

team-burnham nginx-84dbf974d4-4vfmb 1/1 Running 0 4m40s

team-riker deployment-2048-6c9b97954b-52lql 1/1 Running 0 4m40s

team-riker deployment-2048-6c9b97954b-nwvjb 1/1 Running 0 4m40s

team-riker deployment-2048-6c9b97954b-qs277 1/1 Running 0 4m40s

team-riker guestbook-ui-686bbcb9db-pfq9l 1/1 Running 0 4m40s

- Check the ArgoCD URL and admin credentials

$ echo "ArgoCD URL: https://$(kubectl get svc -n argocd argo-cd-argocd-server -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')"

$ echo "ArgoCD Username: admin"

$ echo "ArgoCD Password: $(aws secretsmanager get-secret-value --secret-id argocd-admin-secret.t101-eks-blueprint --query SecretString --output text --region ap-northeast-2)"

ArgoCD URL: https://ad315ff73afbc4237ac3ad37fe6c4820-1399727412.ap-northeast-2.elb.amazonaws.com

ArgoCD Username: admin

ArgoCD Password: Dr0NXLlBx$j$nY?W-

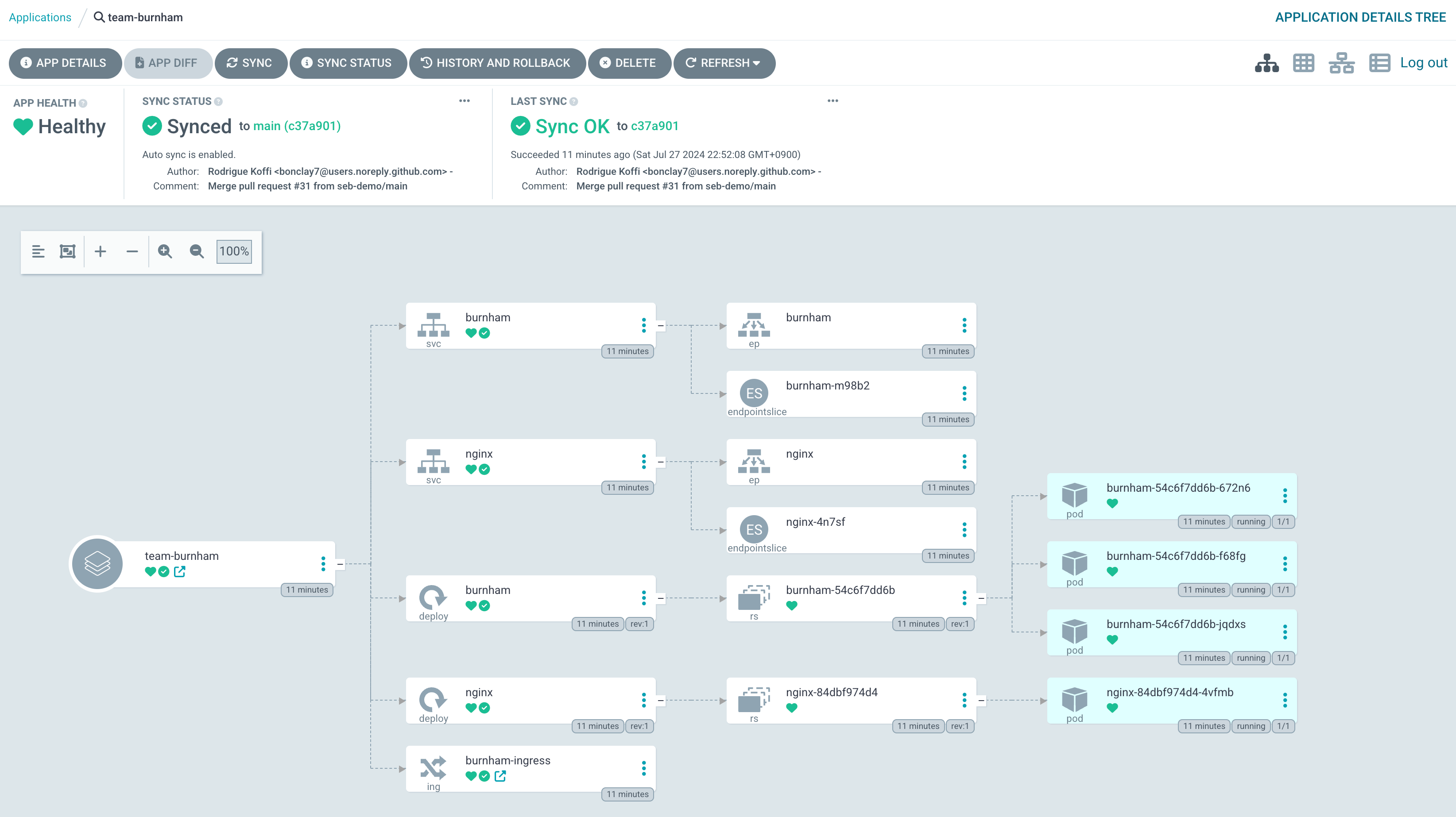

Accessing the ArgoCD UI
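The ArgoCD commands are the same for blue and green; only the cluster that kubectl points at changes. Both clusters read the admin password from the same Secrets Manager secret (`argocd-admin-secret.t101-eks-blueprint`), which is why the same password appears for both. A minimal sketch bundling the three commands; `argocd_info` is a hypothetical name, and it assumes the current kubeconfig context already targets the intended cluster.

```shell
# Sketch: print the ArgoCD endpoint and admin credentials for the cluster the
# current kubeconfig context points at. argocd_info is hypothetical; the
# service name, secret id and region come from the commands above.
argocd_info() {
  local host password
  host=$(kubectl get svc -n argocd argo-cd-argocd-server \
    -o jsonpath='{.status.loadBalancer.ingress[0].hostname}') || return 1
  password=$(aws secretsmanager get-secret-value \
    --secret-id argocd-admin-secret.t101-eks-blueprint \
    --query SecretString --output text --region ap-northeast-2) || return 1
  echo "ArgoCD URL: https://${host}"
  echo "ArgoCD Username: admin"
  echo "ArgoCD Password: ${password}"
}
```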

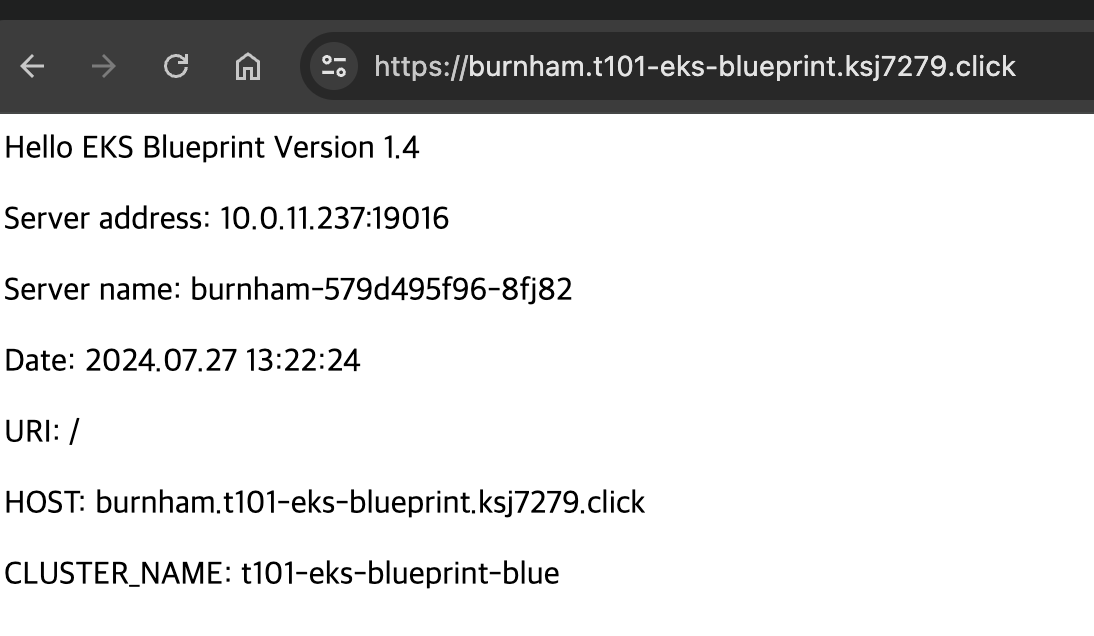

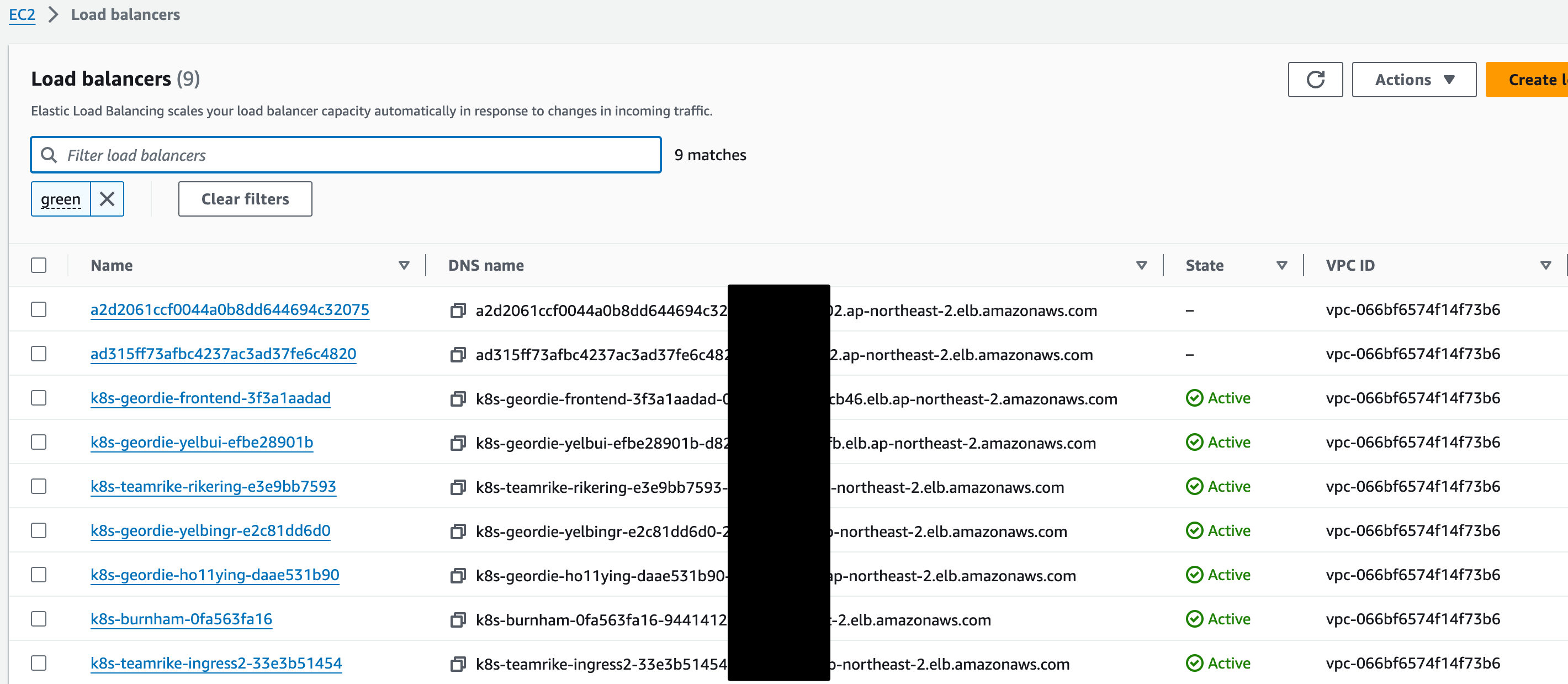

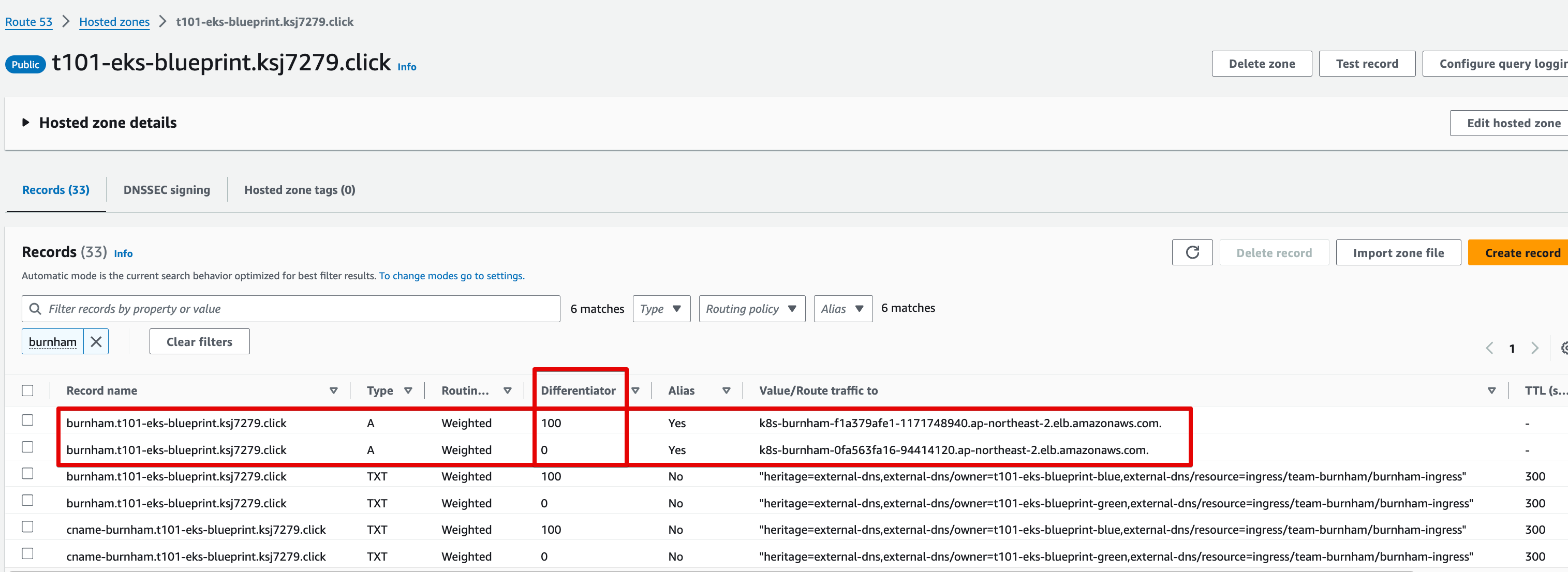

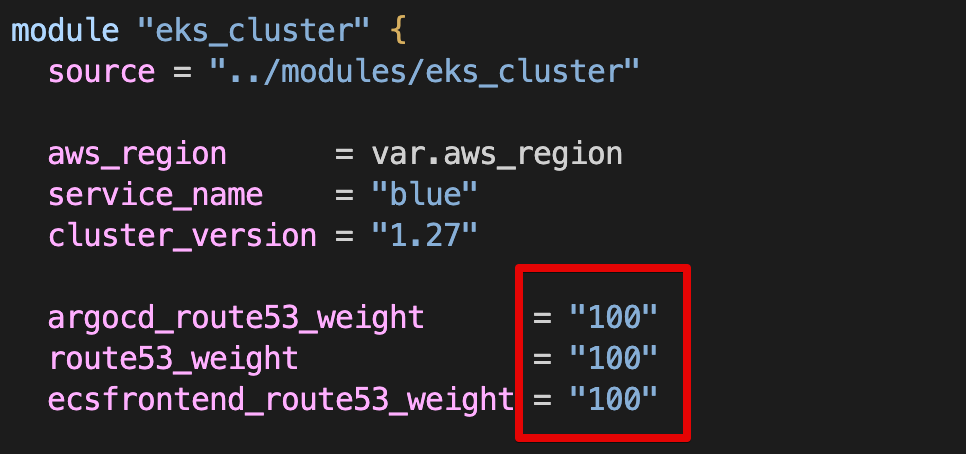

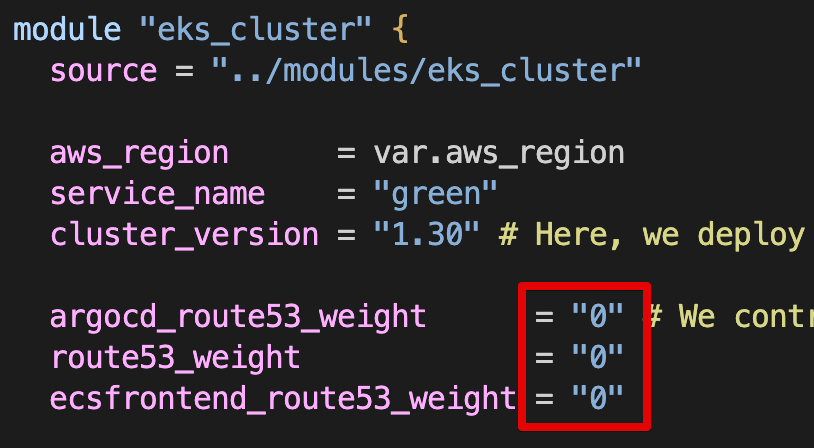

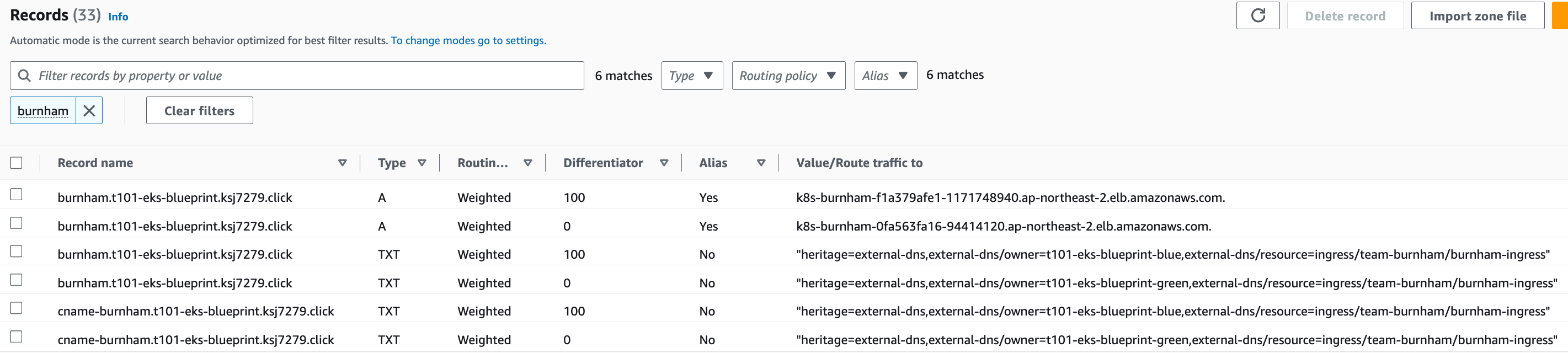

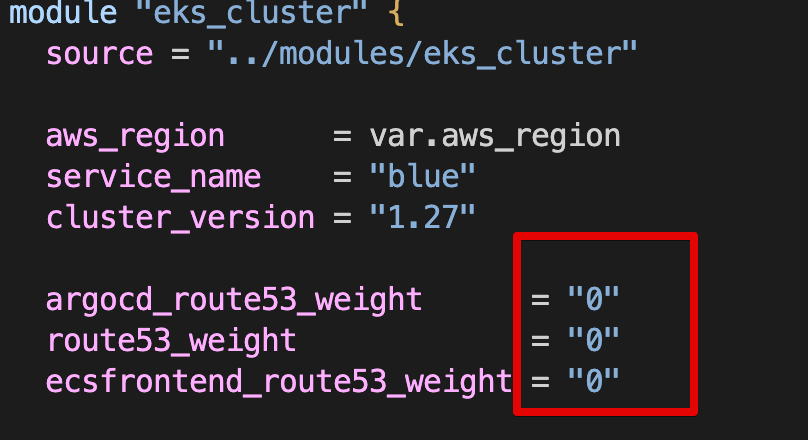

Shifting traffic with a canary approach

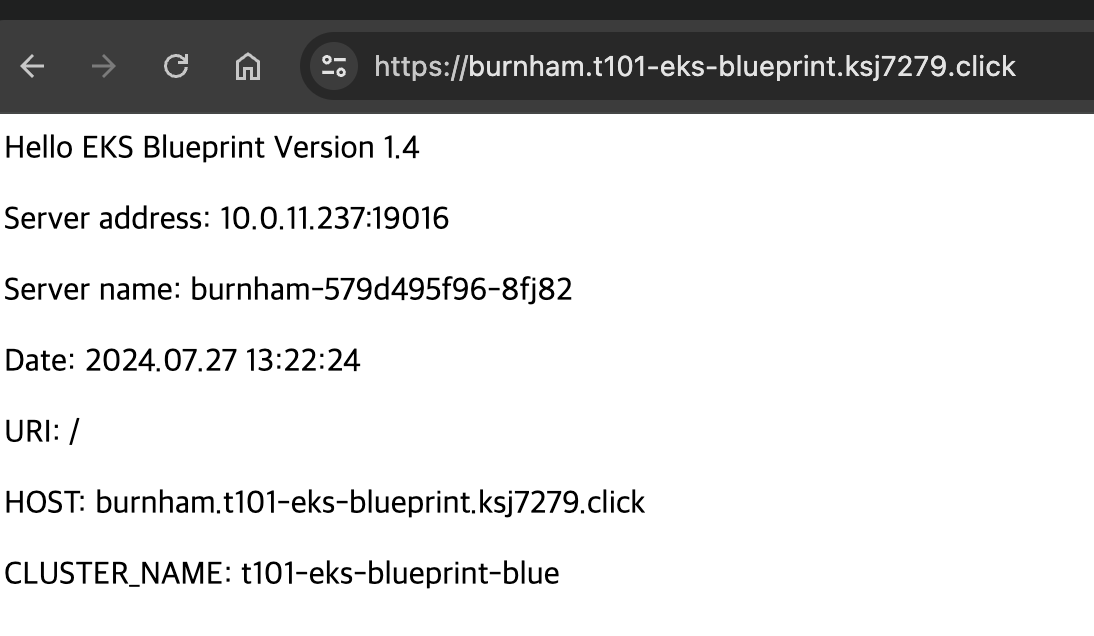

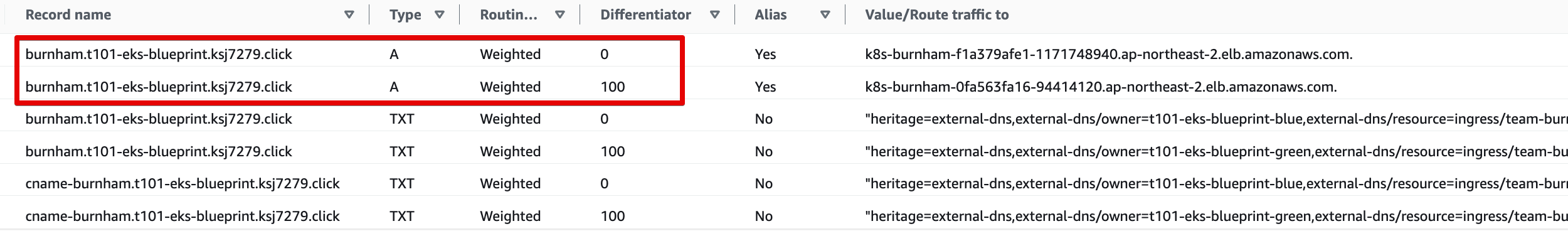

Route 53 A records for burnham.t101-eks-blueprint.ksj7279.click: Blue weight 100, Green weight 0
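With Route 53 weighted routing, each record's share of DNS answers is its weight divided by the sum of the weights of all records with the same name and type. A weight of 0 takes a record out of rotation, except when every record in the group is 0, in which case they are chosen with equal probability. A minimal sketch of that arithmetic; `route53_share` is a hypothetical helper, and the result is rounded down to a whole percent.

```shell
# Sketch: expected share (percent, rounded down) of DNS answers for one record
# in a Route 53 weighted routing group. route53_share is hypothetical.
# usage: route53_share WEIGHT_OF_THIS_RECORD WEIGHT_OF_OTHER_RECORD...
route53_share() {
  local self total=0 count=0 w
  [ $# -ge 1 ] || { echo "usage: route53_share SELF OTHER..." >&2; return 1; }
  self="$1"
  for w in "$@"; do            # includes this record's own weight
    total=$((total + w))
    count=$((count + 1))
  done
  if [ "$total" -eq 0 ]; then
    # All weights 0: Route 53 answers with every record equally often.
    echo $((100 / count))
  else
    echo $((100 * self / total))
  fi
}
```

For the three steps in this section, `route53_share 100 0` gives 100 (all traffic to blue), `route53_share 100 100` gives 50, and `route53_share 0 100` gives 0 (all traffic to green). These are long-run expectations; since resolvers cache answers for the record's TTL, a short run of requests can deviate noticeably.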

- Set the Route 53 weight to 100 in Blue main.tf and to 0 in Green main.tf

- Call the URL

$ repeat 10 curl -s https://burnham.t101-eks-blueprint.ksj7279.click | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}' && sleep 60

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-blue

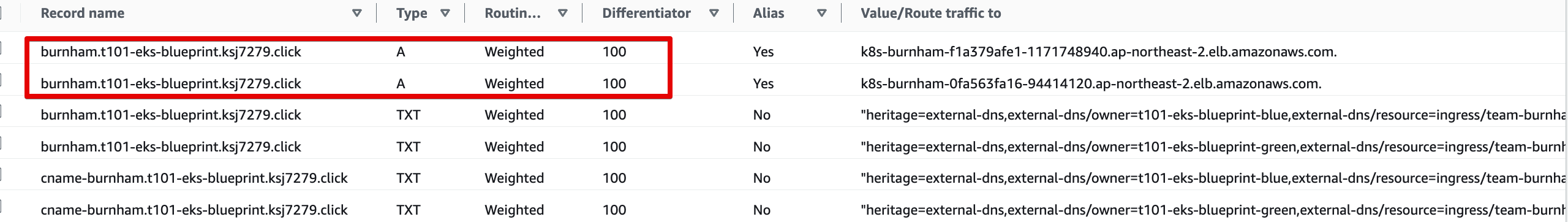

t101-eks-blueprint-blueRoute 53 burnham.t101-eks-blueprint.ksj7279.click A 레크드 Blue 가중치 100, Green 100
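The URL check in this section uses `repeat`, which is a zsh builtin and is not available in bash or plain sh. A POSIX sh sketch of the same loop follows, which also records requests that returned no cluster name, plus a tally so the split can be read at a glance. With both weights at 100, each answer should come from either cluster with roughly equal probability; in the run that follows, 6 of the 10 responses came from blue and 4 from green, which is not unusual for so few samples. The HTML parsing (`grep CLUSTER_NAME` and the `<span>` field split) is copied from the original command; both function names are hypothetical.

```shell
# Sketch (POSIX sh): sample the endpoint N times and print which cluster
# answered each request. sample_canary and tally_clusters are hypothetical.
# usage: sample_canary URL [COUNT] [PAUSE_SECONDS]
sample_canary() {
  local url="$1" n="${2:-10}" pause="${3:-60}" i=1 name
  while [ "$i" -le "$n" ]; do
    name=$(curl -s "$url" | grep CLUSTER_NAME | awk -F '<span>|</span>' '{print $4}')
    echo "${name:-(no answer)}"
    # Pause between requests like the original loop (60s by default),
    # presumably so cached DNS answers expire and a new weighted pick is made.
    if [ "$i" -lt "$n" ]; then sleep "$pause"; fi
    i=$((i + 1))
  done
}

# Count identical lines on stdin, printing "name count" sorted by name.
tally_clusters() {
  awk 'NF { c[$0]++ } END { for (k in c) print k, c[k] }' | LC_ALL=C sort
}
```

Usage: `sample_canary https://burnham.t101-eks-blueprint.ksj7279.click 10 60 | tally_clusters`.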


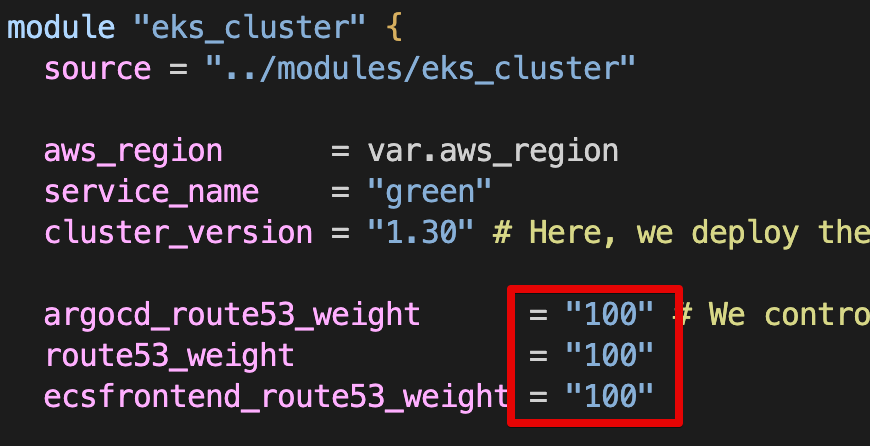

- Set the Route 53 weight to 100 in Green main.tf

- Call the URL

$ repeat 10 curl -s https://burnham.t101-eks-blueprint.ksj7279.click | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}' && sleep 60

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-blue

t101-eks-blueprint-blue

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-blue

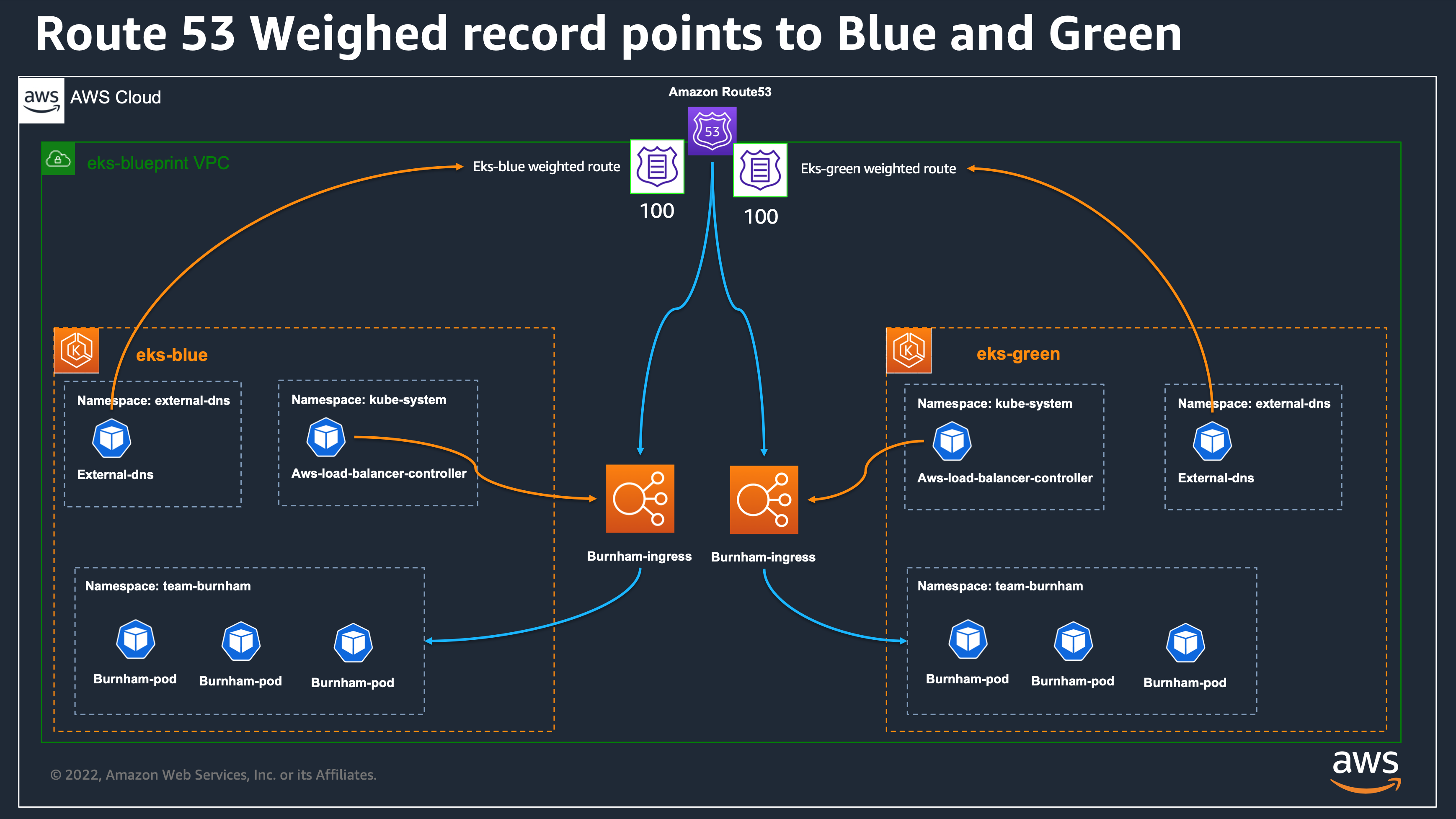

t101-eks-blueprint-blueRoute 53 burnham.t101-eks-blueprint.ksj7279.click A 레크드 Blue 가중치 0, Green 100

- Set the Route 53 weight to 0 in Blue main.tf

- Call the URL

$ repeat 10 curl -s https://burnham.t101-eks-blueprint.ksj7279.click | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}' && sleep 60

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

t101-eks-blueprint-green

4. Cleaning up resources

$ cd eks-green

$ sh ../tear-down.sh

$ cd ../eks-blue

$ sh ../tear-down.sh

$ cd ../environment

$ sh ../tear-down.sh
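The order matters: the cluster stacks read the VPC, subnets, Route 53 zone and ArgoCD secret as data sources (see the state list above), so those shared resources presumably come from the `environment` stack, which is therefore destroyed last. A minimal POSIX sh sketch of the same sequence that stops at the first failure; `teardown_all` is hypothetical, and it assumes `tear-down.sh` sits in the parent of the three directories, as the commands above imply.

```shell
# Sketch: tear down green, then blue, then the shared environment, stopping
# at the first failure. teardown_all is a hypothetical helper name.
# usage: teardown_all [BASE_DIR]   (BASE_DIR contains the three directories)
teardown_all() {
  local base="${1:-.}" dir
  for dir in eks-green eks-blue environment; do
    echo "==> tearing down ${dir}"
    # Subshell: each script runs from its own directory, caller cwd unchanged.
    if ! ( cd "${base}/${dir}" && sh ../tear-down.sh ); then
      echo "teardown_all: ${dir} failed, stopping here" >&2
      return 1
    fi
  done
}
```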

This concludes the demo of migrating EKS 1.27 to EKS 1.30 using the blue/green upgrade pattern provided on the Amazon EKS Blueprints for Terraform site.

Thank you for reading.