This post is part of the hands-on Terraform T101 study group (4th cohort) led by gasida.

It draws on the book '테라폼으로 시작하는 IaC' and collects notes that proved useful during the study.

Week 8 covered OpenTofu.

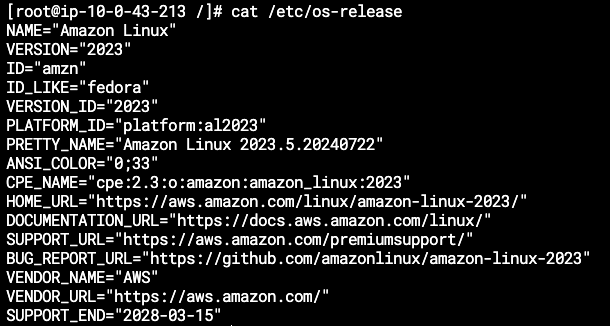

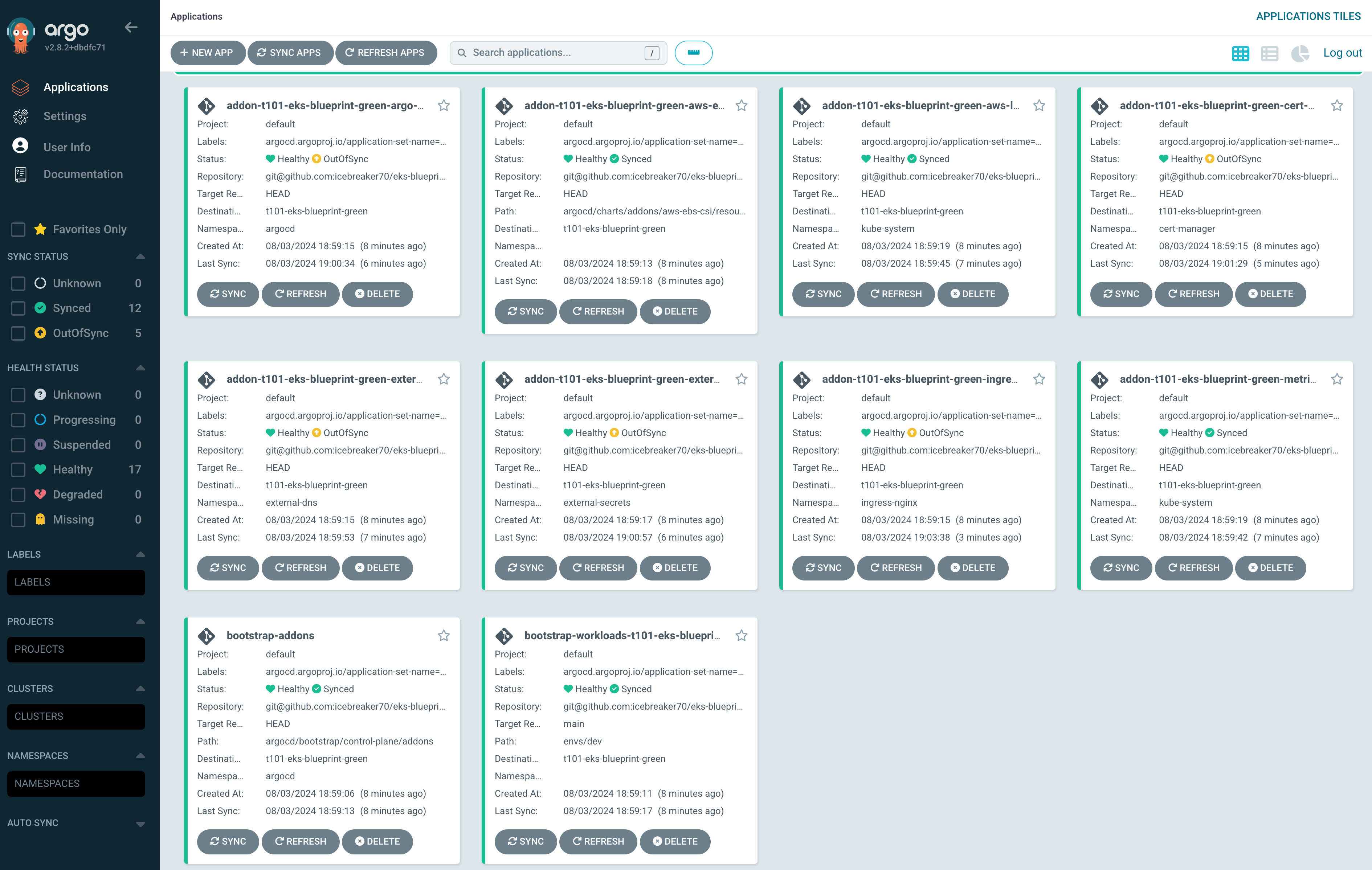

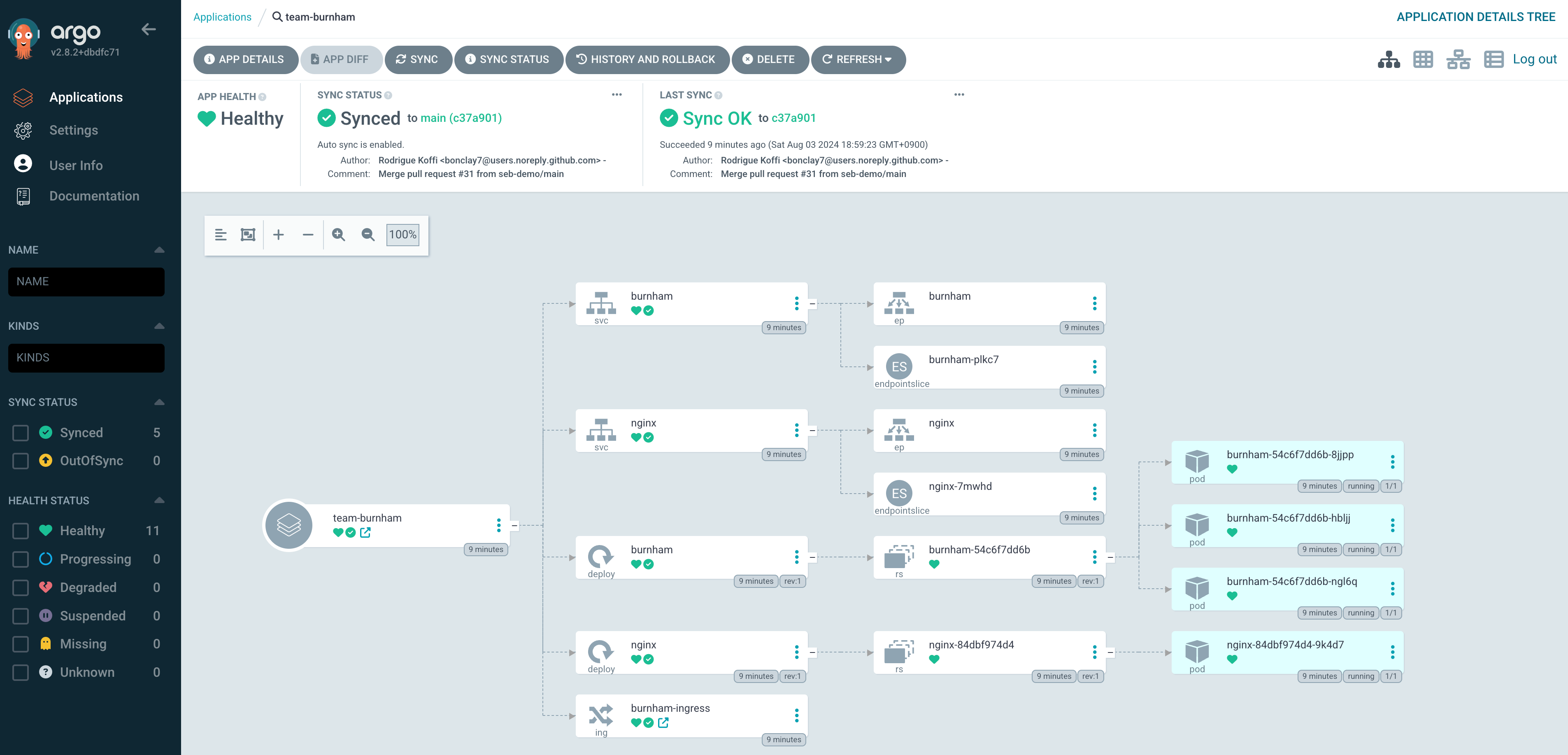

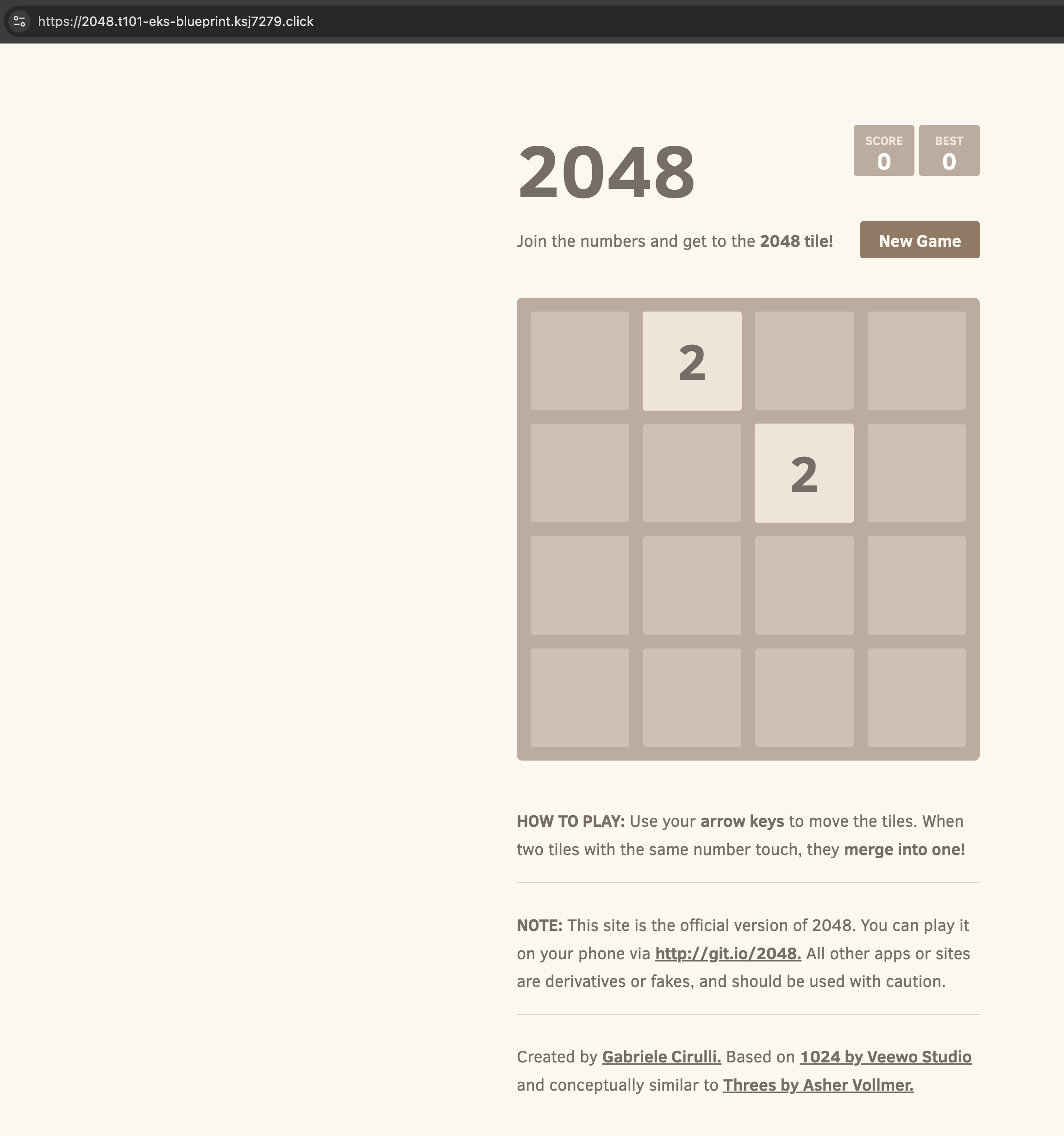

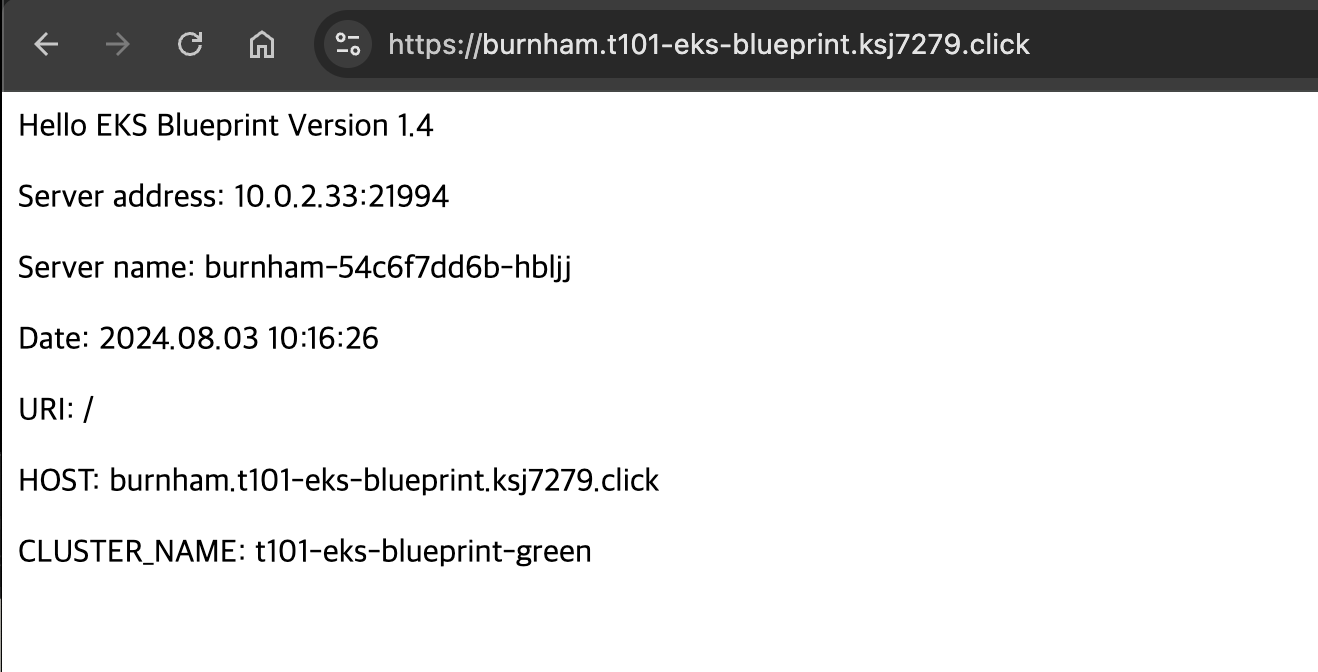

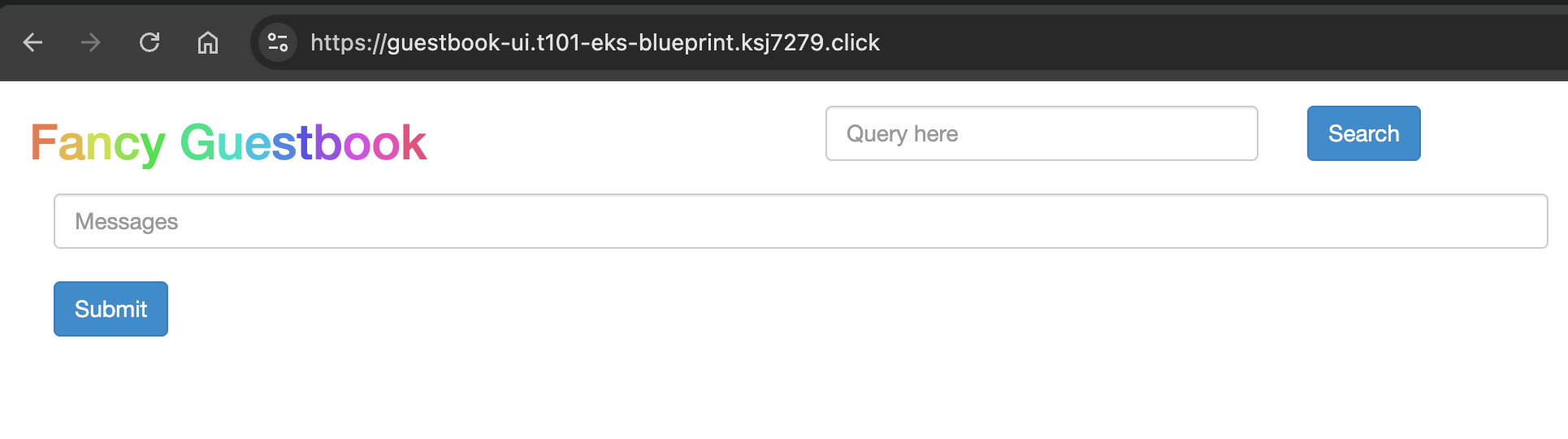

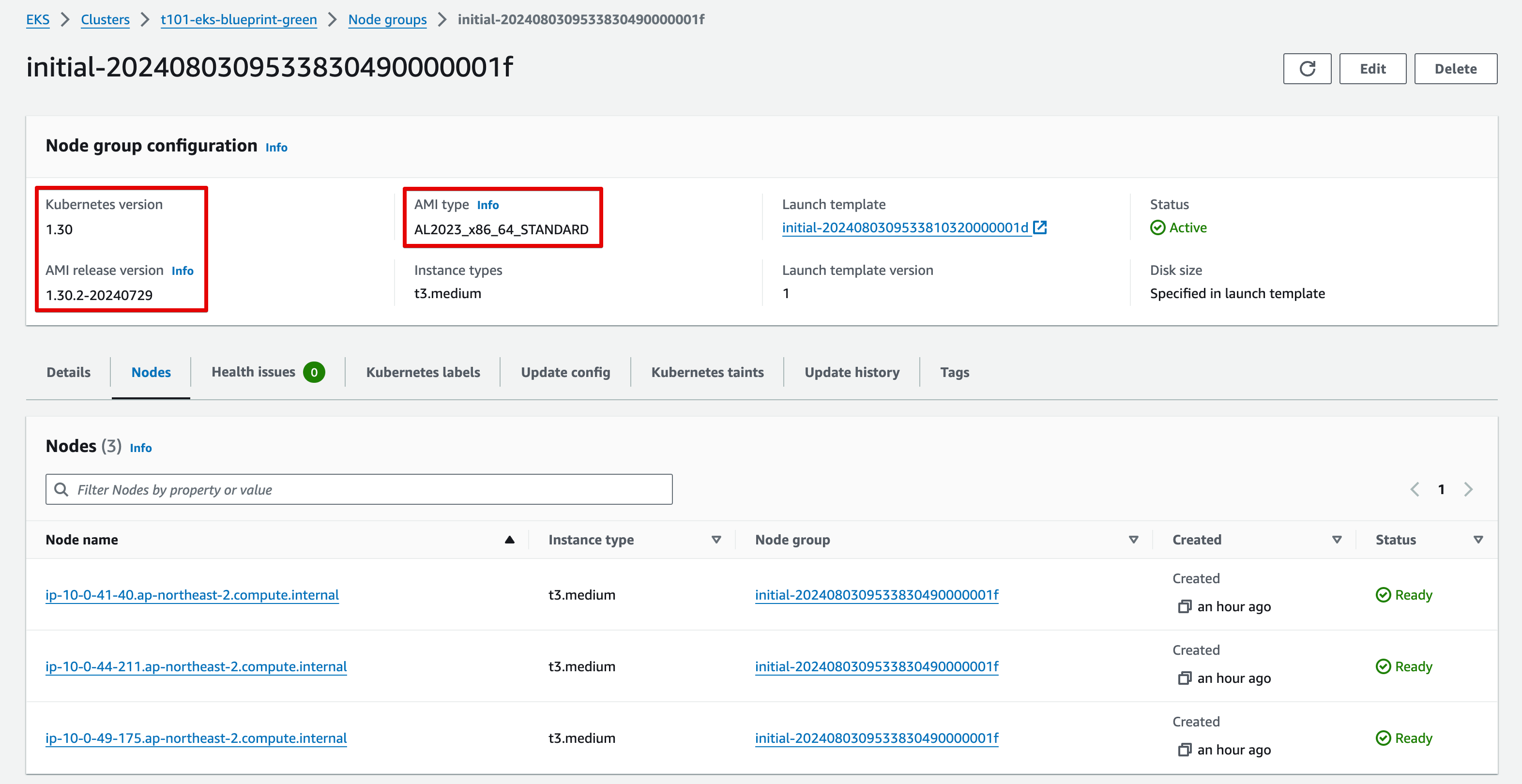

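This post gives a brief introduction to OpenTofu (hereafter tofu) and uses tofu to create a cluster on EKS 1.30, the latest version. Starting with EKS 1.30, worker nodes created through a Managed NodeGroup run Amazon Linux 2023 (hereafter AL2023) instead of Amazon Linux 2 (hereafter AL2), so the post also summarizes what changes and what to consider.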

1. OpenTofu

Overview (https://opentofu.org)

What is OpenTofu?

- OpenTofu, previously named OpenTF, is an open-source, community-driven fork of Terraform managed by the Linux Foundation.

- OpenTofu is a Terraform fork created under the lead of Gruntwork, Spacelift, Harness, Env0, Scalr, and others, in response to HashiCorp's switch from the open-source Mozilla Public License (hereafter MPL) to the Business Source License (hereafter BUSL), which is not an open-source license. The initiative has many supporters.

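Why OpenTofu was created

- The BUSL and the additional use grant described by the HashiCorp team are ambiguous, which makes it difficult for companies, vendors, and developers who use Terraform to determine whether their work could be construed as falling outside the permitted scope of use.

- HashiCorp's FAQ offers end users and system integrators some peace of mind for now, but the implications of the license terms for future use are unclear. The possibility that the company's definition of "competitive" or "embedding" could change, or that the license could be modified further to become closed source, creates uncertainty for Terraform users.

- We firmly believe that Terraform should remain open source, because it is a project used by many companies and many contributors made it what it is today. Terraform's success would not have been possible without the community's work to build many supporting projects around it.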

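Differences between OpenTofu and Terraform

- On a technical level, OpenTofu 1.6.x is very similar to Terraform 1.6.x in terms of features. Going forward, the two projects' feature sets will diverge.

- The other main difference is that OpenTofu is open source, and its goal is to be driven collaboratively so that no single company can dictate the roadmap.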

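Why use OpenTofu instead of Terraform

Personal use

- The BUSL license places no restrictions on non-commercial use cases, so at first glance either OpenTofu or Terraform can be used for personal purposes. That may change as the Terraform ecosystem becomes increasingly unstable and if it moves to yet another license. Anyone familiar with Terraform will have no trouble adopting OpenTofu for personal use, so there is no knowledge gap, at least initially.

Consultants

- Consultants should offer clients the best solution that fits their budget. OpenTofu will be on par with Terraform, and one of the project's core goals is to listen to the community's issues, so it makes sense to recommend a project that will always remain open source. Anyone who has used Terraform over the past eight years has probably run into issues that took a long time to resolve. The large community involved in developing OpenTofu means this will no longer be the case.

Companies

- Companies will face more difficulty, depending on their situation. Switching to a new project carries risk, but staying with a project that changes its license without warning is far riskier. That risk is minimized by placing OpenTofu under the Linux Foundation, and OpenTofu's goal of keeping feature parity with Terraform in future releases reduces the technical risk.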

Will OpenTofu be compatible with future Terraform releases?

- The community decides which features OpenTofu will include. Some long-awaited Terraform features will be available soon.

- If a feature available in Terraform is missing from OpenTofu, feel free to create an issue.

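Can OpenTofu be used as a drop-in replacement for Terraform? Is OpenTofu suitable for production use?

- Right now, OpenTofu is compatible with Terraform 1.5.x and most of 1.6.x, so it can serve as a drop-in replacement for Terraform. No code changes are needed to ensure compatibility. (The latest OpenTofu version is 1.8.x.)

- OpenTofu is suitable for production use cases without any exception.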
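As a rough illustration of what "drop-in" means in day-to-day use, the sketch below wraps both CLIs behind one shell function. The name `iac` is hypothetical (it is not an OpenTofu or Terraform command), and the sketch assumes that the subcommands you pass exist in whichever binary is found.

```shell
# Hypothetical wrapper: "iac" is an illustrative name, not a real CLI.
# It prefers tofu and falls back to terraform when tofu is not available.
iac() {
  if command -v tofu >/dev/null 2>&1; then
    tofu "$@"
  elif command -v terraform >/dev/null 2>&1; then
    terraform "$@"
  else
    echo "neither tofu nor terraform found on PATH" >&2
    return 127
  fi
}

# Usage (the same subcommands are passed through unchanged):
#   iac init
#   iac plan
```

Because the arguments are forwarded as-is, existing scripts only need to call `iac` instead of a specific binary.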

Installing tofu

macOS

- Install with brew

brew update

brew install opentofu

tofu -version

- Install with tenv (recommended, since it can install OpenTofu, Terraform, and Terragrunt)

# (Optional) Remove tfenv

brew remove tfenv

# Install tenv

## brew install cosign # recommended

brew install tenv

which tenv

tenv --version

tenv -h

# (Optional) Install shell completion

tenv completion zsh > ~/.tenv.completion.zsh

echo 'source "$HOME/.tenv.completion.zsh"' >> ~/.zshrc

- Install tofu with tenv and verify

# Install tofu

$ tenv tofu -h

Subcommand to manage several versions of OpenTofu (https://opentofu.org).

Usage:

tenv tofu [command]

Aliases:

tofu, opentofu

Available Commands:

constraint Set a default constraint expression for OpenTofu.

detect Display OpenTofu current version.

install Install a specific version of OpenTofu.

list List installed OpenTofu versions.

list-remote List installable OpenTofu versions.

reset Reset used version of OpenTofu.

uninstall Uninstall versions of OpenTofu.

use Switch the default OpenTofu version to use.

Flags:

-h, --help help for tofu

Global Flags:

-q, --quiet no unnecessary output (and no log)

-r, --root-path string local path to install versions of OpenTofu, Terraform, Terragrunt, and Atmos (default "/Users/sjkim/.tenv")

-v, --verbose verbose output (and set log level to Trace)

Use "tenv tofu [command] --help" for more information about a command.

$ tenv tofu list-remote

Fetching all releases information from https://api.github.com/repos/opentofu/opentofu/releases

1.6.0-alpha1

...

1.6.0-rc1

1.6.0

...

1.7.2

1.7.3

1.8.0-alpha1

1.8.0-beta1

1.8.0-beta2

1.8.0-rc1

1.8.0

$ tenv tofu install 1.8.0

Installing OpenTofu 1.8.0

Fetching release information from https://api.github.com/repos/opentofu/opentofu/releases/tags/v1.8.0

Downloading https://github.com/opentofu/opentofu/releases/download/v1.8.0/tofu_1.8.0_darwin_arm64.zip

Downloading https://github.com/opentofu/opentofu/releases/download/v1.8.0/tofu_1.8.0_SHA256SUMS

Downloading https://github.com/opentofu/opentofu/releases/download/v1.8.0/tofu_1.8.0_SHA256SUMS.sig

Downloading https://github.com/opentofu/opentofu/releases/download/v1.8.0/tofu_1.8.0_SHA256SUMS.pem

cosign executable not found, fallback to pgp check

Downloading https://github.com/opentofu/opentofu/releases/download/v1.8.0/tofu_1.8.0_SHA256SUMS.gpgsig

Downloading https://get.opentofu.org/opentofu.asc

Installation of OpenTofu 1.8.0 successful

$ tenv tofu list

1.8.0 (never used)

$ tenv tofu use 1.8.0

Written 1.8.0 in /Users/sjkim/.tenv/OpenTofu/version

$ tenv tofu detect

Resolved version from /Users/sjkim/.tenv/OpenTofu/version : 1.8.0

OpenTofu 1.8.0 will be run from this directory.

$ tofu -version

OpenTofu v1.8.0

on darwin_arm64

Linux (Debian, Ubuntu)

# Download the installer script:

curl --proto '=https' --tlsv1.2 -fsSL https://get.opentofu.org/install-opentofu.sh -o install-opentofu.sh

# Alternatively: wget --secure-protocol=TLSv1_2 --https-only https://get.opentofu.org/install-opentofu.sh -O install-opentofu.sh

# Give it execution permissions:

chmod +x install-opentofu.sh

# Please inspect the downloaded script

# Run the installer:

./install-opentofu.sh --install-method deb

# Remove the installer:

rm -f install-opentofu.sh

Working with OpenTofu (https://opentofu.org/docs/intro/core-workflow/)

The core OpenTofu workflow has three steps:

- Write - Author infrastructure as code.

- Plan - Preview changes before applying.

- Apply - Provision reproducible infrastructure.

This guide walks through how each of these three steps plays out in the context of working as an individual practitioner, how they evolve when a team is collaborating on infrastructure, and how a cloud backend enables this workflow to run smoothly for entire organizations.
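The three steps can be sketched as one shell function. This is a minimal sketch, not part of the OpenTofu guide: the function name `core_workflow` and the plan file name `tfplan` are illustrative choices, and the configuration files are assumed to exist already in the target directory.

```shell
# Minimal sketch of the Write -> Plan -> Apply loop for one working directory.
# "core_workflow" and "tfplan" are illustrative names.
core_workflow() (
  # The body runs in a subshell so the caller's directory is left unchanged.
  cd "${1:-.}" || exit 1
  # Write: *.tf files are assumed to be present; init prepares providers.
  tofu init -input=false || exit 1
  # Plan: save the plan so apply executes exactly what was previewed.
  tofu plan -out=tfplan || exit 1
  # Apply: a saved plan file is applied without a confirmation prompt.
  tofu apply tfplan
)

# Usage:
#   core_workflow ./my-infra
```

Saving the plan with `-out` trades the interactive confirmation for a guarantee that the applied actions match the reviewed ones.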

Working as an Individual Practitioner

Let's first walk through how these parts fit together as an individual working on infrastructure as code.

1. Write

You write OpenTofu configuration just like you write code: in your editor of choice. It's common practice to store your work in a version controlled repository even when you're just operating as an individual.

# Create repository

$ git init my-infra && cd my-infra

Initialized empty Git repository in /.../my-infra/.git/

# Write initial config

$ vim main.tf

cat <<EOT > main.tf

provider "aws" {

region = "ap-northeast-2"

}

resource "aws_instance" "example" {

ami = "ami-062cf18d655c0b1e8"

instance_type = "t2.micro"

tags = {

Name = "t101-study"

}

}

EOT

# Initialize OpenTofu

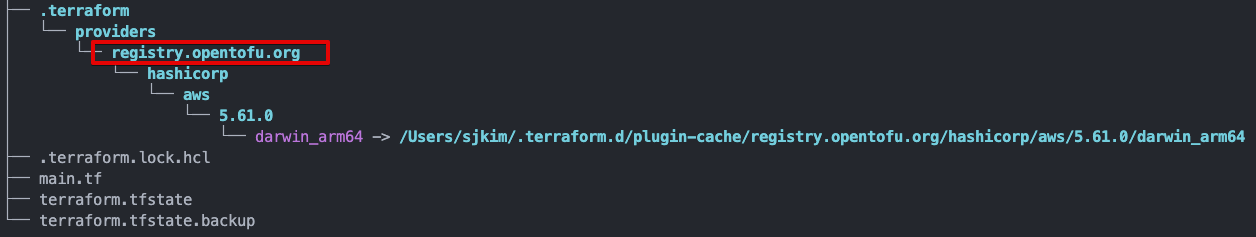

$ tofu init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Installing hashicorp/aws v5.61.0...

- Installed hashicorp/aws v5.61.0 (signed, key ID 0C0AF313E5FD9F80)

Providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://opentofu.org/docs/cli/plugins/signing/

OpenTofu has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that OpenTofu can guarantee to make the same selections by default when

you run "tofu init" in the future.

OpenTofu has been successfully initialized!

You may now begin working with OpenTofu. Try running "tofu plan" to see

any changes that are required for your infrastructure. All OpenTofu commands

should now work.

If you ever set or change modules or backend configuration for OpenTofu,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.
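The init output asks for `.terraform.lock.hcl` to be kept in version control, while the `.terraform/` directory is only a local cache. A possible `.gitignore` for this layout is sketched below. The helper name `write_gitignore` is hypothetical, and ignoring local state and saved plan files is a common convention assumed here rather than something the output above requires.

```shell
# Sketch: ignore local working data, keep the dependency lock file tracked.
# "write_gitignore" is an illustrative helper name.
write_gitignore() {
  local dir="${1:-.}"
  cat > "$dir/.gitignore" <<'EOF'
# Local provider/module cache created by "tofu init"
.terraform/
# Local state files can contain sensitive values
*.tfstate
*.tfstate.*
# Saved plan files (file name used in this post)
tfplan
EOF
}

# Usage:
#   write_gitignore .
#   git add .gitignore .terraform.lock.hcl main.tf
```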

As you make progress on authoring your config, repeatedly running plans can help flush out syntax errors and ensure that your config is coming together as you expect.

# Review plan

$ tofu plan

Planning failed. OpenTofu encountered an error while generating this plan.

╷

│ Error: Retrieving AWS account details: validating provider credentials: retrieving caller identity from STS: operation error STS: GetCallerIdentity, https response error StatusCode: 403, RequestID: 9b72e86f-65b1-427a-9995-bfc00ad7e188, api error InvalidClientTokenId: The security token included in the request is invalid.

│

│ with provider["registry.opentofu.org/hashicorp/aws"],

│ on main.tf line 1, in provider "aws":

│ 1: provider "aws" {

│

╵

$ export AWS_PROFILE=t101 # use the AWS credential profile "t101" by default

$ tofu plan

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

OpenTofu will perform the following actions:

# aws_instance.example will be created

+ resource "aws_instance" "example" {

+ ami = "ami-062cf18d655c0b1e8"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ disable_api_stop = (known after apply)

+ disable_api_termination = (known after apply)

+ ebs_optimized = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ host_resource_group_arn = (known after apply)

+ iam_instance_profile = (known after apply)

+ id = (known after apply)

+ instance_initiated_shutdown_behavior = (known after apply)

+ instance_lifecycle = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = (known after apply)

+ monitoring = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ placement_partition_number = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ spot_instance_request_id = (known after apply)

+ subnet_id = (known after apply)

+ tags = {

+ "Name" = "t101-study"

}

+ tags_all = {

+ "Name" = "t101-study"

}

+ tenancy = (known after apply)

+ user_data = (known after apply)

+ user_data_base64 = (known after apply)

+ user_data_replace_on_change = false

+ vpc_security_group_ids = (known after apply)

}

Plan: 1 to add, 0 to change, 0 to destroy.

───────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so OpenTofu can't guarantee to take exactly these actions if you run "tofu apply" now.

# Make additional edits, and repeat

$ vim main.tf

This parallels working on application code as an individual, where a tight feedback loop between editing code and running test commands is useful.
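One way to tighten that feedback loop is to run the cheaper checks before the plan. The sketch below assumes `tofu init` has already been run in the directory. The function name `quick_check` is illustrative.

```shell
# Sketch of a fast local check to run after each edit.
# "quick_check" is an illustrative name.
quick_check() {
  # Fail if any file is not in canonical format, and show the difference.
  tofu fmt -check -diff || return 1
  # Check syntax and internal consistency of the configuration.
  tofu validate || return 1
  # Preview the changes; nothing is applied at this step.
  tofu plan
}

# Usage:
#   vim main.tf && quick_check
```

Formatting and validation do not call the cloud provider, so mistakes such as a typo in an argument name surface before the slower plan step.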

2. Plan

When the feedback loop of the Write step has yielded a change that looks good, it's time to commit your work and review the final plan.

$ git status

On branch main

No commits yet

Untracked files:

(use "git add <file>..." to include in what will be committed)

.terraform.lock.hcl

.terraform/

main.tf

nothing added to commit but untracked files present (use "git add" to track)

$ git add main.tf

$ git commit -m 'Managing infrastructure as code!'

[main (root-commit) d803c45] Managing infrastructure as code!

1 file changed, 13 insertions(+)

create mode 100644 main.tf

Because tofu apply will display a plan for confirmation before proceeding to change any infrastructure, that's the command you run for final review.

$ tofu apply

An execution plan has been generated and is shown below.

# ...

3. Apply

After one last check, you are ready to tell OpenTofu to provision real infrastructure.

$ tofu apply

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

OpenTofu will perform the following actions:

# aws_instance.example will be created

+ resource "aws_instance" "example" {

+ ami = "ami-062cf18d655c0b1e8"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ disable_api_stop = (known after apply)

+ disable_api_termination = (known after apply)

+ ebs_optimized = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ host_resource_group_arn = (known after apply)

+ iam_instance_profile = (known after apply)

+ id = (known after apply)

+ instance_initiated_shutdown_behavior = (known after apply)

+ instance_lifecycle = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = (known after apply)

+ monitoring = (known after apply)

+ outpost_arn = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ placement_partition_number = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ secondary_private_ips = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ spot_instance_request_id = (known after apply)

+ subnet_id = (known after apply)

+ tags = {

+ "Name" = "t101-study"

}

+ tags_all = {

+ "Name" = "t101-study"

}

+ tenancy = (known after apply)

+ user_data = (known after apply)

+ user_data_base64 = (known after apply)

+ user_data_replace_on_change = false

+ vpc_security_group_ids = (known after apply)

}

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

OpenTofu will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

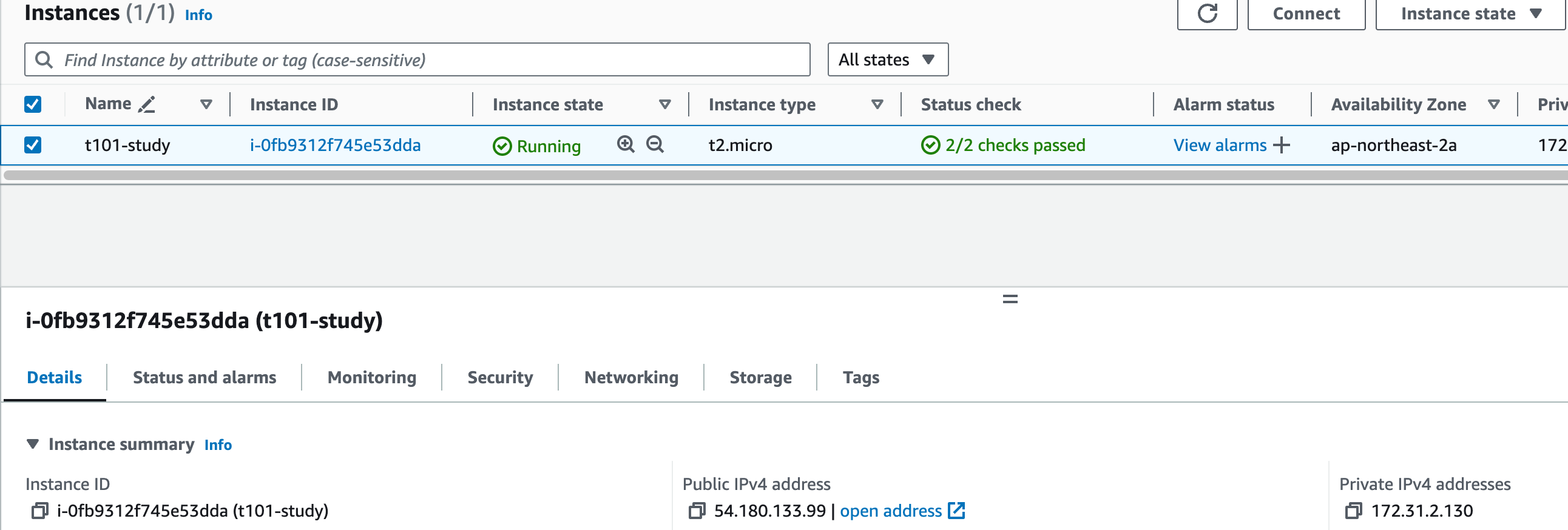

aws_instance.example: Creating...

aws_instance.example: Still creating... [10s elapsed]

aws_instance.example: Still creating... [20s elapsed]

aws_instance.example: Still creating... [30s elapsed]

aws_instance.example: Creation complete after 31s [id=i-0fb9312f745e53dda]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

$ tofu state list

aws_instance.example

$ tofu state show aws_instance.example

# aws_instance.example:

resource "aws_instance" "example" {

ami = "ami-062cf18d655c0b1e8"

arn = "arn:aws:ec2:ap-northeast-2:1**********9:instance/i-0fb9312f745e53dda"

associate_public_ip_address = true

availability_zone = "ap-northeast-2a"

cpu_core_count = 1

cpu_threads_per_core = 1

disable_api_stop = false

disable_api_termination = false

ebs_optimized = false

get_password_data = false

hibernation = false

id = "i-0fb9312f745e53dda"

instance_initiated_shutdown_behavior = "stop"

instance_state = "running"

instance_type = "t2.micro"

ipv6_address_count = 0

ipv6_addresses = []

monitoring = false

placement_partition_number = 0

primary_network_interface_id = "eni-02f793197e2ae9a16"

private_dns = "ip-172-31-2-130.ap-northeast-2.compute.internal"

private_ip = "172.31.2.130"

public_dns = "ec2-54-180-133-99.ap-northeast-2.compute.amazonaws.com"

public_ip = "54.180.133.99"

secondary_private_ips = []

security_groups = [

"default",

]

source_dest_check = true

subnet_id = "subnet-0dc5a51c96d2db619"

tags = {

"Name" = "t101-study"

}

tags_all = {

"Name" = "t101-study"

}

tenancy = "default"

user_data_replace_on_change = false

vpc_security_group_ids = [

"sg-0e6cdaaea796e7bbd",

]

capacity_reservation_specification {

capacity_reservation_preference = "open"

}

cpu_options {

core_count = 1

threads_per_core = 1

}

credit_specification {

cpu_credits = "standard"

}

enclave_options {

enabled = false

}

maintenance_options {

auto_recovery = "default"

}

metadata_options {

http_endpoint = "enabled"

http_protocol_ipv6 = "disabled"

http_put_response_hop_limit = 2

http_tokens = "required"

instance_metadata_tags = "disabled"

}

private_dns_name_options {

enable_resource_name_dns_a_record = false

enable_resource_name_dns_aaaa_record = false

hostname_type = "ip-name"

}

root_block_device {

delete_on_termination = true

device_name = "/dev/sda1"

encrypted = true

iops = 3000

kms_key_id = "arn:aws:kms:ap-northeast-2:1**********9:key/50a438fb-1baf-4094-b63e-706141a94d9a"

tags = {}

tags_all = {}

throughput = 125

volume_id = "vol-0ba34f0d5c67701ab"

volume_size = 8

volume_type = "gp3"

}

}

At this point, it's common to push your version control repository to a remote location for safekeeping.

$ git remote add origin https://github.com/*user*/*repo*.git

$ git push origin main

This core workflow is a loop; the next time you want to make changes, you start the process over from the beginning.

Notice how closely this workflow parallels the process of writing application code or scripts as an individual? This is what we mean when we talk about OpenTofu enabling infrastructure as code.

4. Destroy Resource

❯ tofu destroy -auto-approve

aws_instance.example: Refreshing state... [id=i-0fb9312f745e53dda]

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

- destroy

OpenTofu will perform the following actions:

# aws_instance.example will be destroyed

- resource "aws_instance" "example" {

- ami = "ami-062cf18d655c0b1e8" -> null

- arn = "arn:aws:ec2:ap-northeast-2:1**********9:instance/i-0fb9312f745e53dda" -> null

- associate_public_ip_address = true -> null

- availability_zone = "ap-northeast-2a" -> null

- cpu_core_count = 1 -> null

- cpu_threads_per_core = 1 -> null

- disable_api_stop = false -> null

- disable_api_termination = false -> null

- ebs_optimized = false -> null

- get_password_data = false -> null

- hibernation = false -> null

- id = "i-0fb9312f745e53dda" -> null

- instance_initiated_shutdown_behavior = "stop" -> null

- instance_state = "running" -> null

- instance_type = "t2.micro" -> null

- ipv6_address_count = 0 -> null

- ipv6_addresses = [] -> null

- monitoring = false -> null

- placement_partition_number = 0 -> null

- primary_network_interface_id = "eni-02f793197e2ae9a16" -> null

- private_dns = "ip-172-31-2-130.ap-northeast-2.compute.internal" -> null

- private_ip = "172.31.2.130" -> null

- public_dns = "ec2-54-180-133-99.ap-northeast-2.compute.amazonaws.com" -> null

- public_ip = "54.180.133.99" -> null

- secondary_private_ips = [] -> null

- security_groups = [

- "default",

] -> null

- source_dest_check = true -> null

- subnet_id = "subnet-0dc5a51c96d2db619" -> null

- tags = {

- "Name" = "t101-study"

} -> null

- tags_all = {

- "Name" = "t101-study"

} -> null

- tenancy = "default" -> null

- user_data_replace_on_change = false -> null

- vpc_security_group_ids = [

- "sg-0e6cdaaea796e7bbd",

] -> null

- capacity_reservation_specification {

- capacity_reservation_preference = "open" -> null

}

- cpu_options {

- core_count = 1 -> null

- threads_per_core = 1 -> null

}

- credit_specification {

- cpu_credits = "standard" -> null

}

- enclave_options {

- enabled = false -> null

}

- maintenance_options {

- auto_recovery = "default" -> null

}

- metadata_options {

- http_endpoint = "enabled" -> null

- http_protocol_ipv6 = "disabled" -> null

- http_put_response_hop_limit = 2 -> null

- http_tokens = "required" -> null

- instance_metadata_tags = "disabled" -> null

}

- private_dns_name_options {

- enable_resource_name_dns_a_record = false -> null

- enable_resource_name_dns_aaaa_record = false -> null

- hostname_type = "ip-name" -> null

}

- root_block_device {

- delete_on_termination = true -> null

- device_name = "/dev/sda1" -> null

- encrypted = true -> null

- iops = 3000 -> null

- kms_key_id = "arn:aws:kms:ap-northeast-2:1**********9:key/50a438fb-1baf-4094-b63e-706141a94d9a" -> null

- tags = {} -> null

- tags_all = {} -> null

- throughput = 125 -> null

- volume_id = "vol-0ba34f0d5c67701ab" -> null

- volume_size = 8 -> null

- volume_type = "gp3" -> null

}

}

Plan: 0 to add, 0 to change, 1 to destroy.

aws_instance.example: Destroying... [id=i-0fb9312f745e53dda]

aws_instance.example: Still destroying... [id=i-0fb9312f745e53dda, 10s elapsed]

...

aws_instance.example: Destruction complete after 51s

Destroy complete! Resources: 1 destroyed.

Working as a Team

Once multiple people are collaborating on OpenTofu configuration, new steps must be added to each part of the core workflow to ensure everyone is working together smoothly. You'll see that many of these steps parallel the workflow changes we make when we work on application code as teams rather than as individuals.

1. Write

While each individual on a team still makes changes to OpenTofu configuration in their editor of choice, they save their changes to version control branches to avoid colliding with each other's work. Working in branches enables team members to resolve mutually incompatible infrastructure changes using their normal merge conflict workflow.

$ git checkout -b add-load-balancer

Switched to a new branch 'add-load-balancer'

Running iterative plans is still useful as a feedback loop while authoring configuration, though having each team member's computer able to run them becomes more difficult with time. As the team and the infrastructure grows, so does the number of sensitive input variables (e.g. API Keys, SSL Cert Pairs) required to run a plan.

To avoid the burden and the security risk of each team member arranging all sensitive inputs locally, it's common for teams to migrate to a model in which OpenTofu operations are executed in a shared Continuous Integration (CI) environment. The work needed to create such a CI environment is nontrivial, and is outside the scope of this core workflow overview.

This longer iteration cycle of committing changes to version control and then waiting for the CI pipeline to execute is often lengthy enough to prohibit using speculative plans as a feedback loop while authoring individual OpenTofu configuration changes. Speculative plans are still useful before new OpenTofu changes are applied or even merged to the main development branch, however, as we'll see in a minute.
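A non-interactive plan step for such a CI job might look like the sketch below. It is only an outline under stated assumptions: the function name `ci_plan` and the plan file name are illustrative, and sensitive inputs are assumed to be injected by the CI system as environment variables rather than stored in the repository.

```shell
# Sketch of a non-interactive plan step for a CI job.
# "ci_plan" and "tfplan" are illustrative names.
ci_plan() {
  # Signals that OpenTofu runs in automation, which trims interactive hints.
  export TF_IN_AUTOMATION=1
  # -input=false makes the command fail instead of waiting for a prompt.
  tofu init -input=false || return 1
  # -no-color keeps the build log free of terminal escape codes.
  tofu plan -input=false -no-color -out=tfplan
}

# Usage inside a pipeline step:
#   ci_plan
```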

2. Plan

For teams collaborating on infrastructure, OpenTofu's plan output creates an opportunity for team members to review each other's work. This allows the team to ask questions, evaluate risks, and catch mistakes before any potentially harmful changes are made.

The natural place for these reviews to occur is alongside pull requests within version control--the point at which an individual proposes a merge from their working branch to the shared team branch. If team members review proposed config changes alongside speculative plan output, they can evaluate whether the intent of the change is being achieved by the plan.

The problem becomes producing that speculative plan output for the team to review. Some teams that still run OpenTofu locally make a practice that pull requests should include an attached copy of speculative plan output generated by the change author. Others arrange for their CI system to post speculative plan output to pull requests automatically.

In addition to reviewing the plan for the proper expression of its author's intent, the team can also make an evaluation whether they want this change to happen now. For example, if a team notices that a certain change could result in service disruption, they may decide to delay merging its pull request until they can schedule a maintenance window.
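To attach speculative plan output to a pull request, the plan text first has to be turned into something that can be pasted or posted. The sketch below only prepares that text. The helper name `plan_comment_body` is hypothetical, and how the result is posted depends on the CI system and is not shown.

```shell
# Sketch: wrap plan text in a collapsible block for a pull request comment.
# "plan_comment_body" is an illustrative helper that reads the plan on stdin.
plan_comment_body() {
  printf '%s\n' '<details><summary>Speculative plan</summary>' '' '<pre>'
  cat
  printf '%s\n' '</pre>' '' '</details>'
}

# Usage, assuming a plan was saved earlier as "tfplan":
#   tofu show -no-color tfplan | plan_comment_body > plan-comment.md
```

The plan text is passed through unescaped, so output containing HTML-like characters may need extra handling before it is posted.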

3. Apply

Once a pull request has been approved and merged, it's important for the team to review the final concrete plan that's run against the shared team branch and the latest version of the state file.

This plan has the potential to be different than the one reviewed on the pull request due to issues like merge order or recent infrastructural changes. For example, if a manual change was made to your infrastructure since the plan was reviewed, the plan might be different when you merge.

It is at this point that the team asks questions about the potential implications of applying the change. Do we expect any service disruption from this change? Is there any part of this change that is high risk? Is there anything in our system that we should be watching as we apply this? Is there anyone we need to notify that this change is happening?

Depending on the change, sometimes team members will want to watch the apply output as it is happening. For teams that are running OpenTofu locally, this may involve a screen share with the team. For teams running OpenTofu in CI, this may involve gathering around the build log.
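Because the final plan can differ from the one reviewed on the pull request, a script can re-plan on the merged branch and report whether anything would change. The sketch below relies on the `-detailed-exitcode` option of `tofu plan`. The function name `final_plan_status` and its status strings are illustrative.

```shell
# Sketch: re-plan on the merged branch and summarize the result.
# With -detailed-exitcode, "tofu plan" exits 0 (no changes),
# 1 (error), or 2 (changes present).
final_plan_status() {
  tofu plan -input=false -detailed-exitcode -out=tfplan >/dev/null
  case $? in
    0) echo "no-changes" ;;
    2) echo "changes-pending" ;;
    *) echo "error"; return 1 ;;
  esac
}

# Usage:
#   status="$(final_plan_status)"
#   [ "$status" = "changes-pending" ] && tofu show tfplan
```

Printing the saved plan with `tofu show` gives the team the concrete plan to review before anyone runs the apply.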

Just like the workflow for individuals, the core workflow for teams is a loop that plays out for each change. For some teams this loop happens a few times a week, for others, many times a day.

Conclusion

There are many different ways to use OpenTofu: as an individual user, a single team, or an entire organization at scale. Choosing the best approach for the density of collaboration needed will provide the most return on your investment in the core OpenTofu workflow. For organizations using OpenTofu at scale, TACOS (TF Automation and Collaboration Software) introduces new layers that build on this core workflow to solve problems unique to teams and organizations.

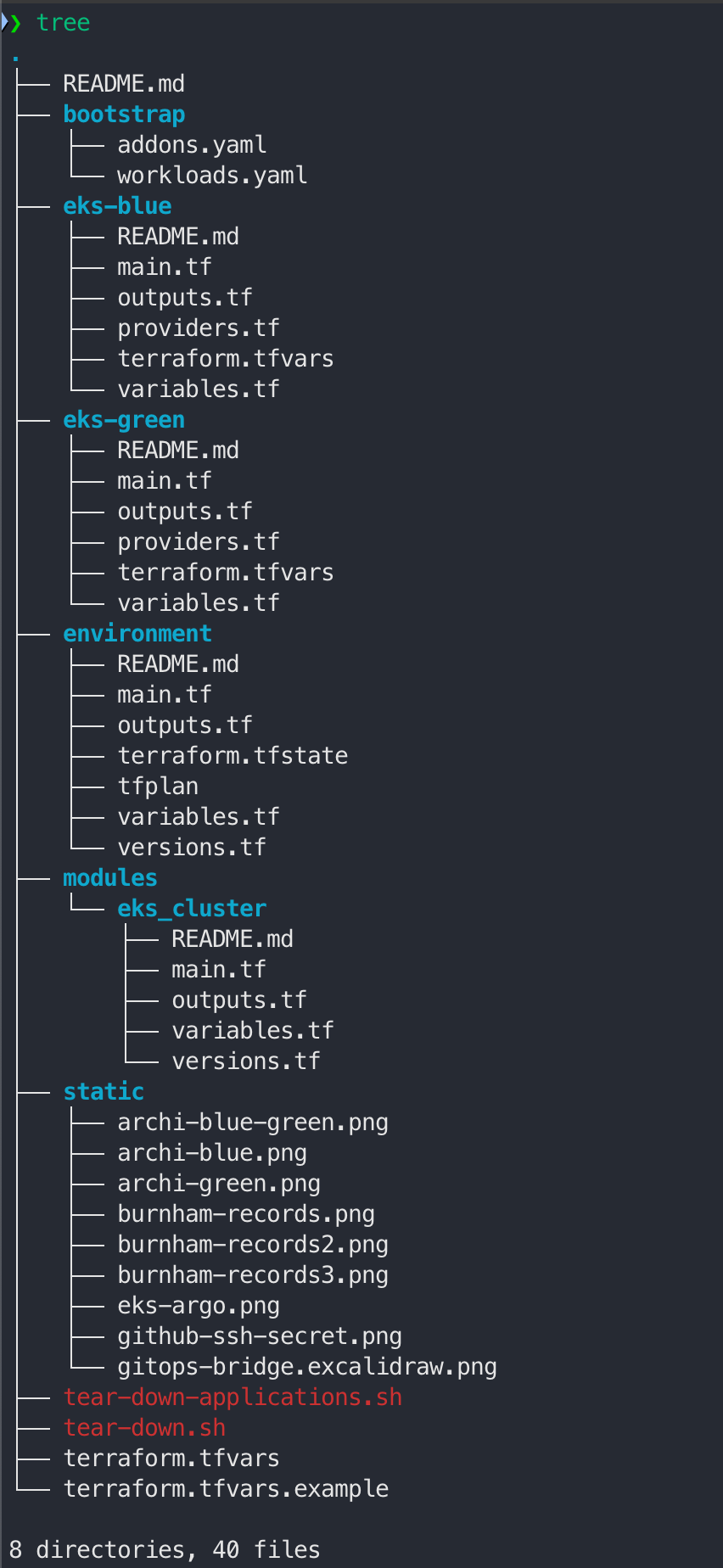

Installing EKS v1.30 with tofu

Terraform code

- Download the source

git clone https://github.com/icebreaker70/eks-blue-green-upgrade.git

Creating the VPC

- Change directory

cd eks-blue-green-upgrade/environment

- Initialize tofu

$ tofu init

Initializing the backend...

Initializing modules...

Downloading registry.opentofu.org/terraform-aws-modules/acm/aws 4.5.0 for acm...

- acm in .terraform/modules/acm

Downloading registry.opentofu.org/terraform-aws-modules/vpc/aws 5.10.0 for vpc...

- vpc in .terraform/modules/vpc

Initializing provider plugins...

- Finding hashicorp/aws versions matching ">= 4.40.0, >= 4.67.0, >= 5.46.0"...

- Finding hashicorp/random versions matching ">= 3.0.0"...

- Installing hashicorp/aws v5.61.0...

- Installed hashicorp/aws v5.61.0 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/random v3.6.2...

- Installed hashicorp/random v3.6.2 (signed, key ID 0C0AF313E5FD9F80)

Providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://opentofu.org/docs/cli/plugins/signing/

OpenTofu has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that OpenTofu can guarantee to make the same selections by default when

you run "tofu init" in the future.

OpenTofu has been successfully initialized!

You may now begin working with OpenTofu. Try running "tofu plan" to see

any changes that are required for your infrastructure. All OpenTofu commands

should now work.

If you ever set or change modules or backend configuration for OpenTofu,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- tofu plan

$ tofu plan -out tfplan

data.aws_route53_zone.root: Reading...

data.aws_availability_zones.available: Reading...

data.aws_availability_zones.available: Read complete after 0s [id=ap-northeast-2]

data.aws_route53_zone.root: Read complete after 2s [id=Z0703281374FZDJKGMOKK]

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

OpenTofu will perform the following actions:

# aws_route53_record.ns will be created

+ resource "aws_route53_record" "ns" {

...

# aws_route53_zone.sub will be created

+ resource "aws_route53_zone" "sub" {

# aws_secretsmanager_secret.argocd will be created

+ resource "aws_secretsmanager_secret" "argocd" {

...

# aws_secretsmanager_secret_version.argocd will be created

+ resource "aws_secretsmanager_secret_version" "argocd" {

...

# random_password.argocd will be created

+ resource "random_password" "argocd" {

...

# module.acm.aws_acm_certificate.this[0] will be created

+ resource "aws_acm_certificate" "this" {

...

# module.acm.aws_acm_certificate_validation.this[0] will be created

+ resource "aws_acm_certificate_validation" "this" {

+ certificate_arn = (known after apply)

+ id = (known after apply)

+ validation_record_fqdns = (known after apply)

+ timeouts {}

}

# module.acm.aws_route53_record.validation[0] will be created

+ resource "aws_route53_record" "validation" {

+ allow_overwrite = true

+ fqdn = (known after apply)

+ id = (known after apply)

+ name = (known after apply)

+ records = (known after apply)

+ ttl = 60

+ type = (known after apply)

+ zone_id = (known after apply)

}

# module.vpc.aws_default_network_acl.this[0] will be created

+ resource "aws_default_network_acl" "this" {

...

# module.vpc.aws_default_route_table.default[0] will be created

+ resource "aws_default_route_table" "default" {

+ arn = (known after apply)

+ default_route_table_id = (known after apply)

+ id = (known after apply)

+ owner_id = (known after apply)

+ route = (known after apply)

+ tags = {

+ "Blueprint" = "t101-eks-blueprint"

+ "GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

+ "Name" = "t101-eks-blueprint-default"

}

+ tags_all = {

+ "Blueprint" = "t101-eks-blueprint"

+ "GithubRepo" = "github.com/aws-ia/terraform-aws-eks-blueprints"

+ "Name" = "t101-eks-blueprint-default"

}

+ vpc_id = (known after apply)

+ timeouts {

+ create = "5m"

+ update = "5m"

}

}

# module.vpc.aws_default_security_group.this[0] will be created

+ resource "aws_default_security_group" "this" {

...

# module.vpc.aws_eip.nat[0] will be created

+ resource "aws_eip" "nat" {

...

# module.vpc.aws_internet_gateway.this[0] will be created

+ resource "aws_internet_gateway" "this" {

...

# module.vpc.aws_nat_gateway.this[0] will be created

+ resource "aws_nat_gateway" "this" {

...

# module.vpc.aws_route.private_nat_gateway[0] will be created

+ resource "aws_route" "private_nat_gateway" {

...

# module.vpc.aws_route.public_internet_gateway[0] will be created

+ resource "aws_route" "public_internet_gateway" {

...

# module.vpc.aws_route_table.private[0] will be created

+ resource "aws_route_table" "private" {

...

# module.vpc.aws_route_table.public[0] will be created

+ resource "aws_route_table" "public" {

...

# module.vpc.aws_route_table_association.private[0] will be created

+ resource "aws_route_table_association" "private" {

...

# module.vpc.aws_route_table_association.private[1] will be created

+ resource "aws_route_table_association" "private" {

...

# module.vpc.aws_route_table_association.private[2] will be created

+ resource "aws_route_table_association" "private" {

...

# module.vpc.aws_route_table_association.public[0] will be created

+ resource "aws_route_table_association" "public" {

...

# module.vpc.aws_route_table_association.public[1] will be created

+ resource "aws_route_table_association" "public" {

...

# module.vpc.aws_route_table_association.public[2] will be created

+ resource "aws_route_table_association" "public" {

...

# module.vpc.aws_subnet.private[0] will be created

+ resource "aws_subnet" "private" {

...

# module.vpc.aws_subnet.private[1] will be created

+ resource "aws_subnet" "private" {

...

# module.vpc.aws_subnet.private[2] will be created

+ resource "aws_subnet" "private" {

...

# module.vpc.aws_subnet.public[0] will be created

+ resource "aws_subnet" "public" {

...

# module.vpc.aws_subnet.public[1] will be created

+ resource "aws_subnet" "public" {

...

# module.vpc.aws_subnet.public[2] will be created

+ resource "aws_subnet" "public" {

...

# module.vpc.aws_vpc.this[0] will be created

+ resource "aws_vpc" "this" {

...

Plan: 31 to add, 0 to change, 0 to destroy.

Changes to Outputs:

+ aws_acm_certificate_status = (known after apply)

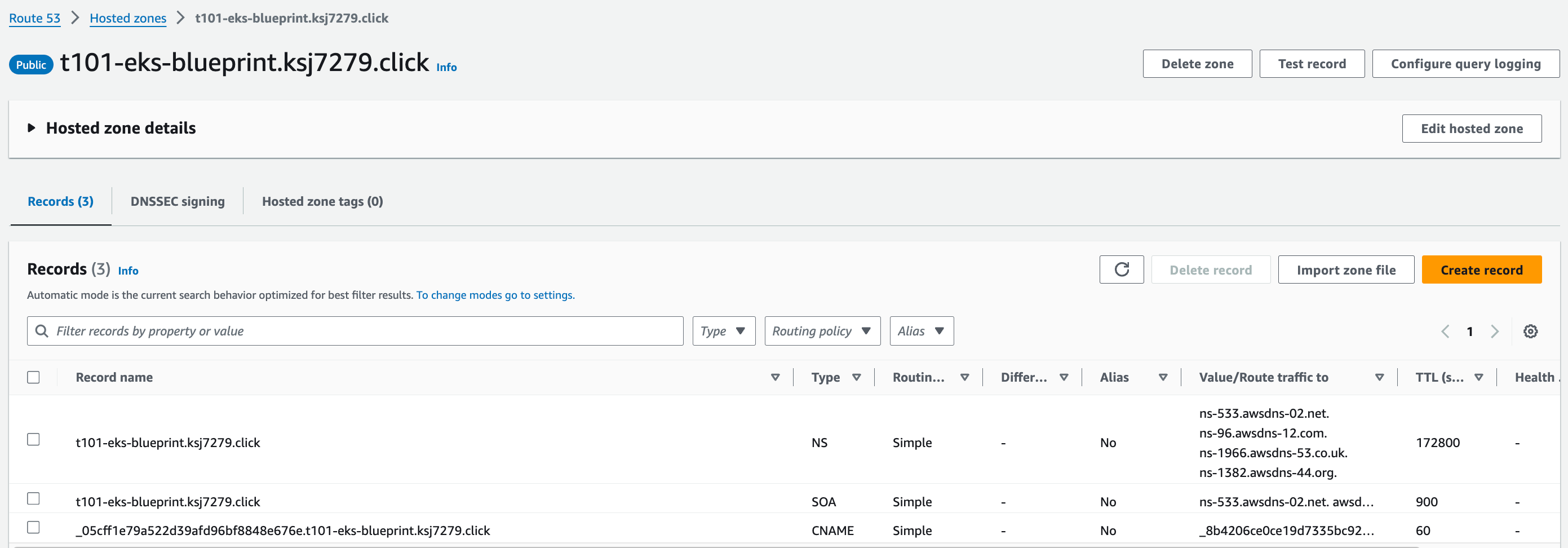

+ aws_route53_zone = "t101-eks-blueprint.ksj7279.click"

+ vpc_id = (known after apply)

───────────────────────────────────────────────────────────────────────────

Saved the plan to: tfplan

To perform exactly these actions, run the following command to apply:

tofu apply "tfplan"

- tofu apply

$ tofu apply tfplan

random_password.argocd: Creating...

random_password.argocd: Creation complete after 0s [id=none]

aws_route53_zone.sub: Creating...

aws_secretsmanager_secret.argocd: Creating...

module.acm.aws_acm_certificate.this[0]: Creating...

module.vpc.aws_vpc.this[0]: Creating...

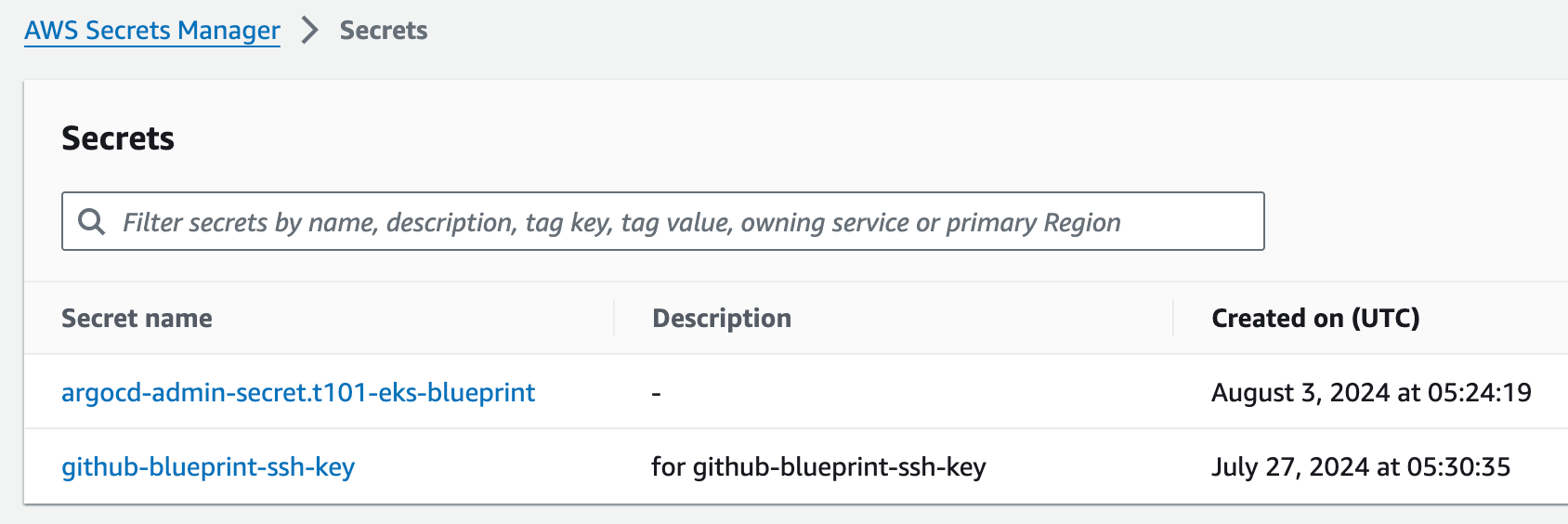

aws_secretsmanager_secret.argocd: Creation complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:argocd-admin-secret.t101-eks-blueprint-CVR6HE]

aws_secretsmanager_secret_version.argocd: Creating...

aws_secretsmanager_secret_version.argocd: Creation complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:argocd-admin-secret.t101-eks-blueprint-CVR6HE|terraform-20240803052419249800000004]

module.acm.aws_acm_certificate.this[0]: Creation complete after 6s [id=arn:aws:acm:ap-northeast-2:538558617837:certificate/4e657b65-a098-43e6-b204-56e859820516]

aws_route53_zone.sub: Still creating... [10s elapsed]

module.vpc.aws_vpc.this[0]: Still creating... [10s elapsed]

module.vpc.aws_vpc.this[0]: Creation complete after 11s [id=vpc-05b4be7d3c1dd1a8c]

module.vpc.aws_subnet.private[1]: Creating...

module.vpc.aws_subnet.public[2]: Creating...

module.vpc.aws_subnet.private[0]: Creating...

module.vpc.aws_route_table.private[0]: Creating...

module.vpc.aws_subnet.private[2]: Creating...

module.vpc.aws_default_security_group.this[0]: Creating...

module.vpc.aws_route_table.public[0]: Creating...

module.vpc.aws_default_network_acl.this[0]: Creating...

module.vpc.aws_internet_gateway.this[0]: Creating...

module.vpc.aws_internet_gateway.this[0]: Creation complete after 1s [id=igw-031c48fd896d5d11a]

module.vpc.aws_subnet.public[1]: Creating...

module.vpc.aws_route_table.public[0]: Creation complete after 1s [id=rtb-0c80088069eaa2784]

module.vpc.aws_subnet.public[0]: Creating...

module.vpc.aws_subnet.public[2]: Creation complete after 1s [id=subnet-06627f3add85ebd7c]

module.vpc.aws_default_route_table.default[0]: Creating...

module.vpc.aws_subnet.private[0]: Creation complete after 1s [id=subnet-01fe1645a681d5fc0]

module.vpc.aws_eip.nat[0]: Creating...

module.vpc.aws_route_table.private[0]: Creation complete after 1s [id=rtb-0dd3cb90c3e201364]

module.vpc.aws_route.public_internet_gateway[0]: Creating...

module.vpc.aws_subnet.private[1]: Creation complete after 1s [id=subnet-03500a596603dad59]

module.vpc.aws_subnet.private[2]: Creation complete after 1s [id=subnet-0cf1aed9073e71533]

module.vpc.aws_route_table_association.private[2]: Creating...

module.vpc.aws_route_table_association.private[0]: Creating...

module.vpc.aws_default_route_table.default[0]: Creation complete after 0s [id=rtb-03b8b0e63e49b0450]

module.vpc.aws_route_table_association.private[1]: Creating...

module.vpc.aws_default_network_acl.this[0]: Creation complete after 2s [id=acl-042f031a8689d38f8]

module.vpc.aws_route_table_association.private[0]: Creation complete after 1s [id=rtbassoc-0bf40a59075f14937]

module.vpc.aws_route_table_association.private[2]: Creation complete after 1s [id=rtbassoc-0952ceef8d61d6834]

module.vpc.aws_route.public_internet_gateway[0]: Creation complete after 1s [id=r-rtb-0c80088069eaa27841080289494]

module.vpc.aws_route_table_association.private[1]: Creation complete after 1s [id=rtbassoc-0a2987afd90d6a230]

module.vpc.aws_default_security_group.this[0]: Creation complete after 2s [id=sg-01b7e5a68a48f461b]

module.vpc.aws_subnet.public[0]: Creation complete after 1s [id=subnet-0aa2100c4e165c818]

module.vpc.aws_subnet.public[1]: Creation complete after 1s [id=subnet-03952b0e382514595]

module.vpc.aws_route_table_association.public[2]: Creating...

module.vpc.aws_route_table_association.public[0]: Creating...

module.vpc.aws_route_table_association.public[1]: Creating...

module.vpc.aws_eip.nat[0]: Creation complete after 1s [id=eipalloc-0a9622edcc225903d]

module.vpc.aws_nat_gateway.this[0]: Creating...

module.vpc.aws_route_table_association.public[0]: Creation complete after 0s [id=rtbassoc-085b1f5b0fbf5a303]

module.vpc.aws_route_table_association.public[1]: Creation complete after 0s [id=rtbassoc-013de568fb0fd86a7]

module.vpc.aws_route_table_association.public[2]: Creation complete after 0s [id=rtbassoc-07e3a7b4e1dd51306]

aws_route53_zone.sub: Still creating... [20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [10s elapsed]

aws_route53_zone.sub: Still creating... [30s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [20s elapsed]

aws_route53_zone.sub: Still creating... [40s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [30s elapsed]

aws_route53_zone.sub: Creation complete after 45s [id=Z026501333WN58PFHTHGV]

aws_route53_record.ns: Creating...

module.acm.aws_route53_record.validation[0]: Creating...

module.vpc.aws_nat_gateway.this[0]: Still creating... [40s elapsed]

aws_route53_record.ns: Still creating... [10s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [10s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [50s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [20s elapsed]

aws_route53_record.ns: Still creating... [20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m0s elapsed]

aws_route53_record.ns: Still creating... [30s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [30s elapsed]

aws_route53_record.ns: Creation complete after 31s [id=Z0703281374FZDJKGMOKK_t101-eks-blueprint.ksj7279.click_NS]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m10s elapsed]

module.acm.aws_route53_record.validation[0]: Still creating... [40s elapsed]

module.acm.aws_route53_record.validation[0]: Creation complete after 41s [id=Z026501333WN58PFHTHGV__05cff1e79a522d39afd96bf8848e676e.t101-eks-blueprint.ksj7279.click._CNAME]

module.acm.aws_acm_certificate_validation.this[0]: Creating...

module.acm.aws_acm_certificate_validation.this[0]: Creation complete after 1s [id=2024-08-03 05:25:40.948 +0000 UTC]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m20s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m30s elapsed]

module.vpc.aws_nat_gateway.this[0]: Still creating... [1m40s elapsed]

module.vpc.aws_nat_gateway.this[0]: Creation complete after 1m44s [id=nat-0e93f0bb708b876ac]

module.vpc.aws_route.private_nat_gateway[0]: Creating...

module.vpc.aws_route.private_nat_gateway[0]: Creation complete after 0s [id=r-rtb-0dd3cb90c3e2013641080289494]

Apply complete! Resources: 31 added, 0 changed, 0 destroyed.

Outputs:

aws_acm_certificate_status = "PENDING_VALIDATION"

aws_route53_zone = "t101-eks-blueprint.ksj7279.click"

vpc_id = "vpc-05b4be7d3c1dd1a8c"

- tofu state list

$ tofu state list

data.aws_availability_zones.available

data.aws_route53_zone.root

aws_route53_record.ns

aws_route53_zone.sub

aws_secretsmanager_secret.argocd

aws_secretsmanager_secret_version.argocd

random_password.argocd

module.acm.aws_acm_certificate.this[0]

module.acm.aws_acm_certificate_validation.this[0]

module.acm.aws_route53_record.validation[0]

module.vpc.aws_default_network_acl.this[0]

module.vpc.aws_default_route_table.default[0]

module.vpc.aws_default_security_group.this[0]

module.vpc.aws_eip.nat[0]

module.vpc.aws_internet_gateway.this[0]

module.vpc.aws_nat_gateway.this[0]

module.vpc.aws_route.private_nat_gateway[0]

module.vpc.aws_route.public_internet_gateway[0]

module.vpc.aws_route_table.private[0]

module.vpc.aws_route_table.public[0]

module.vpc.aws_route_table_association.private[0]

module.vpc.aws_route_table_association.private[1]

module.vpc.aws_route_table_association.private[2]

module.vpc.aws_route_table_association.public[0]

module.vpc.aws_route_table_association.public[1]

module.vpc.aws_route_table_association.public[2]

module.vpc.aws_subnet.private[0]

module.vpc.aws_subnet.private[1]

module.vpc.aws_subnet.private[2]

module.vpc.aws_subnet.public[0]

module.vpc.aws_subnet.public[1]

module.vpc.aws_subnet.public[2]

module.vpc.aws_vpc.this[0]

- Environment resource creation results

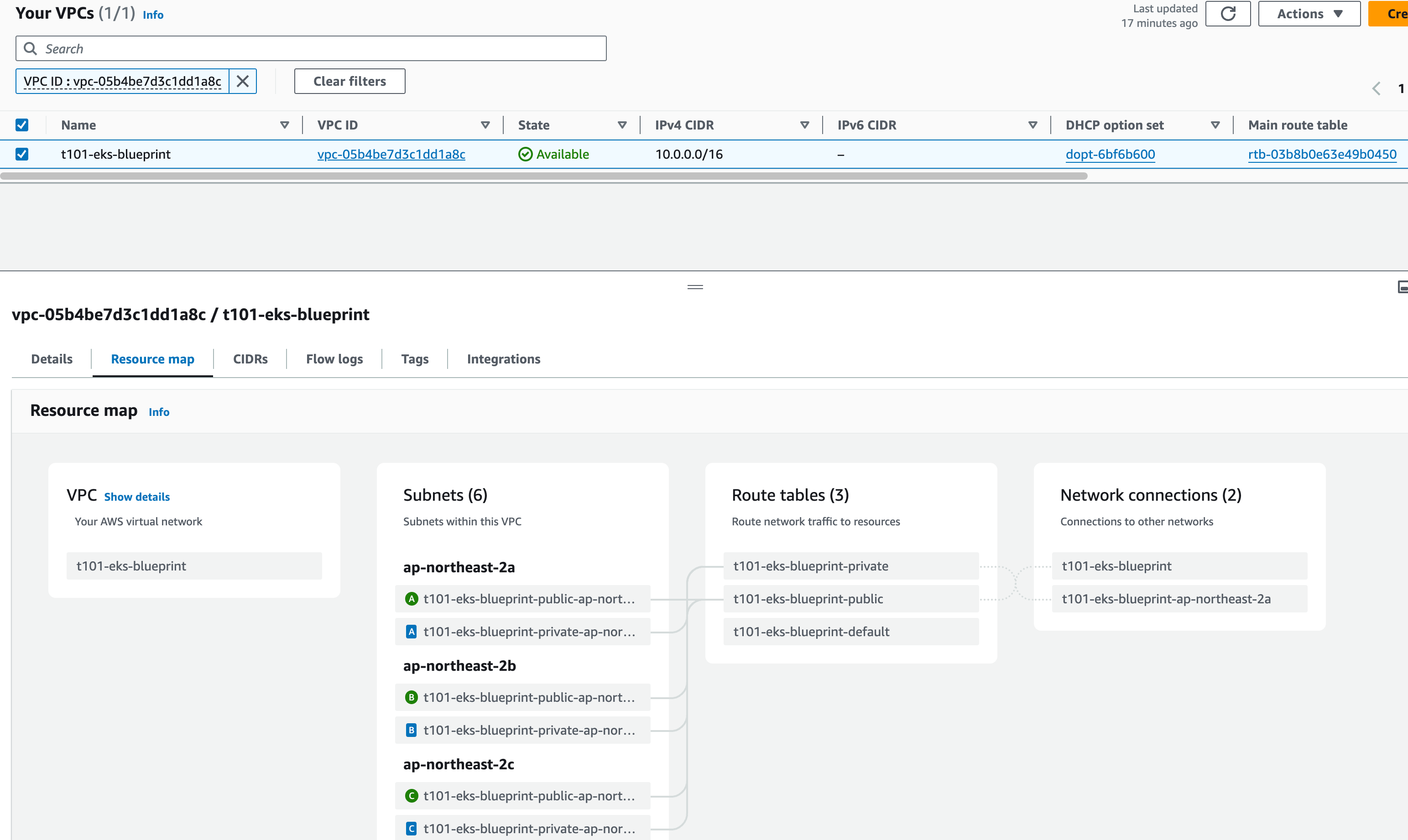

VPC

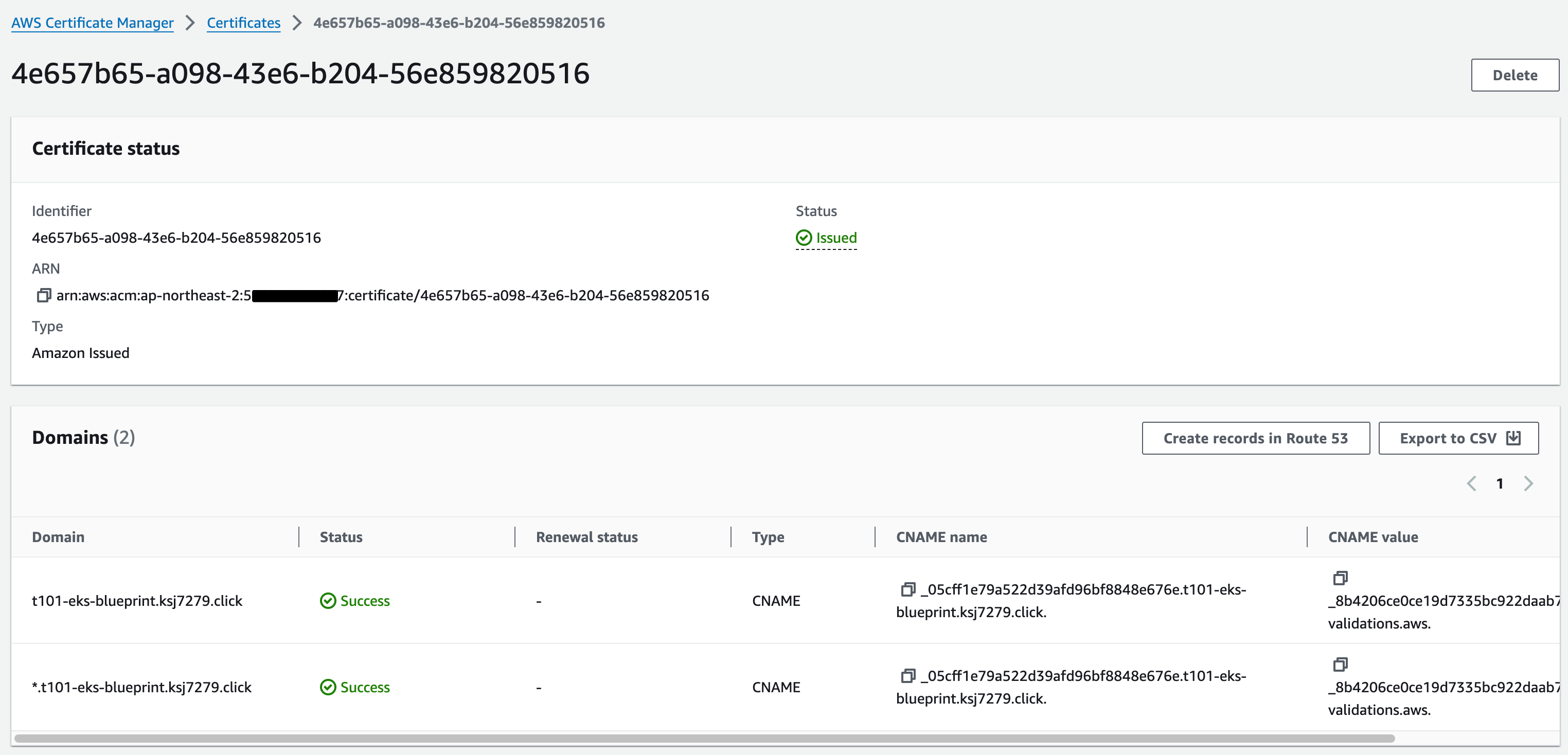

ACM

Route 53

Secrets Manager

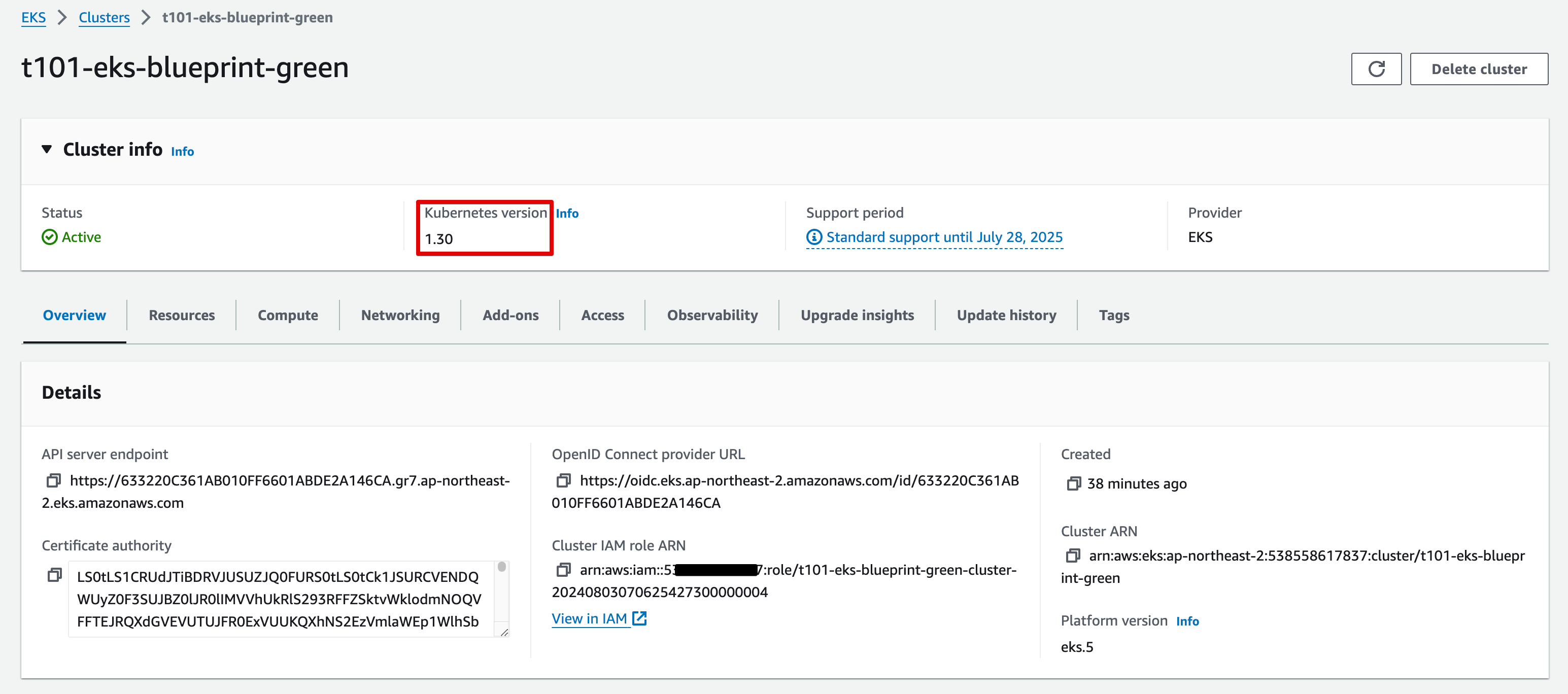

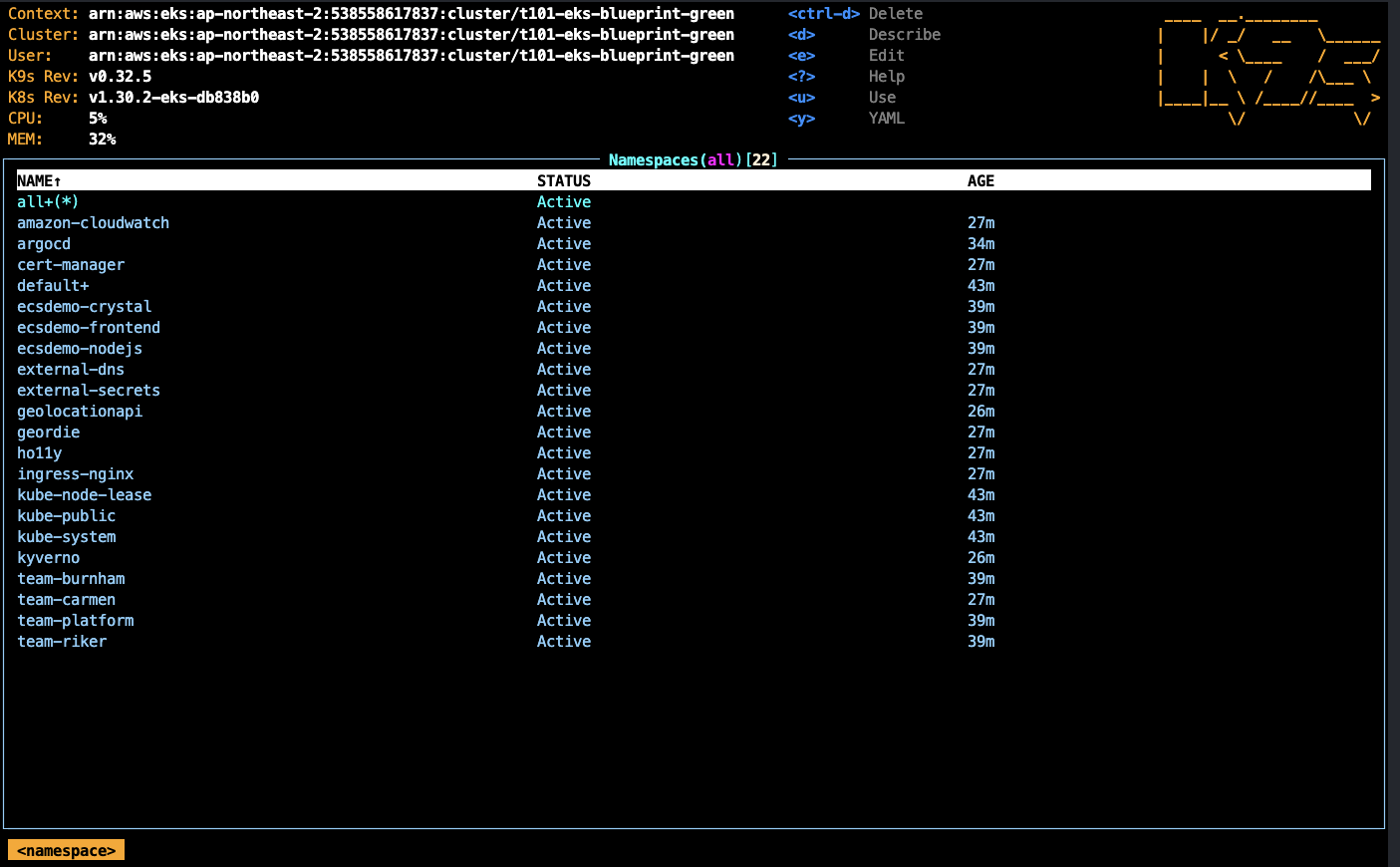
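The `tofu state list` output above can be cross-checked against the `Apply complete! Resources: 31 added` line. The following is a minimal sketch, not part of the original lab: `summarize_state` is a hypothetical helper name, and it assumes module instance keys contain no dots. It skips data sources, since they are lookups rather than created resources, and tallies the remaining addresses per top-level module.

```shell
# Hypothetical helper (not from the original post): tally managed resources
# per top-level module from `tofu state list` output read on stdin.
summarize_state() {
  # Drop data sources (root-level "data." and nested ".data.") because they
  # are not counted in "Resources: N added".
  grep -Ev '(^|\.)data\.' |
    awk -F. '
      {
        # Addresses look like "module.<name>.<type>.<name>" or "<type>.<name>".
        key = ($1 == "module") ? ("module." $2) : "(root)"
        count[key]++
        total++
      }
      END {
        for (k in count) print k, count[k]
        print "total", total + 0
      }' |
    LC_ALL=C sort
}
```

Usage would be `tofu state list | summarize_state`. For the state listed above, this should report 5 root resources, 3 in `module.acm`, and 23 in `module.vpc`, for a total of 31, which matches the apply summary.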

Creating EKS v1.30

- Change directory

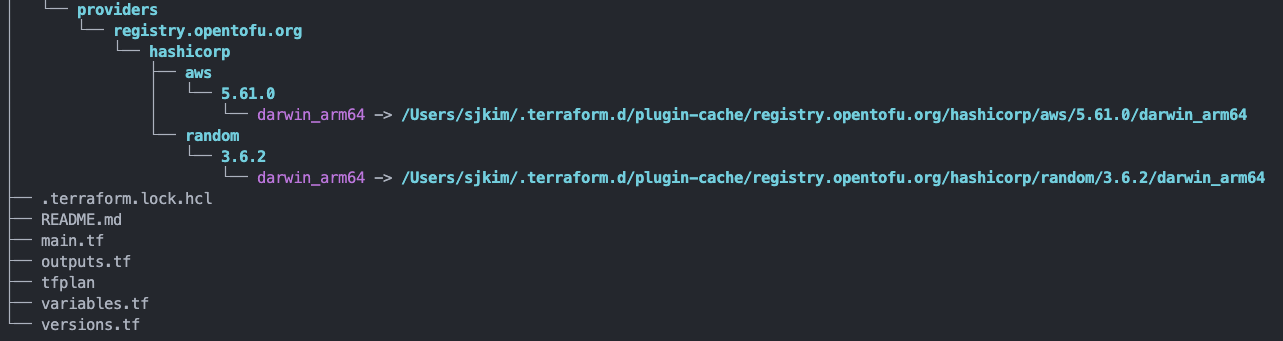

$ cd eks-blue-green-upgrade/eks-green

- Initialize tofu

$ tofu init

Initializing the backend...

Initializing modules...

- eks_cluster in ../modules/eks_cluster

Downloading registry.opentofu.org/terraform-aws-modules/iam/aws 5.42.0 for eks_cluster.ebs_csi_driver_irsa...

- eks_cluster.ebs_csi_driver_irsa in .terraform/modules/eks_cluster.ebs_csi_driver_irsa/modules/iam-role-for-service-accounts-eks

Downloading registry.opentofu.org/terraform-aws-modules/eks/aws 19.15.4 for eks_cluster.eks...

- eks_cluster.eks in .terraform/modules/eks_cluster.eks

- eks_cluster.eks.eks_managed_node_group in .terraform/modules/eks_cluster.eks/modules/eks-managed-node-group

- eks_cluster.eks.eks_managed_node_group.user_data in .terraform/modules/eks_cluster.eks/modules/_user_data

- eks_cluster.eks.fargate_profile in .terraform/modules/eks_cluster.eks/modules/fargate-profile

Downloading registry.opentofu.org/terraform-aws-modules/kms/aws 1.1.0 for eks_cluster.eks.kms...

- eks_cluster.eks.kms in .terraform/modules/eks_cluster.eks.kms

- eks_cluster.eks.self_managed_node_group in .terraform/modules/eks_cluster.eks/modules/self-managed-node-group

- eks_cluster.eks.self_managed_node_group.user_data in .terraform/modules/eks_cluster.eks/modules/_user_data

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addons/aws 1.16.3 for eks_cluster.eks_blueprints_addons...

- eks_cluster.eks_blueprints_addons in .terraform/modules/eks_cluster.eks_blueprints_addons

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.argo_events...

- eks_cluster.eks_blueprints_addons.argo_events in .terraform/modules/eks_cluster.eks_blueprints_addons.argo_events

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.argo_rollouts...

- eks_cluster.eks_blueprints_addons.argo_rollouts in .terraform/modules/eks_cluster.eks_blueprints_addons.argo_rollouts

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.argo_workflows...

- eks_cluster.eks_blueprints_addons.argo_workflows in .terraform/modules/eks_cluster.eks_blueprints_addons.argo_workflows

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.argocd...

- eks_cluster.eks_blueprints_addons.argocd in .terraform/modules/eks_cluster.eks_blueprints_addons.argocd

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_cloudwatch_metrics...

- eks_cluster.eks_blueprints_addons.aws_cloudwatch_metrics in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_cloudwatch_metrics

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_efs_csi_driver...

- eks_cluster.eks_blueprints_addons.aws_efs_csi_driver in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_efs_csi_driver

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_for_fluentbit...

- eks_cluster.eks_blueprints_addons.aws_for_fluentbit in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_for_fluentbit

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_fsx_csi_driver...

- eks_cluster.eks_blueprints_addons.aws_fsx_csi_driver in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_fsx_csi_driver

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_gateway_api_controller...

- eks_cluster.eks_blueprints_addons.aws_gateway_api_controller in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_gateway_api_controller

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_load_balancer_controller...

- eks_cluster.eks_blueprints_addons.aws_load_balancer_controller in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_load_balancer_controller

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_node_termination_handler...

- eks_cluster.eks_blueprints_addons.aws_node_termination_handler in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_node_termination_handler

Downloading registry.opentofu.org/terraform-aws-modules/sqs/aws 4.0.1 for eks_cluster.eks_blueprints_addons.aws_node_termination_handler_sqs...

- eks_cluster.eks_blueprints_addons.aws_node_termination_handler_sqs in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_node_termination_handler_sqs

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.aws_privateca_issuer...

- eks_cluster.eks_blueprints_addons.aws_privateca_issuer in .terraform/modules/eks_cluster.eks_blueprints_addons.aws_privateca_issuer

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.bottlerocket_shadow...

- eks_cluster.eks_blueprints_addons.bottlerocket_shadow in .terraform/modules/eks_cluster.eks_blueprints_addons.bottlerocket_shadow

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.bottlerocket_update_operator...

- eks_cluster.eks_blueprints_addons.bottlerocket_update_operator in .terraform/modules/eks_cluster.eks_blueprints_addons.bottlerocket_update_operator

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.cert_manager...

- eks_cluster.eks_blueprints_addons.cert_manager in .terraform/modules/eks_cluster.eks_blueprints_addons.cert_manager

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.cluster_autoscaler...

- eks_cluster.eks_blueprints_addons.cluster_autoscaler in .terraform/modules/eks_cluster.eks_blueprints_addons.cluster_autoscaler

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.cluster_proportional_autoscaler...

- eks_cluster.eks_blueprints_addons.cluster_proportional_autoscaler in .terraform/modules/eks_cluster.eks_blueprints_addons.cluster_proportional_autoscaler

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.external_dns...

- eks_cluster.eks_blueprints_addons.external_dns in .terraform/modules/eks_cluster.eks_blueprints_addons.external_dns

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.external_secrets...

- eks_cluster.eks_blueprints_addons.external_secrets in .terraform/modules/eks_cluster.eks_blueprints_addons.external_secrets

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.gatekeeper...

- eks_cluster.eks_blueprints_addons.gatekeeper in .terraform/modules/eks_cluster.eks_blueprints_addons.gatekeeper

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.ingress_nginx...

- eks_cluster.eks_blueprints_addons.ingress_nginx in .terraform/modules/eks_cluster.eks_blueprints_addons.ingress_nginx

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.karpenter...

- eks_cluster.eks_blueprints_addons.karpenter in .terraform/modules/eks_cluster.eks_blueprints_addons.karpenter

Downloading registry.opentofu.org/terraform-aws-modules/sqs/aws 4.0.1 for eks_cluster.eks_blueprints_addons.karpenter_sqs...

- eks_cluster.eks_blueprints_addons.karpenter_sqs in .terraform/modules/eks_cluster.eks_blueprints_addons.karpenter_sqs

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.kube_prometheus_stack...

- eks_cluster.eks_blueprints_addons.kube_prometheus_stack in .terraform/modules/eks_cluster.eks_blueprints_addons.kube_prometheus_stack

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.metrics_server...

- eks_cluster.eks_blueprints_addons.metrics_server in .terraform/modules/eks_cluster.eks_blueprints_addons.metrics_server

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.secrets_store_csi_driver...

- eks_cluster.eks_blueprints_addons.secrets_store_csi_driver in .terraform/modules/eks_cluster.eks_blueprints_addons.secrets_store_csi_driver

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.secrets_store_csi_driver_provider_aws...

- eks_cluster.eks_blueprints_addons.secrets_store_csi_driver_provider_aws in .terraform/modules/eks_cluster.eks_blueprints_addons.secrets_store_csi_driver_provider_aws

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.velero...

- eks_cluster.eks_blueprints_addons.velero in .terraform/modules/eks_cluster.eks_blueprints_addons.velero

Downloading registry.opentofu.org/aws-ia/eks-blueprints-addon/aws 1.1.1 for eks_cluster.eks_blueprints_addons.vpa...

- eks_cluster.eks_blueprints_addons.vpa in .terraform/modules/eks_cluster.eks_blueprints_addons.vpa

Downloading registry.opentofu.org/aws-ia/eks-blueprints-teams/aws 1.1.0 for eks_cluster.eks_blueprints_dev_teams...

- eks_cluster.eks_blueprints_dev_teams in .terraform/modules/eks_cluster.eks_blueprints_dev_teams

Downloading registry.opentofu.org/aws-ia/eks-blueprints-teams/aws 1.1.0 for eks_cluster.eks_blueprints_ecsdemo_teams...

- eks_cluster.eks_blueprints_ecsdemo_teams in .terraform/modules/eks_cluster.eks_blueprints_ecsdemo_teams

Downloading registry.opentofu.org/aws-ia/eks-blueprints-teams/aws 1.1.0 for eks_cluster.eks_blueprints_platform_teams...

- eks_cluster.eks_blueprints_platform_teams in .terraform/modules/eks_cluster.eks_blueprints_platform_teams

Downloading git::https://github.com/gitops-bridge-dev/gitops-bridge-argocd-bootstrap-terraform.git?ref=v2.0.0 for eks_cluster.gitops_bridge_bootstrap...

- eks_cluster.gitops_bridge_bootstrap in .terraform/modules/eks_cluster.gitops_bridge_bootstrap

Downloading registry.opentofu.org/terraform-aws-modules/iam/aws 5.42.0 for eks_cluster.vpc_cni_irsa...

- eks_cluster.vpc_cni_irsa in .terraform/modules/eks_cluster.vpc_cni_irsa/modules/iam-role-for-service-accounts-eks

Initializing provider plugins...

- Finding hashicorp/aws versions matching ">= 3.72.0, >= 4.0.0, >= 4.36.0, >= 4.47.0, >= 4.57.0, >= 5.0.0"...

- Finding hashicorp/kubernetes versions matching ">= 2.10.0, >= 2.17.0, >= 2.20.0, >= 2.22.0, 2.22.0"...

- Finding hashicorp/helm versions matching ">= 2.9.0, >= 2.10.1"...

- Finding hashicorp/tls versions matching ">= 3.0.0"...

- Finding hashicorp/time versions matching ">= 0.9.0"...

- Finding hashicorp/cloudinit versions matching ">= 2.0.0"...

- Installing hashicorp/aws v5.61.0...

- Installed hashicorp/aws v5.61.0 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/kubernetes v2.22.0...

- Installed hashicorp/kubernetes v2.22.0 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/helm v2.14.0...

- Installed hashicorp/helm v2.14.0 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/tls v4.0.5...

- Installed hashicorp/tls v4.0.5 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/time v0.12.0...

- Installed hashicorp/time v0.12.0 (signed, key ID 0C0AF313E5FD9F80)

- Installing hashicorp/cloudinit v2.3.4...

- Installed hashicorp/cloudinit v2.3.4 (signed, key ID 0C0AF313E5FD9F80)

Providers are signed by their developers.

If you'd like to know more about provider signing, you can read about it here:

https://opentofu.org/docs/cli/plugins/signing/

OpenTofu has created a lock file .terraform.lock.hcl to record the provider

selections it made above. Include this file in your version control repository

so that OpenTofu can guarantee to make the same selections by default when

you run "tofu init" in the future.

OpenTofu has been successfully initialized!

You may now begin working with OpenTofu. Try running "tofu plan" to see

any changes that are required for your infrastructure. All OpenTofu commands

should now work.

If you ever set or change modules or backend configuration for OpenTofu,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

- tofu plan

$ tofu plan -out tfplan

module.eks_cluster.module.vpc_cni_irsa.data.aws_partition.current: Reading...

module.eks_cluster.data.aws_secretsmanager_secret.workload_repo_secret: Reading...

module.eks_cluster.module.vpc_cni_irsa.data.aws_caller_identity.current: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_partition.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_partition.current[0]: Read complete after 0s [id=aws]

module.eks_cluster.module.vpc_cni_irsa.data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_caller_identity.current[0]: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_region.current: Reading...

module.eks_cluster.data.aws_iam_role.eks_admin_role_name[0]: Reading...

module.eks_cluster.module.vpc_cni_irsa.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_region.current: Read complete after 0s [id=ap-northeast-2]

module.eks_cluster.data.aws_vpc.vpc: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_partition.current: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_caller_identity.current[0]: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.data.aws_region.current: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_caller_identity.current: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_partition.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_region.current: Read complete after 0s [id=ap-northeast-2]

module.eks_cluster.module.eks.module.kms.data.aws_caller_identity.current: Reading...

module.eks_cluster.data.aws_secretsmanager_secret.argocd: Reading...

module.eks_cluster.module.eks.module.kms.data.aws_partition.current: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_partition.current[0]: Read complete after 0s [id=aws]

module.eks_cluster.module.eks.module.kms.data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_caller_identity.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.aws_cloudwatch_metrics.data.aws_partition.current[0]: Reading...

module.eks_cluster.data.aws_subnets.public: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.aws_cloudwatch_metrics.data.aws_partition.current[0]: Read complete after 0s [id=aws]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_partition.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_partition.current[0]: Read complete after 0s [id=aws]

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.data.aws_caller_identity.current: Reading...

module.eks_cluster.data.aws_subnets.private: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_secrets[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_secrets[0]: Read complete after 0s [id=2831063531]

module.eks_cluster.module.eks_blueprints_addons.data.aws_partition.current: Reading...

module.eks_cluster.module.eks.module.kms.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.data.aws_caller_identity.current: Reading...

module.eks_cluster.module.eks.data.aws_partition.current: Reading...

module.eks_cluster.module.eks.data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.data.aws_route53_zone.sub: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_caller_identity.current[0]: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_caller_identity.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.vpc_cni_irsa.data.aws_region.current: Reading...

module.eks_cluster.module.vpc_cni_irsa.data.aws_region.current: Read complete after 0s [id=ap-northeast-2]

module.eks_cluster.module.eks_blueprints_addons.module.aws_cloudwatch_metrics.data.aws_caller_identity.current[0]: Reading...

module.eks_cluster.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks.data.aws_caller_identity.current: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.aws_cloudwatch_metrics.data.aws_caller_identity.current[0]: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks.data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_caller_identity.current[0]: Read complete after 0s [id=538558617837]

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.vpc_cni[0]: Reading...

module.eks_cluster.module.vpc_cni_irsa.data.aws_iam_policy_document.vpc_cni[0]: Read complete after 0s [id=1079910255]

module.eks_cluster.module.eks.data.aws_iam_session_context.current: Reading...

module.eks_cluster.module.eks.data.aws_iam_session_context.current: Read complete after 0s [id=arn:aws:iam::538558617837:user/terraform]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.cert_manager[0]: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.ebs_csi[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.cert_manager[0]: Read complete after 0s [id=3416383923]

module.eks_cluster.module.eks.data.aws_iam_policy_document.assume_role_policy[0]: Reading...

module.eks_cluster.module.eks.data.aws_iam_policy_document.assume_role_policy[0]: Read complete after 0s [id=2764486067]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.aws_load_balancer_controller[0]: Reading...

module.eks_cluster.module.ebs_csi_driver_irsa.data.aws_iam_policy_document.ebs_csi[0]: Read complete after 0s [id=212299854]

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.this[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_secrets.data.aws_iam_policy_document.this[0]: Read complete after 0s [id=2831063531]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.aws_load_balancer_controller[0]: Read complete after 0s [id=3712473960]

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.this[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.cert_manager.data.aws_iam_policy_document.this[0]: Read complete after 0s [id=3416383923]

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.this[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.aws_load_balancer_controller.data.aws_iam_policy_document.this[0]: Read complete after 0s [id=3712473960]

module.eks_cluster.data.aws_secretsmanager_secret.workload_repo_secret: Read complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:github-blueprint-ssh-key-vCobzk]

module.eks_cluster.data.aws_secretsmanager_secret_version.workload_repo_secret: Reading...

module.eks_cluster.data.aws_secretsmanager_secret.argocd: Read complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:argocd-admin-secret.t101-eks-blueprint-CVR6HE]

module.eks_cluster.data.aws_secretsmanager_secret_version.admin_password_version: Reading...

module.eks_cluster.data.aws_secretsmanager_secret_version.admin_password_version: Read complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:argocd-admin-secret.t101-eks-blueprint-CVR6HE|AWSCURRENT]

module.eks_cluster.data.aws_secretsmanager_secret_version.workload_repo_secret: Read complete after 0s [id=arn:aws:secretsmanager:ap-northeast-2:538558617837:secret:github-blueprint-ssh-key-vCobzk|AWSCURRENT]

module.eks_cluster.data.aws_subnets.private: Read complete after 0s [id=ap-northeast-2]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_caller_identity.current: Reading...

module.eks_cluster.data.aws_subnets.public: Read complete after 0s [id=ap-northeast-2]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_partition.current: Reading...

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_partition.current: Read complete after 0s [id=aws]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_iam_policy_document.assume_role_policy[0]: Reading...

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_iam_policy_document.assume_role_policy[0]: Read complete after 0s [id=2560088296]

module.eks_cluster.module.eks.module.eks_managed_node_group["initial"].data.aws_caller_identity.current: Read complete after 0s [id=538558617837]

module.eks_cluster.data.aws_vpc.vpc: Read complete after 1s [id=vpc-05b4be7d3c1dd1a8c]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["kube-proxy"]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["vpc-cni"]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["coredns"]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["kube-proxy"]: Read complete after 0s [id=kube-proxy]

module.eks_cluster.data.aws_iam_role.eks_admin_role_name[0]: Read complete after 1s [id=AWSReservedSSO_AWSAdministratorAccess_0a9b1bfd0c855752]

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.this[0]: Reading...

module.eks_cluster.module.eks_blueprints_platform_teams.data.aws_iam_policy_document.this[0]: Read complete after 0s [id=4284527764]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["coredns"]: Read complete after 0s [id=coredns]

module.eks_cluster.module.eks_blueprints_addons.data.aws_eks_addon_version.this["vpc-cni"]: Read complete after 0s [id=vpc-cni]

module.eks_cluster.data.aws_route53_zone.sub: Read complete after 1s [id=Z026501333WN58PFHTHGV]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_caller_identity.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_partition.current[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_dns[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_partition.current[0]: Read complete after 0s [id=aws]

module.eks_cluster.module.eks_blueprints_addons.data.aws_iam_policy_document.external_dns[0]: Read complete after 0s [id=3049371310]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.this[0]: Reading...

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_caller_identity.current[0]: Read complete after 0s [id=538558617837]

module.eks_cluster.module.eks_blueprints_addons.module.external_dns.data.aws_iam_policy_document.this[0]: Read complete after 0s [id=3049371310]

OpenTofu used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:

+ create

<= read (data resources)

OpenTofu will perform the following actions:

# module.eks_cluster.aws_ec2_tag.private_subnets["subnet-01fe1645a681d5fc0"] will be created

+ resource "aws_ec2_tag" "private_subnets" {

...

# module.eks_cluster.aws_ec2_tag.private_subnets["subnet-03500a596603dad59"] will be created

+ resource "aws_ec2_tag" "private_subnets" {

...

# module.eks_cluster.aws_ec2_tag.private_subnets["subnet-0cf1aed9073e71533"] will be created

+ resource "aws_ec2_tag" "private_subnets" {

...

# module.eks_cluster.aws_ec2_tag.public_subnets["subnet-03952b0e382514595"] will be created

+ resource "aws_ec2_tag" "public_subnets" {

...

# module.eks_cluster.aws_ec2_tag.public_subnets["subnet-06627f3add85ebd7c"] will be created

+ resource "aws_ec2_tag" "public_subnets" {

...

# module.eks_cluster.aws_ec2_tag.public_subnets["subnet-0aa2100c4e165c818"] will be created

+ resource "aws_ec2_tag" "public_subnets" {

...

# module.eks_cluster.kubernetes_namespace.argocd will be created

+ resource "kubernetes_namespace" "argocd" {

...

+ resource "kubernetes_secret" "git_secrets" {

...

# module.eks_cluster.kubernetes_secret.git_secrets["git-workloads"] will be created

+ resource "kubernetes_secret" "git_secrets" {

...

# module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_policy.ebs_csi[0] will be created

+ resource "aws_iam_policy" "ebs_csi" {

...

# module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role.this[0] will be created

+ resource "aws_iam_role" "this" {

...

# module.eks_cluster.module.ebs_csi_driver_irsa.aws_iam_role_policy_attachment.ebs_csi[0] will be created

+ resource "aws_iam_role_policy_attachment" "ebs_csi" {

...

...

# module.eks_cluster.module.eks.aws_cloudwatch_log_group.this[0] will be created

+ resource "aws_cloudwatch_log_group" "this" {

...

# module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["Blueprint"] will be created

+ resource "aws_ec2_tag" "cluster_primary_security_group" {

...

# module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["GithubRepo"] will be created

+ resource "aws_ec2_tag" "cluster_primary_security_group" {

...

# module.eks_cluster.module.eks.aws_ec2_tag.cluster_primary_security_group["karpenter.sh/discovery"] will be created

+ resource "aws_ec2_tag" "cluster_primary_security_group" {

...

# module.eks_cluster.module.eks.aws_eks_cluster.this[0] will be created

+ resource "aws_eks_cluster" "this" {

...

# module.eks_cluster.module.eks.aws_iam_openid_connect_provider.oidc_provider[0] will be created

+ resource "aws_iam_openid_connect_provider" "oidc_provider" {

...

# module.eks_cluster.module.eks.aws_iam_policy.cluster_encryption[0] will be created

+ resource "aws_iam_policy" "cluster_encryption" {

...

# module.eks_cluster.module.eks.aws_iam_role.this[0] will be created

+ resource "aws_iam_role" "this" {

...

# module.eks_cluster.module.eks.aws_iam_role_policy_attachment.cluster_encryption[0] will be created

+ resource "aws_iam_role_policy_attachment" "cluster_encryption" {

...

# module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSClusterPolicy"] will be created

+ resource "aws_iam_role_policy_attachment" "this" {

...

# module.eks_cluster.module.eks.aws_iam_role_policy_attachment.this["AmazonEKSVPCResourceController"] will be created

+ resource "aws_iam_role_policy_attachment" "this" {

...

# module.eks_cluster.module.eks.aws_security_group.cluster[0] will be created

+ resource "aws_security_group" "cluster" {

...

# module.eks_cluster.module.eks.kubernetes_config_map_v1_data.aws_auth[0] will be created

+ resource "kubernetes_config_map_v1_data" "aws_auth" {

...

# module.eks_cluster.module.eks.time_sleep.this[0] will be created

+ resource "time_sleep" "this" {

...

# module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["coredns"] will be created

+ resource "aws_eks_addon" "this" {

...

# module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["kube-proxy"] will be created

+ resource "aws_eks_addon" "this" {

...

# module.eks_cluster.module.eks_blueprints_addons.aws_eks_addon.this["vpc-cni"] will be created

+ resource "aws_eks_addon" "this" {

...

# module.eks_cluster.module.eks_blueprints_addons.time_sleep.this will be created

+ resource "time_sleep" "this" {

...

# ...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].aws_iam_role.this[0] will be created

+ resource "aws_iam_role" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_binding_v1.this[0] will be created

+ resource "kubernetes_cluster_role_binding_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_cluster_role_v1.this[0] will be created

+ resource "kubernetes_cluster_role_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_limit_range_v1.this["team-burnham"] will be created

+ resource "kubernetes_limit_range_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_namespace_v1.this["team-burnham"] will be created

+ resource "kubernetes_namespace_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_resource_quota_v1.this["team-burnham"] will be created

+ resource "kubernetes_resource_quota_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_role_binding_v1.this["team-burnham"] will be created

+ resource "kubernetes_role_binding_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["burnham"].kubernetes_service_account_v1.this["team-burnham"] will be created

+ resource "kubernetes_service_account_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].data.aws_iam_policy_document.this[0] will be read during apply

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].aws_iam_role.this[0] will be created

+ resource "aws_iam_role" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_binding_v1.this[0] will be created

+ resource "kubernetes_cluster_role_binding_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_cluster_role_v1.this[0] will be created

+ resource "kubernetes_cluster_role_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_limit_range_v1.this["team-riker"] will be created

+ resource "kubernetes_limit_range_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_namespace_v1.this["team-riker"] will be created

+ resource "kubernetes_namespace_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_resource_quota_v1.this["team-riker"] will be created

+ resource "kubernetes_resource_quota_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_role_binding_v1.this["team-riker"] will be created

+ resource "kubernetes_role_binding_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_dev_teams["riker"].kubernetes_service_account_v1.this["team-riker"] will be created

+ resource "kubernetes_service_account_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].aws_iam_role.this[0] will be created

+ resource "aws_iam_role" "this" {

...

# module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_binding_v1.this[0] will be created

+ resource "kubernetes_cluster_role_binding_v1" "this" {

...

# module.eks_cluster.module.eks_blueprints_ecsdemo_teams["ecsdemo-crystal"].kubernetes_cluster_role_v1.this[0] will be created