Usage

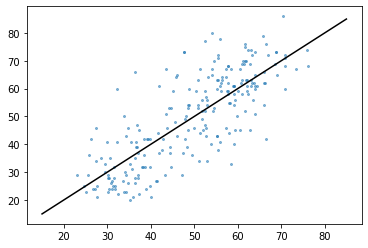

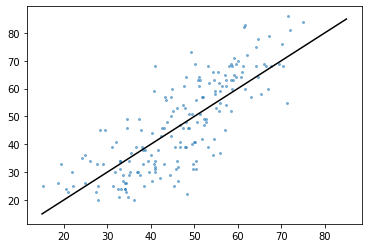

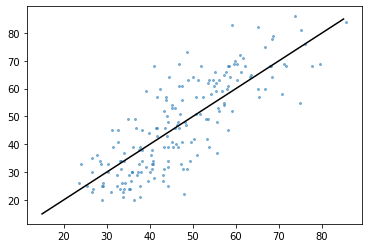

KNeighborsRegressor - man

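# Imports used by the cells in this section (harmless if already imported earlier)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import GridSearchCV, RepeatedKFold, cross_val_predict

from sklearn.neighbors import KNeighborsRegressor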

neigh = KNeighborsRegressor()

param_grid4 = { 'n_neighbors': np.arange(1,50,2)}

grid4 = GridSearchCV(neigh, param_grid = param_grid4,

cv=RepeatedKFold(n_splits=5, n_repeats=5, random_state = 123),

return_train_score=True)

grid4.fit(x_man_train,y_man_train)

print(f"best parameters: {grid4.best_params_}")

print(f"valid-set score: {grid4.score(x_man_valid, y_man_valid):.3f}")

best parameters: {'n_neighbors': 9}

valid-set score: 0.622

neigh = KNeighborsRegressor(n_neighbors = 9)

# Out-of-fold predictions for the man model (the variable was previously misnamed y_woman_pred)

y_man_pred = cross_val_predict(neigh, x_man_train, y_man_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_man_pred, y_man_train, alpha=.5, s=4)

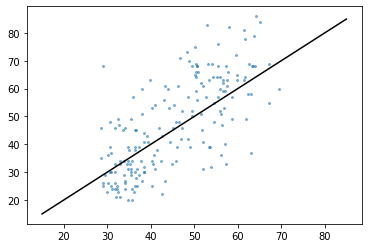
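The search above depends on `x_man_train` and `y_man_train`, which are not defined in this section. As a minimal sketch, the same pattern can be run end to end on synthetic data. The dataset from `make_regression`, the names `X_train`, `X_valid`, `best_k`, and `valid_r2`, and the reduced `n_repeats=2` are assumptions for illustration, not the original setup.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.model_selection import GridSearchCV, RepeatedKFold, train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Synthetic stand-in for the real training data (assumption, not the original dataset).
X, y = make_regression(n_samples=300, n_features=5, noise=10.0, random_state=0)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Same candidate grid as above: odd k from 1 to 49.
param_grid = {"n_neighbors": np.arange(1, 50, 2)}
cv = RepeatedKFold(n_splits=5, n_repeats=2, random_state=123)

grid = GridSearchCV(
    KNeighborsRegressor(), param_grid=param_grid, cv=cv, return_train_score=True
)
grid.fit(X_train, y_train)

best_k = grid.best_params_["n_neighbors"]
# GridSearchCV.score uses the refitted best estimator; for regressors this is R^2.
valid_r2 = grid.score(X_valid, y_valid)
print(f"best n_neighbors: {best_k}, valid-set R^2: {valid_r2:.3f}")
```

Because `refit=True` by default, `grid.score` evaluates a model retrained on the full training set with the best `n_neighbors`, which is what the "valid-set score" lines in this section report.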

KNeighborsRegressor - woman

neigh = KNeighborsRegressor()

param_grid4 = { 'n_neighbors': np.arange(1,50,2)}

grid4 = GridSearchCV(neigh, param_grid = param_grid4,

cv=RepeatedKFold(n_splits=5, n_repeats=5, random_state = 123),

return_train_score=True)

grid4.fit(x_woman_train,y_woman_train)

print(f"best parameters: {grid4.best_params_}")

print(f"valid-set score: {grid4.score(x_woman_valid, y_woman_valid):.3f}")

best parameters: {'n_neighbors': 7}

valid-set score: 0.623

neigh = KNeighborsRegressor(n_neighbors=7)

y_woman_pred = cross_val_predict(neigh, x_woman_train, y_woman_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_woman_pred, y_woman_train, alpha=.5, s=4)

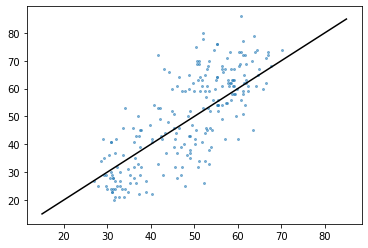

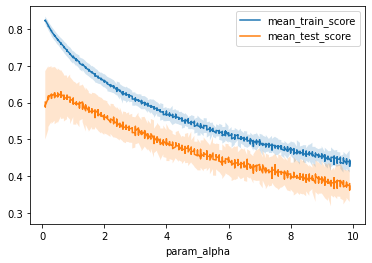

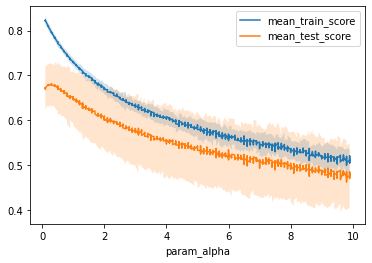

SGDRegressor - man

from sklearn.linear_model import SGDRegressor

sgd = SGDRegressor(max_iter=1000, tol=1e-3)

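# Note: l1_ratio only takes effect when penalty='elasticnet'; with the default penalty='l2' used here it is ignored

param_grid5 = {'alpha' : np.arange(0.1,10,0.1), 'l1_ratio' : np.arange(0.1,1,0.1)}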

grid5 = GridSearchCV(sgd, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_man_train,y_man_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_man_valid, y_man_valid):.3f}")

best parameters: {'alpha': 0.6, 'l1_ratio': 0.4}

valid-set score: 0.751

results = pd.DataFrame(grid5.cv_results_)

results.plot('param_alpha', 'mean_train_score')

results.plot('param_alpha', 'mean_test_score', ax=plt.gca())

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_train_score'] + results['std_train_score'],

results['mean_train_score'] - results['std_train_score'], alpha=0.2)

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_test_score'] + results['std_test_score'],

results['mean_test_score'] - results['std_test_score'], alpha=0.2)

plt.legend()

sgd = SGDRegressor(max_iter=1000, tol=1e-3, alpha = 0.6, l1_ratio = 0.4)

y_man_pred = cross_val_predict(sgd, x_man_train, y_man_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_man_pred, y_man_train, alpha=.5, s=4)

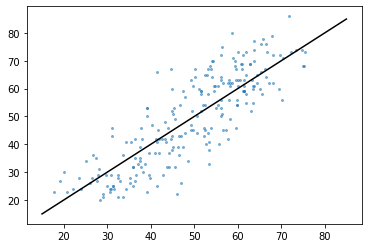
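The SGD grid above searches over `l1_ratio`, but scikit-learn documents that parameter as used only when `penalty='elasticnet'`, while `SGDRegressor` defaults to `penalty='l2'`. The sketch below checks this on synthetic data. The helper `fit_coef`, the dataset, and the fixed `alpha=0.5` are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import SGDRegressor

# Synthetic data (assumption: stands in for the real training set).
X, y = make_regression(n_samples=200, n_features=5, noise=5.0, random_state=0)


def fit_coef(penalty, l1_ratio):
    """Fit an SGDRegressor with a fixed seed and return its coefficients (hypothetical helper)."""
    model = SGDRegressor(
        max_iter=1000, tol=1e-3, alpha=0.5,
        penalty=penalty, l1_ratio=l1_ratio, random_state=0,
    )
    return model.fit(X, y).coef_


# Default-style L2 penalty: changing l1_ratio should not change the fit.
same_under_l2 = np.allclose(fit_coef("l2", 0.1), fit_coef("l2", 0.9))
# Elastic net: l1_ratio now controls the L1/L2 mix, so the fits should differ.
same_under_enet = np.allclose(fit_coef("elasticnet", 0.1), fit_coef("elasticnet", 0.9))
print(same_under_l2, same_under_enet)
```

If tuning `l1_ratio` is the intent, passing `penalty='elasticnet'` to the estimator would make that grid dimension meaningful. Doing so would also change the scores reported above, so it is left here as a suggestion only.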

SGDRegressor - woman

sgd = SGDRegressor(max_iter=1000, tol=1e-3)

param_grid5 = {'alpha' : np.arange(0.1,10,0.1), 'l1_ratio' : np.arange(0.1,1,0.1)}

grid5 = GridSearchCV(sgd, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_woman_train,y_woman_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_woman_valid, y_woman_valid):.3f}")

best parameters: {'alpha': 0.30000000000000004, 'l1_ratio': 0.2}

valid-set score: 0.552

results = pd.DataFrame(grid5.cv_results_)

results.plot('param_alpha', 'mean_train_score')

results.plot('param_alpha', 'mean_test_score', ax=plt.gca())

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_train_score'] + results['std_train_score'],

results['mean_train_score'] - results['std_train_score'], alpha=0.2)

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_test_score'] + results['std_test_score'],

results['mean_test_score'] - results['std_test_score'], alpha=0.2)

plt.legend()

sgd = SGDRegressor(max_iter=1000, tol=1e-3, alpha = 0.3, l1_ratio = 0.2)

y_woman_pred = cross_val_predict(sgd, x_woman_train, y_woman_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_woman_pred, y_woman_train, alpha=.5, s=4)

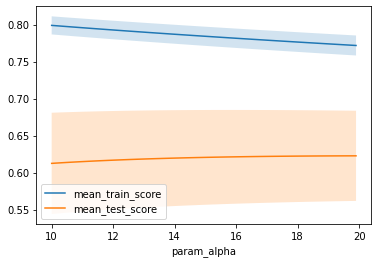

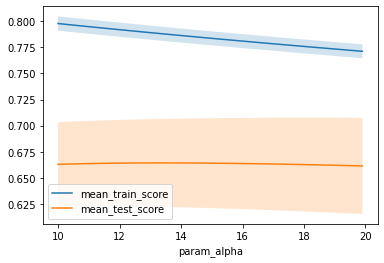
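The `0.30000000000000004` in the SGD output is floating-point drift from `np.arange` with a step of 0.1, not a distinct candidate value. One possible way to keep the grid readable, sketched here as an assumption rather than as part of the original notebook, is to round the candidates before passing them to the search.

```python
import numpy as np

# Grid as written in the notebook: values accumulate binary rounding error.
raw = np.arange(0.1, 10, 0.1)

# Rounded grid: same candidates, but printed and compared as clean decimals.
clean = np.round(raw, 1)

print(raw[2], clean[2])
```

With the rounded grid, `best_params_` would report `0.3`, which matches the value typed by hand when the final model is rebuilt.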

TweedieRegressor - man

from sklearn.linear_model import TweedieRegressor

reg = TweedieRegressor(power=1, link='log')

param_grid5 = {'alpha' : np.arange(10,20,0.1)}

grid5 = GridSearchCV(reg, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_man_train,y_man_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_man_valid, y_man_valid):.3f}")

best parameters: {'alpha': 19.899999999999963}

valid-set score: 0.712

results = pd.DataFrame(grid5.cv_results_)

results.plot('param_alpha', 'mean_train_score')

results.plot('param_alpha', 'mean_test_score', ax=plt.gca())

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_train_score'] + results['std_train_score'],

results['mean_train_score'] - results['std_train_score'], alpha=0.2)

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_test_score'] + results['std_test_score'],

results['mean_test_score'] - results['std_test_score'], alpha=0.2)

plt.legend()

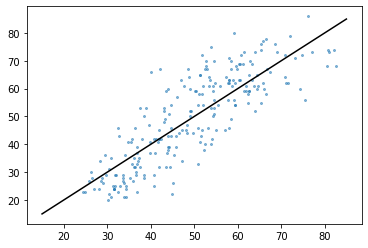

reg = TweedieRegressor(power=1, link='log', alpha = 19.9)

y_man_pred = cross_val_predict(reg, x_man_train, y_man_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_man_pred, y_man_train, alpha=.5, s=4)

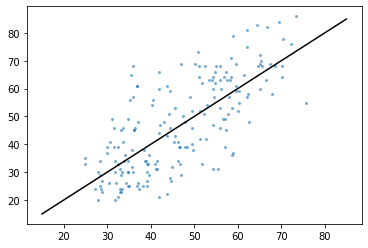

TweedieRegressor - woman

reg = TweedieRegressor(power=1, link='log')

param_grid5 = {'alpha' : np.arange(10,20,0.1)}

grid5 = GridSearchCV(reg, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_woman_train,y_woman_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_woman_valid, y_woman_valid):.3f}")

best parameters: {'alpha': 13.399999999999988}

valid-set score: 0.514

results = pd.DataFrame(grid5.cv_results_)

results.plot('param_alpha', 'mean_train_score')

results.plot('param_alpha', 'mean_test_score', ax=plt.gca())

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_train_score'] + results['std_train_score'],

results['mean_train_score'] - results['std_train_score'], alpha=0.2)

plt.fill_between(results.param_alpha.astype(np.float64),

results['mean_test_score'] + results['std_test_score'],

results['mean_test_score'] - results['std_test_score'], alpha=0.2)

plt.legend()

reg = TweedieRegressor(power=1, link='log', alpha = 13.4)

y_woman_pred = cross_val_predict(reg, x_woman_train, y_woman_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_woman_pred, y_woman_train, alpha=.5, s=4)
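In the TweedieRegressor results above, the best `alpha` for the man data (about 19.9) is the largest candidate in `np.arange(10, 20, 0.1)`, while the woman data picks an interior value (about 13.4). A best value on the edge of the grid can mean the optimum lies outside the searched range. The helper `at_grid_edge` below is hypothetical, a small sketch of how such cases might be flagged.

```python
import numpy as np


def at_grid_edge(grid_values, best_value):
    """Return True if best_value is the smallest or largest candidate (hypothetical helper)."""
    values = np.sort(np.asarray(grid_values, dtype=float))
    return bool(
        np.isclose(best_value, values[0]) or np.isclose(best_value, values[-1])
    )


alpha_grid = np.arange(10, 20, 0.1)

# Best alphas copied from the outputs above.
man_on_edge = at_grid_edge(alpha_grid, 19.899999999999963)
woman_on_edge = at_grid_edge(alpha_grid, 13.399999999999988)
print(man_on_edge, woman_on_edge)  # True False
```

When the check returns `True`, widening the grid in that direction and rerunning the search is a reasonable next step before trusting the selected value.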

DecisionTreeRegressor - man

from sklearn import tree

clf = tree.DecisionTreeRegressor()

param_grid5 = {'max_depth' : np.arange(1,20,1)}

grid5 = GridSearchCV(clf, param_grid = param_grid5, cv = RepeatedKFold(n_splits=5, n_repeats=3), return_train_score=True)

grid5.fit(x_man_train,y_man_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_man_valid, y_man_valid):.3f}")

best parameters: {'max_depth': 1}

valid-set score: 0.505

DecisionTreeRegressor - woman

from sklearn import tree

clf = tree.DecisionTreeRegressor()

param_grid5 = {'max_depth' : np.arange(1,20,1)}

grid5 = GridSearchCV(clf, param_grid = param_grid5, cv = RepeatedKFold(n_splits=5, n_repeats=3), return_train_score=True)

grid5.fit(x_woman_train,y_woman_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_woman_valid, y_woman_valid):.3f}")

best parameters: {'max_depth': 2}

valid-set score: 0.609
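Unlike the other searches in this section, the two DecisionTreeRegressor searches build `RepeatedKFold` without `random_state`, so the folds, and possibly the selected `max_depth`, can change from run to run. The sketch below shows that a fixed seed makes the splits repeatable. The toy array `X` and the helper `fold_indices` are assumptions for illustration.

```python
import numpy as np
from sklearn.model_selection import RepeatedKFold

# Tiny toy feature matrix: 10 samples, 2 features.
X = np.arange(20).reshape(10, 2)


def fold_indices(cv):
    """Collect the test indices of every split as plain lists (hypothetical helper)."""
    return [test_idx.tolist() for _, test_idx in cv.split(X)]


seeded_a = fold_indices(RepeatedKFold(n_splits=5, n_repeats=3, random_state=123))
seeded_b = fold_indices(RepeatedKFold(n_splits=5, n_repeats=3, random_state=123))

print(seeded_a == seeded_b)  # True: same seed, same folds
print(len(seeded_a))         # 15: 5 splits x 3 repeats
```

Passing `random_state=123`, as the other searches here do, would make the decision-tree results reproducible. The tree itself also accepts a `random_state` for tie-breaking between equally good splits.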

GradientBoostingRegressor - man

from sklearn.ensemble import GradientBoostingRegressor

reg = GradientBoostingRegressor(random_state=0)

param_grid5 = {'n_estimators' : np.arange(60,80,1)}

grid5 = GridSearchCV(reg, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_man_train,y_man_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_man_valid, y_man_valid):.3f}")

best parameters: {'n_estimators': 69}

valid-set score: 0.741

reg = GradientBoostingRegressor(random_state=0, n_estimators = 69)

y_man_pred = cross_val_predict(reg, x_man_train, y_man_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_man_pred, y_man_train, alpha=.5, s=4)

GradientBoostingRegressor - woman

reg = GradientBoostingRegressor(random_state=0)

param_grid5 = {'n_estimators' : np.arange(80,90,1)}

grid5 = GridSearchCV(reg, param_grid = param_grid5,

cv = RepeatedKFold(n_splits=5, n_repeats=3, random_state = 123),

return_train_score=True)

grid5.fit(x_woman_train,y_woman_train)

print(f"best parameters: {grid5.best_params_}")

print(f"valid-set score: {grid5.score(x_woman_valid, y_woman_valid):.3f}")

best parameters: {'n_estimators': 86}

valid-set score: 0.676

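# n_estimators set to the best value reported by the grid search above (86; this line previously used 81)

reg = GradientBoostingRegressor(random_state=0, n_estimators = 86)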

y_woman_pred = cross_val_predict(reg, x_woman_train, y_woman_train, cv=5)

plt.plot([15, 85], [15, 85], color='k')

plt.scatter(y_woman_pred, y_woman_train, alpha=.5, s=4)
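The predicted-versus-actual scatter plots in this section give a visual check only. A numeric summary of the same out-of-fold predictions can sit alongside them. This is a minimal sketch on synthetic data. The dataset, `n_estimators=50`, and the name `cv_r2` are assumptions, not values from the original analysis.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import cross_val_predict

# Synthetic stand-in for the training data (assumption).
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

reg = GradientBoostingRegressor(random_state=0, n_estimators=50)

# Each prediction comes from a model that did not see that sample during fitting.
y_pred = cross_val_predict(reg, X, y, cv=5)

# R^2 over the pooled out-of-fold predictions; points on the diagonal give 1.0.
cv_r2 = r2_score(y, y_pred)
print(f"out-of-fold R^2: {cv_r2:.3f}")
```

This pooled score is computed on the training set via cross-validation, so it is not directly comparable to the "valid-set score" lines, which use the held-out validation split.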