NLP Paper Reviews

1. [Paper Review] ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

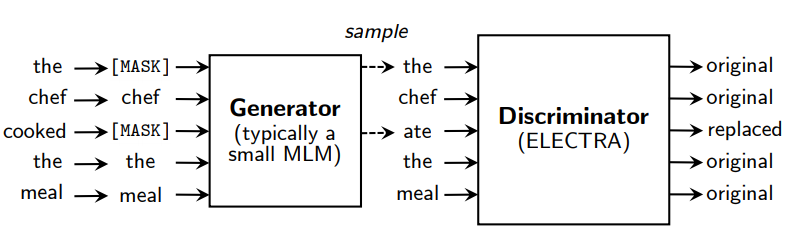

Current state-of-the-art representation learning methods are a form of denoising autoencoder training. Some of the tokens (typically 15%) in an unlabeled input sequence are masked and then recovered, an approach known as masked language...

August 12, 2022

2. [Paper Review] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

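Pre-training language models has proven effective across many natural language processing tasks. There are two main strategies for language model pre-training. Both strategies use unidirectional language models to learn general language representations. feature-b...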

August 17, 2022

3. [Paper Review] RoBERTa: A Robustly Optimized BERT Pretraining Approach

⚠️ Reading the BERT paper review first is recommended ⚠️ Reference: https://arxiv.org/pdf/1907.11692.pdf 1. Introduction Recently, ELMo, GPT, BERT, XLM, XLNet, and other self-trai...

August 28, 2022