여기 코드 다시 정리

https://github.com/hope69034/Main-Repository/tree/main/%EA%B4%91%EC%A3%BC%EC%9D%B8%EA%B3%B5%EC%A7%80%EB%8A%A5%EC%82%AC%EA%B4%80%ED%95%99%EA%B5%90%202022.10/web_portfolio_1/1017

[colab] 폴더 설정

/content/drive/MyDrive/project/scalp_weights/project - (train.ipynb)

(test.ipynb)

(wep.ipynb)

sample - model1sample - 0, 1, 2, 3 - (testset.jpg)

model2sample - 0, 1, 2, 3 - (testset.jpg)

model3sample - 0, 1, 2, 3 - (testset.jpg)

model4sample - 0, 1, 2, 3 - (testset.jpg)

model5sample - 0, 1, 2, 3 - (testset.jpg)

model6sample - 0, 1, 2, 3 - (testset.jpg)

scalp_weights - (model1~6.pt)

static - (ngrok_auth.txt)

img - (stardust.jpg)

(please-wait.gif)

(favicon.ico)

upload - upload - (upload.jpg)

templates - (.html)

[colab] train code

from google.colab import drive

drive.mount('/content/drive')

!pip install efficientnet_pytorch

import time

import datetime

import os

import copy

import cv2

import random

import numpy as np

import json

import torch

import torch.nn as nn

import torch.optim as optim

import torchvision

from torch.optim import lr_scheduler

from torchvision import transforms, datasets

from torch.utils.data import Dataset, DataLoader

from torch.utils.tensorboard import SummaryWriter

import matplotlib.pyplot as plt

from PIL import Image

from efficientnet_pytorch import EfficientNet

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

hyper_param_batch = 2

random_seed = 100

random.seed(random_seed)

torch.manual_seed(random_seed)

num_classes = 4

model_name = 'efficientnet-b7'

train_name = 'model1'

PATH = '/content/drive/MyDrive/project/scalp_weights/'

data_train_path ='/content/drive/MyDrive/project/train_data/'+train_name+'/train'

data_validation_path = '/content/drive/MyDrive/project/train_data/'+train_name+'/validation'

data_test_path ='/content/drive/MyDrive/project/train_data/'+train_name+'/test'

image_size = EfficientNet.get_image_size(model_name)

print(image_size)

model = EfficientNet.from_pretrained(model_name, num_classes=num_classes)

model = model.to(device)

def func(x):

return x.rotate(90)

transforms_train = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.RandomHorizontalFlip(p=0.5),

transforms.RandomVerticalFlip(p=0.5),

transforms.Lambda(func),

transforms.RandomRotation(10),

transforms.RandomAffine(0, shear=10, scale=(0.8, 1.2)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

transforms_val = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

train_data_set = datasets.ImageFolder(data_train_path, transform=transforms_train)

val_data_set = datasets.ImageFolder(data_validation_path, transform=transforms_val)

dataloaders, batch_num = {}, {}

dataloaders['train'] = DataLoader(train_data_set,

batch_size=hyper_param_batch,

shuffle=True,

num_workers=0)

dataloaders['val'] = DataLoader(val_data_set,

batch_size=hyper_param_batch,

shuffle=False,

num_workers=0)

batch_num['train'], batch_num['val'] = len(train_data_set), len(val_data_set)

print('batch_size : %d, train/val : %d / %d' % (hyper_param_batch, batch_num['train'], batch_num['val']))

class_names = train_data_set.classes

print(class_names)

from tqdm import tqdm

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):

if __name__ == '__main__':

start_time = time.time()

since = time.time()

best_acc = 0.0

best_model_wts = copy.deepcopy(model.state_dict())

train_loss, train_acc, val_loss, val_acc = [], [], [], []

for epoch in tqdm(range(num_epochs)):

print('Epoch {}/{}'.format(epoch, num_epochs - 1))

print('-' * 10)

epoch_start = time.time()

for phase in ['train', 'val']:

if phase == 'train':

model.train()

else:

model.eval()

running_loss = 0.0

running_corrects = 0

num_cnt = 0

for inputs, labels in tqdm(dataloaders[phase]):

inputs = inputs.to(device)

labels = labels.to(device)

optimizer.zero_grad()

with torch.set_grad_enabled(phase == 'train'):

outputs = model(inputs)

_, preds = torch.max(outputs, 1)

loss = criterion(outputs, labels)

if phase == 'train':

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.size(0)

running_corrects += torch.sum(preds == labels.data)

num_cnt += len(labels)

if phase == 'train':

scheduler.step()

epoch_loss = float(running_loss / num_cnt)

epoch_acc = float((running_corrects.double() / num_cnt).cpu() * 100)

if phase == 'train':

train_loss.append(epoch_loss)

train_acc.append(epoch_acc)

else:

val_loss.append(epoch_loss)

val_acc.append(epoch_acc)

print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

if phase == 'val' and epoch_acc > best_acc:

best_idx = epoch

best_acc = epoch_acc

best_model_wts = copy.deepcopy(model.state_dict())

print('==> best model saved - %d / %.1f' % (best_idx, best_acc))

epoch_end = time.time() - epoch_start

print('Training epochs {} in {:.0f}m {:.0f}s'.format(epoch, epoch_end // 60,

epoch_end % 60))

print()

time_elapsed = time.time() - since

print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))

print('Best valid Acc: %d - %.1f' % (best_idx, best_acc))

model.load_state_dict(best_model_wts)

torch.save(model, PATH + 'aram_' + train_name + '.pt')

torch.save(model.state_dict(), PATH + 'president_aram_' + train_name + '.pt')

print('model saved')

end_sec = time.time() - start_time

end_times = str(datetime.timedelta(seconds=end_sec)).split('.')

end_time = end_times[0]

print("end time :", end_time)

return model, best_idx, best_acc, train_loss, train_acc, val_loss, val_acc

criterion = nn.CrossEntropyLoss()

optimizer_ft = optim.Adam(model.parameters(), lr=1e-4)

exp_lr_scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

num_epochs = 2

train_model(model, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=num_epochs)

[colab] test code

from google.colab import drive

drive.mount('/content/drive')

!pip install efficientnet_pytorch

import torchvision

from torchvision import transforms

import os

from torch.utils.data import Dataset,DataLoader

import torch

PATH = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model6.pt'

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.load(PATH, map_location=device)

transforms_test = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

testset = torchvision.datasets.ImageFolder(root = '/content/drive/MyDrive/project/sample/model6sample' ,

transform = transforms_test)

from torch.utils.data import Dataset,DataLoader

testloader = DataLoader(testset, batch_size=2, shuffle=False, num_workers=0)

model.eval()

correct = 0

import torch.nn.functional as F

test_loss = 0

from tqdm import tqdm

if __name__ == '__main__':

with torch.no_grad():

for data, target in tqdm(testloader):

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction = 'sum').item()

pred = output.argmax(dim=1, keepdim=True)

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(testloader.dataset)

print('\nTest set: Average Loss: {:.4f}, Accuracy: {}/{} ({:.4f}%)\n'.format(test_loss, correct, len(testloader.dataset), 100. * correct / len(testloader.dataset)))

[colab] web code

from google.colab import drive

drive.mount('/content/drive')

with open('drive/MyDrive/project/static/ngrok_auth.txt') as nf:

ngrok_auth = nf.read()

!pip install flask-ngrok > /dev/null 2>&1

!pip install pyngrok > /dev/null 2>&1

!ngrok authtoken $ngrok_auth

!pip install efficientnet_pytorch

from flask import Flask, render_template, request

from flask_ngrok import run_with_ngrok

import torchvision

from torchvision import transforms

import os

from torch.utils.data import Dataset,DataLoader

import torch

PATH1 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model1.pt'

PATH2 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model2.pt'

PATH3 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model3.pt'

PATH4 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model4.pt'

PATH5 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model5.pt'

PATH6 = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model6.pt'

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model1 = torch.load(PATH1, map_location=device)

model2 = torch.load(PATH2, map_location=device)

model3 = torch.load(PATH3, map_location=device)

model4 = torch.load(PATH4, map_location=device)

model5 = torch.load(PATH5, map_location=device)

model6 = torch.load(PATH6, map_location=device)

model1.eval()

model2.eval()

model3.eval()

model4.eval()

model5.eval()

model6.eval()

app = Flask(__name__, static_folder='/content/drive/MyDrive/project/static',

template_folder='/content/drive/MyDrive/project/templates')

run_with_ngrok(app)

@app.route('/')

def home():

menu = {'home':1, 'menu':0}

return render_template('index.html', menu=menu, )

@app.route('/menu', methods=['GET','POST'])

def menu():

menu = {'home':0, 'menu':1}

if request.method == 'GET':

languages = []

return render_template('menu.html', menu=menu,

options=languages)

else:

f_image = request.files['image']

fname = f_image.filename

newname = 'upload.jpg'

filename = os.path.join(app.static_folder, 'upload/upload/') + newname

f_image.save(filename)

return render_template('menu_spinner.html', menu=menu , filename=filename )

@app.route('/menu_res', methods=['POST'])

def menu_res():

menu = {'home':0, 'menu':1}

transforms_test = transforms.Compose([ transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]) ])

testset = torchvision.datasets.ImageFolder(root = '/content/drive/MyDrive/project/static/upload' ,

transform = transforms_test)

testloader = DataLoader(testset, batch_size=2, shuffle=False, num_workers=0)

with torch.no_grad():

for data, target in testloader:

data, target = data.to(device), target.to(device)

output1 = model1(data)

output2 = model2(data)

output3 = model3(data)

output4 = model4(data)

output5 = model5(data)

output6 = model6(data)

m1p = output1.argmax(dim=1, keepdim=True)[0][0].tolist()

m2p = output2.argmax(dim=1, keepdim=True)[0][0].tolist()

m3p = output3.argmax(dim=1, keepdim=True)[0][0].tolist()

m4p = output4.argmax(dim=1, keepdim=True)[0][0].tolist()

m5p = output5.argmax(dim=1, keepdim=True)[0][0].tolist()

m6p = output6.argmax(dim=1, keepdim=True)[0][0].tolist()

d_list = []

if m1p == 0 and m2p == 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d1 = '정상입니다.'

d_list.append(d1)

elif m1p != 0 and m2p == 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d2 = '건성 두피입니다.'

d_list.append(d2)

elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d3 = '지성 두피입니다.'

d_list.append(d3)

elif m2p == 0 and m3p != 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d4 = '민감성 두피입니다.'

d_list.append(d4)

elif m2p != 0 and m3p != 0 and m4p == 0 and m6p == 0 :

d5 = '지루성 두피입니다.'

d_list.append(d5)

elif m3p == 0 and m4p != 0 and m6p == 0 :

d6 = '염증성 두피입니다.'

d_list.append(d6)

elif m3p == 0 and m4p == 0 and m5p != 0 and m6p == 0 :

d7 = '비듬성 두피입니다.'

d_list.append(d7)

elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p != 0 :

d8 = '탈모입니다.'

d_list.append(d8)

else:

d9 = '복합성 두피입니다.'

d_list.append(d9)

final = d_list[0]

result = {'미세각질':m1p, '피지과다':m2p,'모낭사이홍반':m3p,'모낭홍반농포':m4p,'비듬':m5p,'탈모':m6p}

final2 = '0:양호, 1:경증, 2:중등도, 3:중증'

filename = request.args['filename']

mtime = int(os.stat(filename).st_mtime)

return render_template('menu_res.html', final2=final2,final=final, menu=menu, result=result,

m1p=m1p,m2p=m2p,m3p=m3p,m4p=m4p,m5p=m5p,m6p=m6p , mtime=mtime )

@app.route('/menu_res_inf')

def menu_res_inf():

menu = {'home':0, 'menu':0}

m1p = int(request.args['m1p'])

m2p = int(request.args['m2p'])

m3p = int(request.args['m3p'])

m4p = int(request.args['m4p'])

m5p = int(request.args['m5p'])

m6p = int(request.args['m6p'])

return render_template('menu_res_inf.html', menu=menu, m1p=m1p,m2p=m2p,m3p=m3p,m4p=m4p,m5p=m5p,m6p=m6p, )

if __name__ == '__main__':

app.run()

[vscode] 폴더 설정

project - (train.py)

(test.py)

(wep.py)

content - sample - model1sample - 0, 1, 2, 3 - (testset.jpg)

model2sample - 0, 1, 2, 3 - (testset.jpg)

model3sample - 0, 1, 2, 3 - (testset.jpg)

model4sample - 0, 1, 2, 3 - (testset.jpg)

model5sample - 0, 1, 2, 3 - (testset.jpg)

model6sample - 0, 1, 2, 3 - (testset.jpg)

- scalp_weights - (model1~6.pt)

- static - (ngrok_auth.txt)

- img - (stardust.jpg)

(please-wait.gif)

(favicon.ico)

- upload - upload - (upload.jpg)

- templates - (.html)

[vscode] test code

import torchvision

from torchvision import transforms

import os

from torch.utils.data import Dataset,DataLoader

import torch

PATH = './content/scalp_weights/'+'aram_model6.pt'

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = torch.load(PATH, map_location=device)

transforms_test = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

testset = torchvision.datasets.ImageFolder(root = './content/sample/model6sample' ,

transform = transforms_test)

from torch.utils.data import Dataset,DataLoader

testloader = DataLoader(testset, batch_size=2, shuffle=False, num_workers=0)

model.eval()

correct = 0

import torch.nn.functional as F

test_loss = 0

from tqdm import tqdm

if __name__ == '__main__':

with torch.no_grad():

for data, target in tqdm(testloader):

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction = 'sum').item()

pred = output.argmax(dim=1, keepdim=True)

correct += pred.eq(target.view_as(pred)).sum().item()

test_loss /= len(testloader.dataset)

print('\nTest set: Average Loss: {:.4f}, Accuracy: {}/{} ({:.4f}%)\n'.format(test_loss, correct, len(testloader.dataset), 100. * correct / len(testloader.dataset)))

[vscode] web code

with open('./content/static/ngrok_auth.txt') as nf:

ngrok_auth = nf.read()

from flask import Flask, render_template, request

import torchvision

from torchvision import transforms

import os

from torch.utils.data import Dataset,DataLoader

import torch

PATH1 = './content/scalp_weights/'+'aram_model1.pt'

PATH2 = './content/scalp_weights/'+'aram_model2.pt'

PATH3 = './content/scalp_weights/'+'aram_model3.pt'

PATH4 = './content/scalp_weights/'+'aram_model4.pt'

PATH5 = './content/scalp_weights/'+'aram_model5.pt'

PATH6 = './content/scalp_weights/'+'aram_model6.pt'

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model1 = torch.load(PATH1, map_location=device)

model2 = torch.load(PATH2, map_location=device)

model3 = torch.load(PATH3, map_location=device)

model4 = torch.load(PATH4, map_location=device)

model5 = torch.load(PATH5, map_location=device)

model6 = torch.load(PATH6, map_location=device)

model1.eval()

model2.eval()

model3.eval()

model4.eval()

model5.eval()

model6.eval()

app = Flask(__name__, static_folder='./content/static',

template_folder='./content/templates')

@app.route('/')

def home():

menu = {'home':1, 'menu':0}

return render_template('index.html', menu=menu, )

@app.route('/menu', methods=['GET','POST'])

def menu():

menu = {'home':0, 'menu':1}

if request.method == 'GET':

languages = []

return render_template('menu.html', menu=menu,

options=languages)

else:

f_image = request.files['image']

fname = f_image.filename

newname = 'upload.jpg'

filename = os.path.join(app.static_folder, 'upload/upload/') + newname

f_image.save(filename)

return render_template('menu_spinner.html', menu=menu , filename=filename )

@app.route('/menu_res', methods=['POST'])

def menu_res():

menu = {'home':0, 'menu':1}

transforms_test = transforms.Compose([ transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]) ])

testset = torchvision.datasets.ImageFolder(root = './content/static/upload' ,

transform = transforms_test)

testloader = DataLoader(testset, batch_size=2, shuffle=False, num_workers=0)

with torch.no_grad():

for data, target in testloader:

data, target = data.to(device), target.to(device)

output1 = model1(data)

output2 = model2(data)

output3 = model3(data)

output4 = model4(data)

output5 = model5(data)

output6 = model6(data)

m1p = output1.argmax(dim=1, keepdim=True)[0][0].tolist()

m2p = output2.argmax(dim=1, keepdim=True)[0][0].tolist()

m3p = output3.argmax(dim=1, keepdim=True)[0][0].tolist()

m4p = output4.argmax(dim=1, keepdim=True)[0][0].tolist()

m5p = output5.argmax(dim=1, keepdim=True)[0][0].tolist()

m6p = output6.argmax(dim=1, keepdim=True)[0][0].tolist()

d_list = []

if m1p == 0 and m2p == 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d1 = '정상입니다.'

d_list.append(d1)

elif m1p != 0 and m2p == 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d2 = '건성 두피입니다.'

d_list.append(d2)

elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d3 = '지성 두피입니다.'

d_list.append(d3)

elif m2p == 0 and m3p != 0 and m4p == 0 and m5p == 0 and m6p == 0 :

d4 = '민감성 두피입니다.'

d_list.append(d4)

elif m2p != 0 and m3p != 0 and m4p == 0 and m6p == 0 :

d5 = '지루성 두피입니다.'

d_list.append(d5)

elif m3p == 0 and m4p != 0 and m6p == 0 :

d6 = '염증성 두피입니다.'

d_list.append(d6)

elif m3p == 0 and m4p == 0 and m5p != 0 and m6p == 0 :

d7 = '비듬성 두피입니다.'

d_list.append(d7)

elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p != 0 :

d8 = '탈모입니다.'

d_list.append(d8)

else:

d9 = '복합성 두피입니다.'

d_list.append(d9)

final = d_list[0]

result = {'미세각질':m1p, '피지과다':m2p,'모낭사이홍반':m3p,'모낭홍반농포':m4p,'비듬':m5p,'탈모':m6p}

final2 = '0:양호, 1:경증, 2:중등도, 3:중증'

filename = request.args['filename']

mtime = int(os.stat(filename).st_mtime)

return render_template('menu_res.html', final2=final2,final=final, menu=menu, result=result,

m1p=m1p,m2p=m2p,m3p=m3p,m4p=m4p,m5p=m5p,m6p=m6p , mtime=mtime )

@app.route('/menu_res_inf')

def menu_res_inf():

menu = {'home':0, 'menu':0}

m1p = int(request.args['m1p'])

m2p = int(request.args['m2p'])

m3p = int(request.args['m3p'])

m4p = int(request.args['m4p'])

m5p = int(request.args['m5p'])

m6p = int(request.args['m6p'])

return render_template('menu_res_inf.html', menu=menu, m1p=m1p,m2p=m2p,m3p=m3p,m4p=m4p,m5p=m5p,m6p=m6p, )

if __name__ == '__main__':

app.run()

[HTML]

[HTML] base

<!DOCTYPE html>

<html lang="ko">

<head>

<title>{% block title %}Project{% endblock %}</title>

<script src="https://code.jquery.com/jquery-3.4.1.js"></script>

<meta charset="utf-8">

<meta name="viewport" content="width=device-width, initial-scale=1">

<link href="https://cdn.jsdelivr.net/npm/bootstrap@5.1.3/dist/css/bootstrap.min.css" rel="stylesheet">

<link href="https://cdnjs.cloudflare.com/ajax/libs/font-awesome/6.1.2/css/all.min.css" rel="stylesheet">

<script src="https://cdn.jsdelivr.net/npm/bootstrap@5.1.3/dist/js/bootstrap.bundle.min.js"></script>

<link rel="shortcut icon" href="{{url_for('static', filename='img/favicon.ico')}}">

{% block additional_head %}{% endblock %}

</head>

<body>

<nav class="navbar bg-dark navbar-dark fixed-top navbar-expand-lg">

<a class="navbar-brand" style="margin-left:50px;margin-right:100px;" href="#">

<img src="{{url_for('static', filename='img/stardust.jpg')}}" alt="Logo" style="height:45px;">

</a>

<ul class="nav nav-pills mr-auto">

<li class="nav-item mr-5">

<a class="nav-link {% if menu.home %}active{% endif %}"

href="{% if menu.home %}#{% else %}/{% endif %}">

<i class="fa-solid fa-home"></i> Home</a>

</li>

<li class="nav-item mr-5">

<a class="nav-link {% if menu.menu %}active{% endif %}"

href="{% if menu.menu %}#{% else %}/menu{% endif %}">

<i class="fa-solid fa-bolt"></i> Diagnosis</a>

</li>

<li class="nav-item mr-5" style="margin-left: 100px;">

<a class="nav-link disabled" style="margin-right:50px;">

<strong>딥러닝을 통한 두피 자가진단 시스템</strong>

</a>

</li>

</ul>

</nav>

<div class="container" style="margin-top:88px;">

<div class="row mb-5">

<div class="col-1"></div>

<div class="col-10">

<h3>{% block subtitle %}{% endblock %}</h3>

<hr>

{% block content %}{% endblock %}

</div>

<div class="col-1"></div>

</div>

</div>

<nav class="navbar navbar-expand-lg navbar-light bg-light justify-content-center fixed-bottom">

<span class="navbar-text">

Copyright © 2022 JS_B_Stardust_Project. All rights reserved.

</span>

</nav>

{% block additional_body %}{% endblock %}

</body>

</html>

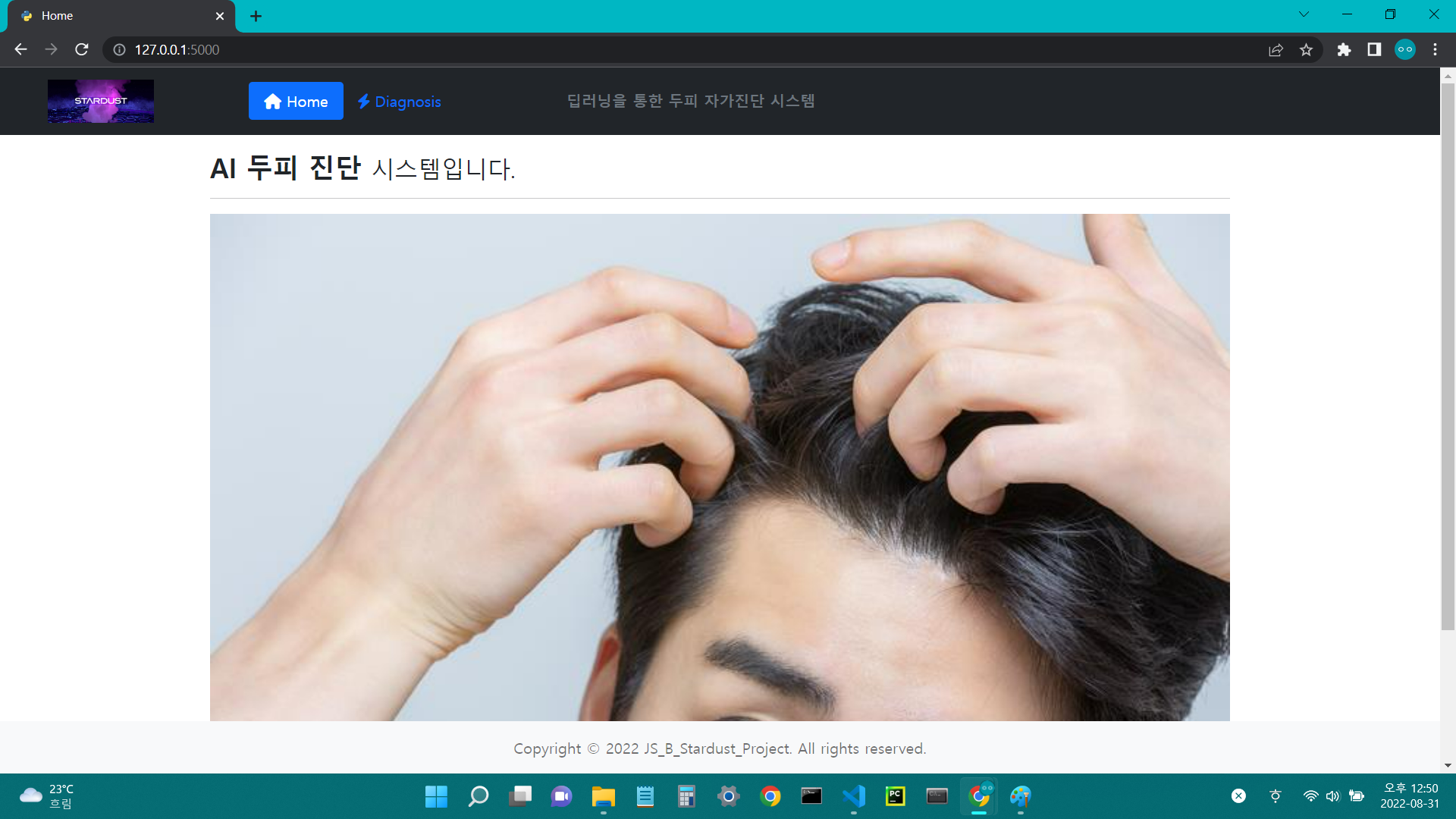

[HTML] index

{% extends "base.html" %}

{% block title %}Home{% endblock %}

{% block subtitle %}

<strong>AI 두피 진단</strong> <small>시스템입니다.</small>

{% endblock %}

{% block content %}

<img src="http://cdn.kormedi.com/wp-content/uploads/2021/09/07_012.jpg" class="d-block w-100" alt="임의의 사진">

{% endblock %}

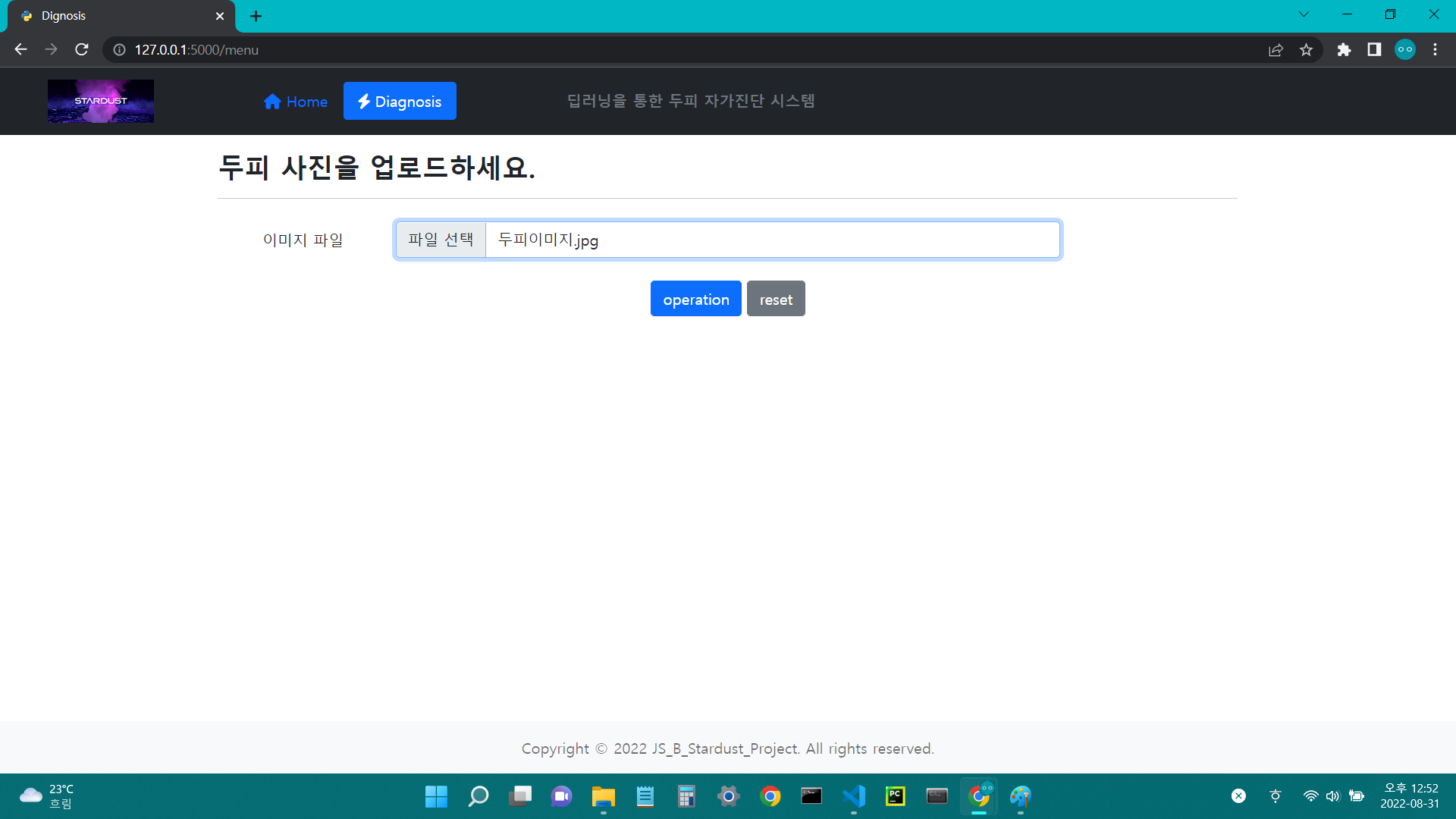

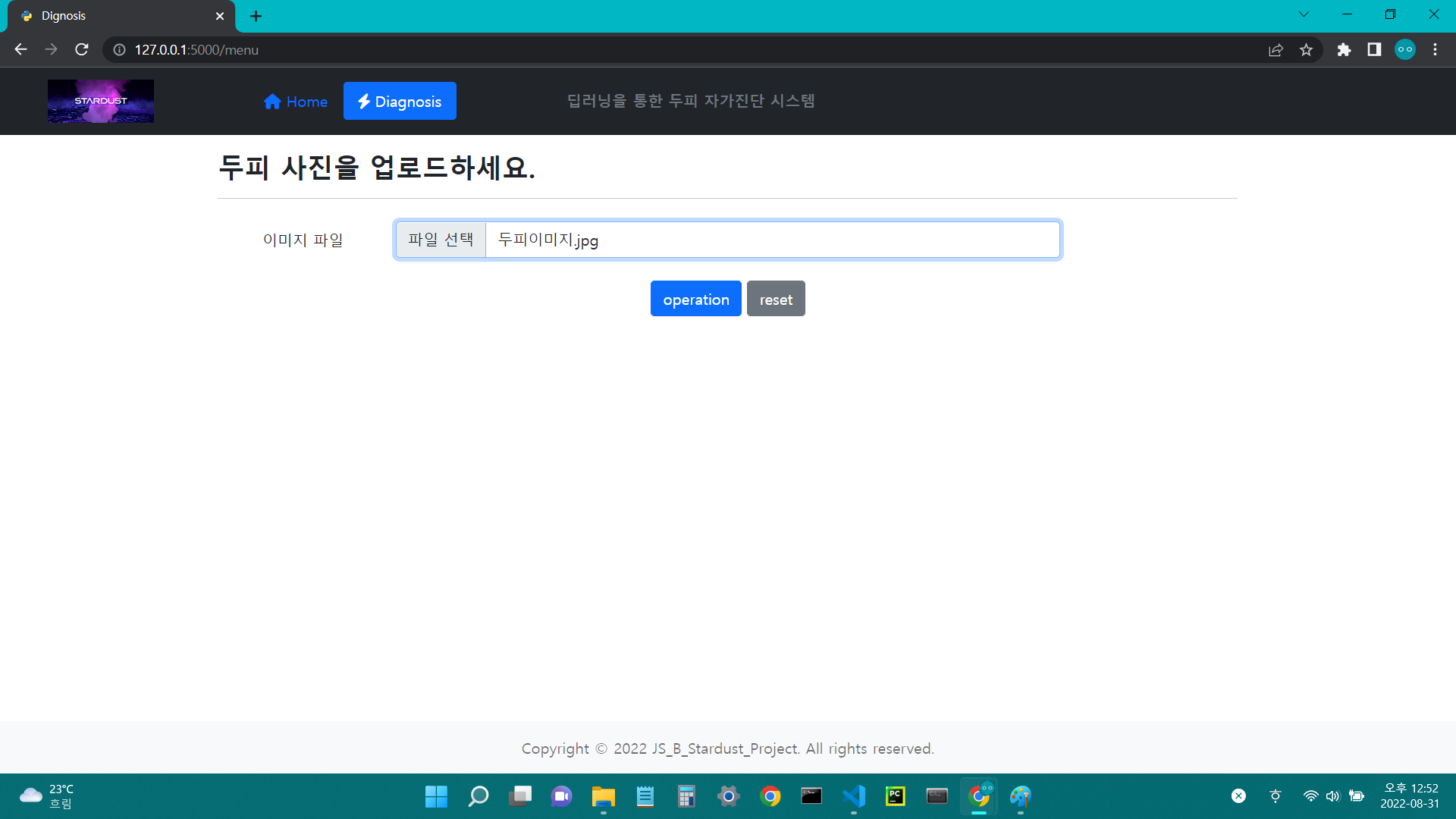

{% extends "base.html" %}

{% block title %}Dignosis{% endblock %}

{% block additional_head %}

<style>

td { text-align: center; }

</style>

{% endblock %}

{% block subtitle %}

<strong>두피 사진을 업로드하세요.</strong>

{% endblock %}

{% block content %}

<form action="/menu" method="POST" enctype="multipart/form-data">

<table class="table table-borderless">

<tr class="d-flex">

<td class="col-2 mt-2">이미지 파일</td>

<td class="col-8">

<input class="form-control" type="file" id="image" name="image">

</td>

<td class="col-2"></td>

</tr>

<tr class="d-flex">

<td class="col-2"></td>

<td class="col-8 mt-2">

<button type="submit" class="btn btn-primary mr-2">operation</button>

<button type="reset" class="btn btn-secondary">reset</button>

</td>

<td class="col-2"></td>

</tr>

</table>

</form>

{% endblock %}

{% block additional_body %}

<script>

$(".custom-file-input").on("change", function() {

var fileName = $(this).val().split("\\").pop();

$(this).siblings(".custom-file-label").addClass("selected").html(fileName);

});

</script>

{% endblock %}

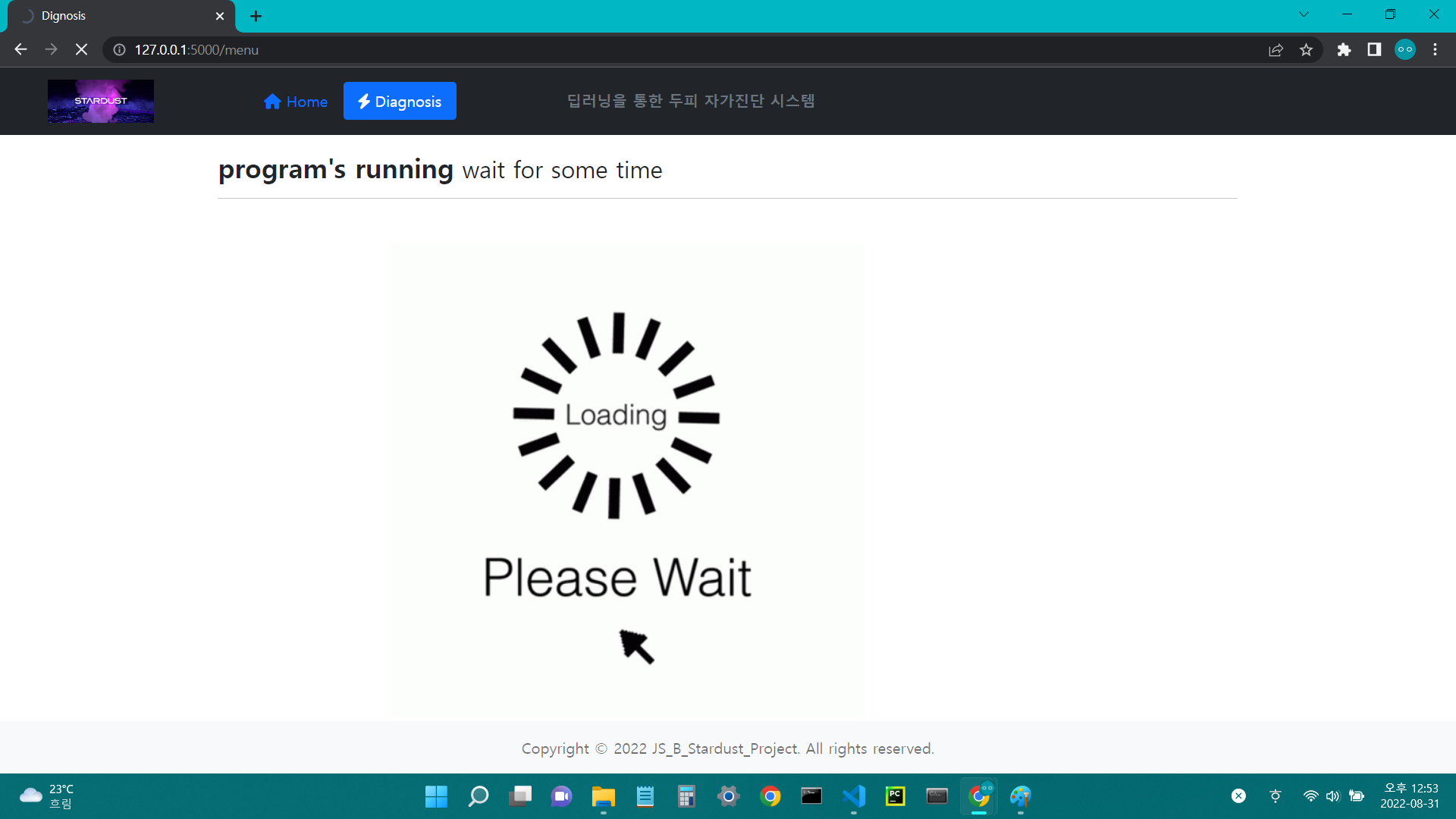

{% extends "base.html" %}

{% block title %}Dignosis{% endblock %}

{% block additional_head %}

<style>

td { text-align: center; }

</style>

{% endblock %}

{% block subtitle %}

<strong>program's running</strong><small> wait for some time</small>

{% endblock %}

{% block content %}

<div class="row mt-5">

<div class="col-2"></div>

<div class="col-8">

<div class="d-flex align-items-center">

<img src="{{url_for('static', filename='img/please-wait.gif')}}" alt="실행중">

</div>

</div>

<div class="col-2"></div>

</div>

<form style="display: none" action="/menu_res?filename={{filename}}" method="POST" id="form">

</form>

{% endblock %}

{% block additional_body %}

<script>

window.onload = function() {

console.log('menu_spinner.html')

$("#form").submit();

}

</script>

{% endblock %}

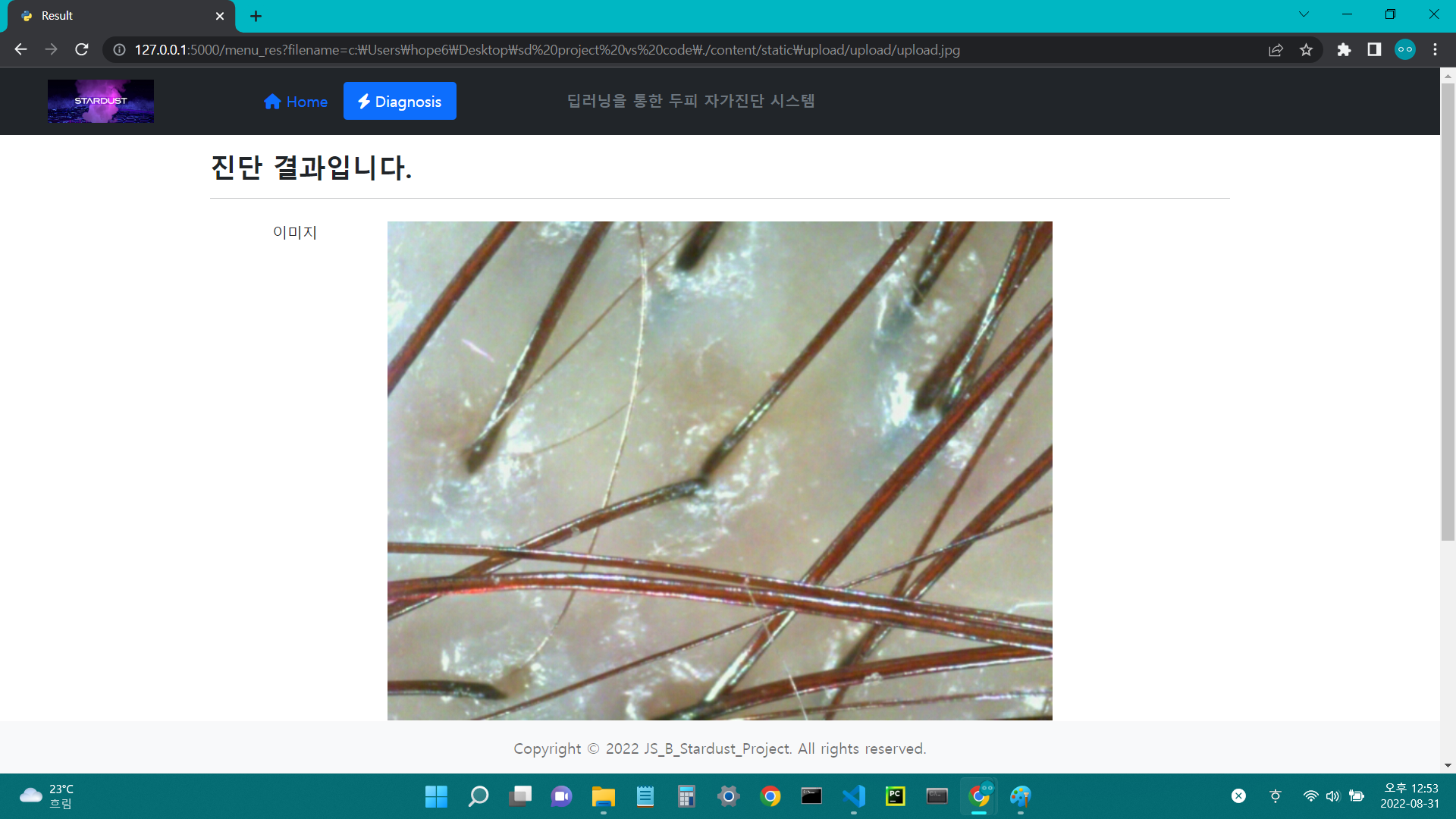

{% extends "base.html" %}

{% block title %}Result{% endblock %}

{% block additional_head %}

<style>

td { text-align: center; }

</style>

{% endblock %}

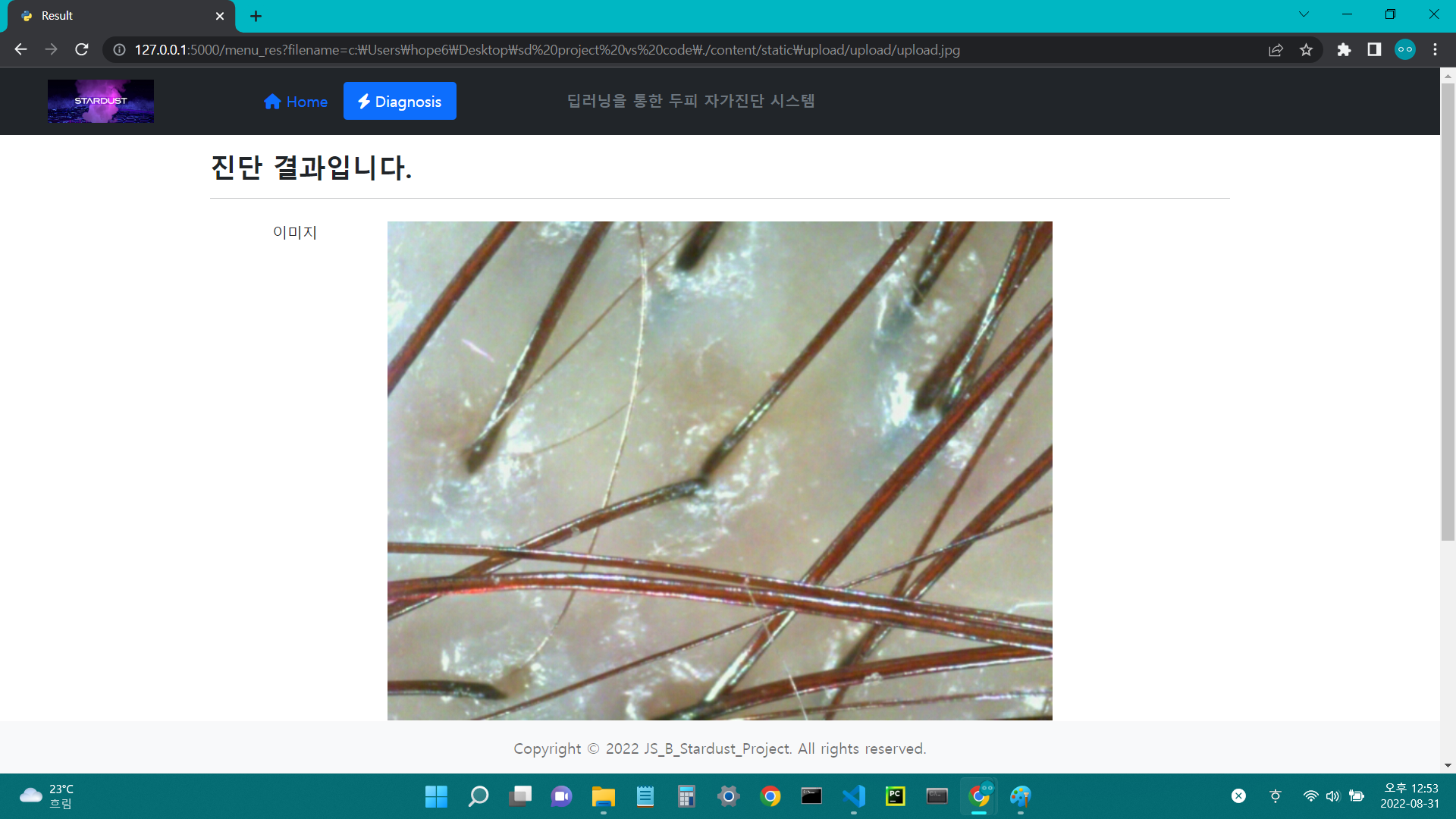

{% block subtitle %}

<strong>진단 결과입니다.</strong>

{% endblock %}

{% block content %}

<table class="table table-borderless">

<tr class="d-flex">

<td class="col-2">이미지</td>

<td class="col-8">

<img src="{{url_for('static', filename='upload/upload/'+'upload.jpg', q=mtime)}}"

class="d-block w-100" alt="입력한 사진">

</td>

<td class="col-2"></td>

</tr class="d-flex">

<tr class="d-flex">

<td class="col-2 mt-1 mb-1">결과</td>

<td class="col-8 mt-1 mb-1" style="background-color: beige;">

<strong>{{final}}</strong>

<table class="table table-sm">

{% for key, value in result.items() %}

<tr>

<td>{{key}}</td>

<td>{{value}}</td>

</tr>

{% endfor %}

</table>

<strong>{{final2}}</strong>

</td>

<td class="col-2"></td>

</tr class="d-flex">

<tr class="d-flex">

<td class="col-2"></td>

<td class="col-8">

<button class="btn btn-primary" onclick="location.href='/menu'">back</button>

{% if final != '정상입니다.' %}

<button class="btn btn-primary" onclick="location.href='/menu_res_inf?m1p={{m1p}}&m2p={{m2p}}&m3p={{m3p}}&m4p={{m4p}}&m5p={{m5p}}&m6p={{m6p}}'">solution</button>

{% endif %}

</td>

<td class="col-2"></td>

</tr>

</table>

{% endblock %}

{% extends "base.html" %}

{% block title %}Solution{% endblock %}

{% block subtitle %}

<small>solution</small>

{% endblock %}

{% block content %}

{% if m1p != 0 and m2p == 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 %}

<br><br>

건성<br>

<br>

두피의 유·수분 밸런스 조절.<br>

두피와 모발에 풍부한 수분을 공급하는 모이스처 라인의 제품으로 보습력을 높여주고, 트리트먼트나 천연 두피 팩으로 윤기를 더해 두피의 유·수분 균형을 맞추는 것이 중요하다.<br>

<br>

-건성 두피 케어 제품 추천-<br>

<a href="https://sivillage.ssg.com/item/itemView.ssg?itemId=1000044129247&siteNo=6300&salestrNo=6005"> <img

src="data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAAMCAgICAgMCAgIDAwMDBAYEBAQEBAgGBgUGCQgKCgkICQkKDA8MCgsOCwkJDRENDg8QEBEQCgwSExIQEw8QEBD/2wBDAQMDAwQDBAgEBAgQCwkLEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBD/wAARCAFRAVEDASIAAhEBAxEB/8QAHgABAAEEAwEBAAAAAAAAAAAAAAcFBggJAQMEAgr/xABTEAABAwMBBQMGCAcMCAcAAAABAAIDBAURBgcIEiExE0FRCRQiYXGxFSMyUnKBkaEWY4KSorTBFxglJjM3QlZ2o7LCGUNGU2KDs9EkRVVz4fDx/8QAGwEBAAMBAQEBAAAAAAAAAAAAAAMEBQIBBgf/xAAtEQEAAgEBBwMDBAMBAAAAAAAAAQIDEQQFEiExQXEzUfAiMrE0YYGREyNCwf/aAAwDAQACEQMRAD8A2poiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIi4JwEHKL44x48h1KsDXW8BsW2YzupNfbTLDZJ2NL3RVVUA8N8eEZKCQkWPrt/zc2YCTvB6WOPCSQ+5i+B5QLc2d8jb7p930Y6g+6NBkKii3Qu85sH2m1PmehNpVtu0p7o2SsH2vYApOjliljEkUjZGno5hyD9i80kdiLgEEZHeuV6CIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgLg9FyiDFret3btu+2DWdj1bsf22TaSprdaprdV2h9bV01PUyOl42z5gJBdg8PpM6AYKwy207pW+Vs70fedeap1vT3uy2Kkkrq6Sk1DUSysgYCXuEckbScDJIB6LbgMDvUc7yFOKrd72mQfO0hd+h8KSQ/sXPOOkvYn3h+f247Tb4YXNp6msp5COb5raHP8AziMq2H6/1k6T4vV1waSeXZ+h9wUn6vkkktL3F7jx04PMn5oUFsPDI1w6hwOfrXtZn3e3iNWbG7puUb2m8Vo6PXmm9sw09aDVzUZbdLlXw1DJI8cWImM5jBBBzhZ97u25Pr/ZVrPT+ttcbYZLxNYJJZRS0bqp7Kp0lM+AtkdNIRwfGceA35TWq6vJ7ymXd9YS8u4bzVDJPiyI/tWTGQupmY5aueXYaCGgFcoi8BERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQfHQLHnZ9vU1G0LaRtI0HS6KZQxbP746yecy13G+se3IMnAGARglpwMuOMLIc94Wv8A3cfR3jd5IH+vbz971lb22jJsuz/5Mc6TrH7r+7sFNoyTXJGvJlfedRa+rp45tP3GioYgwNkhlhZLxO+cHEjB7sYIUPbXtDbzOutN32yWDbn5lBe6Woon26Wy25tMIJYnsdGZuB03PiALgcgEkcwFMNJjC7pMcPNYFN67TP1Tbn87dGlbZMUfTEcvnfq1c3ryde8n5h5rPfdEVQbEGEi5OZjAxgfFDPtWP+udx3bNs9idVahq9MiBhyX090MhAHq4Blbp7zyhf7FiFvTkixVI8WlcTv3a8d4rExzn2T4d2YMv3RP9o33a9/nQOwrZ1Jo/VWq9UGvbcpajsLXpqGenEZZGwenJI1xceAk93RSh/patm/n9JLBU6snpGvD5oBpWnEkzAebWvNWAzPjg48CtWN9yLhUj8Y7r7Svmhd8bBy/oO96+nj6ojJMzr5nT+ujJmtYtNNI08R+erePsK37LJvDfhHJoDZveKSn0xRtra2W81kEBc08ZDY2xdoXE8B5nAGQsidC6ro9eaMsWtrdBPBSX6201zgimAEkcc0bZGtdgkcQDsHBwtYXkwGYsW1+Q/wDosDf0J1sc3exw7BdnIxj+Klp/VY1zse0XzZb1tPKNNEW14aYoiax1SEiItJSEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREHB6LX7u5Efvj95I4665k97wtgR6LX7u58t47eR5/7dSe96w9/wD6SfMflq7o9WfDK2lzwj2L0SEFvVeem+QPYu559FfK0n6WraOag3o/EuJWIO9Qf4DqM+Bysvb1nsXjHcsP96fJsc48QVTyT/tr5aGzd/DWDfx/CVSPxjveuq3/AMpT/Qd712X/ACLlUD8Y73lddB/KQfQd71+hU9OHy9vUn53bGvJhs/ittfeB1tUA/u51sZ2A8thWzsD+qtq/VI1rp8mJn8ENrrTyBtcPP/lzrYrsB/mL2ef2WtX6rGqm7OebJ/DveH2R87JAREW2yhERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERBwei197uvLeP3kTkYOuZPe9bBD0Wvrd0x++R3ks8/48Sf4nrD3/wDpJ8x+Wruj1Z8Mr6U+iPYu+T5OV56U+iCPBd8nycZXyVPta1uqgXo/EOPqWIG9Ln4EqCe4EhZf3ofEux4LEHekybJUAeBCrZPVr5X9l7+GsG/gC51OB/rXD7yum38pIP8A23D713ag5XSpB7pHe8rooT8bBn/du96/Qq+nD5i3qT87tjnkxyfwT2uuJ9EWeEYx+LnWxfd+/mJ2dZ/qrav1SNa5vJjPzpPa+znys0R/u51sa3f/AOYnZz/ZS0/qkSqbr9bI63h9sfOy/wBERbbKEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREHHh7Vr53dSP3x28ly5/hzKAfynrYMVr43c+e8VvItzkfh5N/ikWHv8A/ST5j8tXc/q28MrqYnhHNd0hGOa6afJYPBdkmA3wXyNejYmNZUS9HMLsjuWIe9IP4Fn9hwsur0fiXY8FiLvR87HUZP8ARKr5PVr5Xdm7+GsDUPK61WP96/3leehI7Wnz8x3vXp1AMXWqB69q73leejIMsH0He9foVPTh8zf1J+d2xXyZDiNL7YTnGLHEf0J1sf2A4/cJ2c4/qnaf1OJa3fJlPB0vtiaW/wDkMfP8idbItgGTsI2cE/1StH6nEqu7PWyfw83h9tfnZf6Ii2mZAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIg4PRa9t3AEbxG8jJnkde1Ax/wAyRbCT0WvfdvI/fBbxzu86/qR/eSLD3/8Ao/5hr7o9W3j/ANhldT44G58F2ydMLppubAfALtkPor5GnRrz1UK9nMTvYsRN6I5slQCO44WXN7PxDvYsRN6I4slRjvaVVt6tfK9s3drG1Fn4WqeX+td715aMntYOf9B3vXs1EM3Opd4yO968VF/LwfRcv0SnpR4fL39T57tiXkySDpvbI0ciNPxH9CdbJdgGf3CNnGTn+KVo/U4lrX8mOf4A2yHH+z0f+GdbKdgP8xOzj+yVo/U4lV3b62T+Hm8OkfO0L9REW0zBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERB8kepa9920g7wG8eM8xtAqf+pKtg5yAsC9k+lr3su3gduT9oMMFgi1Vqp97sktbVxRx3Che6Q9tES7mBxAOzjhJweaxN+0tfZdKxrzj8tTdN4rltrPZk1TE8AyuyQ9F4LVdbRcGA2+92ur5cvN66GX/C4qpPpqtzctppHetrSfcvkK1tEc4bHFWea3r2cwu59yxA3pnEWWfB7isv77HMyJwfE9vI9WkLEDenif8AANQQw8geZVWfWry7r+z6TWfDWXf5C+41A/GO968dIcTQfQd716LwWSV1Q9rhjtH9/rK6aRpM0PBz9B3T2r9EppFIfMXn/Y2G+TFJOndsmRn+L8YI/InWyrYGMbCtnX9k7R+pxLW15L6lqqix7X4oIJHy1Flgp4I2t9KWVzJ+FjB/Sce4Dmtluxe23GzbHtC2a70UtHX0GmrZTVVNMMSQzMpY2vY4dzmuBB9YVbd1ZjNknw52+YmK/Oy9URFss0REQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQfJGW4K0JX29TbxG9LrzVesqioulDJcqyVlPJO8x+bR1DoqWDkf5JjGjDemefeVvK2lagbpPZ1qnVLnhos9lra/JOAOyge/wDyrRHuq0stVPqC+Sgl8vm0LneLiHSO+8rqk6RMvaxrKXrjo/RNjoSbRo200ha3k6CAMI+sc1Gd2uuqIZ5o9LtvJkpWGR4t0tU90TfnOEbvRB8TgKXdXvMVC7HXhPVRhte1XUW+w6f05ZbtNarHFZ6e4PFPUugFdXSAmqqZ3NIL3h47INcT2bYwABk554YmdNElpmOa1Kfev3gtOUslNY9otw7CJpz5xC2r7IDlkmQOxj1lWne96Xb1qkOiuuq464YLnBtopz6PzvRZ09anZloq9OaG0MNR3aosWqbtfbfcbhG54pzJVuoql1qhrnEfFedQNh7VzgSGyMe5p4iVZVdWttGyKvrb/R3CxPNHb/PKa0RtpZ6V51BdzJTsD/kBhBYAc44GjmAq+TNSs8qR1iPnJ5WbT/1KCn651dcnOqHmGbHpPfHbmcOOvPhbheSq1NeK1vBNNBw/8FOxp+ogKQdolyNnsFukNwr7bLcqm61sEVteIIeGfsHNZMwkOGGvw5vM9VFDQA3AIx3YU+PJN68SO8cM6JF0MyrqtEav1DbrtcqG96UdQXW2VNLWSRmH40scQGkekCWuDhzBHXBIX6BN3rXVRtN2F6B2gVtQJ6u+6doK2qkGPSqHQt7U8v8Aj4uS0B7EmGvh1/Ye+4aTqHtb4uie14+4FbmPJkalbqTct0Dk5fahW2t/Pn8VVygZ/JLfqwpJj6Ycx1ZUIiLh0IiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiIMft/bU40puf7UbiyYRy1FkfboueCX1L2wAD1/GH7Fql3X6AU2haiuxg1tzmPT+jG1rB7is/wDyuOo22jdWjsrZg2W/ant9Nwd7o4hJO7/pN+1YP7CqMUGy/T7SzDpqd1S4euR7nZ9y6jo6r1XHreUGl4cDmMFQ1W6q1HpmZ82nrzLQvY8ysLY45Ax/z2iRrg13L5QAPJTtcdKV2qLBc7tQ1TGOthkApzTyvM3BCZXDtGgsjJaMND8cbiGjmQob1Zsq2ismnibpOrqHRsc95pXxzta0A8RJY4gcJDmu5+i5rmnBBC8i1ZnSZe3iUJapv18u7K6O73u4V7a+sdcasVVS+Y1FWWlpnkLiS6Thc5vETnBIVMqNp20apmq5arXF4qHVsTIKkzVJk7aNjy9jXcWQeF7nOGehcT1JV7XvY3tRbCKmXSFRFBJTVdaZZKiFrI4KdofM97i/DMMIeA4gvaQWh2QqXZ9iF51Fs/ptbWW/0FTcaxlTVw2ExStqZaOCpjp5JWSlvZOcJZWfF8Qdw5d3YXOS+KNNZj8uYi3ZZ9FrDVVFC2Gmv9W1jZXzta94fiR+ON44wfSdgZPfgKlgk5c45JJJPrKuWp2Ya6oK5tsq7EGVBrJaDgFXC4dtG57X5cH44A6ORvaZ4CY3gOOCvm9bONb6bsX4SX2wvo7aa+S2NnfPEe0qIwC8MaHcT2jLfjGgt5jnzC8rfH/zMGlu67d2+UO2rU9uPS62u4UGPEvgdgfaFtH8jjqDzvdw1FpOQ5l0/q6qY71NlhheP0g9apNg9aLbtn0dVOdhvwmyJ2eWQ9rm/tWyTyRF1baNbbddnkhxJBdaW4xsPc0S1ETv8innnRx0lsuREUToREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQaxfLWaobDZdmOjoZPjJJ7pdpWZ6hkcUTOXtleog0dbvgrStooGt4fNbfTxkeBEYz96qPlcb0dUb0OldFw+k206fooHtHz6qrkcf0WtXqp4gyIxgcm+iPYOQS1tI0S466yoFcNT3enlsOnnENp62G6uaJ2wk1LSI4cF7g154i0NjOcuwcK2qvaPtztN0lu2ktIupjJDBUOpqXTczqcN7eacy9mc47aWaZ0hJw7kOQaAq5X3uo0/PVVMFBRVZnbH6FUxzmsfFK2WOQBpGS17WnByDjmCraqdrO0fUd5otMWltsZW3iqdT9o90zRUVVQ8ccshDjw8TuZa0cI58LeeFDM69o0dWqivUuudsNdpOntNZpyrkt1Lp74NNY+0VLnzWyXs4o5XvJLM4hjYyVrWgnOeJxyvHo3U22uh0TWaY01pa2RRaTke2smqaRsd0ZE+YVktIGyPD5GF1N2j2MZx8EbgTw5BmO4av242WW/2mt2ZtuF4hqKtlDcbZUnzKK4ENdVVMrZX+kx0YaxsZayMPBa1vHkCw6W47y1JctR6rodCMFZqCpp562llj7WppxDTCWAiNz+0YxsXZkF3Nxwwg8Raat7xaNOGPfnPztq9iswjak1XremraOC8aHdWNp6mpidTG1yskqHOM0rqV3ixj55H8AAcAcZwBhqzX20Gp0hUaVv1loaO1Xa5x3HziOgczjkhhDY4InuJYGxRva0hg4wOFr3EABVrWu1HXXw9JBqTTtPSXaGR7q+nmlkdhj3NlihHC/LAzDDnPEehIGWqztX61v+t/MW3zzcC3NmbAIWua341wc8kEkZyB0wpsX1TEzWP7c2rOmmqm6Oq3W7V9ir2Hh83udLJnwHatB962O+T/ALxFpHyge0PSr3cMWqLFUTQtPe9j4JwfzXS/etakbTTzRVABBhkZKPyXA/sWdGxrUB0p5RbZVqSKXgh1PRU9FI7uf5xSTQ4/PDFerMTSUNq6TDc4iIowREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAXBIHVcr5d3Z8UGkXe3ur9oPlCdRZIkitt8o7aMdAyjpWZH54f9eVJsVIGwOefBQXp64ya93o9W62lJk89u96uPGeh7SdzWH7CFkLLT4oyG+Heq18nNepj0hFOrQBx5IAGeZOAP+yszUekdY6KLtc0T7bL8AVMU5kpphUmF+GyMdJEWghhacnjA5Zz3ZkDVlLwlzuEk9fYQo31FdNQ3itrm1+pKjivWY7hPVVDgyoaRg9uWglww0DOCenrXM5NXU45K+47wNVFXVdNRWushjqX2yo7OkpHRHsnBzKZwdjighk4ZIx0ifh2ckKlXGo3lrBS1VwuFFDBTU9E6GpeYIGtkjDTxSOyQXPDgSMkHtB6LT0XxcX6ulnZVSbWKLtYz8W83KoBHIAdIcZwAOfXAzlUOoGrHRyUk21SikifFJA+N90qXNfE/PGw5i5g5Oc+JVXJznpH9O641uXfQ2u7hdhcbrb2yVl7p4rtxhzYxIyZjXBxBxwnhILh0bnmVa9wt01vrZ7fUhvbUsr4ZOB3E3ia4g4PeMjr3hXdV3DUNHVmGPVE1SYnCRtRT1UjmF2B8kuAPLAGMAclRKmmnnkknqHukkke58j3nLnOJyST45Kmxzbu8tjUGogLoX4HPhI+5ZH6l1G/Te0Hd82pwy8JoZbdI+QdR2VVA4n817lA/mrXMyWej06KUtpbH1u7loK+QAma11UlPx/NJjeG/fGFc2efuiVXPTTSX6Dm8s8896+laWybUbdX7LtIasE3a/DNhoK8vzniMtOx5P2kq7V2riIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICtbahqBulNm+qtTukbH8EWWtrQ53QGOB7h94V0qBt+S/GwbrOv3xy8E1xt7bXEfF1TKyIj817lzadImXtI4rRDU9u2WaSS8V9znjzI2iijc4/Pe/id7lk1PbHCkIDDngPL1qNd3fS5ZSXCr7PDZKqONnsaz/uVPNVaQIHDHdnHrWX/AJNebbimkoD1bb8sc4txnn/8KxrLouHU+qKaz14qW0cge+Y0wHHgNJawF3otLncLRnmSQ0AuICmnUlmkqZhDFC5xllbEAB8oucB348VSZdmccb6jzTUtUI6ntmiqoaSQuZBSyETuLOLs3N7VsTWl55fLHCcFc/5HV6xEItm2dbK6upZSu1hLbnTROlxWTtZ5qXOja2OZpZxB8ZdIHM5OPZSE8IAzR4tBbLJooaaTUFPBLUMhzVfCvFLGXvnmfmHgDC9sMcEOC4N7SYknkAr2uuxyopIZblqLUDGNc+onM8UDqhskLGCTtQ4uBe6TtIg1vUmVvM+ljxHYXPT/ABd3rpYJwGsAZShzI5X1XYM43F4y3DZnuI5gR9+cqC2WI62OCO0oav8AYLJTVULLF2hhlhE7myzMmfE9zjiMvYA04bw8x1zzAIIVu1NrewlobnuxjvUs1miacSUzrfVtqo6ol0E/YGPtGOeY43lpJw0lrjxeBHLoFS9UaNgstPR1ENxFSa18rWtFM6LDYuEFx4jkguccHGCBnrkCemWOjmawi8UTnNc0MJ4fcpXFv+Ft1C5ks4nWW6Nm+iGzgH7pFaLbW4Pfz5EY4sKY9lthdfthW0bTYaC801TJGD84RNlB+2NXtmvreY/ZV2mv0a/vDZZ5PLVB1Xuc7NqqSd0s1vt0lqmLuodTTyQgfU1jVkaeeQsHPJEalZdN2q76d4yZLFqmsAbn5MdRHFO0fa96zjHUq115s20aTMOUREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQcDosUfKNXFo2MWfTbHkSXnUdLluPlRwMkld97WLK9Ybb+MrrxqbQmmYzkUsNdc5G92XGOJp/wAar7TbgxWlNs1eLLWEM7EtNsodMRy9iGmaplk9XcP2KR622jsyW4JHM4HVc6AsbaPT9DGI8Yi4sesklXJV0PoFxj5dOncsmv2ta1vqRNeLDRzRNnqMgGcxycJaSG468J5g5PQ9eeDyVrV2mImNvVJ8K04onUMcoMbWufUNDw5rQ7I4MkAvaASQG5HepZutuDg6Nw4stx0yVZtdYWxuL2Rs4mel0ycnvUVpTVnijqiy5bLrTDUtZLqmCMMOfRa3Lscy1o48cWDkZwMY5k+iLfOz+3tqaymm1NTxiExcE8Q42yNfHxkgEgkgkN5HvPsUjXfTdMSSabjLsuPLkO/3q36qwPA4RH6IHcM8uvTu6qLWe0u+GfdGt201T0dcaaWsZOAz+VjGGcPLmMZ5Z5jODjBwF9VmzWFlrr7mdQ0QfRxmSKmeczVHMZa0cRwcvHqOcjPMi76uxdmTG/jDic8IPXkVQ6iyNHCxjDwtPI4weQwAD4j3KSt7e7maz7o3baMvkxE7AHTn/wDeSmjdlt7J5NSWSVh4a2FrOE94fG+M+8K0BZCHOdlnEW54gDjl/wDqkvd+phbNX1QAAElO1wH0ZAf2q9s+TTJVXz01xyvvyQdzNnuO1TQExIfHJb7gxvhwGaB5/RZ9i2UrWFuQynQO/Jq7SRYWRX+ku1Ixp6ZjmZUsP5vH9q2eHotOk6wyM0fU5REXaMREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREHA6LEneVt0l02yQSSDibSWKBkQx86WUn71lusfd4OziLWdlvRaAysoZKTiHe+N/EAfyXn7FW2uvFimEuC3DfVadhtjaeggia3kImjH1L31NECOh+1dtpINHGAObRg/UvXK0PbkgLO0jRZi86rRrLcMucWjI5DkrcrLQ48Xfnv4QMK/qmFp5AclR6unbxYwDnx7lFauqxW8o4rbAw8QfFyySMqjVGniGl+QORwccwpJqqaM8nDOPFUerpI+Ita31hQzVNXJKMK3TrOIfFh7RkAY5g+pUOu0zxcTWt5A8hjIUq1NAHO6cvvVOktLAcluc9V5CTiRb+DIDscDuEg81dey7T8tPqvt2x4a2B3GT0wcY+9V/4JY0lzmDJ9SufZ7aGCrqJuzAJcyMHH1+9WMEa5IQ5r6Y5WXpWyy2Xfw01e6SNzfOq+Nsjh3iage1+fuWyMdywg2N2Qay3pKe/RxcVPZ/Oq1zu4Nji7CM/W5/vWb47itfBziZ/eWXmnnHh9IiKdCIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAo427WF110JNcqaLiqbJM24MwMksbkSgfkOcfqUjrz1lNDXUs1FUs44aiN0UjT3tcMEfYVxevHWYe1nhnVi3Yq1r48NeCCA4c1VnT+iQFZVpM1oqprZMSX26plpHg9fi3lvP6gro7TLch3sWPOscl2IiXNRKM9VSqubPLvyvTUPcMknn3Km1D3EElQXtMJ6w8M78knrlU6ctJwQu+pkweRXgldkkqvNlilO7olx1avJJz54XdK45wV0OOTheRMzKTSHycYyfcq3QVRs2mqm4NAEjw/s/pu9Fv2dVQpDwt9Hrjl7V7NcSuordR2mFhfJHGJDGOrnkYaPf9qubNE6zMK20aaRCXtzrSZhodR64mY0urKhlrpXfi4fSkP1vfj8hZJ96snYzpT8CtmOntPPdxzRUbZqh2Os0uZH/AKTyPqV7Lbx14KxDJvbitMuURFI5EREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAXBGVyiDDrXtfR0G2rWWlRK1tXBLTXQR9C+GpjBDx6uNsjT6wqjbqozUrXZ5t9Ej2KCPKlXjVOxTa3sx296PwTcKCs0/c6d3KKsZBI2eOKTHe5ss3C4cwWjCvDQO0b8ILRT3ekGae5UMFwp2v4XZbI0OxxD5WAcZx3FVsmyTe3HTv1SVy6RpZJM0gd1PVUuqfgEDkuiPUFBVjMmYXnkWu5L4qJ45COzdxfRGc/YqOXZckcpqtY81Zjq8NW/BwvC+T1qvjSGpbg3taW0zuGMgnDc/aqVcdK6ot+XVtpmgZ892OHPtGVXnZMvWKz/SzXaMccptClyPaTkO5roMje5VBliIZ29fcY4oyejGlxXlqLlR0ALbZShzxy7eo9Jw+i3oPrU+LduS3O3KHGTbaV5V5y5pKGapuFJC+GQRvmZxu4TgNByT7MBeXWd9+CL7aYH9lPf9XX2isdno+L5DqiZrMn1iISPPzQ0r0Vuua2z6agtgla+tuDpJ5aiUAmCmz92eE+wBQXufapr953fos1/DnyaY2b2643albxejJIQKaOod4ue+biA7mxj1rRw7JTBz11Ucu0WzdeTbPExkTRHGA1jAGtA6AAdF2oinQiIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiDDfyrGz+TWW6VdL9TUolqdF3ahvzSB6QiDzBNj1cE7nH1NWI+57q03fZPY6eWTilsNbPZZhnpEHcUX6Mg/NW1PavoOi2o7M9VbOLiWNg1NZ6u1ue8EhhmicwP5c/RJB+paft1zZxtZ2L681Vso2p6GvlhlljjqqaWqo5BS1FRTO4HuhqMdnI1zHNILXHIAKkx26w5tHJlw0Peebcnp7FW7GZG1sMR6ukaC0espUQRU0MUsQxxsD8Y8ea8mmKh0+qaSBwGJamNv6SnryRyyDe0NjDXAkADqqRrBhOm6x3CcsaHYz61XJwHhwPj+1UzVTeLTV0bjpSvcPaOa6coKqXOeHNa7JLuY9SolXSYe4HuGfaF7ZKxvDG9xOXvOMJXtc4vwMkt5Y65UV+cpK8mPm8xrGbTOzfUlTSVRirJqeKxUj88wZRwuI9YZ2pUleRi0DHS6Y2j7TpqU/+NuFHp6jkxyEdPEZZmjx9KdmforGTfErbjXV2mtnlloau7XGsqJ7k+koIH1E0jieyiYGMBJcSX4C2i7imw697v+7XpjQ+rKeODUVQ6ovF4ijORFVVMhf2ZI5FzI+zjOMjLDgkYKiv7O4ZBoiLh6IiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiICIiAiIgIiIOCMqnXiwWnUFG6gvFGyqpndYn54T9SqSIIyq93vZ1UtDII7pSRtGGx09e9rWjwAOcKnQ7tGhqOqjrrdd7/BURPEjJDVtfhw5g4czCl5F7Fpju80hHU2yu7uJdBtCuTM/PoqZ/+ReOt2O3yvo5qGq2lV7oqhhjkAttMCWnrz4eSlFE4re5pCAJd0ay1DGxy7Q9QNY08QEUVO059vAVV6TdY0JE6KS46i1TcHROa4CW4BjSR0yI2Nz7FNCLzWZ6vVDsGj7BpiHsbLbooDjBkEbeMjwLsZI9SrYGBjK5RAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREBERAREQEREH/2Q=="

class="d-block w-20" alt="헤어세럼"><br>풍부한 영양을 공급하는 헤어 세럼<br>

자연 유래 성분이 두피에 영양을 공급해 유수분 밸런스를 유지해준다<br>

푸석해진 모발에 윤기를 부여하는 헤어 세럼<br>

ORIBE by LA PERVA<br>

파워 드롭스 • 30ml, 7만9천원<br>

</a>

<br><br>

{% elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p == 0 %}

<br><br>

지성<br>

<br>

피지와 각종 오염 물질 제거.<br>

지성 두피는 과다한 피지 분비로 인해 각질, 먼지 등 각종 오염 물질이 쌓여 세균이 쉽게 번식할 수 있다. 피지 분비 조절을 돕는 제품이나 두피 전용 딥 클렌저를 사용하는 것이 좋다.<br>

<br>

-지성 두피 케어 제품 추천-<br></a>

<a

href="https://www.amoremall.com/kr/ko/product/detail?onlineProdSn=52532&prodSn=189570&utm_source=google&utm_medium=da&utm_campaign=ao_google_productdpa&utm_content=ryo&utm_campaign=ao_google_productdpa&gclid=CjwKCAjwmJeYBhAwEiwAXlg0AaaxDRX5U3cftRg1s_muTB8qgVrTzC-MPMapzLLPAmQu_ZYgQ3SG7RoCQXwQAvD_BwE&onlineProdCode=111410000499"><img

src="data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAAMCAgICAgMCAgIDAwMDBAYEBAQEBAgGBgUGCQgKCgkICQkKDA8MCgsOCwkJDRENDg8QEBEQCgwSExIQEw8QEBD/2wBDAQMDAwQDBAgEBAgQCwkLEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBD/wAARCAFRAVEDASIAAhEBAxEB/8QAHQABAAEFAQEBAAAAAAAAAAAAAAYCAwQFBwEICf/EAEUQAAICAQIDBAUHCAkEAwAAAAABAgMEBREGEiETMUFRByJhcZEUMlKBobHBIzNCYnKCktEVFyRDU3OEouEIJZTCNIOj/8QAFwEBAQEBAAAAAAAAAAAAAAAAAAECA//EAB8RAQEBAAICAwEBAAAAAAAAAAABEQIhMVESQWEDIv/aAAwDAQACEQMRAD8A/VMAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACiy2uqPNZNRXm2UZN3yfHtvUebs4Snt57Lcg92q6hmylY7XW3+l3v6vBATKWo466R5p+5fzKXqK/wANL3z2IRJ2P85kXTfjvN/cuhSqMdr16oP9qKYE4eqVp7dpSvfMo/pVP5tuO/3jn12irVZONW2PFxcOeMev1IhHGHCmTg65VZiZ84xjWo7WJzU213tbrYmju8tWsgvzNcvdZt+BbfEGPDpfRZD9naSPkDVZcQcOahLBs1HJrmoqcZVXSipRfc13HuLxzxfhyi6tfzJJP5ttjmv925n5Lj7IxdW0/Nl2ePlwlP6De0vg+8zTgWl5Gs6tpFGZHPg7LIKTVkNuu3hJd3wZ0H0b65reXPK0jWd7XjxVlVze75d9uVvx9n1mpUTwAFAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAeNJ9GaDM4XplN24VnZbvrXL5v1Pw+0kAAhGToep0yf9knJecPW+4w5VW0y2uqlHbwlHY6GeNJ94EK05ytu5lHlgmopfeRz0lY218ZxTe9fh49DoupQisnGlGKW7fd9RAPTDnWaPplmrU1xnbjUuyKl1Tkl0T9m5L4WPnfU8zVNbzI5GdJ8lUOypi3u4VptpNvq+/vZeweFdb1NpabpGbl/5GPOz7kfY2mY+P8jx71hY9M7KoTkq61FJtbtIzyfFNcn4M4G16OkY1OXivE2rjv23Rrp9Hv396R0LQ9BxtErn2c5WW27dpZJbb+xLwRtgaAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAa7U9nkYy8nL8CB+lvDWoadLCa37amcUv3XsTrPalnVRX6Mf5kT49/KZONHyaj8RRNtOaen4rXc6YP/ajJNbw5d8o4f0vI/xcKifxgmbIAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFLait5NJe0CoGNbqGJV861P3dTDt16mPSupy9rewG1BGsvirsd0uVPyit2aa7ibUrd9rclr9qMfuiBJsi+qWZKxzSS9VMi/FslkZKlU1KVbjJLfv2LVGVffJQsraju31k5PcwtVjKrMVlcW9119ZrdeW4Et4D1GrM4fx8ONi7XT4rFsh3OKivU3Xthyv37rwZJThEeGdLx8qzO0+/XtLybfn3YWpyi5LffZprqt2+m/iSHQsniHT8qu2HHmfmY6frYefRW3L2drs38CQdWBHIcWThKKydLm4vpzUWqe3vUuV/Dc2VGu6VktQhmwhN90LU65fCW25RsQeJprdPc9AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAWrsmmj85NJ+QF0s3ZVFCfPNb+S7zWZOr2S3VPqrz8TXTusse83uwNnkazLqqYKPtfVmuuy77m5Sm39ZbUeZljKqz2l8inR3PmjbGXX3ST6fBgazO4iisp6bo+M9SzY2KF0IT5asbpvvbZs1Dpt6q3m901FrdqxHjHhaHY05PEumqy7eKnG9KuclFylyyb27k33+Bi4/CuTiYmRo1fDmFLS8mm2i2mGsZPLKFjk7PyThyxcnKTck0923uVatwtg63gYGm6rw3nzq0zKozcJ4+obui+n81NOU478u/c015ona9Lt+t8N4+S8bI1zBpt7Ky9RuvjXJ1VreyxczW8YrrKS3SXVs9o1zhrJldDG1/TLnjwjZcq8uuXZwk2oyls+ibTSb72mYev8BaBxTkY2ZxBw7qebfiNqqd1tD5a5OLsrUefljGxQUZ8qTlDmi3yyaeDxb6O9A4i4gxuOdW03iF6no/Y5ODPHuql8neOrnFQp3lGxy7ee6lGXXka2cYtOzpuq+JOF4xvujxHpTrxeV5ElmVuNPM9o8732ju3st9t2zJjbianRXqODlU5WNbBSrupsU65x81JPZr2pnK+MdF4f4m1nT+LOMfRf6QMnV9MpqnjZGNj41sqKasinMjS1TLaztb8WuDi4ylFpveqMlNzbI9JmVRK2vI9FnGva1Rc2q9PrtjJfkvmzjY4ye1vdvvvCxfosm+1+PphcO8ZYPGmI9V4S0zL1LSJOUcfU4zqhj5XLJxk6eaSlOKcWuflUZbbxcls3tYZNNem/wBI63StHjFtWRzLqoqv1uVNzUnHZ9Nuvil0fQ13DnDGFwnhVVcCR1jStJu3y6tIyNN58XGdz7SahXLkupbnJt186jF7pRRj8ZcE4HH12mz4qxMq+rT5W80MXTXU8iq2DjZROU7J/k5Pkk+XlkpV1yjKLimROkjXEXDWHUq8jiXSouKXz8ytbKUVKPj4xaa9jTGHxDhZOdLR8lxx83lU4UzmpK6t78s65LpKL2l7Vs90um+l0ngXQ9JyZ6jgadrL1HIVTy86+vHnflzrgoQstdkXF2KMYrnSUnsuZvYvS4O0xZFEo6BmPFrpsqnjSlXyc0p1zjZBdqo1SjKvmTgl1k2tn1NauJbh5uRgv+zSda36xj0Xw7jc4vEzfq5VKf60XsRnCV0a4Y08PMhGqCXa5E65OW3Tq4zbb9rRelW13blZTjGzcbLipUWxe/hv1+BkHP677qJKVcnFx7nubrA4mnBqvMSmvpLowJMCxj5WPlwVlFkZL2d6L4AAAAAAAAAAAAAAAAAAAAAAAAAAACiyyFcXOySil4srNLrWW1aseMtlFbv3gXMjWP0aVsvN95rrb53S3e7Zah18S9CK7wLLjJvu+09ULF05UZSgvYVqC26JFwYiVn0F8T31/wDD+0zI17lXYrzIMFSmv7tnqlPbrW/aZ/Yx232HyeL8EBr+af8Agy+w87Rr+4n9hsfk0fIfJo+QGtd0u7sZ/BHjufjVZ8DZPHj4I8eNHyQGsd/Td02fwlPbx7nXYv3TZvHRQ8aOzA1zsjL9GS96KZSj5P4GwljR26ItyoQGBLZ9yZbkk/Azp07PYtTra8FsBr5Q36otup77pozpx80Y1iiu5gVYeVk4dqsqyIw29pJsTiLCthGN9ihPubS6EMuly7vcwL8qUN3zNAdWrnCyCnXJSi+5p77lZz7hLijssxYWRa3VY+Vb+DOggAAAAAAAAAAAAAAAAAAAAAAAACK61N/0lb18vuRKiJavLfUbkvpbAW6ptLqZNc2Ylb67mRAC/Gb38y9GfgzGh+Jei/Eui/GaS9pcUtyzFlcWQXU0Vwku5otrbxPVt4AZCcF05UVrsn39/sLEX0R6m2wK2od5blyIpl3FL226sD3ZPu2KHt3M8k3v3lEgEmvMtTlFnsvxLMmB5Oa3MeyxbbblU9zHs8kBbtsiu8wMi9b9DJufXb2GuyHtuBi5OYo+q922abPz2k+nQy8xvmS3NLnvo9/Aw1FGn51ktQg4tx2kjvGm5tOoYdWXj2KyE01zLzT2f2pnz7o8VLU6ovu50dv4Ji48L4D+lGc/4pyf4molb4AFQAAAAAAAAAAAAAAAAAAAAACHam+bUL/8yS+0mJC9Y3xM62eRF1xnNuMmvVab8H3Aewa8i/BmJVkVT+bZF+5mTCSa6NAXovdl1eBYhuvAvx32TAux8C5FlqLZcjv5AXE/ArXcW1vuXIgVxaXTY9j5ooXuK107u8DyXd3FDexVLuKOveBQ+8ok9typvw2KJdOgFEmWZtd3mXJPv9ham/ACzNmNMvzZj2PzYGNc+/Y12Q99zPueya9hrsh9GtzOjU5j2kn7TS5+2z2NxmveaW3iaXPfR7EajH0V/wDdan+svvO68JRceGNK/WxKpfGKf4nCtETlqlXT9Jfed54aio8OaVFberhULp/lo1ErZgAqAAAAAAAAAAAAAAAAAAAAAAUShGcXCaUotbNNbplYAh/FfCemx0nN1HTYW4eTTTO5dhJJScVvtytNeHgjkWTq3G2NGN2nal2sJLdc9MW/skvI+iMimGRRZjzW8bYOEvc1scgWDXXCNXZpcu6227upmzVlQ2HH3pAxXy3fJWl381E4/c2Z+J6TuL2nKzDwpRW7b7Zx2X1xNrm6fW11rT+ohvHNdWFpnyapbWZL2aX0U1uTLPs6Sa30ucQYfS7Ra59O+F6a+4VenPPXSfD10n+pZB/iYfC2NDK4cwLLIRlJVcjbX0W4/gZORo+JLpLFrfvghl9nTPx/TjfNbT4Y1D92Nb/9jNh6adutnDGqpeapi/ukQjiTHq0zDgsSKqsuny7x6dF1f4ET1PL1GvHVdOfkQaW/S2Sf3mbbLmrkdmfpw0+Cbs0LU1t3743/ACUf1/8AD0HtZp2oR99P/JzzhS+zUsXE+U2ytVsJVzUnv6y8fs+03lvDGmSlu8Kt/uifK/a5EpX/AFA8KS6Sxs9f6aT+4vf178INbuvOXj/8Oz+RCVwtpnabrEgtveR7W8rIws67Dxb3XRQlFxil37bvqLec+2enVZ+njg1LdrOX+it/kWZenvgtb7rO/wDCsX4HCcPWtUet49WRnTlj2zUXGW23XovtJzpWGs7Mnh5aUouPNHdLdNd63JvP21kTd+n3gp9FHO/8Sa/At/178JWNqurNk/L5NP8AkRi3g7SVNp4u/wC8/wCZRTwrpsZtwxILbxLn9PcP8pPZ6a9Ae/Jg5z/08/5GDkem7RYPaOnZr91Ev5HGePtWz6b6sfAzLaOa2x/k5cvqR6JdPeTDgPQNW1jgPD17OTsjkZmTRXOS6yhBrZt+PVyX7jG8qZIk93pt06bar0rL+upoxJelS/Le2NpNz3/V/wCTGXCeN2j7aqMnuZOZo2JpulX5FVUYyVbSaXc30X3jOc8kxg38eZeS3y1QhLy3i38EyQ8H8O8TcdytsozMfHoocO0dnqy2lzbcqSe/zX37e8gun4Csy16nQ776GMRU6Hn3OvllLLVUX5wjVCS/3WTHG23KXw2fD3o00PQ1C+6VuXlR+dZOTUd/Yl3EsqqrqrjVVCMIQSjGMVsopdyS8i6DqwAAAAAAAAAAAAAAAAAAAAAAAAAAAcy1ahUalk1dyjkz6exvdfYdMT3IBxdRKrV8uxL1XCq77OX8GWJbjSX0uViijnfGPJqeo8mO+aNFnYdPNd/2s65o+B/SWdVGUXyvZy6d0V1Zz3iLhfIwOPNXw9JxY7O6ueHBraDtuUVBPyj2k0m/BJmeU6WNrwbpl1fCryp0ShRVn2YtM31VsVCLlNf/AGdpD3wZmXU7zS2J7m6DjaVwfVpOK3KOn1QUZy+dNxfrTlt+k95NvzbIXnW42HTbn5VkYUU188pN7dxZMhe3PeLbJZGsuhb8mPFRiv1n3v8AD6jn1WrfLtd1LElNOrFujTXs9+mzjL/dF/En1lOdka3dg21wWb8qdChJ7J3ys5Iwb/baRzH0ZcF61rGs8TUWQsjbpenZGVkLb+9qnHeH7T3l8Gc82ta6P6N6fUy8eyPWm2Nsd/BPp/6o6G6Vvvt1T2IV6HtIztX1LUr6oc2Lj6dOdst+va80eyil7UrP4Cd1vnSe3evtNzwzb211ihj03ZVi9WqEpv3JbnFuM9clpGi5etXveyVkejffKc0mvg38DsnFU7cbh7Lspi3LlipNfoxckm/d1S/eRyjiTg27jbgHi++Fbm9B0yGpxlu+lit36ef5KnIT8uZMnLtqNEoQv7O2uW6T3Ul9F9U/gdc0LGVlOFq8X+crhY9v1l1/E4fwrTqEuDNL1e+qSotlZgxs3+dOrl3T/dnD7fI+jOGtCv030fcO5mYt5ZmPOx7fRnZKyr/85Q+A4zUtxk3Y0ZPf2GBlcuHp+VmT6KquUl70un2m3glKtbeK6fV0I5x1d8m0GOOns8m6EHv4R6yf3I1UlcE4wt5tXVbfSuqEOvm25P7Nj634K4Pekeh3QtDnS4ZGPgV5dkGusb7N7bV/FZNHytpPC2p676UsHhnWMayEtQzKJzrffHGtjGfNt/lSTPu5pNcrXR+BnjGrXEsnE/tPRdJI1vE9P/bHSn1lKO69i6v7diZa5p0cTUraIraMJ7rf6L6nPuJdRUOLdL0uS/J5FMoSa707JNL7YRHLwzx/WJw9hQd1tk4r1YndPR9hTweFcSu2HLOc7rW9vnRlbJwf8HL9RyTS6K8aNtdr5Zdpy2brZRSfU7vpuIsDTsXC33+T0wq38+WKX4FkW1lAAqAAAAAAAAAAAAAAAAAAAAAAAAAAAGp1TRK9Tvsdr2jZR2b96ba+82wA0HCWBLGwFfZBqdiS69+y/wCfuKsnQPlHFGLrrdKqx6Zbx5Xzyu2cYS37uVQnatvFyi+nL13cYxhHlikkvBFQFjLx1k4t2M/72uUPitjm9WkR1zUdH0m2MZUPKWo5MWu+rGnGcEn59v2HR98ef2nTzRaLw8tJ1PVNTsuVk8+yEako7dljwTca/a+edst/10vBD8PvUZ07gOK9JepcQZWKniQlDKxnJdHdKGzfk9m5v2PlN/w/wLw9w1qetarpmNL5Rr+Q8jNlY1JSe8nypbdI7zk/r9xJABD/AEdcB1cB6Xlad8ojkSyMqdqmo7ctXRQhu+r2SbftnIi1uO8bNzcOKW+Fk8klv1UZOXI2vJqEvgdZNHr+kY92nZ9+Lh75VqhbN1w3ssdfcva9t0l7faWXEs1GdD4bq1/D1qvM27K/Fen1trrXKS55yXmnvQ/fBlHot4CnovBGZpfEOJy5GtTt+WUy2bjW4KpQ38nGLl7Odks4V0zJ0jh/BwM6UZ5ca+fJlF7xd825Wcv6vNKWy8FsjckVyjU/QTpUvRzo/o/0PNljw0nNjkLKuW9k1KUu1b2Xe1N7Lu9WK326k04p0uiHC9uNiUxrhgVRlTCPRRhBbbL91MkZatqrvrlTbBThOLjKMlumn3pidJZrluFpmdHhuOs5KaVubONEOXZxo2UE35804Tmn9GyJHuItDlxLquiaSkrsbKzp4V8Yt7x9SFlu78GqW5L3HY9V0qGRoN2l4sFFRpUaIp9E4pci+KRjcHaRdouh10ZcFHKyLLMnI6ptTnJtRbXR8seWG/lBCmNLlejjEu9K2n+kiDhB4ml24c610bt3Srn5fm52xfugTgAKiHHGE+WvOUd012cvf4fiQTWOB8zJ4k4P4gxsOycZ5ypzYuLahGuxyUn5JwjLr3dF5nVeIdPyNU0m/ExJVxyGt6XY2oc66rmaW+3uMvAxXg4ONgu2Vjx6YVc8u+XKkt379i6md65jn8P9hxvRpNUI8mTk1XRjLqp0p801/DGZ1gwrdMwLs+jU7MWEsrHUo1W+MU+j+9/F+bM0hJgAAoAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA//9k="

class="d-block w-100" alt="샴푸브러시"><br>노폐물 제거에 효과적인 샴푸 브러시<br>

음이온이 발생하는 마사지 돌기로 두피에 쌓인<br>

각질을 효과적으로 제거하고 혈액순환에 도움을 준다<br>

LEONOR GREYL by CHICOR<br>

샴푸 브러시 • 1만5천원<br><br></a>

<br><br>

{% elif m2p == 0 and m3p != 0 and m4p == 0 and m5p == 0 and m6p == 0 %}

<br><br>

민감성<br>

<br>

외부 자극을 최소화하는 두피 케어.<br>

약한 자극에도 두피가 쉽게 붉어지고 트러블이 발생하는 민감성 두피. 자극을 최소화하기 위해 청결 유지가 중요하며, 진정 및 쿨링 효과가 있는 제품으로 예민해진 두피를 케어해준다.<br>

<br>

-민감성 두피 케어 제품 추천-<br>

<a

href="https://chicor.ssg.com/item/itemView.ssg?itemId=1000026587189&siteNo=7012&salestrNo=1020&tlidSrchWd=%EB%A7%88%EC%8A%A4%ED%81%AC%ED%8C%A9&srchPgNo=1&src_area=chlist"><img

src="data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAAMCAgICAgMCAgIDAwMDBAYEBAQEBAgGBgUGCQgKCgkICQkKDA8MCgsOCwkJDRENDg8QEBEQCgwSExIQEw8QEBD/2wBDAQMDAwQDBAgEBAgQCwkLEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBD/wAARCAFRAVEDASIAAhEBAxEB/8QAHQABAAICAwEBAAAAAAAAAAAAAAQFAwYBAgcICf/EAFEQAAEDAwIDAwYICQkECwAAAAABAgMEBREGIQcSMRNBURQiYXGB0QgWIzKRscHSFTNCRlJyk6HTJDRTYoKEkpThZIOy8BclJjZDRHN0osLD/8QAFwEBAQEBAAAAAAAAAAAAAAAAAAECA//EACERAQEBAAIDAAEFAAAAAAAAAAABEQISEyExYQNBUXGB/9oADAMBAAIRAxEAPwD9UwAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAANdv2p5LTeLZZoaNs0tx7Ryvc/CRMZy5XGN1Xm6bdF3A2IGuLfq97la3sETOEXkXP1nWsuVxgpVnStcjkTOEjZj96E0bKDwzVnE/V9sSTyO5JGjOirDGv1tPJb78IPirTK5tNqjkxnCeSwfawzecXq+zBlD4Ar/hMcb2KvY6zmanglFT/wAMqnfCe49qu2tatEX/AGGn/hmfLFnG1+ipwi5Pzrb8Jjj+9cprOvX1UEH8IzN+Elx9VUzq+4Ln/YYf4Y8k/helfoeD89mfCO48O66uuSL/AOyi/hkuH4Q3HJ+ebV1yX+5xJ/8AmO/4OlffuUGUPhKm48ca5Fy7VdyVM4X+Sx/wy7pONPGB6ortS3Bc9y00f3DXkidX2llBlD5EpOMXFdyJz6irV9dLF9wt6bi1xMdvJf6hd0600X3B5IdX1JlBlD5ri4rcQ1cqPvsuOu9PFn/hJ0PFLXHV98cvrgj+6PJDq+hweH0PEnV8vLz3VFyn9Cz3Gz2/WN/mx21ajvH5JqfYPJEx6SDWqK7XCfHPOu/g1vuLRktS7rUyJ7G+4s5SosQQkdVImUnav6zU+zBCrrtXUaK5GQSY7uVU+0XlILoHlt+40yadRZKvTvbsb87s6nC+xFb9puOh9YWvXumqXVFoZNHT1avb2cqIj2PY9WOa5EVU6tX2YJx58eVyLZY2EAG0AAAAAAAAAAAAAAAAAAAAAHHgePXG611315dqxZ1ZFbXpQUqNREw1E8/PpV6u38ERD1W73KCz2qsu1UqJDRQSVD1X9FjVcv1HimjUq57Oy53Jc1lwe6rnXHWR7lc796qWDbaRksmHOqqnPfiZ6fUpB1AxjoXI+Sd+2POmev1qW1ExUZlSl1LK1sLsuTvGDyPUtHb3TP56WN+P0kz9ZrTaWga9OWkgTf8Ao0L/AFHOjpnb95rzH5cmCNrSGOmaxMU8Sf2EO+WtTLY2p6moYoXfJpudlXzV6korbhUSNzhypjwNZuFbUJle2dn1l/cnbKatcHdRCKSvuVY1zsVD+niU016r2Ox5XJ9JNuCcznqUdQ13N0KJ8N6r1dhal6746lvTXStXCLUOVOuDWIEXnT6S6pEVFRFToBs9JX1Pm/K9euyFpDXVOMds71FBS5RGqWsCrtsBbRTvcuHKSo0a9URzUX1oQIFyqL0J8GeZFwBa0FPC/wA10aY8Db7HQUjnoqxb96oqopqtAq8yKbnY085q7Fk0b/p+20eEVGvRVXqkjk+03OitsDURzJalF9FTIn/2NVsTum5ulC7LURS5GKz+RR8v46p9tTJ94pr5RYhXlqKlP985315NhRVxjBWXhnPTrv3DJUjwLiOs6QysdO9yeDkRfsNj+CvqVJrffNHPYiOoKhtbC79JkuUcnscz/wCaFVxGpnKkio3bBqHAe8usHGGhp3SIyG8QTUEmenNjtGe3mjRPaY68Z7ka/Z9gAAqAAAAAAAAAAAAAAAAAAAAADTOLFHXXHRFZb6CB0i1D4mz8u6thR6OeuE67Jj2nl0eubJa6eKnVlQrYWo3Zqdx9BqmTzbiJwit2qKeavsrY6O5rl6p0jnXwcn5K+lPb4llP7afDxRpZYlfSWydWdEc+RGov7iku2tPwiitcyOJP11cqfuQ1SubW2KeSxX2mfRVlKiNdHJtlF6Ki9FRfFNjXq+5oj1bG/K9MN3GtZFvc57ZJIr566RMr+TF71K5tVpyNcvr6jOf6JPea/Xuu07FdDbqyXPTs6Z7l/chQ1Ns1jMuYNMXl6eihl+6TVekxXfSrURq1tV/gaSo7jpCRN66tT+y08gdY+IGcppO8J66R6fYd2WXiEn5sXVPQsCp9ZLZTHr3kehqv8ZcrgmfBGe4xu0dw5qfn3i6ovo7P7p5hDZ+ITfzauKeuNPeTYrXr5Pzdrs+pPeT/AEb4/hlwwn3de7yniiLF90xLwg4VSdb7fN/60X3DUGW3X3fp2u+hvvJDLbr9Pzbrvbye8g2yPg3wnRUxe73n9aL7hOg4ScMWfNvN39qx/dNOitfEPbGmq7/Ez7xPhtXEPOPi5W/4o/vF38jb4+FnDtuEZfLp9EfuJLOGWg2fNv1y9rI1+w1aC08Q87acrf8AFH94nRWniCqb6crPpZ94amNhTh3otnzb/Xp64mHZNB6Qavm6grP2DfeUjbLr92y6brvoav2ndth4gKv/AHZuK+qNF+0aYv4dIaahXzL/AFG3jA33lvQ2qy0zkVt3kdhc7wp9imnR2HXvfpa5r/uFJkNm1qxfP0xdU7/5q/3GpYr0i3XC30mGpWufhe9mDYqXVduiREV7l9SHk0FDqiP8bYbm310knuLGOO7tb8rbK1n61M9PsNbGceq/Hayt+c6X2NQwVes7FNGrO1lTKYwrP9TzB89Q3aSKVv60aoR310OcLIiL6VGwyJ2sam33JzooKlEcvc5qplDzRujNUxX6gvGmoI6msoaqKqpo2yJl72ORyJhcbLg3+z6auutLuyktkapTwfj6lyfJxZ7l8V9B7fprR1l0vCjaGFXzq3D6iTd7vV4J6EJVtxdQue+Jj5GKxzmoqtXuXwMpxhEOSMgAAAAAAAAAAAAAAAAAAAADpJJHExZJHo1qdVVcIUtbeauR6w2ynXGcLM9u3sT3/QWdY5GrGq9OZfpwY0m8AmqH8EMqJfKrhTMq6hUxzyxo5UTwTKbJ6ED7XG3eOhjZ6o0QvXzKqEV8rlXHME1Q1Nue/rD7EaQ3WN8i/itv1TZXSO6ZMSyuzsoRry6WV/8A4afQcLo9i9WNQvlkdjd2x0dI7Gzl6BtR/EunVPOVn0oPiTRflTRJ7ULWSV++6/SQ5apEkSJZU53JlG53VPHAGBNG2tuzqmBPW5pkbpOzJ1rKZP7bfeYZKhVXHNlUXC79FI7qh6LhFAs26bsbcZr6X9o33mdljsLf/P0uf/UaUfbvX8o4Wof+mTINjba7C3pX0n7VvvMzKGyJslwpf2rTVvKHqueZOhy2Z/e5TI2xtNaE+bXU37RplZDbU6VkH7RvvNSZO9V+eZ2TyJ+VsgMbW1lGnSqhx+uh3RKfuqIl/toa1FO9d8khs787rkGa2FEj7pWexyHdMJ0e1fbkp4Z3LjKE+KRUToaglqxVTdqL7DFJRQSpiSkif+tGimRj08E+gydongZEekp/IGrHSU0UUarzKxjEamV79idHMjvNcnK4jOkRU6EeeRGsVc4NaLYGOHPYsz15U+oyFAAAAAAAAAAAAAAAAAAAAABEuLVdSucnVio4htflMlo9iPYrHdHJhSmjRYldA5UVY15VUJUhFzuYJEwpla7Gx1kTO6BlGd0VTE7w9hnVP0lMDkwu4bdFXCHReh3XfodF6AfP3wg9e0fCLiTo7iRXUVbWws0/qC3yU9OjsOdz0M0bnqiKkcbeSRXyKmGM53dEwtvRaZ+J1h1Nxtu9db9W6yns9XXQXCNv8lio2RLNDQUa5VWUuWtXKLzSOcsjt1RG7XxD0XcNSau0HfaSkpqmmsV0rVucc7kw6iqbdU070RqoqPzJJDlvemfA0Ox8J9YcO7zdtFaQWnr+F+o6Sq7O3VFSrajTdVIxUWOmRyYfRvVdokVFjVV5cplFJFQnECfRvBLVvFaxXXTWoLk2x/GiemhR/ayVHk6OxUv7d6qiMYyNrUaxGtjRrUREwmxad4lXW461s+lJqqyXn8J2aS7VclqjfE+0onZdk2djpZUVJVkejF5mLmF3mqmVbq1g4Xa4X4Plx4QXTQlktN1m0Q+wPudNcIntra1KTsWuejY0cjVcrncyqqp4blhpjhrq/hfc21+hqW2utV3szUu9ia9tPDFe4adEZWwuRMcsysbHK3CZXkl3VHIpU+i4yyVfGN3DqTTVRHZqqOpo7dfFd8nVXSka2SrpkTHRscmzsp59PO3fG2Dh1xdn4h6ivNhZdLHZrpYbvVUVZpurgkfc0poZVa2fmWViI2ViNkaqRPaiPROZ3zimv3BuZnDa20GhrrRSa905V0tzgu006otVc45e0qklciqrfKOapY5FRdpnZRdzvrWwf9JVzp0uGgrTZ7larvTz0msI7nSSy00NNO2Z7YZGYqEkdFG9jonNaxqPcquciedfQ2abUnESLiXTaBbd9MOiqbHUXpan8CVPMxI6mKFIuXyzC57VV5v6vRc7YH8aUbxmoeHEFjc+xV7KmgbfFVUYl6gjbO6iTud8gquz+m1zerXIk28WDUz+JcnEHT9NRVUEOjqi2ULJKnlSWtfUsmY12EXEaoxuXb9eimtan4IXGfhva6ay1NNU68sddR3+G6yK6GOsusU6TTve3Koxs6unauEXDZe/oPQvNI8XV1rrnUeiaO62Wy3XTV1ko5bJcIXvuFVSMRi+Vx/KsRscjXKrMMkRERFVfO5UnaQ4k3DV+sNU2WG5afoKbTuoX2OGnmRz6usSOnhlle35VvL50r2phjscmVzuhUa40HceJ1fFRXjhxbbXV2y5U1VQapS4RSVNPDDUsk5qZzWduyV7GK1WKjWJzr570TDsnC7QWptJ6o1LVXzRVhmZedU3O9QXltc11TDTzKnYsSNYebKMa1FTnRE5l698yD2SPbCqSmbkWJMond6CXGmVT0GBLhRVxgsIWkSnZ3k2JMdwGZNjlV7jg4cuEA4V3gRqhVkcyBnWRyN+kyquyrkxW9FqbmmFy2BvO7v3XZPt+g2L1qYRE8NjkAAAAAAAAAAAAAAAAAAAAAAAFTcY2xVLZkTCSph3rTp+76i2I1dB5TA5jUTnTzmKvc5AK1iojsGZcKhDY9XJldlRcKngSWORW9QwwvTfJhkTckypndDA/oBhd12Ma7JgyLhNkMbkXChthlTKEWROpLl3TZSLIiJsBCk6KncQpvBFLengppo5/KJuzVjMs85Eyuem54brTiXxMstfLT2XRrauJjla160FQ/KZXfLXAjdK/R9kmiYkiVTOzrn3Brop1a5s6yPlR2U7mue/Dfm4e5FRcqVFfw90lbbTLHPDXVEPb1NSkGWzulknp3072o17VRyKyRURipypttjKGj6U4rcS77fX2vVGj222g8nfIk6UM8K9ojmo1vM9ypvlfTsTNTcReIlq1alLRaRp6qldBzsVYpZnxLlWuRUYqcvVu6ouc9e5ON/Uvbq3OPrXrdqpko7XSUidtiCCONO1fzv81qJ5zvyl8V7ya1dkU1XQmob7f0T4wWtKHx+SfH/xqpsmpZ1tVH29qZ5TJzMTlXz9lciLs30HZnE1i4w4zM6GCNcIqEhidEyYKlQt6E2Bud1QiQIqIWMEfeES4GIiITGoiKYIm95nTZC/Byq4TJiVyZOXOwmDG5dx8GOok5IlVVJtjp3w0SSStw+d3aKmN0Tuz7EQreybX1kdErvNdu/H6Kdfp6e02RERERPA0OQAAAAAAAAAAAAAAAAAAAAAAAAABR3CFaaqWRu0c26eh3f/AM+s4hf3KqlpWU6VVM+LCc2Mtz3L3FHC9UXDkVFTZUXuCWJrk80juTCqZmKitwdJG439IZRXJgxu9HeZ3tTOTCvT2hthemykaREJTsZ3PPG3TizE6obcNP6fdyV8SUzIKlUdPQ9pL2rl5nIjJUjSDlTdvM5VVUzyxheVF8trb1Hp5070rpY0lYxYX8qtXmx5+OXPmO2znbpumaj45aeno46+C4PfTzVHksbm0syq6VWK/CNRufmIq5xj052NUrKniy+uW6TcPrJJcqenlZTztqWJG+VqVPZK9fKEVjMLAieY92Z5UzEjcrIiqdc3COqprvo6lo46CiqKm1MhruzkWoa+aOnhc+ObLVdDyc2PNy9yI7bBcFhqKtt14jqbVDcnMktsrZat/k8j44eViSKxz2t5UdyOa7lznDkXG5U6jgsNx1BFqCnvstBcNMtdU1j0gkWJaXsud7XrjlXzHtdsqu9HhCvEetVmmult0LallrGolZiq7VZEWrSBV5O0jZK5KJO053K1dmxp4Jz5brhzLtQV+gaCeirpoYljiqokWeFWqyoV/NLhy9jHGxvzd3tRUwjnHO8ON3VlsbrHqG1OuEVsbVPWomdyM+Rk5Fd2facnPjlR3Z+dyqucKi43Qju1vpqG1097W5c9HVRUk0L2QvcsjKpysgVG4z5zkVOm2N8Jua3eqK8x39l3sGi7c6ro4GrFW1MrV7OVZEiVeVJGrhKZ0u6ojt2tRcZQiR2TUsdkraRuidOpNI+Kalp4ahUjdLFTyKzDudrkYlRHCjMIzljl+aitVU2np6jC5Hta9MojkRUymF39HcS4t1Q8yu154xQRVHxb0dZZFYkS0sVZVNa9WpTy9rz8svKmZ2wNYqPxyvXmwqcyb5pv4xPSsdqJtGjvK5W0iUzFankyL8mr8vdl6puvTrjCGF+tggbncs4G7ZINM3LkXHQs4W7IgKkRtwm52c7uOU6HRy56msR1e7wMEsiRtVyqZFXddyNHClwrmUqKqRs8+RUT8lF6Z9P1Z8DIsbHSOZC6rlbiSfdMpujO73/QWxxhDk2AAAAAAAAAAAAAAAAAAAAAAAAAAAFJdaVIJkqmJ5ki4f6HePtLsxzwtnifC/o9MAUcL0VEzkyuXKEXlfTyugk3cxcKvcvpJLVym4ZrDIm2DA7oSpE70QjStVMhYwP+cUt505bLxI2aujmV7OTlWOZzMcruZOi9c9/+phvtu1pU175rJf6akpVRiNikha9dvndY1VqrumcuTvx3Lmjp9RNubpamvo5KBebEbYVbJ3cu+/pz7AqkuOg9OXCnfR1dPNJHI2Rq80yqqI9I0d167RRphcp5qbZ3K5+gbJExkUU1W1kb+dqc7VVF5+ZfOVvNhcInXom2FVyruU2y4IU/iE1Q01hoLfQwUUXauSDs/lOfke9WfN5lZyovTGMYXvKqo0JYapHR1TJJonuk543tjVHNfnLV8zPevnZ5lzhyuRExs8y4I+cfSZq6qJNG2d8czIn1MC1EawyvjkRXOYrEYjcuRemEXPXKb53Rc9Fo61Udzp7xBLV+UwMkYrnSIrZedGovMmMJ8xq4byplFXGVVVtWO78GZq5whpfaqj0RYEY+JYahzJIVgVHzvd5iuV2OZcu/KcnXGF9RtlKzdPYm5rd0ptVTSv8AwDcaGmjWkexizxK57ahXIrX9FRWo3KcuOq9+2JdHQa2bJJzXW3yske5WI6NWLG3o1Ew3fx3zuYK26mjXYsYk7yJStdyI57URypujVyiL6F/0JrUwmDUR2XKbGJ78qdnuwmDE52EyvcLRgq6htPEr3KiIiLnJa2ajdS0qumx2sq87vQncns+vJWW2mW5V6ySNcsFOqOVe5z+5PZ1+g2UQAAUAAAAAAAAAAAAAAAAAAAAAAAAAAAAAFVeKXLEq42+czaRU72/6EGKTKeg2FzUeitciKiphUUoKu319JIvkVN28a5wiPRFRPBchKyrhUI8ze9EMEst9hbtYJn/qyMX7SJJc7u3KSaXuOf6rGu+pRsMSXpt0MEibewjSXK4r+bd2T1Uzl+oxvr7g5Fxp27f5R/uJsXK4mTfJCqOp3mq7l1TTV4X+5v8AcQJqm7O/Na9/5F/uHaGUm6ZUjKqpnc6yS3l2f+y17/yEnuMSNvLlz8Wryn9wl+6TYuJUa5M7FXKEaGG7O62C6t9dBL90mQ0lyVU/6ouKeujl+6NiJdO3OC4pI9yupqSuyirba1O/elkT7C2poqpq72+q9sLkGr9WEDemU7jMqqYY/KcfzKoT1sMvZ1Tk/mkqetEIjG93tIdRI97m08KK6R68rUTxLBKKqcm8Dm/R7zPb7WlLKtTK7mkVMJ/VTvLBJoKOOgpm00W6N6uXq5e9VJQBoAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAOMIcgAAABxhDkAcYQcqf8qcgDjCHIAAAAcYQ5AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAP//Z"

class="d-block w-100" alt="임의의 사진"><br>민감해진 두피를 진정시키는 헤어 마스크<br>

모링가 추출물과 비타민 E 성분이 두피에 풍부한 수분과 영양을 공급해 진정 효과를 발휘한다<br>

KÉRASTASE by CHICOR<br>

수딩 젤 마스크 • 200ml, 6만9천원<br>

</a>

<br><br>

{% elif m1p == 0 and m2p != 0 and m3p == 0 and m4p == 0 and m5p == 0 and m6p != 0 %}

<br><br>

탈모<br>

<br>

모근과 모발에 에너지 공급.<br>

탈모의 원인은 다양하지만 머리카락이 자라는 모근에 충분한 영양소를 공급해주는 것이 중요하다. 모근에 영양을 공급해줄 집중 앰풀 및 세럼은 현재의 모량을 유지시켜 탈모 예방에 도움을 준다.<br>

<br>

-탈모 두피 케어 제품 추천-<br>

<a

href="https://www.1300k.com/product/103905037?channelType=GOSHAD&gclid=CjwKCAjwmJeYBhAwEiwAXlg0AYLSWpY73hBivOFOplSoGEUItCDO6MIhV2gv1vGdbRjIoJFIE2vsIhoCCcsQAvD_BwE"><img

src="data:image/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wBDAAMCAgICAgMCAgIDAwMDBAYEBAQEBAgGBgUGCQgKCgkICQkKDA8MCgsOCwkJDRENDg8QEBEQCgwSExIQEw8QEBD/2wBDAQMDAwQDBAgEBAgQCwkLEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBAQEBD/wAARCAFRAVEDASIAAhEBAxEB/8QAHQABAAICAwEBAAAAAAAAAAAAAAMEBQYBAgcICf/EAEoQAAICAQIEAwUEBgQJDQAAAAABAgMEBREGITFBElFhBxNxgZEiMqGxFEJScsHRM2Ki8AgVFiMkNIKTskNERVNUZHN0kqOzwvH/xAAXAQEBAQEAAAAAAAAAAAAAAAAAAQID/8QAGxEBAQEAAwEBAAAAAAAAAAAAABEBAiExElH/2gAMAwEAAhEDEQA/AP1TAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAABHO2qv79sI/GSR0ebiL/AJeD+D3AnBW/T8T/AK9fRnKzsR/84gvi9gLAI4XVWf0dsJfuyTJAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAEd99WPW7bpqEV3YEhXyMyjFW91mz7Jc2/kYzI1W/IbhjR93W+Xif3n/IrwrSfie7b57vm2Bet1W2xbY1XhT7z6/QgnkZNq2svm0+qXL8joovoSRrb57ARKqO26ivodlX/fYnVe3U7qHkBXVaZw6nts0WvB6nDimTsUpY8H96J3jfl0/0WRNJdFLn+ZYlFEcoFHerWLK9o5VPi/rQ/kzIY+Xj5UVKmxS813XxRhpR7EFlW0lKLcZLmnF7NDcGzgweLrdtLVecvFDp7xLmvijMV2V3QVtU1OMuaae6AkAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAxuqapHCXual4r5reK25R9WBNnahTgw+19qyX3YLq/5IwdluRm2e9yJv0gukfgRRjZbbK61uU5vdt9y1XBJAcwgkttiWEG+x2hBdyaMEuwHEK+W7JFDdfE7RguuxJ4fUJ6jVfLuc+HbkSJJBpMqumyOrW3REjXY6voQ8RtcyOS7EknuyKcuyGiGaIpeiJJyWxDJ7iUQXrffkRY+fkabd7yredT38dTfJ+vo/UktaZUue6A27DzaM6mN1E00+q35xfkyyaJhajfpWT7+ptwk172H7S/mbriZVObjwyseW9di3TAmAAAAAAAAAAAAAAAAAAAAAAAAAAAAAACjqWo16fR43zsluq4ftP8AkBHquqLArUKvDK+f3YvsvNmBqrlKTssk5Tk922+bOqVt1ksi5uVk3vJlmuIEtcF1ZPCPoRwWxPDoN6EsI7EsUnzZ0XM7ppdQO8ep2Oifc7eJAcg48XmcOQHMn2I5y5bCU0yKU0gE3siCcnvuxZZ5ley1RXUDmc0u5XssOllu76kE7NmWrjvOXLfcrWzXc4st35Irzt9SGul8lJNlrhvWVpucsO+x/o2TJLn+pN8k/RPo/kY+2xPkihkyUk9wj1wGA4Q1mer6b4ciSeRjNV2eq2+zL5r8UzPgAAAAAAAAAAAAAAAAAAAAAAAAAABFffVjVTuukowgt22ajflW6hkSybm1FvauHaMSzxHqUsjJ/wAXUzXu6tnbt3l1S+X5/Ao1PlyAs17FiBWra5E8GvMLqzHk0TRZXjJEimNxFmLWx2T8mQKe3c7xs7CwTKXbc58S9CJS3Dmkx0JXM6ylv1I3Yl0IZW+oEs7duW6K9lqS5sisvS+JUtvfmBNZft0Ktl6ff8SG2/fuVp3c+YE87vUgnd6kFly8yvZkbAWJ3ciCVvqV5X9iGV3qSrmJ7LV0KV9my5HE7irdcn3FXMZXhbWFpWv0TnZ4aMh+5t3lstn0b+D2/E9aPAcixPdeZ7TwxqD1PQMHNm25zpSm33lH7Mn9UynLGVAAZAAAAAAAAAAAAAAAAAAAAAAx+talHSsGzKezm/sVx85vp/f0MgaPxhqTydTjp8Gvd4qTlt3m1v8AgtvqwMfTNtOUnvJvdt92W67FySMfTPZ7dCzCYF6Mt+5PCxdGyhGwljakkBkIz7Ikjb5lBXbc0zurm+jCxfVi35cjurNumxj1d57ndX7C1Ive9f8AdnDt82ilK/1I5ZHfcdC7O+KW+5Wtyd+jKlmTtyK9mR6/iBYsyVtyZVsv37kNly26ledz58wJp3eqK87tiCy/fuV7L9u+4XE9l/kyrZf23/Er25C6Nla2/wBTOri1K/ZdSKWRyKcr+5DO/ltv+JKsW55HqV5379CpblRgnOc1GK3bbe2xBDLrvg51zUkpOO6e/f8Aj1+DRN1YntuT33Z7J7O3vwfp736+9/8AlkeEajnww8eV0nz6RXmz3L2ZKX+QukOT3cqpTfznJ/xNcf1OXjagAaYAAAAAAAAAAAAAAAAAAAAAEWRdDHosyLHtCqLnL4Jbnlc8q3NyLMy5r3l83OW3bd9De+M86OFoN68W0slqiK8/F1/sqR59TyitwLsH0RNGzbqVYy+R3U9uuwFtW9jsrimprkc+8Xn0CxeV3kzvG/1KEbEukkd427BGQVz8zl3MoK5Luc+/WwF2V2/cind6lSWQl3IJ5K8wLdl23cgneipPIXmiCzIXVMLFqy/1ILMhbPcq2ZS8yrZkrbZMlWLNuSvMqW5LfLcq2ZHMrWZA3VWrcj1K1mRv3Ktl75kEru5jdWLU8h+ZTz9Qjg4luZZGUoUxc5qPXwrq/kt2dHbv3OvvH3f4kVj8Wq3Vv9J1OraEZTdD3Sc4SUWny5pdVs+e22/NF+duNp+Mucaqao7Jfw+JT1HWcbBW05eK3bdVx6v4+S/vzNV1DUsvUbE75bRj92Efur+fx/8Awsp4t5uoz1TLU9nGuPKEe6R9TezuCr4H0WP/AHOD+q3PlDBrlK2MYxbbaSS7s+veF8CzS+HNM0+9bW4+JVXYvKSit19ToxyZYABkAAAAAAAAAAAAAAAAAAAAAaL7Sclu7T8JdPt2y+PJL+JqtVmySZs/tKo8F2n5m3KSnS3235NL/i+hqULAuLyn6nKn5lVW9kzn3r8w0tqwePkVVY0d/eLyDCb3hz75oqTt2exHK7buFi88hrv+J0eT/WMfPIXZkMslrcLGSnlbP725BPKa7mPllN8myGeSCMhPJX92V7MrbfdlCeTv3IZ5L89zNVdnk7d/xK88nl1Kc8heZBLI5Mm6sWrL0yvZdt3K8rd3vuVsjNox0pZGRXUn+3JLczVW5WtkUrF3Zg8zijFqfu8WuV723cvuRXpu1v8ARNGGyNb1LJf2sj3cW/u1rw7L49d/nsXM3RtGXq2Fh7q7IXjj+pH7UvounxfIwmbxFlXr3dEfcRfdPeX17fL5Mwy5bRW23N8vN9QaziJHJzbk3u29233fmcwTb6FPO1bTNMVS1DNrpldLw01t72XS/ZrgvtTl/Vimzpp9Gr67NXW1W6dgfbUa3JwybtnsnNpf5qLW78EX7x7x8Tq2cXpI9F9jstKzOOcD9Lq9/TCyUa5xknBX7S8O/Z7Sj5/eaXPaSX1Ouh81ey7SYPiTScWitQrpuhKEYrZRjWt0kl0SUUtlySWx9KroGeTkABkAAAAAAAAAAAAAAAAAAAAAYziHSYa3o2XpzUfHbW/dSl+pYlvCXylszwXR+J678i7StTg8PUcW2ePkVWbLa2D2a69e/qnut0fRp4X7fPZVmZ7s9ovB1ahrNNarz6Wt68qiK5SlFbPxR2X2lzUfNJplz8SqxL0OVa91ueJaH7UMrBvo0vNlPCy7X4YYmbzjOaXNV2LlLvtFNS84o3bB9oGPv4dRw7KmuXirfiT+KfT8Q1G8q3lsmHcujNaq4z4ftXLPUJeUq5L+BM+KNCa56rjr96fh/MUjNTv7pkE7/NsxMuJdC6/43xP96v5la3ibQ1/0pjv92fi/IlWMvLIaRBPJ3MHdxboVcW56jH0+xP8AkY+7jjQ4v7Fttv7sNvz2JSNknkc+pFPI9dzTMvj+lf6ngSmvO2fh/BJ/mY6/jjUrHtTVRH1cG2vx/gXsjf5ZDIrcqFcXOyyMYrq5PZHmuVxHrOXynnWRj5VvwfjHZlJ222tSsm5Nd31+vckV6DlcS6XjSUP0pWyfapeJfVcl82YzK4xrSaxcWUpbNJ2NJb/Bb7/U1CTUIu2c1GCW7lJ7JfFswlntA4JqueNHibByLl1qxLP0ia+Ma/E/wHzhW63cR6rkLZ5Lgn2hFRXy7r6lJzsslK2c3KcuUpt7yfxfVmsPjjCnzwdD1rMi+ko4qoi38ciVZXp1X2j6pfvhcM6ZpeI39i7Nyp5Fkl/4dajBfK1l6w7bium2yOuTk42HRLKzMmrHphznbbNQhFerfJGvT4b4r1KEq9T4zyseE+U46bRVipL0lJW2J/CcSxpPs64U0qxZENMjmZakpfpmfKWXkeLz95c5yj8ItIpENfHmk52b+gcOYmdr9sVvKzTqk8WC9cqyUKN/RWOXoTrE411jLrdubh6HgRW9kMTbJy7ZPolbbBVVpd9q7f3l1NmrpUdmo7tfrNty+r5/LoWa6k3yB1jFaNwzpulZN2fRXZZm5NapuyrrZ23Tr338DnNtqO/PwR8MP6qNjxaV9mKikkkkkui8jpTUvIymDjynNJJvfpt8Qza9K9jWlOzW557h9nFpk/F5SlyX4eI9ofQ1P2c8OZHD+iyWbX7vJypqc4d4xS2in69X8zbH0DOuQAEAAAAAAAAAAAAAAAAAAAAAA6yipJxkt0+qOwA+cPbR7HcDElbquHpVeRo+Q976nWpLGnvy5P8AUb6dk+XkeKXcFaphzhPhriXLwfd8vcWS99Q12ThPxKK9IeA+88jHoyqZ4+TVC2q2LhOE4pxlF9U0+qPIOMfYZ4756hwbbCEZLeWFfPaKfX/Ny25deknt6hrOT5rlk8bYspQy9G07KUeSsrdlCl6pJXP67FO7ivV8WxxzOBdT2W6cse+lr/3JVv8AA9O1HRdT0fIlhavp92LdF7ONsNt/g+jXqilPGrf6q+garzyHGuBL/WNJ1fGfdTqrlt/6JyOJcZaE3/R6g3/5WRv86F25fMj9w10b+oW486u4xw1/QaJq13rGuqK/tWIh/wAqtRte2PwhqT/ftoX/AAzkz0l43p9Tq8WD/VQK89hqvEt0fEuF41J9HbkWflGl/mcK7jSVm60rFcX+ym0vTeU4b/Q9A/RY9NiKWMunhROxo9ui8ZZnOet4+JF/q49KTXzmp/mdKeB86zaWbxLqlnmllWRT+KhKK/A3l4+3Ye52EGlx9mvDtkve5mFVkz33crK42N/Ofif4maxOHdJwoqGLp9VUUvuxWy+nQzXuX5nKp27FiXFOnEppf+aohD92KX5FhR77Eyp59CSFe3YKgjB7E1dfoWsTAysyz3WHi3Xz/Zqrc39Ebho3si451euNsdJ/RK5bbSy5qv8As85fgE8aXXW2Wa633/I9g0P/AAfpRnGziDW4+Db7VWHB7v8A25r/AOpv2h+zfg7QJQuw9GrnfDnG69u2SfmvFyT+CQTeWPD+GvZ5xPxDZCWNpltONPZvJvi4Vpea35y/2Uz2Xg/2Z6Pwt4crIazs5JbWzglGv9yPPZ+vX4G5pJHIZ3QABAAAAAAAAAAAAAAAAAAAAAAAAAAAAABBlYmLm1OjMxqr6n1hbBSi/kzTNV9j3BepzlbVh34M5f8AZrdop/uyTS+SN6AHj2o+wJOLlpHET3/Vhk08vnKL/ga3l+xHjehv3NWBkLs6sjb/AIkj6E3Q3QWvmm/2T+0Crrw7OSX7GRVL8pFafs046h14ZzPkov8AJn0/shsgv0+XH7OuN+n+TGf/ALs4fs144m9lwvm/OG38T6k2Q2QPp8vQ9lHH9j2jwzkL96yuP5yLNXsW9oNvKWiwrX9fKq/hJn0yAlfOuN7BONrmvf3aXjx7+O+UmvlGL/M2DB/wdV4U9R4n+13jRjcvq5fwPagC68xw/YDwdTtLKzNTyn3UrYRj/Zjv+Jsmk+zLgfRl/onDmLOX7eQndL6z32+RtO6G6BdR0UUY1aqx6YVQXSMIqKXyRKAEAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAAH//2Q=="

class="d-block w-100" alt="임의의 사진"><br>탈모 개선 효과가 있는 LED 치료기<br>

2백50개의 레이저와 LED 광원이 모낭 세포의 대사를 활성화시켜 영양 공급을 원활하게 하고<br>

모발 굵기 강화 및 모발 수 증가에 도움을 준다<br>

LG<br>

프라엘 메디헤어 • 150만원<br></a>

<br><br>

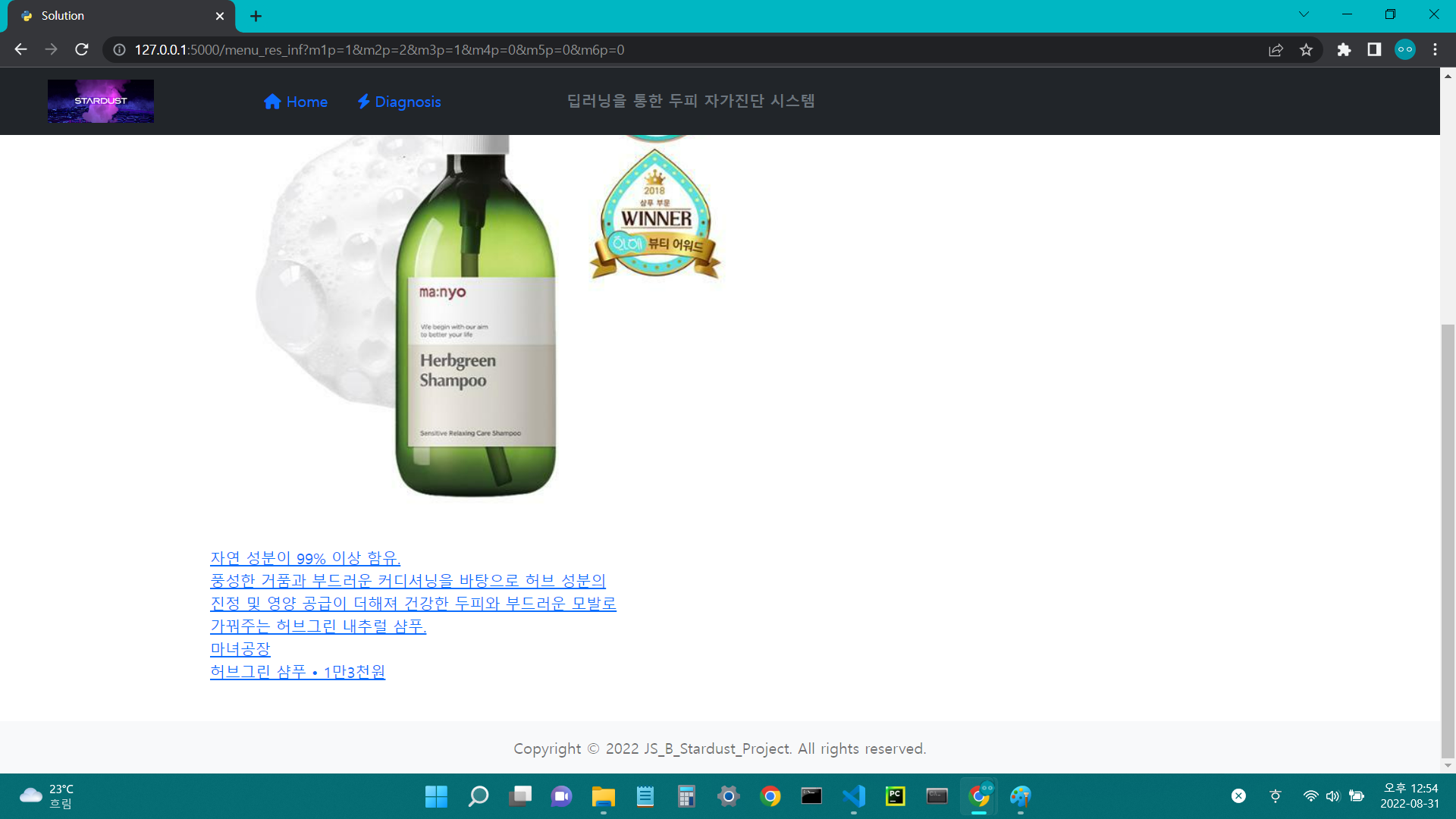

{% elif m2p != 0 and m3p != 0 and m4p == 0 and m6p == 0 %}

지루성<br>

<br>

스트레스와 면역력 관리, 생활습관 개선.<br>

약용 샴푸를 꾸준히 사용하여 말라세지아 진균의 억제를 목표로 하거나, 피부의 각질형성을 정상화하고 피지분비를 억제하는 식으로 지루성 피부염을 치료한다.<br>

<br>

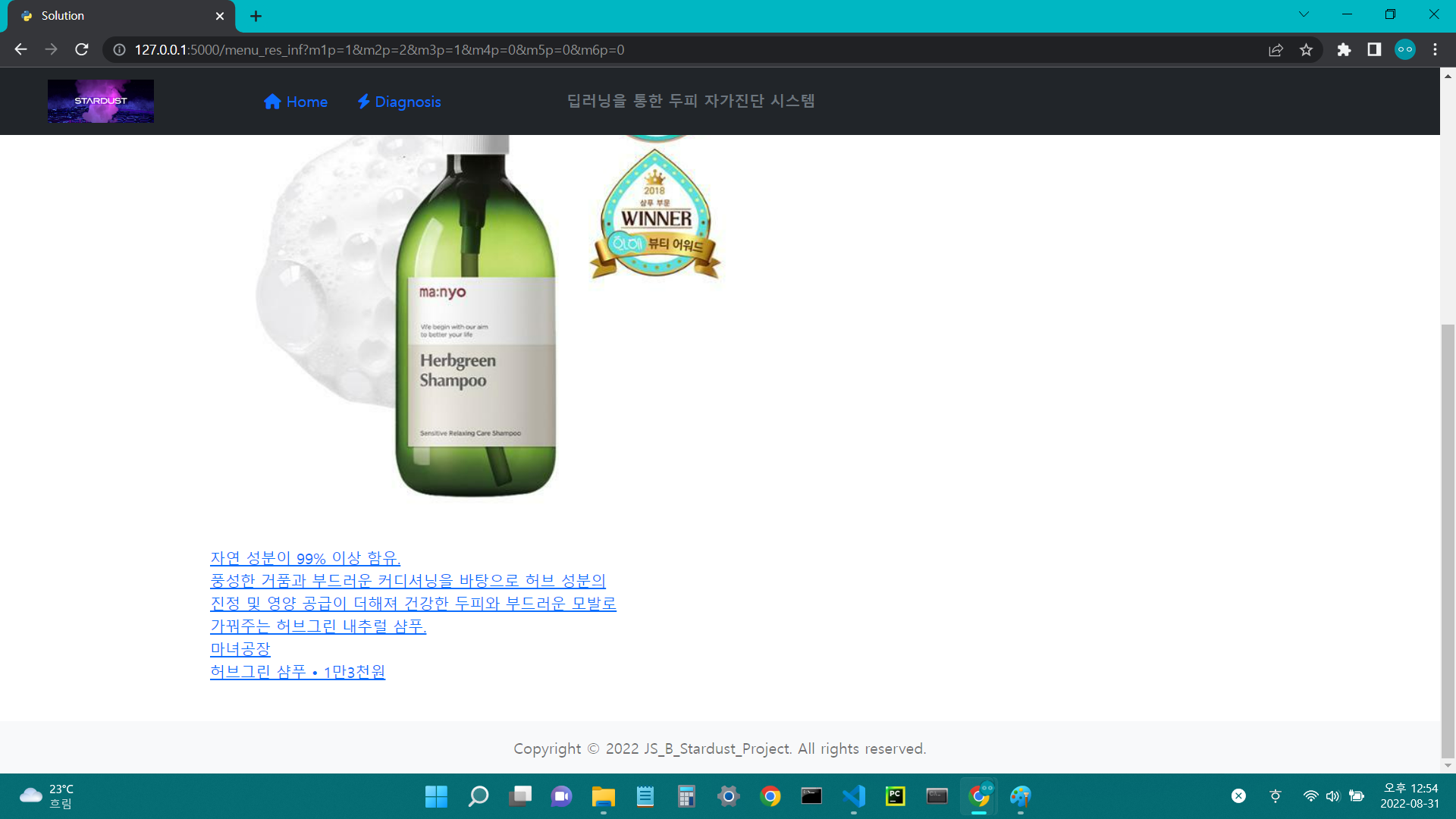

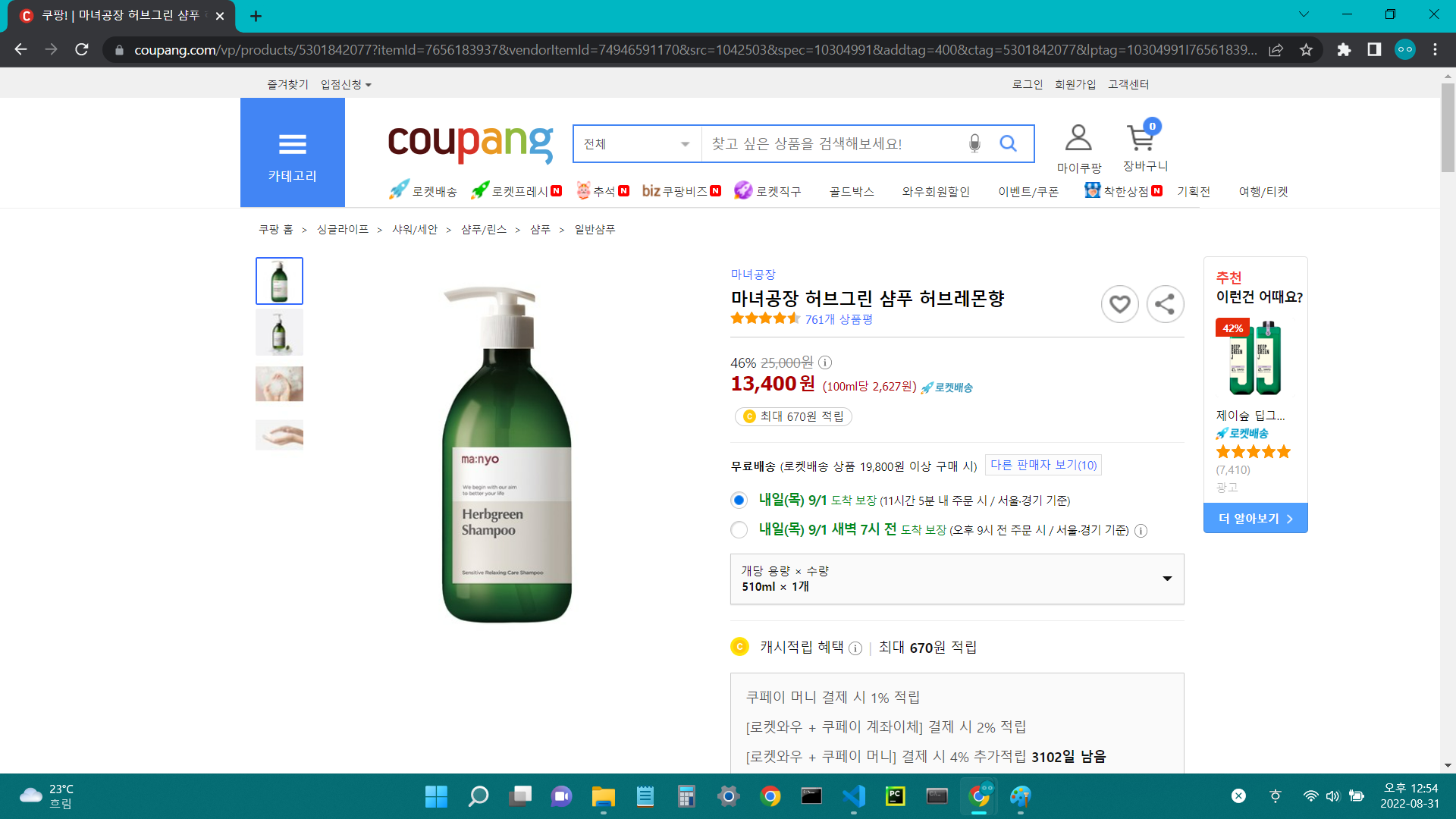

-지루성 두피 케어 제품 추천-<br>

<br>

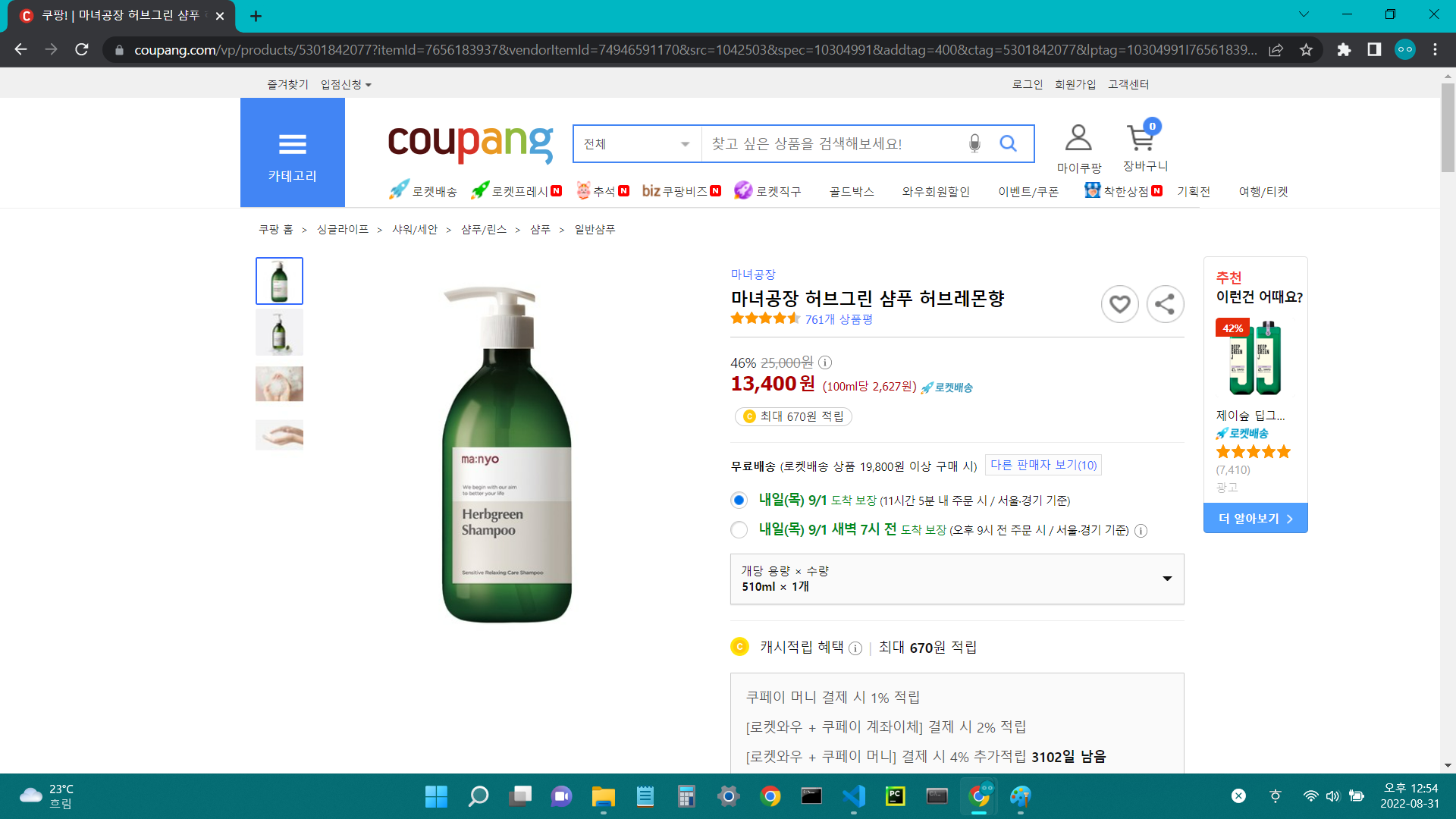

<a

href="https://www.coupang.com/vp/products/5301842077?itemId=7656183937&vendorItemId=74946591170&src=1042503&spec=10304991&addtag=400&ctag=5301842077&lptag=10304991I7656183937&itime=20220825084720&pageType=PRODUCT&pageValue=5301842077&wPcid=16596243712310861427697&wRef=&wTime=20220825084720&redirect=landing&gclid=CjwKCAjwmJeYBhAwEiwAXlg0AeyBAbpi1m2zdNmkiD4AsJ4pqhMGPUFka9ExPZz79SRjjFxEXMLM5BoCvdEQAvD_BwE&campaignid=15022502786&adgroupid=&isAddedCart="><img

src="https://image.oliveyoung.co.kr/uploads/images/goods/550/10/0000/0012/A00000012233908ko.jpg?l=ko"

class="d-block w-20" alt="임의의 사진"><br> 자연 성분이 99% 이상 함유.<br>

풍성한 거품과 부드러운 커디셔닝을 바탕으로 허브 성분의<br>

진정 및 영양 공급이 더해져 건강한 두피와 부드러운 모발로 <br>

가꿔주는 허브그린 내추럴 샴푸.<br>

마녀공장<br>

허브그린 샴푸 • 1만3천원<br></a>

<br><br>

{% elif m3p == 0 and m4p != 0 and m6p == 0 %}

염증성<br>

<br>

두피가 붉은 색을 띠며 표면에 홍반이 확인되는 경우나 세균 감염으로 인한 염증.<br>

<br>

-염증성 두피 케어 제품 추천-<br>

<br>

<a

href="https://www.coupang.com/vp/products/1321815613?itemId=2314868722&vendorItemId=70311610532&src=1139000&spec=10799999&addtag=400&ctag=1321815613&lptag=AF4891311&itime=20220825085628&pageType=PRODUCT&pageValue=1321815613&wPcid=16596243712310861427697&wRef=www.lowchart.com&wTime=20220825085628&redirect=landing&traceid=V0-101-a96024a09f36a0f6&placementid=&clickBeacon=&campaignid=&contentcategory=&imgsize=&pageid=&deviceid=&token=&contenttype=&subid=&impressionid=&campaigntype=&requestid=&contentkeyword=&subparam=&isAddedCart=">

<img src="https://thumbnail6.coupangcdn.com/thumbnails/remote/490x490ex/image/retail/images/2020/02/28/11/3/73c69caa-9de3-4835-b13c-56712717c33e.jpg"

class="d-block w-20" alt="임의의 사진">

<br> 두피 및 모낭을 보호.<br>

티트리, 쑥잎, 홍삼, 판테놀 등 21가지 성분 함유.<br>

제노트리<br>

두피 에센스 • 1만4천원<br> </a>

<br><br>

{% elif m3p == 0 and m4p == 0 and m5p != 0 and m6p == 0 %}

비듬성<br>

<br>

호르몬, 영양상태, 샴푸 습관, 스트레스, 다이어트 등이 비듬 두피에 영향을 미친다.<br>

각화주기 이상으로 인해 정상두피에 비해 각질이 생성되고 탈락된다.<br>

<br>

-비듬성 두피 케어 제품 추천-<br>

<br>

<a

href="https://epunol.co.kr/product/detail.html?product_no=41&gclid=CjwKCAjwmJeYBhAwEiwAXlg0AeAokMdca6Q1nvhvaL6c2UXzxUxYmz9n57_Kek7sVLopzZsxAwpqgRoCHJIQAvD_BwE"><img

src="https://img.29cm.co.kr/next-product/2021/09/23/9e88a0d1dabd4b03a3bfca0363c69db3_20210923173432.jpg?width=700"

class="d-block w-20" alt="임의의 사진">

<br>두피 속 피지 분비물과 잔여 노폐물을 씻어내는데 도움.<br>

에퓨놀

약산성 샴푸 • 2만6천원<br>

</a>

<br><br>

{% else %}

복합성<br>

<br>

두피를 중점적으로 샴푸 후 모발 끝 부분에 트리트먼트와 에센스를 발라 주면 효과적.<br>

<br>

-복합성 두피 케어 제품 추천-<br>

<a

href="https://ch6mall.com/product/detail.html?product_no=314&srvc_anl=gcs_ssc_c000000827&gclid=CjwKCAjwmJeYBhAwEiwAXlg0AXaJD_vL_cfNog1tIKD4wXMChThuWFUekNyp2V3yIMWW_GY32IwlTRoC2NkQAvD_BwE">

<img src="http://cdn.hantoday.net/news/photo/202111/31856_32006_2123.png" class="d-block w-20" alt="헤어세럼"><br>풍부한

영양을 공급하는 헤어 세럼<br>

자연 유래 성분이 두피에 영양을 공급해 유수분 밸런스를 유지해준다<br>

푸석해진 모발에 윤기를 부여하는 헤어 세럼<br>

CH6<br>

스칼프 싹 세럼 두피 에센스 • 140ml, 1만7천원<br>

</a>

<br><br>

{% endif %}

</tr>

{% endblock %}

[operation]

[colab] train code add graph

from google.colab import drive

drive.mount('/content/drive')

!pip install efficientnet_pytorch

import time

import datetime

import os

import copy

import cv2

import random

import numpy as np

import json

import torch

import torch.nn as nn

import torch.optim as optim

import torchvision

from torch.optim import lr_scheduler

from torchvision import transforms, datasets

from torch.utils.data import Dataset, DataLoader

from torch.utils.tensorboard import SummaryWriter

import matplotlib.pyplot as plt

from PIL import Image

from efficientnet_pytorch import EfficientNet

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

hyper_param_batch = 1

random_seed = 100

random.seed(random_seed)

torch.manual_seed(random_seed)

num_classes = 4

model_name = 'efficientnet-b4'

train_name = 'model1'

PATH = '/content/drive/MyDrive/project/scalp_weights/'

data_train_path ='/content/drive/MyDrive/project/train_data/'+train_name+'/train'

data_validation_path = '/content/drive/MyDrive/project/train_data/'+train_name+'/validation'

data_test_path ='/content/drive/MyDrive/project/train_data/'+train_name+'/test'

image_size = EfficientNet.get_image_size(model_name)

print(image_size)

model = EfficientNet.from_pretrained(model_name, num_classes=num_classes)

num_classes = 4

model = model.to(device)

def func(x):

return x.rotate(90)

transforms_train = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.RandomHorizontalFlip(p=0.5),

transforms.RandomVerticalFlip(p=0.5),

transforms.Lambda(func),

transforms.RandomRotation(10),

transforms.RandomAffine(0, shear=10, scale=(0.8, 1.2)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

transforms_val = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

train_data_set = datasets.ImageFolder(data_train_path, transform=transforms_train)

val_data_set = datasets.ImageFolder(data_validation_path, transform=transforms_val)

dataloaders, batch_num = {}, {}

dataloaders['train'] = DataLoader(train_data_set,

batch_size=hyper_param_batch,

shuffle=True,

num_workers=2)

dataloaders['val'] = DataLoader(val_data_set,

batch_size=hyper_param_batch,

shuffle=False,

num_workers=2)

batch_num['train'], batch_num['val'] = len(train_data_set), len(val_data_set)

print('batch_size : %d, train/val : %d / %d' % (hyper_param_batch, batch_num['train'], batch_num['val']))

class_names = train_data_set.classes

print(class_names)

from tqdm import tqdm

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):

if __name__ == '__main__':

start_time = time.time()

since = time.time()

best_acc = 0.0

best_model_wts = copy.deepcopy(model.state_dict())

train_loss, train_acc, val_loss, val_acc = [], [], [], []

for epoch in tqdm(range(num_epochs)):

print('Epoch /{{}}'.format(epoch, num_epochs - 1))

print('-' * 10)

epoch_start = time.time()

for phase in ['train', 'val']:

if phase == 'train':

model.train()

else:

model.eval()

running_loss = 0.0

running_corrects = 0

num_cnt = 0

for inputs, labels in dataloaders[phase]:

inputs = inputs.to(device)

labels = labels.to(device)

optimizer.zero_grad()

with torch.set_grad_enabled(phase == 'train'):

outputs = model(inputs)

_, preds = torch.max(outputs, 1)

loss = criterion(outputs, labels)

if phase == 'train':

loss.backward()

optimizer.step()

running_loss += loss.item() * inputs.size(0)

running_corrects += torch.sum(preds == labels.data)

num_cnt += len(labels)

if phase == 'train':

scheduler.step()

epoch_loss = float(running_loss / num_cnt)

epoch_acc = float((running_corrects.double() / num_cnt).cpu() * 100)

if phase == 'train':

train_loss.append(epoch_loss)

train_acc.append(epoch_acc)

else:

val_loss.append(epoch_loss)

val_acc.append(epoch_acc)

print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

if phase == 'val' and epoch_acc > best_acc:

best_idx = epoch

best_acc = epoch_acc

best_model_wts = copy.deepcopy(model.state_dict())

print('==> best model saved - %d / %.1f' % (best_idx, best_acc))

epoch_end = time.time() - epoch_start

print('Training epochs {} in {:.0f}m {:.0f}s'.format(epoch, epoch_end // 60,

epoch_end % 60))

print()

time_elapsed = time.time() - since

print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))

print('Best valid Acc: %d - %.1f' % (best_idx, best_acc))

model.load_state_dict(best_model_wts)

torch.save(model, PATH + 'aram_' + train_name + '.pt')

torch.save(model.state_dict(), PATH + 'president_aram_' + train_name + '.pt')

print('model saved')

end_sec = time.time() - start_time

end_times = str(datetime.timedelta(seconds=end_sec)).split('.')

end_time = end_times[0]

print("end time :", end_time)

print('best model : %d - %1.f / %.1f'%(best_idx, val_acc[best_idx], val_loss[best_idx]))

fig, ax1 = plt.subplots()

ax1.plot(train_acc, 'b-')

ax1.plot(val_acc, 'r-')

plt.plot(best_idx, val_acc[best_idx], 'ro')

ax1.set_xlabel('epoch')

ax1.set_ylabel('acc', color='k')

ax1.tick_params('y', colors='k')

fig.tight_layout()

plt.show()

return model, best_idx, best_acc, train_loss, train_acc, val_loss, val_acc

criterion = nn.CrossEntropyLoss()

optimizer_ft = optim.Adam(model.parameters(), lr=1e-4)

exp_lr_scheduler = optim.lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

num_epochs = 10

train_model(model, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=num_epochs)

[colab] test code add graph

from google.colab import drive

drive.mount('/content/drive')

!pip install efficientnet_pytorch

import torchvision

from torchvision import transforms

import os

from torch.utils.data import Dataset,DataLoader

import torch

PATH = '/content/drive/MyDrive/project/scalp_weights/'+'aram_model1.pt'

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

model = torch.load(PATH, map_location=device)

transforms_test = transforms.Compose([

transforms.Resize([int(600), int(600)], interpolation=transforms.InterpolationMode.BOX),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

])

testset = torchvision.datasets.ImageFolder(root = '/content/drive/MyDrive/project/sample/model1sample' ,

transform = transforms_test)

from torch.utils.data import Dataset,DataLoader

testloader = DataLoader(testset, batch_size=1, shuffle=False, num_workers=0)

model.eval()

import torch.nn.functional as F

from tqdm import tqdm

def aaa() :

with torch.no_grad():

test_acc_0 = []

test_acc_1 = []

test_acc_2 = []

test_acc_3 = []

count = 0

global correct

correct = 0

for data, target in tqdm(testloader):

data, target = data.to(device), target.to(device)

output = model(data)

pred = output.argmax(dim=1, keepdim=True)

correct += pred.eq(target.view_as(pred)).sum().item()

count += 1

if count <= 78 :

test_acc_0.append( int(( correct / count ) * 100 ) )

elif count <= 211:

test_acc_1.append( int(( correct / count ) * 100 ) )

elif count <= 334:

test_acc_2.append( int(( correct / count ) * 100 ) )

else :

test_acc_3.append( int(( correct / count ) * 100 ) )