CPU vs GPU

Throughput: number of computing tasks completed per unit of time.

Latency: delay between invoking an operation and receiving its result.
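A minimal sketch that computes both metrics from a list of task timestamps. The `(start, end)` values are hypothetical numbers chosen for illustration, not measurements, and the function names are made up for this example. The point it shows is that the two metrics are distinct: each task here has a latency of 4 ms, yet because tasks overlap, throughput is higher than 1/4 task per ms.

```python
# Hypothetical (start, end) times in ms for five tasks; illustrative only.
tasks = [(0, 4), (1, 5), (2, 6), (3, 7), (4, 8)]


def latencies(tasks):
    # Latency: delay between invoking a task and receiving its result.
    return [end - start for start, end in tasks]


def throughput(tasks):
    # Throughput: tasks completed per unit of time over the observed window.
    window = max(end for _, end in tasks) - min(start for start, _ in tasks)
    return len(tasks) / window


print(latencies(tasks))   # every task takes 4 ms
print(throughput(tasks))  # 5 tasks in an 8 ms window
```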

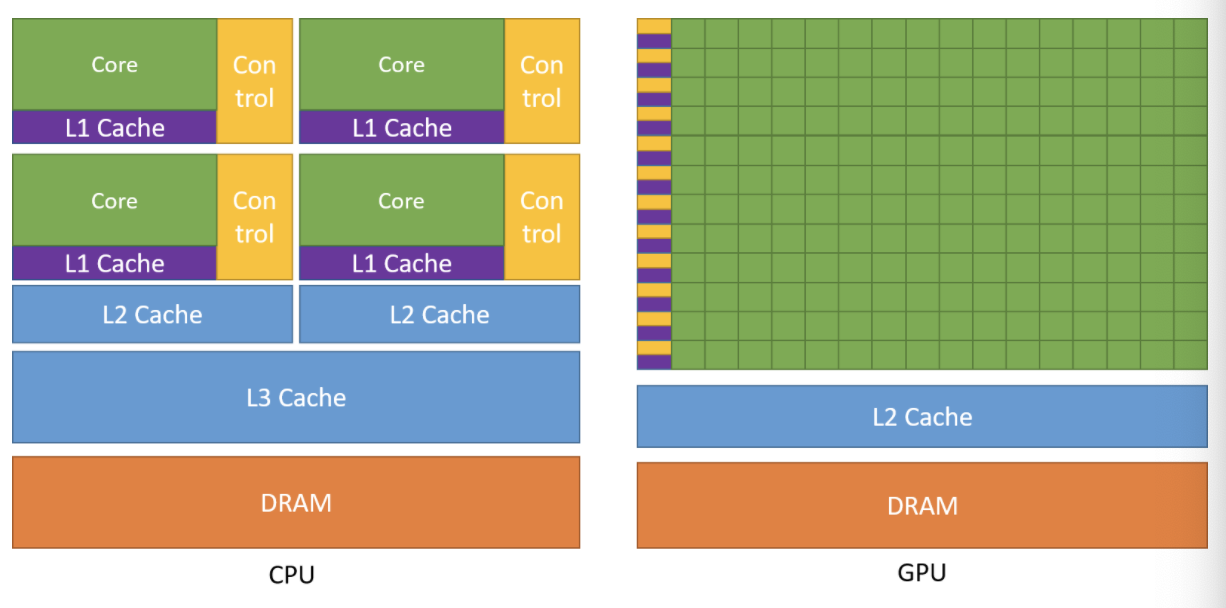

The two processors follow fundamentally different design philosophies.

CPU

- excel at executing a sequence of operations (a thread) as quickly as possible

- can execute tens of these threads in parallel

- use data caching and control logic to reduce instruction and data access latencies

- designed to minimize latency at the cost of increased chip area and power consumption (latency-oriented design)

GPU

- excel at executing thousands of threads in parallel

- devote most of the chip to data processing rather than to caching and control

- memory bandwidth roughly 10× that of a CPU, so data can be transferred from the memory system to the processors more quickly

- reducing latency is more expensive than increasing throughput, so the hardware units are kept small and simple to fit more ALUs on the chip (throughput-oriented design)
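A toy model of the two design styles, assuming hypothetical unit counts and per-task latencies (the `CPU` and `GPU` numbers below are illustrative, not real hardware specs). Each unit handles one task at a time and all units run in parallel, so work proceeds in "waves". The latency-oriented design wins on a single task, while the throughput-oriented design wins on a large batch.

```python
import math

# Hypothetical parameters, illustrative only (not real hardware specs).
CPU = {"units": 16, "latency_per_task": 1}     # few fast, latency-oriented cores
GPU = {"units": 2048, "latency_per_task": 20}  # many slow, throughput-oriented lanes


def batch_time(num_tasks, units, latency_per_task):
    # Tasks run in waves of `units` at a time; each wave costs one task latency.
    waves = math.ceil(num_tasks / units)
    return waves * latency_per_task


def peak_throughput(units, latency_per_task):
    # Tasks completed per time unit when every unit is busy.
    return units / latency_per_task


for n in (1, 1_000_000):
    print(n, batch_time(n, **CPU), batch_time(n, **GPU))
```

With these made-up numbers, one task takes 1 time unit on the CPU model and 20 on the GPU model, but a million tasks take 62500 versus 9780.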

***

Challenges

- it can be difficult to design parallel algorithms that outperform their sequential counterparts.

- the execution speed of many applications is limited by memory access speed.

- the execution speed of parallel programs is often more sensitive to input data characteristics than that of their sequential counterparts.

- many real-world problems are most naturally described with mathematical recurrences. Parallelizing them often requires non-intuitive ways of thinking about the problem and may require redundant work during execution.
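A prefix sum is one such recurrence: `y[i] = y[i-1] + x[i]`. As a sketch, the code below contrasts the sequential recurrence with the Hillis-Steele scan, a well-known parallel scan (a technique not named in these notes, used here only as an example). The parallel version is simulated sequentially: each pass of the `while` loop stands for one parallel step in which every element updates at once from the previous step's array. It finishes in about log2(n) steps but performs more additions than the sequential loop, which is the redundant work mentioned above.

```python
def sequential_scan(xs):
    # Direct translation of the recurrence y[i] = y[i-1] + x[i]: n-1 additions.
    if not xs:
        return [], 0
    out = [xs[0]]
    for x in xs[1:]:
        out.append(out[-1] + x)
    return out, len(xs) - 1


def hillis_steele_scan(xs):
    # Inclusive scan in ceil(log2 n) steps; counts additions performed.
    cur = list(xs)
    adds = 0
    offset = 1
    while offset < len(cur):
        nxt = list(cur)  # double buffer: all "threads" read the previous step
        for i in range(offset, len(cur)):  # these updates are independent
            nxt[i] = cur[i] + cur[i - offset]
            adds += 1
        cur = nxt
        offset *= 2
    return cur, adds


data = [1, 2, 3, 4, 5, 6, 7, 8]
print(sequential_scan(data))     # 7 additions
print(hillis_steele_scan(data))  # 7 + 6 + 4 = 17 additions
```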

Amdahl's Law

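- The speedup achievable through parallel execution can be severely limited by the size of the parallelizable portion of the application: the sequential portion that remains bounds the overall speedup, no matter how fast the parallel portion becomes.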
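A small sketch of the standard statement of Amdahl's Law, where `p` is the fraction of the original execution time that can be parallelized and `s` is the speedup applied to that fraction (the function name is made up for this example). Even with `s = 100`, parallelizing 30% of the work gives only about a 1.42× overall speedup, and no value of `s` can push the result past `1 / (1 - p)`.

```python
def amdahl_speedup(p, s):
    # Overall speedup = 1 / ((1 - p) + p / s)
    # p: parallelizable fraction of execution time (0..1)
    # s: speedup of that fraction (s > 0)
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


print(amdahl_speedup(0.30, 100))  # about 1.42
print(amdahl_speedup(0.99, 100))  # about 50.3
print(1 / (1 - 0.99))             # upper bound for p = 0.99: about 100
```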