Paper Review

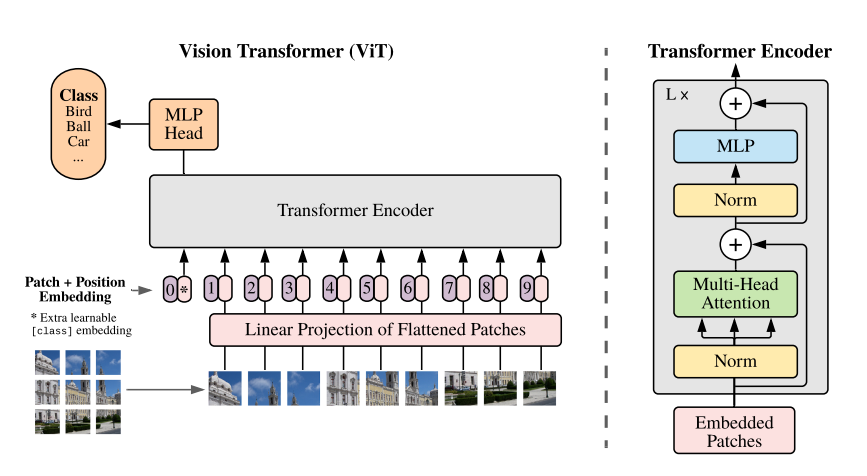

1. AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE : Review

In NLP, self-attention-based architectures have become the dominant model. The computational efficiency and scalability of Transformers make it possible to train models of unprecedented size.

June 30, 2024

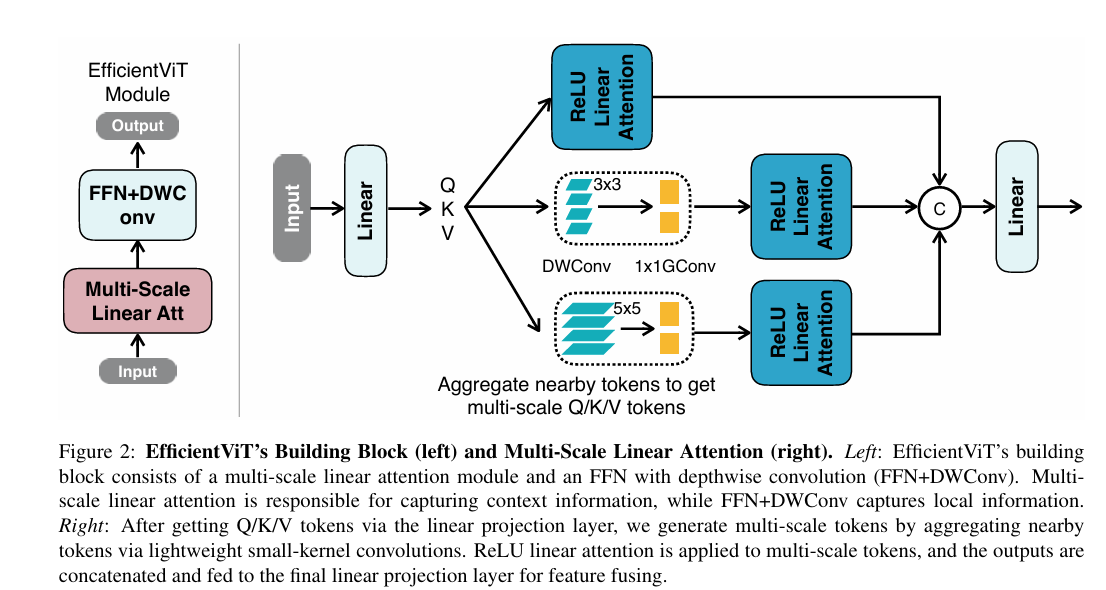

2. EfficientViT: Multi-Scale Linear Attention for High-Resolution Dense Prediction : Review

Introduction

June 30, 2024

3. VAE and VQ-VAE, Auto-Encoding Variational Bayes : Review and Short

Introduction

June 30, 2024

4. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks : Review

Introduction

July 3, 2024