S3 Use Cases

- backup and storage

- disaster recovery

- archive (cheap)

- hybrid cloud storage

- application hosting

- media hosting

- data lakes & big data analytics

- software delivery

- static website

Structure

Buckets

S3 allows people to store objects (files) in "buckets" (directories).

- buckets must have a globally unique name (across all regions and all accounts)

- buckets are defined at the region level

- S3 looks like a global service but buckets are created in a region

Objects

Keys

- objects (files) have a key

- key: FULL path of the object within the bucket

- ex. s3://my-bucket/file.txt (key: file.txt)

- key = prefix + object name

- ex. s3://my-bucket/folder1/folder2/file.txt (prefix: folder1/folder2/, object name: file.txt)

- there is no concept of 'directories' within buckets, just keys with very long names that contain slashes ("/")
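As a minimal sketch of the "key = prefix + object name" rule above, the hypothetical helper `split_key` (not part of any AWS SDK) splits a key at its last slash. It only does string handling and never contacts S3.

```python
def split_key(key: str) -> tuple[str, str]:
    """Split an S3 object key into (prefix, object name) at the last "/"."""
    prefix, slash, name = key.rpartition("/")
    # When the key has no slash, rpartition returns empty prefix and slash.
    return prefix + slash, name


print(split_key("folder1/folder2/file.txt"))  # ('folder1/folder2/', 'file.txt')
print(split_key("file.txt"))                  # ('', 'file.txt')
```

The "folders" shown in the S3 console are derived from prefixes like these; the bucket itself stays a flat set of keys.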

Values

- object values are the content of the body

- max object size is 5TB

- if uploading more than 5GB, must use "multi-part upload"

Metadata

- list of text key/value pairs

- system or user metadata

Tags

- Unicode key/value pairs

- up to 10 per object

- useful for security/lifecycle

Version ID

- if versioning is enabled

S3 - Static Website Hosting

S3 can host static websites and have them accessible on the Internet.

- The website URL depends on the AWS region where the bucket was created.

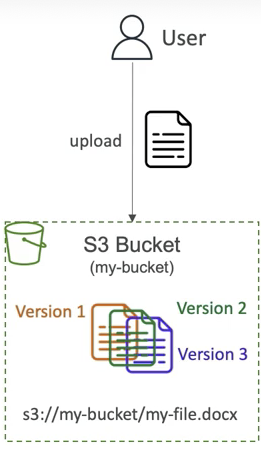

S3 Versioning

S3 versioning is a feature in Amazon S3 that allows you to keep multiple versions of an object in the same bucket. When versioning is enabled, every time you upload a new version of an object with the same key, S3 retains the previous versions instead of overwriting them.

- it is enabled at the bucket level

- overwriting the same key creates a new version: 1, 2, 3...

- any file that was not versioned before versioning was enabled has version "null"

advantages

- can protect against unintended deletes (ability to restore a version)

- easy roll back to previous version

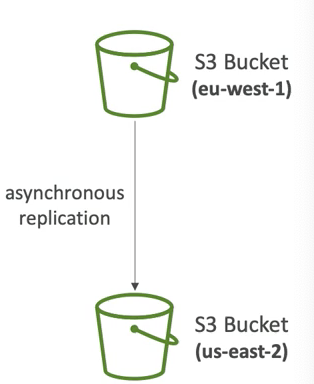
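The behaviour above can be illustrated with a toy in-memory model. This is only a sketch: `VersionedBucket` is a hypothetical class, not the S3 API, and it uses a counter for version IDs as in the notes, whereas real S3 version IDs are opaque strings.

```python
from itertools import count


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket (not the real S3 API)."""

    def __init__(self):
        # key -> list of (version_id, body); body None marks a delete marker
        self._versions = {}
        self._ids = count(1)

    def put(self, key, body):
        """Store a new version and return its version ID."""
        version_id = str(next(self._ids))
        self._versions.setdefault(key, []).append((version_id, body))
        return version_id

    def delete(self, key, version_id=None):
        if version_id is None:
            # Simple delete: add a delete marker, keep all earlier versions.
            return self.put(key, None)
        # Delete with a version ID: permanently remove that one version.
        kept = [v for v in self._versions.get(key, []) if v[0] != version_id]
        self._versions[key] = kept
        return version_id

    def get(self, key, version_id=None):
        versions = self._versions.get(key, [])
        if version_id is not None:
            return next((body for vid, body in versions if vid == version_id), None)
        # The latest version wins; a delete marker makes the object look gone.
        return versions[-1][1] if versions else None


bucket = VersionedBucket()
v1 = bucket.put("report.txt", "draft")
v2 = bucket.put("report.txt", "final")
marker = bucket.delete("report.txt")   # unintended delete
bucket.delete("report.txt", marker)    # removing the marker restores the object
print(bucket.get("report.txt"))        # final
```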

S3 Replication(CRR & SRR)

S3 Replication is a feature in Amazon S3 that automatically copies objects from one S3 bucket to another. This can be within the same AWS region (same-region replication, SRR) or across different AWS regions (cross-region replication, CRR).

- buckets can be in different AWS accounts

- copying is asynchronous

- must give proper IAM permissions to S3

Use cases

- CRR

- compliance, lower latency access, replication across accounts

- SRR

- log aggregation

- live replication between production and test accounts

Notes

- after you enable replication, only new objects are replicated

- optionally, you can replicate existing objects using S3 Batch Replication

- this replicates existing objects and objects that failed replication

- for DELETE operations

- can replicate delete markers from source to target (optional setting)

- deletions with a version ID are not replicated (to avoid malicious deletes)

- there is no "chaining" of replication

- if bucket 1 has replication into bucket 2, which has replication into bucket 3

- then objects created in bucket 1 are not replicated to bucket 3
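A replication rule is expressed as a configuration document. The sketch below builds one as a plain Python dict in the shape that boto3's `put_bucket_replication` accepts as `ReplicationConfiguration`. The function name, rule ID, role ARN, and bucket names are hypothetical placeholders, and nothing here calls AWS.

```python
def replication_config(role_arn: str, destination_bucket: str,
                       replicate_delete_markers: bool = False) -> dict:
    """Build a one-rule replication configuration covering the whole bucket."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to copy objects
        "Rules": [{
            "ID": "replicate-everything",  # hypothetical rule name
            "Priority": 1,
            "Status": "Enabled",
            "Filter": {},  # empty filter = every object in the bucket
            # Optional setting from the notes: replicate delete markers or not.
            "DeleteMarkerReplication": {
                "Status": "Enabled" if replicate_delete_markers else "Disabled"
            },
            "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
        }],
    }


config = replication_config(
    "arn:aws:iam::123456789012:role/replication-role",  # placeholder ARN
    "my-backup-bucket",
    replicate_delete_markers=True,
)
print(config["Rules"][0]["Destination"]["Bucket"])  # arn:aws:s3:::my-backup-bucket
```

Versioning has to be enabled on both the source and the destination bucket before such a configuration can be applied.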

S3 Storage Classes

S3 Storage Classes are different tiers of storage in Amazon S3 designed to optimize cost and performance based on your data access patterns. Each class offers different pricing and performance characteristics. You can move objects between classes manually or with S3 Lifecycle configurations.

S3 Durability

- high durability (99.999999999%, 11 9's) of objects across multiple AZs

- if you store 10,000,000 objects with S3, you can on average expect to incur a loss of a single object once every 10,000 years

- same for all storage classes

Availability

- measures how readily available a service is

- varies depending on storage class

- ex. S3 Standard has 99.99% availability = not available for about 53 minutes a year
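The durability and availability figures can be checked with a little arithmetic. The sketch below uses exact fractions to avoid floating-point noise; the function names are made up for illustration, and 99.99% is the S3 Standard availability figure.

```python
from fractions import Fraction

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600


def years_per_lost_object(object_count: int,
                          durability_percent: str = "99.999999999") -> Fraction:
    """Average number of years between single-object losses."""
    annual_loss_rate = 1 - Fraction(durability_percent) / 100
    return 1 / (object_count * annual_loss_rate)


def downtime_minutes_per_year(availability_percent: str) -> float:
    """Minutes per year a service may be unavailable at a given availability."""
    unavailable_share = 1 - Fraction(availability_percent) / 100
    return float(unavailable_share * MINUTES_PER_YEAR)


print(years_per_lost_object(10_000_000))   # 10000
print(downtime_minutes_per_year("99.99"))  # 52.56
```

With 11 9's, the yearly loss rate per object is 10^-11, so 10,000,000 objects lose 10^-4 objects per year on average, which is one object every 10,000 years.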

1. S3 Standard - General Purpose

- 99.99% Availability

- used for frequently accessed data

- low latency and high throughput

- can sustain 2 concurrent facility failures

- use cases

- big data analytics

- mobile & gaming applications

- content distribution

2. S3 Infrequent Access (IA) Storage Classes

- for data that is less frequently accessed, but requires rapid access when needed

- lower cost than S3 Standard

2.1 S3 Standard - Infrequent Access (IA)

- 99.9% availability

- use cases

- disaster recovery

- backups

2.2 S3 One Zone - Infrequent Access (IA)

- 99.5% availability

- high durability (99.999999999%) in a single AZ; data is lost if the AZ is destroyed

- use cases

- storing secondary backup copies of on-premises data, or data you can recreate

3. S3 Glacier Storage Classes

- low-cost object storage meant for archiving/backup

- pricing: price for storage + object retrieval cost

3.1 S3 Glacier Instant Retrieval

- millisecond retrieval

- great for data accessed once a quarter

- minimum storage duration of 90 days

3.2 S3 Glacier Flexible Retrieval

- retrieval options: Expedited (1~5 minutes), Standard (3~5 hours), Bulk (5~12 hours, free)

- minimum storage duration of 90 days

3.3 S3 Glacier Deep Archive

- retrieval options: Standard (12 hours), Bulk (48 hours)

- minimum storage duration of 180 days

4. S3 Intelligent-Tiering

- this storage class allows you to move objects automatically between access tiers based on usage

- small monthly monitoring and auto-tiering fee

- there are no retrieval charges in S3 Intelligent-Tiering

tiers

- Frequent Access tier (automatic)

- default tier

- Infrequent Access tier (automatic)

- objects not accessed for 30 days

- Archive Instant Access tier (automatic)

- objects not accessed for 90 days

- Archive Access tier (optional)

- configurable from 90 ~ 700+ days

- Deep Archive Access tier (optional)

- configurable from 180 ~ 700+ days
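The tier thresholds listed above can be written as a small decision function. This is a simplified sketch of the rules as stated in these notes, not of how S3 implements tiering; `intelligent_tier` and its parameters are hypothetical names, and the optional tiers apply only when a threshold is passed in.

```python
def intelligent_tier(days_since_access, archive_after=None, deep_archive_after=None):
    """Return the access tier for an object idle for the given number of days."""
    # Optional tiers are checked first, deepest first, and only if configured.
    if deep_archive_after is not None and days_since_access >= deep_archive_after:
        return "Deep Archive Access"
    if archive_after is not None and days_since_access >= archive_after:
        return "Archive Access"
    # Automatic tiers use the fixed 90-day and 30-day thresholds.
    if days_since_access >= 90:
        return "Archive Instant Access"
    if days_since_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


print(intelligent_tier(10))                     # Frequent Access
print(intelligent_tier(45))                     # Infrequent Access
print(intelligent_tier(200, archive_after=180)) # Archive Access
```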


S3 Lifecycle rules

S3 Lifecycle rules let you manage objects automatically over time based on policies you define, for example by changing their storage class. Rules can target a prefix (ex. s3://mybucket/mp3/*) or object tags (ex. Department:Finance). There are two kinds of actions.

Transition Actions

: configure objects to transition to another storage class

- move objects to the Standard IA class 60 days after creation

- move to Glacier for archiving after 6 months

Expiration actions

: configure objects to expire (delete) after some time

- access log files can be set to delete after 365 days

- can be used to delete old versions of files (if versioning is enabled)
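The transition and expiration examples above could be written as a lifecycle configuration like the one sketched below, in the dict shape that boto3's `put_bucket_lifecycle_configuration` takes as `LifecycleConfiguration`. The rule IDs, the `logs/` prefix, the 30-day noncurrent-version setting, and treating "6 months" as 180 days are assumptions made for illustration. The code only builds and prints the document.

```python
import json

lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-mp3",  # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": "mp3/"},
            # Transition actions: Standard-IA after 60 days, Glacier after ~6 months.
            "Transitions": [
                {"Days": 60, "StorageClass": "STANDARD_IA"},
                {"Days": 180, "StorageClass": "GLACIER"},
            ],
        },
        {
            "ID": "expire-access-logs",  # hypothetical rule name
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},  # assumed location of access logs
            # Expiration actions: delete current objects after 365 days and
            # old (noncurrent) versions 30 days after they are superseded.
            "Expiration": {"Days": 365},
            "NoncurrentVersionExpiration": {"NoncurrentDays": 30},
        },
    ]
}

print(json.dumps(lifecycle_configuration, indent=2))
```

A tag-based rule would use a filter such as `{"Tag": {"Key": "Department", "Value": "Finance"}}` in place of the prefix filter.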

S3 Analytics

You can use S3 analytics to help you decide when to transition objects to the right storage class.

- recommendations are for Standard and Standard IA only

- does not work for One-zone IA or Glacier

- report is updated daily

- 24~48 hours to start seeing data analysis

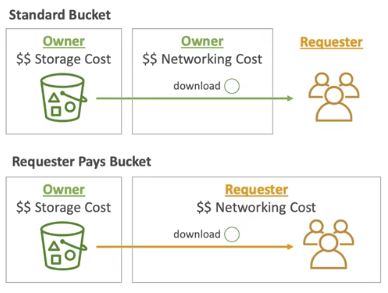

Requester Pays buckets

- in general, bucket owners pay for all S3 storage and data transfer costs associated with their bucket

- however, with Requester Pays buckets, the requester instead of the bucket owner pays the cost of the request and the data download from the bucket

- advantage: it's helpful when you want to share large datasets with other accounts

- the requester must be authenticated in AWS (can't be anonymous)

S3 Event Notifications

S3 Event Notifications allow you to automatically send alerts or trigger actions when specific events occur in your S3 bucket, such as when an object is uploaded, deleted, or modified.

- event types: object creation, deletion, restoration, replication...

- destinations: notifications can be sent to Amazon SNS, SQS, or trigger Lambda functions

- object name filtering is possible (*.jpg)

- can create as many S3 events as desired

- S3 event notifications are typically delivered in seconds but can sometimes take a minute or longer

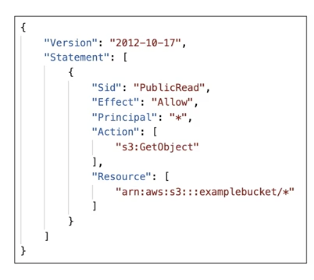

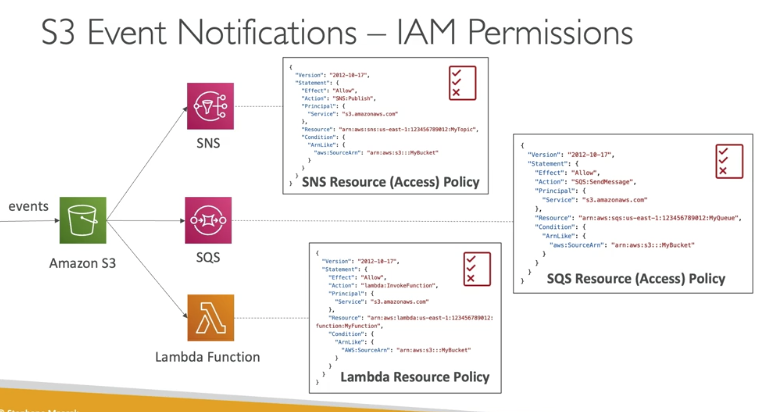
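On the receiving side, a Lambda function gets the notification as a JSON document with a `Records` list. The handler below is a minimal sketch that picks out newly created `.jpg` objects; the function name and the sample event are illustrative, and the sample contains only the fields the code reads.

```python
from urllib.parse import unquote_plus


def handler(event, context=None):
    """Return (bucket, key) pairs for newly created .jpg objects in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        # Inside the record, event names look like "ObjectCreated:Put".
        if not record.get("eventName", "").startswith("ObjectCreated:"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (a space is sent as "+").
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.lower().endswith(".jpg"):
            uploaded.append((bucket, key))
    return uploaded


sample_event = {
    "Records": [
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "my-bucket"},
                "object": {"key": "photos/my+cat.jpg"}}},
        {"eventName": "ObjectRemoved:Delete",
         "s3": {"bucket": {"name": "my-bucket"},
                "object": {"key": "photos/old.jpg"}}},
    ]
}
print(handler(sample_event))  # [('my-bucket', 'photos/my cat.jpg')]
```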

S3 Event Notifications - IAM Permissions

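- ACLs are not used to grant these permissions

- instead, a resource access policy on the destination (SNS topic, SQS queue, or Lambda function) allows S3 to send events to it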

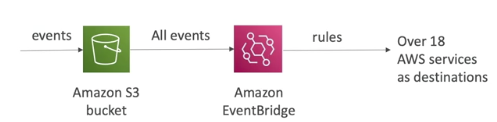
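For an SQS destination, the resource access policy is attached to the queue itself. The sketch below builds such a policy as a dict; `queue_policy_for_bucket` is a hypothetical helper, and the ARN, bucket name, and account ID are placeholders.

```python
import json


def queue_policy_for_bucket(queue_arn: str, bucket_name: str, account_id: str) -> dict:
    """Build an SQS access policy that lets one S3 bucket send messages to the queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "s3.amazonaws.com"},
            "Action": "sqs:SendMessage",
            "Resource": queue_arn,
            # Restrict the grant to events coming from this bucket and account.
            "Condition": {
                "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{bucket_name}"},
                "StringEquals": {"aws:SourceAccount": account_id},
            },
        }],
    }


policy = queue_policy_for_bucket(
    "arn:aws:sqs:us-east-1:123456789012:uploads-queue",  # placeholder ARN
    "my-bucket",
    "123456789012",
)
print(json.dumps(policy, indent=2))
```

SNS topics and Lambda functions follow the same idea with their own resource policies (`sns:Publish` and `lambda:InvokeFunction` respectively).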

S3 Event Notifications with Amazon EventBridge

- advanced filtering options with JSON rules (metadata, object size, name...)

- multiple destinations: ex. Step Functions, Kinesis Streams/Firehose...

- EventBridge capabilities: Archive, Replay Events, Reliable delivery
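An EventBridge rule filters events with a JSON pattern. The following sketch shows a pattern that would match `.jpg` objects larger than 1 MiB created in one bucket; the bucket name and the size threshold are assumptions chosen for the example.

```python
import json

ONE_MIB = 1024 * 1024

event_pattern = {
    "source": ["aws.s3"],
    "detail-type": ["Object Created"],
    "detail": {
        "bucket": {"name": ["my-bucket"]},  # placeholder bucket name
        "object": {
            "key": [{"suffix": ".jpg"}],            # filter on object name
            "size": [{"numeric": [">", ONE_MIB]}],  # filter on object size
        },
    },
}

# The rule stores the pattern as a JSON string.
print(json.dumps(event_pattern))
```

Combining a name filter with a size filter like this is the kind of condition that the plain suffix/prefix filters of S3 Event Notifications cannot express.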

Performance

Multi-part upload

Multi-part upload is a method provided by Amazon S3 that allows you to upload a single large object as a set of smaller parts. This can improve the efficiency and speed of uploads, especially for large files. You can upload parts in parallel, and if an upload of a part fails, you can re-upload that part without affecting other parts.
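To make the splitting concrete, here is a small sketch that plans the parts for an upload without contacting S3. `plan_parts` and the 100 MiB default part size are illustrative choices; the limits used (parts of 5 MiB to 5 GiB, at most 10,000 parts, last part may be smaller) are the S3 multipart limits.

```python
import math

MIB = 1024 ** 2
GIB = 1024 ** 3
MIN_PART_SIZE = 5 * MIB   # every part except the last must be at least this big
MAX_PART_SIZE = 5 * GIB
MAX_PARTS = 10_000


def plan_parts(object_size: int, part_size: int = 100 * MIB):
    """Return a list of (part_number, offset, length) tuples covering the object."""
    if object_size <= 0:
        raise ValueError("object_size must be positive")
    if not MIN_PART_SIZE <= part_size <= MAX_PART_SIZE:
        raise ValueError("part_size must be between 5 MiB and 5 GiB")
    part_count = math.ceil(object_size / part_size)
    if part_count > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part_size")
    return [
        (n + 1, n * part_size, min(part_size, object_size - n * part_size))
        for n in range(part_count)
    ]


for number, offset, length in plan_parts(250 * MIB):
    print(number, offset // MIB, length // MIB)
# 1 0 100
# 2 100 100
# 3 200 50
```

Each tuple describes an independent byte range, which is why parts can be uploaded in parallel and a failed part can be retried on its own.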

S3 transfer acceleration

S3 Transfer Acceleration is a feature that speeds up data transfers to and from Amazon S3 by using Amazon CloudFront’s globally distributed edge locations. When data is uploaded to an edge location, it is then routed to S3 over an optimized network path, reducing latency and improving transfer speeds.

S3 Select

S3 Select is a feature that allows you to retrieve a subset of data from an object stored in S3 using SQL-like queries. Instead of retrieving the entire object, you can use S3 Select to pull only the necessary data, which can reduce the amount of data transferred and speed up the retrieval process.
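As a rough sketch, an S3 Select request names the object, the SQL expression, and the input and output formats. The helper below only assembles the keyword arguments that boto3's `select_object_content` accepts. The function name, the bucket and key, and the CSV columns `id` and `amount` are hypothetical.

```python
def select_request(bucket: str, key: str, min_amount: float) -> dict:
    """Build arguments for an S3 Select query over a CSV object with a header row."""
    expression = (
        "SELECT s.id, s.amount FROM S3Object s "
        f"WHERE CAST(s.amount AS FLOAT) > {float(min_amount)}"
    )
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": expression,
        # Use the first CSV line as column names so s.id and s.amount resolve.
        "InputSerialization": {"CSV": {"FileHeaderInfo": "USE"}},
        "OutputSerialization": {"CSV": {}},
    }


request = select_request("my-bucket", "sales/2024.csv", 1000)
print(request["Expression"])
# SELECT s.id, s.amount FROM S3Object s WHERE CAST(s.amount AS FLOAT) > 1000.0
```

Only the rows and columns that satisfy the expression are returned, so the client downloads far less than the whole object.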

Batch operations

S3 Batch Operations

- Purpose: Allows bulk actions on multiple S3 objects.

- Features: Perform operations like copying, tagging, ACL updates, and more.

- Manifest File: Uses a manifest (often generated by S3 Inventory) to specify objects.

- Use Case: Automates repetitive tasks across large datasets.

S3 Inventory

- Purpose: Provides a scheduled report of objects in an S3 bucket.

- Features: Lists objects and their metadata in CSV, ORC, or Parquet format.

- Frequency: Generates reports daily or weekly.

- Use Case: Helps in auditing and managing S3 storage efficiently.

Summary

- S3 is a key/value store for objects

- great for bigger objects, not so great for many small objects

- serverless, scales infinitely, max object size is 5TB, versioning capability

- tiers

- S3 Standard, S3 Infrequent Access, S3 Intelligent-Tiering, S3 Glacier + lifecycle policy

- features

- Versioning, encryption, replication, MFA-delete, Access logs ...

- Security

- IAM, Bucket policies, ACL, Access points, object lambda, CORS, Object/vault lock for Glacier

- encryption

- SSE-S3, SSE-KMS, SSE-C, client-side, TLS in transit, default encryption

- Batch operations

- on objects using S3 batch, listing files using S3 Inventory

- Performance

- multi-part upload, S3 transfer acceleration, S3 Select

- Automation

- S3 event notifications (SNS, SQS, Lambda, EventBridge)

- Use cases

- static files, key value store for big files, website hosting

S3 Batch Use Cases

- when you want to encrypt unencrypted objects in an existing S3 bucket

- when you want to copy existing files from one bucket to another before enabling S3 replication