🏆 Learning Objectives

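- Understand the concept of Image Segmentation and be able to use its representative models.

- Understand the concept of Image Augmentation and be able to apply basic augmentation methods.

- Understand the concept of Object Recognition and be able to apply it.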

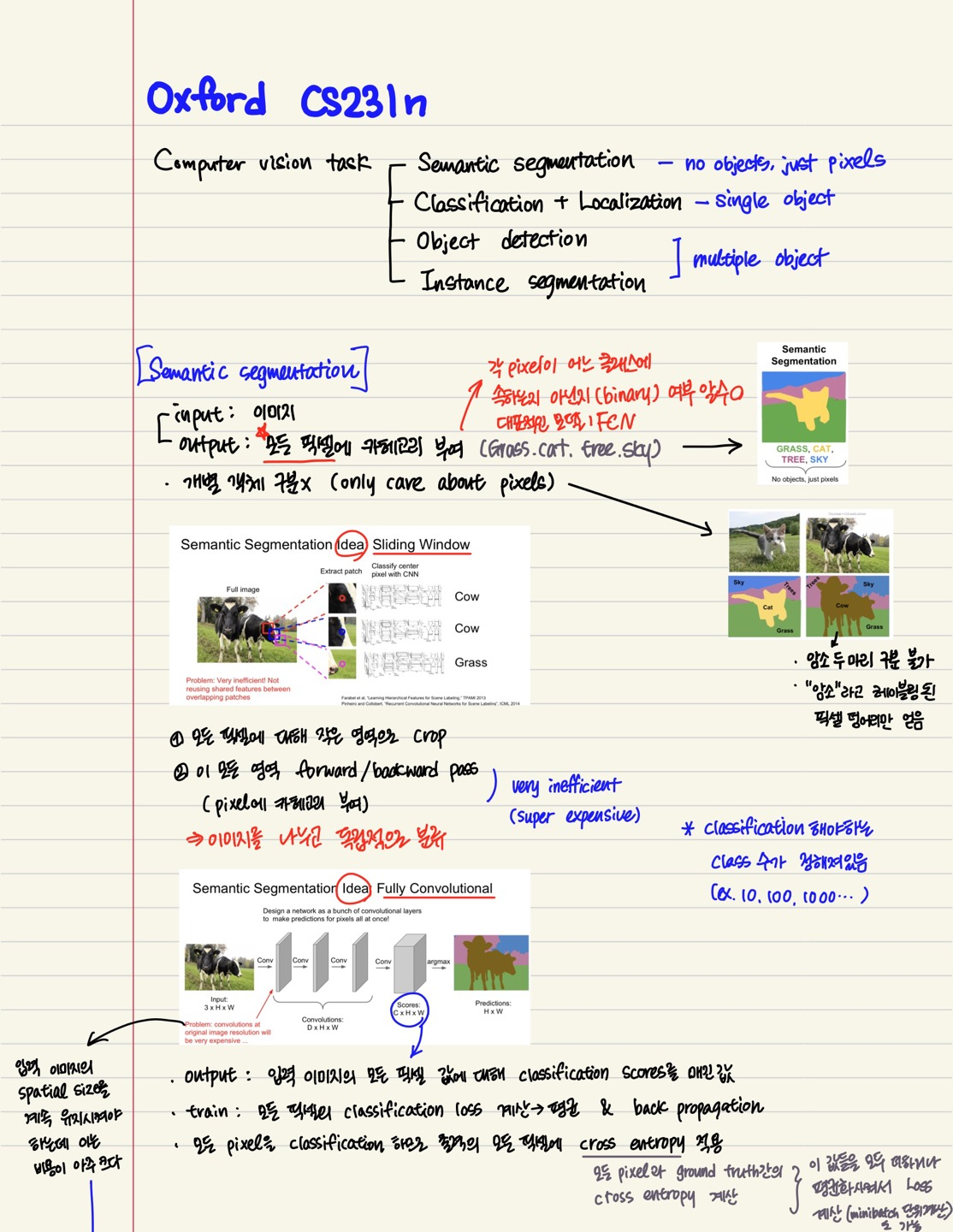

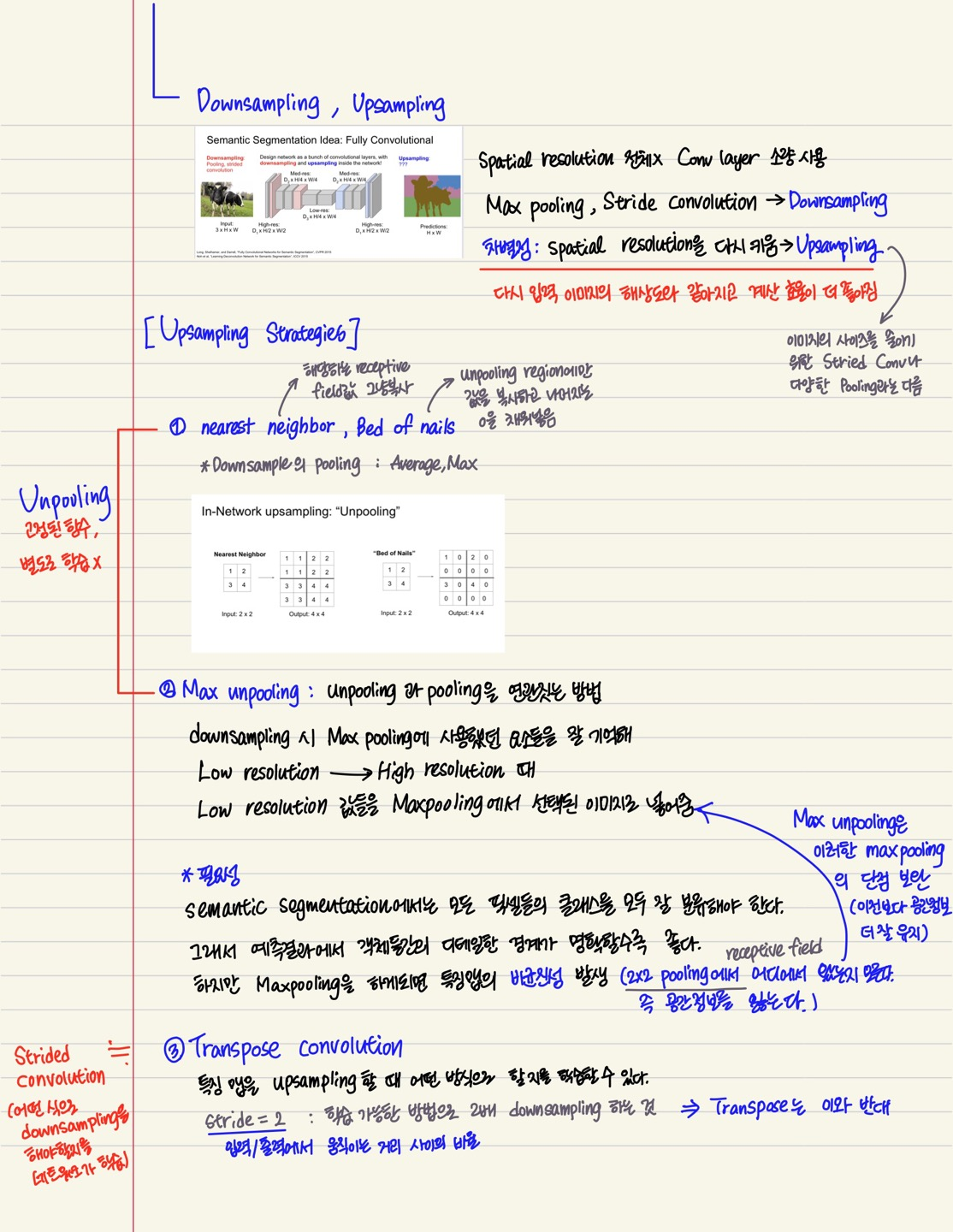

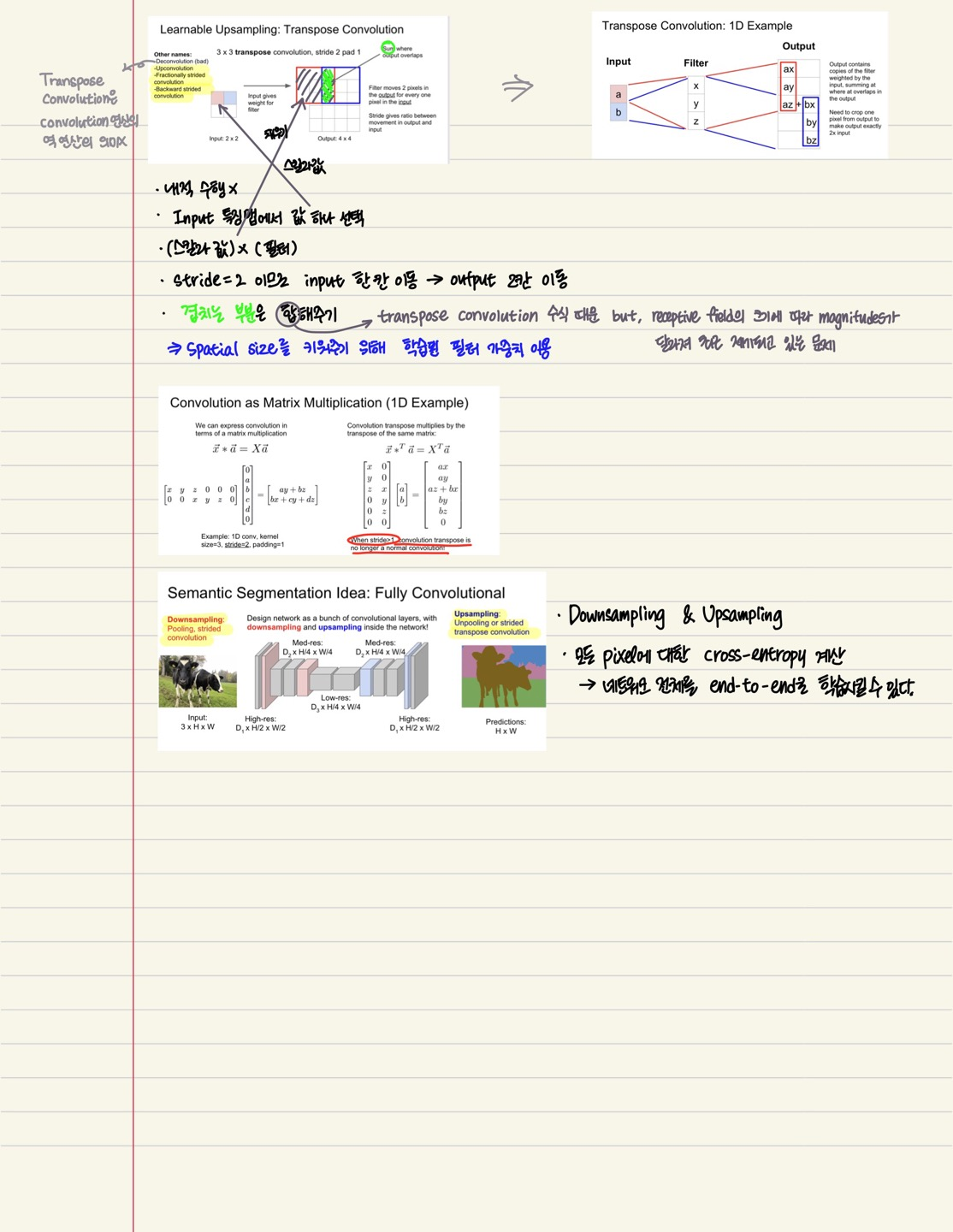

0. Warm-up: Stanford CS231n

*transposed convolutional layer
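A transposed convolution is a learnable upsampling layer, commonly used in FCN-style segmentation networks to enlarge feature maps. The minimal sketch below assumes Keras's `Conv2DTranspose` and shows how a stride of 2 with `padding="same"` doubles the height and width. The tensor sizes and filter count are arbitrary illustrative choices.

```python
import tensorflow as tf

# A 4x4 feature map with 8 channels (batch of 1); values are random placeholders
x = tf.random.normal([1, 4, 4, 8])

# Transposed convolution with stride 2: each input position is spread over a
# neighborhood of the output, so height and width are doubled (4 -> 8)
up = tf.keras.layers.Conv2DTranspose(filters=3, kernel_size=3, strides=2, padding="same")
y = up(x)
print(y.shape)  # (1, 8, 8, 3)
```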

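1. Image Segmentation

Segmentation

Segmentation is the problem of classifying an image at the pixel level.

The simplest way to segment an image with a neural network is to run inference separately for every pixel (the sliding-window approach). However, this requires as many forward passes as there are pixels, so it takes a long time. (More precisely, the problem is that the convolution operations needlessly recompute many overlapping regions.)

The technique that removes this waste is the FCN (Fully Convolutional Network), which classifies every pixel in a single forward pass. (The network outputs classification scores for every pixel of the input image; cross-entropy loss is applied at each pixel, the per-pixel losses are averaged, and the network is trained with backpropagation.)

As the figure shows, the fully connected layers of a network used for object recognition flatten the spatial volume (multidimensional shape) of the intermediate data into one dimension and process it with a single row of nodes. An FCN, by contrast, keeps the spatial volume all the way to the final output. Another characteristic is that it adds an operation at the end that enlarges the spatial size: this upsampling step enlarges the shrunken intermediate data back to the size of the input image in one go.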

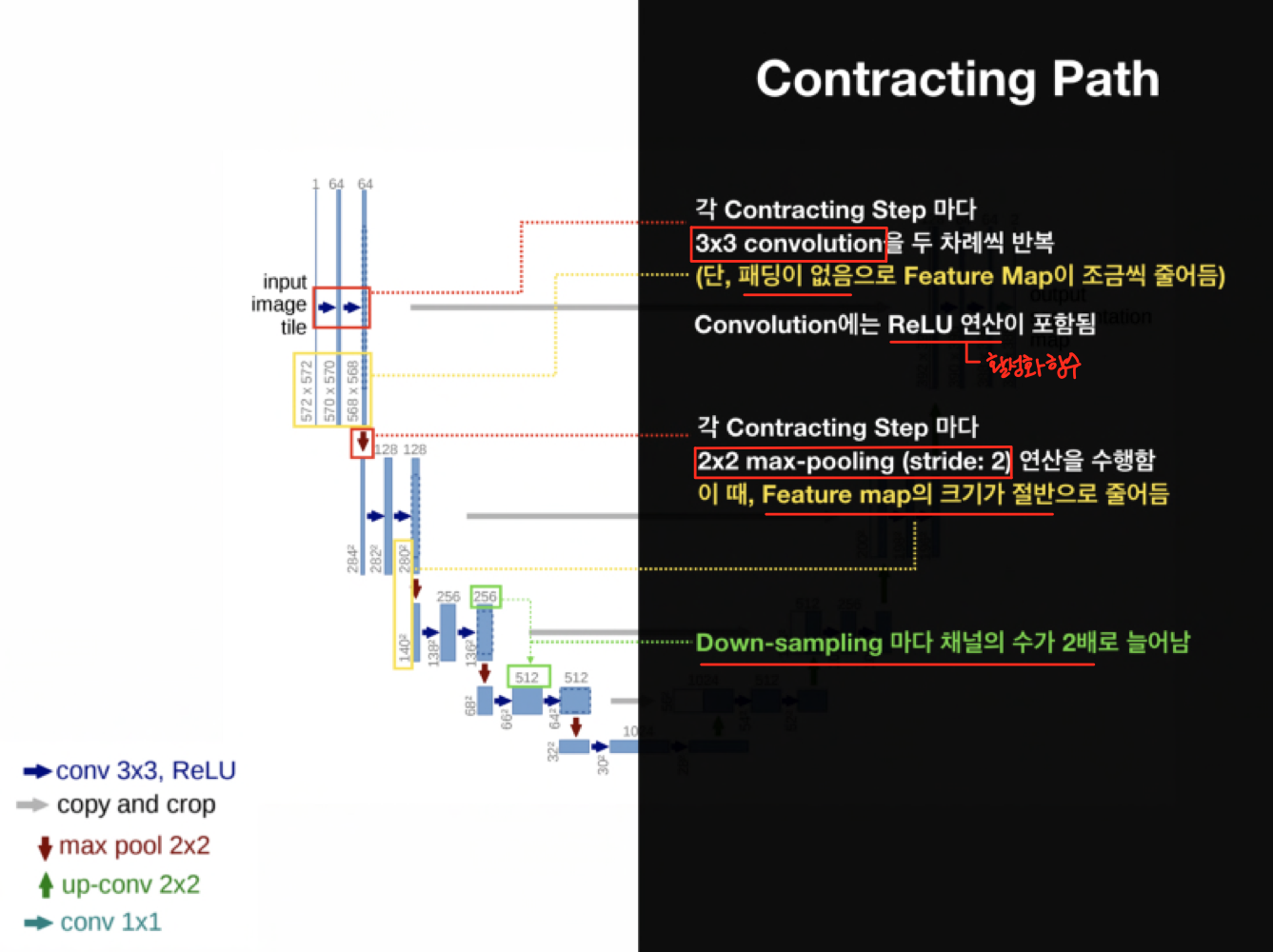
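The per-pixel training objective described above (cross-entropy at every pixel, then averaged) can be checked numerically. This is a sketch with random, hypothetical logits and labels; it assumes Keras's default loss reduction, which averages over all per-pixel loss values, and compares it with a manual log-softmax computation.

```python
import numpy as np
import tensorflow as tf

num_classes = 3
# Hypothetical per-pixel class scores (logits): batch of 1, 4x4 pixels
logits = tf.random.normal([1, 4, 4, num_classes], seed=0)
# Hypothetical ground-truth class index for every pixel
labels = tf.random.uniform([1, 4, 4], maxval=num_classes, dtype=tf.int32, seed=0)

# Keras: cross-entropy at every pixel, averaged over all pixels
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)
keras_loss = loss_fn(labels, logits).numpy()

# By hand: log-softmax over the class axis, pick the true class, average
log_probs = tf.nn.log_softmax(logits, axis=-1).numpy()
picked = np.take_along_axis(log_probs, labels.numpy()[..., None], axis=-1)
manual_loss = -picked.mean()
print(keras_loss, manual_loss)  # the two values should agree
```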

1.1 U-Net

main idea

1) Contracting Path: captures the context of the image

2) Expanding Path: upsamples the feature map and concatenates it with the context-bearing feature map captured in 1) → this enables more precise localization.

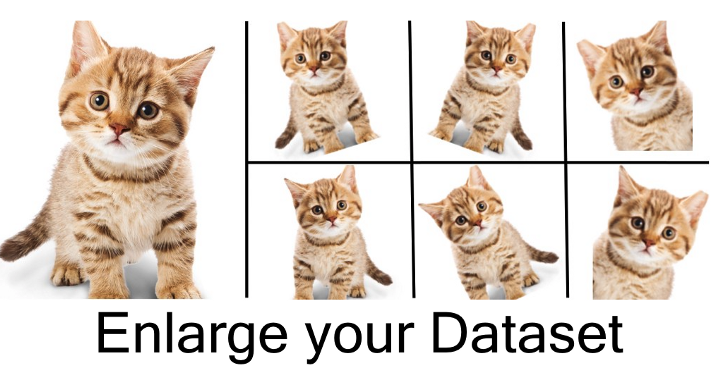
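The two paths can be sketched as a tiny Keras model. This is a simplified illustration, not the original U-Net: it uses only two resolution levels and `padding="same"` convolutions (the original uses unpadded convolutions with cropping), and the function name `tiny_unet`, the input size, and the filter counts are hypothetical choices.

```python
import tensorflow as tf
from tensorflow.keras import layers

def tiny_unet(input_shape=(64, 64, 3), num_classes=2):
    inputs = tf.keras.Input(shape=input_shape)

    # Contracting path: convolutions capture context, pooling halves the resolution
    c1 = layers.Conv2D(16, 3, padding="same", activation="relu")(inputs)
    p1 = layers.MaxPooling2D()(c1)                                     # 64 -> 32
    c2 = layers.Conv2D(32, 3, padding="same", activation="relu")(p1)
    p2 = layers.MaxPooling2D()(c2)                                     # 32 -> 16

    # Bottleneck
    b = layers.Conv2D(64, 3, padding="same", activation="relu")(p2)

    # Expanding path: upsample, then concatenate with the matching contracting feature map
    u2 = layers.Conv2DTranspose(32, 2, strides=2, padding="same")(b)   # 16 -> 32
    u2 = layers.Concatenate()([u2, c2])
    c3 = layers.Conv2D(32, 3, padding="same", activation="relu")(u2)
    u1 = layers.Conv2DTranspose(16, 2, strides=2, padding="same")(c3)  # 32 -> 64
    u1 = layers.Concatenate()([u1, c1])
    c4 = layers.Conv2D(16, 3, padding="same", activation="relu")(u1)

    # 1x1 convolution produces per-pixel class scores (logits)
    outputs = layers.Conv2D(num_classes, 1)(c4)
    return tf.keras.Model(inputs, outputs)

model = tiny_unet()
print(model.output_shape)  # (None, 64, 64, 2)
```

The concatenations are what distinguish this from a plain encoder-decoder: high-resolution features from the contracting path are reused directly when localizing.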

2. Data Augmentation

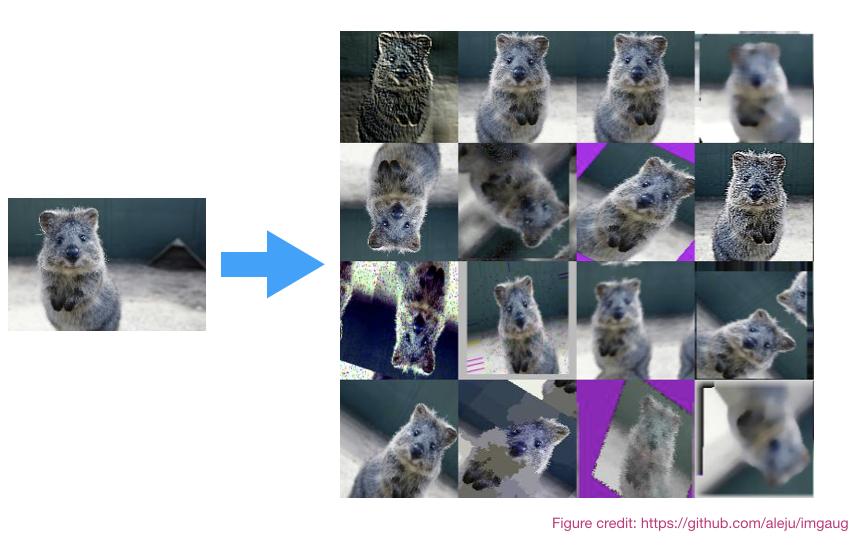

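- A technique that increases the diversity of the training set by applying random (but realistic) transformations

- A way to effectively enlarge the training dataset by augmenting the existing data in various ways

- In other words, the original data is "inflated" to achieve better performance.

- Having more data helps reduce overfitting. When the dataset you have differs from the inputs the model will see in practice, augmentation can produce a data distribution closer to those real inputs. For example, if the training images contain little noise but the test images contain various kinds of noise, then to perform well at test time you should estimate the noise distribution and inject that kind of noise into the training data so the model learns to cope with it.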

=> Data augmentation not only increases the amount of data but also helps the model perform well in real-world conditions.

- Why it matters:

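1) Preprocessing and augmentation improve performance in most cases.

2) Because augmented samples are added on top of the original data, augmentation rarely hurts performance, as long as the transformations stay realistic.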

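3) It is easy to apply, and the common transformations follow well-established patterns.

- Ways to apply data augmentation:

Keras preprocessing layers, tf.image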
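The noise example from the list above can be sketched with `tf.image`-style tensor ops. This is a minimal sketch assuming images already scaled to [0, 1]; the helper name `add_gaussian_noise` and the `stddev` value are hypothetical choices, not something these notes prescribe. (Keras also offers `layers.GaussianNoise`, which adds noise only during training.)

```python
import tensorflow as tf

def add_gaussian_noise(image, stddev=0.05):
    """Hypothetical helper: add zero-mean Gaussian noise to an image in [0, 1]."""
    noise = tf.random.normal(tf.shape(image), mean=0.0, stddev=stddev)
    # Keep pixel values inside the valid [0, 1] range after adding noise
    return tf.clip_by_value(image + noise, 0.0, 1.0)

clean = tf.fill([32, 32, 3], 0.5)   # hypothetical clean training image
noisy = add_gaussian_noise(clean)
```

In practice the `stddev` (or the noise model itself) should be chosen to resemble the noise expected at test time.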

2.1 Keras preprocessing layers

Resizing and rescaling

- Resizing: tf.keras.layers.Resizing

- Rescaling: tf.keras.layers.Rescaling
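`Rescaling` computes `inputs * scale + offset`. The quick check below, on a few hypothetical raw pixel values, shows both the [0, 1] mapping and the [-1, 1] mapping mentioned in the code comment that follows.

```python
import numpy as np
import tensorflow as tf

pixels = tf.constant([[0.0, 127.5, 255.0]])  # hypothetical raw pixel values

to_unit = tf.keras.layers.Rescaling(1.0 / 255)                       # [0, 255] -> [0, 1]
to_symmetric = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)  # [0, 255] -> [-1, 1]

print(to_unit(pixels).numpy())       # approximately [[0, 0.5, 1]]
print(to_symmetric(pixels).numpy())  # approximately [[-1, 0, 1]]
```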

```python
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras import layers

# Resizing & Rescaling
IMG_SIZE = 180

resize_and_rescale = tf.keras.Sequential([
    layers.Resizing(IMG_SIZE, IMG_SIZE),
    # Scale pixel values to [0, 1]; for [-1, 1], use Rescaling(1./127.5, offset=-1)
    layers.Rescaling(1./255),
])

# Check the result (`image` is a single tf_flowers image, loaded as in section 2.2)
result = resize_and_rescale(image)
_ = plt.imshow(result)

# Verify that the pixel values are now in [0, 1]
print("Min and max pixel values:", (result.numpy().min(), result.numpy().max()))
# Min and max pixel values: (0.0, 1.0)
```

Data augmentation

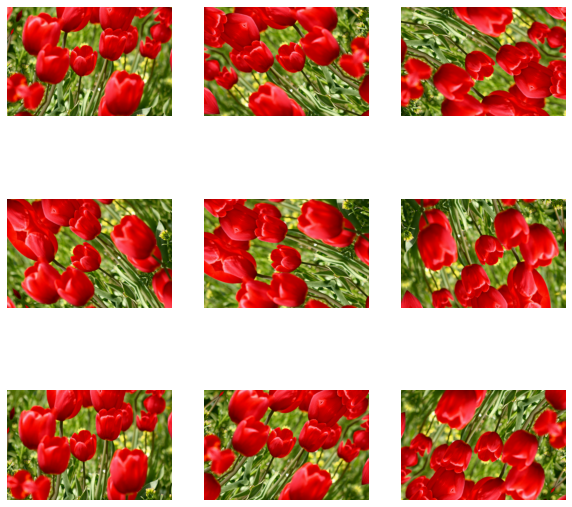

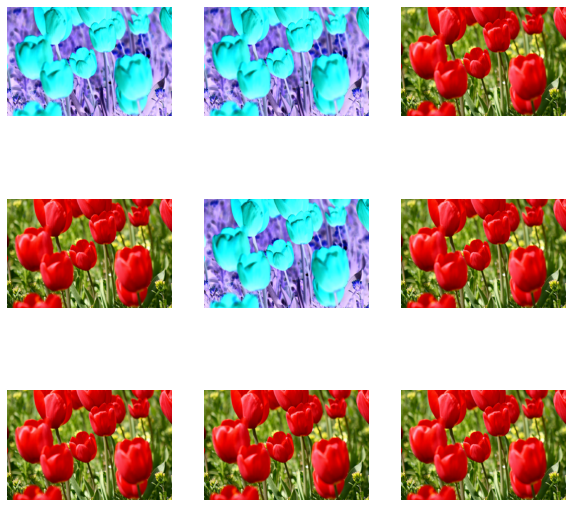

```python
# Apply several preprocessing layers repeatedly to the same image
data_augmentation = tf.keras.Sequential([
    layers.RandomFlip("horizontal_and_vertical"),
    layers.RandomRotation(0.2),
])

# Add the image to a batch (and cast to float for the augmentation layers)
image = tf.cast(tf.expand_dims(image, 0), tf.float32)

plt.figure(figsize=(10, 10))
for i in range(9):
    augmented_image = data_augmentation(image, training=True)
    ax = plt.subplot(3, 3, i + 1)
    plt.imshow(augmented_image[0].numpy().astype("uint8"))
    plt.axis("off")
```

There are two ways to use these preprocessing layers.

Option 1: Make the preprocessing layers part of the model

[Notes]

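- Data augmentation runs on-device, synchronously with the rest of the layers, and benefits from GPU acceleration.

- When you export the model with model.save, the preprocessing layers are saved along with the rest of the model. If you later deploy this model, it automatically standardizes images according to the configuration of its layers. This saves you the effort of reimplementing that logic server-side.

- Note) Data augmentation is inactive at test time, so input images are augmented only during calls to model.fit (not model.evaluate or model.predict).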
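The "inactive at test time" behavior can be observed directly by calling a random layer with an explicit `training` flag. This sketch uses a hypothetical small batch; with `training=False` (inference mode, as in `model.evaluate`/`model.predict`) the input passes through unchanged, while with `training=True` each image is either left alone or flipped left-right.

```python
import numpy as np
import tensorflow as tf

flip = tf.keras.layers.RandomFlip("horizontal", seed=0)
# Hypothetical batch of 2 tiny 4x4 RGB images with distinct values
batch = tf.reshape(tf.range(2 * 4 * 4 * 3, dtype=tf.float32), [2, 4, 4, 3])

# Inference mode: the layer is a no-op
inference_out = flip(batch, training=False)

# Training mode: each image may be flipped left-right
training_out = flip(batch, training=True)
flipped_all = tf.image.flip_left_right(batch).numpy()
```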

```python
model = tf.keras.Sequential([
    resize_and_rescale,
    data_augmentation,
    # There is no fixed rule for the number of filters: 16 works, and so does 32.
    # Try several values and keep whichever performs better.
    # kernel_size=3 is shorthand for a (3, 3) kernel
    layers.Conv2D(16, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    # Rest of your model
])
```

Option 2: Apply the preprocessing layers to the dataset

[Notes]

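- Data augmentation happens asynchronously on the CPU and is non-blocking. As shown below, you can overlap model training on the GPU with data preprocessing by using Dataset.prefetch. (See the "Better performance with the tf.data API" guide for more on dataset performance.)

- In this case, the preprocessing layers are not exported with the model when you call model.save. You need to attach them to the model before saving it, or reimplement them server-side. After training, you can attach the preprocessing layers before export.

- Note) Data augmentation should be applied only to the training set.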
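Attaching the preprocessing layers after training can be sketched by stacking them in front of the trained model. This is a minimal sketch: `trained_model` is a hypothetical stand-in for a model trained on already-resized, already-rescaled images, and the raw batch is random data of an arbitrary size.

```python
import tensorflow as tf
from tensorflow.keras import layers

IMG_SIZE = 180
# Preprocessing that was applied in the tf.data pipeline during training
preprocess = tf.keras.Sequential([
    layers.Resizing(IMG_SIZE, IMG_SIZE),
    layers.Rescaling(1.0 / 255),
])

# Hypothetical stand-in for a model trained on preprocessed images
trained_model = tf.keras.Sequential([
    tf.keras.Input(shape=(IMG_SIZE, IMG_SIZE, 3)),
    layers.Conv2D(8, 3, padding="same", activation="relu"),
    layers.GlobalAveragePooling2D(),
    layers.Dense(5),
])

# Put the preprocessing in front of the trained model before exporting,
# so the exported model accepts raw images of any size
export_model = tf.keras.Sequential([preprocess, trained_model])
raw_batch = tf.random.uniform([2, 240, 320, 3], maxval=255.0)
print(export_model(raw_batch).shape)  # (2, 5)
```

Only the deterministic preprocessing is attached here; random augmentation layers would be inactive at inference anyway.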

```python
# Dataset.map: apply the preprocessing layers inside the tf.data pipeline
aug_ds = train_ds.map(
    lambda x, y: (resize_and_rescale(x, training=True), y))

batch_size = 32
AUTOTUNE = tf.data.AUTOTUNE

def prepare(ds, shuffle=False, augment=False):
    # Resize and rescale all datasets
    ds = ds.map(lambda x, y: (resize_and_rescale(x), y),
                num_parallel_calls=AUTOTUNE)  # let tf.data choose the degree of parallelism

    if shuffle:
        ds = ds.shuffle(1000)

    # Batch all datasets
    ds = ds.batch(batch_size)

    # Use data augmentation only on the training set
    if augment:
        ds = ds.map(lambda x, y: (data_augmentation(x, training=True), y),
                    num_parallel_calls=AUTOTUNE)

    # Use buffered prefetching on all datasets
    return ds.prefetch(buffer_size=AUTOTUNE)

train_ds = prepare(train_ds, shuffle=True, augment=True)
val_ds = prepare(val_ds)
test_ds = prepare(test_ds)
```

```python
# Train the model (inputs are already resized and rescaled by prepare())
num_classes = 5  # tf_flowers has 5 classes

model = tf.keras.Sequential([
    layers.Conv2D(16, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Conv2D(32, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Conv2D(64, 3, padding='same', activation='relu'),
    layers.MaxPooling2D(),
    layers.Flatten(),
    layers.Dense(128, activation='relu'),
    layers.Dense(num_classes),
])

model.compile(optimizer='adam',
              # 5 labels (multi-class classification)
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
              metrics=['accuracy'])

epochs = 5
history = model.fit(
    train_ds,
    validation_data=val_ds,
    epochs=epochs,
)

loss, acc = model.evaluate(test_ds)
print("Accuracy", acc)
```

Custom data augmentation

The two layers introduced below randomly invert the colors of an image with a given probability.

Using a layers.Lambda layer

```python
def random_invert_img(x, p=0.5):
    # With probability p, invert the pixel values (assumes a [0, 255] range)
    if tf.random.uniform([]) < p:
        x = 255 - x
    return x

def random_invert(factor=0.5):
    return layers.Lambda(lambda x: random_invert_img(x, factor))

random_invert_layer = random_invert()

plt.figure(figsize=(10, 10))
for i in range(9):
    augmented_image = random_invert_layer(image)
    ax = plt.subplot(3, 3, i + 1)
    plt.imshow(augmented_image[0].numpy().astype("uint8"))
    plt.axis("off")
```
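Because `random_invert_img` draws a single random number, it inverts either the whole batch or none of it. If per-image randomness is wanted, one possible variant (a hypothetical helper, not part of the original notes) draws one number per image and selects with `tf.where`, which also avoids Python control flow on tensors. It assumes a 4-D float batch with pixel values in [0, 255].

```python
import tensorflow as tf

def random_invert_per_image(images, p=0.5):
    """Hypothetical variant: invert each image in a batch independently with probability p."""
    batch_size = tf.shape(images)[0]
    # One random draw per image, broadcast over height, width and channels
    flip = tf.random.uniform([batch_size, 1, 1, 1]) < p
    return tf.where(flip, 255.0 - images, images)

batch = tf.random.uniform([4, 8, 8, 3], maxval=255.0)  # hypothetical batch
out = random_invert_per_image(batch)
```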

Subclassing a layer

```python
class RandomInvert(layers.Layer):
    def __init__(self, factor=0.5, **kwargs):
        super().__init__(**kwargs)
        self.factor = factor

    def call(self, x):
        # Use the configured inversion probability
        return random_invert_img(x, self.factor)

_ = plt.imshow(RandomInvert()(image)[0].numpy().astype("uint8"))
```

The layers.preprocessing utilities above are convenient. For finer control, you can write your own data augmentation pipelines or layers with tf.data and tf.image. (Also see TensorFlow Addons Image: Operations and TensorFlow I/O: Color Space Conversions.)

2.2 tf.image

Data augmentation

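```python
import tensorflow_datasets as tfds

# Load the flowers dataset
(train_ds, val_ds, test_ds), metadata = tfds.load(
    'tf_flowers',
    split=['train[:80%]', 'train[80%:90%]', 'train[90%:]'],
    with_info=True,
    as_supervised=True,
)

# Map integer labels to class names
get_label_name = metadata.features['label'].int2str

# Retrieve an image to work with
image, label = next(iter(train_ds))
_ = plt.imshow(image)
_ = plt.title(get_label_name(label))
```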

```python
# Visualize and compare the original and augmented images side by side
def visualize(original, augmented):
    fig = plt.figure()
    plt.subplot(1, 2, 1)
    plt.title('Original image')
    plt.imshow(original)

    plt.subplot(1, 2, 2)
    plt.title('Augmented image')
    plt.imshow(augmented)

# Flip the image (flip_left_right flips horizontally; flip_up_down flips vertically)
flipped = tf.image.flip_left_right(image)
visualize(image, flipped)

# Convert the image to grayscale
grayscaled = tf.image.rgb_to_grayscale(image)
visualize(image, tf.squeeze(grayscaled))
_ = plt.colorbar()

# Saturate the image by providing a saturation factor (3 intensifies the colors)
saturated = tf.image.adjust_saturation(image, 3)
visualize(image, saturated)

# Change the image brightness by providing a brightness delta
bright = tf.image.adjust_brightness(image, 0.4)
visualize(image, bright)

# Center-crop the image, keeping the given central fraction
cropped = tf.image.central_crop(image, central_fraction=0.5)
visualize(image, cropped)

# Rotate the image 90 degrees (counterclockwise)
rotated = tf.image.rot90(image)
visualize(image, rotated)
```
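The operations above are deterministic. For augmentation you usually want random variants; `tf.image` provides stateless random versions that take an explicit shape-`[2]` seed, so the same seed reproduces the same transformation. This sketch uses a random placeholder image and arbitrary seed and `max_delta` values.

```python
import tensorflow as tf

image = tf.random.uniform([64, 64, 3])  # hypothetical image scaled to [0, 1]
seed = (1, 2)                           # stateless ops take a shape-[2] seed

# Same seed -> same random brightness shift
bright_a = tf.image.stateless_random_brightness(image, max_delta=0.3, seed=seed)
bright_b = tf.image.stateless_random_brightness(image, max_delta=0.3, seed=seed)

# Randomly flipped left-right (or left unchanged), decided by the seed
flipped = tf.image.stateless_random_flip_left_right(image, seed=seed)
```

Inside a `Dataset.map`, you would typically pass a different seed per element (for example from a counter) so that each image gets its own transformation.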

Applying augmentation to a dataset

Use Dataset.map to apply data augmentation to the dataset.

```python
def resize_and_rescale(image, label):
    image = tf.cast(image, tf.float32)
    image = tf.image.resize(image, [IMG_SIZE, IMG_SIZE])
    image = (image / 255.0)
    return image, label

def augment(image, label):
    image, label = resize_and_rescale(image, label)
    # Add 6 pixels of padding
    image = tf.image.resize_with_crop_or_pad(image, IMG_SIZE + 6, IMG_SIZE + 6)
    # Random crop back to the original size
    image = tf.image.random_crop(image, size=[IMG_SIZE, IMG_SIZE, 3])
    image = tf.image.random_brightness(image, max_delta=0.5)  # Random brightness
    image = tf.clip_by_value(image, 0, 1)
    return image, label
```
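The pad-then-random-crop step in `augment` amounts to a small random translation: `resize_with_crop_or_pad` pads evenly (3 pixels per side for a total of 6), and `random_crop` then picks a window, shifting the content by up to 3 pixels in each direction with a zero border. The sketch below isolates that step; `random_translate` is a hypothetical helper name and the tiny image size is for illustration only.

```python
import tensorflow as tf

IMG_SIZE = 8  # small size just for the demo

def random_translate(image, pad=6):
    # Pad to (IMG_SIZE + pad) per spatial axis (pad // 2 zero pixels per side),
    # then crop a random IMG_SIZE x IMG_SIZE window back out
    padded = tf.image.resize_with_crop_or_pad(image, IMG_SIZE + pad, IMG_SIZE + pad)
    return tf.image.random_crop(padded, size=[IMG_SIZE, IMG_SIZE, 3])

image = tf.ones([IMG_SIZE, IMG_SIZE, 3])  # all-ones image makes the zero border visible
shifted = tf.stack([random_translate(image) for _ in range(20)])
```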

```python
# Configure the datasets
train_ds = (
    train_ds
    .shuffle(1000)
    .map(augment, num_parallel_calls=AUTOTUNE)
    .batch(batch_size)
    .prefetch(AUTOTUNE)
)

val_ds = (
    val_ds
    .map(resize_and_rescale, num_parallel_calls=AUTOTUNE)
    .batch(batch_size)
    .prefetch(AUTOTUNE)
)

test_ds = (
    test_ds
    .map(resize_and_rescale, num_parallel_calls=AUTOTUNE)
    .batch(batch_size)
    .prefetch(AUTOTUNE)
)
```

3. Object Recognition

- Detecting multiple objects with a pre-trained image classification network

- Colab exercise