mini project

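Analysis of a Chicago best-restaurants page

🌟 Goal | Analyze the Chicago best-restaurants page by crawling it

(crawl information from 50 pages in total)

🌟 Crawling source

1. Load the main page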

from urllib.request import urlopen, Request

from bs4 import BeautifulSoup

url_base = "https://www.chicagomag.com"

url_sub = "/Chicago-Magazine/November-2012/Best-Sandwiches-Chicago/"

url = url_base + url_sub

response = urlopen(url)

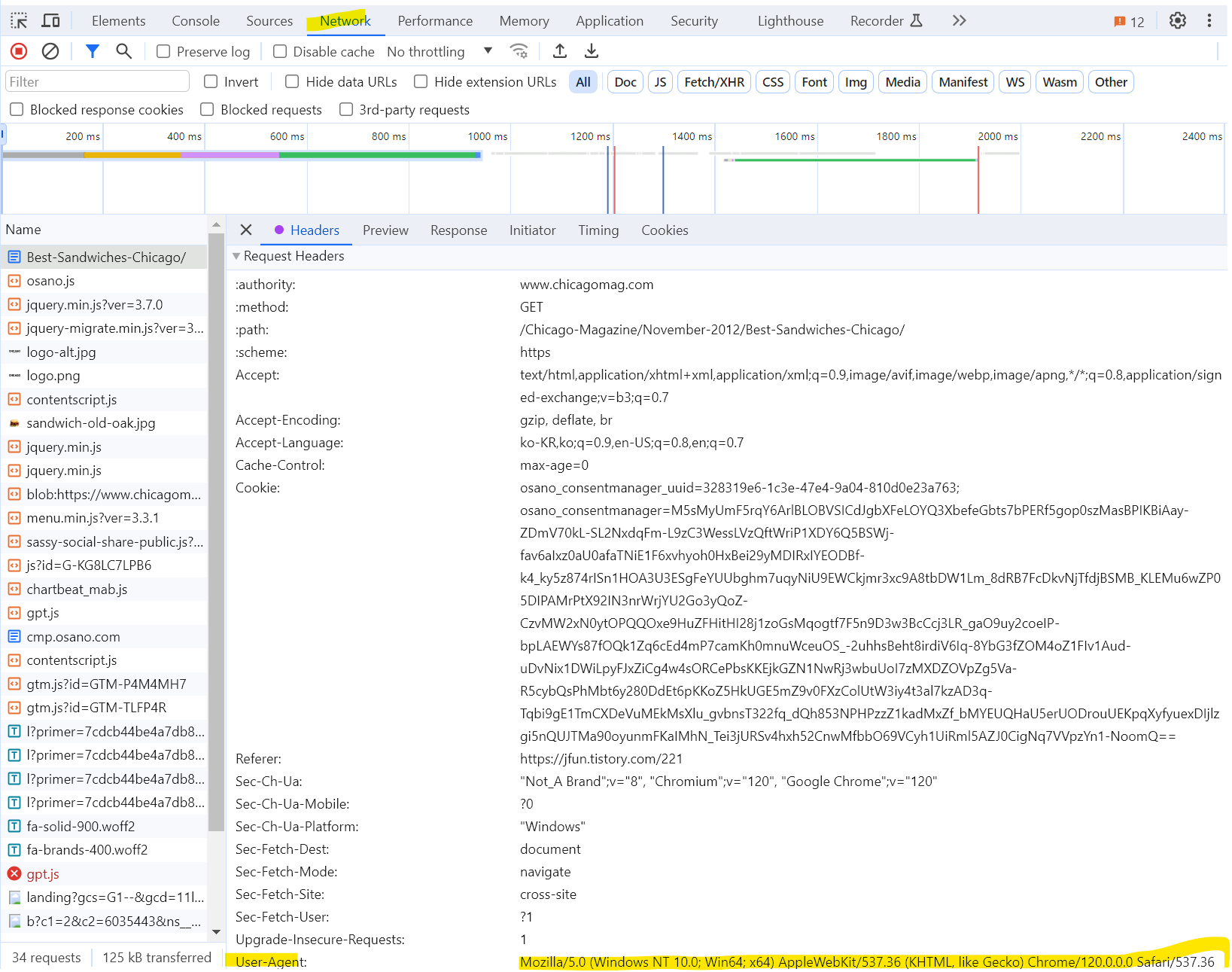

response.status ➡️ 404 error

Occasionally a page blocks crawling.

In that case, open Developer Tools -> Network and check the User-Agent value,

then pass that value in through Request(url, headers=...).

req = Request(url,headers={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"})

response = urlopen(req)

response.status ➡️ 200
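The User-Agent workaround can also be wrapped in a small helper. This is only a minimal sketch: `build_request` and `fetch_status` are hypothetical names, and the User-Agent string is the one already used in these notes. `urlopen` raises `HTTPError` for 4xx/5xx answers, so the sketch catches it and returns the error code instead of stopping.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Assumption: the same browser User-Agent string used earlier in these notes.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
)


def build_request(url, user_agent=USER_AGENT):
    """Return a Request that carries a browser-like User-Agent header."""
    return Request(url, headers={"User-Agent": user_agent})


def fetch_status(url):
    """Return the HTTP status code, even when the server rejects the request."""
    try:
        with urlopen(build_request(url)) as response:
            return response.status
    except HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx responses instead of returning them
        return err.code
```

Calling `fetch_status(url)` needs network access, so the result depends on how the site answers at that moment.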

🚩 Conclusion

from urllib.request import urlopen, Request

from bs4 import BeautifulSoup

url_base = "https://www.chicagomag.com"

url_sub = "/Chicago-Magazine/November-2012/Best-Sandwiches-Chicago/"

url = url_base + url_sub

req = Request(url,headers={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"})

response = urlopen(req)

soup = BeautifulSoup(response,"html.parser")

2. Main page - extract the required information

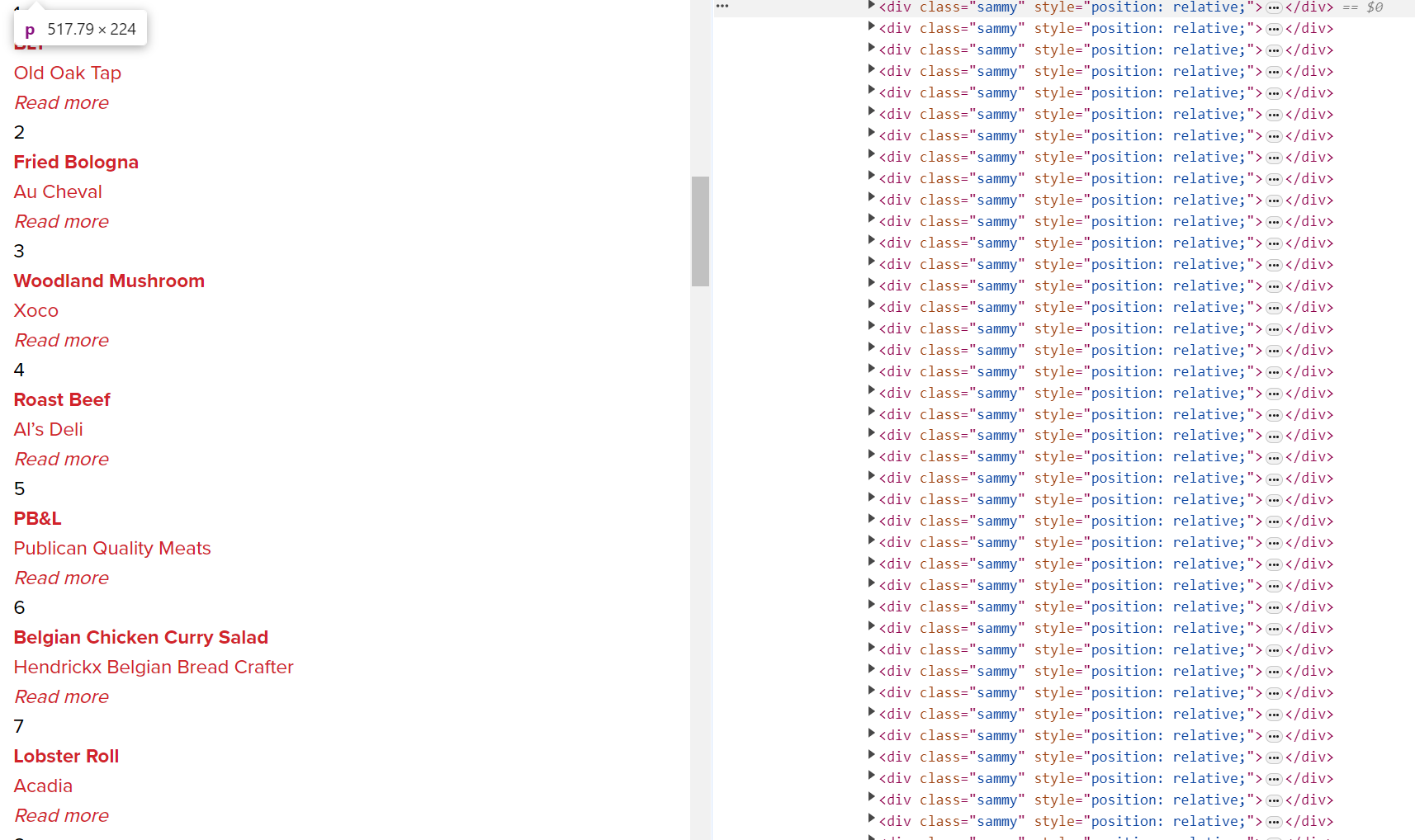

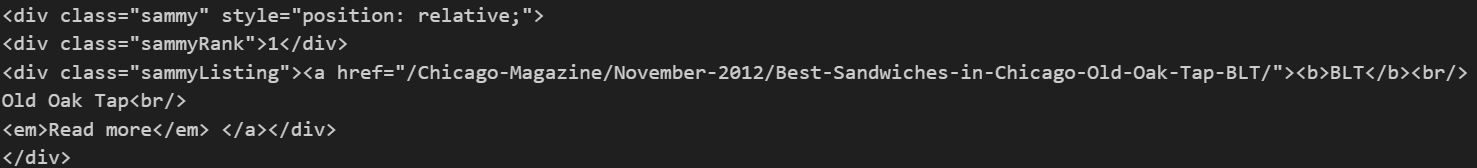

Each restaurant entry, including its page link, sits in

a div with the class sammy, so:

- load the 50 ranked entries

rank = soup.find_all("div",class_ = "sammy")

or

rank = soup.select(".sammy")
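To see that the two lookups return the same elements, here is a small sketch on a hand-written HTML fragment. The fragment is an assumption: it only reuses the class names mentioned in these notes (`sammy`, `sammyRank`, `sammyListing`), and the real page markup may differ.

```python
from bs4 import BeautifulSoup

# Assumption: a made-up fragment that only mimics the class names used on the page.
SAMPLE_HTML = """
<div class="sammy"><div class="sammyRank">1</div>
<div class="sammyListing"><a href="/sample-1/">Menu A</a></div></div>
<div class="sammy"><div class="sammyRank">2</div>
<div class="sammyListing"><a href="/sample-2/">Menu B</a></div></div>
<div class="other">not a ranking entry</div>
"""

sample_soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all filters by tag name and class; "div.sammy" is the matching CSS selector
by_find_all = sample_soup.find_all("div", class_="sammy")
by_select = sample_soup.select("div.sammy")

# Pull the rank text out of every matched entry
ranks = [entry.select_one(".sammyRank").get_text() for entry in by_select]
```

Note that `.sammy` alone matches any tag with that class, while `div.sammy` also requires the tag to be a div, which mirrors `find_all("div", class_="sammy")` exactly.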

- sample test code

tmp_one = soup.find_all("div",class_ = "sammy")[0]

: get the item at index 0 of the list

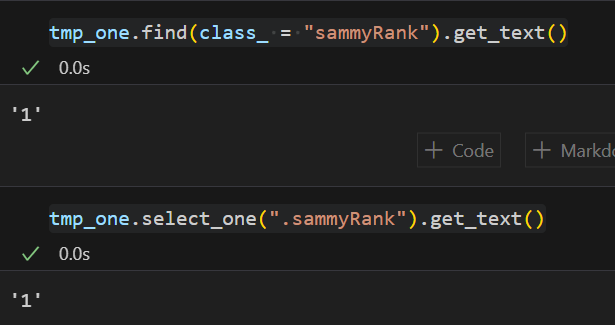

tmp_one.find(class_ = "sammyRank").get_text()

or

tmp_one.select_one(".sammyRank").get_text()

: get the ranking

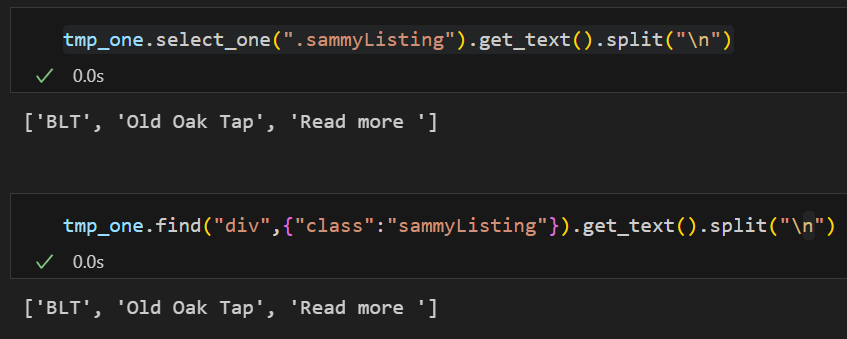

tmp_one.find("div",{"class":"sammyListing"}).get_text().split("\n")

or

tmp_one.select_one(".sammyListing").get_text().split("\n")

: get the store info and menu name, then split the text

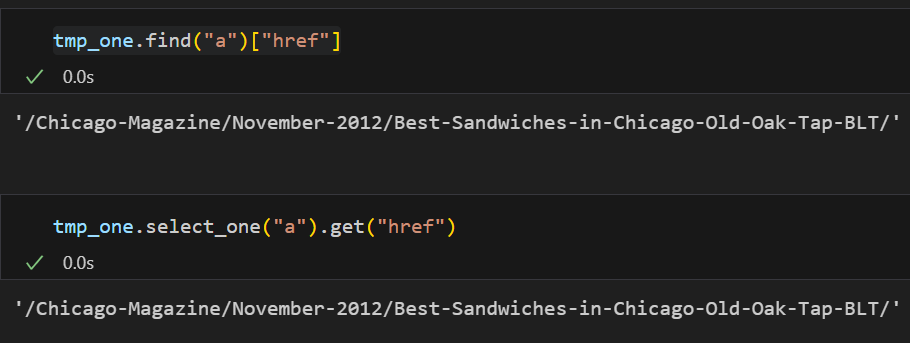

tmp_one.find("a")["href"]

or

tmp_one.select_one("a").get("href")

: get the link
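An href taken from the page may be relative or already absolute. The collection loop in this section uses `urllib.parse.urljoin` to handle both cases; the sketch below shows its behavior on two made-up href values (both paths are assumptions for illustration, not real pages).

```python
from urllib.parse import urljoin

url_base = "https://www.chicagomag.com"

# Assumption: both href values are invented for this example.
relative_href = "/sample-path/sample-page/"
absolute_href = "https://www.chicagomag.com/sample-path/sample-page/"

# A relative href is resolved against the base URL
from_relative = urljoin(url_base, relative_href)

# An absolute href is returned unchanged
from_absolute = urljoin(url_base, absolute_href)
```

Plain string concatenation (`url_base + href`) would produce a broken URL for the absolute case, which is why `urljoin` is the safer choice here.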

- collect the data with a for loop

from urllib.parse import urljoin

url_base = "https://www.chicagomag.com"

rank = []

main_menu = []

store_name = []

url_add = []

list_soup = soup.find_all("div", class_= "sammy") # soup.select(".sammy")

for item in list_soup :

    # split the sammyListing text once: index 0 is the menu, index 1 the store name
    listing = item.find("div", class_ = "sammyListing").get_text().split("\n")

    rank.append(item.find("div", class_ = "sammyRank").get_text())

    main_menu.append(listing[0])

    store_name.append(listing[1])

    # urljoin resolves relative links against url_base and keeps absolute ones as-is
    url_add.append(urljoin(url_base,item.find("a")["href"]))

- convert to pandas, then export to CSV

import pandas as pd

data = {

"Rank" : rank,

"Menu" : main_menu,

"Store" : store_name,

"URL" : url_add

}

df = pd.DataFrame(data, columns=("Rank","Store","Menu","URL"))

df.to_csv("./03. best_sandwiches_list_chicago.csv",sep=",", encoding="utf-8")

3. Detail pages - extract the required information

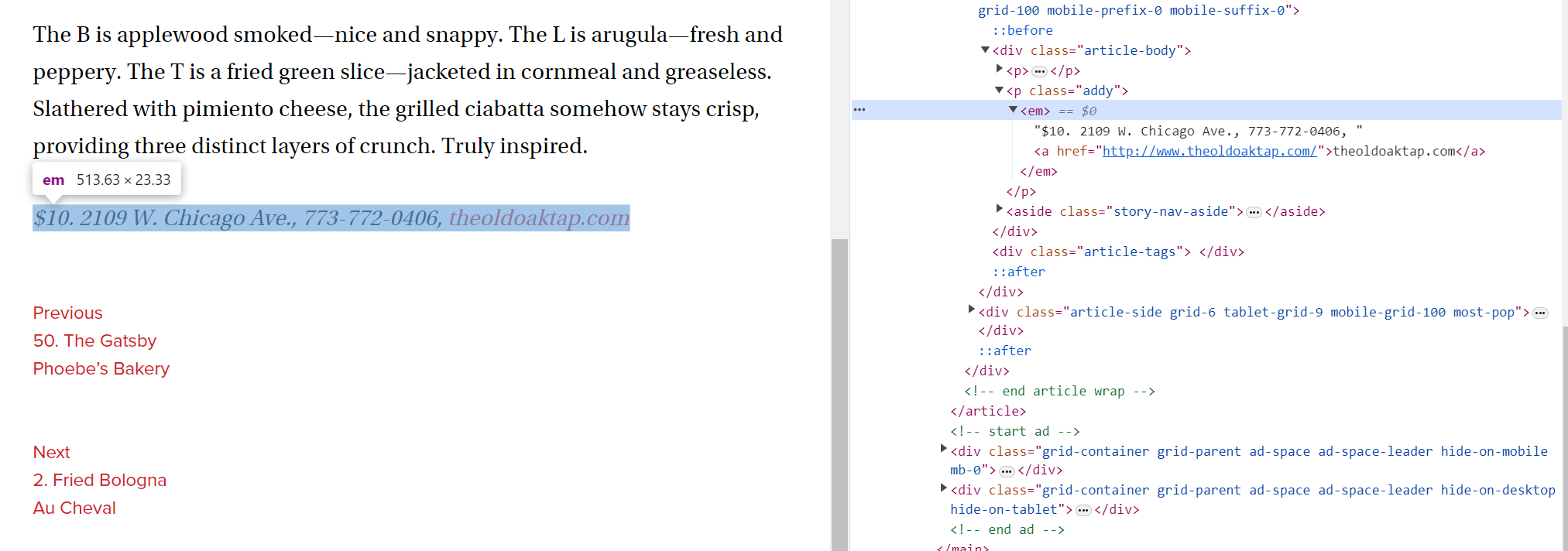

Load each detail page's HTML, then extract the price and address.

- test code for the URL column

req = Request(df["URL"][0], headers={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"})

response = urlopen(req)

soup_tmp = BeautifulSoup(response,"html.parser")

print(soup_tmp.prettify()): 세부페이지 HTML 가져오기

: p의 addy 클래스 확인

: 정보 확인

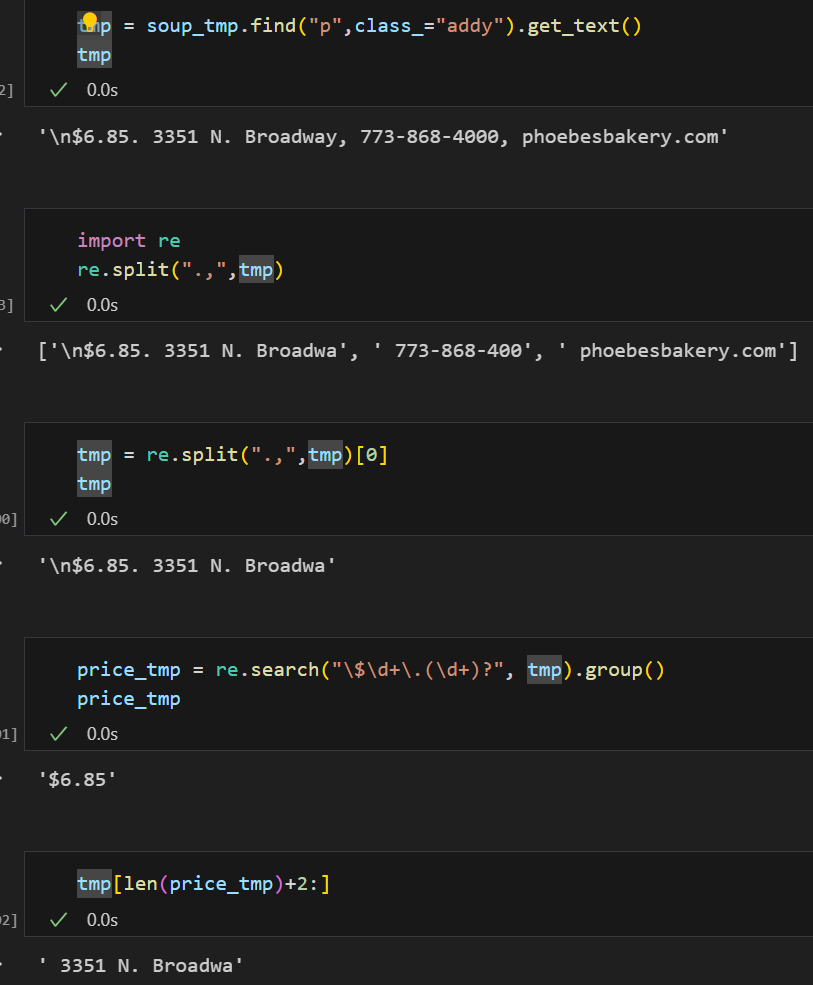

🌟re 라이브러리의 split | text 나누기

🌟re 라이브러리의 search | text 찾기
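How `re.split` and `re.search` work together can be sketched on a single string. The sample text is an assumption: it is made up, and it starts with a newline because the `+2` offset used in the loop in this section suggests the real text has one extra character before the price.

```python
import re

# Assumption: a made-up sample shaped like "<newline>$price. address, phone, site".
sample = "\n$10. 123 W. Sample Ave., 000-000-0000, example.com"

# "." is unescaped, so the pattern matches any character followed by a comma;
# everything before the first such match is kept
price_tmp = re.split(".,", sample)[0]

# Raw string: "$", digits, a literal ".", then optional digits
price = re.search(r"\$\d+\.(\d+)?", price_tmp).group()

# Skip the price plus two more characters (leading newline and the space)
address = price_tmp[len(price) + 2:]
```

If a page's text does not follow this shape, `re.search` returns `None` and `.group()` raises an `AttributeError`, so a check for `None` may be worth adding when crawling many pages.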

- load the data with a for loop

from tqdm import tqdm

price = []

address = []

import re

for idx, row in tqdm(df.iterrows()) :

    req = Request(row["URL"], headers={"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"})

    response = urlopen(req)

    soup_tmp = BeautifulSoup(response,"html.parser")

    getting = soup_tmp.find("p",class_="addy").get_text()

    # keep the text before the first "<any character>," match (price and street address)
    price_tmp = re.split(".,",getting)[0]

    # raw string so that \$ and \d reach the regex engine unchanged
    pri = re.search(r"\$\d+\.(\d+)?", price_tmp).group()

    # skip the price and two more characters to reach the address
    add = price_tmp[len(pri)+2:]

    price.append(pri)

    address.append(add)

🌟 tqdm | shows the progress of the for loop without needing print(idx)

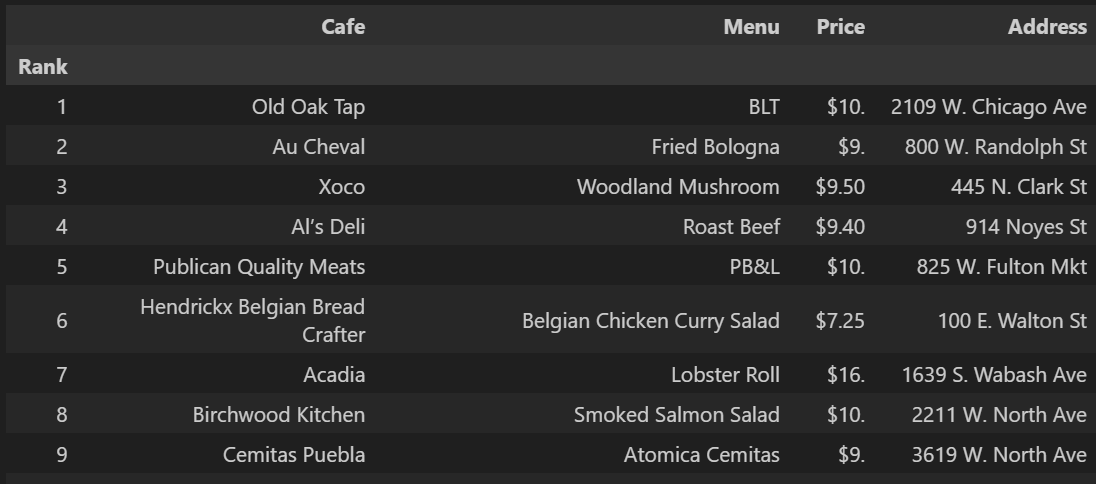

- tidy up the DataFrame

df["Price"] = price

df["Address"] = address

: add the Price and Address columns to the table

del df["URL"]

: delete the URL column

df.set_index("Rank",inplace=True)

: set the Rank column as the index

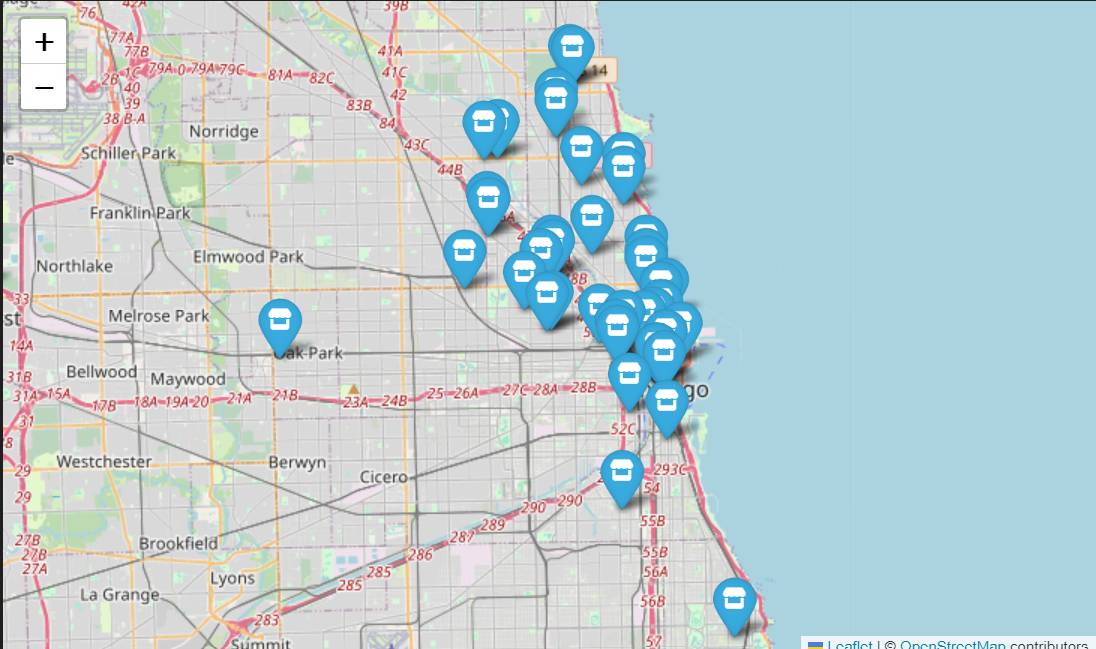
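The same tidy-up can also be written as one chained expression that leaves the original table untouched. This is a sketch on two made-up rows (all values are assumptions), using `assign`, `drop`, and `set_index` instead of in-place edits.

```python
import pandas as pd

# Assumption: two invented rows standing in for the crawled table.
df_sample = pd.DataFrame({
    "Rank": ["1", "2"],
    "Store": ["Store A", "Store B"],
    "Menu": ["Menu A", "Menu B"],
    "URL": ["https://example.com/a", "https://example.com/b"],
})

tidy = (
    df_sample
    .assign(Price=["$10.", "$9."], Address=["1 Sample St", "2 Sample St"])  # add columns
    .drop(columns="URL")                                                    # remove URL
    .set_index("Rank")                                                      # Rank as index
)
```

Each step returns a new DataFrame, so `df_sample` still has its URL column afterwards, which helps when a cell is re-run in a notebook.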

4. Map visualization w/ Folium

import folium

import pandas as pd

import numpy as np

import googlemaps

from tqdm import tqdm

gmaps_key = "YOUR_API_KEY"

gmaps = googlemaps.Client(key = gmaps_key)

- connect to the Google API in a for loop and fetch the coordinates

lat = []

lng = []

for idx, row in tqdm(df.iterrows()):

    if row["Address"] != "Multiple location" :

        target_name = row["Address"] + ", " + "Chicago"

        # print(target_name)

        gmaps_output = gmaps.geocode(target_name)

        # print(gmaps_output[0])

        location_output = gmaps_output[0].get("geometry")

        # print(location_output)

        lat_temp = location_output["location"]["lat"]

        lng_temp = location_output["location"]["lng"]

        lat.append(lat_temp)

        lng.append(lng_temp)

    else :

        lat.append(np.nan)

        lng.append(np.nan)

- add to the DataFrame

df["lat"] = lat

df["lng"] = lng
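The loop reads `gmaps_output[0]` directly, which fails with an `IndexError` if the geocoder finds nothing. Below is a minimal sketch of a safer extraction step. `extract_lat_lng` is a hypothetical helper, and `fake_results` is a hand-written stand-in that copies only the nested keys used in these notes, on the assumption that an empty list comes back when there is no match.

```python
import math


def extract_lat_lng(geocode_results):
    """Return (lat, lng) from a geocode-style result list, or (nan, nan) if it is empty."""
    if not geocode_results:
        return (math.nan, math.nan)
    location = geocode_results[0]["geometry"]["location"]
    return (location["lat"], location["lng"])


# Assumption: a made-up stand-in for what gmaps.geocode(...) returns.
fake_results = [{"geometry": {"location": {"lat": 41.896113, "lng": -87.677857}}}]

found = extract_lat_lng(fake_results)
missing = extract_lat_lng([])
```

Inside the loop this could be used as `lat_temp, lng_temp = extract_lat_lng(gmaps.geocode(target_name))`.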

- folium visualization

mapping = folium.Map(location = [41.896113,-87.677857],zoom_start=11)

for idx, row in df.iterrows():

    if row["Address"] != "Multiple location" :

        folium.Marker(

            location=[row["lat"],row["lng"]],

            # the DataFrame has a "Store" column, not "Cafe"
            popup=row["Store"],

            tooltip=row["Menu"],

            icon=folium.Icon(

                icon="store",

                prefix="fa"

            )

        ).add_to(mapping)

mapping
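Rows without coordinates can also be filtered out before drawing markers, instead of checking the address text. This is a sketch on made-up rows (all values are assumptions) that only prepares the marker data and does not call folium itself.

```python
import pandas as pd

# Assumption: two invented rows; the second has no coordinates.
df_sample = pd.DataFrame({
    "Store": ["Store A", "Store B"],
    "Menu": ["Menu A", "Menu B"],
    "Address": ["1 Sample St", "Multiple location"],
    "lat": [41.896113, float("nan")],
    "lng": [-87.677857, float("nan")],
})

# Keep only rows where both coordinates are present
plottable = df_sample.dropna(subset=["lat", "lng"])

# (lat, lng, store) tuples that a marker loop could consume
marker_points = [
    (row["lat"], row["lng"], row["Store"]) for _, row in plottable.iterrows()
]
```

Filtering on the coordinates also covers rows where geocoding failed for other reasons, not just the "Multiple location" entries.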