논문 제목: On Neural Network Equivalence Checking using SMT Solvers

📕 Summary

Abstract

Equivalence checking of neural networks is important for replacing learning-enabled components with equivalent ones, and this paper presents an SMT-based encoding of the problem, exploring its utility and limitations.

The paper also proposes avenues for future research and improvements towards more scalable and practically applicable solutions for general and application-independent equivalence checking of diverse types of neural network models

Introduction

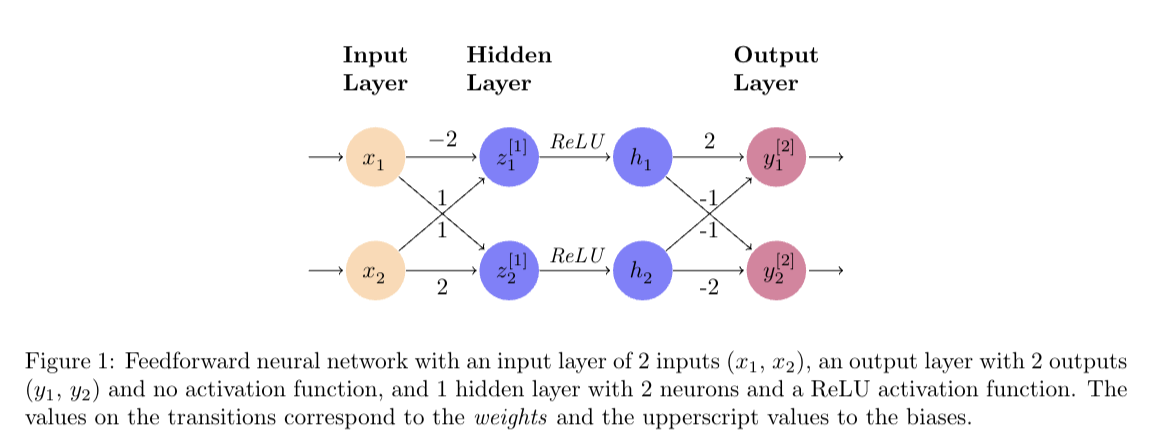

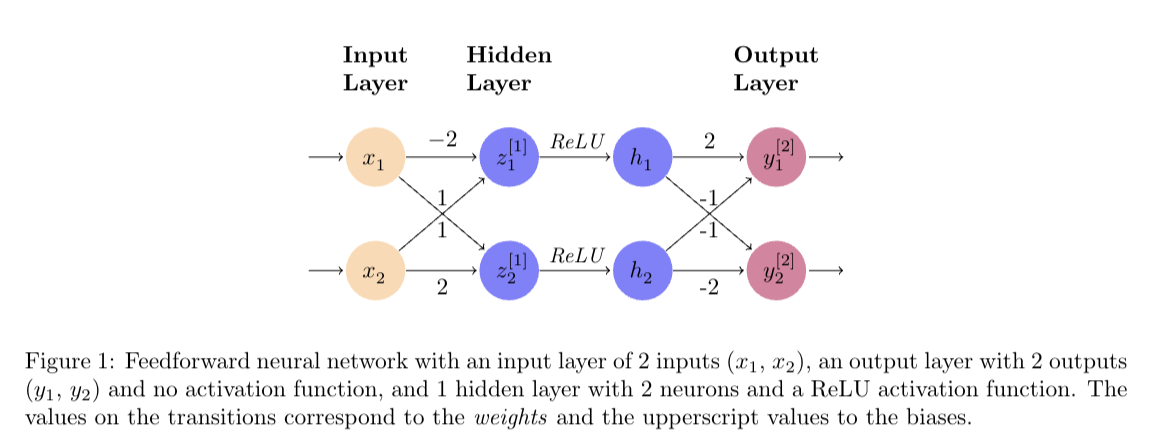

image 설명

간단한 피드포워드 신경망을 나타내며, 입력층은 2개의 입력((x_1, x_2))을, 숨겨진 층은 ReLU (Rectified Linear Unit, 정류된 선형 단위) 활성화 함수를 사용하는 2개의 뉴런을, 출력층은 2개의 출력((y1^2, y2^2))을 가집니다. 화살표에는 가중치, 뉴런 옆 상자에는 편향 값이 명시되어 있습니다. 이 다이어그램의 목적은 일반적으로 신경망의 구조와 입력이 네트워크의 층을 통해 출력으로 어떻게 변환되는지를 설명하기 위한 것입니다.]

The problem of equivalence checking in neural networks involves determining if two pretrained networks yield similar outputs for the same inputs, which is important for tasks like knowledge distillation and regularization. SMT solvers are proposed as a potential solution, offering sound and complete verification procedures for network equivalence, but with limitations in scalability for large networks like those in the MNIST dataset. The paper aims to address this problem by presenting an SMT-based encoding for equivalence checking, exploring its utility and limitations, and providing experimental results for diverse neural network models and equivalence criteria.

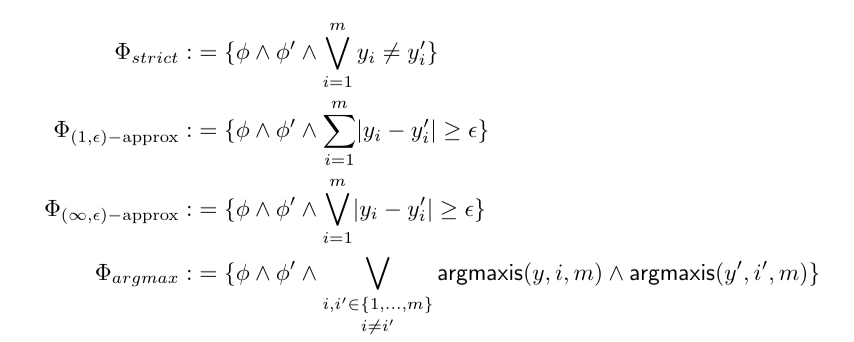

식 설명

Φstrict: 이것은 두 네트워크의 출력이 모든 입력에 대해 정확히 동일해야 하는 엄격한 동등성을 나타냅니다.

Φ(1,ϵ) - approx, Φ(lim, ϵ) - approx: 각각 L1 norm, L2 norm에 따른 ϵ-근사 동등성을 나타냅니다. 두 네트워크의 출력이 ϵ 오차 범위 내에 있을 경우 근사적으로 동등하다고 간주합니다.

Φargmax: 이것은 분류 작업에서 가장 높은 확률을 가진 출력을 네트워크의 예측으로 간주하는 argmax 함수를 기반으로 한 동등성의 형태를 나타냅니다. 두 네트워크가 그들의 출력의 argmax에 동의하는 경우, 이 기준에 따라 동등하다고 간주됩니다.

📕 Solution

Method

The paper proposes an SMT-based encoding of the equivalence checking problem for neural networks .

The authors explore the utility and limitations of this approach and present experimental results for diverse types of neural network models and equivalence criteria

The scalability bounds of the SMT-based encoding are studied with respect to the neural network model parameters and the number of derived SMT variables

The paper conducts experiments to sanity-check the prototype implementation and perform an empirical scalability study on the computational demands required for equivalence checking of neural networks of increasing complexity

Equivalence checking is performed on neural networks with different architectures to test the efficiency of the proposed methodology and identify computation time limitations

Note: The provided sources do not provide detailed information on the specific techniques or algorithms used in the SMT-based encoding.

📕 Conclusion

The paper formally defines the equivalence checking problem for neural networks and introduces various equivalence criteria for specific applications and verification requirements .

The authors propose an SMT-based encoding for neural network equivalence checking and provide experimental results to demonstrate its feasibility and scalability limitations .

Future research plans include exploring the integration of the equivalence checking problem into state-of-the-art verification tools for neural networks and investigating alternative solution encodings for improved scalability .

The paper suggests exploring the practical effectiveness of technical solutions from other fields, such as the equivalence checking of digital circuits, and considering layer-by-layer checking of equivalence between neural networks

Experimental results show the results of equivalence checking for different types of neural network models, including classifiers and regression networks, towards a general and application-independent approach