Implementing Linear Regression

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

tf.random.set_seed(777)

Synthetic dataset

W_true = 3.0

B_true = 2.0

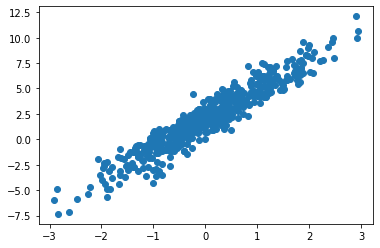

X = tf.random.normal((500, 1))

noise = tf.random.normal((500, 1))

y = X * W_true + B_true + noiseplt.scatter(X, y)

plt.show()

w = tf.Variable(5.)

b = tf.Variable(0.)

# learning rate
lr = 0.03

w_records = []

b_records = []

loss_records = []

for epoch in range(100):

# 매 epoch마다 한 번씩 학습을 할 거다.

with tf.GradientTape() as tape:

y_hat = X * w + b

loss = tf.reduce_mean(tf.square(y - y_hat))

w_records.append(w.numpy())

b_records.append(b.numpy())

loss_records.append(loss.numpy())

dw, db = tape.gradient(loss, [w, b])

w.assign_sub(lr * dw)

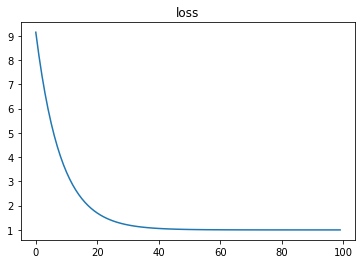

b.assign_sub(lr * db)plt.plot(loss_records)

plt.title('loss')

plt.show()

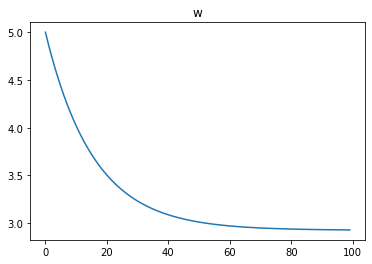

plt.plot(w_records)

plt.title('w')

plt.show()

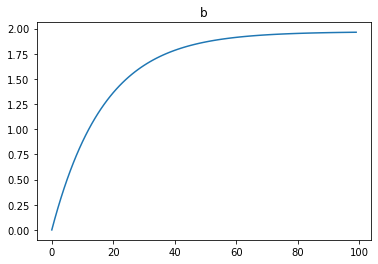

plt.plot(b_records)

plt.title('b')

plt.show()
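For reference, the gradients that `tape.gradient(loss, [w, b])` returns in the loop above have a closed form. With $\hat{y} = wx + b$ and $L = \frac{1}{n}\sum_i (y_i - \hat{y}_i)^2$:

$$\frac{\partial L}{\partial w} = -\frac{2}{n}\sum_i x_i (y_i - \hat{y}_i), \qquad \frac{\partial L}{\partial b} = -\frac{2}{n}\sum_i (y_i - \hat{y}_i)$$

The minimal NumPy sketch below repeats the same experiment (W_true = 3, B_true = 2, w starting at 5, b at 0, lr = 0.03, 100 epochs) with these hand-derived gradients instead of `GradientTape`. The helper name `fit_line`, the variable names and the NumPy seed are assumptions made for illustration, so the exact numbers will not match the TensorFlow run.

```python
import numpy as np

def fit_line(x, y, w=5.0, b=0.0, lr=0.03, epochs=100):
    """Full-batch gradient descent for y ~ w * x + b using hand-derived MSE gradients."""
    n = len(x)
    loss_records = []
    for _ in range(epochs):
        y_hat = x * w + b
        residual = y - y_hat
        loss_records.append(np.mean(residual ** 2))  # loss before this step's update
        # dL/dw and dL/db for L = mean((y - (w * x + b)) ** 2)
        dw = -2.0 / n * np.sum(x * residual)
        db = -2.0 / n * np.sum(residual)
        w -= lr * dw
        b -= lr * db
    return w, b, loss_records

rng = np.random.default_rng(777)  # assumed NumPy seed; not the TF seed used above
x_np = rng.normal(size=500)
y_np = 3.0 * x_np + 2.0 + rng.normal(size=500)  # same W_true, B_true and unit noise

w_fit, b_fit, losses = fit_line(x_np, y_np)
print(w_fit, b_fit, losses[-1])
```

Since both loops apply the same update rule, w should approach 3 and b should approach 2 within the 100 epochs in either version.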

Dataset: Predicting Diabetes Progression

from sklearn.datasets import load_diabetes

import pandas as pd

diabetes = load_diabetes()

df = pd.DataFrame(diabetes.data, columns=diabetes.feature_names, dtype=np.float32)

df['const'] = np.ones(df.shape[0])

df.tail(3)

| | age | sex | bmi | bp | s1 | s2 | s3 | s4 | s5 | s6 | const |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 439 | 0.041708 | 0.050680 | -0.015906 | 0.017293 | -0.037344 | -0.013840 | -0.024993 | -0.011080 | -0.046883 | 0.015491 | 1.0 |
| 440 | -0.045472 | -0.044642 | 0.039062 | 0.001215 | 0.016318 | 0.015283 | -0.028674 | 0.026560 | 0.044529 | -0.025930 | 1.0 |
| 441 | -0.045472 | -0.044642 | -0.073030 | -0.081413 | 0.083740 | 0.027809 | 0.173816 | -0.039493 | -0.004222 | 0.003064 | 1.0 |
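One detail in the cell above matters later: `np.ones(df.shape[0])` returns a float64 array, so `const` becomes a float64 column next to float32 feature columns. When pandas turns a mixed-dtype frame into a single array, it upcasts to the common type, float64, which is consistent with `X` ending up as a double tensor in the dtype error further down. The small sketch below uses a made-up two-row frame (the hypothetical names `toy`, `toy_a`, `toy_b`) to show the upcast and two ways to keep everything float32.

```python
import numpy as np
import pandas as pd

# Toy frame mimicking the setup above: float32 features plus a default ones column
toy = pd.DataFrame({"age": np.array([0.04, -0.05], dtype=np.float32)})
toy["const"] = np.ones(toy.shape[0])          # np.ones defaults to float64

print(toy.dtypes)                # age float32, const float64
print(toy.to_numpy().dtype)      # float64: the common type of both columns

# Option 1: create the constant column as float32 from the start
toy_a = pd.DataFrame({"age": np.array([0.04, -0.05], dtype=np.float32)})
toy_a["const"] = np.ones(toy_a.shape[0], dtype=np.float32)

# Option 2: cast the whole frame afterwards
toy_b = toy.astype(np.float32)

print(toy_a.to_numpy().dtype, toy_b.to_numpy().dtype)  # float32 float32
```

With either option the design matrix stays float32 and matches float32 weights; keeping `X` as float64 and creating the weights as float64 instead also works.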

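First, let $X$ be the features, $w$ the weight vector, and $y$ the target, so that $y \approx Xw$.

Assuming that $X^T X$ is invertible, let's compute the estimate $\hat{w}$ of $w$ with the normal equation:

$$\hat{w} = (X^T X)^{-1} X^T y$$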
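The normal equation $\hat{w} = (X^T X)^{-1} X^T y$ can be cross-checked without TensorFlow. The NumPy sketch below builds a small synthetic design matrix with a trailing constant column (the sizes, seed, true weights and the names `X_toy`, `y_toy` are made up for illustration) and evaluates the formula three ways: literally with `np.linalg.inv`, by solving the linear system $X^T X \hat{w} = X^T y$ with `np.linalg.solve` (the usual, numerically safer way to evaluate the same formula), and with the least-squares solver `np.linalg.lstsq`. When $X^T X$ is invertible, all three should agree.

```python
import numpy as np

rng = np.random.default_rng(0)  # illustrative seed
n, p = 200, 3
X_toy = np.hstack([rng.normal(size=(n, p)), np.ones((n, 1))])  # last column plays the role of 'const'
w_true = np.array([[1.5], [-2.0], [0.5], [4.0]])                 # made-up weights; the last one is the intercept
y_toy = X_toy @ w_true + 0.1 * rng.normal(size=(n, 1))

# Literal translation of w_hat = (X^T X)^{-1} X^T y
w_inv = np.linalg.inv(X_toy.T @ X_toy) @ X_toy.T @ y_toy

# Same estimate, obtained by solving (X^T X) w = X^T y instead of inverting X^T X
w_solve = np.linalg.solve(X_toy.T @ X_toy, X_toy.T @ y_toy)

# Least-squares solver that works on X directly and never forms X^T X
w_lstsq, *_ = np.linalg.lstsq(X_toy, y_toy, rcond=None)

print(np.round(w_solve.ravel(), 2))  # close to w_true
```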

X = df

y = np.expand_dims(diabetes.target, axis=1)

XT = tf.transpose(X)

w = tf.matmul(tf.matmul(tf.linalg.inv(tf.matmul(XT, X)), XT), y)

y_pred = tf.matmul(X, w)

print('Predicted progression:', y_pred[0].numpy(), 'Actual progression:', y[0])

print('Predicted progression:', y_pred[19].numpy(), 'Actual progression:', y[19])

print('Predicted progression:', y_pred[31].numpy(), 'Actual progression:', y[31])

Predicted progression: [206.11667747] Actual progression: [151.]

Predicted progression: [124.01754101] Actual progression: [168.]

Predicted progression: [69.47575835] Actual progression: [59.]

This time, try implementing it with gradient descent!

- Conditions
    - Steepest gradient descent (using the full dataset at every step)
    - Initialize the weights with random numbers drawn from a Gaussian (normal) distribution.
    - Step size == 0.03
    - 100 iterations
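Written out, one iteration of full-batch (steepest) gradient descent on the MSE loss $L(w) = \frac{1}{n}\lVert y - Xw \rVert^2$ uses the gradient $\nabla L(w) = -\frac{2}{n} X^T (y - Xw)$, which is what `tape.gradient(loss, w)` computes in the loop below. The minimal NumPy sketch follows the listed conditions (Gaussian initialization, step size 0.03, 100 iterations) on a synthetic problem rather than the diabetes data; the helper names `mse`, `mse_grad`, `gd_full_batch`, the sizes, seeds and true weights are all assumptions. It also checks the analytic gradient against central finite differences.

```python
import numpy as np

def mse(X, y, w):
    # L(w) = mean((y - Xw)^2)
    return np.mean((y - X @ w) ** 2)

def mse_grad(X, y, w):
    # Analytic gradient: -(2/n) X^T (y - Xw)
    n = X.shape[0]
    return -2.0 / n * X.T @ (y - X @ w)

def gd_full_batch(X, y, lr=0.03, num_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(X.shape[1], 1))  # Gaussian (standard normal) initialization
    for _ in range(num_iter):
        w = w - lr * mse_grad(X, y, w)    # each step uses every row of X
    return w

rng = np.random.default_rng(1)  # illustrative seed
X_toy = np.hstack([rng.normal(size=(300, 2)), np.ones((300, 1))])
w_true = np.array([[2.0], [-1.0], [3.0]])  # made-up weights; the last one is the intercept
y_toy = X_toy @ w_true + 0.1 * rng.normal(size=(300, 1))

# Central finite differences should agree with the analytic gradient
w0 = rng.normal(size=(3, 1))
eps = 1e-6
numeric = np.zeros_like(w0)
for j in range(w0.shape[0]):
    step = np.zeros_like(w0)
    step[j] = eps
    numeric[j] = (mse(X_toy, y_toy, w0 + step) - mse(X_toy, y_toy, w0 - step)) / (2 * eps)
max_err = np.max(np.abs(numeric - mse_grad(X_toy, y_toy, w0)))

w_gd = gd_full_batch(X_toy, y_toy)
print(max_err, w_gd.ravel())
```

Here the feature columns have roughly unit variance, so 100 steps at 0.03 are enough to land close to `w_true`; how fast this converges depends on the scale of the columns of X.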

lr = 0.03

num_iter = 100

w_init = tf.random.normal((X.shape[-1], 1))

w = tf.Variable(w_init)

w

<tf.Variable 'Variable:0' shape=(11, 1) dtype=float32, numpy=

array([[-0.19601157],

[ 0.21892233],

[-0.69331926],

[-0.7571666 ],

[ 0.62700933],

[ 0.7786061 ],

[-1.2000623 ],

[-0.20798121],

[-2.2410874 ],

[-0.48892695],

[-1.3168424 ]], dtype=float32)>

for i in range(num_iter):
    with tf.GradientTape() as tape:
        y_hat = tf.matmul(X, w)
        loss = tf.reduce_mean((y - y_hat)**2)
    dw = tape.gradient(loss, w)
    w.assign_sub(lr * dw)

---------------------------------------------------------------------------

InvalidArgumentError Traceback (most recent call last)

<ipython-input-64-7730a3dc1e13> in <module>

1 for i in range(num_iter):

2 with tf.GradientTape() as tape:

----> 3 y_hat = tf.matmul(X, w)

4 loss = tf.reduce_mean((y - y_hat)**2)

5

c:\Users\theo\miniconda3\envs\ds_study\lib\site-packages\tensorflow\python\util\traceback_utils.py in error_handler(*args, **kwargs)

151 except Exception as e:

152 filtered_tb = _process_traceback_frames(e.__traceback__)

--> 153 raise e.with_traceback(filtered_tb) from None

154 finally:

155 del filtered_tb

c:\Users\theo\miniconda3\envs\ds_study\lib\site-packages\tensorflow\python\framework\ops.py in raise_from_not_ok_status(e, name)

7105 def raise_from_not_ok_status(e, name):

7106 e.message += (" name: " + name if name is not None else "")

-> 7107 raise core._status_to_exception(e) from None # pylint: disable=protected-access

7108

7109

InvalidArgumentError: cannot compute MatMul as input #1(zero-based) was expected to be a double tensor but is a float tensor [Op:MatMul]

X.dtypes

age float32

sex float32

bmi float32

bp float32

s1 float32

s2 float32

s3 float32

s4 float32

s5 float32

s6 float32

const float64

dtype: object

# The error comes from a dtype mismatch: X is float64 (because of the float64 'const' column), so create w as float64 as well

w_init = tf.random.normal((X.shape[-1], 1), dtype=tf.float64)

w = tf.Variable(w_init)

for i in range(num_iter):
    with tf.GradientTape() as tape:
        y_hat = tf.matmul(X, w)
        loss = tf.reduce_mean((y - y_hat)**2)
    dw = tape.gradient(loss, w)
    w.assign_sub(lr * dw)

print('Predicted progression:', y_hat[0].numpy(), 'Actual progression:', y[0])

print('Predicted progression:', y_hat[19].numpy(), 'Actual progression:', y[19])

print('Predicted progression:', y_hat[31].numpy(), 'Actual progression:', y[31])

Predicted progression: [152.9045582] Actual progression: [151.]

Predicted progression: [149.8566597] Actual progression: [168.]

Predicted progression: [147.85657442] Actual progression: [59.]
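The gradient-descent predictions above all sit near 150 (152.9, 149.9, 147.9), while the normal-equation predictions were spread out. One plausible explanation, not verified by the run itself: according to the scikit-learn documentation, the `load_diabetes` features are mean-centered and scaled so that each column's sum of squares is 1. With n = 442 rows, the diagonal of the loss curvature is then n times smaller for each feature weight than for the `const` weight, so at lr = 0.03 the `const` weight converges to about the mean target within 100 steps while the feature weights hardly move. The NumPy sketch below tests that idea on synthetic data; the sizes, seed, weights, the helper `run_gd` and the other names are assumptions, and it does not reproduce the diabetes numbers. It compares 100 gradient-descent steps with least squares, once with unit-norm feature columns and once after standardizing them to unit variance.

```python
import numpy as np

def run_gd(X, y, lr=0.03, num_iter=100, seed=0):
    # Full-batch gradient descent on mean((y - Xw)^2) with Gaussian initialization
    rng = np.random.default_rng(seed)
    w = rng.normal(size=(X.shape[1], 1))
    n = X.shape[0]
    for _ in range(num_iter):
        w = w + lr * (2.0 / n) * (X.T @ (y - X @ w))  # w - lr * gradient
    return w

rng = np.random.default_rng(42)  # illustrative seed
n, p = 442, 3
raw = rng.normal(size=(n, p))
raw -= raw.mean(axis=0)                        # center each feature column
w_feat = np.array([[30.0], [-20.0], [10.0]])   # made-up feature effects
y_demo = raw @ w_feat + 150.0 + 5.0 * rng.normal(size=(n, 1))

# Diabetes-style scaling: each centered column has a sum of squares of 1
X_unit_norm = np.hstack([raw / np.linalg.norm(raw, axis=0), np.ones((n, 1))])
# Standardized features: each centered column has variance 1
X_std = np.hstack([raw / raw.std(axis=0), np.ones((n, 1))])

results = {}
for name, X_demo in [("unit norm", X_unit_norm), ("standardized", X_std)]:
    w_gd = run_gd(X_demo, y_demo)
    w_ls, *_ = np.linalg.lstsq(X_demo, y_demo, rcond=None)
    rmse_gd = np.sqrt(np.mean((y_demo - X_demo @ w_gd) ** 2))
    rmse_ls = np.sqrt(np.mean((y_demo - X_demo @ w_ls) ** 2))
    results[name] = (rmse_gd, rmse_ls)
    print(f"{name}: GD RMSE {rmse_gd:.1f}, least-squares RMSE {rmse_ls:.1f}")
```

With unit-norm columns the gradient-descent RMSE should stay far above the least-squares RMSE, because the prediction is essentially just the intercept; after standardizing, the two should nearly coincide. Standardizing the features is one common remedy; running far more iterations would also close the gap, only slowly.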