Logistic Regression

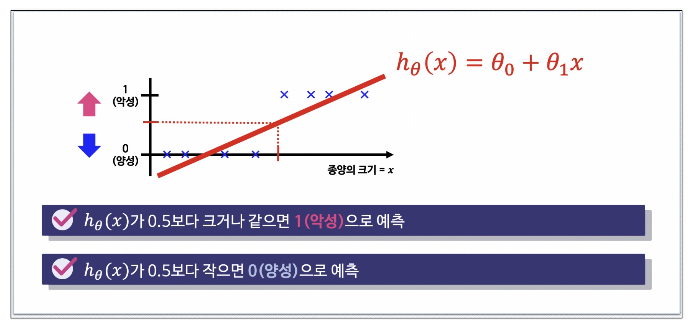

Detecting malignant tumors: is this a classification problem or a regression problem?

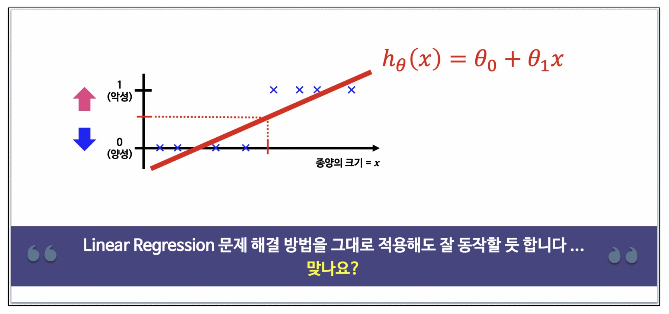

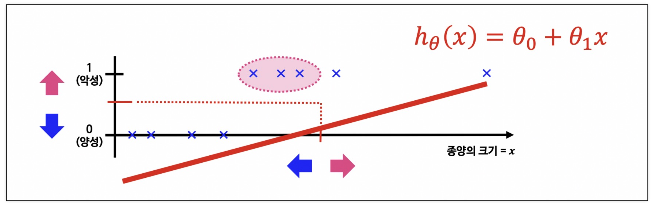

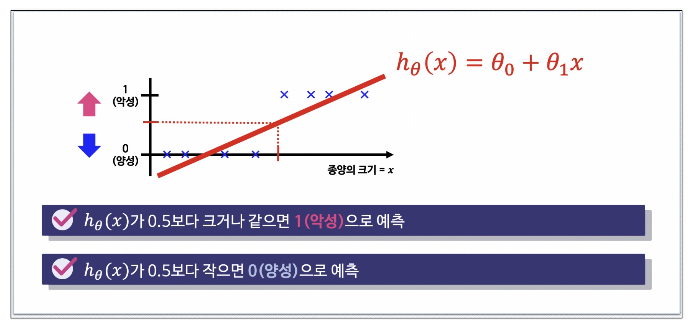

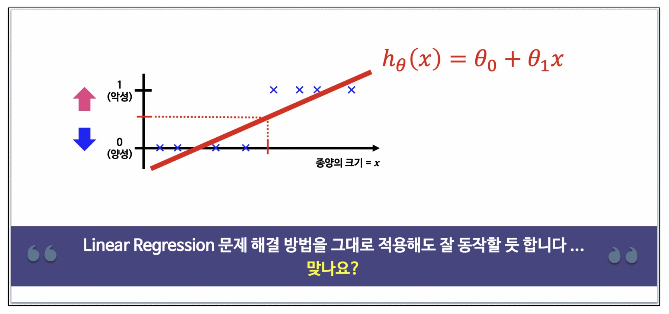

Can Linear Regression be applied to a classification problem?

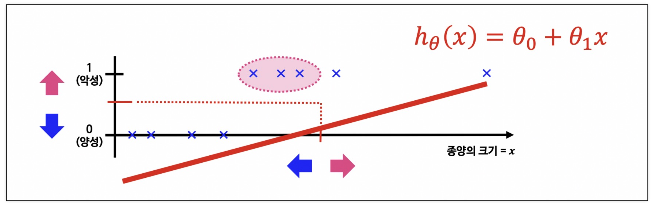

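Linear Regression does not look well suited to classification: its output is not bounded to [0, 1], and a few extreme samples can shift the fitted line and therefore the decision threshold.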

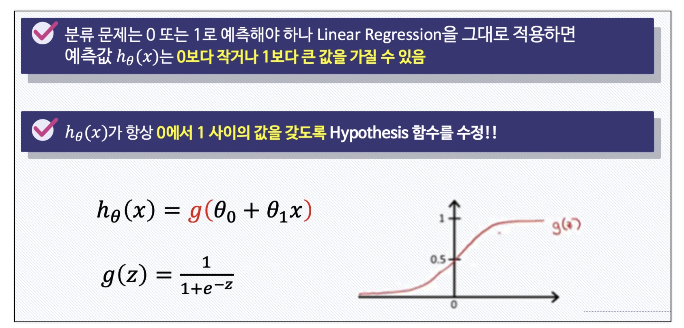

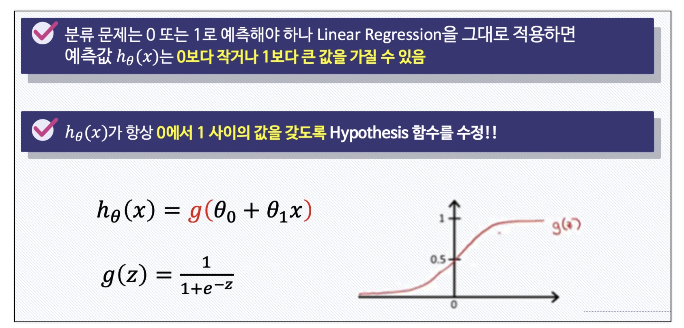
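To make the difficulty concrete, here is a minimal sketch that fits a straight line to 0/1 labels and reads off the point where the line crosses 0.5. The tumor sizes, the labels, and the helper name `threshold_x` are all made up for illustration and do not come from the images above.

```python
import numpy as np

# Hypothetical 1-D data: tumor size -> label (0 = benign, 1 = malignant)
x = np.array([1., 2., 3., 4., 5., 6., 7., 8.])
y = np.array([0., 0., 0., 0., 1., 1., 1., 1.])

def threshold_x(x, y):
    """Least-squares fit y = a*x + b; return the x where the line equals 0.5."""
    a, b = np.polyfit(x, y, 1)
    return (0.5 - b) / a

t_before = threshold_x(x, y)

# Add one extreme (but correctly labeled) malignant sample
x_out = np.append(x, 40.)
y_out = np.append(y, 1.)
t_after = threshold_x(x_out, y_out)

print('threshold without outlier:', t_before)
print('threshold with outlier   :', t_after)

# The fitted line also leaves the [0, 1] range at the edges of the data
a, b = np.polyfit(x, y, 1)
print('line value at x=1:', a * 1 + b)
```

With the extra sample the 0.5 crossing moves past x = 5, so a sample that was classified correctly before is now on the wrong side, even though the new point carried no contradictory information.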

Redefining the model

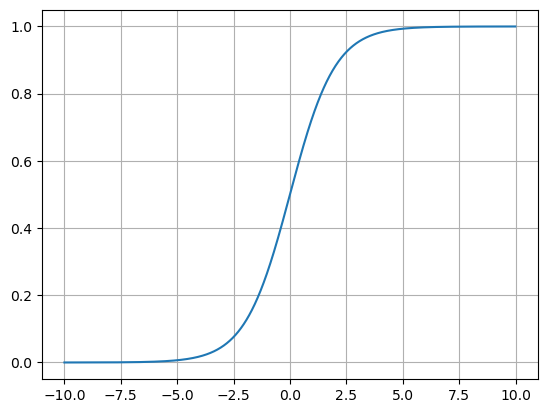

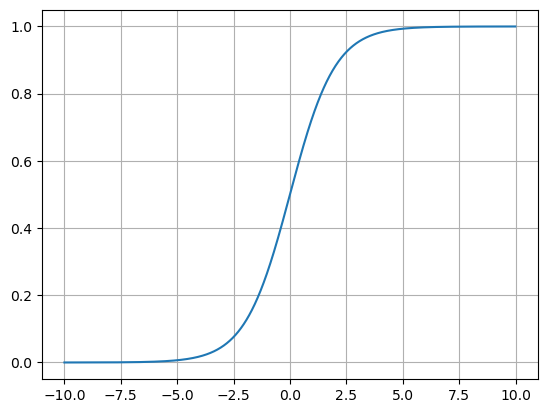

Logistic Function

import numpy as np

z = np.arange(-10, 10, 0.01)

g = 1 / (1+np.exp(-z))

import matplotlib.pyplot as plt

plt.plot(z,g)

plt.grid()

plt.show()

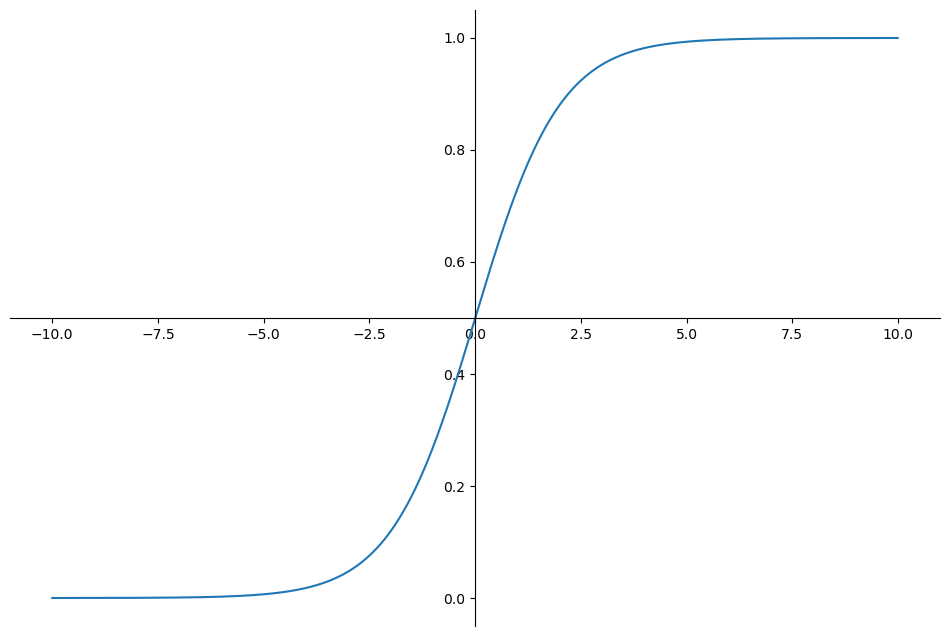

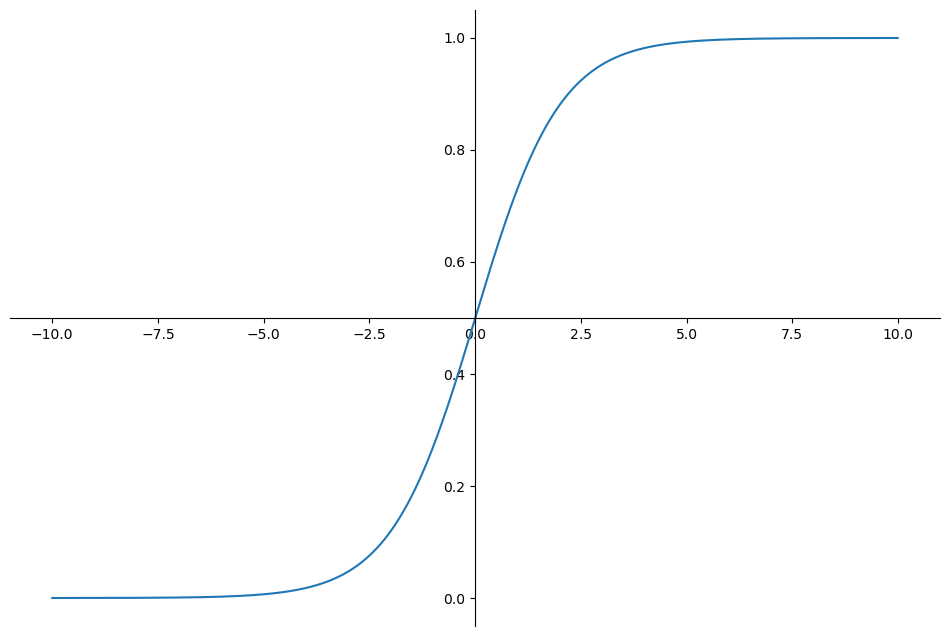

A more detailed plot

plt.figure(figsize=(12,8))

ax = plt.gca()

ax.plot(z,g)

ax.spines['left'].set_position('zero')

ax.spines['bottom'].set_position('center')

ax.spines['right'].set_color('none')

ax.spines['top'].set_color('none')

plt.show()

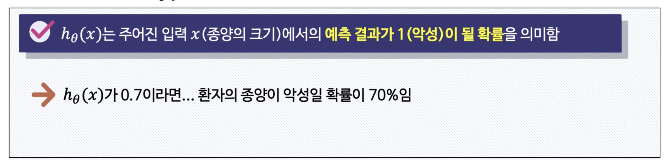

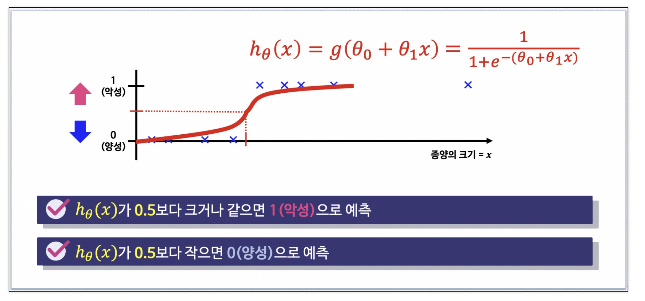

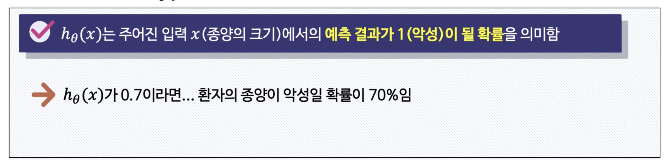

Classification based on the output of the Hypothesis function

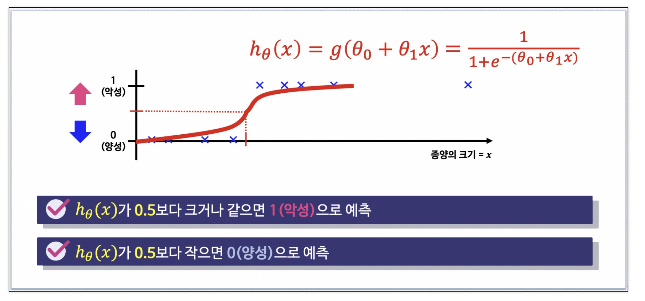

Hypothesis for classification problems

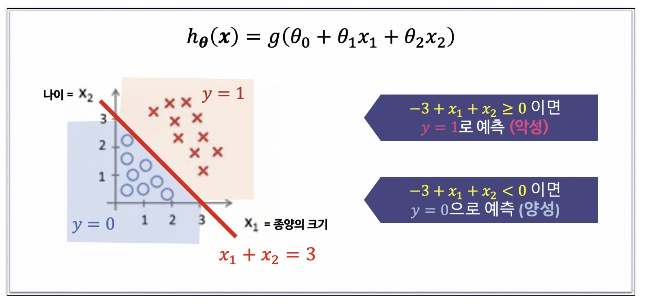

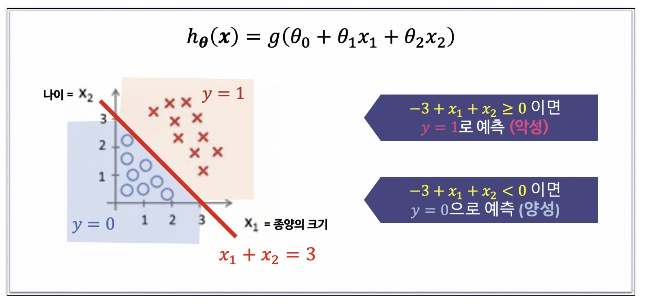
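As a rough sketch of the idea, the hypothesis can be written as the logistic function applied to a linear combination of the inputs, with 0.5 as the cut-off between the two classes. The parameter vector `theta`, the sample matrix `X`, and the function names are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def hypothesis(theta, X):
    """h_theta(x) = g(theta^T x). X is expected to have a leading column of ones."""
    return sigmoid(X @ theta)

def predict(theta, X, threshold=0.5):
    """Return 1 where the estimated probability reaches the threshold, else 0."""
    return (hypothesis(theta, X) >= threshold).astype(int)

# Hypothetical parameters and samples (first column is the bias term)
theta = np.array([-3.0, 1.0, 1.0])
X = np.array([
    [1.0, 1.0, 1.0],   # theta^T x = -1
    [1.0, 2.0, 2.0],   # theta^T x =  1
    [1.0, 1.5, 1.5],   # theta^T x =  0
])

probs = hypothesis(theta, X)
labels = predict(theta, X)
print(probs)
print(labels)
```

The output of `hypothesis` can be read as the estimated probability that y = 1 for each row, which is why thresholding it at 0.5 is the same as checking the sign of theta^T x.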

Decision Boundary

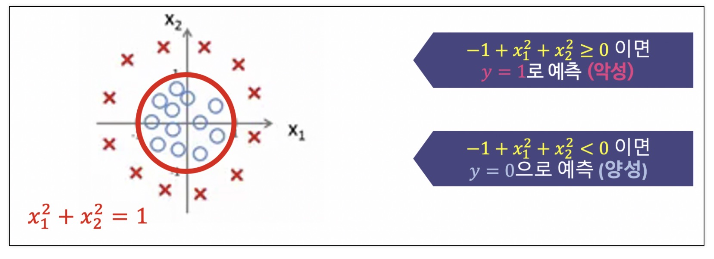

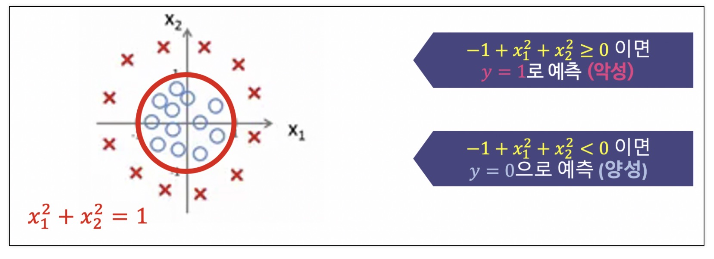

Decision Boundary 2

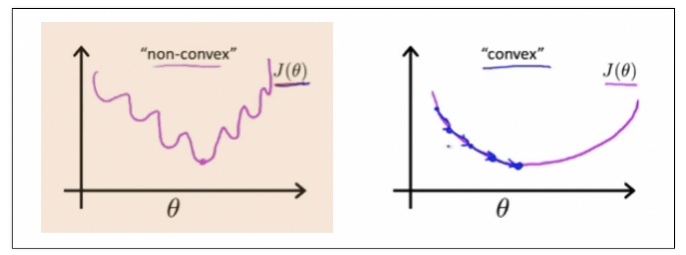

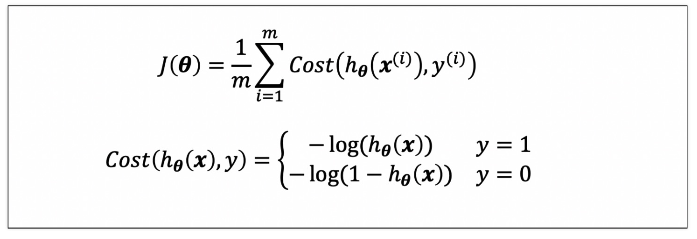

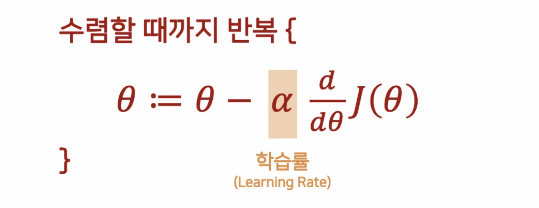

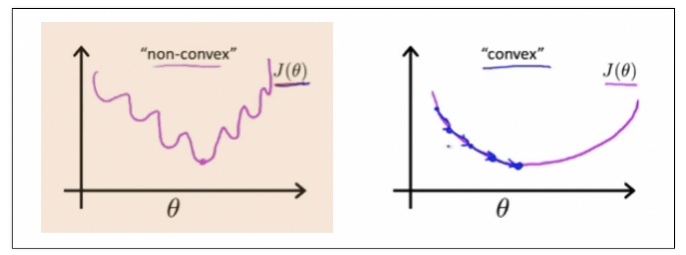

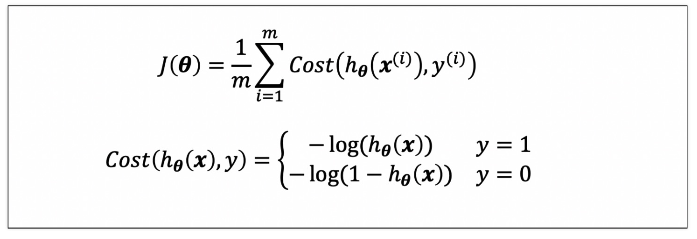

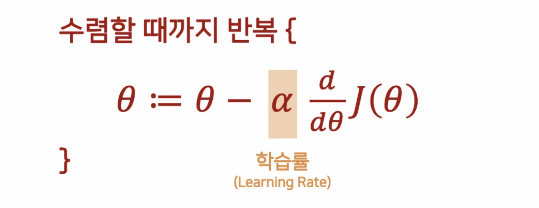
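A decision boundary does not have to be a straight line. The sketch below assumes a hypothesis built on squared features, h(x) = g(theta0 + theta1*x1^2 + theta2*x2^2), with made-up parameters that place the boundary on the unit circle. Whether this matches the exact example in the images above is an assumption.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# Hypothetical parameters: the boundary is x1^2 + x2^2 = 1
theta = np.array([-1.0, 1.0, 1.0])

def predict(x1, x2):
    """Predict 1 outside (or on) the circle, 0 inside it."""
    z = theta[0] + theta[1] * x1**2 + theta[2] * x2**2
    return int(sigmoid(z) >= 0.5)

inside = predict(0.5, 0.5)    # z = -0.5
outside = predict(1.0, 1.0)   # z =  1.0
on_edge = predict(1.0, 0.0)   # z =  0.0
print(inside, outside, on_edge)
```

The boundary is a property of the parameters and the chosen features, not of the training data itself; the data only determines which parameters get learned.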

Cost Function

Logistic Regression redefines the Cost Function

The learning algorithm is unchanged

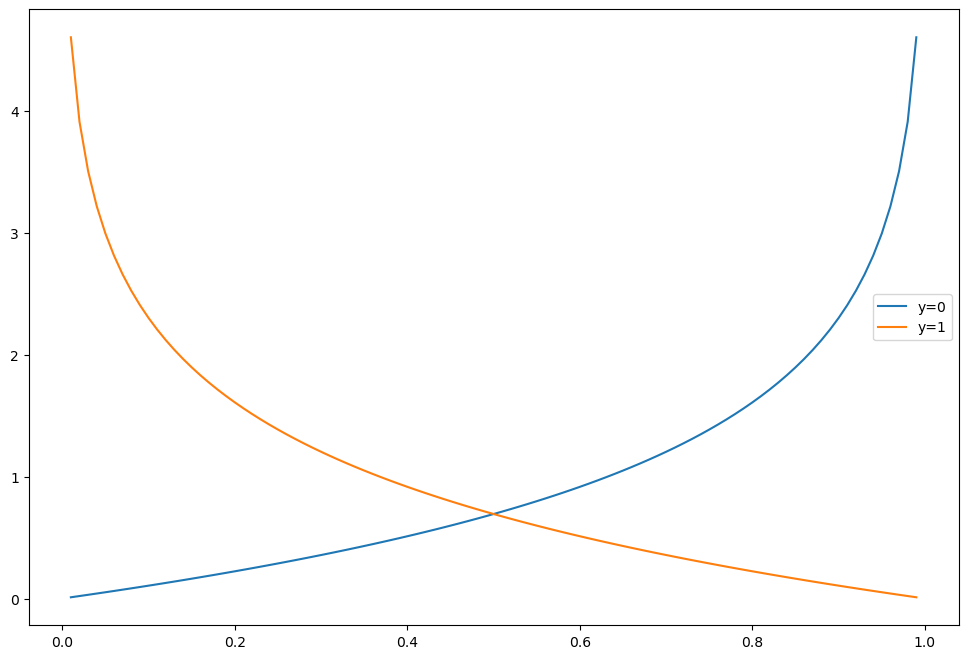

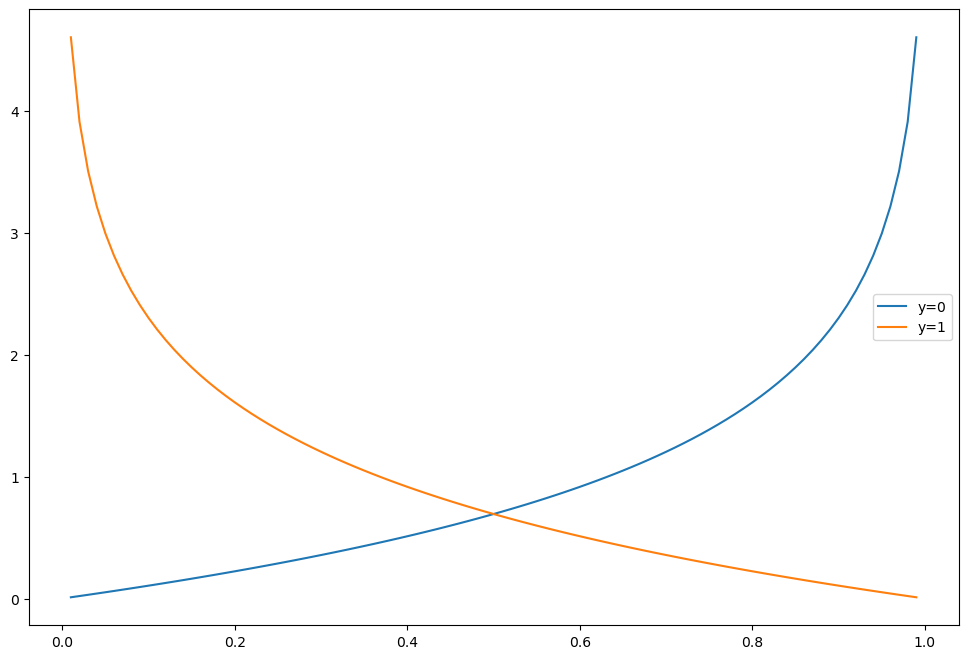
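For reference, the commonly used form of this cost function is written out here. It is assumed to match the images above; the symbols m (number of samples), theta, and h_theta follow the usual notation rather than anything defined in these notes. The two cases correspond to `C1 = -np.log(h)` and `C0 = -np.log(1-h)` in the plotting code of these notes.

```latex
\mathrm{Cost}\big(h_\theta(x), y\big) =
\begin{cases}
-\log\big(h_\theta(x)\big)     & \text{if } y = 1 \\
-\log\big(1 - h_\theta(x)\big) & \text{if } y = 0
\end{cases}

J(\theta) = -\frac{1}{m} \sum_{i=1}^{m}
\Big[ y^{(i)} \log h_\theta\big(x^{(i)}\big)
    + \big(1 - y^{(i)}\big) \log\big(1 - h_\theta\big(x^{(i)}\big)\big) \Big]
```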

Graph of the Logistic Regression Cost Function

h = np.arange(0.01, 1, 0.01)

C0 = -np.log(1-h)

C1 = -np.log(h)

plt.figure(figsize=(12,8))

plt.plot(h, C0, label='y=0')

plt.plot(h, C1, label='y=1')

plt.legend()

plt.show()

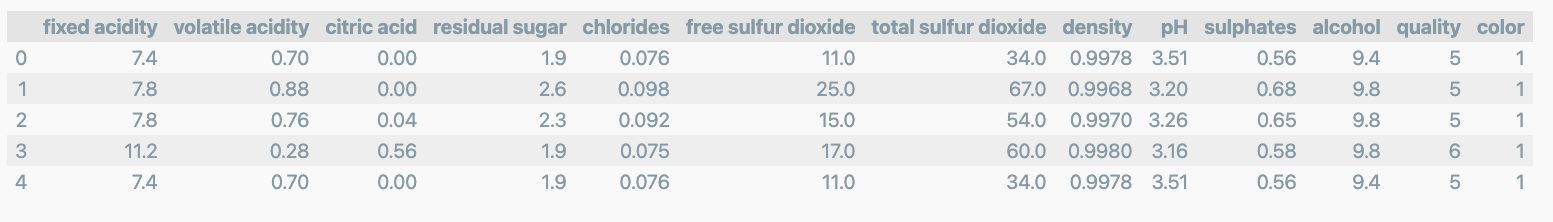

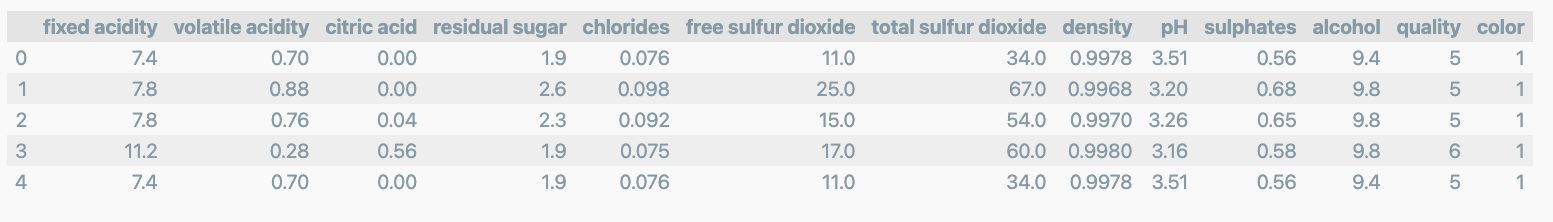
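The notes say the learning algorithm stays the same. Assuming that algorithm is batch gradient descent, a minimal sketch of it with the logistic cost could look like the following. The toy data, the learning rate `alpha`, the iteration count, and the centering of the feature are all illustrative choices, not values from the notes.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

def cost(theta, X, y, eps=1e-12):
    """Mean logistic cost; eps guards against log(0)."""
    h = sigmoid(X @ theta)
    return -np.mean(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps))

def gradient_descent(X, y, alpha=0.1, n_iter=1000):
    """Batch gradient descent: theta <- theta - alpha * X^T (h - y) / m."""
    theta = np.zeros(X.shape[1])
    history = []
    for _ in range(n_iter):
        h = sigmoid(X @ theta)
        grad = X.T @ (h - y) / len(y)
        theta -= alpha * grad
        history.append(cost(theta, X, y))
    return theta, history

# Hypothetical 1-D data, centered so that the descent converges quickly
x = np.array([1., 2., 3., 4., 5., 6., 7., 8.])
y = np.array([0., 0., 0., 0., 1., 1., 1., 1.])
X = np.column_stack([np.ones_like(x), x - x.mean()])

theta, history = gradient_descent(X, y)
pred = (sigmoid(X @ theta) >= 0.5).astype(int)
print('first cost:', history[0], 'last cost:', history[-1])
print('predictions:', pred)
```

The update rule has the same shape as in linear regression; only the definition of h changes from a linear function to the logistic function.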

Wine Taste

Loading the data

import pandas as pd

wine_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/wine.csv'

wine = pd.read_csv(wine_url, index_col=0)

wine.head()

Creating the taste label

wine['taste'] = [1. if grade > 5 else 0. for grade in wine['quality']]

X = wine.drop(['taste','quality'], axis=1)

y = wine['taste']

Splitting the data

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size=0.2, random_state=13)

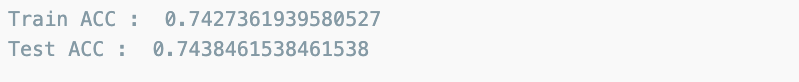

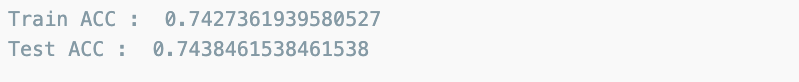

Testing Logistic Regression

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import accuracy_score

lr = LogisticRegression(solver='liblinear', random_state=13)

lr.fit(X_train, y_train)

y_pred_tr = lr.predict(X_train)

y_pred_test = lr.predict(X_test)

print('Train ACC : ', accuracy_score(y_train,y_pred_tr))

print('Test ACC : ', accuracy_score(y_test,y_pred_test))

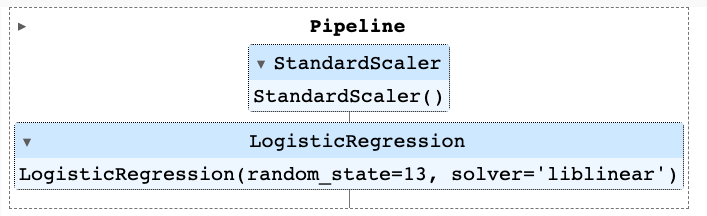

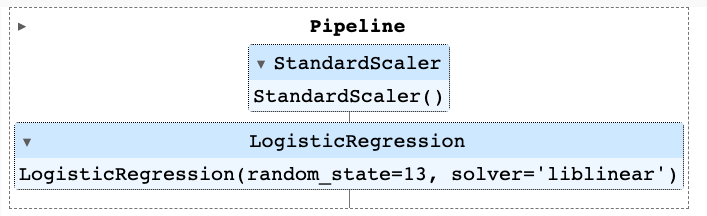

Building a pipeline with a scaler

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

estimators = [

('scaler', StandardScaler()),

('clf', LogisticRegression(solver='liblinear', random_state=13))

]

pipe = Pipeline(estimators)

pipe.fit(X_train, y_train)

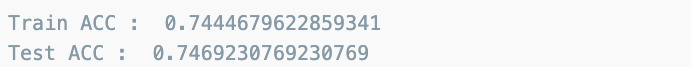

Checking the improvement

y_pred_tr = pipe.predict(X_train)

y_pred_test = pipe.predict(X_test)

print('Train ACC : ', accuracy_score(y_train,y_pred_tr))

print('Test ACC : ', accuracy_score(y_test,y_pred_test))

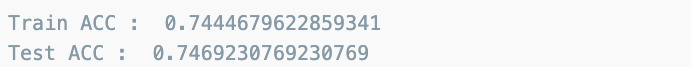

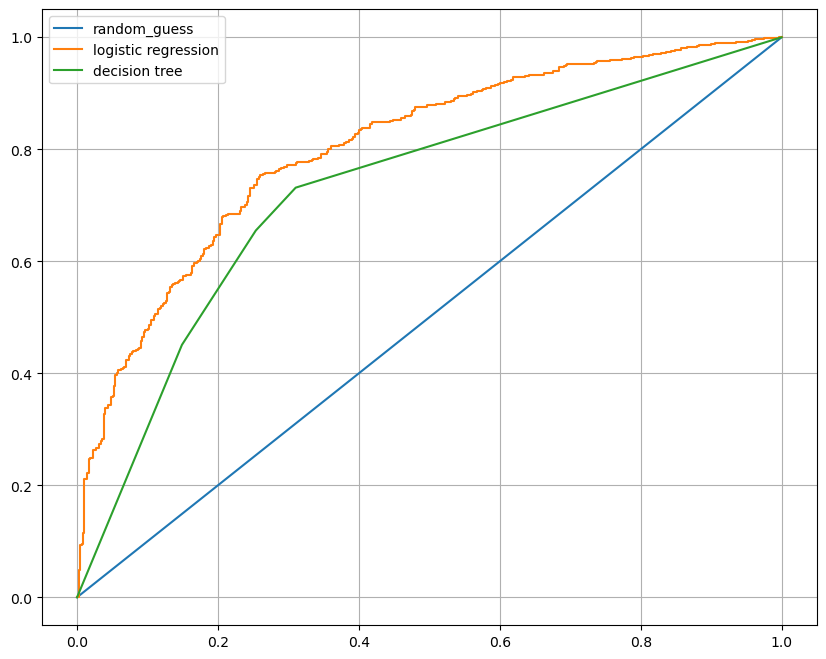
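To show what the scaling step contributes, here is a NumPy-only sketch of standardization. It assumes the usual definition z = (x - mean) / std with the population standard deviation, and it uses randomly generated stand-in data rather than the wine features.

```python
import numpy as np

# Stand-in data with two features on very different scales (hypothetical)
rng = np.random.default_rng(13)
X_train = rng.normal(loc=[10.0, 0.5], scale=[5.0, 0.1], size=(200, 2))
X_test = rng.normal(loc=[10.0, 0.5], scale=[5.0, 0.1], size=(50, 2))

# Statistics are learned from the training split only
mu = X_train.mean(axis=0)
sigma = X_train.std(axis=0)      # population std (ddof=0)

# The same training statistics are then applied to both splits
X_train_s = (X_train - mu) / sigma
X_test_s = (X_test - mu) / sigma

print('train mean:', X_train_s.mean(axis=0))
print('train std :', X_train_s.std(axis=0))
print('test mean :', X_test_s.mean(axis=0))
```

Putting the scaler inside a `Pipeline` gives this behavior automatically: `fit` learns the statistics from the training data, and `predict` reuses them on whatever data is passed in.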

Preparing a comparison with a Decision Tree

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, y_train)

models = {

'logistic regression' : pipe,

'decision tree' : wine_tree

}

Comparing the models with ROC curves (AUC)

from sklearn.metrics import roc_curve

plt.figure(figsize=(10,8))

plt.plot([0,1],[0,1], label='random_guess')

for model_name, model in models.items():
    pred = model.predict_proba(X_test)[:,1]
    fpr, tpr, thresholds = roc_curve(y_test, pred)
    plt.plot(fpr, tpr, label = model_name)

plt.grid()

plt.legend()

plt.show()

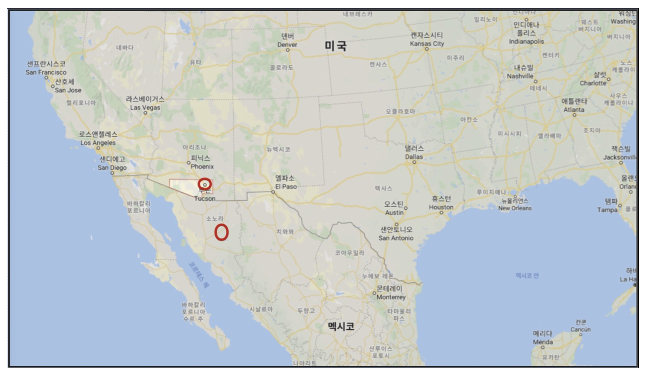

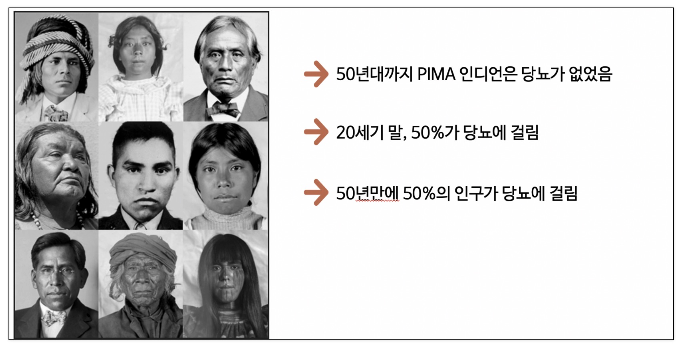

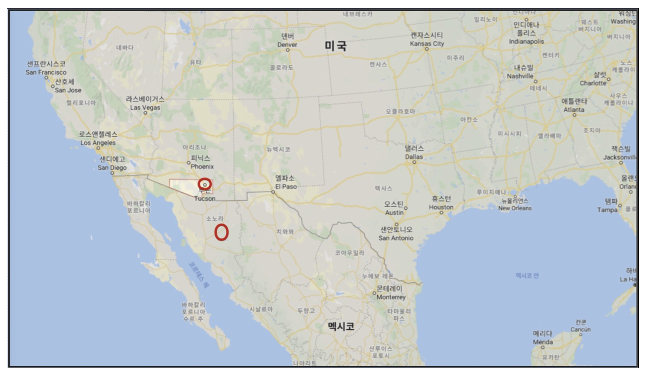

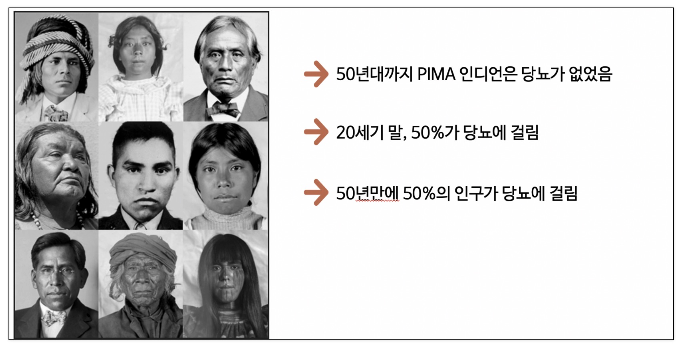
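To clarify what the curve plotted above represents, the sketch below builds ROC points by hand: it sweeps a threshold over the predicted scores and records the false positive rate and true positive rate at each step. The labels and scores are made up, and `roc_points` and `auc_trapezoid` are hypothetical helper names, not scikit-learn functions.

```python
import numpy as np

def roc_points(y_true, scores):
    """Return (fpr, tpr) arrays obtained by thresholding at each distinct score."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores)
    n_pos = (y_true == 1).sum()
    n_neg = (y_true == 0).sum()
    fpr, tpr = [0.0], [0.0]
    for t in np.sort(np.unique(scores))[::-1]:   # high threshold -> low threshold
        pred = scores >= t
        tpr.append((pred & (y_true == 1)).sum() / n_pos)
        fpr.append((pred & (y_true == 0)).sum() / n_neg)
    return np.array(fpr), np.array(tpr)

def auc_trapezoid(fpr, tpr):
    """Area under the curve using the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2))

# Hypothetical labels and predicted probabilities
y_true = [0, 0, 1, 1, 0, 1]
scores = [0.1, 0.4, 0.35, 0.8, 0.45, 0.7]

fpr, tpr = roc_points(y_true, scores)
area = auc_trapezoid(fpr, tpr)
print(fpr)
print(tpr)
print('AUC:', area)
```

A model whose curve bends further toward the top-left corner, and therefore encloses more area, separates the two classes better across thresholds.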

PIMA Indians Diabetes Prediction

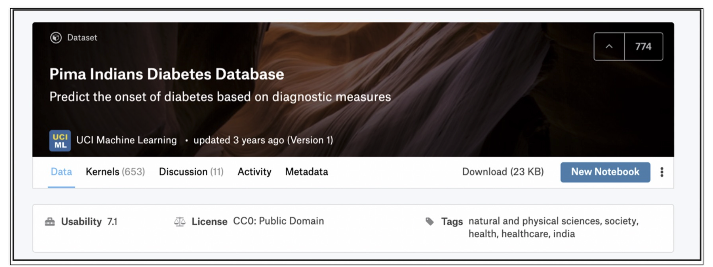

Data

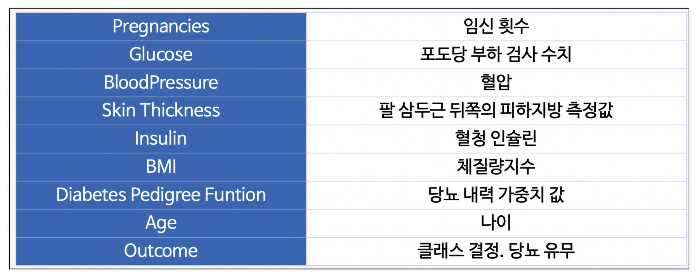

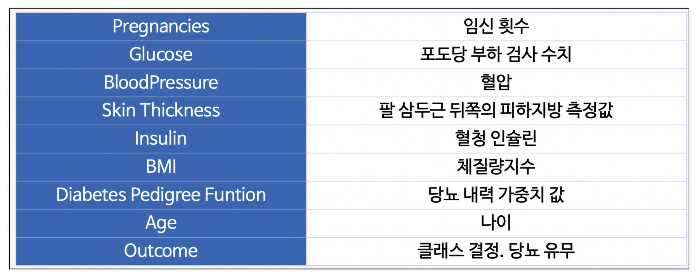

Meaning of the data columns

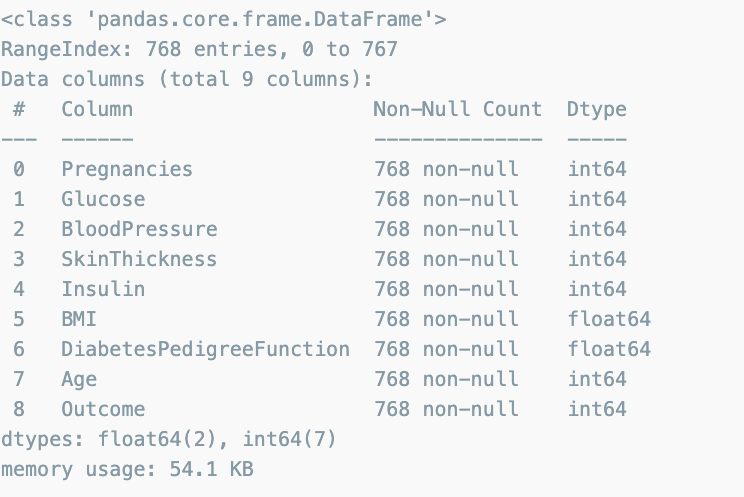

Loading the data

import pandas as pd

PIMA_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/diabetes.csv'

PIMA = pd.read_csv(PIMA_url)

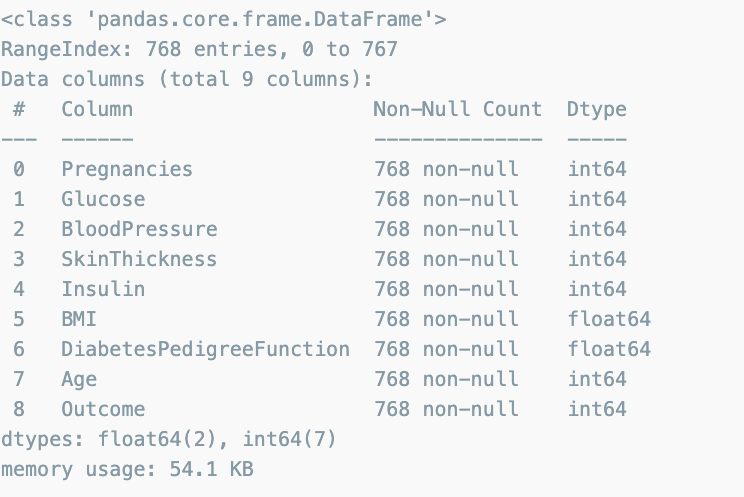

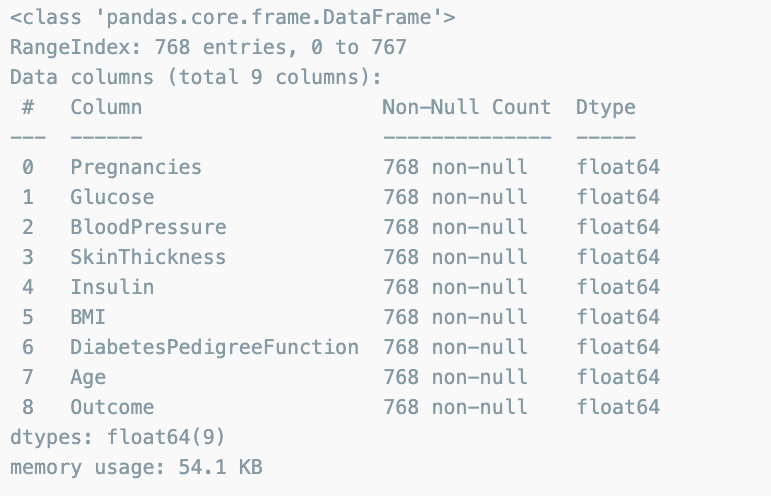

PIMA.info()

Converting the data to float

PIMA = PIMA.astype('float')

PIMA.info()

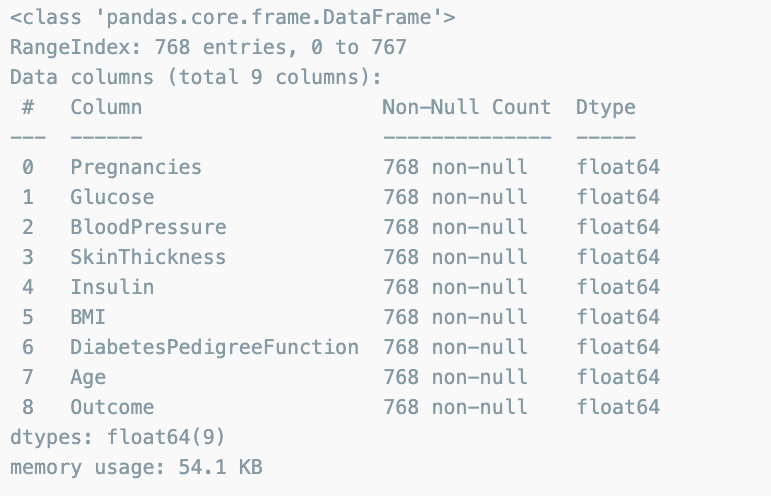

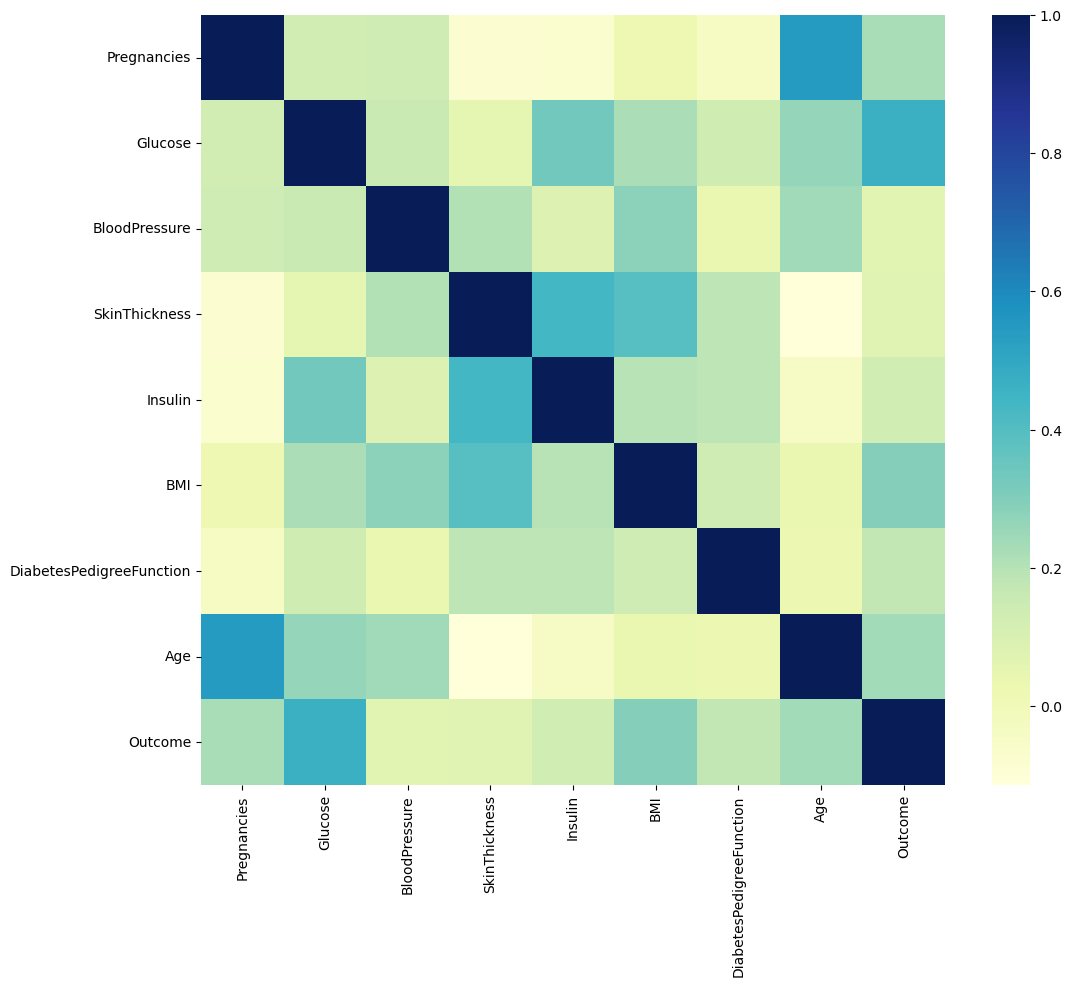

Checking correlations

import seaborn as sns

import matplotlib.pyplot as plt

plt.figure(figsize=(12,10))

sns.heatmap(PIMA.corr(), cmap='YlGnBu')

plt.show()

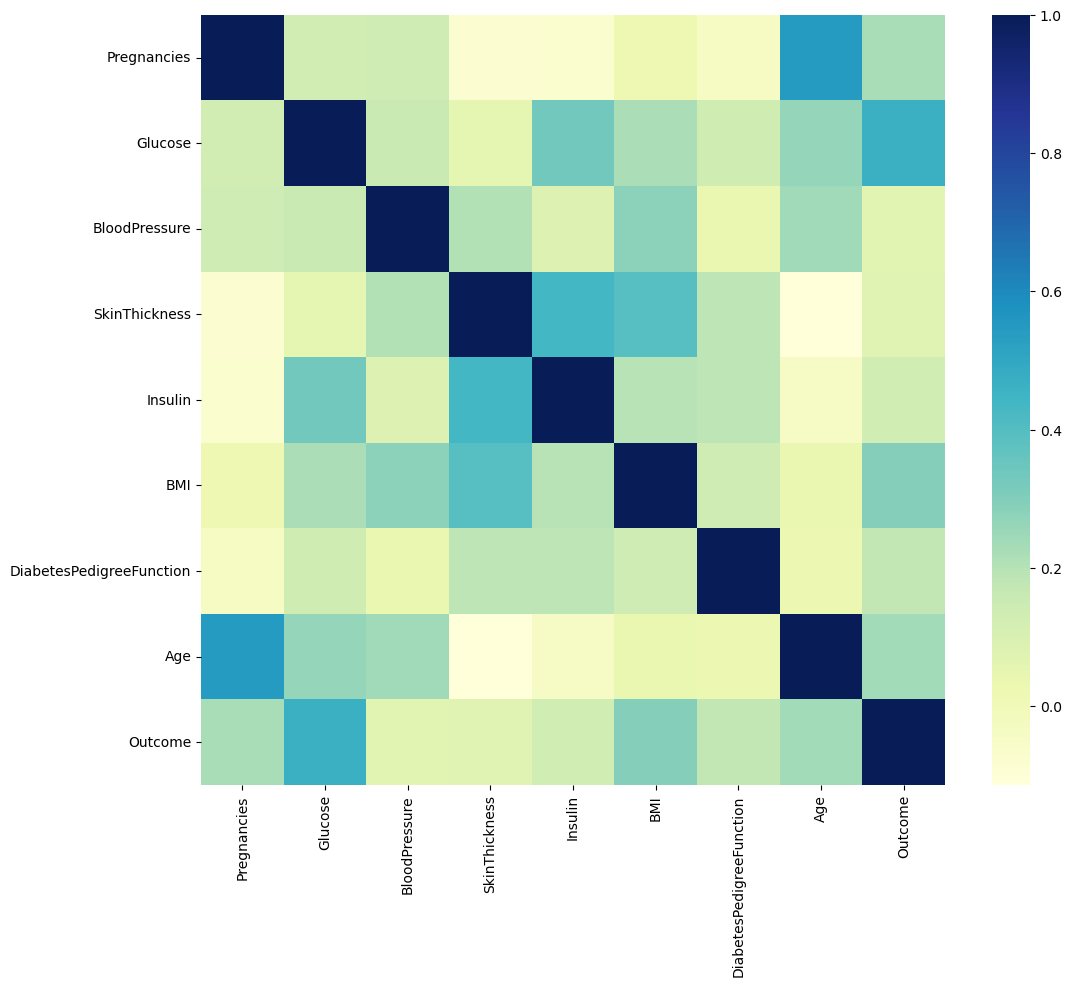

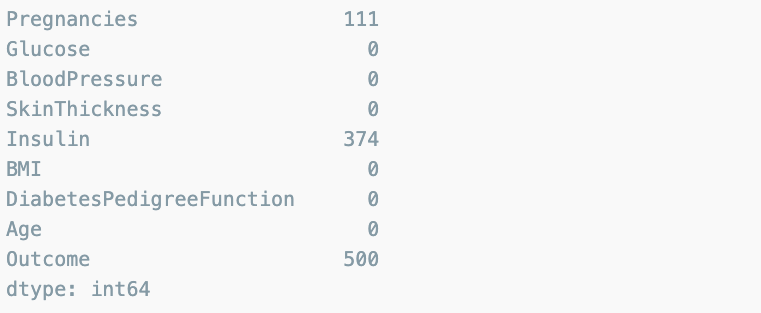

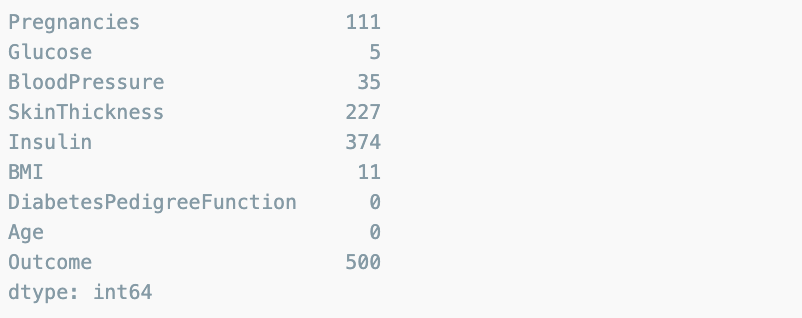

Zeros in the data

(PIMA==0).astype(int).sum()

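- What counts as a missing value depends on the data. Here, a value of 0 for blood pressure is clearly a problem.

- Lacking medical knowledge and background on the PIMA Indians, we replace these zeros with the mean for now.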

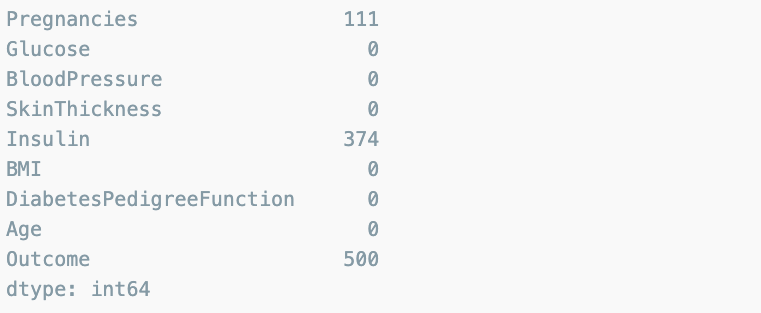

zero_features = ['Glucose','BloodPressure','SkinThickness','BMI']

PIMA[zero_features] = PIMA[zero_features].replace(0, PIMA[zero_features].mean())

(PIMA==0).astype(int).sum()
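One detail of the replacement above: `PIMA[zero_features].mean()` is computed while the zeros are still in the column, so the fill value is pulled downward. A possible variation, shown here on a tiny made-up frame rather than the PIMA data, is to mark zeros as missing first and then fill with the mean of the remaining values.

```python
import numpy as np
import pandas as pd

# Tiny hypothetical frame; 0 stands for "not measured"
df = pd.DataFrame({
    'Glucose': [100., 0., 140.],
    'BMI': [30., 20., 0.],
})
cols = ['Glucose', 'BMI']

# Mean with zeros included (what a plain .mean() would give)
mean_with_zeros = df[cols].mean()

# Mean over the non-zero entries only
as_missing = df[cols].replace(0, np.nan)
mean_nonzero = as_missing.mean()          # NaN is skipped by default

# Fill each column with its own non-zero mean
filled = as_missing.fillna(mean_nonzero)

print(mean_with_zeros)
print(mean_nonzero)
print(filled)
```

Which of the two fill values is preferable depends on the data; this is only meant to make the difference visible.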

Splitting the data

from sklearn.model_selection import train_test_split

X = PIMA.drop(['Outcome'], axis=1)

y = PIMA['Outcome']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, stratify=y, random_state=13)

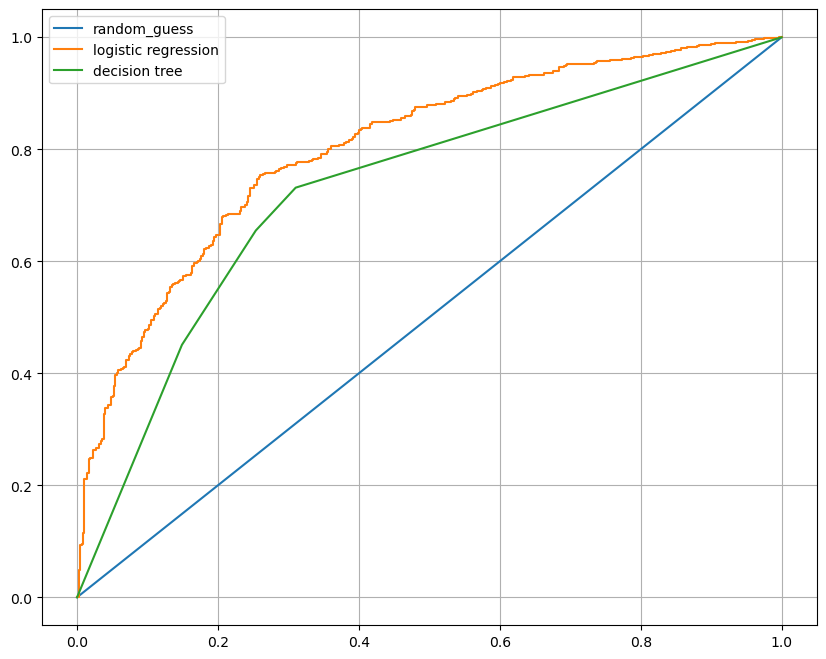

Building the Pipeline

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

estimators = [

('scaler', StandardScaler()),

('clf', LogisticRegression(solver='liblinear', random_state=13))

]

pipe_lr = Pipeline(estimators)

pipe_lr.fit(X_train, y_train)

pred = pipe_lr.predict(X_test)

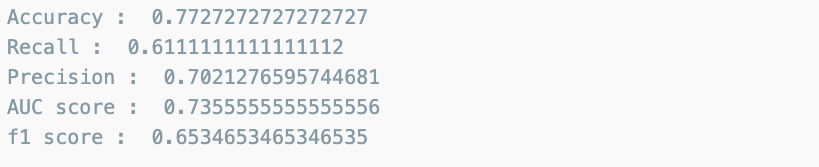

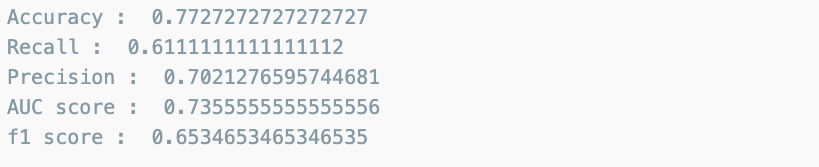

Checking the metrics

- Without a baseline to compare against, these numbers cannot be judged on their own.

from sklearn.metrics import (accuracy_score, recall_score, precision_score, roc_auc_score, f1_score)

print('Accuracy : ', accuracy_score(y_test, pred))

print('Recall : ', recall_score(y_test, pred))

print('Precision : ', precision_score(y_test, pred))

print('AUC score : ', roc_auc_score(y_test, pred))

print('f1 score : ', f1_score(y_test, pred))

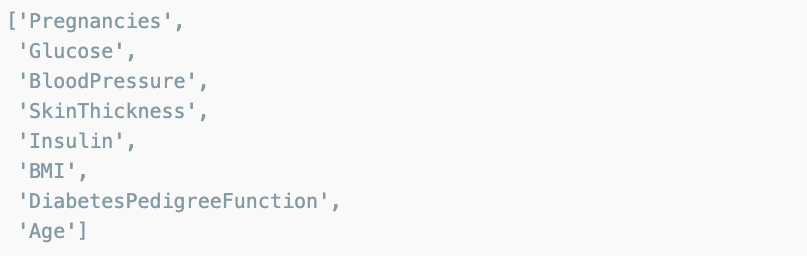
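The AUC printed above is computed from `pred`, the hard 0/1 labels returned by `predict`. `roc_auc_score` also accepts continuous scores such as the positive-class column of `predict_proba`, and the two inputs generally give different values. The sketch below uses hand-made labels and probabilities (hypothetical, not the PIMA results) to show the difference.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical ground truth and predicted probabilities for the positive class
y_true = np.array([0, 0, 1, 1, 0, 1])
proba = np.array([0.1, 0.4, 0.35, 0.8, 0.45, 0.7])

# Hard labels, as predict() would produce with a 0.5 cut-off
labels = (proba >= 0.5).astype(int)

auc_from_proba = roc_auc_score(y_true, proba)
auc_from_labels = roc_auc_score(y_true, labels)

print('AUC from probabilities:', auc_from_proba)
print('AUC from hard labels  :', auc_from_labels)
```

With hard labels the ROC curve has a single interior point, so the score reflects one threshold only; with probabilities it summarizes the ranking over all thresholds.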

Checking each coefficient of the multivariate equation

coef = list(pipe_lr['clf'].coef_[0])

labels = list(X_train.columns)

labels

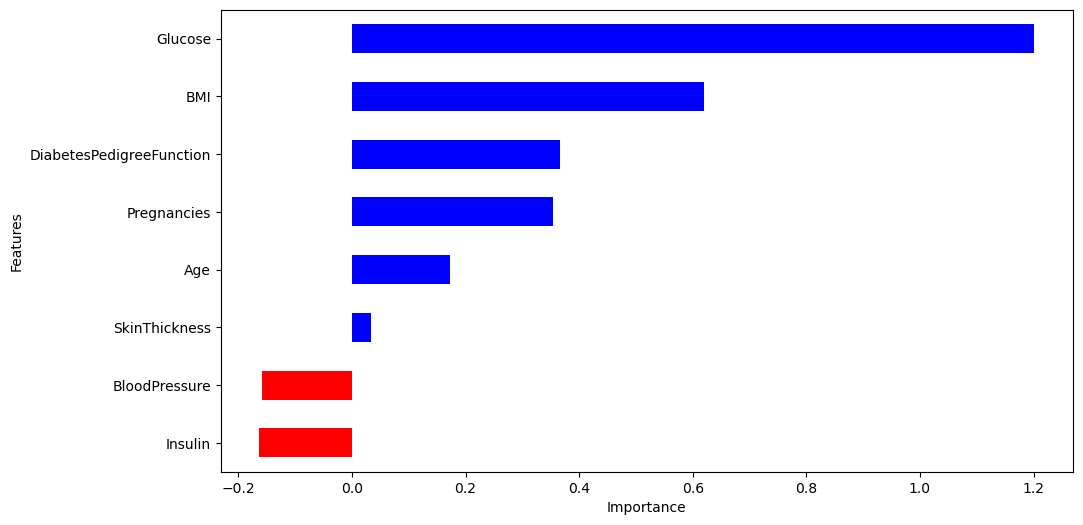

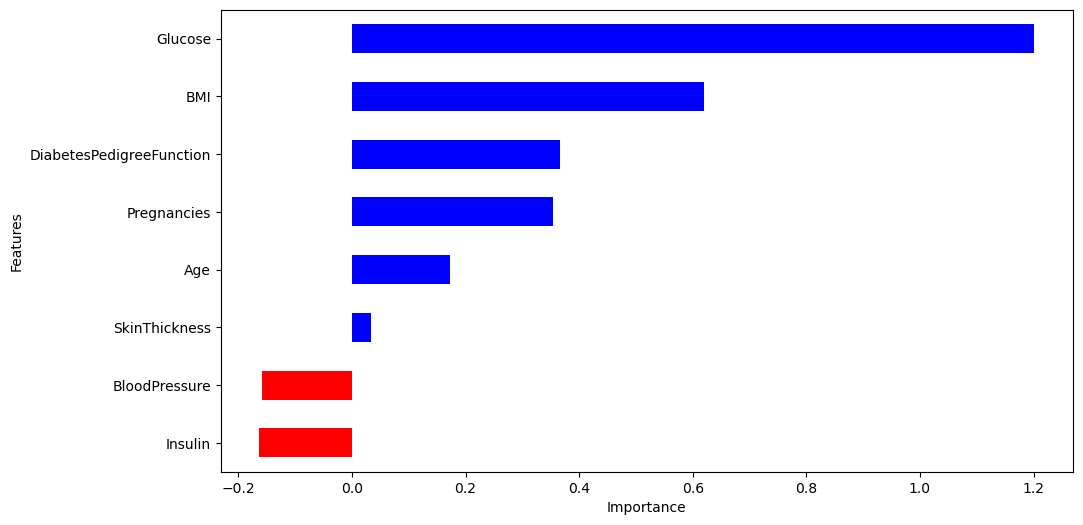

Plotting the important features

features = pd.DataFrame({'Features': labels, 'importance' : coef})

features.sort_values(by=['importance'], ascending=True, inplace=True)

features['positive'] = features['importance'] > 0

features.set_index('Features', inplace=True)

features['importance'].plot(kind='barh', figsize=(11,6), color= features['positive'].map({True:'blue', False:'red'}))

plt.xlabel('Importance')

plt.show()

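Conclusion

- Glucose, BMI, and similar features have a strong influence on diabetes.

- Blood pressure contributes negatively to the prediction.

- Age was more strongly correlated with the output variable than BMI, but the model relies more on BMI and Glucose.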
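One way to read coefficients like these: because the pipeline standardizes each feature before the classifier, a coefficient describes the change in log-odds per one standard deviation of that feature, and exp(coefficient) is the matching multiplier on the odds. The values below are invented for illustration and are not the coefficients fitted in these notes.

```python
import numpy as np

# Hypothetical coefficients; NOT the values fitted in these notes
coef = {'Glucose': 1.2, 'BMI': 0.6, 'BloodPressure': -0.15}

# exp(coef) = factor by which the odds change per +1 standard deviation
odds_ratio = {name: float(np.exp(c)) for name, c in coef.items()}

for name, ratio in sorted(odds_ratio.items(), key=lambda kv: kv[1], reverse=True):
    print(f'{name:>14}: odds x {ratio:.2f} per +1 std')
```

A ratio above 1 raises the predicted odds of the positive class and a ratio below 1 lowers them, which mirrors the blue and red bars in the plot above.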