Day 9: Machine Learning Ensembles and CNN

Morning: regression ensemble on Boston house prices

Afternoon: CNN

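1. Ensembles

1.1 Voting

Voting combines several classifiers and makes the final prediction by voting over their individual results.

Hard voting

- Each classifier votes for one class, and the class with the most votes wins.

Soft voting

- The class probabilities from all classifiers are averaged per class, and the class with the highest average probability is chosen.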
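As a minimal sketch of the difference between the two rules, the snippet below applies both to one sample. The three probability vectors are made-up numbers, and the helper names (`argmax`, `hard_vote`, `soft_vote`) are illustrative rather than part of any library.

```python
from collections import Counter

# Hypothetical class-probability outputs of three classifiers for ONE sample.
# Each inner list is [P(class 0), P(class 1)]; the numbers are made up.
probas = [
    [0.90, 0.10],  # classifier A: top class is 0
    [0.40, 0.60],  # classifier B: top class is 1
    [0.45, 0.55],  # classifier C: top class is 1
]


def argmax(values):
    """Return the index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


def hard_vote(probas):
    """Each classifier casts one vote for its own top class; the majority wins."""
    votes = [argmax(p) for p in probas]
    return Counter(votes).most_common(1)[0][0]


def soft_vote(probas):
    """Average the probabilities per class, then pick the class with the highest mean."""
    n_classes = len(probas[0])
    mean = [sum(p[c] for p in probas) / len(probas) for c in range(n_classes)]
    return argmax(mean), mean


hard = hard_vote(probas)
soft, mean_proba = soft_vote(probas)
print("hard voting:", hard)
print("soft voting:", soft, mean_proba)
```

With these numbers the two rules disagree: two of three classifiers prefer class 1, but classifier A is confident enough in class 0 to pull the averaged probability its way.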

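1.2 Bagging

- Unlike voting, bagging combines classifiers that all use the same algorithm.

- Each classifier is trained on its own sample of the data, drawn with replacement, so the same item can appear more than once. For example, classifier 1 gets [0, 0, 3, 4, 5, 5], classifier 2 gets [0, 1, 2, 3, 4, 5], and classifier 3 gets [0, 1, 1, 2, 4, 5].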
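The sampling step can be sketched with the standard library alone. The seed and the helper name `bootstrap_sample` are arbitrary choices for illustration, so the printed samples will not match the ones listed above.

```python
import random


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement, so duplicates are allowed."""
    return sorted(rng.choices(data, k=len(data)))


data = [0, 1, 2, 3, 4, 5]
rng = random.Random(42)  # arbitrary seed, only for reproducibility

# One bootstrap sample per classifier in the ensemble
samples = [bootstrap_sample(data, rng) for _ in range(3)]
for i, sample in enumerate(samples, start=1):
    print(f"classifier {i}: {sample}")
```

In scikit-learn, `BaggingClassifier` and `BaggingRegressor` perform this resampling internally when `bootstrap=True`, which is their default.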

2. Predicting Boston house prices

2.1 Data preprocessing

import pandas as pd

# Load the Boston housing CSV from Google Drive
csv_path = '/content/drive/MyDrive/fly_ai/2주차/2주차학습데이터/Boston_house.csv'
df = pd.read_csv(csv_path)
df

    AGE       B     RM     CRIM     DIS  INDUS  LSTAT    NOX  PTRATIO  RAD    ZN  TAX  CHAS  Target
0  65.2  396.90  6.575  0.00632  4.0900   2.31   4.98  0.538     15.3    1  18.0  296     0    24.0
1  78.9  396.90  6.421  0.02731  4.9671   7.07   9.14  0.469     17.8    2   0.0  242     0    21.6
2  61.1  392.83  7.185  0.02729  4.9671   7.07   4.03  0.469     17.8    2   0.0  242     0    34.7
3  45.8  394.63  6.998  0.03237  6.0622   2.18   2.94  0.458     18.7    3   0.0  222     0    33.4
4  54.2  396.90  7.147  0.06905  6.0622   2.18   5.33  0.458     18.7    3   0.0  222     0    36.2

# Count missing values per column
missing_values = df.isnull().sum()
missing_values

AGE        0
B          0
RM         0
CRIM       0
DIS        0
INDUS      0
LSTAT      0
NOX        0
PTRATIO    0
RAD        0
ZN         0
TAX        0
CHAS       0
Target     0
dtype: int64

2.2 Preparing the training data and models

from sklearn.ensemble import VotingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error

# Separate the features from the target column
X = df.drop('Target', axis=1)
y = df['Target']

# Hold out 20% of the rows as a test set
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Base regressors for the ensemble
lr_reg = LinearRegression()
knn_reg = KNeighborsRegressor(n_neighbors=8)

2.3 Ensemble

# VotingRegressor averages the predictions of its base regressors
vo_reg = VotingRegressor(estimators=[("LR", lr_reg), ("KNN", knn_reg)])

# Fit the ensemble and each base model, then compare their test MSE
regression = [vo_reg, lr_reg, knn_reg]
for reg in regression:
    reg.fit(X_train, y_train)
    pred = reg.predict(X_test)
    name = reg.__class__.__name__
    print(name)
    mse = mean_squared_error(y_test, pred)
    print(f"mse: {mse}")

VotingRegressor
mse: 20.54148031998295
LinearRegression
mse: 24.291119474973588
KNeighborsRegressor
mse: 33.369483762254895
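One way to see why the averaged model can beat both of its members: when the base models err in opposite directions, part of the error cancels in the mean. The sketch below uses made-up predictions and a hand-written `mse` helper. It illustrates the averaging that `VotingRegressor` performs with uniform weights and is not a reproduction of the Boston results.

```python
def mse(y_true, y_pred):
    """Mean squared error: the average of the squared residuals."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up targets and predictions, chosen so the two models err in opposite directions
y_true = [3.0, 5.0, 7.0]
pred_a = [4.0, 6.0, 8.0]   # model A overshoots by 1.0
pred_b = [2.5, 4.5, 6.5]   # model B undershoots by 0.5

# Uniform-weight average of the two predictions
pred_avg = [(a + b) / 2 for a, b in zip(pred_a, pred_b)]

print("model A :", mse(y_true, pred_a))
print("model B :", mse(y_true, pred_b))
print("average :", mse(y_true, pred_avg))
```

The gain is not guaranteed. If both models make the same kind of error, averaging them leaves that error in place.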

2.4 Ensemble after data scaling

from sklearn.preprocessing import StandardScaler

# Fit the scaler on the training set only, then apply the same transform to both sets
scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)
X_test_scaled = scaler.transform(X_test)
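`StandardScaler` rescales each feature to z = (x - mean) / std, using the mean and population standard deviation of the training data. This matters most for distance-based models such as KNN, where a wide-range column like TAX (hundreds) would otherwise dominate a narrow one like NOX (below 1). The sketch below repeats that arithmetic in plain Python for a single column. The values and the helper names `fit_scaler` and `transform` are illustrative assumptions.

```python
from statistics import mean, pstdev


def fit_scaler(column):
    """Learn the mean and population standard deviation from training data."""
    return mean(column), pstdev(column)


def transform(column, mu, sigma):
    """Apply z = (x - mu) / sigma with previously learned statistics."""
    return [(x - mu) / sigma for x in column]


# Made-up TAX-like values; not taken from the real train/test split
train_tax = [296.0, 242.0, 222.0, 242.0]
test_tax = [222.0]

mu, sigma = fit_scaler(train_tax)                # statistics come from training data only
train_scaled = transform(train_tax, mu, sigma)
test_scaled = transform(test_tax, mu, sigma)     # test data reuses the training statistics

print("mean, std:", mu, sigma)
print("train scaled:", train_scaled)
print("test scaled:", test_scaled)
```

Reusing the training statistics for the test set mirrors the `fit_transform` / `transform` pair above and keeps information about the test set out of preprocessing.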

# Refit every model on the scaled features and compare test MSE again
for reg in regression:
    reg.fit(X_train_scaled, y_train)
    pred = reg.predict(X_test_scaled)
    name = reg.__class__.__name__
    print(name)
    mse = mean_squared_error(y_test, pred)
    print(f"mse: {mse}")

VotingRegressor
mse: 19.957068817174882
LinearRegression
mse: 24.291119474973545
KNeighborsRegressor
mse: 23.709829963235297

3. CNN

3.1 MNIST data

import tensorflow as tf

# 1. Load the MNIST dataset
mnist = tf.keras.datasets.mnist
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# 2. Preprocess: scale pixel values from [0, 255] to [0, 1]
x_train, x_test = x_train / 255.0, x_test / 255.0

# 3. Build the model (a fully connected baseline, with no convolution layers yet)
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),
    tf.keras.layers.Dense(512, activation=tf.nn.relu),
    tf.keras.layers.Dense(10, activation=tf.nn.softmax)
])

# 4. Compile the model (labels are integer class indices, hence the sparse loss)
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# 5. Train the model
model.fit(x_train, y_train, epochs=5)

# 6. Evaluate accuracy on the test set
test_loss, test_acc = model.evaluate(x_test, y_test)
print('Test accuracy:', test_acc)

Epoch 1/5
1875/1875 [==============================] - 5s 2ms/step - loss: 0.2028 - accuracy: 0.9409
Epoch 2/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0823 - accuracy: 0.9746
Epoch 3/5
1875/1875 [==============================] - 5s 2ms/step - loss: 0.0531 - accuracy: 0.9835
Epoch 4/5
1875/1875 [==============================] - 5s 3ms/step - loss: 0.0387 - accuracy: 0.9875
Epoch 5/5
1875/1875 [==============================] - 6s 3ms/step - loss: 0.0286 - accuracy: 0.9905
313/313 [==============================] - 1s 2ms/step - loss: 0.0778 - accuracy: 0.9773
Test accuracy: 0.9772999882698059

3.2 Shape data classification

data_path = '/content/drive/MyDrive/fly_ai/2주차/2주차학습데이터/shapes/shapes'

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Create the image data generator: rescale pixels to [0, 1] and reserve 20% for validation
train_datagen = ImageDataGenerator(rescale=1./255, validation_split=0.2)

# Training subset (80% of the images)
train_generator = train_datagen.flow_from_directory(
    data_path,
    target_size=(28, 28),
    batch_size=32,
    class_mode='categorical',
    subset='training')

# Validation subset (the remaining 20%)
validation_generator = train_datagen.flow_from_directory(
    data_path,
    target_size=(28, 28),
    batch_size=32,
    class_mode='categorical',
    subset='validation')

Found 240 images belonging to 3 classes.
Found 60 images belonging to 3 classes.
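The two "Found ..." counts and the step counters in the Keras training logs follow from simple arithmetic: `validation_split=0.2` sets aside 20% of the 300 images, and each epoch needs one step per batch, with the last batch allowed to be smaller. The sketch below checks those numbers. The MNIST batch size of 32 is the `model.fit` default, since no batch size was passed there.

```python
import math

# Counts reported by flow_from_directory
n_train, n_val = 240, 60
batch_size = 32

total = n_train + n_val
val_fraction = n_val / total                      # share held out for validation

# Steps per epoch: number of batches needed to see every image once
steps_train = math.ceil(n_train / batch_size)
steps_val = math.ceil(n_val / batch_size)

# The same arithmetic for MNIST (60,000 training and 10,000 test images, batch size 32)
mnist_train_steps = math.ceil(60_000 / 32)
mnist_test_steps = math.ceil(10_000 / 32)

print("validation fraction:", val_fraction)
print("shape steps (train, val):", steps_train, steps_val)
print("MNIST steps (train, test):", mnist_train_steps, mnist_test_steps)
```

These match the `8/8` and `2/2` counters in the shapes log and the `1875/1875` and `313/313` counters in the MNIST log.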

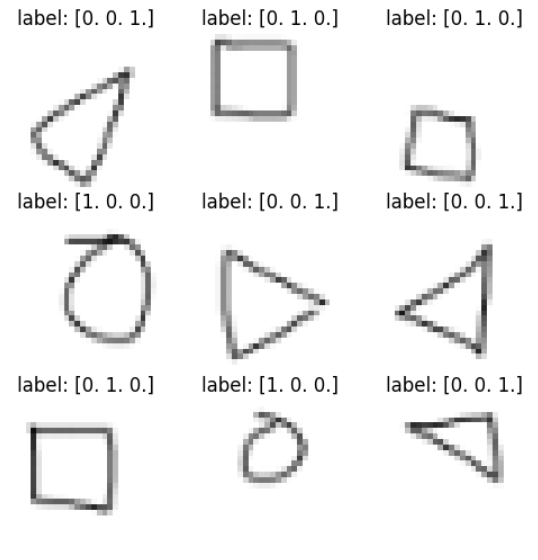

import matplotlib.pyplot as plt

# Fetch one batch of images and labels from the training generator
images, labels = next(train_generator)

# Show the first 9 images with their labels
plt.figure(figsize=(6, 6))
for i in range(9):
    plt.subplot(3, 3, i + 1)
    plt.imshow(images[i])
    plt.title(f'label: {labels[i]}')
    plt.axis('off')
plt.show()

Checking 9 images from the dataset
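Because the generators use `class_mode='categorical'`, each label in the plot titles is a one-hot vector rather than a class index. The sketch below shows the encoding and how to recover the index. The helper names `one_hot` and `decode` and the sample labels are illustrative assumptions.

```python
def one_hot(index, n_classes):
    """Encode a class index as a vector with a single 1.0 at that position."""
    return [1.0 if i == index else 0.0 for i in range(n_classes)]


def decode(vector):
    """Recover the class index as the position of the largest entry."""
    return max(range(len(vector)), key=lambda i: vector[i])


# Three made-up labels for a 3-class problem
labels = [one_hot(k, 3) for k in (0, 2, 1)]
indices = [decode(v) for v in labels]

for vec, idx in zip(labels, indices):
    print(vec, "-> class", idx)
```

One-hot labels pair with the `categorical_crossentropy` loss, whereas `sparse_categorical_crossentropy` expects integer indices such as the MNIST labels. The mapping from folder name to index is available as `train_generator.class_indices`.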

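Model creation and training

from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

model = tf.keras.models.Sequential([
    Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(28, 28, 3)),
    MaxPooling2D(pool_size=(2, 2)),
    Flatten(),
    Dense(128, activation='relu'),
    Dense(3, activation='softmax')
])

# class_mode='categorical' yields one-hot labels, so use categorical_crossentropy
# (sparse_categorical_crossentropy expects integer class indices)
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Train the model
# Assumption: the notes passed `transformed_data` and `(x_test, y_test)` here, but
# `transformed_data` is never defined and `x_test`/`y_test` still hold the MNIST data.
# The generators defined above are used instead, which matches the 8 training steps
# and 2 validation steps in the log.
model.fit(train_generator, epochs=200, validation_data=validation_generator)

# Evaluate on the validation split (no separate test set is defined for this dataset)
test_loss, test_acc = model.evaluate(validation_generator)
print('Test accuracy:', test_acc)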

Epoch 199/200
8/8 [==============================] - 0s 32ms/step - loss: 0.4236 - accuracy: 0.8625 - val_loss: 0.1886 - val_accuracy: 0.9500
Epoch 200/200
8/8 [==============================] - 0s 31ms/step - loss: 0.4120 - accuracy: 0.8667 - val_loss: 0.1593 - val_accuracy: 0.9500
2/2 [==============================] - 0s 7ms/step - loss: 0.1593 - accuracy: 0.9500
Test accuracy: 0.949999988079071
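To make the CNN in this section less of a black box, the sketch below traces the tensor shape and parameter count through each layer by hand. It assumes the Keras defaults that the model code relies on (`padding='valid'` and stride 1 for `Conv2D`, stride equal to the pool size for `MaxPooling2D`). The helper names are illustrative.

```python
def conv2d_out(size, kernel, stride=1):
    """Output side length of a 'valid' (unpadded) convolution."""
    return (size - kernel) // stride + 1


def pool_out(size, pool):
    """Output side length of max pooling with stride equal to the pool size."""
    return size // pool


# Input: 28 x 28 RGB image
side, channels = 28, 3

# Conv2D(32, 3x3): each filter has 3*3*channels weights plus one bias
filters = 32
conv_params = (3 * 3 * channels + 1) * filters
side = conv2d_out(side, 3)            # 28 -> 26

# MaxPooling2D(2x2): no parameters, halves the spatial size
side = pool_out(side, 2)              # 26 -> 13

# Flatten: 13 * 13 * 32 values per image
flat = side * side * filters

# Dense layers: (inputs + 1 bias) * units
dense1_params = (flat + 1) * 128
dense2_params = (128 + 1) * 3

total_params = conv_params + dense1_params + dense2_params
print("flattened features:", flat)
print("parameters:", conv_params, dense1_params, dense2_params, "total", total_params)
```

Almost all of the parameters sit in the first `Dense` layer. Calling `model.summary()` on the model above prints the same per-layer breakdown for comparison.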