ACK (AWS Controllers for Kubernetes)

Introduction

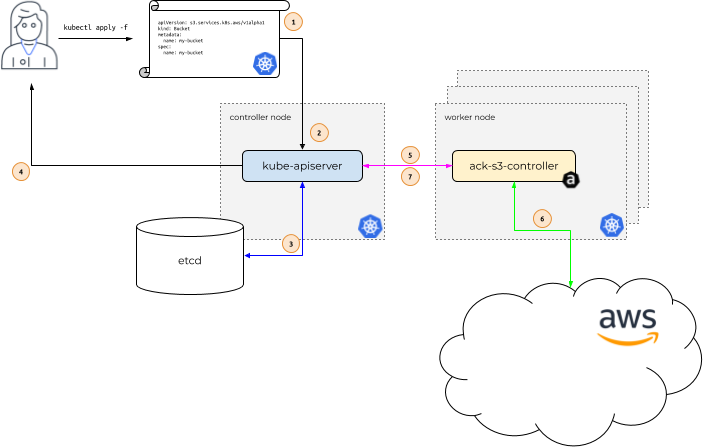

ACK lets you define and use AWS resources through a Kubernetes cluster, so that resource management is consolidated into a single point.

The ACK documentation on GitHub is linked below.

https://aws-controllers-k8s.github.io/community/docs/community/overview/

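The basic flow is as follows. A Kubernetes user writes a manifest for the AWS resource to be managed and submits it to the API server. The API server checks that the user is authorized for the requested CR (Custom Resource) and that the CR is valid; if so, it tells the user that the CR has been created.

The ACK controller then notices the request on the CR, calls the corresponding AWS API, and finally reports the result back to the API server, where the resource's state is tracked.

Pod access to AWS resources is authorized through IRSA (IAM Roles for Service Accounts).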

Hands-on: AWS S3 resources

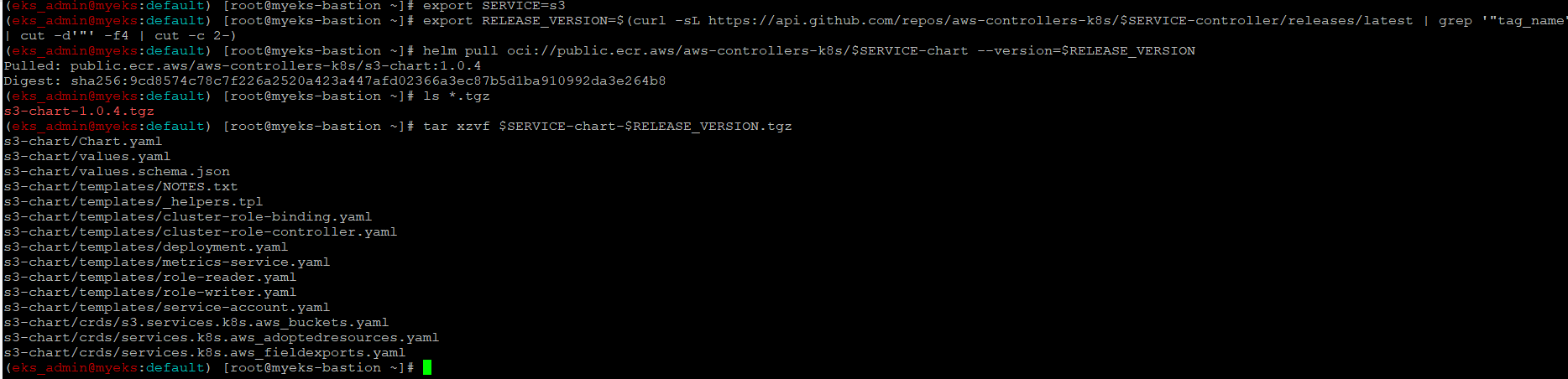

Installing the ACK S3 controller with Helm

Download the S3 controller Helm chart

$> export SERVICE=s3

$> export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$> helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$> tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz
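The `RELEASE_VERSION` pipeline above is compact, so here is a minimal sketch of what each stage does, run against a canned response instead of the live GitHub API. The sample JSON and the version number `1.0.4` are made up for illustration; only the `grep | cut | cut` pipeline is taken from the command above.

```shell
# Canned stand-in for the GitHub "latest release" response (hypothetical values).
SAMPLE_JSON='{
  "url": "https://api.github.com/repos/aws-controllers-k8s/s3-controller/releases/1",
  "tag_name": "v1.0.4",
  "name": "v1.0.4"
}'

# 1) keep only the tag_name line
# 2) split on double quotes and take the 4th field (the tag itself)
# 3) drop the first character ("v") so the value matches the chart version
RELEASE_VERSION=$(printf '%s\n' "$SAMPLE_JSON" | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

# An empty value usually means the API call failed or was rate-limited.
if [ -z "$RELEASE_VERSION" ]; then
  echo "could not determine the release version" >&2
  exit 1
fi
echo "$RELEASE_VERSION"
```

Checking for an empty value before calling `helm pull` avoids a confusing chart-not-found error further down.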

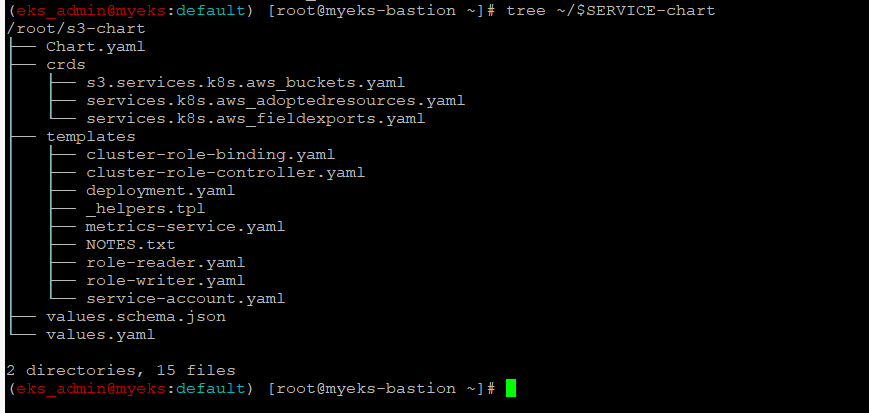

Check the Helm chart

$> tree ~/$SERVICE-chart

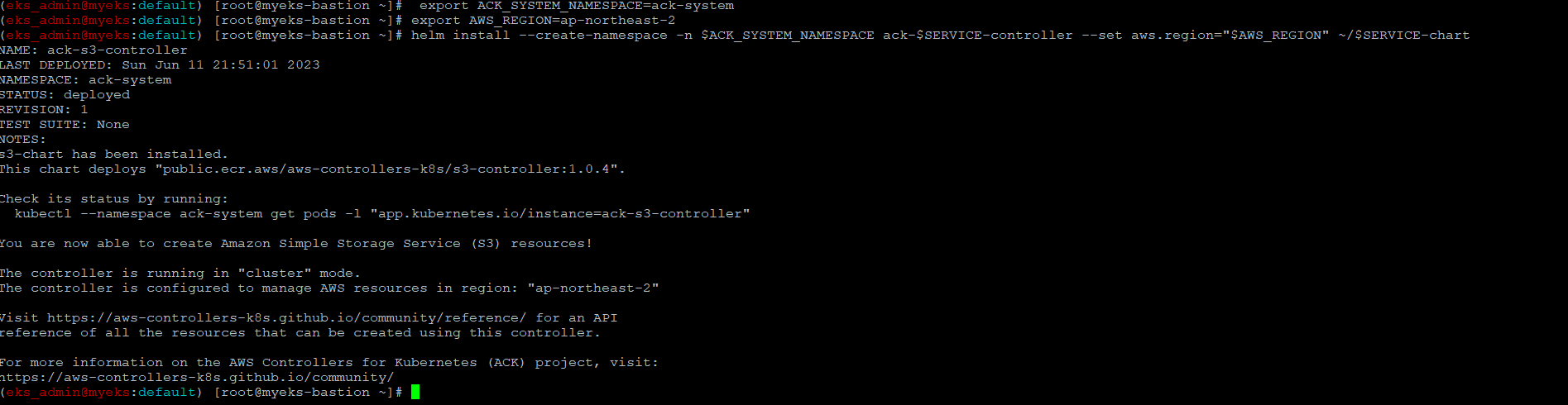

Deploy the ACK S3 controller

$> export ACK_SYSTEM_NAMESPACE=ack-system

$> export AWS_REGION=ap-northeast-2

$> helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

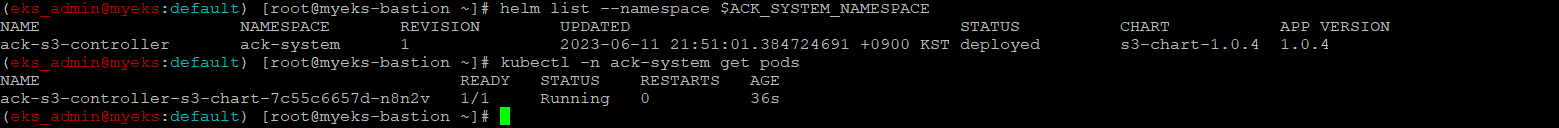

Verify the deployment

$> helm list --namespace $ACK_SYSTEM_NAMESPACE

$> kubectl -n ack-system get pods

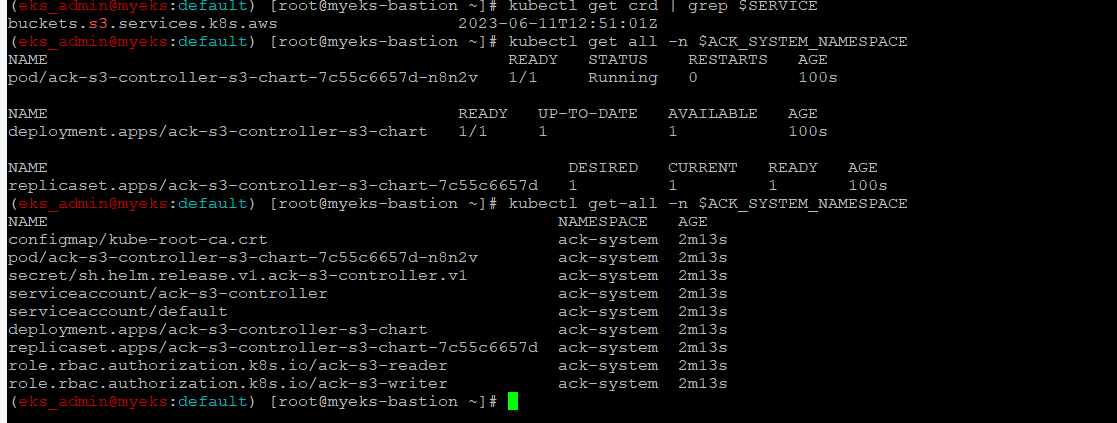

$> kubectl get crd | grep $SERVICE

$> kubectl get all -n $ACK_SYSTEM_NAMESPACE

$> kubectl get-all -n $ACK_SYSTEM_NAMESPACE

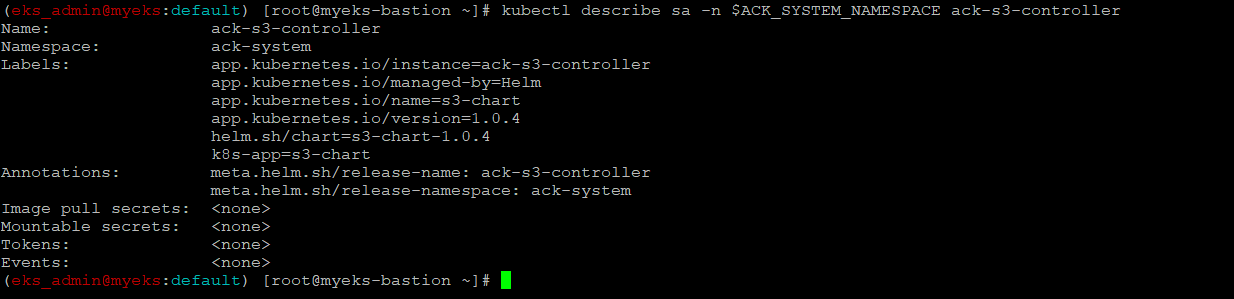

$> kubectl describe sa -n $ACK_SYSTEM_NAMESPACE ack-s3-controller

IRSA setup

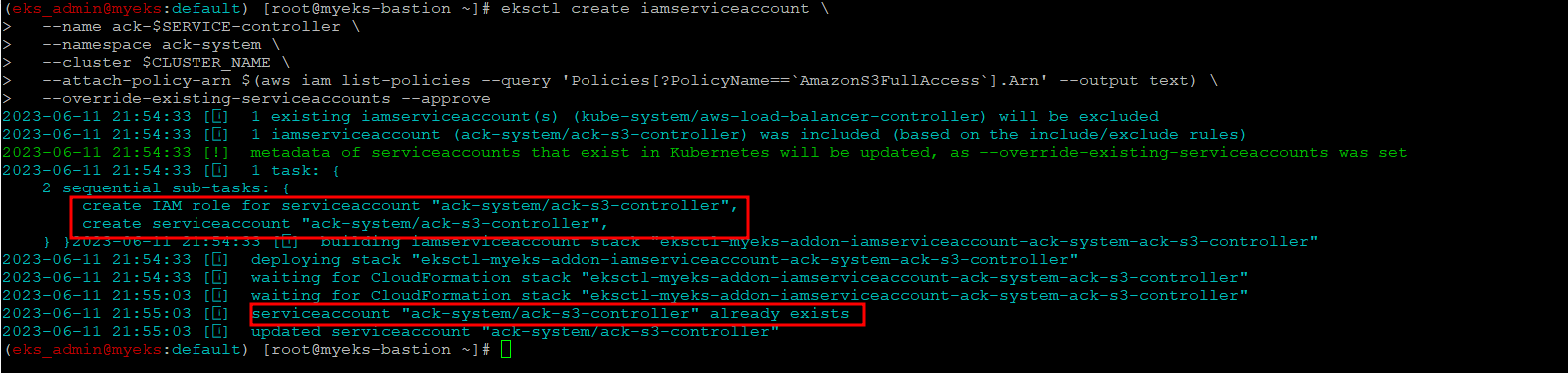

Create an iamserviceaccount

$> eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace ack-system \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonS3FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve
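The `aws iam list-policies` lookup above resolves the ARN of an AWS managed policy. As a rough alternative, the ARN can be built directly, since AWS managed policies follow the pattern `arn:aws:iam::aws:policy/<name>`. The sketch below assumes the standard `aws` partition (other partitions such as `aws-cn` use a different prefix); `managed_policy_arn` is a hypothetical helper name.

```shell
# Build the ARN of an AWS managed policy from its name.
# Assumption: the commercial "aws" partition.
managed_policy_arn() {
  printf 'arn:aws:iam::aws:policy/%s\n' "$1"
}

POLICY_ARN=$(managed_policy_arn AmazonS3FullAccess)
echo "$POLICY_ARN"
```

The result could be passed to `--attach-policy-arn "$POLICY_ARN"`, which saves one API call and does not depend on the caller having `iam:ListPolicies` permission.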

Check the IAM role

$> eksctl get iamserviceaccount --cluster $CLUSTER_NAME

The role can also be checked in the CloudFormation stack in the AWS web console.

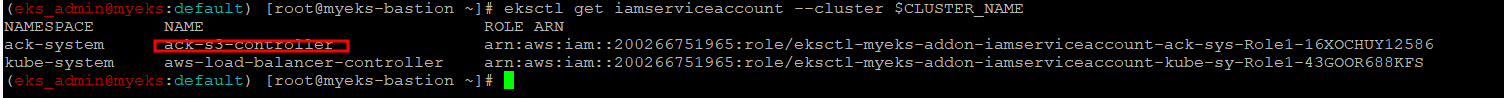

Verify that the role is attached to the service account

$> kubectl get sa -n ack-system

$> kubectl describe sa ack-$SERVICE-controller -n ack-system

Restart ACK service controller deployment

Redeploy the controller so that the role attached to the ack-s3-controller service account takes effect.

$> kubectl -n ack-system rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

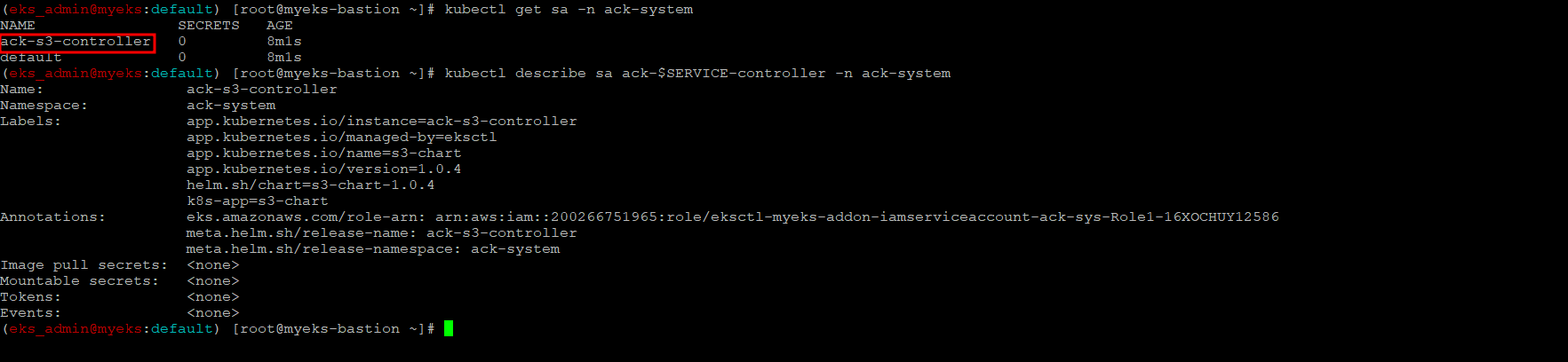

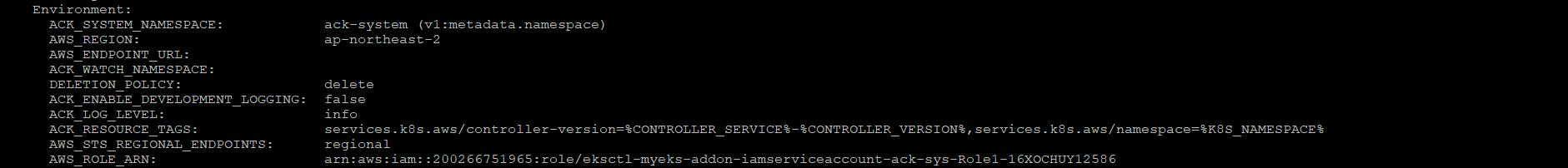

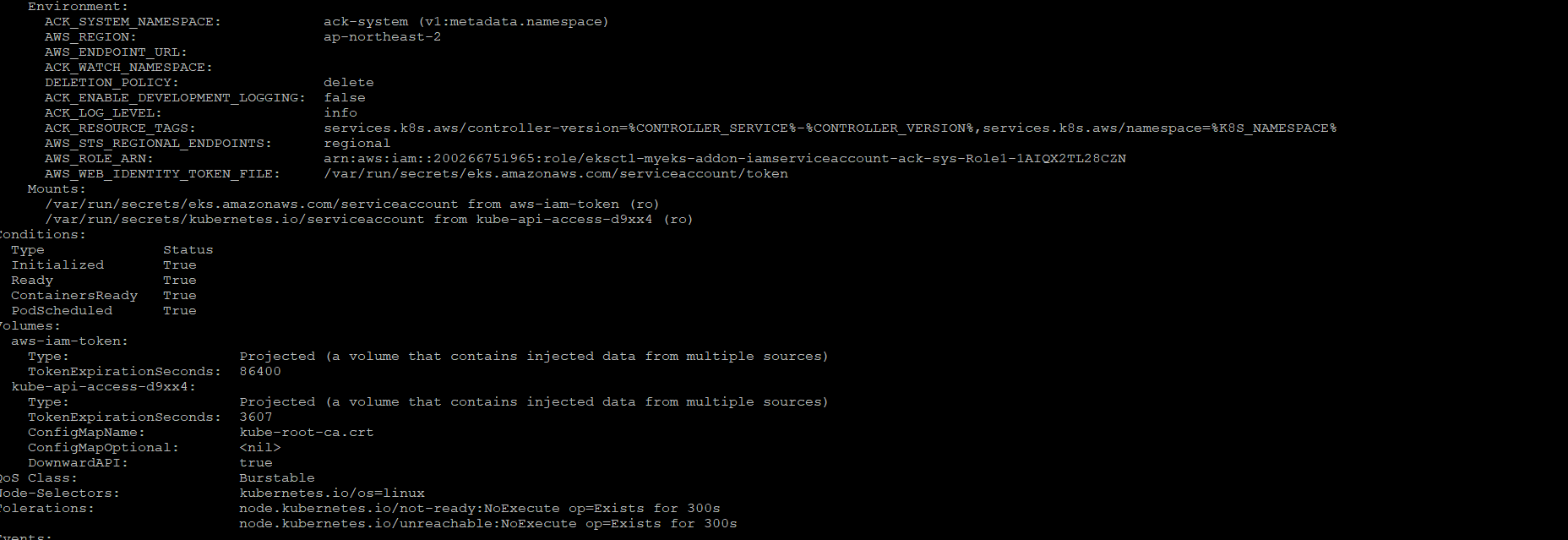

Check the volumes and environment variables that IRSA injected automatically

$> kubectl describe pod -n ack-system -l k8s-app=$SERVICE-chart

Creating, updating, and deleting an S3 bucket

Create the manifest for the S3 bucket

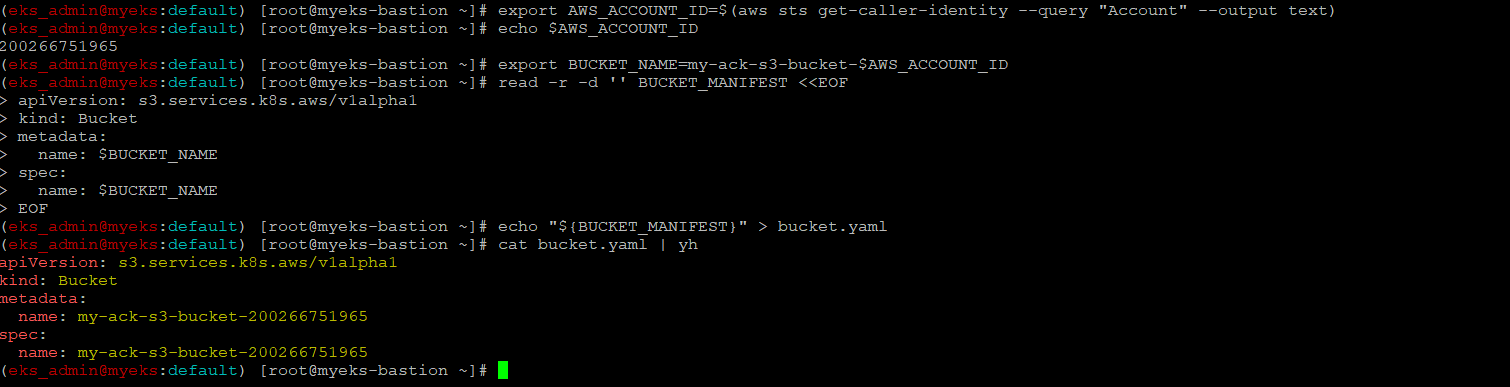

$> export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query "Account" --output text)

$> export BUCKET_NAME=my-ack-s3-bucket-$AWS_ACCOUNT_ID

$> read -r -d '' BUCKET_MANIFEST <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
EOF

$> echo "${BUCKET_MANIFEST}" > bucket.yaml

$> cat bucket.yaml | yh
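The manifest above relies on the shell expanding `$BUCKET_NAME` inside an unquoted here-document. The following is a minimal sketch of the same idea that writes the file directly with `cat`, using a made-up account ID and a temporary file so it runs without AWS credentials. One detail worth knowing: `read -d ''` reads until a NUL byte, so it returns a non-zero status when the here-document ends, which matters if the commands are ever run under `set -e`.

```shell
# Hypothetical 12-digit account ID, used only so the sketch runs offline.
AWS_ACCOUNT_ID=111122223333
BUCKET_NAME="my-ack-s3-bucket-$AWS_ACCOUNT_ID"
MANIFEST_FILE=$(mktemp)   # the walkthrough writes to bucket.yaml instead

# Unquoted EOF: the shell substitutes $BUCKET_NAME before writing the file.
# YAML nesting is expressed by the two-space indentation under metadata/spec.
cat <<EOF > "$MANIFEST_FILE"
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
EOF

cat "$MANIFEST_FILE"
```

`metadata.name` is the name of the Kubernetes object, while `spec.name` is the name of the bucket in S3; the walkthrough simply uses the same value for both.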

Create the S3 bucket

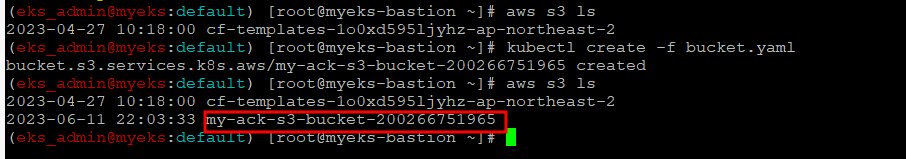

$> aws s3 ls

$> kubectl create -f bucket.yaml

$> aws s3 ls

Check the S3 bucket

$> aws s3 ls

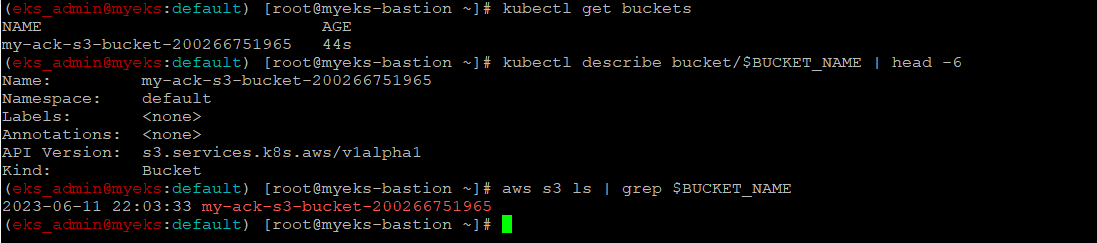

$> kubectl get buckets

$> kubectl describe bucket/$BUCKET_NAME | head -6

$> aws s3 ls | grep $BUCKET_NAME

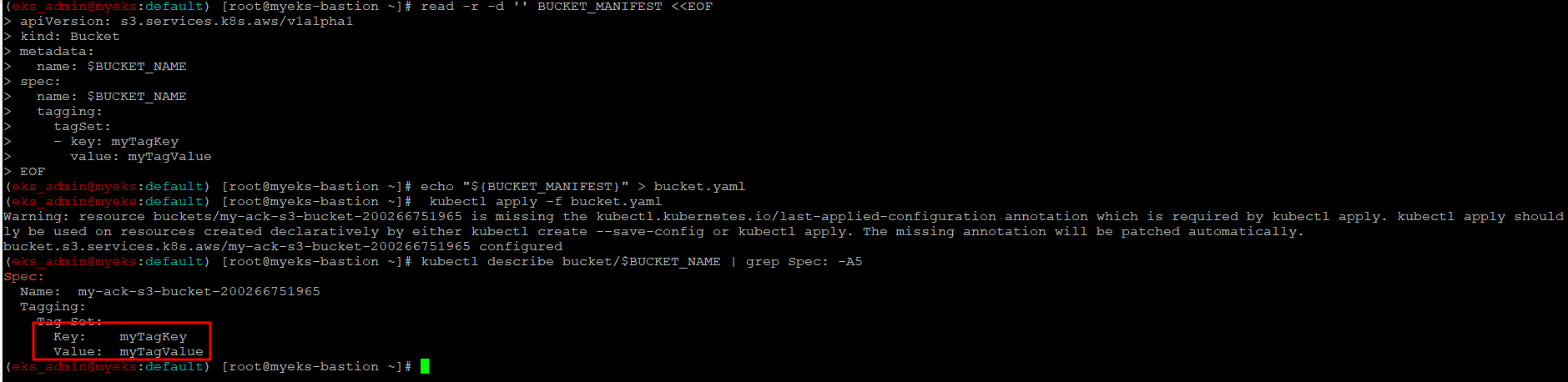

Update the S3 bucket: add tags

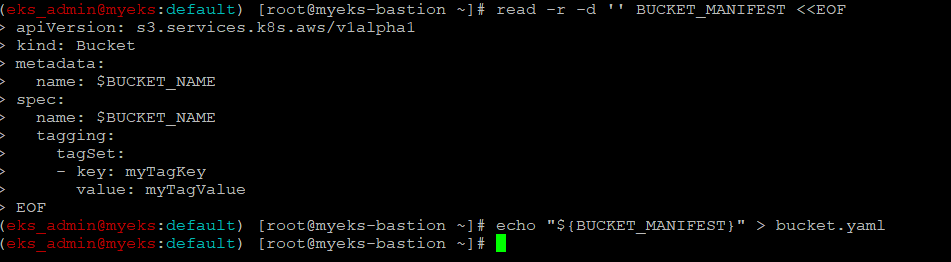

$> read -r -d '' BUCKET_MANIFEST <<EOF
apiVersion: s3.services.k8s.aws/v1alpha1
kind: Bucket
metadata:
  name: $BUCKET_NAME
spec:
  name: $BUCKET_NAME
  tagging:
    tagSet:
    - key: myTagKey
      value: myTagValue
EOF

$> echo "${BUCKET_MANIFEST}" > bucket.yaml

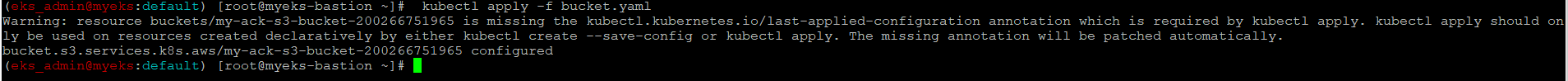

Apply the updated bucket configuration

$> kubectl apply -f bucket.yaml

For the difference between kubectl apply and kubectl create, see: https://may9noy.tistory.com/302

Check the S3 bucket

$> kubectl describe bucket/$BUCKET_NAME | grep Spec: -A5

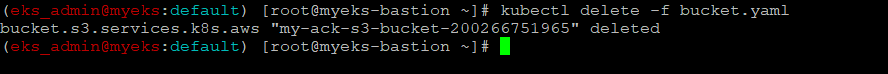

Delete the S3 bucket

$> kubectl delete -f bucket.yaml

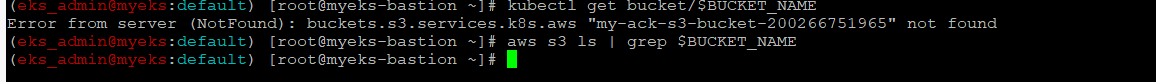

Confirm the deletion

$> kubectl get bucket/$BUCKET_NAME

$> aws s3 ls | grep $BUCKET_NAME

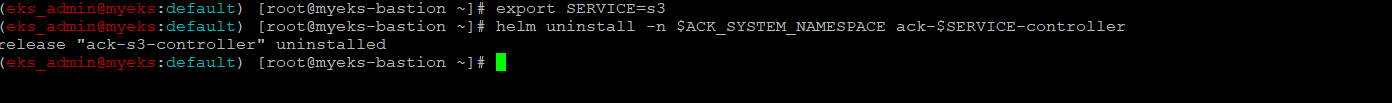

Removing the ACK S3 controller

Uninstall the ACK S3 controller Helm release

$> export SERVICE=s3

$> helm uninstall -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller

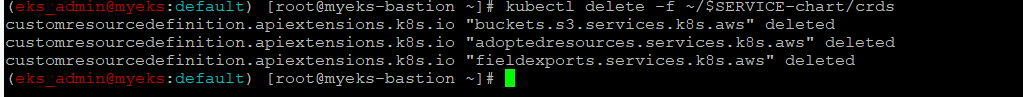

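Delete the CRDs of the ACK S3 controller

?> Wouldn't uninstalling the Helm chart delete these automatically? (Helm does not remove CRDs that were installed from a chart's crds/ directory when the release is uninstalled, so they are deleted manually here.)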

$> kubectl delete -f ~/$SERVICE-chart/crds

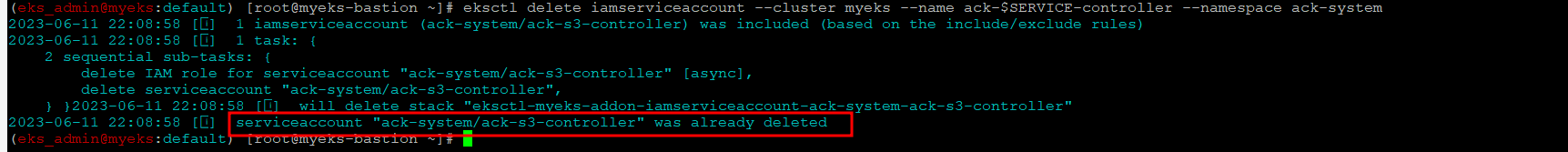

Delete the IRSA service account

$> eksctl delete iamserviceaccount --cluster myeks --name ack-$SERVICE-controller --namespace ack-system

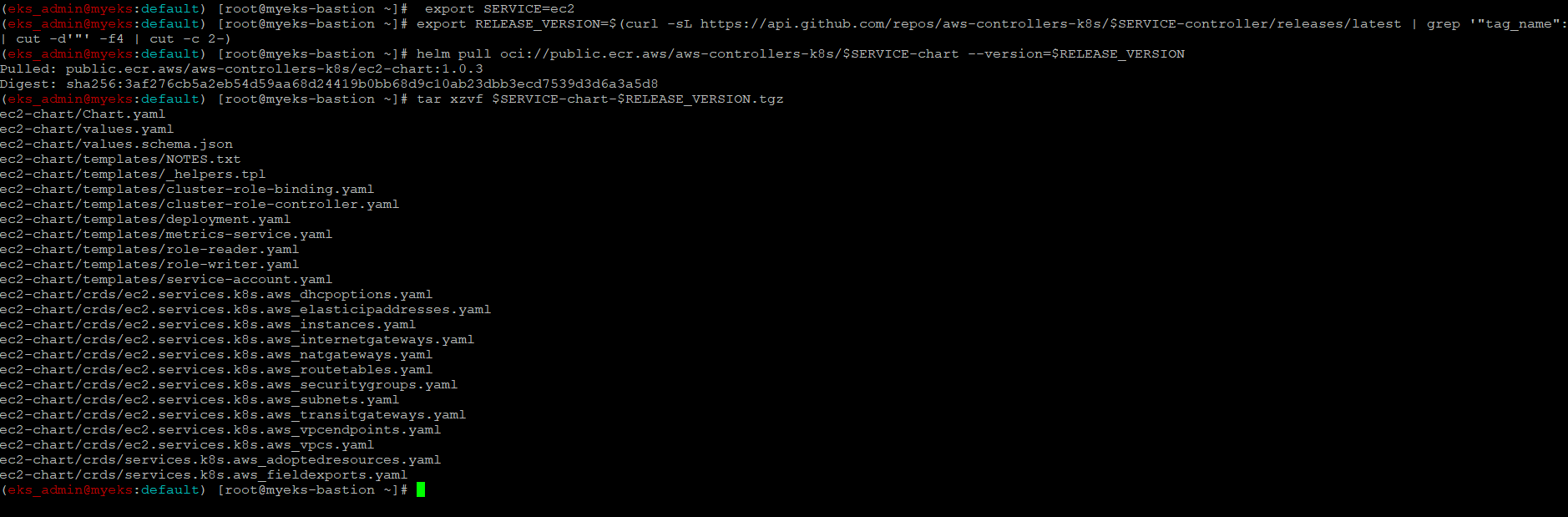

Hands-on: VPC and subnets with the EC2 controller

Installing the ACK EC2 controller

Set the service name variable and download the Helm chart

$> export SERVICE=ec2

$> export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$> helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$> tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

Check the Helm chart

$> tree ~/$SERVICE-chart

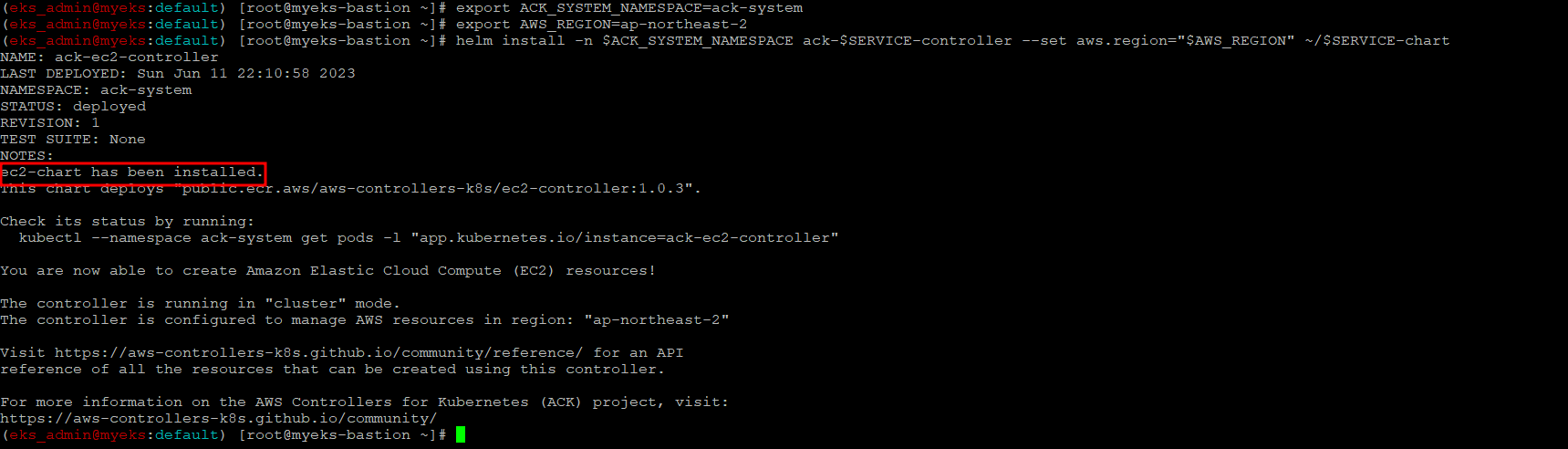

Deploy the ACK EC2 controller

$> export ACK_SYSTEM_NAMESPACE=ack-system

$> export AWS_REGION=ap-northeast-2

$> helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

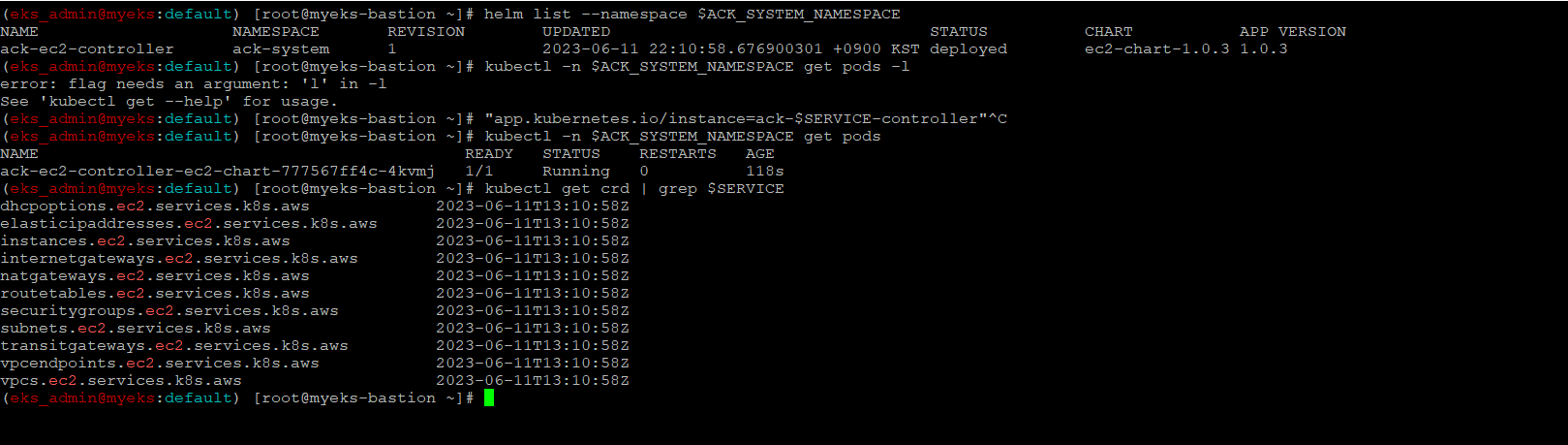

Verify the deployment

$> helm list --namespace $ACK_SYSTEM_NAMESPACE

$> kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

$> kubectl get crd | grep $SERVICE

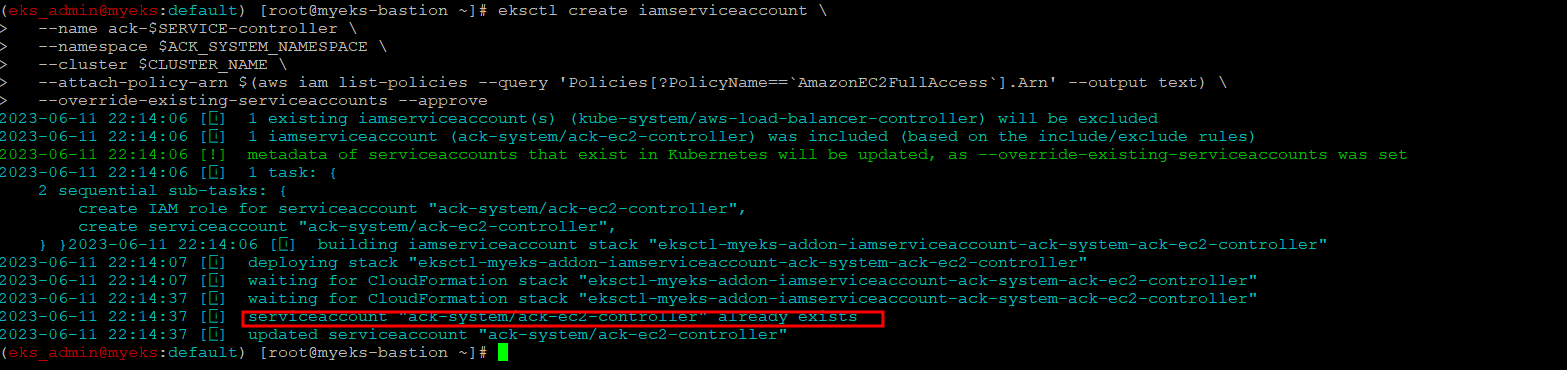

IRSA setup

Create an iamserviceaccount

$> eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace $ACK_SYSTEM_NAMESPACE \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonEC2FullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

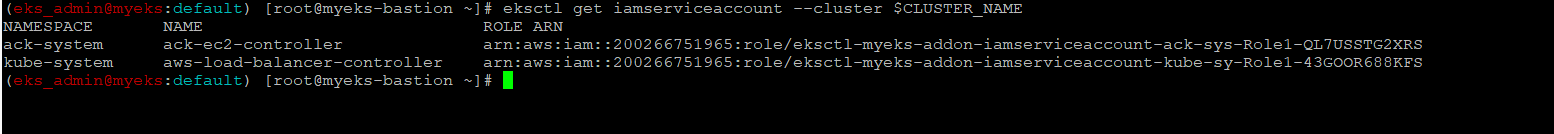

Verify that the IAM role was created

$> eksctl get iamserviceaccount --cluster $CLUSTER_NAME

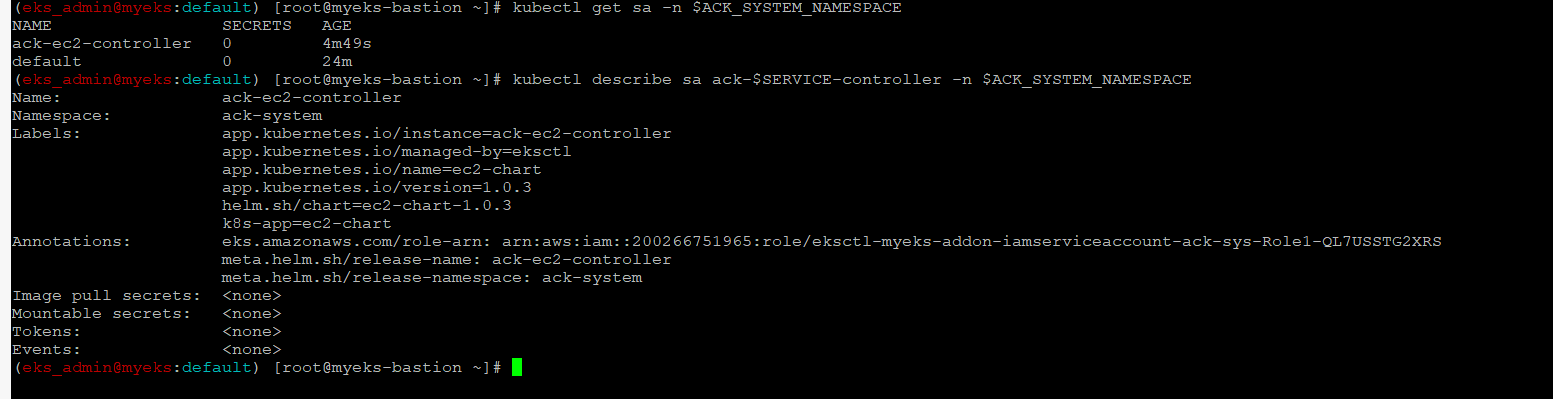

Verify that the service account was created

$> kubectl get sa -n $ACK_SYSTEM_NAMESPACE

$> kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

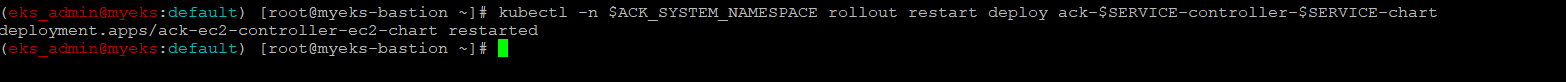

Restart ACK service controller deployment

$> kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

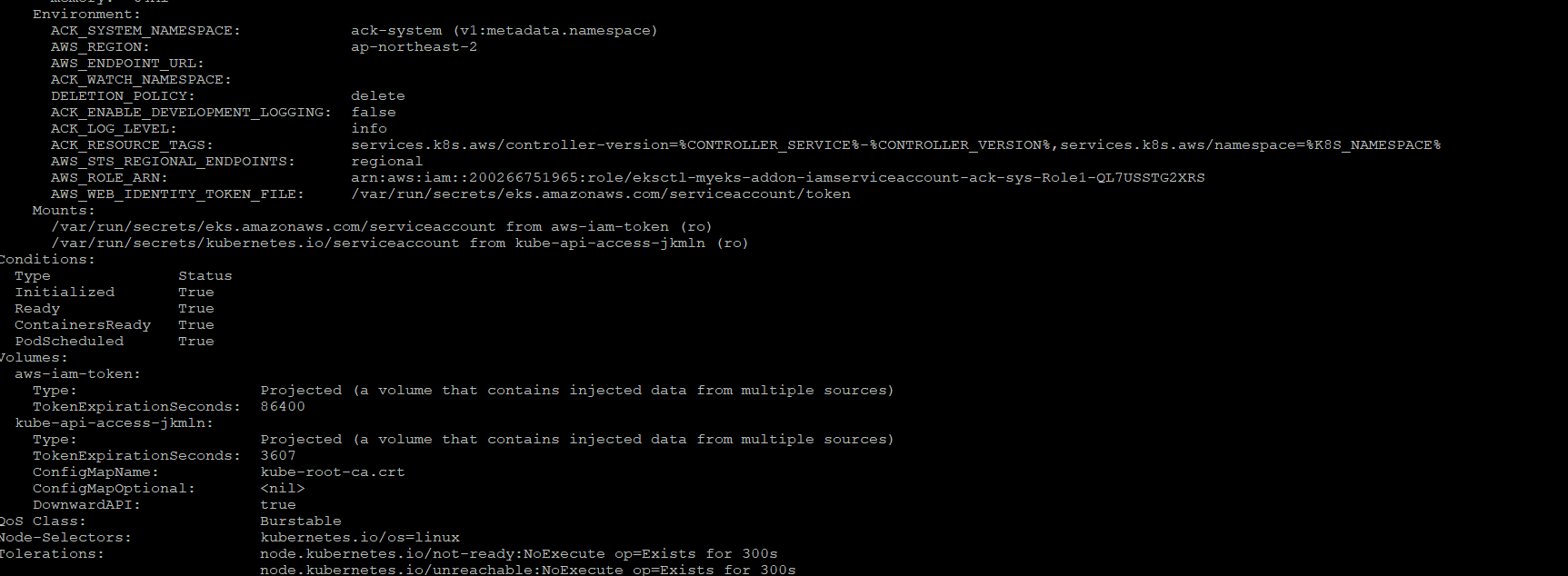

Check the environment variables and volumes added by IRSA

$> kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

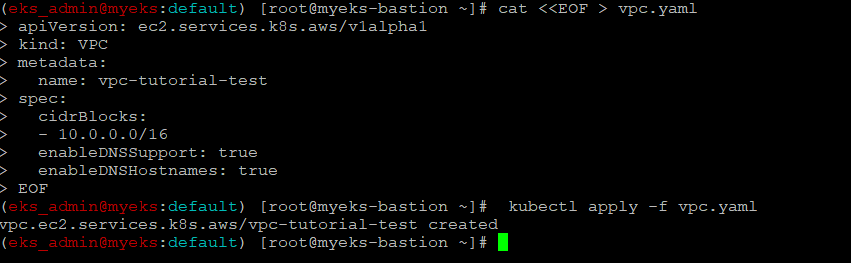

Creating and deleting a VPC and subnet

Create the VPC

$> cat <<EOF > vpc.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: VPC
metadata:
  name: vpc-tutorial-test
spec:
  cidrBlocks:
  - 10.0.0.0/16
  enableDNSSupport: true
  enableDNSHostnames: true
EOF

$> kubectl apply -f vpc.yaml

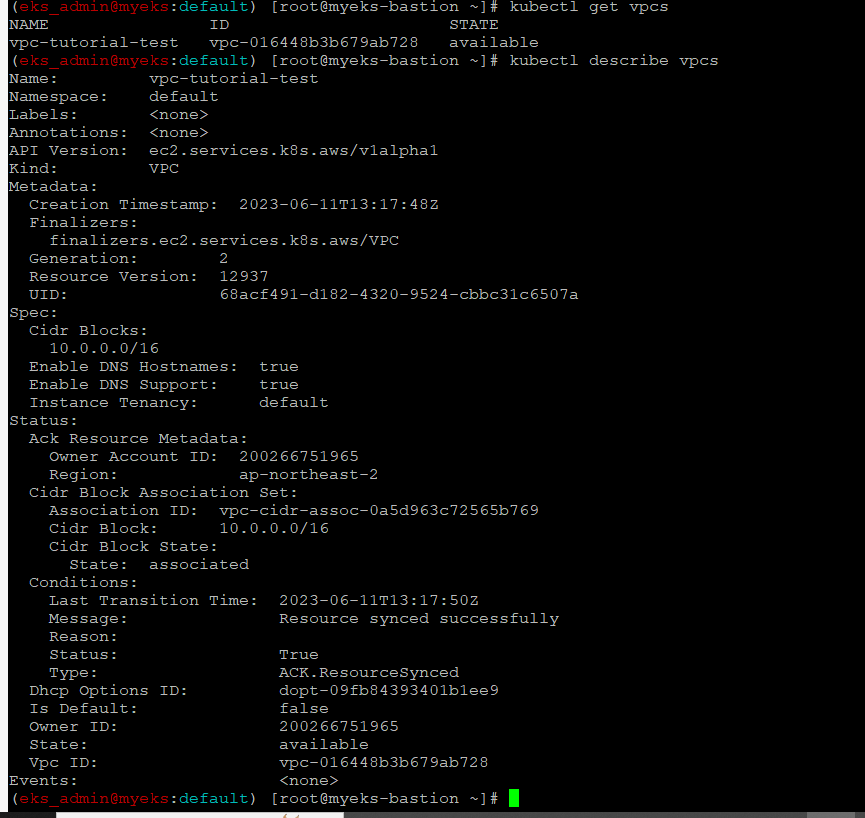

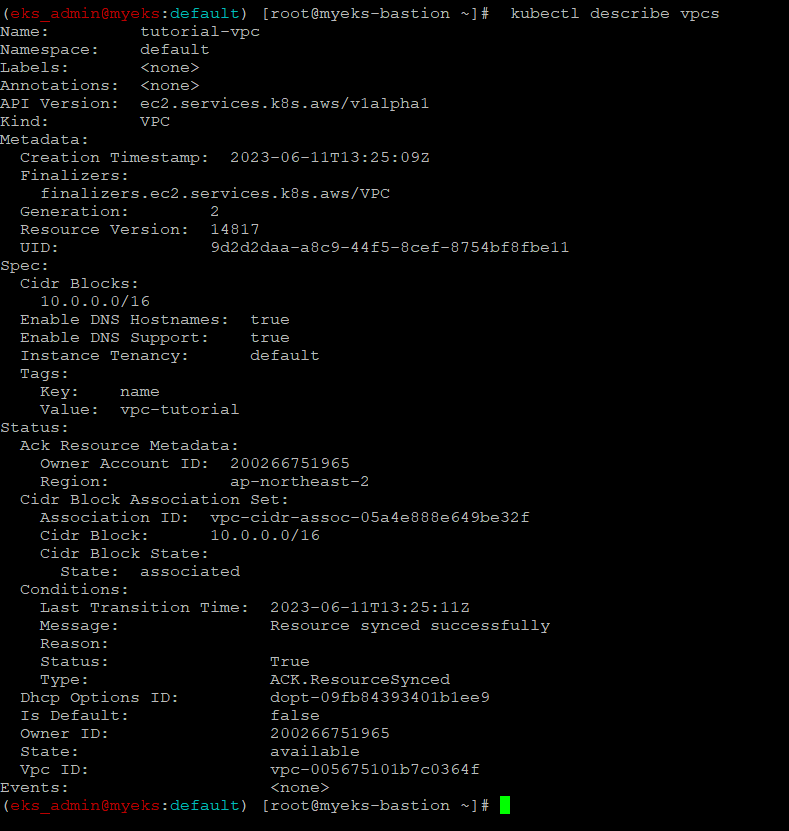

Verify that the VPC was created

$> kubectl get vpcs

$> kubectl describe vpcs

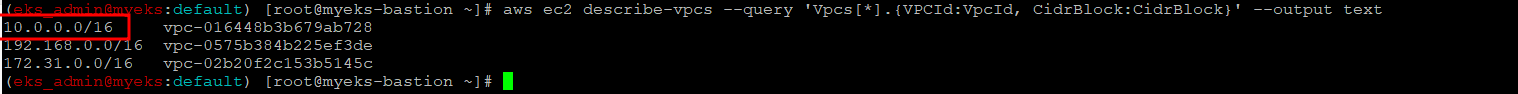

$> aws ec2 describe-vpcs --query 'Vpcs[*].{VPCId:VpcId, CidrBlock:CidrBlock}' --output text

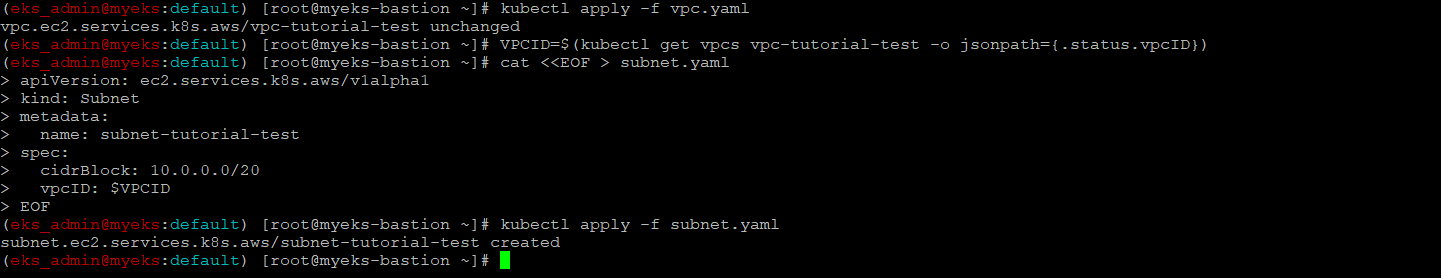

Create the subnet

$> VPCID=$(kubectl get vpcs vpc-tutorial-test -o jsonpath={.status.vpcID})

$> cat <<EOF > subnet.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: subnet-tutorial-test
spec:
  cidrBlock: 10.0.0.0/20
  vpcID: $VPCID
EOF

$> kubectl apply -f subnet.yaml
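The subnet manifest depends on `$VPCID`, which is empty if the VPC has not been reconciled yet when the `jsonpath` query runs. A minimal sketch of a guard around the manifest rendering follows; `render_subnet` is a hypothetical helper and the VPC ID is a placeholder, since in the walkthrough the value comes from `.status.vpcID`.

```shell
# Print the Subnet manifest for a given VPC ID, refusing to render
# a manifest with an empty vpcID.
render_subnet() {
  vpc_id="$1"
  if [ -z "$vpc_id" ]; then
    echo "VPC ID is empty; the VPC may not have been created yet" >&2
    return 1
  fi
  cat <<EOF
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Subnet
metadata:
  name: subnet-tutorial-test
spec:
  cidrBlock: 10.0.0.0/20
  vpcID: $vpc_id
EOF
}

# Placeholder ID for illustration only.
render_subnet "vpc-0123456789abcdef0"
```

In practice the output could be redirected to subnet.yaml, for example `render_subnet "$VPCID" > subnet.yaml`, and applied only if the function succeeds.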

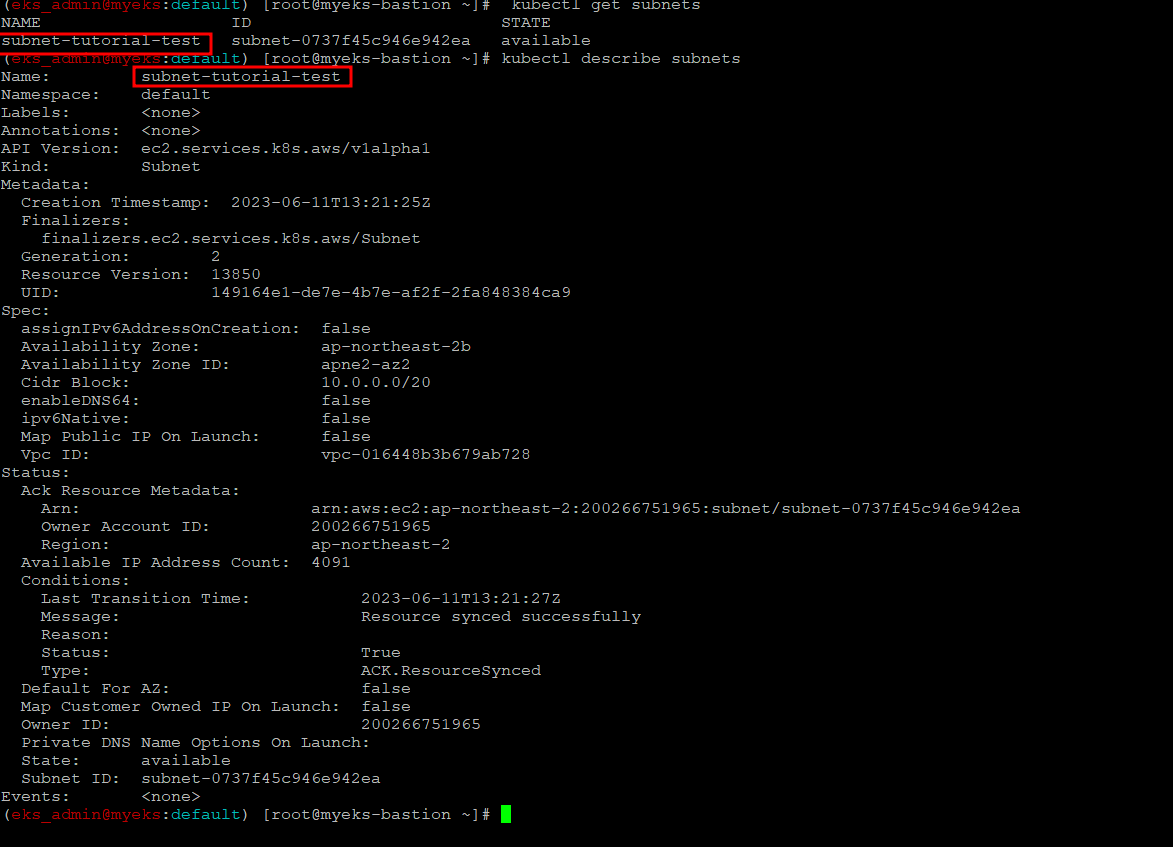

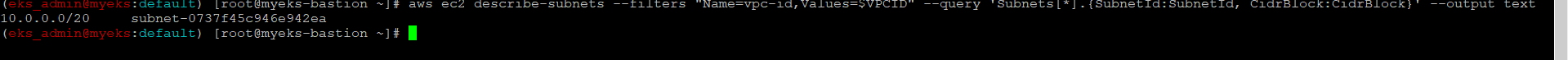

Verify that the subnet was created

$> kubectl get subnets

$> kubectl describe subnets

$> aws ec2 describe-subnets --filters "Name=vpc-id,Values=$VPCID" --query 'Subnets[*].{SubnetId:SubnetId, CidrBlock:CidrBlock}' --output text

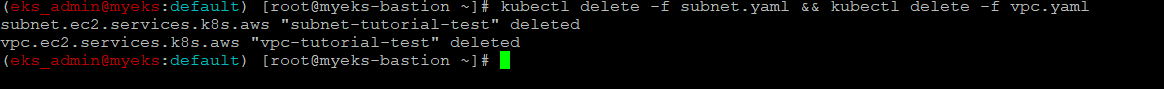

Delete the subnet and VPC

$> kubectl delete -f subnet.yaml && kubectl delete -f vpc.yaml

Create a VPC Workflow

This hands-on creates several VPC-related resources. The resource types and the number of each are listed below.

1 VPC

1 Instance

1 Internet Gateway

1 NAT Gateway

1 Elastic IP

2 Route Tables

2 Subnets (1 Public; 1 Private)

1 Security Group

Create everything except the EC2 instance: the VPC, Internet Gateway, NAT Gateway, Elastic IP, Route Tables, Subnets (1 public, 1 private), and Security Group

$> cat <<EOF > vpc-workflow.yaml

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: VPC

metadata:

name: tutorial-vpc

spec:

cidrBlocks:

- 10.0.0.0/16

enableDNSSupport: true

enableDNSHostnames: true

tags:

- key: name

value: vpc-tutorial

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: InternetGateway

metadata:

name: tutorial-igw

spec:

vpcRef:

from:

name: tutorial-vpc

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: NATGateway

metadata:

name: tutorial-natgateway1

spec:

subnetRef:

from:

name: tutorial-public-subnet1

allocationRef:

from:

name: tutorial-eip1

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: ElasticIPAddress

metadata:

name: tutorial-eip1

spec:

tags:

- key: name

value: eip-tutorial

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: RouteTable

metadata:

name: tutorial-public-route-table

spec:

vpcRef:

from:

name: tutorial-vpc

routes:

- destinationCIDRBlock: 0.0.0.0/0

gatewayRef:

from:

name: tutorial-igw

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: RouteTable

metadata:

name: tutorial-private-route-table-az1

spec:

vpcRef:

from:

name: tutorial-vpc

routes:

- destinationCIDRBlock: 0.0.0.0/0

natGatewayRef:

from:

name: tutorial-natgateway1

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Subnet

metadata:

name: tutorial-public-subnet1

spec:

availabilityZone: ap-northeast-2a

cidrBlock: 10.0.0.0/20

mapPublicIPOnLaunch: true

vpcRef:

from:

name: tutorial-vpc

routeTableRefs:

- from:

name: tutorial-public-route-table

---

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: Subnet

metadata:

name: tutorial-private-subnet1

spec:

availabilityZone: ap-northeast-2a

cidrBlock: 10.0.128.0/20

vpcRef:

from:

name: tutorial-vpc

routeTableRefs:

- from:

name: tutorial-private-route-table-az1

---

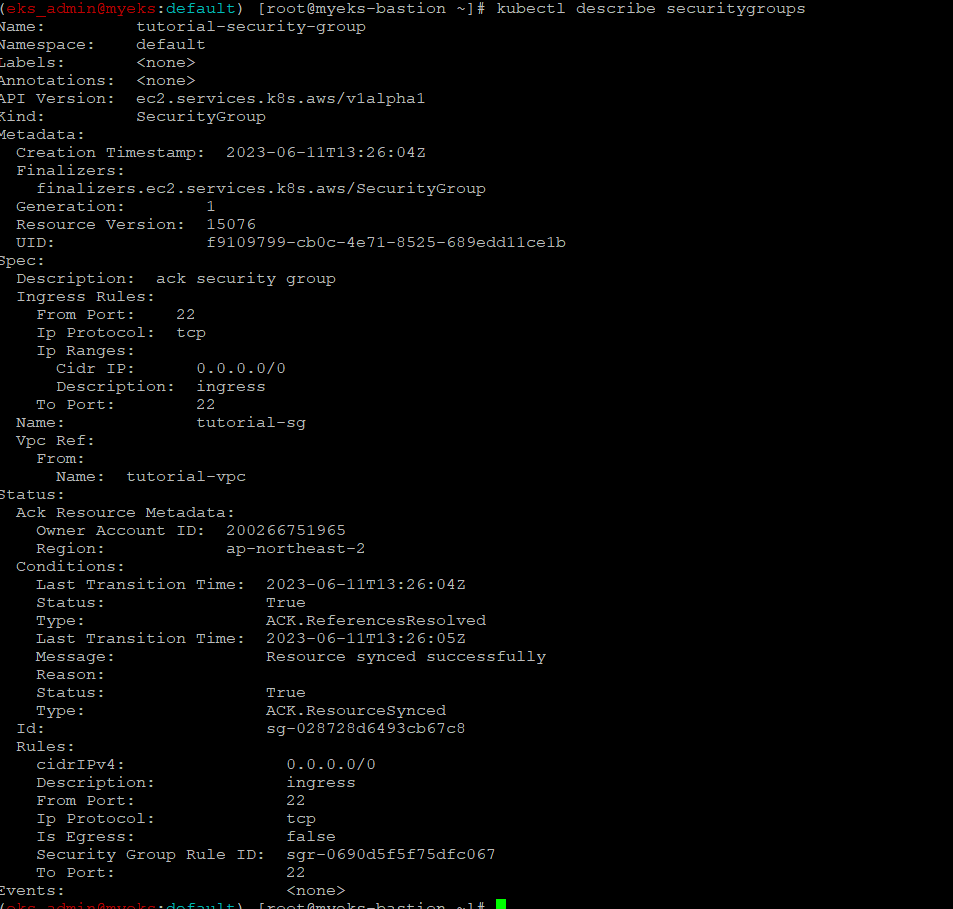

apiVersion: ec2.services.k8s.aws/v1alpha1

kind: SecurityGroup

metadata:

name: tutorial-security-group

spec:

description: "ack security group"

name: tutorial-sg

vpcRef:

from:

name: tutorial-vpc

ingressRules:

- ipProtocol: tcp

fromPort: 22

toPort: 22

ipRanges:

- cidrIP: "0.0.0.0/0"

description: "ingress"

EOF

$> kubectl apply -f vpc-workflow.yaml

Creating the AWS resources above takes about 5 minutes.

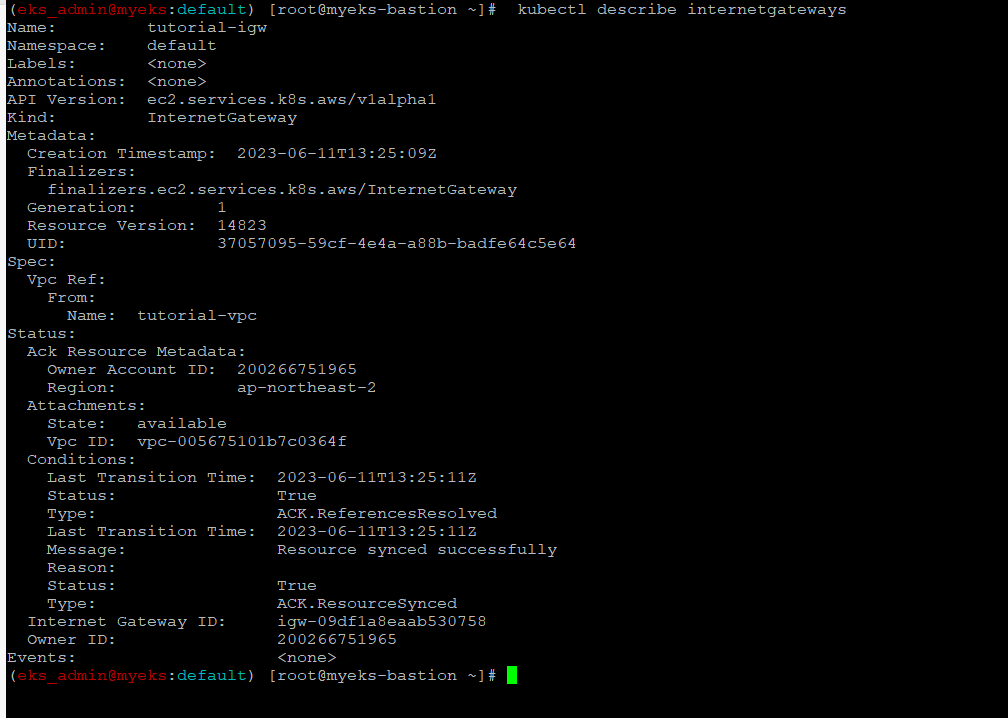

Verify that each AWS resource was created

$> kubectl describe vpcs

$> kubectl describe internetgateways

$> kubectl describe routetables

$> kubectl describe natgateways

$> kubectl describe elasticipaddresses

$> kubectl describe securitygroups

Creating an instance in the public subnet

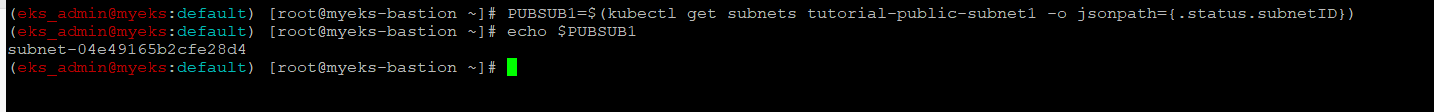

Look up the public subnet ID

$> PUBSUB1=$(kubectl get subnets tutorial-public-subnet1 -o jsonpath={.status.subnetID})

$> echo $PUBSUB1

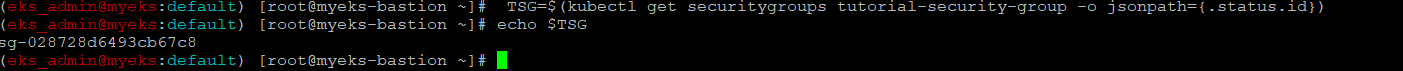

Look up the security group ID

$> TSG=$(kubectl get securitygroups tutorial-security-group -o jsonpath={.status.id})

$> echo $TSG

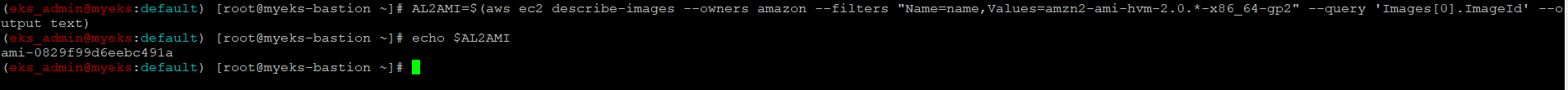

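Look up the latest Amazon Linux 2 AMI ID (the results are sorted by creation date, because describe-images does not return images in a guaranteed order)

$> AL2AMI=$(aws ec2 describe-images --owners amazon --filters "Name=name,Values=amzn2-ami-hvm-2.0.*-x86_64-gp2" --query 'sort_by(Images, &CreationDate)[-1].ImageId' --output text)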

$> echo $AL2AMI

Set the SSH key pair name variable

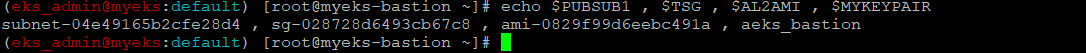

$> MYKEYPAIR=aeks_bastion

Check the variables that have been set

$> echo $PUBSUB1 , $TSG , $AL2AMI , $MYKEYPAIR

Create the instance

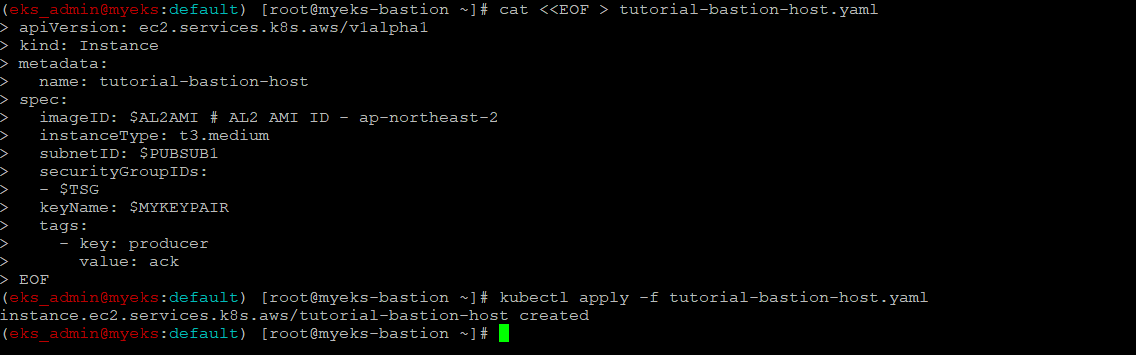

$> cat <<EOF > tutorial-bastion-host.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Instance
metadata:
  name: tutorial-bastion-host
spec:
  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2
  instanceType: t3.medium
  subnetID: $PUBSUB1
  securityGroupIDs:
  - $TSG
  keyName: $MYKEYPAIR
  tags:
  - key: producer
    value: ack
EOF

$> kubectl apply -f tutorial-bastion-host.yaml

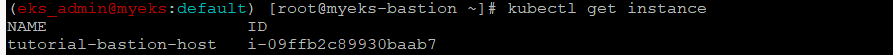

Verify that the instance was created

$> kubectl get instance

$> kubectl describe instance

$> aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

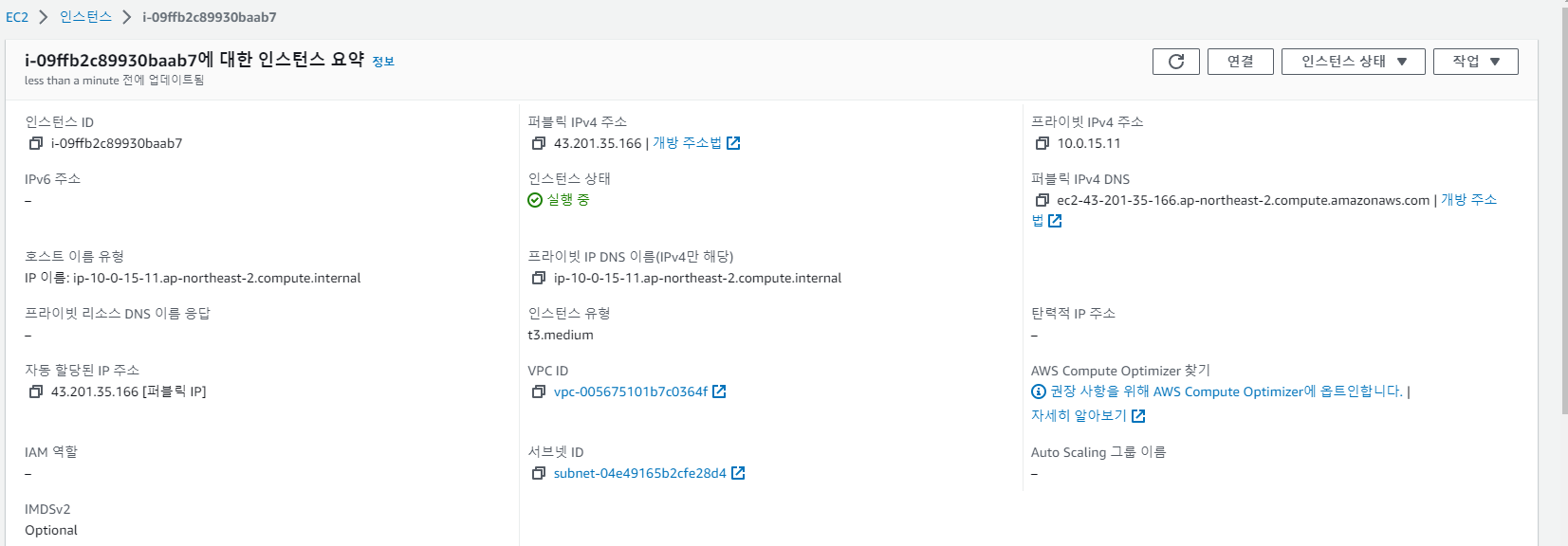

The AWS web console shows the following information.

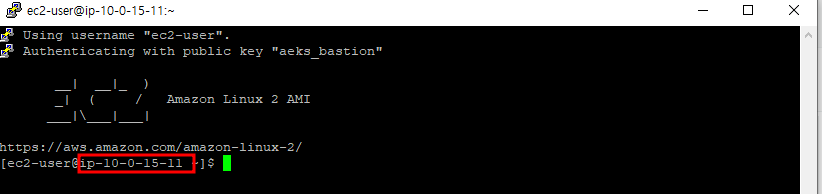

Connecting to the instance

Creating an instance in the private subnet

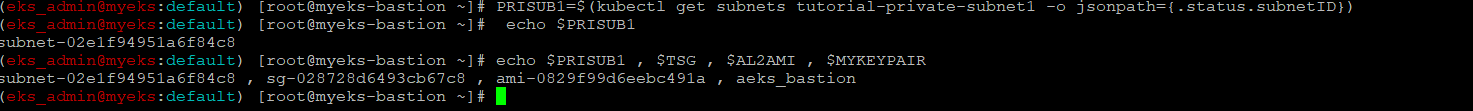

Look up the private subnet ID

$> PRISUB1=$(kubectl get subnets tutorial-private-subnet1 -o jsonpath={.status.subnetID})

$> echo $PRISUB1

$> echo $PRISUB1 , $TSG , $AL2AMI , $MYKEYPAIR

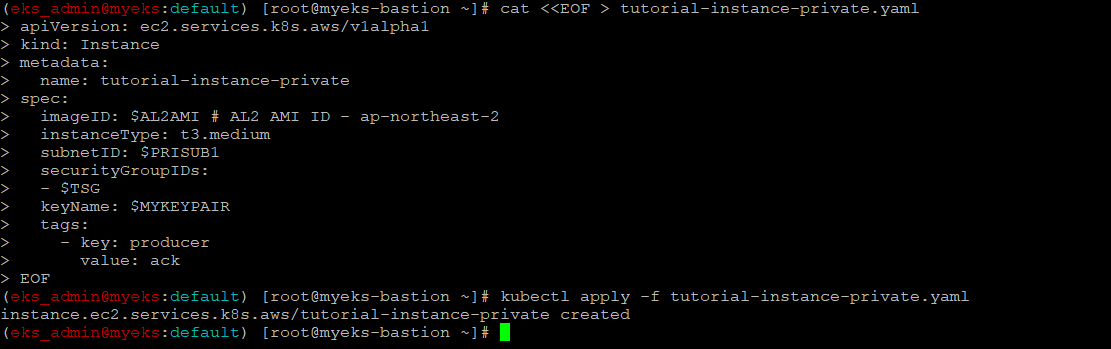

Create the instance in the private subnet

$> cat <<EOF > tutorial-instance-private.yaml
apiVersion: ec2.services.k8s.aws/v1alpha1
kind: Instance
metadata:
  name: tutorial-instance-private
spec:
  imageID: $AL2AMI # AL2 AMI ID - ap-northeast-2
  instanceType: t3.medium
  subnetID: $PRISUB1
  securityGroupIDs:
  - $TSG
  keyName: $MYKEYPAIR
  tags:
  - key: producer
    value: ack
EOF

$> kubectl apply -f tutorial-instance-private.yaml

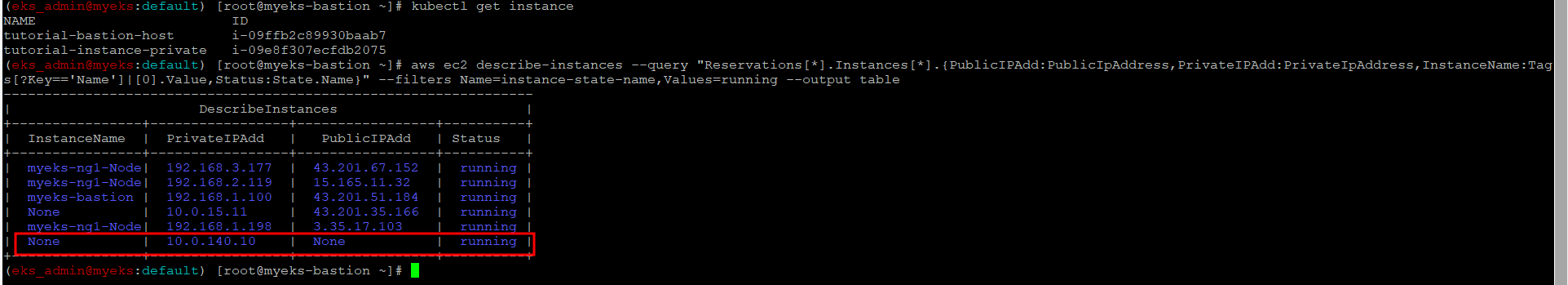

Verify that the instance was created

$> kubectl get instance

$> kubectl describe instance

$> aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

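Set up an SSH tunnel through the instance in the public subnet

$> ssh -i <your key pair file> -L <a local port of your choice>:<private IP of the instance in the private subnet>:22 ec2-user@<public IP of the instance in the public subnet> -v

Verify the connection

I tried to verify the connection with PuTTY, but it failed.

$> ssh -i <your key pair file> -p <the local port chosen above> ec2-user@localhost

Delete the resources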

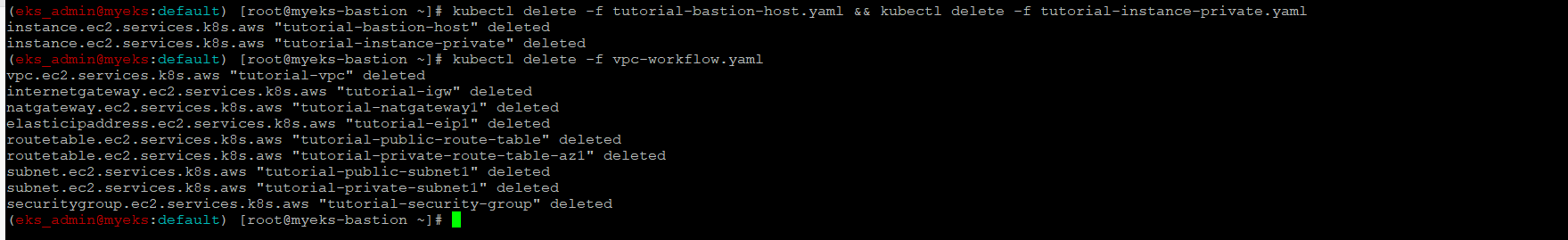

$> kubectl delete -f tutorial-bastion-host.yaml && kubectl delete -f tutorial-instance-private.yaml

$> kubectl delete -f vpc-workflow.yaml

Hands-on: RDS

Install the ACK RDS controller, create an RDS database through it, and then verify the connection to RDS.

Installing the ACK RDS controller with Helm

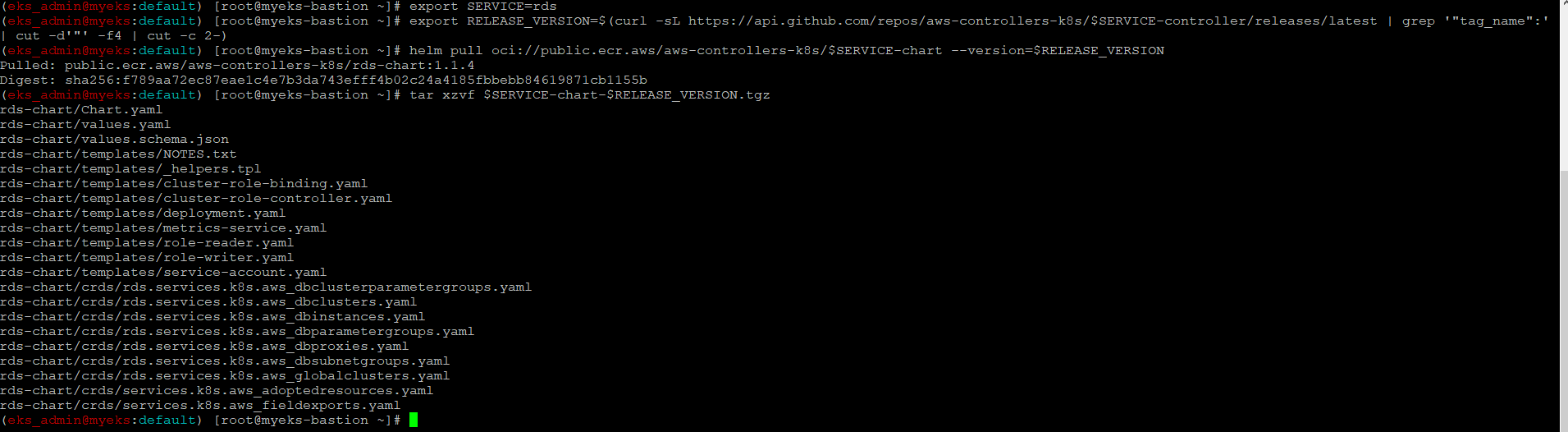

Set the service name variable and download the Helm chart

$> export SERVICE=rds

$> export RELEASE_VERSION=$(curl -sL https://api.github.com/repos/aws-controllers-k8s/$SERVICE-controller/releases/latest | grep '"tag_name":' | cut -d'"' -f4 | cut -c 2-)

$> helm pull oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart --version=$RELEASE_VERSION

$> tar xzvf $SERVICE-chart-$RELEASE_VERSION.tgz

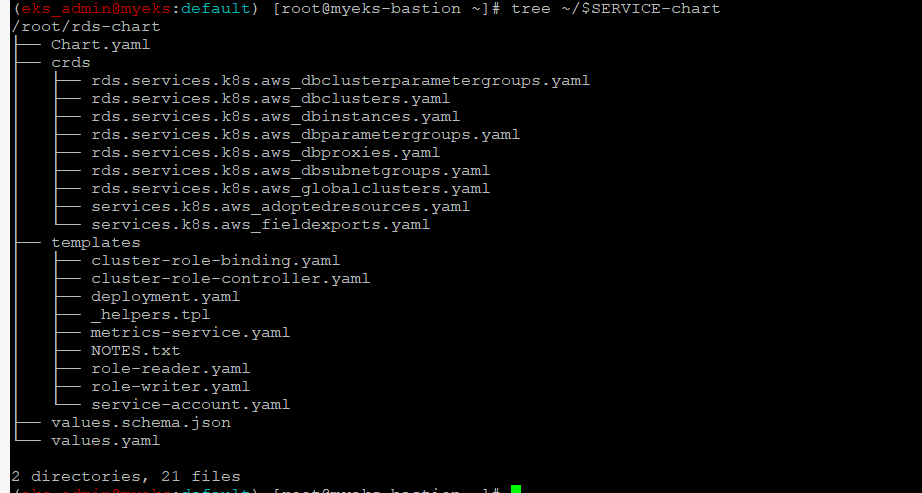

Check the Helm chart

$> tree ~/$SERVICE-chart

Deploy the ACK RDS controller

$> export ACK_SYSTEM_NAMESPACE=ack-system

$> export AWS_REGION=ap-northeast-2

$> helm install -n $ACK_SYSTEM_NAMESPACE ack-$SERVICE-controller --set aws.region="$AWS_REGION" ~/$SERVICE-chart

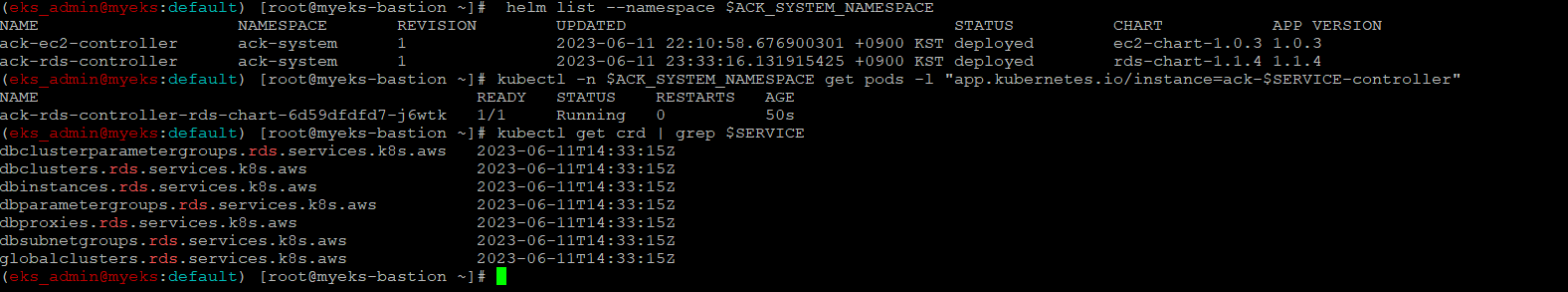

Verify the deployment

$> helm list --namespace $ACK_SYSTEM_NAMESPACE

$> kubectl -n $ACK_SYSTEM_NAMESPACE get pods -l "app.kubernetes.io/instance=ack-$SERVICE-controller"

$> kubectl get crd | grep $SERVICE

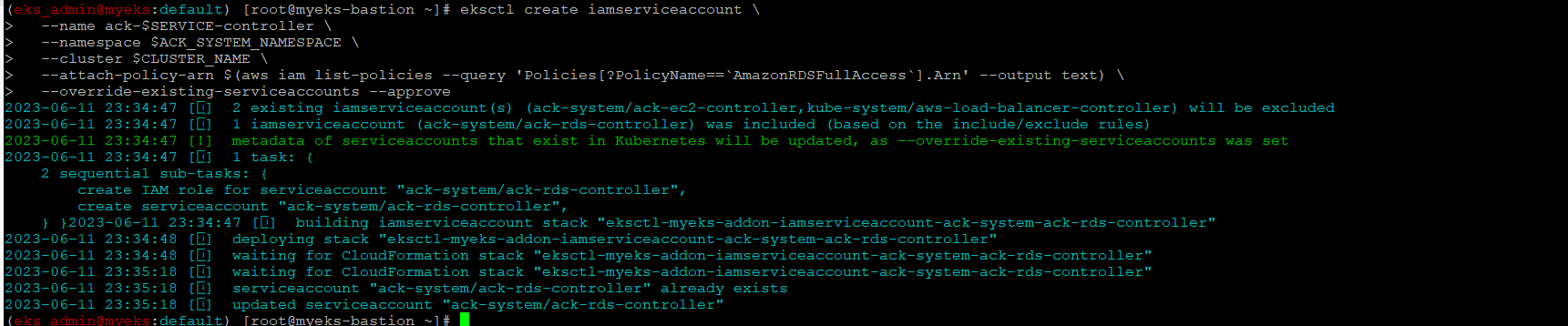

IRSA setup: the recommended policy is AmazonRDSFullAccess

Create an iamserviceaccount

$> eksctl create iamserviceaccount \
  --name ack-$SERVICE-controller \
  --namespace $ACK_SYSTEM_NAMESPACE \
  --cluster $CLUSTER_NAME \
  --attach-policy-arn $(aws iam list-policies --query 'Policies[?PolicyName==`AmazonRDSFullAccess`].Arn' --output text) \
  --override-existing-serviceaccounts --approve

Verify that the IAM role was created

$> eksctl get iamserviceaccount --cluster $CLUSTER_NAME

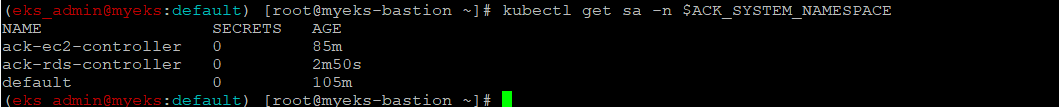

Verify that the service account was created

$> kubectl get sa -n $ACK_SYSTEM_NAMESPACE

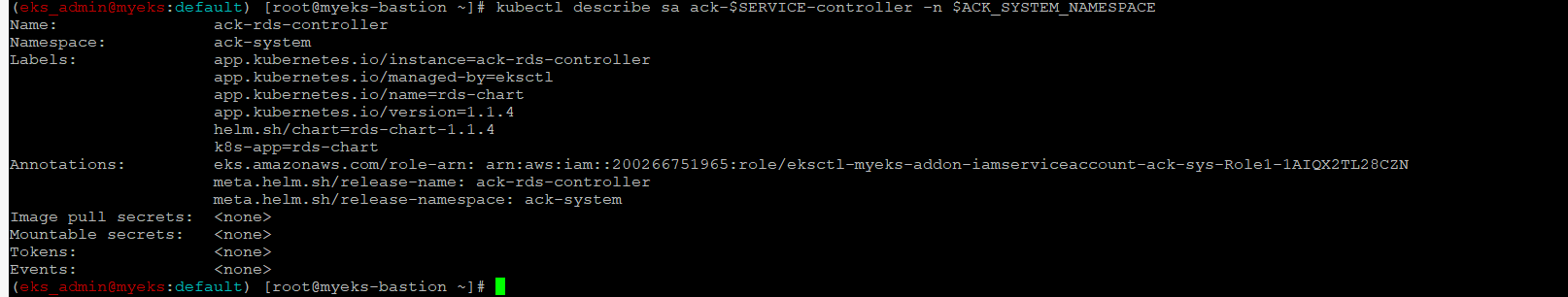

$> kubectl describe sa ack-$SERVICE-controller -n $ACK_SYSTEM_NAMESPACE

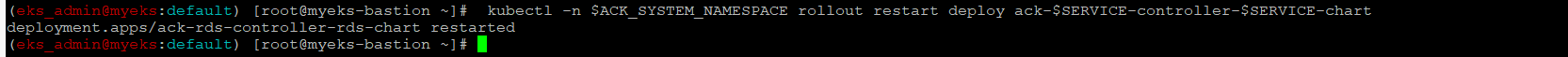

Restart ACK service controller deployment for applying new role

$> kubectl -n $ACK_SYSTEM_NAMESPACE rollout restart deploy ack-$SERVICE-controller-$SERVICE-chart

Check the environment variables and volumes added by IRSA

$> kubectl describe pod -n $ACK_SYSTEM_NAMESPACE -l k8s-app=$SERVICE-chart

Creating an Amazon RDS for MariaDB instance

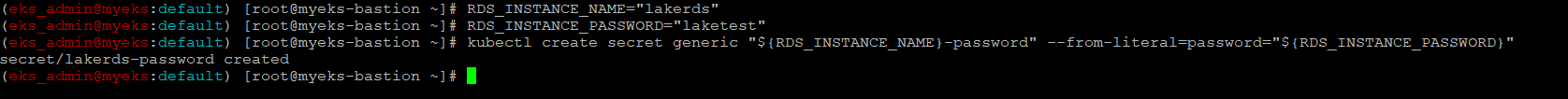

Create a secret for the DB password

$> RDS_INSTANCE_NAME="lakerds"

$> RDS_INSTANCE_PASSWORD="laketest"

$> kubectl create secret generic "${RDS_INSTANCE_NAME}-password" --from-literal=password="${RDS_INSTANCE_PASSWORD}"

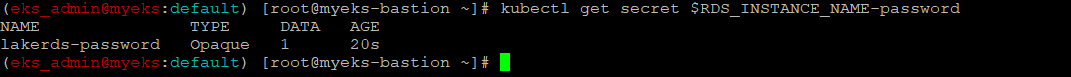

Verify that the secret was created

$> kubectl get secret $RDS_INSTANCE_NAME-password

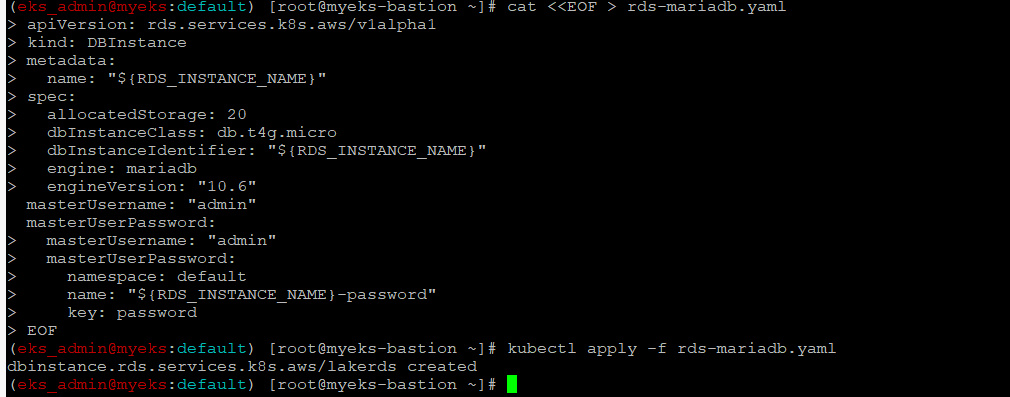

Deploy RDS

$> cat <<EOF > rds-mariadb.yaml
apiVersion: rds.services.k8s.aws/v1alpha1
kind: DBInstance
metadata:
  name: "${RDS_INSTANCE_NAME}"
spec:
  allocatedStorage: 20
  dbInstanceClass: db.t4g.micro
  dbInstanceIdentifier: "${RDS_INSTANCE_NAME}"
  engine: mariadb
  engineVersion: "10.6"
  masterUsername: "admin"
  masterUserPassword:
    namespace: default
    name: "${RDS_INSTANCE_NAME}-password"
    key: password
EOF

$> kubectl apply -f rds-mariadb.yaml

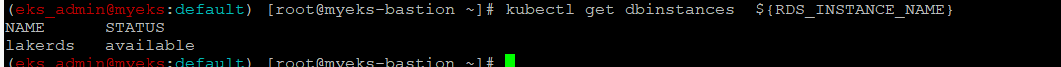

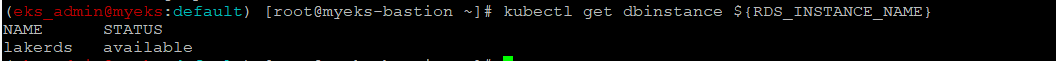

Verify that RDS was created

$> kubectl get dbinstances ${RDS_INSTANCE_NAME}

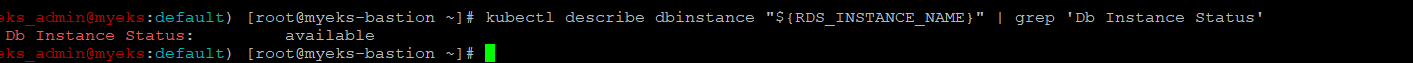

$> kubectl describe dbinstance "${RDS_INSTANCE_NAME}"

$> aws rds describe-db-instances --db-instance-identifier $RDS_INSTANCE_NAME | jq

$> kubectl describe dbinstance "${RDS_INSTANCE_NAME}" | grep 'Db Instance Status'
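Creating the database takes a while, so the status check above usually has to be repeated by hand. The following is a minimal sketch of a polling helper; `wait_until` and `fake_status` are hypothetical names, and `fake_status` stands in for the real query so the sketch runs without a cluster.

```shell
# wait_until WANT TRIES DELAY CMD...
# Re-run CMD until its output equals WANT, at most TRIES times,
# sleeping DELAY seconds between attempts.
wait_until() {
  want="$1"; tries="$2"; delay="$3"
  shift 3
  i=0
  while [ "$i" -lt "$tries" ]; do
    got=$("$@" 2>/dev/null)
    if [ "$got" = "$want" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Stand-in for the real status query: reports "creating" twice,
# then "available". A counter file keeps state across calls.
STATE_FILE=$(mktemp)
echo 0 > "$STATE_FILE"
fake_status() {
  n=$(cat "$STATE_FILE")
  echo $((n + 1)) > "$STATE_FILE"
  if [ "$n" -lt 2 ]; then echo creating; else echo available; fi
}

wait_until available 5 0 fake_status && echo "database is available"
```

Against a real cluster, the command could be something like `wait_until available 60 10 kubectl get dbinstance "$RDS_INSTANCE_NAME" -o jsonpath='{.status.dbInstanceStatus}'`, assuming that field is the one shown as "Db Instance Status" in the describe output.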

Connecting to the DB

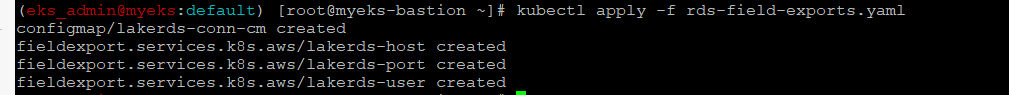

Create the FieldExport resources

FieldExport copies the value of a field (in Spec or Status) of an AWS resource into a ConfigMap or Secret.

When the field value of the AWS resource (more precisely, the ACK resource) changes, the change is reflected in the ConfigMap or Secret automatically.

Pods consume the values in the ConfigMap or Secret as environment variables.

For more detail, see: https://aws-controllers-k8s.github.io/community/docs/user-docs/field-export/

The definition below copies the DB connection details (DB host, port, and username) into a ConfigMap.

$> RDS_INSTANCE_CONN_CM="${RDS_INSTANCE_NAME}-conn-cm"

$> cat <<EOF > rds-field-exports.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: ${RDS_INSTANCE_CONN_CM}
data: {}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-host
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".status.endpoint.address"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-port
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".status.endpoint.port"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
---
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: ${RDS_INSTANCE_NAME}-user
spec:
  to:
    name: ${RDS_INSTANCE_CONN_CM}
    kind: configmap
  from:
    path: ".spec.masterUsername"
    resource:
      group: rds.services.k8s.aws
      kind: DBInstance
      name: ${RDS_INSTANCE_NAME}
EOF

$> kubectl apply -f rds-field-exports.yaml

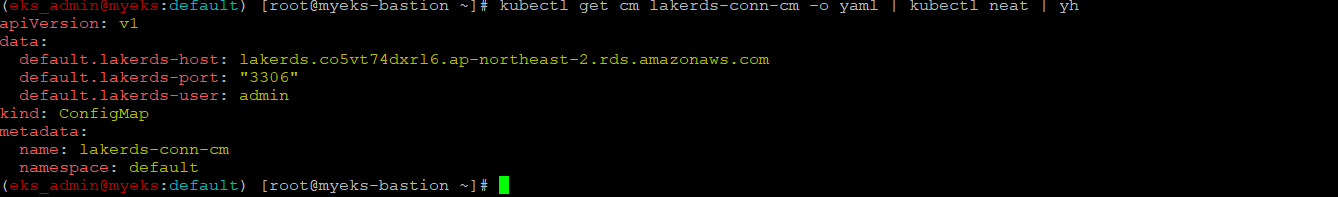

Check the ConfigMap

$> kubectl get cm lakerds-conn-cm -o yaml | kubectl neat | yh
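The keys written into the ConfigMap are not the bare FieldExport names. Judging from the Pod manifest used later in this walkthrough, each key has the form `<namespace>.<FieldExport name>`. A small sketch that prints the keys to expect, using the instance name and namespace from this walkthrough:

```shell
RDS_INSTANCE_NAME="lakerds"
APP_NAMESPACE="default"

# One key per FieldExport defined above: host, port, and user.
for field in host port user; do
  echo "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-${field}"
done

# The key that a Pod would reference for the DB host.
HOST_KEY="${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-host"
```

Comparing this list with the `data` section of the ConfigMap is a quick way to confirm that all three exports have been written.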

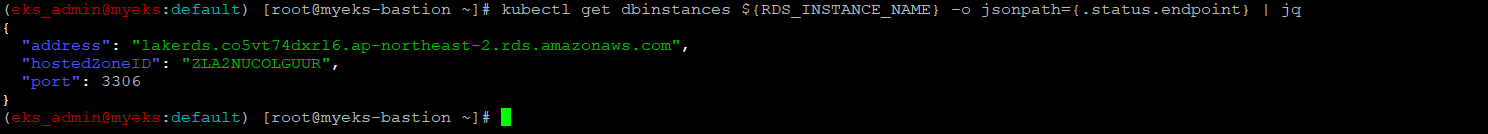

Check the RDS address and port

$> kubectl get dbinstances ${RDS_INSTANCE_NAME} -o jsonpath={.status.endpoint} | jq

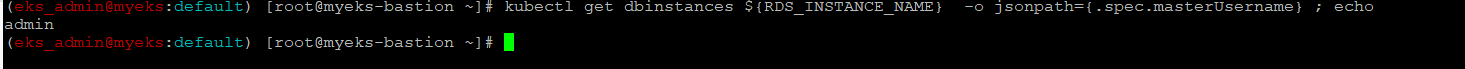

Check the master username

$> kubectl get dbinstances ${RDS_INSTANCE_NAME} -o jsonpath={.spec.masterUsername} ; echo

Deploying a Pod that connects to RDS

The Pod defines environment variables whose source is the ConfigMap populated by the FieldExport resources above.

Deploy the Pod

$> APP_NAMESPACE=default

$> cat <<EOF > rds-pods.yaml
apiVersion: v1
kind: Pod
metadata:
  name: app
  namespace: ${APP_NAMESPACE}
spec:
  containers:
  - image: busybox
    name: myapp
    command:
    - sleep
    - "3600"
    imagePullPolicy: IfNotPresent
    env:
    - name: DBHOST
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-host"
    - name: DBPORT
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-port"
    - name: DBUSER
      valueFrom:
        configMapKeyRef:
          name: ${RDS_INSTANCE_CONN_CM}
          key: "${APP_NAMESPACE}.${RDS_INSTANCE_NAME}-user"
    - name: DBPASSWORD
      valueFrom:
        secretKeyRef:
          name: "${RDS_INSTANCE_NAME}-password"
          key: password
EOF

$> kubectl apply -f rds-pods.yaml

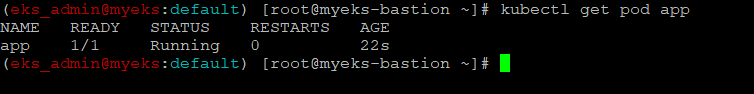

Verify the deployment

$> kubectl get pod app

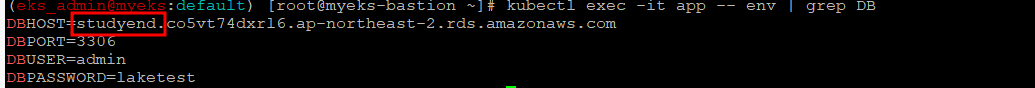

Check the Pod's environment variables

$> kubectl exec -it app -- env | grep DB

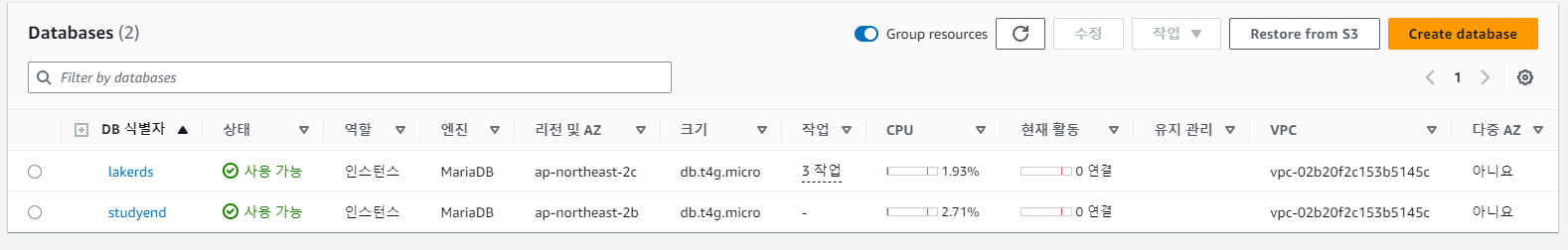

Change the RDS DB identifier to observe how RDS behaves and how the ConfigMap values change

Change the RDS DB identifier

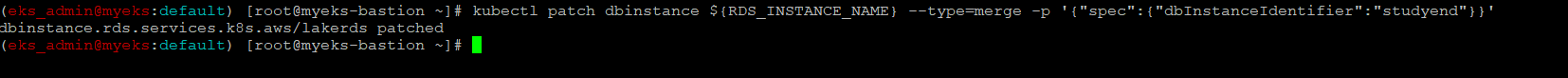

$> kubectl patch dbinstance ${RDS_INSTANCE_NAME} --type=merge -p '{"spec":{"dbInstanceIdentifier":"studyend"}}'

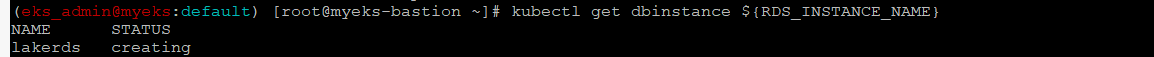

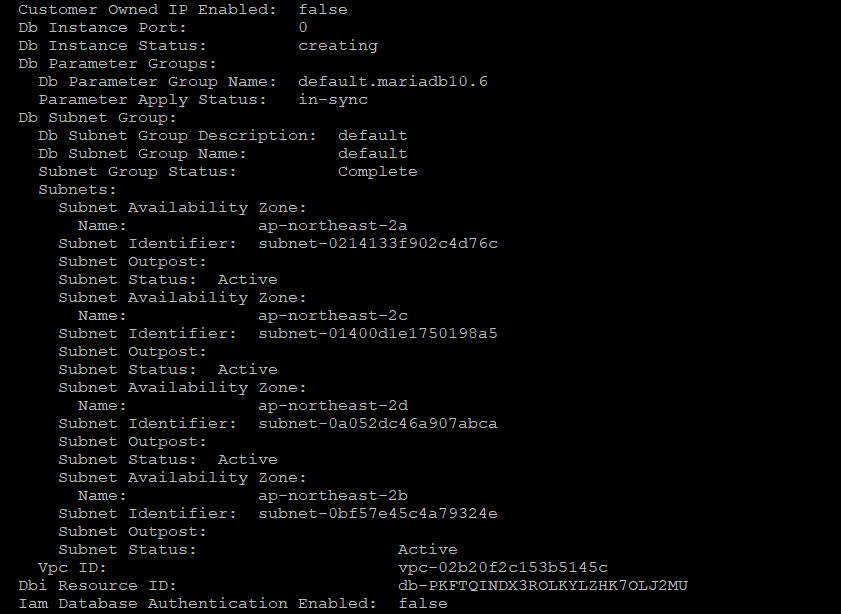

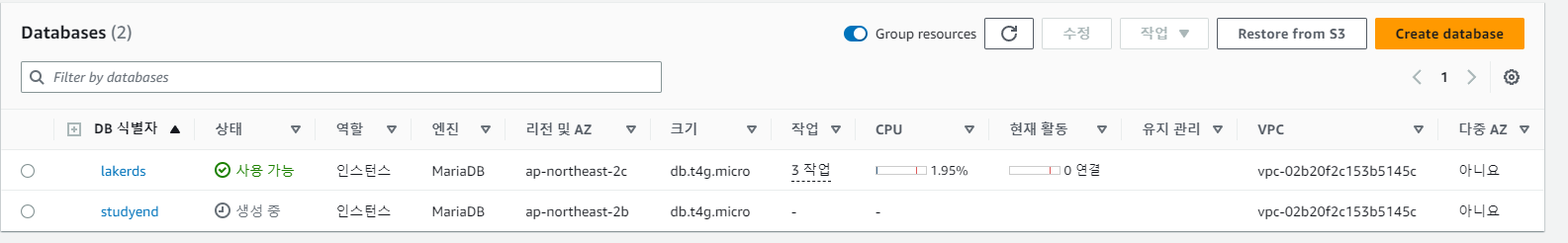

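Check the state of the RDS DB instance

In the AWS console, changing the DB identifier results in two DB identifiers being shown, and the old one is shown as available.

Presumably, to keep the service uninterrupted, the old DB identifier continues to serve while a new instance is created under the new identifier.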

$> kubectl get dbinstance ${RDS_INSTANCE_NAME}

$> kubectl describe dbinstance ${RDS_INSTANCE_NAME}

The final state looks like this.

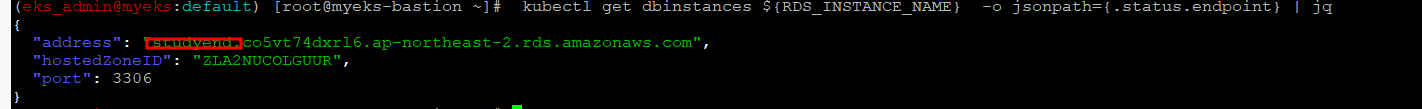

Check the RDS DB address

$> kubectl get dbinstances ${RDS_INSTANCE_NAME} -o jsonpath={.status.endpoint} | jq

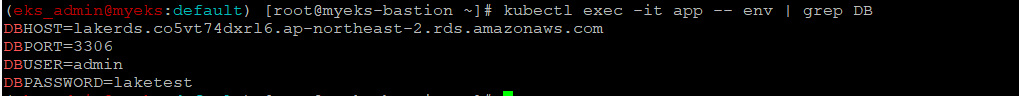

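Check whether the Pod's environment variables changed

Although the RDS DB identifier has changed, the change is not propagated to the Pod automatically, because environment variables sourced from a ConfigMap are read only when the container starts.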

$> kubectl exec -it app -- env | grep DB

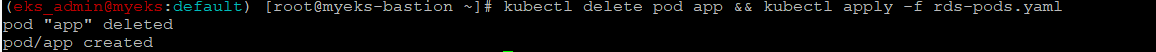

Delete and recreate the Pod, then check again

$> kubectl delete pod app && kubectl apply -f rds-pods.yaml

Check whether the Pod's environment variables changed

$> kubectl exec -it app -- env | grep DB

Delete the old DB identifier

Delete it directly in the AWS web console.

Delete the Pod

$> kubectl delete pod app

Delete RDS

$> kubectl delete -f rds-mariadb.yaml

Flux

Introduction

Flux is a GitOps tool for Kubernetes, similar to Argo CD, that automatically deploys the manifests held in a source to Kubernetes.

It syncs automatically: when the source changes, Flux detects the change and applies it to Kubernetes.

In this hands-on, GitHub is the source, and the manifest files stored on GitHub are deployed to Kubernetes.

Installing the Flux CLI and bootstrapping

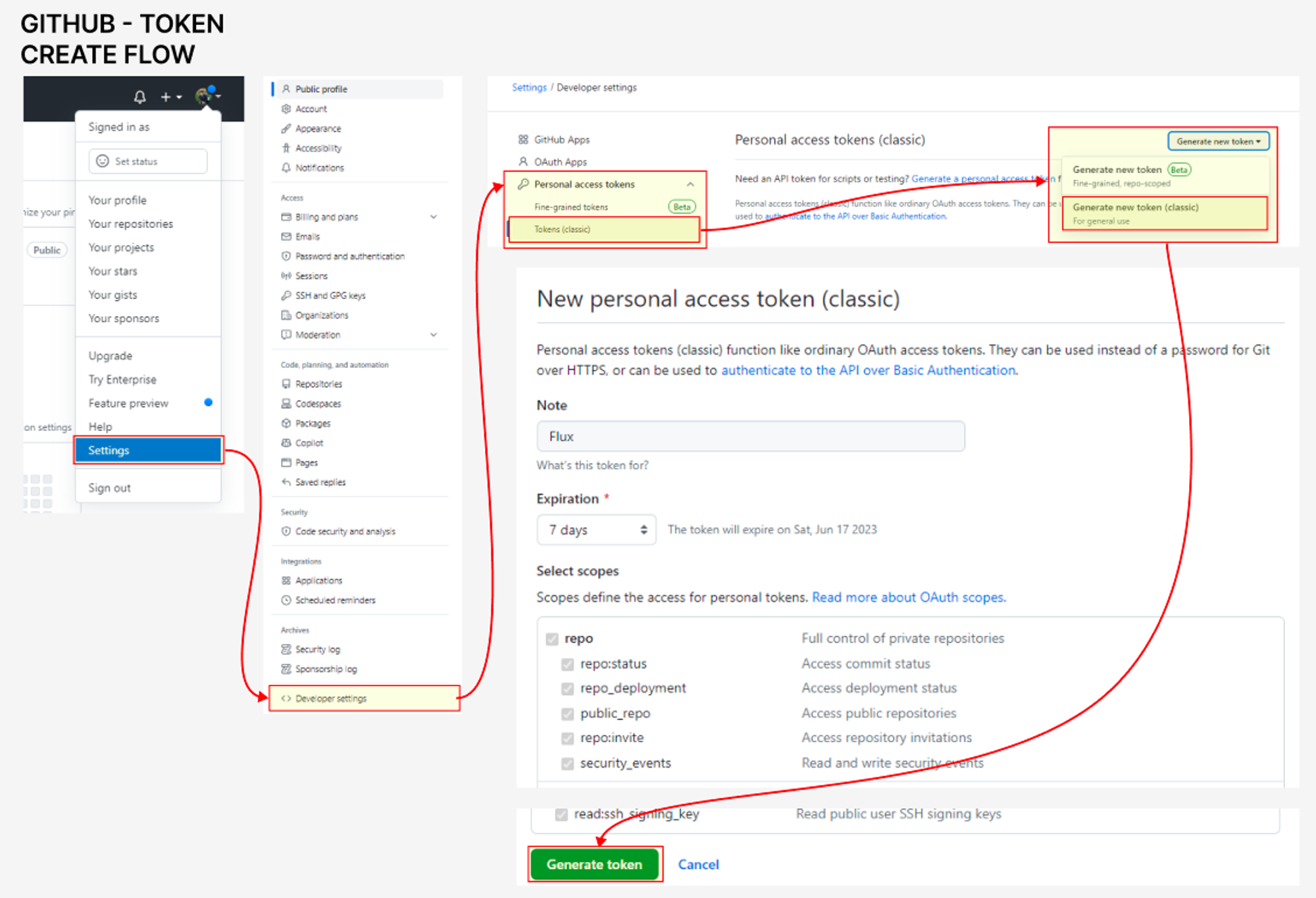

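Issue a GitHub token

The flux bootstrap command installs the Flux resources; as part of the installation and configuration, it also stores the related manifests in a specified Git source.

For more detail on installing and configuring Flux, see: https://fluxcd.io/flux/installation/

A Git access token is required so that the Flux resources can be stored in Git.

The image below shows how the token is issued.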

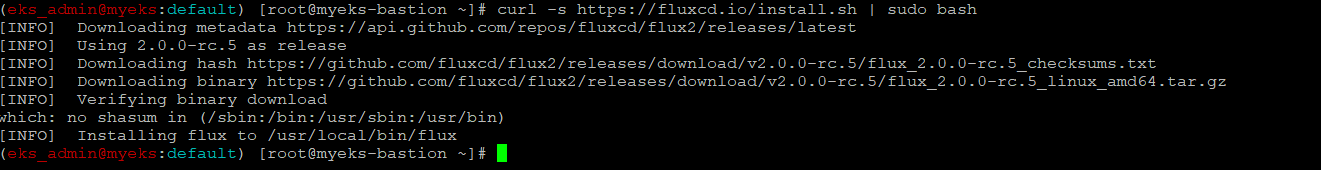

Install the Flux CLI

Install the Flux CLI binary

$> curl -s https://fluxcd.io/install.sh | sudo bash

$> . <(flux completion bash)

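The flux completion bash command above enables bash completion for flux, so that subcommands and options are completed automatically in the shell. For this to work, the commands and options to be completed must be registered beforehand, which is what sourcing its output does.

For details, see: https://kubernetes.io/ko/docs/tasks/tools/included/optional-kubectl-configs-bash-linux/

Check the version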

$> flux --version

Deploying the Flux resources with bootstrap

Set the GitHub environment variables

$> export GITHUB_TOKEN=<your GitHub personal access token>

$> export GITHUB_USER=lakefake

For details on these environment variables, see: https://docs.github.com/ko/actions/security-guides/automatic-token-authentication

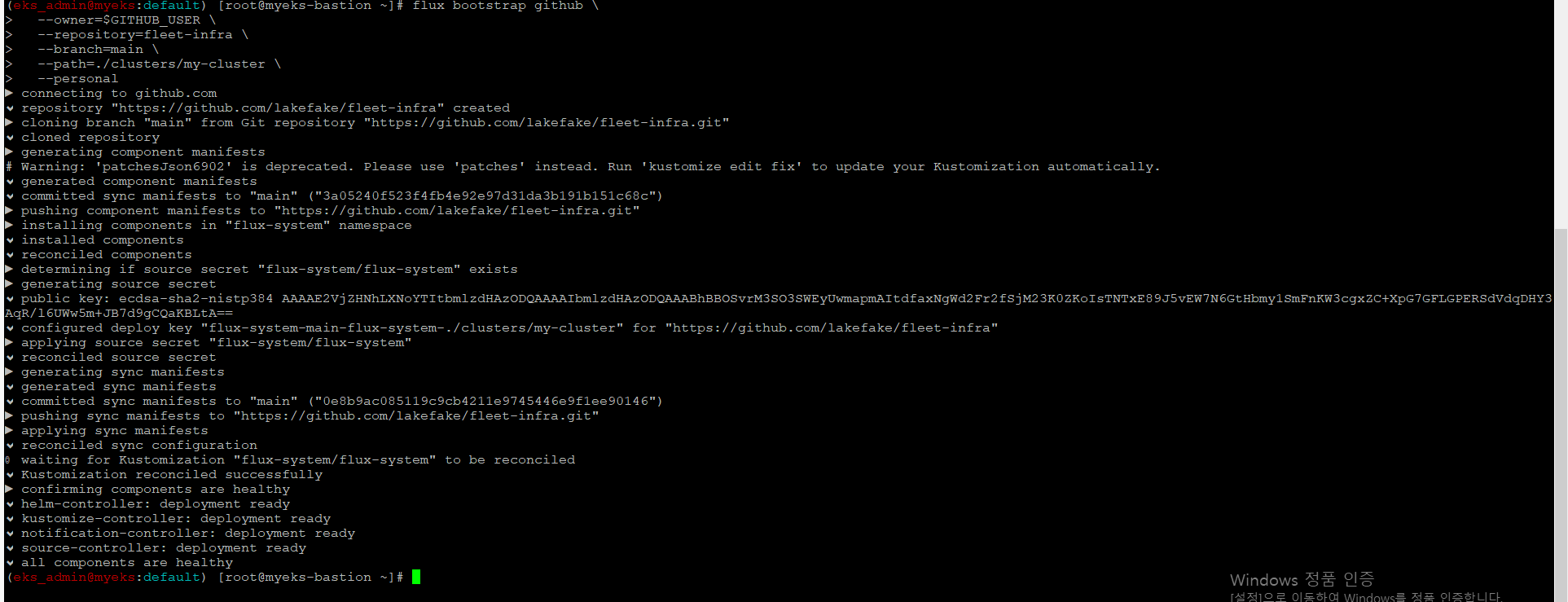

Bootstrap

$> flux bootstrap github \
  --owner=$GITHUB_USER \
  --repository=fleet-infra \
  --branch=main \
  --path=./clusters/my-cluster \
  --personal

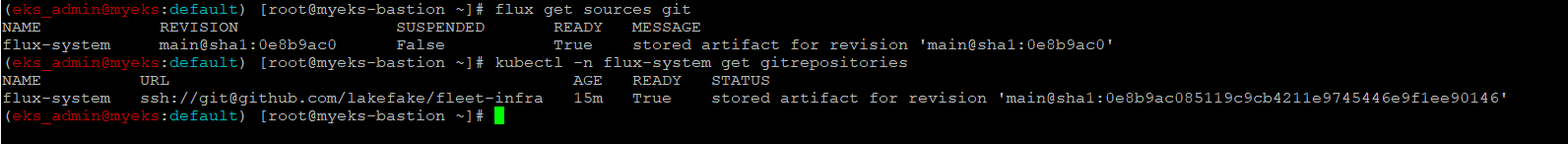

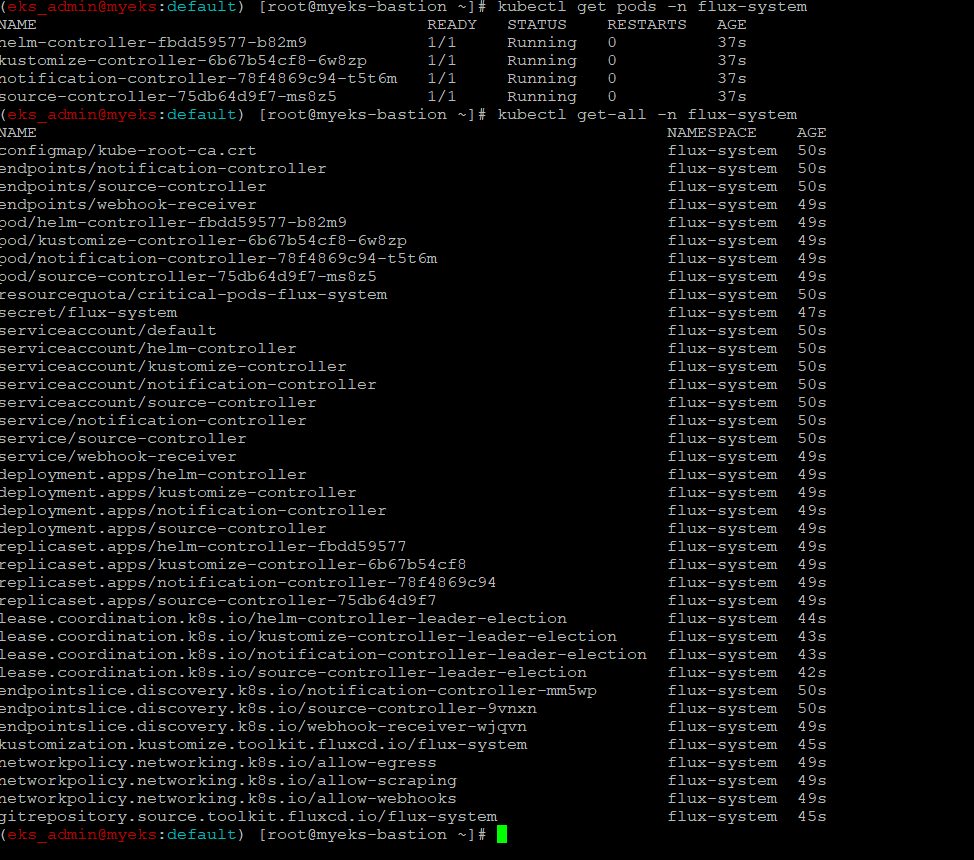

Verify the deployment

$> kubectl get pods -n flux-system

$> kubectl get-all -n flux-system

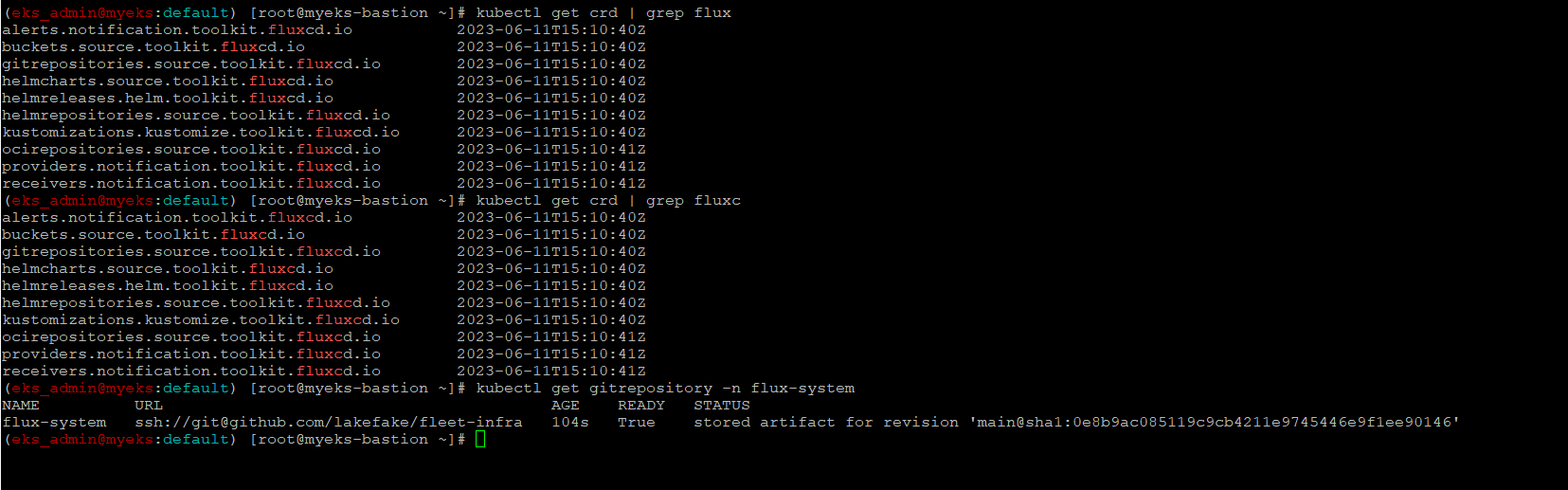

$> kubectl get crd | grep flux

$> kubectl get gitrepository -n flux-system

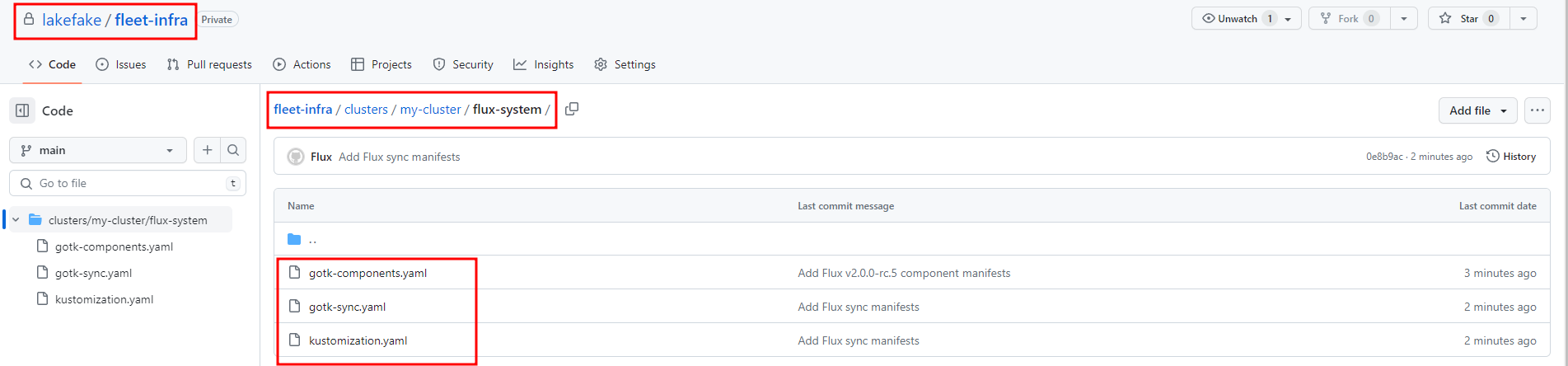

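On GitHub, a private fleet-infra repository with a main branch has been created, and the Flux manifest files have been generated under the clusters/my-cluster directory.

Check what the Flux bootstrap installed in the Git source

Installing the gitops tool: a monitoring web UI for Flux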

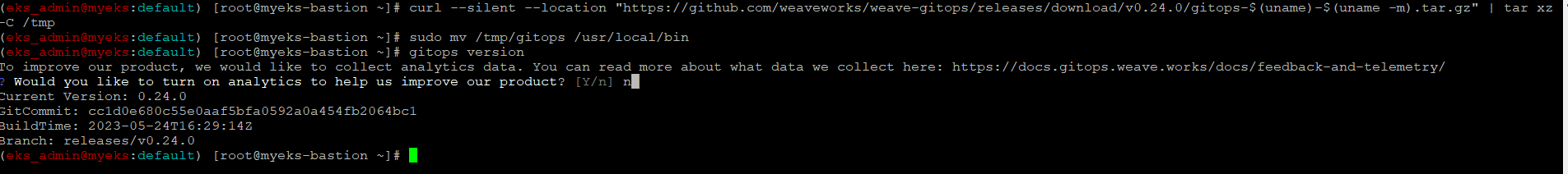

Install the gitops tool

$> curl --silent --location "https://github.com/weaveworks/weave-gitops/releases/download/v0.24.0/gitops-$(uname)-$(uname -m).tar.gz" | tar xz -C /tmp

$> sudo mv /tmp/gitops /usr/local/bin

$> gitops version

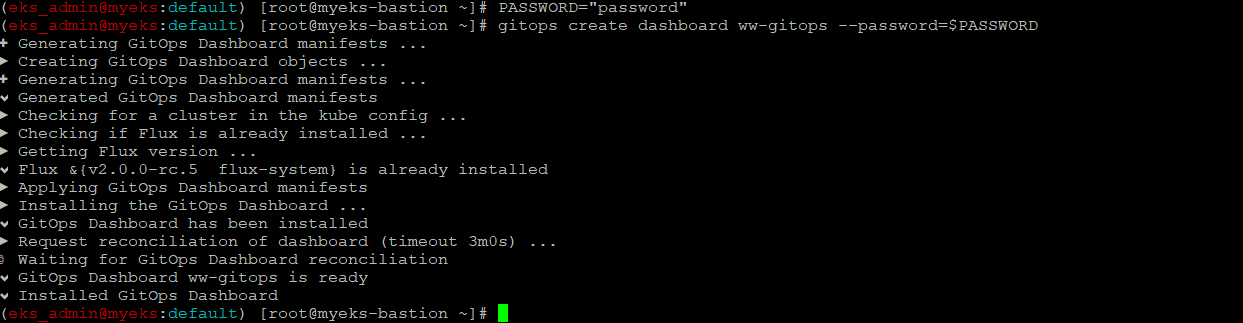

Install the Flux dashboard

$> PASSWORD="password"

$> gitops create dashboard ww-gitops --password=$PASSWORD

Verify the deployment

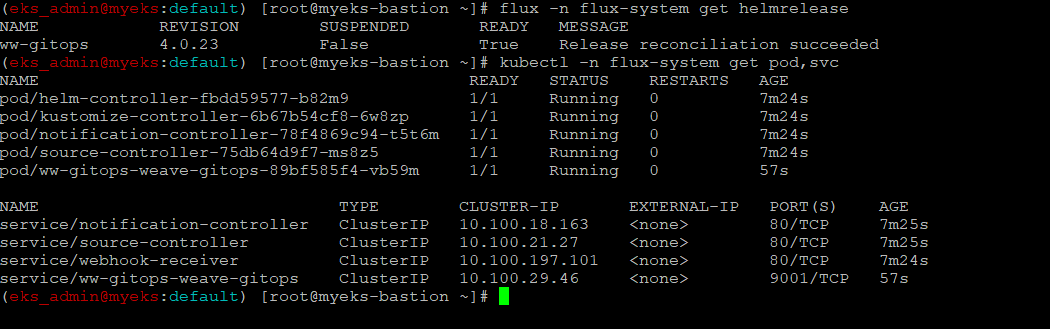

$> flux -n flux-system get helmrelease

$> kubectl -n flux-system get pod,svc

Configure the gitops ingress

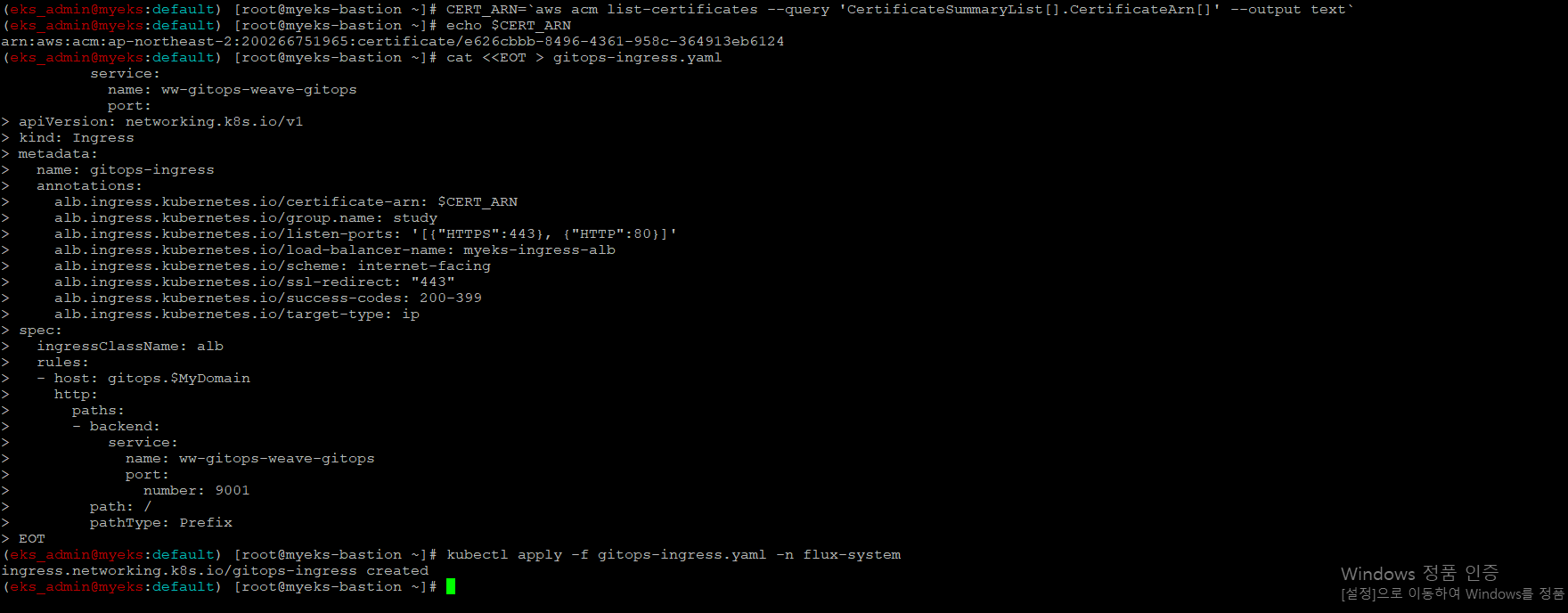

$> CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

$> echo $CERT_ARN

$> cat <<EOT > gitops-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: gitops-ingress
  annotations:
    alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
    alb.ingress.kubernetes.io/group.name: study
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/ssl-redirect: "443"
    alb.ingress.kubernetes.io/success-codes: 200-399
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - host: gitops.$MyDomain
    http:
      paths:
      - backend:
          service:
            name: ww-gitops-weave-gitops
            port:
              number: 9001
        path: /
        pathType: Prefix
EOT

$> kubectl apply -f gitops-ingress.yaml -n flux-system

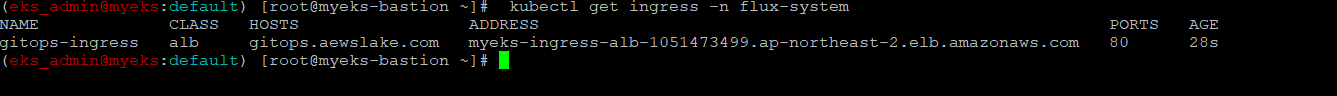

Verify the ingress deployment

$> kubectl get ingress -n flux-system

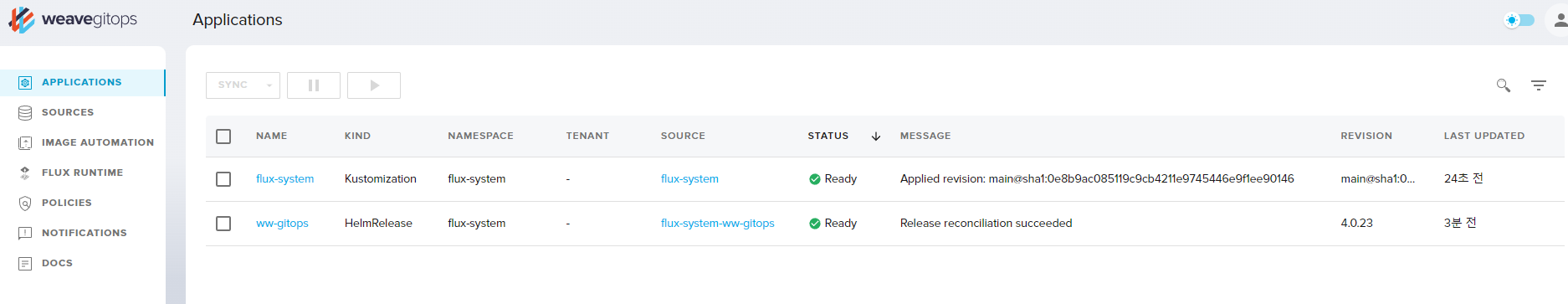

Open the GitOps dashboard in a browser and check the information

$> echo -e "GitOps Web https://gitops.$MyDomain"

It takes a little while (about 1 minute) before the page becomes reachable.

Deploying an application with Flux

This hands-on deploys manifest files stored on GitHub to Kubernetes through Flux.

First define the source, then define the application, which connects the Git source to Kubernetes.

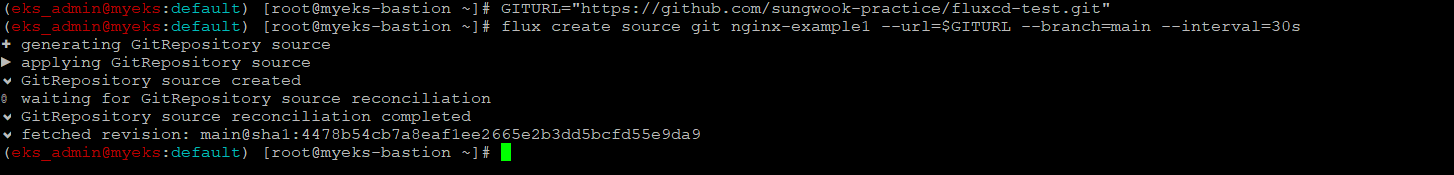

Create the Flux source

$> GITURL="https://github.com/sungwook-practice/fluxcd-test.git"

$> flux create source git nginx-example1 --url=$GITURL --branch=main --interval=30s

Verify that the Flux source was created

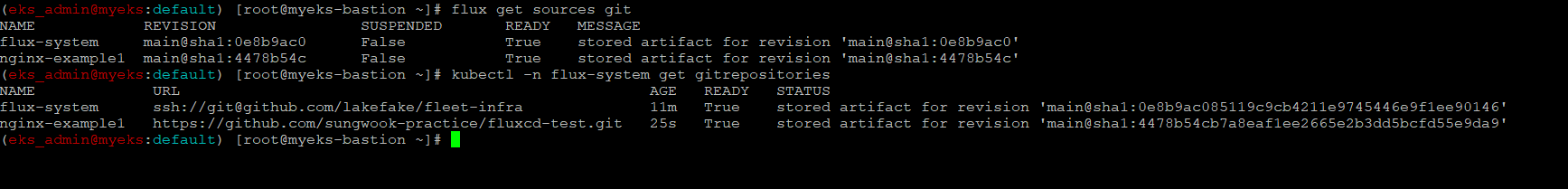

$> flux get sources git

$> kubectl -n flux-system get gitrepositories

Create the Flux application

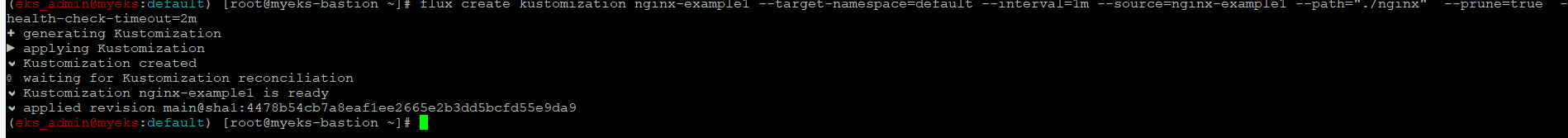

$> flux create kustomization nginx-example1 --target-namespace=default --interval=1m --source=nginx-example1 --path="./nginx" --prune=true --health-check-timeout=2m

Verify that the Flux application was created

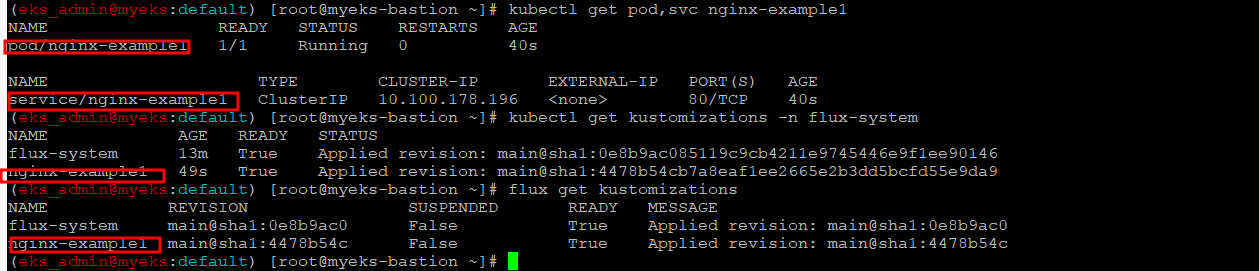

$> kubectl get pod,svc nginx-example1

$> kubectl get kustomizations -n flux-system

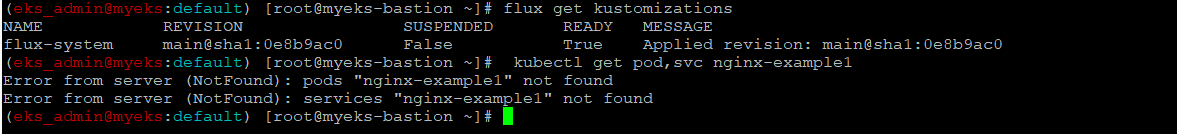

$> flux get kustomizations
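The two `flux create` commands above produce a GitRepository and a Kustomization object in the flux-system namespace. A rough sketch of equivalent declarative manifests follows, written to a temporary file. It assumes a Flux v2.x installation, where these kinds are served under the `v1` API versions (older releases used `v1beta2`), and it assumes that `--health-check-timeout` corresponds to `spec.timeout`; treat both as assumptions to check against the installed version.

```shell
FLUX_MANIFEST=$(mktemp)

# Quoted 'EOF': nothing in this manifest should be expanded by the shell.
cat <<'EOF' > "$FLUX_MANIFEST"
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 30s
  url: https://github.com/sungwook-practice/fluxcd-test.git
  ref:
    branch: main
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: nginx-example1
  namespace: flux-system
spec:
  interval: 1m
  path: ./nginx
  prune: true
  targetNamespace: default
  timeout: 2m
  sourceRef:
    kind: GitRepository
    name: nginx-example1
EOF

cat "$FLUX_MANIFEST"
```

Keeping these manifests in the bootstrap repository instead of creating them with the CLI would let Flux manage its own application definitions through Git as well.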

Delete the Flux application

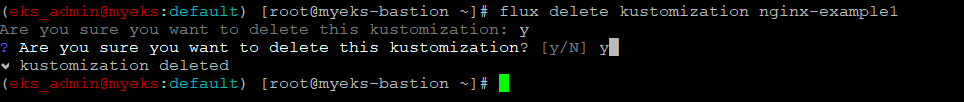

The prune option determines whether the Pods are deleted automatically: with false, the Pods keep running when the application is deleted; with true, they are terminated along with it.

$> flux delete kustomization nginx-example1

Confirm that the Flux application was deleted

$> flux get kustomizations

$> kubectl get pod,svc nginx-example1

Delete the Flux source

$> flux delete source git nginx-example1

Confirm that the Flux source was deleted

$> flux get sources git

$> kubectl -n flux-system get gitrepositories