📕 Volume (continued)

📔 NFS

## Install on both node1 and node2

sudo apt -y install nfs-kernel-server

sudo systemctl enable --now nfs-server

sudo systemctl status nfs-server

## Set up the NFS server on node1

sudo vi /etc/exports

/data_dir *(rw,sync,no_root_squash,no_subtree_check)

yji@k8s-node1:~$ sudo netstat -nlp | grep 2049

tcp 0 0 0.0.0.0:2049 0.0.0.0:* LISTEN -

tcp6 0 0 :::2049 :::* LISTEN -

udp 0 0 0.0.0.0:2049 0.0.0.0:* -

udp6 0 0 :::2049 :::* -

## Install the NFS client on node2

yji@k8s-node2:~$ sudo apt -y install nfs-common

sudo mkdir -p /data_dir/nfs

## Back on node1

sudo systemctl restart nfs-server

sudo systemctl status nfs-server

## Back on node2

yji@k8s-node2:~$ sudo mount -t nfs k8s-node1:/data_dir /data_dir/nfs

yji@k8s-node2:~$ df -h

Filesystem Size Used Avail Use% Mounted on

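k8s-node1:/data_dir 66G 17G 49G 26% /data_dir/nfs

📕 Capacity limits

- To limit storage inside a Docker container, use the --storage-opt size=<size> option.

- To limit the capacity of a mounted directory: dd a temporary image file -> fdisk (mkfs.xfs in the steps below) -> mount. The drawback is that this approach depends on the host OS.

- Kubernetes addresses the OS dependency with PV and PVC.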

yji@k8s-node2:~$ sudo su -

root@k8s-node2:~# dd if=/dev/zero of=temphdd.img count=512 bs=1M

512+0 records in

512+0 records out

536870912 bytes (537 MB, 512 MiB) copied, 8.4066 s, 63.9 MB/s

root@k8s-node2:~# ls -lh temphdd.img

-rw-r--r-- 1 root root 512M 10월 6 06:30 temphdd.img

root@k8s-node2:~# mkfs.xfs temphdd.img

meta-data=temphdd.img isize=512 agcount=4, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=131072, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=1368, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

## Mount temphdd.img on the backup directory

root@k8s-node2:~# mkdir /backup_dir

root@k8s-node2:~# mount -o loop temphdd.img /backup_dir

root@k8s-node2:~# df -h | grep /backup_dir

/dev/loop3 507M 30M 478M 6% /backup_dir

# Allow the regular user to use the directory

root@k8s-node2:~# chown -R yji:yji /backup_dir/

## Attach the host directory to a pod like the one made yesterday

(hostpath_NFS folder)

vim host-path2.yaml

## 10/06: use the backup dir created earlier

apiVersion: v1
kind: Pod
metadata:
  name: pod-vol5
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node2
  containers:
  - image: dbgurum/k8s-lab:initial
    name: container
    volumeMounts:
    - name: host-path
      mountPath: /backup
  volumes:
  - name: host-path
    hostPath:
      path: /backup_dir
      type: DirectoryOrCreate

yji@k8s-master:~/LABs/hostpath_NFS$ kubectl apply -f host-path2.yaml

pod/pod-vol5 created

yji@k8s-master:~/LABs/hostpath_NFS$ kubectl describe po pod-vol5

Volumes:

host-path:

Type: HostPath (bare host directory volume)

Path: 💙/backup_dir (attached correctly)

yji@k8s-master:~/LABs/hostpath_NFS$ kubectl exec -it pod-vol5 -- df -h

Filesystem Size Used Avail Use% Mounted on

/dev/loop3 507M 30M 478M 6% /backup 💙 mounted from the ~500 MB image created earlier

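- Everything up to this point depends on the OS: we keep falling back to host OS commands.

-> Kubernetes PV and PVC can solve this.

- PV comes first... or does it? Why Google all of a sudden?

Borrow a disk from Google's GCE and attach it to a pod.

On GKE the cloud provider manages the master node itself.

Units: 10G (decimal, 10^9 bytes per G) vs 10Gi / GiB (binary, 2^30 bytes per Gi)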
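The difference between the G and Gi suffixes is easy to miss, so here is a minimal Python sketch of the arithmetic. It assumes the convention used in Kubernetes manifests (G = 10^9 bytes, Gi = 2^30 bytes); `to_bytes` is a hypothetical helper written for this note and handles only a few suffixes, not the full quantity grammar.

```python
# Sketch: convert Kubernetes-style quantity strings to bytes.
# Only the suffixes listed here are handled (an assumption for illustration).
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Return the number of bytes for strings such as '10G' or '10Gi'."""
    # Check the two-letter binary suffixes first so 'Gi' is never read as 'G'.
    for suffix, factor in BINARY.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    for suffix, factor in DECIMAL.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: a plain byte count


if __name__ == "__main__":
    for q in ("1G", "1Gi", "10G", "10Gi"):
        print(q, to_bytes(q))
```

Under this convention a 1Gi volume is slightly larger than a 1G request, which is why a claim for 1G can be satisfied by a 1Gi PV.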

## Provision resources from the terminal

## SDK console

C:\k8s>gcloud compute disks create mongo-storage --size=10G --zone=asia-northeast3-a

C:\k8s>gcloud compute disks list

NAME LOCATION LOCATION_SCOPE SIZE_GB TYPE STATUS

gke-k8s-cluster-k8s-node-pool-3e9e423a-42v6 asia-northeast3-a zone 100 pd-standard READY

gke-k8s-cluster-k8s-node-pool-3e9e423a-gfwg asia-northeast3-a zone 100 pd-standard READY

gke-k8s-cluster-k8s-node-pool-3e9e423a-wnnk asia-northeast3-a zone 100 pd-standard READY

mongo-storage asia-northeast3-a zone 10 pd-standard READY

kubectl apply -f gce-mongo.yaml

kubectl describe po gce-mongo-pod

Volumes:

mongodb-data:

Type: GCEPersistentDisk (a Persistent Disk resource in Google Compute Engine)

PDName: mongo-storage

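FSType: ext4

📕 PV

- Persistent Volume: a persistent volume, i.e. a virtual storage instance (like the dd image above)

- Backed by the physical space of real storage provided by the OS, a cloud, etc.

- Defines capacity and permissions (AccessModes)

📕 PVC

- Persistent Volume Claim: a request from a user (developer) for storage to be provisioned from a PV

- Specifies the capacity and access mode it needs

- Bound automatically to a matching PV

- Access modes (AccessModes)

- ReadWriteOnce (RWO): a single node can mount the volume read-write

- ReadWriteMany (RWX): many nodes can mount the volume read-write

- ReadOnlyMany (ROX): many nodes can mount the volume read-only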

# First look up the kind to write in the YAML file

kubectl api-resources | grep -i persi

persistentvolumes pv v1 false 💚PersistentVolume

// 🤔 PV reclaim policy!

persistentVolumeReclaimPolicy

vim pv01.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain # Retain(default), Recycle, Delete
  local:
    path: /data_dir
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - {key: kubernetes.io/hostname, operator: In, values: [k8s-node1]}

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 1Gi RWO Retain Available 4m15s

pv2 1Gi ROX Retain Available 2m9s

pv3 2Gi RWX Retain Available 2m5s

A PVC selects a PV by matching the access mode and the capacity.

vim pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
spec:
  accessModes:
  - ReadOnlyMany
  resources:
    requests:
      storage: 1G
  storageClassName: ""
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc2
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1G
  storageClassName: ""
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5G
  storageClassName: ""
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc4
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1G
  storageClassName: ""

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv1 1Gi RWO Retain Bound default/pvc2 10m

persistentvolume/pv2 1Gi ROX Retain Bound default/pvc1 8m2s

persistentvolume/pv3 2Gi RWX Retain Bound default/pvc4 7m58s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc1 Bound pv2 1Gi ROX 62s

persistentvolumeclaim/pvc2 Bound pv1 1Gi RWO 62s

persistentvolumeclaim/pvc3 Pending 61s

persistentvolumeclaim/pvc4 Bound pv3 2Gi RWX 61s
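The binding result above (pvc3 stays Pending, the other three are Bound) can be reproduced with a simplified matching rule. The Python sketch below is only an approximation written for this note: it assumes a claim binds to the smallest free PV that offers every requested access mode and at least the requested capacity. The real controller also looks at storage class, selectors, volume mode and node affinity, which are ignored here; the `PV`, `PVC` and `bind` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

GI = 2**30  # bytes in 1Gi
G = 10**9   # bytes in 1G


@dataclass
class PV:
    name: str
    capacity: int                      # bytes
    modes: frozenset                   # access modes offered by the volume
    claimed_by: Optional[str] = None   # name of the bound claim, if any


@dataclass
class PVC:
    name: str
    request: int                       # bytes
    modes: frozenset                   # access modes requested by the claim


def bind(pvs, pvcs):
    """Bind each claim to the smallest free PV that fits; None means Pending."""
    result = {}
    for pvc in pvcs:
        candidates = [
            pv for pv in pvs
            if pv.claimed_by is None
            and pvc.modes <= pv.modes          # every requested mode is offered
            and pv.capacity >= pvc.request     # volume is large enough
        ]
        chosen = min(candidates, key=lambda pv: pv.capacity, default=None)
        if chosen is not None:
            chosen.claimed_by = pvc.name
        result[pvc.name] = chosen.name if chosen else None
    return result


def demo():
    # Same volumes and claims as pv1..pv3 and pvc1..pvc4 in these notes.
    pvs = [
        PV("pv1", 1 * GI, frozenset({"RWO"})),
        PV("pv2", 1 * GI, frozenset({"ROX"})),
        PV("pv3", 2 * GI, frozenset({"RWX"})),
    ]
    pvcs = [
        PVC("pvc1", 1 * G, frozenset({"ROX"})),
        PVC("pvc2", 1 * G, frozenset({"RWO"})),
        PVC("pvc3", 5 * G, frozenset({"RWO"})),
        PVC("pvc4", 1 * G, frozenset({"RWX"})),
    ]
    return bind(pvs, pvcs)


if __name__ == "__main__":
    print(demo())
```

In this model pvc3 finds no candidate because no PV offers 5G, which matches its Pending status.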

PVC hands-on: removing the OS dependency

vim mynode.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mynode-pod
spec:
  containers:
  - image: ur2e/mynode:1.0
    name: mynode-container
    ports:
    - containerPort: 8000
    volumeMounts:
    - name: mynode-path
      mountPath: /mynode
  volumes:
  - name: mynode-path
    persistentVolumeClaim:
      claimName: pvc1

yji@k8s-master:~/LABs/pv-pvc$ kubectl exec -it mynode-pod -- bash

root@mynode-pod:/# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda1 66G 17G 49G 26% /mynode

root@mynode-pod:/# echo 'hi k8s~' > /mynode/pvc1.txt

root@mynode-pod:/# ls /mynode/

nfs1 pvc1.txt

### On node1

yji@k8s-node1:~$ ls -l /data_dir/

total 4

-rw-r--r-- 1 root root 0 10월 6 06:21 nfs1

-rw-r--r-- 1 root root 8 10월 6 08:22 pvc1.txt

PVs and PVCs are matched automatically according to access mode and capacity.

Selecting a PV manually from a PVC

vim pv-pvc-selecotr.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv4
  labels:
    name: pv4
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteOnce # matches the mode requested by pvc5 (kubectl get pv shows pv4 as RWO)
  persistentVolumeReclaimPolicy: Retain
  local:
    path: /data_dir
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - {key: kubernetes.io/hostname, operator: In, values: [k8s-node1]}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc5
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1G
  storageClassName: ""
  selector:
    matchLabels:
      name: pv4

yji@k8s-master:~/LABs/pv-pvc$ kubectl apply -f pv-pvc-selecotr.yaml

persistentvolume/pv4 created

persistentvolumeclaim/pvc5 created

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv1 1Gi RWO Retain Bound default/pvc2 44m

persistentvolume/pv2 1Gi ROX Retain Bound default/pvc1 41m

persistentvolume/pv3 2Gi RWX Retain Bound default/pvc4 41m

persistentvolume/pv4 1Gi RWO Retain Bound default/pvc5 3s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc1 Bound pv2 1Gi ROX 34m

persistentvolumeclaim/pvc2 Bound pv1 1Gi RWO 34m

persistentvolumeclaim/pvc3 Pending 34m

persistentvolumeclaim/pvc4 Bound pv3 2Gi RWX 34m

persistentvolumeclaim/pvc5 Bound pv4 1Gi RWO 3s

## PV attributes such as capacity can be changed on the fly

yji@k8s-master:~/LABs/pv-pvc$ kubectl edit pv pv3

Edit cancelled, no changes made.

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv1 1Gi RWO Retain Bound default/pvc2 47m

pv2 1Gi ROX Retain Bound default/pvc1 45m

pv3 2Gi RWX Retain Bound default/pvc4

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv-volume 10Gi RWO Retain Bound default/pvc-volume manual 63s

kubectl edit pv pv-volume (changed 10G -> 20G)

yji@k8s-master:~/LABs/pv-pvc$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pv-volume 20Gi RWO Retain Bound default/pvc-volume manual 63s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-volume Bound pv-volume 100Gi RWO manual 63s

Conclusion: data can be shared without copying (cp) the source.

# mongo_pv_pvc_pod.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mongodb-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  - ReadOnlyMany
  persistentVolumeReclaimPolicy: Retain
  gcePersistentDisk:
    pdName: mongo-storage
    fsType: ext4
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongodb-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: mongo-pod
spec:
  containers:
  - name: mongodb
    image: mongo
    ports:
    - containerPort: 27017
      protocol: TCP
    volumeMounts:
    - name: mongo-data
      mountPath: /data/db
  # a single volumes key: the pod mounts only the mongodb-pvc claim
  volumes:
  - name: mongo-data
    persistentVolumeClaim:
      claimName: mongodb-pvc

git

A git repo can be specified when defining a pod's volume.

yji@k8s-master:~/LABs/gitrepo$ vi gitrepo.yaml

apiVersion: v1
kind: Pod
metadata:
  name: gitrepo-pod
spec:
  containers:
  - image: nginx:1.23.1-alpine
    name: web-container
    volumeMounts:
    - name: gitrepo
      mountPath: /usr/share/nginx/html
  volumes:
  - name: gitrepo
    gitRepo:
      repository: https://github.com/ur2e/whatisyourmotto.git
      revision: main
      directory: .

yji@k8s-master:~/LABs/gitrepo$ kubectl apply -f gitrepo.yaml

Warning: spec.volumes[0].gitRepo: deprecated in v1.11

pod/gitrepo-pod created

yji@k8s-master:~/LABs/gitrepo$ kubectl get po

NAME READY STATUS RESTARTS AGE

gitrepo-pod 0/1 ContainerCreating 0 12s

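Changes pushed to GitHub afterwards are not synced into the volume.

That seems to be why it is deprecated now.

config map

- Stores data as key: value pairs

- Frequently used environment variables

- Arguments (args) to pass to a command

- Configuration files (*.conf)

secret

- Stores data as key: value pairs

- Stores passwords and confidential data.

- Values are encoded, not encrypted (base64; why? it is lightweight)

- ex. MYSQL_ROOT_PASSWORD, openssl

- *.crt -> https (tls)

Usage

kubectl create configmap <name> --from-literal=key=value (or --from-literal key=value), repeating the option once per key

Creating a config map and referencing it from a Pod

Reference techniques (3)

# Create the cm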

yji@k8s-master:~/LABs$ kubectl create configmap log-level-cm --from-literal=LOG_LEVEL=DEBUG

configmap/log-level-cm created

# List the configmaps

yji@k8s-master:~/LABs$ kubectl get cm

NAME DATA AGE

kube-root-ca.crt 1 6d21h

log-level-cm 1 6s

# Show the new configmap in detail

yji@k8s-master:~/LABs$ kubectl describe cm log-level-cm

Name: log-level-cm

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

LOG_LEVEL:

----

DEBUG

BinaryData

====

Events: <none>

## View the cm as YAML

yji@k8s-master:~/LABs$ kubectl get cm log-level-cm -o yaml

apiVersion: v1

data:

LOG_LEVEL: DEBUG

kind: ConfigMap

metadata:

creationTimestamp: "2022-10-06T02:19:02Z"

name: log-level-cm

namespace: default

resourceVersion: "208803"

uid: 623ff9f0-8081-4ac1-8c2b-8f6744cb3f4f

// Look at the reference techniques

yji@k8s-master:~/LABs$ vi cm-pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: log-level-pod
spec:
  containers:
  - image: nginx:1.23.1-alpine
    name: log-level-container
    envFrom:
    - configMapRef:
        name: log-level-cm

yji@k8s-master:~/LABs$ kubectl apply -f cm-pod1.yaml

pod/log-level-pod created

yji@k8s-master:~/LABs$ kubectl get po

NAME READY STATUS RESTARTS AGE

log-level-pod 1/1 Running 0 12s

yji@k8s-master:~/LABs$ kubectl exec -it log-level-pod -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/

NJS_VERSION=0.7.6

PKG_RELEASE=1

💚LOG_LEVEL=DEBUG 💚 the environment variable injected from the cm shows up here.

### Second technique

vim cm-pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: log-level-pod2
spec:
  containers:
  - image: nginx:1.23.1-alpine
    name: log-level-container
    volumeMounts:
    - name: cm-volume
      mountPath: /etc/config
  volumes:
  - name: cm-volume
    configMap:
      name: log-level-cm

kubectl apply -f cm-pod2.yaml

yji@k8s-master:~/LABs$ kubectl exec -it log-level-pod2 -- ls /etc/config

LOG_LEVEL

yji@k8s-master:~/LABs$ kubectl exec -it log-level-pod2 -- cat /etc/config/LOG_LEVEL

✍ When creating a cm, repeat the --from-literal option once for each value you need.

yji@k8s-master:~/LABs$ kubectl create cm env-cm --from-literal key1=values1 --from-literal=key2=value2

configmap/env-cm created

yji@k8s-master:~/LABs$ kubectl get cm

NAME DATA AGE

env-cm 2 5s

kube-root-ca.crt 1 6d21h

log-level-cm 1 14m

yji@k8s-master:~/LABs$ kubectl describe cm env-cm

Name: env-cm

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

key2:

----

value2

key1:

----

values1

BinaryData

====

Events: <none>

✍ This time, put settings for redis (an in-memory store) into a config file.

yji@k8s-master:~/LABs$ vi redis.conf

yji@k8s-master:~/LABs$ cat redis.conf

key1=kubernetes

key2=docker

key3=linux

key4=gke

==> 💭 Turn this into a config map

yji@k8s-master:~/LABs$ kubectl create cm redis-cm --from-file=redis.conf

configmap/redis-cm created

yji@k8s-master:~/LABs$ kubectl get cm

NAME DATA AGE

redis-cm 1 22s

yji@k8s-master:~/LABs$ kubectl describe cm redis-cm

...

Data

====

redis.conf:

----

key1=kubernetes

key2=docker

key3=linux

key4=gke

...

====

✍ Now create a pod that references the redis cm

vim redis-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: redis-cm-pod
spec:
  containers:
  - image: redis
    name: redis-container
    volumeMounts:
    - name: redis-volume
      mountPath: /opt/redis-config
  volumes:
  - name: redis-volume
    configMap:
      name: redis-cm

kubectl apply -f redis-pod.yaml

yji@k8s-master:~/LABs$ kubectl exec -it redis-cm-pod -- cat /opt/redis-config/redis.conf

key1=kubernetes

key2=docker

key3=linux

key4=gke
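The two creation styles used so far produce differently shaped data: env-cm (two --from-literal options) reports DATA 2, while redis-cm (one --from-file) reports DATA 1 even though the file holds four lines. The Python sketch below models that difference under a simplifying assumption: each literal becomes one entry, and a file becomes a single entry keyed by its file name with the content left unparsed. `from_literals` and `from_file` are hypothetical helpers, not kubectl internals.

```python
import os
import tempfile


def from_literals(*pairs: str) -> dict:
    """Model --from-literal=key=value: one entry per option."""
    data = {}
    for pair in pairs:
        key, _, value = pair.partition("=")  # split on the first '=' only
        data[key] = value
    return data


def from_file(path: str) -> dict:
    """Model --from-file=path: one entry, keyed by file name, content unparsed."""
    with open(path, encoding="utf-8") as fh:
        return {os.path.basename(path): fh.read()}


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "redis.conf")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("key1=kubernetes\nkey2=docker\nkey3=linux\nkey4=gke\n")
        return from_literals("key1=values1", "key2=value2"), from_file(path)


if __name__ == "__main__":
    literals, filed = demo()
    print(len(literals), list(filed))
```

This is also why the mounted volume exposes one file named redis.conf rather than four files named key1 to key4.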

---

CKA exam style

kubectl create configmap my0pwd --from-literal=mypwd=k8spass# --dry-run=client -o yaml > py-pwd.yaml

kubectl run myweb --image=nginx:1.23.1-alpine --dry-run=client -o yaml > myweb.yaml

Pod design patterns: ambassador, sidecar.

- Ambassador pod design pattern

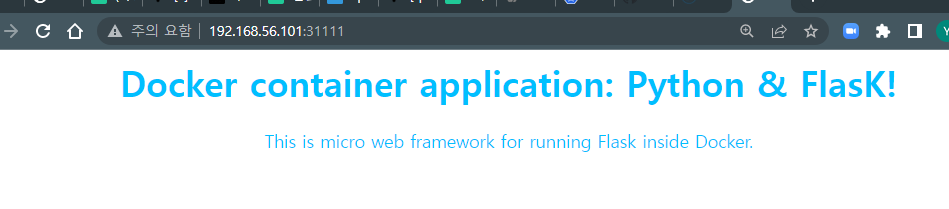

# flaskapp-conf.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf
data:
  nginx.conf: |-
    user nginx;
    worker_processes 1;
    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;
    events {
      worker_connections 1024;
    }
    http {
      include /etc/nginx/mime.types;
      default_type application/octet-stream;
      sendfile on;
      keepalive_timeout 65;
      upstream flaskapp {
        server localhost:9000;
      }
      server {
        listen 80;
        location / {
          proxy_pass http://flaskapp;
          proxy_redirect off;
        }
      }
    }

---

# flaskapp-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: flaskapp-pod
  labels:
    app: flaskapp
spec:
  containers:
  - image: nginx:1.21-alpine
    name: proxy-container
    volumeMounts:
    - name: nginx-proxy-config
      mountPath: /etc/nginx/nginx.conf
      subPath: nginx.conf
    ports:
    - containerPort: 80
      protocol: TCP
  - image: ur2e/py_flask:1.0
    name: flaskapp-container
    ports:
    - containerPort: 9000
      protocol: TCP
  volumes:
  - name: nginx-proxy-config
    configMap:
      name: nginx-conf

---

# flaskapp-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: flaskapp-svc
spec:
  selector:
    app: flaskapp
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 31111
  type: NodePort

secret

yji@k8s-master:~/LABs$ kubectl create secret generic my-pwd --from-literal=mypwd=1234

secret/my-pwd created

yji@k8s-master:~/LABs$ kubectl get secret

NAME TYPE DATA AGE

my-pwd Opaque 1 4s

yji@k8s-master:~/LABs$ kubectl describe secrets my-pwd

Name: my-pwd

Namespace: default

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

mypwd: 4 bytes

yji@k8s-master:~/LABs$ kubectl get secret -o yaml

apiVersion: v1

items:

- apiVersion: v1

data:

mypwd: 💚MTIzNA==💚 (the encoded value)

kind: Secret

...

## Try decoding it

yji@k8s-master:~/LABs$ echo MTIzNA== | base64 -d

1234

⭐ When a pod references a secret, the value is decoded automatically!
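Because the value is only base64-encoded, anyone who can read the Secret object can recover the original text. A minimal Python sketch of the same round trip, using only the standard library (the two function names are made up for this note):

```python
import base64


def encode_secret_value(plain: str) -> str:
    """Encode a value the way it appears under data: in a Secret manifest."""
    return base64.b64encode(plain.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Reverse the encoding; no key or password is involved."""
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    print(encode_secret_value("1234"))
    print(decode_secret_value("MTIzNA=="))
```

Since decoding needs no key, base64 should be treated as a transport encoding rather than as protection.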

## First way to inject a secret

vim sec-pod-1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sec-pod
spec:
  containers:
  - image: nginx:1.23.1-alpine
    name: sec-container
    envFrom:
    - secretRef:
        name: my-pwd

yji@k8s-master:~/LABs$ kubectl exec -it sec-pod -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

NJS_VERSION=0.7.6

PKG_RELEASE=1

💙mypwd=1234

## Second way to inject a secret

vim sec-pod-2.yaml

apiVersion: v1
kind: Pod
metadata:
  name: sec-pod2
spec:
  containers:
  - image: redis
    name: redis-container
    volumeMounts:
    - name: redis-volume
      mountPath: /opt/redis-config
  volumes:
  - name: redis-volume
    secret:
      secretName: my-pwd

yji@k8s-master:~/LABs$ kubectl apply -f sec-pod-2.yaml

yji@k8s-master:~/LABs$ kubectl exec -it sec-pod2 -- ls /opt/redis-config/

mypwd

yji@k8s-master:~/LABs$ kubectl exec -it sec-pod2 -- cat /opt/redis-config/mypwd

1234

Can a configmap and a secret be used together? Yes.

apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-dev
data:
  SSH: 'false'
  User: kevin
---
apiVersion: v1
kind: Secret
metadata:
  name: sec-dev
data:
  key: YnJhdm9teWxpZmU=
---
apiVersion: v1
kind: Pod
metadata:
  name: cm-sec-pod
spec:
  containers:
  - name: cm-sec-container
    image: dbgurum/k8s-lab:initial
    envFrom:
    - configMapRef:
        name: cm-dev
    - secretRef:
        name: sec-dev

yji@k8s-master:~/LABs$ kubectl exec -it cm-sec-pod -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

HOSTNAME=cm-sec-pod

container=docker

User=kevin

key=bravomylife
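The env listing above mixes plain ConfigMap values with an already decoded Secret value. The Python sketch below imitates that result under simple assumptions: ConfigMap entries are copied as they are, Secret entries are base64-decoded first, and a single key can also be picked out under a new variable name (the secretKeyRef style). `env_from` and `env_from_key` are hypothetical helpers written for this note.

```python
import base64

# Data sections copied from the cm-dev and sec-dev manifests in these notes.
config_map = {"SSH": "false", "User": "kevin"}
secret = {"key": "YnJhdm9teWxpZmU="}


def env_from(config_map_data: dict, secret_data: dict) -> dict:
    """Model envFrom: every key of both objects becomes an environment variable."""
    env = dict(config_map_data)
    for name, encoded in secret_data.items():
        env[name] = base64.b64decode(encoded).decode("utf-8")
    return env


def env_from_key(secret_data: dict, key: str, env_name: str) -> dict:
    """Model valueFrom.secretKeyRef: expose one key under a chosen name."""
    return {env_name: base64.b64decode(secret_data[key]).decode("utf-8")}


if __name__ == "__main__":
    print(env_from(config_map, secret))
    print(env_from_key(secret, "key", "MY_KEY"))
```

The variable name MY_KEY in the usage lines is an arbitrary example, not something defined in the manifests.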

📔 Referencing only a specific key

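# Keep a space before the trailing backslash. The run recorded below omitted it, so the shell merged both options into a single 40-byte user_name value (DATA 1).

yji@k8s-master:~/LABs$ kubectl create secret generic mysecret --from-literal=user_name=myuser \

> --from-literal=password=mypassword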

secret/mysecret created

yji@k8s-master:~/LABs$ kubectl get secrets

NAME TYPE DATA AGE

my-pwd Opaque 1 27m

mysecret Opaque 1 4s

sec-dev Opaque 1 10m

yji@k8s-master:~/LABs$ kubectl describe secrets mysecret

Name: mysecret

Namespace: default

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

user_name: 40 bytes

yji@k8s-master:~/LABs$ kubectl run nginx-pod --image=nginx --dry-run=client -o yaml > nginx-pod.yaml

vim nginx-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: nginx-pod
  name: nginx-pod
spec:
  containers:
  - image: nginx
    name: nginx-pod
    env:
    - name: USER_NAME
      valueFrom:
        secretKeyRef:
          name: mysecret
          key: user_name
    resources: {}
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

kubectl apply -f nginx-pod.yaml

kubectl get po

kubectl exec -it nginx-pod -- env

yji@k8s-master:~/LABs$ kubectl exec -it nginx-pod -- env

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

PKG_RELEASE=1~bullseye

💚USER_NAME=myuser .. only this key was referenced!

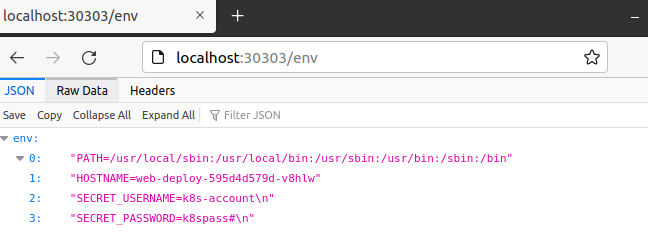

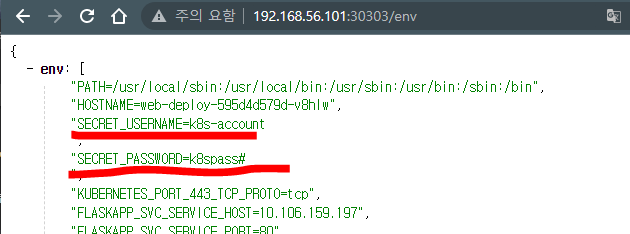

Secret hands-on

- Secrets are used here to populate environment variables.

- Create a secret + Deployment + inspect

## Create the files to use as environment variables

yji@k8s-master:~/LABs/sec_deploy$ echo 'k8s-account' > k8s-account.txt

yji@k8s-master:~/LABs/sec_deploy$ echo 'k8spass#' > k8s-pwd.txt

## Create the secret

yji@k8s-master:~/LABs/sec_deploy$ kubectl create secret generic account-pwd-secret \

> --from-file=k8s-account.txt --from-file=k8s-pwd.txt

secret/account-pwd-secret created
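One detail of this step is easy to overlook: echo appends a newline, so each file (and therefore each secret value) ends with "\n". The Python sketch below models how file-based secret data could be assembled, assuming each file name becomes a key and the raw file bytes become the base64-encoded value; `secret_data_from_files` is a hypothetical helper, not the kubectl implementation.

```python
import base64
import os
import tempfile


def secret_data_from_files(paths):
    """Model --from-file: key = file name, value = base64 of the raw bytes."""
    data = {}
    for path in paths:
        with open(path, "rb") as fh:
            data[os.path.basename(path)] = base64.b64encode(fh.read()).decode("ascii")
    return data


def demo():
    # Recreate the two files as `echo ... > file` would, trailing newline included.
    files = (("k8s-account.txt", b"k8s-account\n"), ("k8s-pwd.txt", b"k8spass#\n"))
    with tempfile.TemporaryDirectory() as tmp:
        paths = []
        for name, content in files:
            path = os.path.join(tmp, name)
            with open(path, "wb") as fh:
                fh.write(content)
            paths.append(path)
        return secret_data_from_files(paths)


if __name__ == "__main__":
    for key, value in demo().items():
        print(key, value, base64.b64decode(value))
```

If the trailing newline is unwanted in the environment variable, writing the files with `echo -n` or `printf` avoids it.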

## Create the Deployment

yji@k8s-master:~/LABs/sec_deploy$ vim deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-deploy
  labels:
    app: sec-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: sec-app
  template:
    metadata:
      labels:
        app: sec-app
    spec:
      containers:
      - name: testapp
        image: dbgurum/k8s-lab:secret-1.0
        ports:
        - containerPort: 8080
        env:
        - name: SECRET_USERNAME
          valueFrom:
            secretKeyRef:
              name: account-pwd-secret
              key: k8s-account.txt
        - name: SECRET_PASSWORD
          valueFrom:
            secretKeyRef:
              name: account-pwd-secret
              key: k8s-pwd.txt
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: sec-app
  name: web-deploy-svc
spec:
  ports:
  - nodePort: 30303
    port: 8080
    protocol: TCP
    targetPort: 8080
  selector:
    app: sec-app
  type: NodePort

yji@k8s-master:~/LABs/sec_deploy$ kubectl apply -f deploy.yaml

deployment.apps/web-deploy created

yji@k8s-master:~/LABs/sec_deploy$ kubectl get deploy,po,svc -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/web-deploy 2/2 2 2 107s testapp dbgurum/k8s-lab:secret-1.0 app=sec-app

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/web-deploy-595d4d579d-qldcl 1/1 Running 0 107s 10.109.131.55 k8s-node2 <none> <none>

pod/web-deploy-595d4d579d-v8hlw 1/1 Running 0 107s 10.111.156.92 k8s-node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/web-deploy-svc NodePort 10.100.165.119 <none> 8080:30303/TCP 40s app=sec-app

- Access from the master node

- Access from an external host (Windows)

Dashboard access

-> kubectl proxy