Multi-container pod -> ?

📕 Opening up the YAML to look at it in detail

## Redirect the output of kubectl get po [pod name] -o yaml to a file so it is easier to read.

kubectl get po mynode-pod1 -o yaml > net-pod1.yaml

📔 Labeling nodes

yji@k8s-master:~$ kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 5d19h v1.24.5

k8s-node1 Ready <none> 5d19h v1.24.5

k8s-node2 Ready <none> 5d19h v1.24.5

yji@k8s-master:~$ kubectl label nodes k8s-node1 node-role.kubernetes.io/worker=worker

node/k8s-node1 labeled

yji@k8s-master:~$ kubectl label nodes k8s-node2 node-role.kubernetes.io/worker=worker

node/k8s-node2 labeled

yji@k8s-master:~$ kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane 5d19h v1.24.5

k8s-node1 Ready worker 5d19h v1.24.5

k8s-node2 Ready worker 5d19h v1.24.5

- Pod count (large clusters) ---> QoS

- Pods per node: up to 110; nodes per cluster: up to 5,000

- Pods across all nodes: up to 150,000

- Containers in total: up to 300,000

- The four major resources

- cpu + memory + disk + network

📔 Taint lab

yji@k8s-master:~$ kubectl describe no | grep -i taint

💭 taint = "contamination": a mark on a node that repels Pods

Taints: node-role.kubernetes.io/control-plane:NoSchedule

Taints: <none>

Taints: <none>

=> Conclusion) The master node carries a NoSchedule taint: when creating Pods, do not place them on the master.

# ✍ Let's verify

# Create taint-pod

yji@k8s-master:~/LABs/mynode$ cat mynode.yaml

apiVersion: v1
kind: Pod
metadata:
  name: taint-pod
  labels:
    run: nodejs
spec:
  nodeSelector:                        # ⭐ create the Pod on the master
    kubernetes.io/hostname: k8s-master
  containers:
  - image: dbgurum/mynode:1.0
    name: mynode-container
    ports:
    - containerPort: 8000

yji@k8s-master:~/LABs/mynode$ kubectl get po -o wide | grep taint

taint-pod 0/1 ⭐Pending⭐ 0 13s <none> <none> <none> <none>

⭐: The Pod stays Pending! It cannot be scheduled onto the master node.

yji@k8s-master:~/LABs/mynode$ kubectl logs taint-pod

✍ Nothing comes back: the Pod never made it onto the master, so no container has started.
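A taint only keeps out Pods that do not tolerate it. A minimal sketch, assuming you actually want this Pod on the master: add a toleration matching the control-plane taint shown above. The Pod name toleration-pod is hypothetical, and the second toleration (the older node-role.kubernetes.io/master key) is only a precaution, since kubeadm v1.24 may still set that taint as well.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: toleration-pod                       # hypothetical name
  labels:
    run: nodejs
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-master
  tolerations:
  - key: node-role.kubernetes.io/control-plane
    operator: Exists                         # match the key regardless of value
    effect: NoSchedule
  - key: node-role.kubernetes.io/master      # older key; harmless if the taint is absent
    operator: Exists
    effect: NoSchedule
  containers:
  - image: dbgurum/mynode:1.0
    name: mynode-container
    ports:
    - containerPort: 8000
```

With both nodeSelector and the toleration, the Pod should leave Pending and run on k8s-master; the toleration alone would only allow it there, not force it.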

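Node maintenance

Commands for node maintenance

- taint

- Apply NoSchedule to keep Pods from being assigned to the node.

- <-->

- drain

- Evict all Pods from a specific node

- api-server, etcd, scheduler, kubelet, controller

- Draining the master node leaves these 5 components alone (in kubeadm the control-plane components run as static Pods and kubelet runs as a host service, and drain evicts neither).

- cordon / uncordon

- cordon: block new Pods from being assigned to a specific node; existing Pods keep running

- uncordon: make the node schedulable again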
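For reference, kubectl cordon k8s-node1 works by setting spec.unschedulable on the Node object, and kubectl drain first cordons the node and then evicts its Pods. The excerpt below is illustrative (not captured in this lab) of roughly what kubectl get no k8s-node1 -o yaml is expected to show after cordoning; the unschedulable taint is added by the control plane, not by hand.

```yaml
# Illustrative excerpt of a cordoned Node, trimmed to the relevant fields
apiVersion: v1
kind: Node
metadata:
  name: k8s-node1
spec:
  unschedulable: true                       # set by cordon, cleared by uncordon
  taints:
  - key: node.kubernetes.io/unschedulable   # added automatically while unschedulable is true
    effect: NoSchedule
```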

yji@k8s-master:~/LABs/mynode$ kubectl label no k8s-master node-role.kubernetes.io/master=master

yji@k8s-master:~/LABs/mynode$ kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 5d19h v1.24.5

k8s-node1 Ready worker 5d19h v1.24.5

k8s-node2 Ready worker 5d19h v1.24.5

vim taint-pod.yaml

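-> Version issue ... docker-compose

docker run -p -->

kompose -> converts a compose file into Kubernetes YAML (a workload manifest + service.yaml)

: With kompose you can convert a docker compose file and create the workloads (Deployments by default) and Services from it.

docker run -p is related to kube-proxy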

📕 Service

- Traffic is forwarded through kube-proxy, similar to docker-proxy (via the -p option). To look it up:

netstat -nlp | grep 8081, take the PID,

then run ps -ef | grep <PID>.

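Why a Service object is needed

- ClusterIP, NodePort, LoadBalancer ..???

- To reach a Pod from outside, clients use the Service IP:port.

- The Service holds the PodIP:port of each Pod it fronts -> endpoint (route).

- When it is connected to several Pods (matched by label), it load-balances across them on its own.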

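How a Service works

- Once traffic reaches the Service, how does it get to a Pod?

- setup

- Attach one Service to 3 Pods

- In principle, traffic goes through the Service IP and then reaches the Pod by its own IP (as explained in class).

- First, when you create a Service it is automatically registered in ⭐CoreDNS⭐ (kube-dns) => the Service name is mapped to its IP, so clients can reach it by name instead of by IP.

- As a result, when outside traffic comes in through the Service, the destination Service IP:port is rewritten via the endpoints to PodIP:port. (This is the NAT/NAPT we already know, and the component that sets it up is kube-proxy. kube-proxy learns about Services and their endpoints by watching the API server, not from CoreDNS.)

- service IP:port -> EP -> PodIP:port

- NAT, NAPT, iptables (rules)

- kube-proxy (gets Service/endpoint information from the API server; CoreDNS only answers name lookups)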

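Listing all Pods with pall (an alias in this lab environment) shows the kube-proxy Pods. Why are there 3? Because there are 3 nodes: kube-proxy runs as a DaemonSet, so 1 node = 1 kube-proxy.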

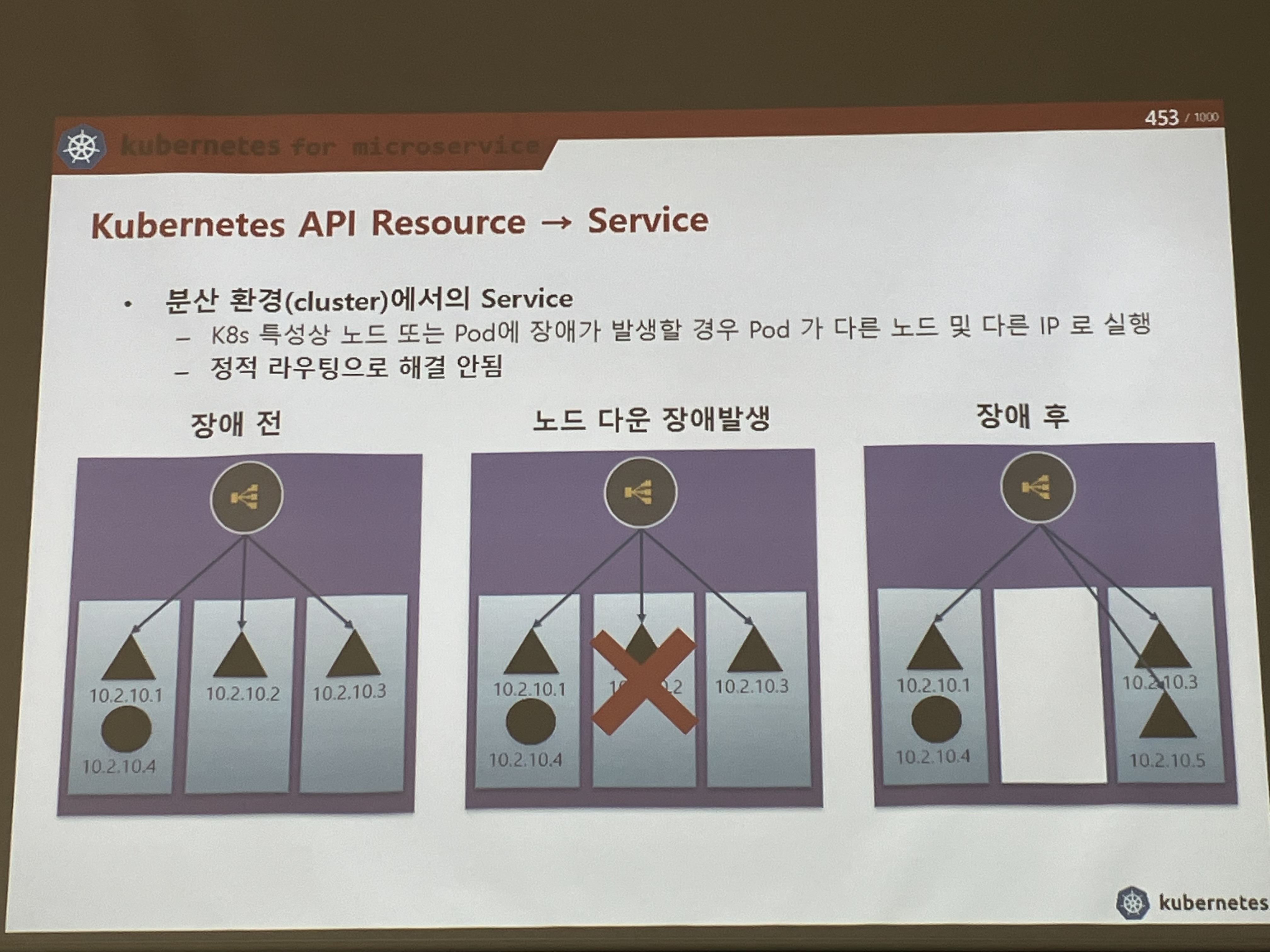

Purpose of a Service

- In k8s, when a node or Pod fails, the Pod is brought back up on another node with a different IP -> so the Service identifies Pods by label, not by IP. Acting like a router, the Service keeps clients pointed at the right Pods even after they move to another node.

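📔 Lab 1) kube-proxy operation (ClusterIP)

- Provides an IP usable only inside the cluster

- Where does the Service IP range come from? Like the Pod range, it is fixed at kubeadm init time. Pod IPs come from --pod-network-cidr, while Service IPs come from --service-cidr, which defaults to 10.96.0.0/12 (2^20, about a million addresses). That is why the ClusterIPs in this lab fall in 10.96.0.0 to 10.111.255.255.

- To choose the Service range yourself, pass --service-cidr (e.g. 20.96.0.0/13: half that size, 2^19 = 524,288 addresses; note that 20.x is not a private RFC 1918 range).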
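The same ranges can also be set in a kubeadm config file instead of on the command line. A minimal sketch, assuming kubeadm v1.24 (config API kubeadm.k8s.io/v1beta3); the podSubnet value is a placeholder for whatever the cluster was actually initialized with. A /12 prefix leaves 32 - 12 = 20 host bits, i.e. 2^20 = 1,048,576 addresses; a /13 leaves 2^19 = 524,288.

```yaml
# Hypothetical kubeadm-config.yaml, used as: kubeadm init --config kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.24.5
networking:
  serviceSubnet: 10.96.0.0/12   # same as the --service-cidr default
  podSubnet: 192.168.0.0/16     # equivalent of --pod-network-cidr (placeholder value)
  dnsDomain: cluster.local      # gives names like kubernetes.default.svc.cluster.local
```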

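frontend - backend - db (web-was-db) -> 3 tiers

- Designing the Services: give the frontend a NodePort or LoadBalancer (external access).

- backend and db only talk to each other inside the cluster, so a ClusterIP is all they need.

- The DB is sometimes made reachable from a company branch office over a VPN.

- Because the DB is a company asset, it is sometimes kept on-premises in the company's server room, with its network connection managed separately.

- Or the server-room DB is kept only as a synchronized copy for backup.

type: ClusterIP can be omitted; it is the default.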
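The 3-tier design above might look like this in YAML. A sketch only: the Service names, tier labels and ports are hypothetical. db-svc has no type field at all, so it gets the default ClusterIP and is reachable only from inside the cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: frontend-svc      # hypothetical
spec:
  type: NodePort          # exposed outside the cluster on every node
  selector:
    tier: frontend
  ports:
  - port: 80
    targetPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: db-svc            # hypothetical
spec:
  # no type field -> ClusterIP (the default), internal only
  selector:
    tier: db
  ports:
  - port: 3306
    targetPort: 3306
```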

- How far can a ClusterIP be reached?

- From master, worker1 and worker2.

- In other words, it works only inside the same cluster (internal use)!

- To reach it from Windows (outside), you need a NodePort or LoadBalancer.

yji@k8s-master:~/LABs/clusterip-test$ vim clusterip-test.yaml

yji@k8s-master:~/LABs/clusterip-test$ kubectl apply -f clusterip-test.yaml

pod/clusterip-pod created

service/clusterip-svc created

yji@k8s-master:~/LABs/clusterip-test$ kubectl get pod/clusterip-pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

✍clusterip-pod 1/1 Running 0 11s 10.111.156.110 ✍k8s-node1 <none> <none>

yji@k8s-master:~/LABs/clusterip-test$ kubectl get service/clusterip-svc -o yaml | grep IP

{"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"clusterip-svc","namespace":"default"},"spec":{"ports":[{"port":9000,"targetPort":8080}],"selector":{"app":"backend"},"type":"ClusterIP"}}

clusterIP: ✍10.108.13.251

clusterIPs:

- IPv4

type: ClusterIP

yji@k8s-master:~/LABs/clusterip-test$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

clusterip-svc ClusterIP ✍10.108.13.251 <none> 9000/TCP 32s

yji@k8s-master:~/LABs/clusterip-test$ kubectl describe svc clusterip-svc | grep -i endpoint

Endpoints: ✍10.111.156.110:8080

yji@k8s-master:~/LABs/clusterip-test$ kubectl get endpoints clusterip-svc

NAME ENDPOINTS AGE

clusterip-svc 10.111.156.110:8080 2m17s
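The contents of clusterip-test.yaml are not printed above, but the Service half can be read back from the last-applied-configuration annotation in the grep output. Reconstructed from that annotation (the Pod half is not recorded there, so it is left out; it only needs the label app: backend and a container listening on 8080):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: clusterip-svc
spec:
  type: ClusterIP       # could be omitted: it is the default
  selector:
    app: backend        # clusterip-pod must carry this label
  ports:
  - port: 9000          # port on the ClusterIP (10.108.13.251:9000)
    targetPort: 8080    # port in the Pod (endpoint 10.111.156.110:8080)
```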

## The ClusterIP can be queried from any node in the cluster

yji@k8s-master:~/LABs/clusterip-test$ curl 10.108.13.251:9000/hostname

Hostname : clusterip-pod

- coredns -> dnsutils -> dig, /etc/resolv.conf

yji@k8s-master:~$ kubectl apply -f https://k8s.io/examples/admin/dns/dnsutils.yaml

pod/dnsutils created

yji@k8s-master:~$ kubectl get po

NAME READY STATUS RESTARTS AGE

dnsutils 1/1 Running 0 22s

yji@k8s-master:~$ kubectl exec -it dnsutils -- nslookup kubernetes.default

Server: 10.96.0.10

Address: ✍ 10.96.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.96.0.1

yji@k8s-master:~$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

✍kubernetes ClusterIP ✍ 10.96.0.1 <none> 443/TCP 5d21h <none>

✍ Same IP as the kubernetes.default address returned by nslookup!

yji@k8s-master:~$ kubectl exec -it dnsutils -- cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.96.0.10

options ndots:5
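ndots:5 means any name with fewer than 5 dots is first tried with each search suffix appended, which is how kubernetes.default (1 dot) resolves to kubernetes.default.svc.cluster.local. kubelet generates this file from the Pod's dnsPolicy, and it can be tuned per Pod. A sketch with a hypothetical Pod name that lowers ndots to 2:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: dns-ndots-demo        # hypothetical
spec:
  dnsPolicy: ClusterFirst     # the default: resolve through CoreDNS (10.96.0.10 here)
  dnsConfig:
    options:
    - name: ndots
      value: "2"              # names with 2+ dots are tried as-is before the search list
  containers:
  - name: shell
    image: busybox
    command: ["sleep", "3600"]
```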

yji@k8s-master:~$ kubectl get po -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-6d4b75cb6d-bppcr 1/1 Running 2 (26h ago) 5d21h

coredns-6d4b75cb6d-d5r65 1/1 Running 2 (26h ago) 5d21h

## For the DNS test, create a Pod and attach a Service to it

vim dns-test.yaml

## DNS TEST -> the PodIP and the ServiceIP (ClusterIP) -> registered in CoreDNS

## -> check with nslookup that they were registered correctly

apiVersion: v1
kind: Pod
metadata:
  name: dns-pod
  labels:
    dns: verify
spec:
  containers:
  - image: redis        # in-memory DB; in the cloud it is mostly used as a cache (cf. Memcached)
    name: redis-dns
    ports:
    - containerPort: 6379
---
apiVersion: v1
kind: Service
metadata:
  name: dns-svc
spec:
  selector:
    dns: verify
  ports:
  - port: 6379          # Service port: like the left (host) side of docker run -p
    targetPort: 6379    # container port: like the right side

yji@k8s-master:~/LABs/dns-test$ kubectl apply -f dns-test.yaml

pod/dns-pod created

service/dns-svc created

yji@k8s-master:~/LABs/dns-test$ kubectl get po,svc -o wide | grep dns

pod/dns-pod 1/1 Running 0 39s 💚10.111.156.111 (podIP) k8s-node1 <none> <none>

service/dns-svc ClusterIP 💙10.97.202.207 (clusterIP) <none> 6379/TCP 39s dns=verify

# Spin up a busybox Pod (a tiny image of only a few MB) for the DNS test!

## nslookup works == CoreDNS is doing its job

yji@k8s-master:~/LABs/dns-test$ kubectl run dnspod-verify --image=busybox --restart=Never --rm -it -- nslookup 💚10.111.156.111

Server: 10.96.0.10

Address: 10.96.0.10:53

111.156.111.10.in-addr.arpa name = 10-111-156-111.dns-svc.default.svc.cluster.local

pod "dnspod-verify" deleted ⭐ : deleted right away because of the --rm option.

yji@k8s-master:~/LABs/dns-test$ kubectl run dnspod-verify --image=busybox --restart=Never --rm -it -- nslookup 💙10.97.202.207

Server: 10.96.0.10

Address: 10.96.0.10:53

207.202.97.10.in-addr.arpa name = dns-svc.default.svc.cluster.local

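pod "dnspod-verify" deleted

🐳 NodePort

- external: used for access from outside the cluster

- A port is picked at random from the 30000 ~ 32767 range and opened on every node, including the master

- kube-proxy holds the routing rules (route table) -> outside traffic reaches the NodePort Service and is forwarded to a target Pod.

- kube-proxy -> holds the route table -> outside traffic -> (dest) Service (NP) -> Pods

- nodePort -> assigned automatically (at random) or set manually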

ports:
- port: 8080
  targetPort: 80
  nodePort: 31111

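## 🧡 Port path of incoming traffic: outside traffic in -> 31111 (nodePort, open on every node) -> 8080 (Service port on the ClusterIP) -> 80 (targetPort on the Pod)

📔 Checking from Windows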

vim nodePort.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mynode-pod
  labels:
    app: hi-mynode
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node1
  containers:
  - name: mynode-container
    image: ur2e/mynode:1.0
    ports:
    - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: mynode-svc
spec:
  selector:
    app: hi-mynode
  type: NodePort
  ports:
  - port: 8899
    targetPort: 8000

yji@k8s-master:~/LABs/nodePort$ kubectl apply -f nodePort.yaml

pod/mynode-pod created

service/mynode-svc created

yji@k8s-master:~/LABs/nodePort$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mynode-pod 1/1 Running 0 3s 10.111.156.114 ⭐k8s-node1 <none> <none>

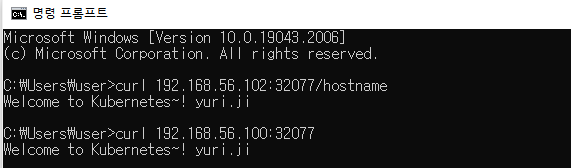

yji@k8s-master:~/LABs/nodePort$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mynode-svc NodePort 10.108.244.115 <none> 8899:⭐32077/TCP 62s

yji@k8s-master:~/LABs/nodePort$ kubectl get endpoints mynode-svc

NAME ENDPOINTS AGE

mynode-svc 10.111.156.114:8000 111s

yji@k8s-master:~/LABs/nodePort$ curl 192.168.56.100:32077/hostname

Welcome to Kubernetes~! yuri.ji

yji@k8s-master:~/LABs/nodePort$ curl 192.168.56.100:32077

Welcome to Kubernetes~! yuri.ji

yji@k8s-master:~/LABs/nodePort$ curl 192.168.56.101:32077/hostname

Welcome to Kubernetes~! yuri.ji

yji@k8s-master:~/LABs/nodePort$ curl 192.168.56.102:32077/hostname

Welcome to Kubernetes~! yuri.ji

- It can be reached from Windows too!

Lab) lb pod

vim lb-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: lb-pod1
  labels:
    lb: pod
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node1
  containers:
  - image: dbgurum/k8s-lab:v1.0
    name: pod1
    ports:
    - containerPort: 8080
---
apiVersion: v1
kind: Pod
metadata:
  name: lb-pod2
  labels:
    lb: pod
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node2
  containers:
  - image: dbgurum/k8s-lab:v1.0
    name: pod2
    ports:
    - containerPort: 8080

yji@k8s-master:~/LABs/lb$ kubectl apply -f lb-pod.yaml

pod/lb-pod1 created

pod/lb-pod2 created

yji@k8s-master:~/LABs/lb$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

lb-pod1 1/1 Running 0 66s 10.111.156.115 k8s-node1 <none> <none>

lb-pod2 1/1 Running 0 66s 10.109.131.42 k8s-node2 <none> <none>

yji@k8s-master:~/LABs/lb$ vim lb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: nodep-svc
spec:
  selector:
    lb: pod
  ports:
  - port: 9000
    targetPort: 8080
    nodePort: 32568
  type: NodePort

yji@k8s-master:~/LABs/lb$ kubectl apply -f lb-svc.yaml

service/nodep-svc created

yji@k8s-master:~/LABs/lb$ kubectl get po,svc -o wide | grep lb

pod/lb-pod1 1/1 Running 0 3m30s 10.111.156.115 k8s-node1 <none> <none>

pod/lb-pod2 1/1 Running 0 3m30s 10.109.131.42 k8s-node2 <none> <none>

service/nodep-svc NodePort 10.96.146.245 <none> 9000:32568/TCP 29s lb=pod

## Check that load balancing works

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1


kubectl edit svc nodep-svc

apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"nodep-svc","namespace":"default"},"spec":{"ports":[{"nodePort":32568,"port":9000,"targetPort":8080}],"selector":{"lb":"pod"},"type":"NodePort"}}
  creationTimestamp: "2022-10-05T03:20:56Z"
  name: nodep-svc
  namespace: default
  resourceVersion: "132800"
  uid: 87775134-adbd-499f-af09-a72150a70493
spec:
  clusterIP: 10.96.146.245
  clusterIPs:
  - 10.96.146.245
  externalTrafficPolicy: Cluster   # ⭐ the default: traffic can be load-balanced to Pods on any node. Change this and internalTrafficPolicy (next line) to Local.
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 32568
    port: 9000
    protocol: TCP
    targetPort: 8080
  selector:
    lb: pod
  sessionAffinity: None
  type: NodePort
status:
  loadBalancer: {}

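## curl again -> no more load balancing!

(With externalTrafficPolicy: Local, a node forwards NodePort traffic only to Pods running on that same node. 192.168.56.101 is k8s-node1, so every request lands on lb-pod1.)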

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

## Revert to Cluster

yji@k8s-master:~/LABs/lb$ kubectl edit svc nodep-svc

service/nodep-svc edited

## Load balancing works again

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod1

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

Hostname : lb-pod2

yji@k8s-master:~/LABs/lb$ curl 192.168.56.101:32568/hostname

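Hostname : lb-pod2

💭 Key attributes when designing an application (distributed | local)

- NodePort: externalTrafficPolicy: Cluster | Local

- ClusterIP: sessionAffinity plays a similar role (ClientIP pins each client to one Pod, much like Local)

- Checking sessionAffinity

None: distributed (the default)

ClientIP: pinned to one Pod per client IP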
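To get the pinned behaviour, set sessionAffinity: ClientIP. A minimal sketch of mongo-svc written that way; the selector label and port 27017 (MongoDB's default port) are assumptions, since the original mongo-svc spec is not shown. timeoutSeconds defaults to 10800 (3 hours).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongo-svc
spec:
  selector:
    app: mongo                # hypothetical label
  ports:
  - port: 27017
    targetPort: 27017
  sessionAffinity: ClientIP   # None (default) = distributed, ClientIP = sticky per client
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800   # how long a client stays pinned to the same Pod
```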

kubectl get svc mongo-svc -o yaml > sassaff.yaml

vim sassaff.yaml

apiVersion: v1
kind: Service
metadata:
  name: mongo-svc
...
  sessionAffinity: None   # ⭐ => distributed
  type: ClusterIP

- LoadBalancer

- -> a technique that assigns a public IP as the EXTERNAL-IP

- mainly used in cloud environments

- in VM environments (no cloud provider), it can be implemented with MetalLB
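A minimal MetalLB layer-2 setup, assuming MetalLB v0.13 or later (configured through CRDs) is already installed in the metallb-system namespace. The pool name and address range are hypothetical; pick unused addresses on the VMs' host-only network (192.168.56.x in this lab). After this, a type: LoadBalancer Service should get an EXTERNAL-IP from the pool instead of staying <pending>.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: lab-pool                       # hypothetical
  namespace: metallb-system
spec:
  addresses:
  - 192.168.56.200-192.168.56.220      # hypothetical free range
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: lab-l2                         # hypothetical
  namespace: metallb-system
spec:
  ipAddressPools:
  - lab-pool                           # answer ARP for addresses from this pool
```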

in GCP

notepad lb-test.yaml

apiVersion: v1
kind: Pod
metadata:
  name: lb-pod
  labels:
    lb: pod
spec:
  containers:
  - image: dbgurum/k8s-lab:v1.0
    name: pod1
    ports:
    - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: nodep-svc
spec:
  selector:
    lb: pod
  ports:
  - port: 9000
    targetPort: 8080
  type: LoadBalancer

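Under the hood, traffic still enters through a NodePort: a LoadBalancer Service is also allocated a nodePort, and the cloud load balancer typically forwards to it.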

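📔 Lab) LB test with a Deployment

- Create a cluster in GKE and check the LB algorithm (round robin? random?)

The responses come back in no fixed order -> distribution via the NodePort path .. (likely because kube-proxy in iptables mode picks a backend at random rather than in strict rotation)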

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-w98mh

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-rdvvw

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-7b5b4

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-w98mh

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-7b5b4

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-w98mh

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-w98mh

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-w98mh

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-7b5b4

C:\k8s\LABs>curl 34.64.241.16:9000/hostname

Hostname : lb-deploy-55d7988bf7-rdvvw

📕 Checking desired state management

- To keep replicas: 3, a Pod is recreated as soon as one is deleted.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: lb-deploy
  labels:
    app: lb-app
spec:
  replicas: 3          # on apply, spread 3 Pods
  selector:
    matchLabels:
      app: lb-app
  template:            # spec of the Pods to create, including replacements when a running Pod dies
    metadata:
      labels:
        app: lb-app
    spec:
      containers:
      - image: dbgurum/k8s-lab:v1.0
        name: lb-container
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: lb-svc
spec:
  selector:
    app: lb-app
  ports:
  - port: 9000
    targetPort: 8080
  type: LoadBalancer

# Terminal 1

C:\k8s\LABs>kubectl delete po lb-deploy-55d7988bf7-w98mh lb-deploy-55d7988bf7-rdvvw

pod "lb-deploy-55d7988bf7-w98mh" deleted

pod "lb-deploy-55d7988bf7-rdvvw" deleted

Run the command below right after deleting..!!

# 💙 Terminal 2 -> replacement Pods are created immediately to keep replicas: 3!

# 💙 New Pods are created from the template section of the YAML file.

kubectl get deploy,po -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/lb-deploy 1/3 3 1 5m28s lb-container dbgurum/k8s-lab:v1.0 app=lb-app

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/lb-deploy-55d7988bf7-2j5bm 0/1 ⭐ ContainerCreating 0 1s <none> gke-k8s-cluster-k8s-node-pool-3e9e423a-gfwg <none> <none>

pod/lb-deploy-55d7988bf7-7b5b4 1/1 Running 0 5m28s 10.12.2.9 gke-k8s-cluster-k8s-node-pool-3e9e423a-42v6 <none> <none>

pod/lb-deploy-55d7988bf7-pl9sr 0/1 ⭐ ContainerCreating 0 1s <none> gke-k8s-cluster-k8s-node-pool-3e9e423a-wnnk <none> <none>

pod/lb-deploy-55d7988bf7-rdvvw 1/1 Terminating 0 5m28s 10.12.0.8 gke-k8s-cluster-k8s-node-pool-3e9e423a-wnnk <none> <none>

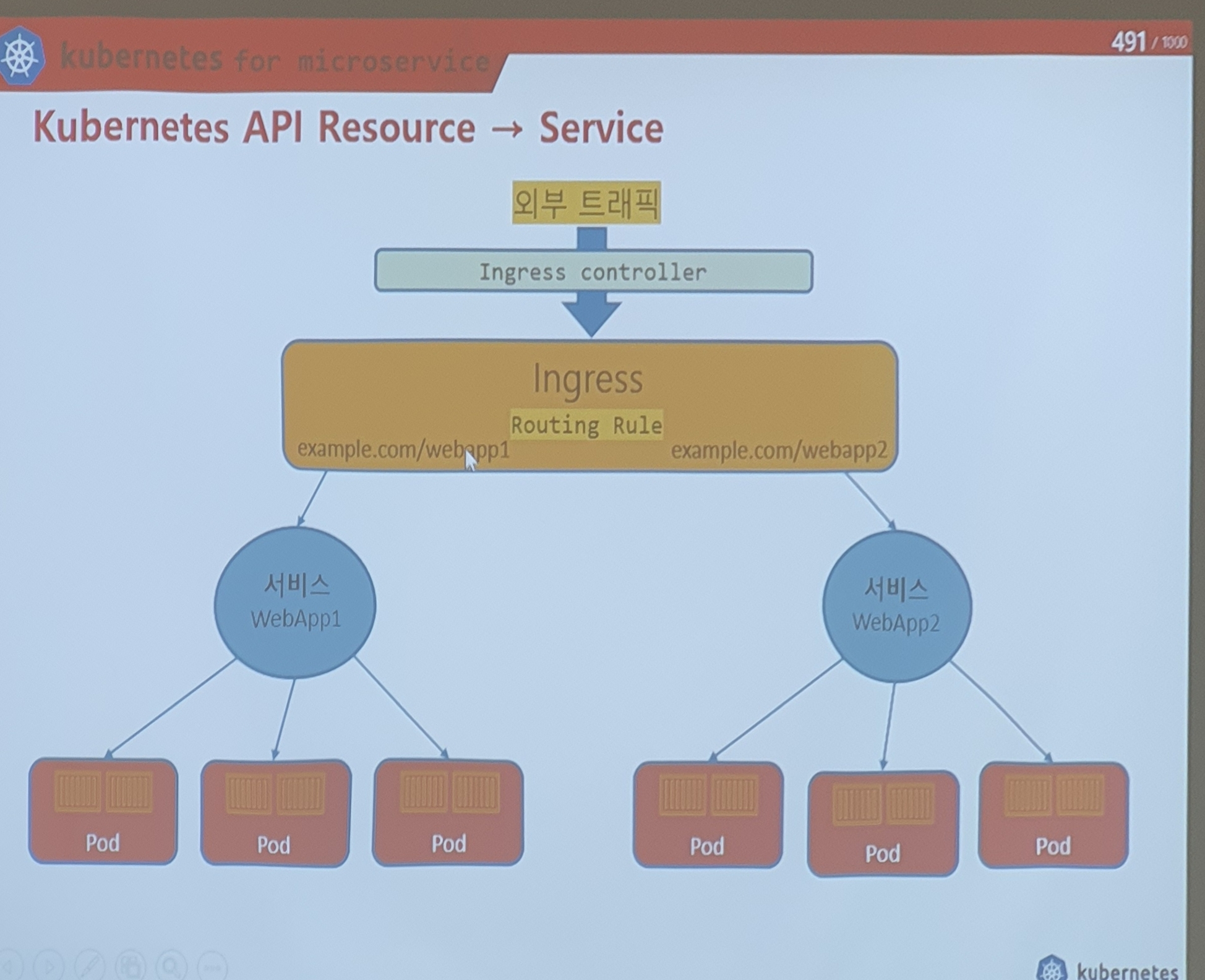

pod/lb-deploy-55d7988bf7-w98mh 1/1 Terminating 0 5m28s 10.12.1.9 gke-k8s-cluster-k8s-node-pool-3e9e423a-gfwg <none> <none>

📕 ingress

- (L7) http(80), https(443 -> tls -> openssl -> *.crt, Secret)

Today's lab uses HTTP only.

- rules? They decide where incoming traffic is routed.

- hence it is also called a smart router (user-defined routing)

- www.example.com -> rule -> service1 -> Pods

- www.example.com/customer -> rule -> service2 -> Pods -> Container -> Application

- www.example.com/payment -> rule -> service3 -> Pods

So the roles are:

- routing (Ingress) --- load balancing (LB) --- business logic (biz logic)

- [ingress controller] -> nginx -> a proxy service exposed NodePort-style

- used to build HTTP-based services! -> well suited to a microservice (MSA) architecture

1) ingress controller

- an nginx-based service -> install following https://kubernetes.github.io/ingress-nginx/deploy/#bare-metal-clusters -> kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.4.0/deploy/static/provider/baremetal/deploy.yaml

2) ingress object

3) ingress service

4) pod

The most important part of an Ingress = its rules

### Let's write a simple rule: "when a request comes in on this path, go there".

## 1) Start the ingress controller

yji@k8s-master:~$ kubectl get all -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller NodePort 10.111.33.223 <none> ✍80:30486✍/TCP,443:30477/TCP 2m55s

yji@k8s-master:~/LABs/ingress$ kubectl api-resources | grep -i ingress

ingressclasses networking.k8s.io/v1 false IngressClass

ingresses ing networking.k8s.io/v1 true Ingress

vim ing-pod-svc.yaml

apiVersion: v1
kind: Pod
metadata:
  name: hi-pod
  labels:
    run: hi-app
spec:
  containers:
  - image: dbgurum/ingress:hi
    name: hi-container
    args:
    - "-text=HI! Kubernetes."   # '-text=' as in the later lab; the original '-text:' likely caused the CrashLoopBackOff below
    # check with: curl IP:30486/hi
---
apiVersion: v1
kind: Service
metadata:
  name: hi-svc
spec:
  selector:
    run: hi-app
  ports:
  - port: 5678

vim ingress.yaml

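# www.example.com -> .com/hi -> routed to the service (hi-svc)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-hi
  annotations:
    kubernetes.io/ingress.class: "nginx"       # deprecated; newer manifests use spec.ingressClassName: nginx
    ingress.kubernetes.io/rewrite-target: /    # ingress-nginx reads the nginx.ingress.kubernetes.io/ prefix, so this key is likely ignored
spec:
  rules:
  - http:
      paths:
      - path: /hi            # when a request comes in on /hi
        pathType: Prefix     # ...or on any path under it
        backend:
          service:
            name: hi-svc     # send it to hi-svc
            port:
              number: 5678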

yji@k8s-master:~/LABs/ingress$ kubectl describe ingress http-hi

Name: http-hi

Labels: <none>

Namespace: default

Address: 192.168.56.102

Ingress Class: <none>

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/hi hi-svc:5678 ()

Annotations: ingress.kubernetes.io/rewrite-target: /

kubernetes.io/ingress.class: nginx

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 9s (x2 over 28s) nginx-ingress-controller Scheduled for sync

yji@k8s-master:~/LABs/ingress$ kubectl get ing,po,svc -o wide | grep -i hi

ingress.networking.k8s.io/http-hi <none> * 192.168.56.102 80 94s

pod/hi-pod 0/1 CrashLoopBackOff 6 (3m40s ago) 9m28s 10.111.156.118 k8s-node1 <none> <none>

service/hi-svc ClusterIP 10.103.209.40 <none> 5678/TCP 9m28s run=hi-app

service/mynode-svc NodePort 10.108.244.115 <none> 8899:32077/TCP 179m app=hi-mynode

yji@k8s-master:~/LABs/ingress$ curl 192.168.56.102:30486/hi

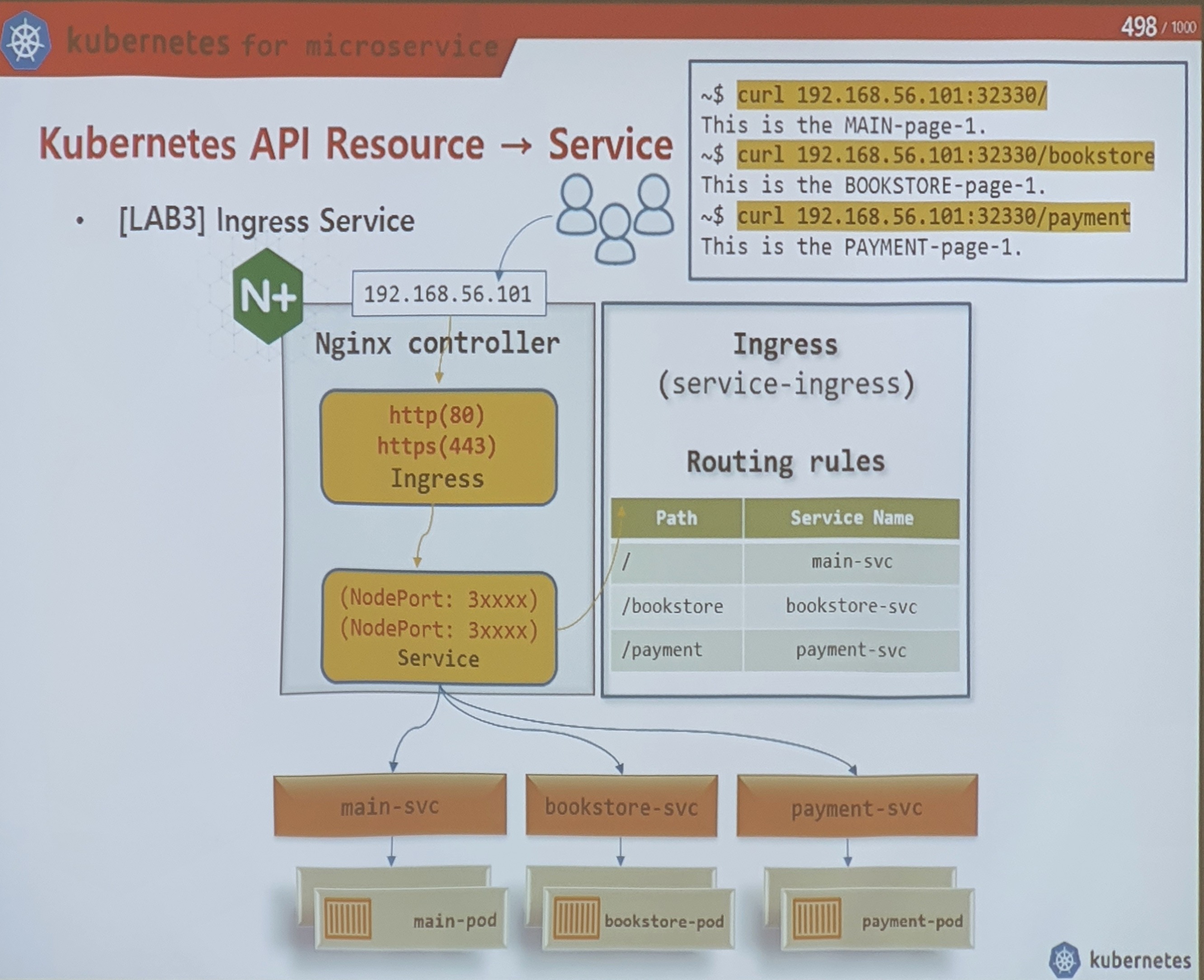

Hi! kubernetes

📕 Lab: ingress service

vim ingress_lab3.yaml

apiVersion: v1
kind: Pod
metadata:
  name: main-pod
  labels:
    run: main-app
spec:
  containers:
  - image: dbgurum/ingress:hi
    name: hi-container
    args:
    - "-text=This is the MAIN-page-1."
---
apiVersion: v1
kind: Pod
metadata:
  name: bookstore-pod
  labels:
    run: bookstore-app
spec:
  containers:
  - image: dbgurum/ingress:hi
    name: hi-container
    args:
    - "-text=This is the BOOKSTORE-page-1."
---
apiVersion: v1
kind: Pod
metadata:
  name: payment-pod
  labels:
    run: payment-app
spec:
  containers:
  - image: dbgurum/ingress:hi
    name: hi-container
    args:
    - "-text=This is the PAYMENT-page-1."
---
apiVersion: v1
kind: Service
metadata:
  name: main-svc
spec:
  selector:
    run: main-app
  ports:
  - port: 5678
---
apiVersion: v1
kind: Service
metadata:
  name: bookstore-svc
spec:
  selector:
    run: bookstore-app
  ports:
  - port: 5678
---
apiVersion: v1
kind: Service
metadata:
  name: payment-svc
spec:
  selector:
    run: payment-app
  ports:
  - port: 5678
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: http-hi
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: main-svc
            port:
              number: 5678
      - path: /bookstore
        pathType: Prefix
        backend:
          service:
            name: bookstore-svc
            port:
              number: 5678
      - path: /payment
        pathType: Prefix
        backend:
          service:
            name: payment-svc
            port:
              number: 5678

yji@k8s-master:~/LABs/ingress$ kubectl apply -f lab-ing.yaml

ingress.networking.k8s.io/http-hi created

yji@k8s-master:~/LABs/ingress$ curl 192.168.56.102:30486/bookstore

This is the BOOKSTORE-page-1.

yji@k8s-master:~/LABs/ingress$ curl 192.168.56.102:30486/main

This is the MAIN-page-1.

yji@k8s-master:~/LABs/ingress$ curl 192.168.56.102:30486/bookstore

This is the BOOKSTORE-page-1.

yji@k8s-master:~/LABs/ingress$ curl 192.168.56.102:30486/payment

This is the PAYMENT-page-1.

yji@k8s-master:~/LABs/ingress$ kubectl describe ingress http-hi

Name: http-hi

Labels: <none>

Namespace: default

Address: 192.168.56.102

Ingress Class: <none>

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ main-svc:5678 (10.111.156.65:5678)

/bookstore bookstore-svc:5678 (10.111.156.74:5678)

/payment payment-svc:5678 (10.109.131.48:5678)

Annotations: ingress.kubernetes.io/rewrite-target: /

kubernetes.io/ingress.class: nginx

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 7m41s (x2 over 8m7s) nginx-ingress-controller Scheduled for sync

-> This works from Windows too.
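The rules above match on path only (host *). The www.example.com examples earlier would also match on the Host header. A sketch with a hypothetical Ingress name that reuses bookstore-svc, and uses spec.ingressClassName, the field that replaces the deprecated kubernetes.io/ingress.class annotation:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-demo               # hypothetical
spec:
  ingressClassName: nginx       # IngressClass created by the ingress-nginx install
  rules:
  - host: www.example.com       # only requests with this Host header match
    http:
      paths:
      - path: /bookstore
        pathType: Prefix
        backend:
          service:
            name: bookstore-svc
            port:
              number: 5678
```

Without DNS for www.example.com, the header can be set by hand: curl -H "Host: www.example.com" 192.168.56.102:30486/bookstore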

Volumes & data

- emptyDir (inside the Pod)

- hostPath (container to host)

- external: NFS, gitRepo

- PV / PVC -> Pod

- volume

- sharing, persistence (does the data share the Pod's life cycle or not?)

Volume lab 1) emptyDir

- It is volatile.

yji@k8s-master:~/LABs/emptydir$ vim empty-1.yaml

apiVersion: v1
kind: Pod
metadata:
  name: vol-pod1
spec:
  containers:
  - image: dbgurum/k8s-lab:initial
    name: c1
    volumeMounts:
    - name: empty-vol
      mountPath: /mount1
  - image: dbgurum/k8s-lab:initial
    name: c2
    volumeMounts:
    - name: empty-vol
      mountPath: /mount2
  volumes:
  - name: empty-vol
    emptyDir: {}

yji@k8s-master:~/LABs/emptydir$ kubectl apply -f empty-1.yaml

pod/vol-pod1 created

yji@k8s-master:~/LABs/emptydir$ kubectl get po -o wide | grep vol

vol-pod1 2/2 Running 0 9s 10.111.156.75 k8s-node1 <none> <none>

## Connect to container c1

yji@k8s-master:~/LABs/emptydir$ kubectl exec -it vol-pod1 -c c1 -- bash

[root@vol-pod1 /]#

[root@vol-pod1 /]#df -h

Filesystem Size Used Avail Use% Mounted on

overlay 66G 17G 49G 26% /

tmpfs 64M 0 64M 0% /dev

/dev/sda1 66G 17G 49G 26% 💙/mount1

shm 64M 0 64M 0% /dev/shm

tmpfs 3.8G 12K 3.8G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 2.0G 0 2.0G 0% /proc/acpi

tmpfs 2.0G 0 2.0G 0% /proc/scsi

tmpfs 2.0G 0 2.0G 0% /sys/firmware

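💙 /mount1 only looks like a separate disk: it is backed by the node's own disk (/dev/sda1, the same size as the overlay root).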

[root@vol-pod1 /]# cd /mount1/

[root@vol-pod1 mount1]# echo 'i love k8s' > k8s-1.txt

[root@vol-pod1 mount1]# cat k8s-1.txt

i love k8s

[root@vol-pod1 mount1]# exit

exit

yji@k8s-master:~/LABs/emptydir$ kubectl exec -it vol-pod1 -c c2 -- bash

[root@vol-pod1 /]# cd /mount2/

[root@vol-pod1 mount2]# ls

k8s-1.txt

[root@vol-pod1 mount2]# cat k8s-1.txt

i love k8s

💙 /mount1 in container c1 and /mount2 in container c2 point to the same volume, so the file is shared.
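By default an emptyDir lives on the node's disk, which is why df showed /dev/sda1. It can be backed by RAM (tmpfs) instead. A sketch of a hypothetical variant of vol-pod1; files written to a memory-backed emptyDir count toward the containers' memory limits, so setting sizeLimit is a good idea.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: vol-pod-mem            # hypothetical variant of vol-pod1
spec:
  containers:
  - image: dbgurum/k8s-lab:initial
    name: c1
    volumeMounts:
    - name: empty-vol
      mountPath: /mount1
  - image: dbgurum/k8s-lab:initial
    name: c2
    volumeMounts:
    - name: empty-vol
      mountPath: /mount2
  volumes:
  - name: empty-vol
    emptyDir:
      medium: Memory           # tmpfs instead of the node's disk
      sizeLimit: 64Mi          # cap the volume's size
```

It is still volatile in the same way: the data disappears together with the Pod.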

---

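## Copy a file from inside the container to the host

✍ The path is written as mount1, not /mount1. A relative path is resolved against the container's working directory (/ here), so it reaches the same file; an absolute path also works, tar just warns that it is removing the leading '/'.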

yji@k8s-master:~/LABs/emptydir$ kubectl cp vol-pod1:mount1/k8s-1.txt -c c1 ./k8s-1.txt

yji@k8s-master:~/LABs/emptydir$ ls

empty-1.yaml k8s-1.txt

⭐⭐⭐ This storage is not persistent == it shares the Pod's life cycle == volatile! Let's verify.

yji@k8s-master:~/LABs/emptydir$ kubectl delete -f empty-1.yaml

pod "vol-pod1" deleted

yji@k8s-master:~/LABs/emptydir$ kubectl apply -f empty-1.yaml

pod/vol-pod1 created

yji@k8s-master:~/LABs/emptydir$ kubectl exec -it vol-pod1 -c c1 -- bash

[root@vol-pod1 /]# cd /mount1/

[root@vol-pod1 mount1]# ls

[root@vol-pod1 mount1]# (nothing there!)

yji@k8s-master:~/LABs/emptydir$ kubectl describe po vol-pod1

Name: vol-pod1

Namespace: default

Priority: 0

Node: k8s-node1/192.168.56.101

Start Time: Wed, 05 Oct 2022 16:38:12 +0900

Labels: <none>

Annotations: cni.projectcalico.org/containerID: 717111d310bbb0023bc6e29d2e944df2e76c4337cb7d31aa5a252af33daec54f

cni.projectcalico.org/podIP: 10.111.156.73/32

cni.projectcalico.org/podIPs: 10.111.156.73/32

Status: Running

IP: 10.111.156.73

IPs:

IP: 10.111.156.73

Containers:

c1:

Container ID: containerd://b4616e8ff8b33783f1eb96dc84d61666b8be0b842efbf218d24c32bc569a25fd

Image: dbgurum/k8s-lab:initial

Image ID: docker.io/dbgurum/k8s-lab@sha256:99effab9fe2d87744053ebe96c5a92dc6f141b6504fac970a293c5dd1a726fbd

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 05 Oct 2022 16:38:13 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/mount1 from empty-vol (rw)

💚 (rw) means read-write -> so read-only should be available as an option too

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-cq5rh (ro)

c2:

Container ID: containerd://33e842814edbf13b4ed2c072480311910bdf6f042ee2cccf2e2a147d551f8af0

Image: dbgurum/k8s-lab:initial

Image ID: docker.io/dbgurum/k8s-lab@sha256:99effab9fe2d87744053ebe96c5a92dc6f141b6504fac970a293c5dd1a726fbd

Port: <none>

Host Port: <none>

State: Running

Started: Wed, 05 Oct 2022 16:38:13 +0900

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/mount2 from empty-vol (rw)

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-cq5rh (ro)

read-only (ro)

volumeMounts:
- name: empty-vol
  mountPath: /mount1
  readOnly: true   # add this option!

Volume lab 2) HostOS (hostPath)

/var/www/html

/usr/share/nginx/html -> nginx
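hostpath-no1.yaml and hostpath-no2.yaml are not shown, so the following is only a hypothetical sketch of the idea behind the two paths above: mount a directory on the node (/var/www/html) over nginx's document root (/usr/share/nginx/html). The Pod name and the node choice are assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-web                     # hypothetical
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node1    # hostPath data is tied to this node
  containers:
  - name: nginx
    image: nginx:1.21-alpine
    volumeMounts:
    - name: web-root
      mountPath: /usr/share/nginx/html   # nginx's default document root
      readOnly: true
  volumes:
  - name: web-root
    hostPath:
      path: /var/www/html                # directory on the node
      type: DirectoryOrCreate
```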

vim hostpath-no1.yaml

vim hostpath-no2.yaml

yji@k8s-master:~/LABs/hostpath$ kubectl apply -f hostpath-no1.yaml

pod/pod-vol2 created

yji@k8s-master:~/LABs/hostpath$ kubectl apply -f hostpath-no2.yaml

pod/pod-vol3 created

Protecting key data with hostPath + NFS -> data persistence

vim hostpath_NFS.yaml

apiVersion: v1
kind: Pod
metadata:
  name: weblog-pod
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node2
  containers:
  - image: nginx:1.21-alpine
    name: nginx-web
    volumeMounts:
    - name: host-path
      mountPath: /var/log/nginx
  volumes:
  - name: host-path
    hostPath:
      path: /data_dir/web-log
      type: DirectoryOrCreate
---
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node2
  containers:
  - name: mysql-container
    image: mysql:8.0
    volumeMounts:
    - name: host-path
      mountPath: /var/lib/mysql
    env:
    - name: MYSQL_ROOT_PASSWORD
      value: "password"
  volumes:
  - name: host-path
    hostPath:
      path: /data_dir/mysql-data
      type: DirectoryOrCreate

yji@k8s-master:~/LABs/hostpath_NFS$ kubectl apply -f test.yaml

pod/weblog-pod created

pod/mysql-pod configured

yji@k8s-master:~/LABs/hostpath_NFS$ kubectl get po

NAME READY STATUS RESTARTS AGE

mysql-pod 1/1 Running 0 2m26s

weblog-pod 1/1 Running 0 111s

### Move to node2

yji@k8s-node2:/$ cd /data_dir/

yji@k8s-node2:/data_dir$ ls

mysql-data web-log

## Check the MySQL volume

yji@k8s-node2:/data_dir$ ls mysql-data/

auto.cnf client-key.pem '#innodb_temp' server-cert.pem

binlog.000001 '#ib_16384_0.dblwr' mysql server-key.pem

binlog.000002 '#ib_16384_1.dblwr' mysql.ibd sys

binlog.index ib_buffer_pool mysql.sock undo_001

ca-key.pem ibdata1 performance_schema undo_002

ca.pem ibtmp1 private_key.pem

client-cert.pem '#innodb_redo' public_key.pem

## Check the nginx volume

yji@k8s-node2:/data_dir$ ls web-log/

access.log error.log
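The lab above covers only the hostPath half: the data survives Pod deletion but stays tied to k8s-node2. The NFS half might look like the sketch below; the Pod name, server address and export path are hypothetical, and every node needs an NFS client installed (e.g. the nfs-common package on Ubuntu).

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: weblog-nfs-pod        # hypothetical
spec:
  containers:
  - image: nginx:1.21-alpine
    name: nginx-web
    volumeMounts:
    - name: nfs-vol
      mountPath: /var/log/nginx
  volumes:
  - name: nfs-vol
    nfs:
      server: 192.168.56.100  # hypothetical NFS server
      path: /data_dir/web-log # hypothetical exported directory
```

Unlike hostPath, no nodeSelector is needed: whichever node runs the Pod mounts the same export.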

Notes

To delete a label, append '-' to the key (e.g. kubectl label nodes k8s-node1 node-role.kubernetes.io/worker-).

Objects for Pods

- Container

- Label

- Node schedule

Objects for Services (networking) .... (kube-proxy)

- ClusterIP (default, internal)

- NodePort (L4: 30000~32767)

- LoadBalancer (external exposure: GCP.. / MetalLB)

- Ingress (L7: http, https)

- externalIPs

- NetworkPolicy

Controllers

- ReplicationController

- ReplicaSet

- Deployment

- HPA

Objects for volumes

- emptyDir

- hostPath

- external: NFS, iSCSI

- PVC / PV

Objects for configuration

- ConfigMap (env, file)

- Secret (keys, passwords)

To use HTTPS you need to set up TLS; for TLS, create a certificate (e.g. with OpenSSL) and store it in a Secret?
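A sketch of that flow, assuming a self-signed certificate for the hypothetical host www.example.com: create the key pair with OpenSSL, store it in a kubernetes.io/tls Secret, and reference that Secret from the Ingress. The shell steps are shown as comments; the Secret and Ingress names are placeholders, and main-svc is reused from the lab above.

```yaml
# Hypothetical shell steps:
#   openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
#     -keyout tls.key -out tls.crt -subj "/CN=www.example.com"
#   kubectl create secret tls example-tls --cert=tls.crt --key=tls.key
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: https-demo                # hypothetical
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - www.example.com
    secretName: example-tls       # the kubernetes.io/tls Secret created above
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: main-svc
            port:
              number: 5678
```

With the bare-metal install, HTTPS would then come in through the controller's 443 NodePort (30477 in the earlier output).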

Service-related objects are ClusterIP, NodePort, LoadBalancer ~ Ingress is a separate, independent object

What is a DaemonSet again?
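A DaemonSet runs one copy of a Pod on every eligible node, and nodes added later get a copy automatically; kube-proxy is deployed this way, which is why there were 3 kube-proxy Pods on 3 nodes. A minimal sketch with a hypothetical name and a busybox loop as the workload; the toleration lets it run on the tainted master as well.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-logger               # hypothetical
spec:
  selector:
    matchLabels:
      app: node-logger
  template:
    metadata:
      labels:
        app: node-logger
    spec:
      tolerations:                # without this, the master is skipped (NoSchedule taint)
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: logger
        image: busybox
        command: ["sh", "-c", "while true; do date; sleep 60; done"]
```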

- Try building MetalLB

docker, kubernetes, MetalLB

Bare-metal clusters = VM-based (here: VMs without a cloud provider)

Cilium Hubble... we were told to set that up too... (o_o) ..