KANS study led by gasida of CloudNet@

Environment Setup

kind-svc-2w.yaml

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
featureGates:
  "InPlacePodVerticalScaling": true  # allows changing the resource requests and limits of a running Pod
  "MultiCIDRServiceAllocator": true  # allows using multiple CIDR blocks for Services
nodes:
- role: control-plane
  labels:
    mynode: control-plane
    topology.kubernetes.io/zone: ap-northeast-2a
  extraPortMappings:  # maps container ports to host ports so services can be reached from outside the cluster
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
  - containerPort: 30004
    hostPort: 30004
  kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      extraArgs:  # extra arguments passed to the API server
        runtime-config: api/all=true  # enable all API versions
    controllerManager:
      extraArgs:
        bind-address: 0.0.0.0
    etcd:
      local:
        extraArgs:
          listen-metrics-urls: http://0.0.0.0:2381
    scheduler:
      extraArgs:
        bind-address: 0.0.0.0
  - |
    kind: KubeProxyConfiguration
    metricsBindAddress: 0.0.0.0
- role: worker
  labels:
    mynode: worker1
    topology.kubernetes.io/zone: ap-northeast-2a
- role: worker
  labels:
    mynode: worker2
    topology.kubernetes.io/zone: ap-northeast-2b
- role: worker
  labels:
    mynode: worker3
    topology.kubernetes.io/zone: ap-northeast-2c
networking:
  podSubnet: 10.10.0.0/16  # CIDR range for Pod IPs; Pods are assigned IPs from this range
  serviceSubnet: 10.200.1.0/24  # CIDR range for Service IPs; Services are assigned IPs from this range

- Install the k8s cluster

kind create cluster --config kind-svc-2w.yaml --name myk8s --image kindest/node:v1.31.0

- Install basic tools on the nodes

docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'

for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done

- Check the container (node) IPs on the kind network: worker, worker2, worker3, control-plane

~/kans docker ps -q | xargs docker inspect --format '{{.Name}} {{.NetworkSettings.Networks.kind.IPAddress}}'

/myk8s-worker 172.18.0.3

/myk8s-control-plane 172.18.0.5

/myk8s-worker3 172.18.0.2

/myk8s-worker2 172.18.0.4

- Check the Pod CIDR and Service ranges (the CNI is kindnet)

- Subnet

A smaller network created by dividing a larger network

- podSubnet / cluster-cidr: the Pod IP address range

- serviceSubnet / service-cluster-ip-range: the Service IP address range

In 192.168.1.0/24, 192.168.1.0 is the subnet's network address and 192.168.1.255 is its broadcast address.
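As a quick check of the subnet terms above, Python's standard `ipaddress` module can compute the network and broadcast addresses of 192.168.1.0/24. The second half is only an illustration: it splits the podSubnet from kind-svc-2w.yaml into /24 blocks, the same size as the per-node podCIDRs that show up later in this post. Variable names are my own.

```python
import ipaddress

# Example network from the text above.
net = ipaddress.ip_network("192.168.1.0/24")
print(net.network_address)    # first address of the subnet
print(net.broadcast_address)  # last address of the subnet
print(net.num_addresses)      # total addresses in a /24

# podSubnet from kind-svc-2w.yaml, split into /24 blocks.
pod_subnet = ipaddress.ip_network("10.10.0.0/16")
node_blocks = list(pod_subnet.subnets(new_prefix=24))
print(node_blocks[:4])
```

A /16 yields 256 such /24 blocks, so this layout leaves plenty of room beyond the four nodes in the lab.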

~/kans kubectl get cm -n kube-system kubeadm-config -oyaml | grep -i subnet

kubectl cluster-info dump | grep -m 2 -E "cluster-cidr|service-cluster-ip-range"

podSubnet: 10.10.0.0/16

serviceSubnet: 10.200.1.0/24

"--service-cluster-ip-range=10.200.1.0/24",

"--cluster-cidr=10.10.0.0/16",

- Check the dedicated subnet (podCIDR) assigned to each node

~/kans kubectl get nodes -o jsonpath="{.items[*].spec.podCIDR}"

10.10.0.0/24 10.10.3.0/24 10.10.2.0/24 10.10.1.0/24

- Check the kube-proxy ConfigMap

~/kans kubectl describe cm -n kube-system kube-proxy

...

mode: iptables

iptables:

localhostNodePorts: null

masqueradeAll: false

masqueradeBit: null

minSyncPeriod: 1s

syncPeriod: 0s

...

- Check per-node network information (the CNI is kindnet)

ip -c route: prints the routing table

ip -c addr: prints IP address information for each network interface (interface, IP, state, MAC address, broadcast address)

The -c option colorizes the output.

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i cat /etc/cni/net.d/10-kindnet.conflist; echo; done

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c route; echo; done

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c addr; echo; done

- arp scan

- ARP (Address Resolution Protocol)

A protocol that maps an IP address to a MAC address

Hosts address each other by IP, but the actual frames are delivered using MAC addresses

ARP maps each IP address to a MAC address so that the destination can be found correctly on the LAN (L2)

docker exec -it myk8s-control-plane arp-scan --interface=eth0 --localnet

- Start the mypc container: attach it to the kind Docker bridge, with or without an explicit container IP

docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity

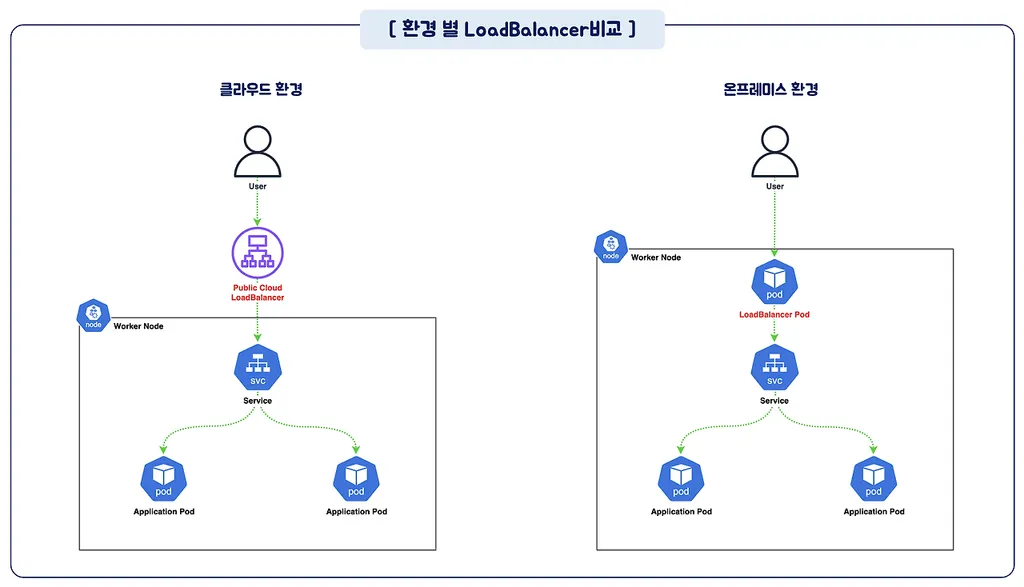

LoadBalancer

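A Kubernetes Service of type LoadBalancer exposes the service through an external load balancer so that it can be reached from outside the cluster.

- Kubernetes does not provide a load balancer implementation itself; you must supply one or integrate with a cloud provider.

- To implement a LoadBalancer service, Kubernetes typically starts out the same way as NodePort. The cloud controller manager then configures the external load balancer to forward traffic to that NodePort.

- If the cloud provider supports it, you can also configure a load-balanced service that skips NodePort allocation.

spec.allocateLoadBalancerNodePorts: false

- link

Source - https://kimalarm.tistory.com/102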

- AWS LoadBalancer

- CLB (Classic Load Balancer)

The oldest load balancer, with the fewest features compared with NLB / ALB

- NLB (Network Load Balancer)

The fastest of the three compared with CLB / ALB; operates at L4

Handles TCP / UDP / TLS traffic

- ALB (Application Load Balancer)

Specializes in HTTP / HTTPS / gRPC traffic; operates at L7

Used when an Ingress resource is created

- Ingress

An API object that routes HTTP(S) traffic from outside the cluster to services inside it; it can route traffic to multiple services using more complex rules

MetalLB

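A bare-metal load balancer: a program that implements LoadBalancer services in on-premises environments using standard protocols

- Speaker Pods are created as a DaemonSet to announce External IPs

- Speaker Pods announce the External IP using the standard protocols ARP (Layer 2 mode) or BGP

- BGP (Border Gateway Protocol)

A protocol used on the global internet to exchange routing information between different networks (AS, Autonomous Systems)

A router establishes peering with other routers and shares routing information, so that packets can be forwarded between networks along the most efficient path.

⇒ In effect, routers spread the word to their neighbors about which networks are reachable through which AS

- Layer 2 mode does not work on most public cloud platforms

⇒ Public clouds block ARP requests for IPs that do not match the MAC address bound to a virtual server's IP. Because the External IP is not an IP assigned by the cloud provider, the ARP requests are blocked and the mode cannot work.

- Some CNIs have compatibility issues

e.g. a conflict when Calico runs in IPIP mode with BGP while MetalLB also runs in BGP mode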

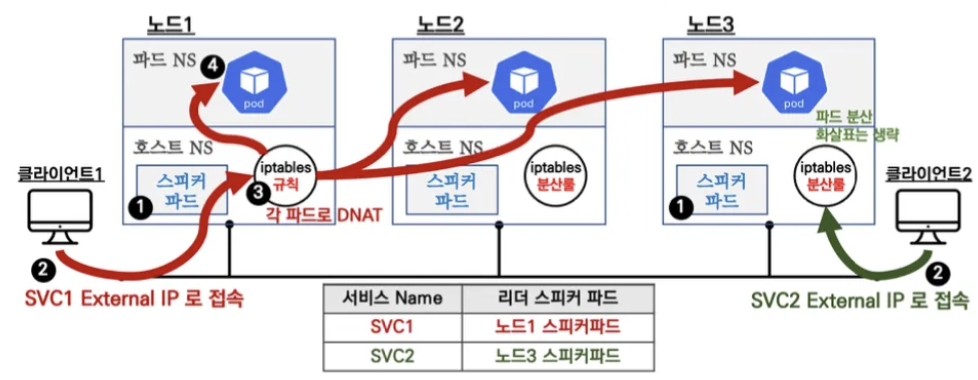

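Layer2 Mode

Announces the External IP via ARP

Hosts on the same network need each other's MAC address in order to communicate

ARP requests the MAC address for the peer's IP address and learns it from the reply

- When a LoadBalancer service is created, a leader is elected among the MetalLB Speaker Pods

The leader announces the External IP of that LoadBalancer service via ARP

- Connection attempt to the SVC → node of the Speaker Pod leading that SVC → iptables rules → random load balancing across the endpoint Pods, the same as ClusterIP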
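The key property of the election above is that every speaker must agree on one leader per External IP without a central coordinator. One way to get that is a deterministic hash over (node name, service IP). The sketch below is not MetalLB's actual code; the hashing scheme and the function name `elect_leader` are assumptions chosen for illustration, so the nodes it picks will not necessarily match the lab output later in this post.

```python
import hashlib

def elect_leader(service_ip: str, nodes: list) -> str:
    """Pick one node per service IP by ranking nodes on a hash of (node, IP)."""
    def score(node: str) -> str:
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()
    # Every speaker computes the same ranking, so they all agree on the winner.
    return min(nodes, key=score)

nodes = ["myk8s-control-plane", "myk8s-worker", "myk8s-worker2", "myk8s-worker3"]
for vip in ["172.18.255.200", "172.18.255.201", "172.18.255.202"]:
    print(vip, "->", elect_leader(vip, nodes))
```

Because the service IP is part of the hash input, different services can land on different nodes, which spreads the announcing role across the cluster.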

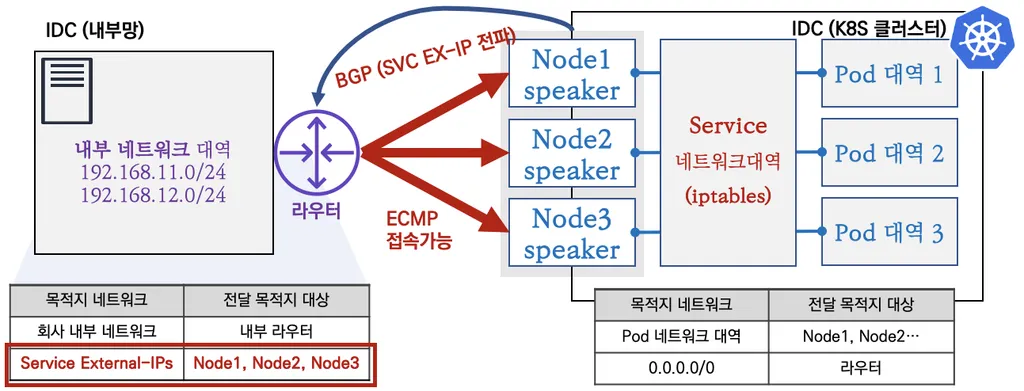

BGP Mode

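- Speaker Pods announce the External IP via BGP, and external clients reach the service through a router that load-balances with ECMP routing

- ECMP (Equal-Cost Multi-Path)

A routing technique that spreads traffic across multiple paths when those paths have the same cost. It operates on the 5-tuple (protocol, source IP, destination IP, source port, destination port).

- Traffic enters the service through the router, so the router configuration matters!

⇒ Working closely with the network team is strongly recommended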
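The 5-tuple behavior of ECMP can be sketched as a hash that maps each flow to one of the equal-cost next hops. This is a minimal illustration only: real routers use vendor-specific hash functions, and the next-hop list and source ports below are hypothetical sample values.

```python
import hashlib

def ecmp_next_hop(flow: tuple, next_hops: list) -> str:
    """Map a 5-tuple to one of several equal-cost next hops."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]

# Hypothetical next hops (nodes announcing the same External IP).
next_hops = ["172.18.0.2", "172.18.0.3", "172.18.0.4"]

# (protocol, source IP, destination IP, source port, destination port)
flow_a = ("tcp", "172.18.0.100", "172.18.255.200", 40001, 80)
flow_b = ("tcp", "172.18.0.100", "172.18.255.200", 40002, 80)

print(ecmp_next_hop(flow_a, next_hops))
print(ecmp_next_hop(flow_b, next_hops))
```

Packets of the same flow always hash to the same next hop, so a TCP connection stays on one node, while different flows can spread across nodes.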

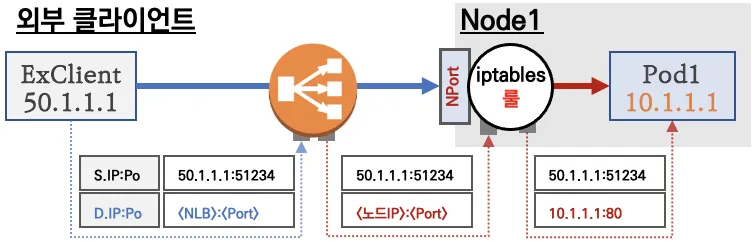

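LoadBalancer Traffic Flow

- Load balancer → NodePort; from there the basic flow is the same as NodePort

- DNAT happens twice

- Once after the client reaches the load balancer

- Once in the node's iptables rules, which forward to the Pod IP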
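The two DNAT steps can be modeled as two rewrites of a packet's destination. This is a simplified sketch of the generic external load balancer flow, not of any specific implementation; the VIP, node IP, and NodePort are sample values from the lab later in this post, and the Pod IP is hypothetical.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Packet:
    src: str
    dst_ip: str
    dst_port: int

def lb_dnat(pkt: Packet, node_ip: str, node_port: int) -> Packet:
    """First DNAT: the load balancer forwards to a node's NodePort."""
    return replace(pkt, dst_ip=node_ip, dst_port=node_port)

def node_dnat(pkt: Packet, pod_ip: str, target_port: int) -> Packet:
    """Second DNAT: the node's iptables rules forward to a Pod."""
    return replace(pkt, dst_ip=pod_ip, dst_port=target_port)

pkt = Packet(src="172.18.0.100", dst_ip="172.18.255.200", dst_port=80)
pkt = lb_dnat(pkt, node_ip="172.18.0.3", node_port=32754)
pkt = node_dnat(pkt, pod_ip="10.10.1.2", target_port=80)  # hypothetical Pod IP
print(pkt)
```

Only the destination changes in this model; source rewriting (SNAT) is a separate step that the sketch deliberately leaves out.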

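Shortcomings of LoadBalancer

- A separate LB is created for every Service (LoadBalancer), which is inefficient

- Limited HTTP / HTTPS handling

- On-premises environments need an additional component (MetalLB, OpenELB)

⇒ The first two can be addressed with Ingress!

If so, it seems Ingress alone would be enough, so why does LoadBalancer exist?

⇒ I think it is because it behaves like an L4 switch and is well suited to TCP/UDP traffic, which makes it a good fit for simple traffic forwarding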

Hands-on

Create webpod1 and webpod2

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: webpod1
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod2
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker2
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
EOF

WPOD1=$(kubectl get pod webpod1 -o jsonpath="{.status.podIP}")

WPOD2=$(kubectl get pod webpod2 -o jsonpath="{.status.podIP}")

Install MetalLB

Install from the manifests

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/refs/heads/main/config/manifests/metallb-native-prometheus.yaml

> kubectl get ds,deploy -n metallb-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/speaker 4 4 4 4 4 kubernetes.io/os=linux 101s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/controller 1/1 1 1 101s

⇒ The speaker DaemonSet runs four Pods, and the controller Deployment runs one

- Create an IPAddressPool: the IP range to use for LoadBalancer External IPs

MetalLB manages the external IP addresses for services and can assign one dynamically when a service is created.
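To make the dynamic assignment concrete, here is a small sketch that expands the address range used in the IPAddressPool manifest in this section and hands out one address per service. The "lowest free address first" policy and the helper name `expand_range` are assumptions for illustration; they are not taken from MetalLB itself.

```python
import ipaddress

def expand_range(spec: str) -> list:
    """Expand a 'first-last' range string into individual addresses."""
    first, last = (ipaddress.IPv4Address(p.strip()) for p in spec.split("-"))
    return [ipaddress.IPv4Address(i) for i in range(int(first), int(last) + 1)]

pool = expand_range("172.18.255.200-172.18.255.250")
print(len(pool))  # number of addresses available to LoadBalancer services

# Illustrative policy: give each new service the lowest free address.
assigned = {}
for svc in ["svc1", "svc2", "svc3"]:
    assigned[svc] = next(ip for ip in pool if ip not in assigned.values())
print(assigned)
```

With this range the pool holds 51 addresses, so at most 51 LoadBalancer services can receive an External IP from it at the same time.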

kubectl explain ipaddresspools.metallb.io

cat <<EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: my-ippool
  namespace: metallb-system
spec:
  addresses:
  - 172.18.255.200-172.18.255.250
EOF

> kubectl get ipaddresspools -n metallb-system
NAME        AUTO ASSIGN   AVOID BUGGY IPS   ADDRESSES
my-ippool   true          false             ["172.18.255.200-172.18.255.250"]

- Create an L2Advertisement: allow the configured IP pool to be used for LoadBalancer IPs in Layer 2 mode

Defines how the IP addresses of services in the Kubernetes cluster are advertised to the external network

kubectl explain l2advertisements.metallb.io

cat <<EOF | kubectl apply -f -
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: my-l2-advertise
  namespace: metallb-system
spec:
  ipAddressPools:
  - my-ippool
EOF

> kubectl get l2advertisements -n metallb-system
NAME              IPADDRESSPOOLS   IPADDRESSPOOL SELECTORS   INTERFACES
my-l2-advertise   ["my-ippool"]

- Create the Services (type LoadBalancer)

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Service
metadata:
  name: svc1
spec:
  ports:
    - name: svc1-webport
      port: 80
      targetPort: 80
  selector:
    app: webpod
  type: LoadBalancer  # the Service type is LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: svc2
spec:
  ports:
    - name: svc2-webport
      port: 80
      targetPort: 80
  selector:
    app: webpod
  type: LoadBalancer
---
apiVersion: v1
kind: Service
metadata:
  name: svc3
spec:
  ports:
    - name: svc3-webport
      port: 80
      targetPort: 80
  selector:
    app: webpod
  type: LoadBalancer
EOF

- arp scan

docker exec -it myk8s-control-plane arp-scan --interface=eth0 --localnet

> docker exec -it myk8s-control-plane arp-scan --interface=eth0 --localnet
172.18.0.1      02:42:b4:f2:34:bd  (Unknown: locally administered)
172.18.0.2      02:42:ac:12:00:02  (Unknown: locally administered)
172.18.0.3      02:42:ac:12:00:03  (Unknown: locally administered)
172.18.0.4      02:42:ac:12:00:04  (Unknown: locally administered)
172.18.0.100    02:42:ac:12:00:64  (Unknown: locally administered)
172.18.255.200  02:42:ac:12:00:03  (Unknown: locally administered)
172.18.255.202  02:42:ac:12:00:02  (Unknown: locally administered)
172.18.255.201  02:42:ac:12:00:03  (Unknown: locally administered)

kubectl describe svc svc1

Check which node's speaker is acting as the leader

> kubectl describe svc svc1
...
Events:
  Type    Reason        Age    From                Message
  ----    ------        ----   ----                -------
  Normal  IPAllocated   2m12s  metallb-controller  Assigned IP ["172.18.255.200"]
  Normal  nodeAssigned  2m12s  metallb-speaker     announcing from node "myk8s-worker" with protocol "layer2"

kubectl get svc svc1 -o json | jq

> kubectl get svc svc1 -o json | jq
  "spec": {
    "allocateLoadBalancerNodePorts": true,  # whether traffic goes through a NodePort
    "clusterIP": "10.200.1.151",
    "clusterIPs": [
      "10.200.1.151"
    ],
    "externalTrafficPolicy": "Cluster",
    "internalTrafficPolicy": "Cluster",
    "ipFamilies": [
      "IPv4"
    ],
    "ipFamilyPolicy": "SingleStack",
    "ports": [
      {
        "name": "svc1-webport",
        "nodePort": 32754,
        "port": 80,
        "protocol": "TCP",
        "targetPort": 80
      }
    ],
    "selector": {
      "app": "webpod"
    },
  "status": {
    "loadBalancer": {
      "ingress": [
        {
          "ip": "172.18.255.200",  # IP used for external access
          "ipMode": "VIP"          # means Virtual IP
        }
      ]
    }
  }
}

- Store the current SVC EXTERNAL-IPs in variables

SVC1EXIP=$(kubectl get svc svc1 -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

SVC2EXIP=$(kubectl get svc svc2 -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

SVC3EXIP=$(kubectl get svc svc3 -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

- arping: check whether the External IPs respond to ARP

for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do docker exec -it mypc arping -I eth0 -f -c 1 $i; done

> for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do docker exec -it mypc arping -I eth0 -f -c 1 $i; done
ARPING 172.18.255.200 from 172.18.0.100 eth0
Unicast reply from 172.18.255.200 [02:42:AC:12:00:03]  0.810ms
Sent 1 probes (1 broadcast(s))
Received 1 response(s)
ARPING 172.18.255.201 from 172.18.0.100 eth0
Unicast reply from 172.18.255.201 [02:42:AC:12:00:03]  0.641ms
Sent 1 probes (1 broadcast(s))
Received 1 response(s)
ARPING 172.18.255.202 from 172.18.0.100 eth0
Unicast reply from 172.18.255.202 [02:42:AC:12:00:02]  0.730ms
Sent 1 probes (1 broadcast(s))
Received 1 response(s)

for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do docker exec -it mypc ping -c 1 -w 1 -W 1 $i; done

⇒ Kubernetes Services do not handle ICMP (they support TCP, UDP, and SCTP), so every ping below is lost

> for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do docker exec -it mypc ping -c 1 -w 1 -W 1 $i; done
PING 172.18.255.200 (172.18.255.200) 56(84) bytes of data.
--- 172.18.255.200 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
PING 172.18.255.201 (172.18.255.201) 56(84) bytes of data.
--- 172.18.255.201 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms
PING 172.18.255.202 (172.18.255.202) 56(84) bytes of data.
--- 172.18.255.202 ping statistics ---
1 packets transmitted, 0 received, 100% packet loss, time 0ms

for i in 172.18.0.2 172.18.0.3 172.18.0.4 172.18.0.5; do docker exec -it mypc ping -c 1 -w 1 -W 1 $i; done

docker exec -it mypc ip -c neigh | sort

The ARP table on mypc has been updated

172.18.255.200 maps to 02:42:ac:12:00:03 ⇒ 172.18.0.3 (myk8s-worker)

> docker exec -it mypc ip -c neigh | sort
172.18.0.2 dev eth0 lladdr 02:42:ac:12:00:02 STALE
172.18.0.3 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.0.4 dev eth0 lladdr 02:42:ac:12:00:04 STALE
172.18.0.5 dev eth0 lladdr 02:42:ac:12:00:05 STALE
172.18.255.200 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.255.201 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.255.202 dev eth0 lladdr 02:42:ac:12:00:02 STALE
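Matching External IPs to nodes by eye gets tedious with more services. As a small sketch, the neighbor table output above can be grouped by MAC address to show which node answers for which External IP. The parsing assumes the `ip neigh` line layout exactly as captured above; the variable names are my own.

```python
from collections import defaultdict

# Neighbor table captured from mypc (copied from the output above).
neigh_output = """\
172.18.0.2 dev eth0 lladdr 02:42:ac:12:00:02 STALE
172.18.0.3 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.0.4 dev eth0 lladdr 02:42:ac:12:00:04 STALE
172.18.0.5 dev eth0 lladdr 02:42:ac:12:00:05 STALE
172.18.255.200 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.255.201 dev eth0 lladdr 02:42:ac:12:00:03 STALE
172.18.255.202 dev eth0 lladdr 02:42:ac:12:00:02 STALE
"""

# Group every IP under the MAC address that answered for it.
ips_by_mac = defaultdict(list)
for line in neigh_output.splitlines():
    fields = line.split()
    ip, mac = fields[0], fields[fields.index("lladdr") + 1]
    ips_by_mac[mac].append(ip)

for mac, ips in ips_by_mac.items():
    node_ip = next(ip for ip in ips if ip.startswith("172.18.0."))
    vips = [ip for ip in ips if ip.startswith("172.18.255.")]
    print(f"{node_ip} ({mac}) answers for {vips}")
```

In this capture, 172.18.0.3 answers for two External IPs and 172.18.0.2 for one, which matches the arping replies earlier.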

Access test

for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do echo ">> Access Service External-IP : $i <<" ;docker exec -it mypc curl -s $i | egrep 'Hostname|RemoteAddr|Host:' ; echo ; done

⇒ RemoteAddr shows a node IP (for example 172.18.0.3) rather than the mypc address 172.18.0.100, which tells us that SNAT is applied.

> for i in $SVC1EXIP $SVC2EXIP $SVC3EXIP; do echo ">> Access Service External-IP : $i <<" ;docker exec -it mypc curl -s $i | egrep 'Hostname|RemoteAddr|Host:' ; echo ; done
>> Access Service External-IP : 172.18.255.200 <<
Hostname: webpod2
RemoteAddr: 172.18.0.3:44335
Host: 172.18.255.200

>> Access Service External-IP : 172.18.255.201 <<
Hostname: webpod2
RemoteAddr: 172.18.0.3:34334
Host: 172.18.255.201

>> Access Service External-IP : 172.18.255.202 <<
Hostname: webpod1
RemoteAddr: 172.18.0.2:33866
Host: 172.18.255.202

Load-balancing test

> docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $SVC1EXIP | grep Hostname; done | sort | uniq -c | sort -nr"
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $SVC2EXIP | grep Hostname; done | sort | uniq -c | sort -nr"
docker exec -it mypc zsh -c "for i in {1..100}; do curl -s $SVC3EXIP | grep Hostname; done | sort | uniq -c | sort -nr"
     56 Hostname: webpod1
     44 Hostname: webpod2
     53 Hostname: webpod2
     47 Hostname: webpod1
     54 Hostname: webpod1
     46 Hostname: webpod2
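The roughly even but never exact splits above (56/44, 53/47, 54/46) are what random selection looks like over 100 requests. As a sketch, and assuming the commonly described iptables-mode scheme in which endpoint rules are chained and rule i of n matches with probability 1/(n-i), the expected share per endpoint works out to be uniform. The function name is my own.

```python
from fractions import Fraction

def endpoint_shares(n: int) -> list:
    """Overall share of traffic each of n chained random-match rules receives."""
    shares = []
    remaining = Fraction(1)          # traffic not yet matched by an earlier rule
    for i in range(n):
        p_rule = Fraction(1, n - i)  # rule i matches with probability 1/(n-i)
        shares.append(remaining * p_rule)
        remaining *= 1 - p_rule      # the rest falls through to the next rule
    return shares

print(endpoint_shares(2))  # two endpoints, as in this lab
print(endpoint_shares(3))
```

Each endpoint has the same expected share, but individual requests are independent random draws, so a 100-request sample drifts a few percent either way.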

IPVS Proxy Mode

- Environment

cat <<EOT> kind-svc-2w-ipvs.yaml
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
featureGates:
  "InPlacePodVerticalScaling": true
  "MultiCIDRServiceAllocator": true
nodes:
- role: control-plane
  labels:
    mynode: control-plane
    topology.kubernetes.io/zone: ap-northeast-2a
  extraPortMappings:
  - containerPort: 30000
    hostPort: 30000
  - containerPort: 30001
    hostPort: 30001
  - containerPort: 30002
    hostPort: 30002
  - containerPort: 30003
    hostPort: 30003
  - containerPort: 30004
    hostPort: 30004
  kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      extraArgs:
        runtime-config: api/all=true
    controllerManager:
      extraArgs:
        bind-address: 0.0.0.0
    etcd:
      local:
        extraArgs:
          listen-metrics-urls: http://0.0.0.0:2381
    scheduler:
      extraArgs:
        bind-address: 0.0.0.0
  - |
    kind: KubeProxyConfiguration
    metricsBindAddress: 0.0.0.0
    ipvs:
      strictARP: true
- role: worker
  labels:
    mynode: worker1
    topology.kubernetes.io/zone: ap-northeast-2a
- role: worker
  labels:
    mynode: worker2
    topology.kubernetes.io/zone: ap-northeast-2b
- role: worker
  labels:
    mynode: worker3
    topology.kubernetes.io/zone: ap-northeast-2c
networking:
  podSubnet: 10.10.0.0/16
  serviceSubnet: 10.200.1.0/24
  kubeProxyMode: "ipvs"
EOT

- Install the cluster and basic tools

kind create cluster --config kind-svc-2w-ipvs.yaml --name myk8s --image kindest/node:v1.31.0

docker exec -it myk8s-control-plane sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping git vim arp-scan -y'

for i in worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i sh -c 'apt update && apt install tree psmisc lsof wget bsdmainutils bridge-utils net-tools dnsutils ipset ipvsadm nfacct tcpdump ngrep iputils-ping arping -y'; echo; done

kubectl describe cm -n kube-system kube-proxy

...

mode: ipvs

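ipvs: # look into what each option below means
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: true # must be set to true for MetalLB to work
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s

...

- excludeCIDRs: CIDR ranges that the IPVS proxier leaves untouched when it cleans up IPVS rules

- minSyncPeriod: the minimum interval between IPVS rule refreshes on the node

- scheduler: the traffic distribution method (scheduling algorithm)

- strictARP: when enabled, the node answers ARP only for IP addresses assigned to the receiving interface, so it does not reply for the VIPs bound to kube-ipvs0

⇒ Prevents ARP replies from going out on the wrong interface during load balancing

- syncPeriod: how often IPVS rules are refreshed on the node

- tcpFinTimeout: how long an IPVS TCP session is kept after a FIN is received

- tcpTimeout: the timeout for idle IPVS TCP sessions

- udpTimeout: the timeout for IPVS UDP packets

- Check per-node network information: look at the kube-ipvs0 network interface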

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c route; echo; done

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -c addr; echo; done

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -br -c addr show kube-ipvs0; echo; done

for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ip -d -c addr show kube-ipvs0; echo; done

- Check the IPs assigned to kube-ipvs0 (primary IP plus secondary IPs)

kubectl get svc,ep -A

- ipvsadm

- Used to manage the virtual server table

- Supports TCP/UDP, supports NAT, tunneling, and direct routing, and supports eight load-balancing algorithms in total, including RR, WRR, LC, and WLC

- Use the ipvsadm tool to inspect the load-balancing state: the Service IPs and the Pod IPs behind each Service

IPVS handles the Service IPs (VIPs), which significantly reduces the number of iptables chains and rules

> for i in control-plane worker worker2 worker3; do echo ">> node myk8s-$i <<"; docker exec -it myk8s-$i ipvsadm -Ln ; echo; done
>> node myk8s-control-plane <<
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.200.1.1:443 rr
  -> 172.18.0.5:6443              Masq    1      3          0
TCP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0
TCP  10.200.1.10:9153 rr
  -> 10.10.0.2:9153               Masq    1      0          0
  -> 10.10.0.4:9153               Masq    1      0          0
UDP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0

>> node myk8s-worker <<
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.200.1.1:443 rr
  -> 172.18.0.5:6443              Masq    1      0          0
TCP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0
TCP  10.200.1.10:9153 rr
  -> 10.10.0.2:9153               Masq    1      0          0
  -> 10.10.0.4:9153               Masq    1      0          0
UDP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0

>> node myk8s-worker2 <<
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.200.1.1:443 rr
  -> 172.18.0.5:6443              Masq    1      0          0
TCP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0
TCP  10.200.1.10:9153 rr
  -> 10.10.0.2:9153               Masq    1      0          0
  -> 10.10.0.4:9153               Masq    1      0          0
UDP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0

>> node myk8s-worker3 <<
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.200.1.1:443 rr
  -> 172.18.0.5:6443              Masq    1      0          0
TCP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0
TCP  10.200.1.10:9153 rr
  -> 10.10.0.2:9153               Masq    1      0          0
  -> 10.10.0.4:9153               Masq    1      0          0
UDP  10.200.1.10:53 rr
  -> 10.10.0.2:53                 Masq    1      0          0
  -> 10.10.0.4:53                 Masq    1      0          0

- Check IPSET

ipset manages IP addresses, network CIDRs, ports, and similar elements as a single set, which makes network filtering rules more efficient.

iptables rules can reference an IP set to filter or restrict traffic for the IPs in that set.

docker exec -it myk8s-worker ipset -h

docker exec -it myk8s-worker ipset -L

- Start the mypc container: attach it to the kind Docker bridge, with an explicit container IP or without one

docker run -d --rm --name mypc --network kind --ip 172.18.0.100 nicolaka/netshoot sleep infinity

- Create the pods

cat <<EOT> 3pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: webpod1
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod2
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker2
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
---
apiVersion: v1
kind: Pod
metadata:
  name: webpod3
  labels:
    app: webpod
spec:
  nodeName: myk8s-worker3
  containers:
  - name: container
    image: traefik/whoami
  terminationGracePeriodSeconds: 0
EOT

CIP=$(kubectl get svc svc-clusterip -o jsonpath="{.spec.clusterIP}")

CPORT=$(kubectl get svc svc-clusterip -o jsonpath="{.spec.ports[0].port}")

SVC1=$(kubectl get svc svc-clusterip -o jsonpath="{.spec.clusterIP}")

- Run ipvsadm monitoring on the control-plane node: when the ClusterIP is accessed, connection information appears as shown below

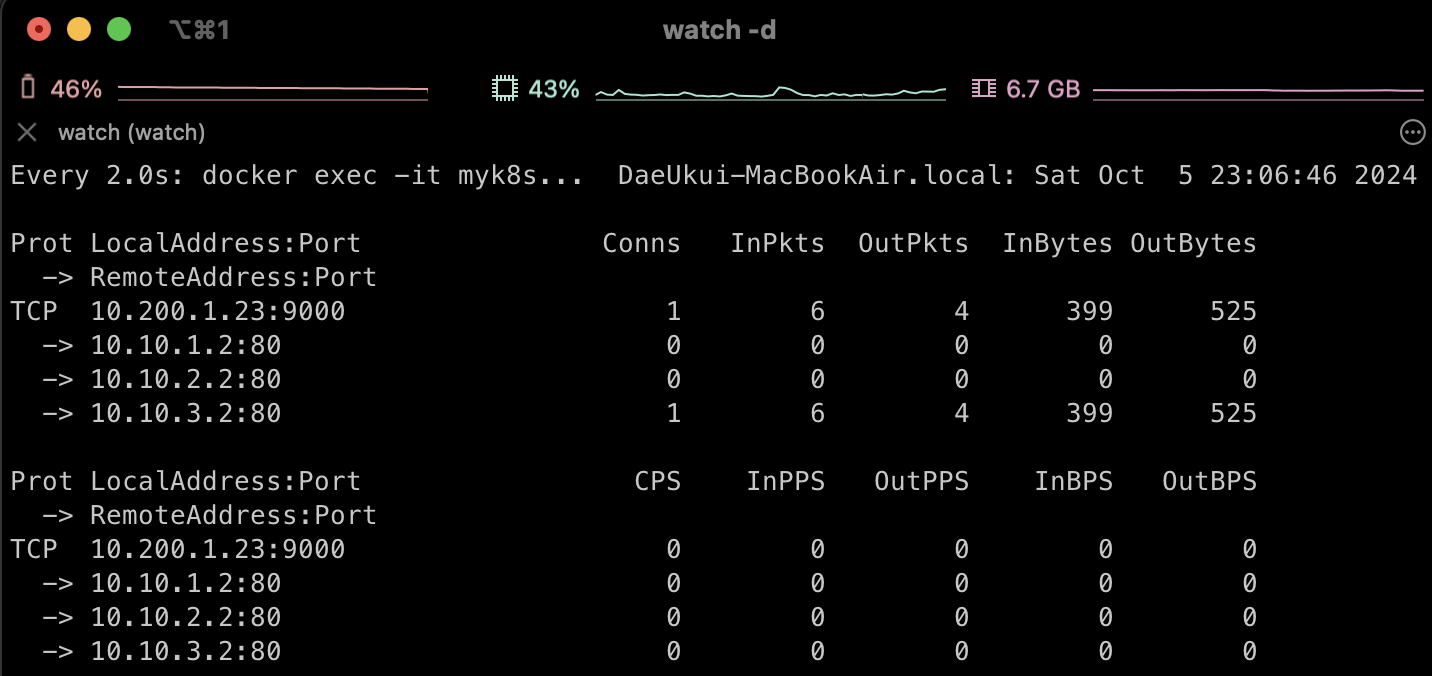

watch -d "docker exec -it myk8s-control-plane ipvsadm -Ln -t $CIP:$CPORT --stats; echo; docker exec -it myk8s-control-plane ipvsadm -Ln -t $CIP:$CPORT --rate"

kubectl exec -it net-pod -- zsh -c "for i in {1..1000}; do curl -s $SVC1:9000 | grep Hostname; done | sort | uniq -c | sort -nr"

334 Hostname: webpod1

333 Hostname: webpod3

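333 Hostname: webpod2

⇒ The distribution is more even than with the earlier iptables load balancing

⇒ This is most likely because of the round-robin (rr) scheduler shown in the ipvsadm output

⇒ The algorithm can be changed, for example when servers differ in performance, which makes IPVS flexible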
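The 334/333/333 split is exactly what strict round robin produces for 1000 requests over three backends. The sketch below reproduces it with a plain cycle, then shows a naive weighted variant to illustrate how a different algorithm changes the split. The weights are hypothetical, and this is not how the kernel implements its schedulers.

```python
from collections import Counter
from itertools import cycle, islice

# Plain round robin: visit the backends in a fixed order, 1000 times.
backends = ["webpod1", "webpod2", "webpod3"]
rr_hits = Counter(islice(cycle(backends), 1000))
print(rr_hits)

# Naive weighted round robin: webpod1 appears twice per cycle (hypothetical weight 2).
weighted_cycle = ["webpod1", "webpod1", "webpod2", "webpod3"]
wrr_hits = Counter(islice(cycle(weighted_cycle), 1000))
print(wrr_hits)
```

Round robin is deterministic, so the counts differ by at most one, whereas the random selection used in iptables mode only evens out on average.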

IPVS Proxy vs iptables

- Traffic handling: iptables ends up with a large number of rules!

- IPVS runs inside the Linux kernel

- IPVS offers a wider choice of load-balancing algorithms
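A commonly cited reason the rule count matters is that iptables rules are evaluated in order, while IPVS looks services up in a hash table. The sketch below models only that difference in lookup cost. The 10,000-service table is hypothetical (it would not fit in this lab's /24 serviceSubnet), and the functions are illustrations, not kernel code.

```python
# Hypothetical table of 10,000 services, keyed by (VIP, port).
services = {
    (f"10.200.{i // 250}.{i % 250 + 1}", 80): f"svc-{i}" for i in range(10_000)
}
rules = list(services.items())  # ordered rule list, iptables-style

def linear_lookup(key):
    """Walk the rules in order; return the match and how many rules were checked."""
    for checked, (rule_key, svc) in enumerate(rules, start=1):
        if rule_key == key:
            return svc, checked
    return None, len(rules)

def hash_lookup(key):
    """Single hash-table lookup, IPVS-style."""
    return services.get(key)

last_key = rules[-1][0]
print(linear_lookup(last_key))  # worst case: every rule is checked
print(hash_lookup(last_key))    # cost does not grow with the table size
```

The gap grows with the number of services, so the difference is more noticeable on large clusters than in a lab of this size.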