Background

Before writing UDP streaming code for the Camera Module v2.1 on a Raspberry Pi 5, I want to verify that TCP streaming works with GStreamer.

Basic GStreamer syntax

- gst-launch-1.0 ELEMENT [PROPERTY=VALUE ...] ! ELEMENT [PROPERTY=VALUE ...] ! ...
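As a minimal sketch of this syntax, the snippet below joins element descriptions with ` ! ` and prints the resulting command. The `join_pipeline` helper is hypothetical (it is not part of GStreamer), and `videotestsrc`, `videoconvert`, and `fakesink` are stand-in elements chosen here so the pipeline does not need a camera.

```shell
#!/bin/sh
# join_pipeline is a hypothetical helper: it joins its arguments with " ! ",
# the link operator gst-launch-1.0 expects between elements.
join_pipeline() {
    out=""
    for element in "$@"; do
        if [ -z "$out" ]; then
            out="$element"
        else
            out="$out ! $element"
        fi
    done
    printf '%s\n' "$out"
}

# Each argument is one element, optionally followed by its settings.
PIPELINE=$(join_pipeline "videotestsrc num-buffers=60" "videoconvert" "fakesink")

# Print the command instead of running it, so it can be inspected first.
printf 'gst-launch-1.0 %s\n' "$PIPELINE"
```

Copying the printed line into a terminal runs the pipeline.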

Checking caps

Check the caps supported by imx219@10.

ssw@raspberrypi:~ $ gst-device-monitor-1.0 Video/Source

Device found:

name : /base/axi/pcie@120000/rp1/i2c@80000/imx219@10

class : Source/Video

caps : video/x-raw, format=GRAY8, width=160, height=120

video/x-raw, format=GRAY8, width=240, height=160

video/x-raw, format=GRAY8, width=320, height=240

...

video/x-raw, format=UYVY, width=2560, height=2048

video/x-raw, format=UYVY, width=3200, height=1800

video/x-raw, format=UYVY, width=3200, height=2048

video/x-raw, format=UYVY, width=3200, height=2400

video/x-raw, format=UYVY, width=[ 32, 3280, 2 ], height=[ 32, 2464, 2 ]

gst-launch-1.0 libcamerasrc camera-name="/base/axi/pcie\@120000/rp1/i2c\@80000/imx219\@10" ! ...

ssw@raspberrypi:~ $
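Note that the output above shows the camera path with a plain `@` on the name line but with `\@` in the suggested launch line. The sketch below derives the escaped form from the name line with `sed`; the variable names are illustrative, and the sample line is copied from the output above rather than read from a live device.

```shell
#!/bin/sh
# Sample "name" line as printed by gst-device-monitor-1.0 (copied from above).
MONITOR_LINE='    name  : /base/axi/pcie@120000/rp1/i2c@80000/imx219@10'

# 1) Strip the "name :" label, 2) escape every "@" as "\@" to match the
#    form used in the suggested launch line.
CAMERA_NAME=$(printf '%s\n' "$MONITOR_LINE" \
    | sed -n 's/^[[:space:]]*name[[:space:]]*:[[:space:]]*//p' \
    | sed 's/@/\\@/g')

# Print the start of the launch line; the rest of the pipeline is left open.
printf 'gst-launch-1.0 libcamerasrc camera-name="%s" ! ...\n' "$CAMERA_NAME"
```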

Verifying the camera module works

Use libcamera-hello to confirm that the camera module is not defective.

ssw@raspberrypi:~ $ libcamera-hello --list-cameras

Available cameras

-----------------

0 : imx219 [3280x2464 10-bit RGGB] (/base/axi/pcie@120000/rp1/i2c@80000/imx219@10)

Modes: 'SRGGB10_CSI2P' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]

1640x1232 [41.85 fps - (0, 0)/3280x2464 crop]

1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]

3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]

'SRGGB8' : 640x480 [206.65 fps - (1000, 752)/1280x960 crop]

1640x1232 [83.70 fps - (0, 0)/3280x2464 crop]

1920x1080 [47.57 fps - (680, 692)/1920x1080 crop]

3280x2464 [21.19 fps - (0, 0)/3280x2464 crop]

ssw@raspberrypi:~ $

ssw@raspberrypi:~ $ rpicam-hello

Checking the syntax

gst-launch-1.0 libcamerasrc camera-name="/base/axi/pcie\@120000/rp1/i2c\@80000/imx219\@10" !

Next, check which elements / pads / caps can follow this.

### Confirm that libcamerasrc is among the available elements

ssw@raspberrypi:~ $ gst-inspect-1.0

...

libcamera: libcamerasrc: libcamera Source

### Check the pads that libcamerasrc supports

ssw@raspberrypi:~ $ gst-inspect-1.0 libcamerasrc

To apply one of the caps that gst-device-monitor-1.0 Video/Source listed for imx219@10 at the top, use the 'capsfilter' element.

ssw@raspberrypi:~ $ gst-launch-1.0 libcamerasrc ! capsfilter caps=video/x-raw,width=1920,height=1080,format=RGBx
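gst-launch-1.0 also accepts a bare caps string between two `!` links and inserts the capsfilter itself, so the two forms below should describe the same pipeline. This is a sketch only: the variable names are illustrative, and `fakesink` is a stand-in sink added here so each line is a complete pipeline.

```shell
#!/bin/sh
WIDTH=1920
HEIGHT=1080
FORMAT=RGBx

# Caps string built from the values used in the command above.
CAPS="video/x-raw,width=$WIDTH,height=$HEIGHT,format=$FORMAT"

# Explicit capsfilter element versus the caps shorthand.
EXPLICIT="libcamerasrc ! capsfilter caps=$CAPS ! fakesink"
SHORTHAND="libcamerasrc ! $CAPS ! fakesink"

printf 'gst-launch-1.0 %s\n' "$EXPLICIT" "$SHORTHAND"
```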

To view the test output on the monitor, I asked ChatGPT.

--> Use videoconvert and autovideosink.

ssw@raspberrypi:~ $ gst-inspect-1.0

...

videoconvertscale: videoconvert: Video colorspace converter

...

autodetect: autovideosink: Auto video sink

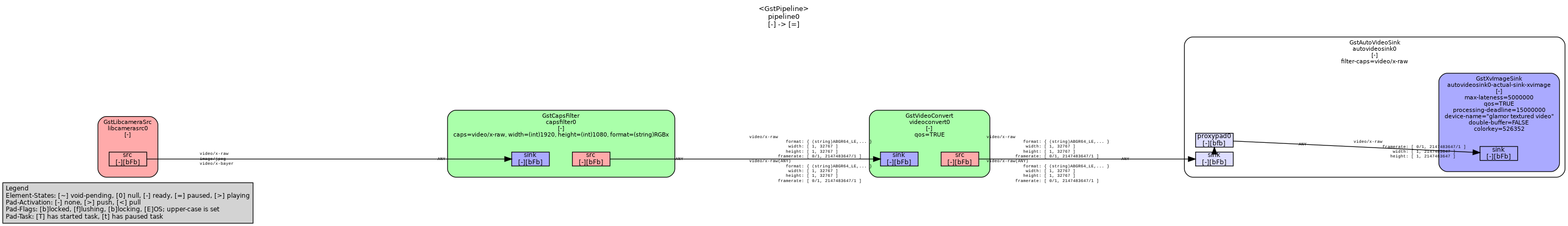

Local test

Run the completed command.

ssw@raspberrypi:~ $ gst-launch-1.0 libcamerasrc ! capsfilter caps=video/x-raw,width=1920,height=1080,format=RGBx ! videoconvert ! autovideosink

Setting pipeline to PAUSED ...

[7:42:10.188460663] [5953] INFO Camera camera_manager.cpp:325 libcamera v0.3.2+27-7330f29b

[7:42:10.204027175] [5958] INFO RPI pisp.cpp:695 libpisp version v1.0.7 28196ed6edcf 29-08-2024 (16:33:32)

[7:42:10.218462728] [5958] INFO RPI pisp.cpp:1154 Registered camera /base/axi/pcie@120000/rp1/i2c@80000/imx219@10 to CFE device /dev/media2 and ISP device /dev/media0 using PiSP variant BCM2712_C0

Pipeline is live and does not need PREROLL ...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

[7:42:10.221408238] [5961] INFO Camera camera.cpp:1197 configuring streams: (0) 1920x1080-XBGR8888

[7:42:10.221572719] [5958] INFO RPI pisp.cpp:1450 Sensor: /base/axi/pcie@120000/rp1/i2c@80000/imx219@10 - Selected sensor format: 1920x1080-SBGGR10_1X10 - Selected CFE format: 1920x1080-PC1B

Redistribute latency...

WARNING: from element /GstPipeline:pipeline0/GstAutoVideoSink:autovideosink0/GstXvImageSink:autovideosink0-actual-sink-xvimage: Pipeline construction is invalid, please add queues.

Additional debug info:

../libs/gst/base/gstbasesink.c(1249): gst_base_sink_query_latency (): /GstPipeline:pipeline0/GstAutoVideoSink:autovideosink0/GstXvImageSink:autovideosink0-actual-sink-xvimage:

Not enough buffering available for the processing deadline of 0:00:00.015000000, add enough queues to buffer 0:00:00.015000000 additional data. Shortening processing latency to 0:00:00.000000000.

WARNING: from element /GstPipeline:pipeline0/GstAutoVideoSink:autovideosink0/GstXvImageSink:autovideosink0-actual-sink-xvimage: Pipeline construction is invalid, please add queues.

Additional debug info:

../libs/gst/base/gstbasesink.c(1249): gst_base_sink_query_latency (): /GstPipeline:pipeline0/GstAutoVideoSink:autovideosink0/GstXvImageSink:autovideosink0-actual-sink-xvimage:

Not enough buffering available for the processing deadline of 0:00:00.015000000, add enough queues to buffer 0:00:00.015000000 additional data. Shortening processing latency to 0:00:00.000000000.

^Chandling interrupt.

Interrupt: Stopping pipeline ...

Execution ended after 0:00:22.482502964

Setting pipeline to NULL ...

Freeing pipeline ...

ssw@raspberrypi:~ $

ssw@raspberrypi:~ $ ls *.dot

0.00.00.068734669-gst-launch.NULL_READY.dot 0.00.00.448240577-gst-launch.warning.dot

0.00.00.070051639-gst-launch.READY_PAUSED.dot 0.00.04.691039371-gst-launch.error.dot

0.00.00.447208068-gst-launch.PAUSED_PLAYING.dot 0.00.04.692076806-gst-launch.PLAYING_PAUSED.dot

0.00.00.447833451-gst-launch.warning.dot 0.00.04.703469147-gst-launch.PAUSED_READY.dot

ssw@raspberrypi:~ $

Debugging options

Debugging options that are useful when writing commands and trying to understand the resulting pipeline:

- https://stackoverflow.com/questions/33613109/gstreamer-why-do-i-need-a-videoconvert-before-displaying-some-filter

- https://developer.ridgerun.com/wiki/index.php/How_to_generate_a_GStreamer_pipeline_diagram

ssw@raspberrypi:~ $ GST_DEBUG_DUMP_DOT_DIR=. gst-launch-1.0 libcamerasrc ! capsfilter caps=video/x-raw,width=1920,height=1080,format=RGBx ! videoconvert ! autovideosink

ssw@raspberrypi:~ $ dot -Tpng -O *.dot
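A single run dumps one .dot file per state change, so it can be convenient to render just one of them. The sketch below picks the newest PAUSED_PLAYING dump in the current directory and renders it to its own PNG. The `latest_dot` helper is hypothetical, and it assumes the timestamp prefix sorts lexically, which holds for short runs like the ones here.

```shell
#!/bin/sh
# latest_dot is a hypothetical helper: print the newest dump in directory $1
# whose file name ends in "<transition>.dot" (transition name given as $2).
latest_dot() {
    ls "$1"/*"$2".dot 2>/dev/null | sort | tail -n 1
}

DOT_FILE=$(latest_dot . PAUSED_PLAYING)

# Render only if a dump was found and Graphviz is installed.
if [ -n "$DOT_FILE" ] && command -v dot >/dev/null 2>&1; then
    # One input graph, one output PNG next to the .dot file.
    dot -Tpng "$DOT_FILE" -o "${DOT_FILE%.dot}.png"
fi
```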

====

TCP streaming test

Based on ChatGPT's suggestions.

tcpserversink : rpi5

ssw@raspberrypi:~ $ GST_DEBUG_DUMP_DOT_DIR=. gst-launch-1.0 libcamerasrc ! capsfilter caps=video/x-raw,width=640,height=320,format=RGBx,framerate=30/1 ! videoconvert ! x264enc tune=zerolatency bitrate=500 ! h264parse ! mpegtsmux ! tcpserversink host=172.30.1.100 port=5000

Setting pipeline to PAUSED ...

[8:14:57.114029342] [6994] INFO Camera camera_manager.cpp:325 libcamera v0.3.2+27-7330f29b

[8:14:57.130679385] [6998] INFO RPI pisp.cpp:695 libpisp version v1.0.7 28196ed6edcf 29-08-2024 (16:33:32)

[8:14:57.146629690] [6998] INFO RPI pisp.cpp:1154 Registered camera /base/axi/pcie@120000/rp1/i2c@80000/imx219@10 to CFE device /dev/media2 and ISP device /dev/media0 using PiSP variant BCM2712_C0

Pipeline is live and does not need PREROLL ...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

[8:14:57.160903615] [7004] INFO Camera camera.cpp:1197 configuring streams: (0) 640x320-XBGR8888

[8:14:57.168420372] [6998] INFO RPI pisp.cpp:1450 Sensor: /base/axi/pcie@120000/rp1/i2c@80000/imx219@10 - Selected sensor format: 1920x1080-SBGGR10_1X10 - Selected CFE format: 1920x1080-PC1B

Redistribute latency...

Redistribute latency...

Redistribute latency...

WARNING: from element /GstPipeline:pipeline0/GstTCPServerSink:tcpserversink0: Pipeline construction is invalid, please add queues.

Additional debug info:

../libs/gst/base/gstbasesink.c(1249): gst_base_sink_query_latency (): /GstPipeline:pipeline0/GstTCPServerSink:tcpserversink0:

Not enough buffering available for the processing deadline of 0:00:00.020000000, add enough queues to buffer 0:00:00.020000000 additional data. Shortening processing latency to 0:00:00.000000000.

WARNING: from element /GstPipeline:pipeline0/GstTCPServerSink:tcpserversink0: Pipeline construction is invalid, please add queues.

Additional debug info:

../libs/gst/base/gstbasesink.c(1249): gst_base_sink_query_latency (): /GstPipeline:pipeline0/GstTCPServerSink:tcpserversink0:

Not enough buffering available for the processing deadline of 0:00:00.020000000, add enough queues to buffer 0:00:00.020000000 additional data. Shortening processing latency to 0:00:00.000000000.

^Chandling interrupt.

Interrupt: Stopping pipeline ...

Execution ended after 0:00:28.451893352

Setting pipeline to NULL ...

Freeing pipeline ...

ssw@raspberrypi:~ $
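The warning in the log above asks for queues. One thing to try, as an untested sketch, is inserting `queue` elements after the caps filter and before the sink. The placement of the queues is an assumption, and it is not confirmed that this silences the warning on this setup.

```shell
#!/bin/sh
HOST=172.30.1.100
PORT=5000
CAPS="video/x-raw,width=640,height=320,format=RGBx,framerate=30/1"

# Same sender pipeline as above, with two queue elements added (assumed
# placement: one after the caps filter, one right before the TCP sink).
SERVER_PIPELINE="libcamerasrc ! capsfilter caps=$CAPS ! queue ! videoconvert ! x264enc tune=zerolatency bitrate=500 ! h264parse ! mpegtsmux ! queue ! tcpserversink host=$HOST port=$PORT"

# Print the command for review instead of launching it directly.
printf 'gst-launch-1.0 %s\n' "$SERVER_PIPELINE"
```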

tcpclientsrc : pc (ubuntu22 vm)

user@user-virtual-machine:~$ gst-launch-1.0 tcpclientsrc host=172.30.1.100 port=5000 ! tsdemux ! h264parse ! avdec_h264 ! videoconvert ! autovideosink

Setting pipeline to PAUSED ...

Pipeline is PREROLLING ...

Redistribute latency...

Redistribute latency...

Pipeline is PREROLLED ...

Setting pipeline to PLAYING ...

New clock: GstSystemClock

Redistribute latency...

Got EOS from element "pipeline0".

Execution ended after 0:00:22.863227681

Setting pipeline to NULL ...

Freeing pipeline ...

user@user-virtual-machine:~$

3 comments

Command for checking that a device is attached:

ssw@ssw-desktop:~$ v4l2-ctl --list-devices

NVIDIA Tegra Video Input Device (platform:tegra-camrtc-ca):

/dev/media0

vi-output, e-con_cam 10-0042 (platform:tegra-capture-vi:2):

/dev/video0

ssw@ssw-desktop:~$

Check the supported frame sizes:

ssw@ssw-desktop:~$ v4l2-ctl --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Type: Video Capture

[0]: 'BG10' (10-bit Bayer BGBG/GRGR)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x2288

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x2288

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 2028x1112

Interval: Discrete 0.004s (240.000 fps)

Size: Discrete 2028x1112

Interval: Discrete 0.004s (240.000 fps)

[1]: 'BG12' (12-bit Bayer BGBG/GRGR)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x3040

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x2288

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 4056x2288

Interval: Discrete 0.017s (60.000 fps)

Size: Discrete 2028x1112

Interval: Discrete 0.004s (240.000 fps)

Size: Discrete 2028x1112

Interval: Discrete 0.004s (240.000 fps)

ssw@ssw-desktop:~$

Compared with the live scene, the stream shows about 6 seconds of latency, so the real program will need to be written with this in mind.
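The cause of the delay has not been identified here, so the following is only a sketch of settings that might be worth testing. It assumes that encoder lookahead, the keyframe interval, and clock synchronisation at the receiving sink contribute to the delay. `speed-preset` and `key-int-max` are x264enc properties and `sync` is a sink property; the values chosen are guesses, not measured results.

```shell
#!/bin/sh
HOST=172.30.1.100
PORT=5000
CAPS="video/x-raw,width=640,height=320,format=RGBx,framerate=30/1"

# Sender (RPi 5): fastest preset and a keyframe every 30 frames (assumed values).
ENCODER="x264enc tune=zerolatency speed-preset=ultrafast key-int-max=30 bitrate=500"
SERVER="libcamerasrc ! capsfilter caps=$CAPS ! videoconvert ! $ENCODER ! h264parse ! mpegtsmux ! tcpserversink host=$HOST port=$PORT"

# Receiver (PC): render frames as they arrive instead of syncing to the clock.
CLIENT="tcpclientsrc host=$HOST port=$PORT ! tsdemux ! h264parse ! avdec_h264 ! videoconvert ! autovideosink sync=false"

printf 'gst-launch-1.0 %s\n' "$SERVER" "$CLIENT"
```

Changing one setting at a time and re-measuring the delay would show which of these, if any, matters.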