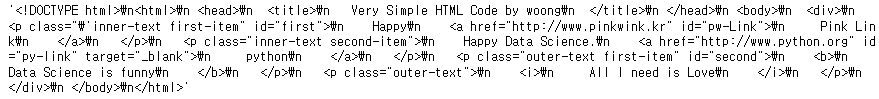

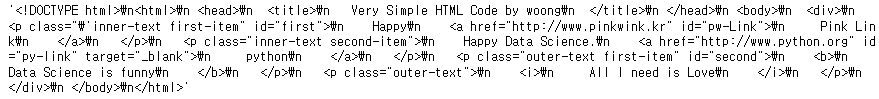

BeautifulSoup

from bs4 import BeautifulSoup

page = open('zerobase.html', 'r').read()

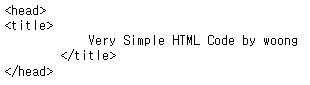

soup = BeautifulSoup(page, 'html.parser')

soup.prettify()

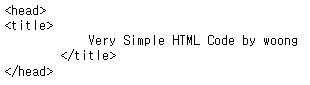

# Check the head

soup.head

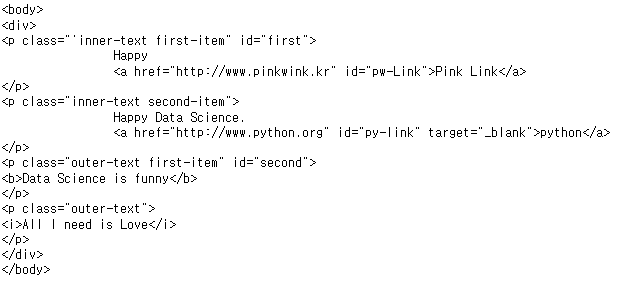

# Check the body

soup.body

# Check the p tag

# Returns the first p tag found

# find()

soup.p

soup.find('p')
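
As a self-contained illustration of the two lookups above, the sketch below parses a small inline HTML string and checks that `soup.p` and `soup.find('p')` land on the same first `p` tag. The HTML is a made-up stand-in for `zerobase.html`, whose contents are not reproduced here, and the `sample_*` names are only for this example.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for zerobase.html; the real file is not shown in these notes.
sample_html = """
<html><body>
  <p class="inner-text first-item" id="first">Happy ZeroBase.</p>
  <p class="inner-text second-item">Happy Data Science.</p>
</body></html>
"""

sample_soup = BeautifulSoup(sample_html, 'html.parser')

first_by_attr = sample_soup.p          # attribute access: first <p> in the document
first_by_find = sample_soup.find('p')  # find(): also the first <p>

print(first_by_attr is first_by_find)
print(first_by_find.get_text())
```

Both forms stop at the first match, so later `p` tags need `find_all` or a more specific condition.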

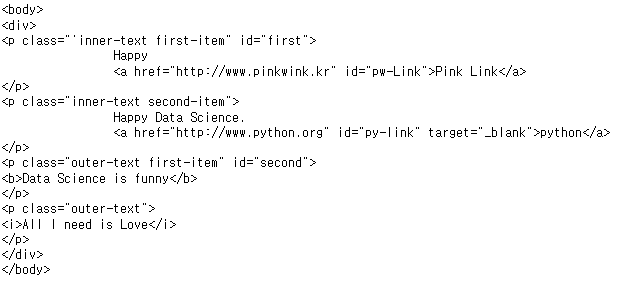

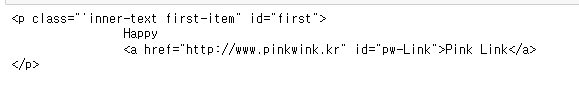

# Filtering by conditions

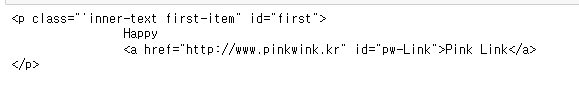

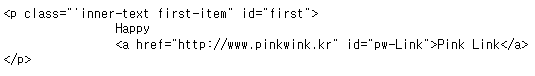

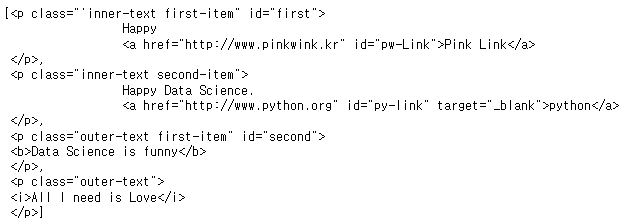

soup.find('p', class_='inner-text first-item')

soup.find('p', {'class':'inner-text first-item', 'id':'first'})
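
To make the matching rules concrete, here is a minimal sketch on made-up HTML (the tags and ids are assumptions, not the contents of `zerobase.html`). It shows a single class name, an exact multi-class string, a dict of several attributes, and the `None` result when nothing matches.

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration only.
sample_html = """
<div>
  <p class="inner-text first-item" id="first">first</p>
  <p class="inner-text second-item">second</p>
  <p class="outer-text first-item" id="second">third</p>
</div>
"""
sample_soup = BeautifulSoup(sample_html, 'html.parser')

# A single class name matches any tag that carries that class.
by_one_class = sample_soup.find('p', class_='first-item')

# A string with several classes must equal the class attribute exactly (order matters).
by_exact_classes = sample_soup.find('p', class_='inner-text first-item')

# A dict requires all listed attributes at once.
by_attrs = sample_soup.find('p', {'class': 'outer-text first-item', 'id': 'second'})

# No tag has both class inner-text and id="second", so find() returns None.
missing = sample_soup.find('p', {'class': 'inner-text', 'id': 'second'})

print(by_one_class.get_text(), by_exact_classes.get_text(), by_attrs.get_text(), missing)
```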

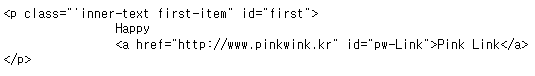

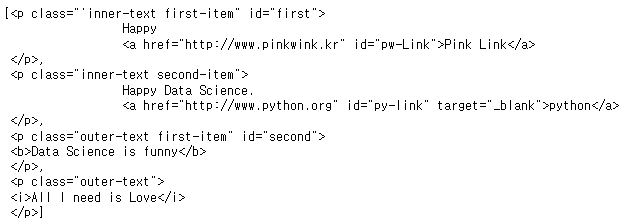

# Return multiple tags

# Results come back as a list

# find_all

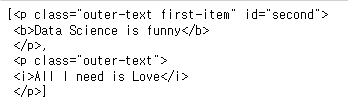

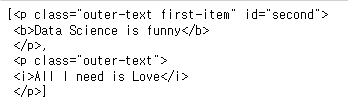

soup.find_all('p')

soup.find_all(class_='outer-text')
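
A short sketch of `find_all` on made-up HTML (the markup is an assumption for illustration): the result behaves like a list, keeps document order, and is simply empty when nothing matches.

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration only.
sample_html = """
<div>
  <p class="outer-text">one</p>
  <p class="inner-text">two</p>
  <b class="outer-text">three</b>
</div>
"""
sample_soup = BeautifulSoup(sample_html, 'html.parser')

all_p = sample_soup.find_all('p')                       # every <p>, in document order
all_outer = sample_soup.find_all(class_='outer-text')   # any tag name with that class
empty = sample_soup.find_all('table')                   # no match: empty result, not None

p_texts = [tag.get_text() for tag in all_p]
outer_names = [tag.name for tag in all_outer]

print(len(all_p), p_texts, outer_names, len(empty))
```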

BeautifulSoup Example 1-1: Naver Finance

from urllib.request import urlopen

from bs4 import BeautifulSoup

url = 'https://finance.naver.com/marketindex/'

page = urlopen(url)

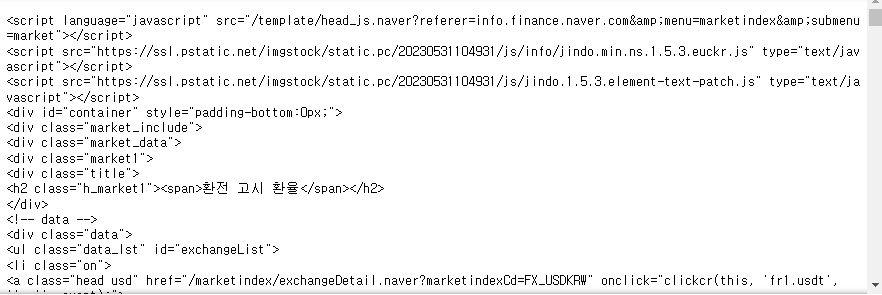

soup = BeautifulSoup(page, 'html.parser')

soup

# Equivalent form 1

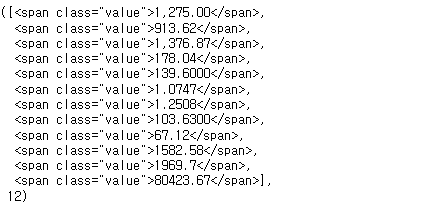

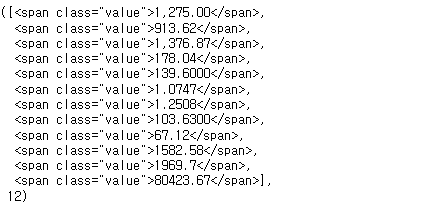

soup.find_all('span', 'value'), (len(soup.find_all('span', 'value')))

# Equivalent form 2

soup.find_all('span', class_='value'), (len(soup.find_all('span', class_='value')))

# Equivalent form 3

soup.find_all('span', {"class":'value'}), (len(soup.find_all('span', {"class":'value'})))

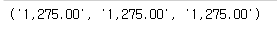

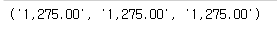

soup.find_all('span', {"class":'value'})[0].text,soup.find_all('span', {"class":'value'})[0].string,soup.find_all('span', {"class":'value'})[0].get_text()
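
The three accessors above agree for a tag that holds a single string, but not always. The sketch below, using made-up HTML rather than the live Naver page, shows that `.string` is `None` once a tag has more than one child, while `.text` and `get_text()` join all nested text.

```python
from bs4 import BeautifulSoup

# Made-up markup: one simple tag and one tag with nested children.
sample_soup = BeautifulSoup(
    '<span class="value">1,300.50</span><p>Hello <b>world</b></p>',
    'html.parser',
)

simple = sample_soup.find('span', {'class': 'value'})
nested = sample_soup.find('p')

# Single string child: all three accessors give the same text.
simple_values = (simple.text, simple.string, simple.get_text())

# Mixed children (text plus <b>): .string has no single string to return.
nested_values = (nested.text, nested.string, nested.get_text())

print(simple_values)
print(nested_values)
```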

from urllib.request import urlopen

from bs4 import BeautifulSoup

url = 'https://finance.naver.com/marketindex/'

response = urlopen(url)

# soup = BeautifulSoup(page, 'html.parser')

# soup

response.status # HTTP status code

BeautifulSoup Example 1-2: Naver Finance

- requests

- find, find_all correspond to select_one, select

- find, select_one: return a single element

- find_all, select: return multiple elements
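
The correspondence in the list above can be checked on a small example. This is a sketch on made-up HTML (the id, class, and values only mimic the shape of the exchange list), comparing `find`/`find_all` with the CSS-selector forms `select_one`/`select`.

```python
from bs4 import BeautifulSoup

# Made-up markup for illustration only.
sample_html = """
<ul id="exchangeList">
  <li><span class="value">1,300.50</span></li>
  <li><span class="value">950.10</span></li>
</ul>
"""
sample_soup = BeautifulSoup(sample_html, 'html.parser')

# Single element: first match only.
first_find = sample_soup.find('span', class_='value')
first_select = sample_soup.select_one('span.value')

# Multiple elements: every match, in document order.
all_find = sample_soup.find_all('span', class_='value')
all_select = sample_soup.select('#exchangeList > li > span.value')

print(first_find is first_select)
print([tag.text for tag in all_find], [tag.text for tag in all_select])
```

In selectors, `#` addresses an id, `.` a class, and `>` a direct child.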

import requests

# from urllib.request import Request

from bs4 import BeautifulSoup

import pandas as pd

url = 'https://finance.naver.com/marketindex/'

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

soup

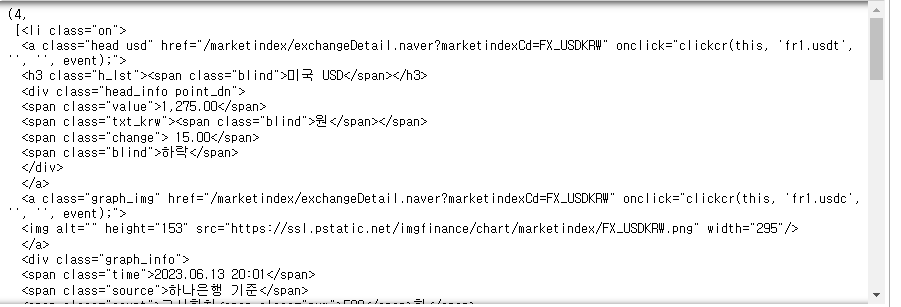

#soup.find_all('li', 'on')

# id => #, class => .

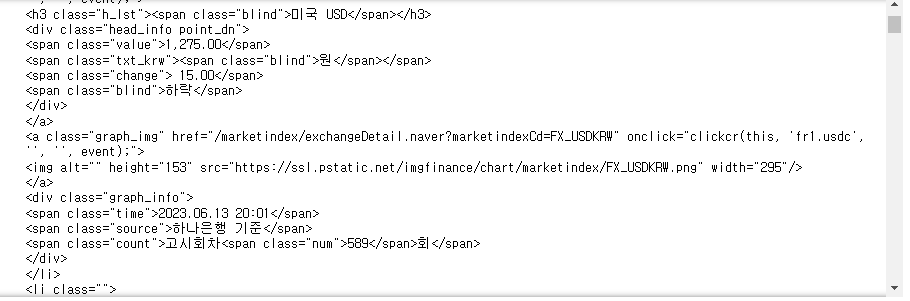

exchangeList = soup.select('#exchangeList > li')

len(exchangeList), exchangeList

title = exchangeList[0].select_one('.h_lst').text

exchage = exchangeList[0].select_one('.value').text

change = exchangeList[0].select_one('.change').text

updown = exchangeList[0].select_one('div.head_info > .blind').text

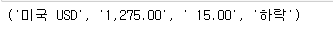

title, exchage, change, updown

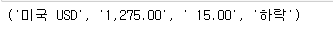

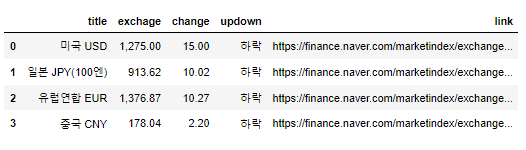

exchage_datas = []

baseUrl = 'https://finance.naver.com'

for item in exchangeList:

    data = {

        'title' : item.select_one('.h_lst').text,

        'exchage' : item.select_one('.value').text,

        'change' : item.select_one('.change').text,

        # Match both rising (point_up) and falling (point_dn) items

        'updown' : item.select_one('div.head_info > .blind').text,

        'link' : baseUrl + item.select_one('a').get('href')

    }

    exchage_datas.append(data)

exchage_datas
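
`select_one` returns `None` when a selector matches nothing, so chaining `.text` onto it raises `AttributeError` for any item that lacks the element. A minimal sketch of a guard, assuming a hypothetical helper named `text_or_none` and made-up HTML that only mimics the list structure:

```python
from bs4 import BeautifulSoup


def text_or_none(parent, selector):
    """Hypothetical helper: stripped text of the first match, or None if no match."""
    node = parent.select_one(selector)
    return node.get_text(strip=True) if node is not None else None


# Made-up markup: the second item has no .blind element.
sample_html = """
<ul id="exchangeList">
  <li><div class="head_info point_dn">
    <span class="value">1,300.50</span><span class="blind">down</span>
  </div></li>
  <li><div class="head_info point_up">
    <span class="value">950.10</span>
  </div></li>
</ul>
"""
sample_soup = BeautifulSoup(sample_html, 'html.parser')

rows = [
    {
        'value': text_or_none(li, '.value'),
        'updown': text_or_none(li, 'div.head_info > .blind'),
    }
    for li in sample_soup.select('#exchangeList > li')
]

print(rows)
```

Missing fields then show up as empty cells in the resulting DataFrame instead of stopping the loop.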

df = pd.DataFrame(exchage_datas)

df.to_excel('naverfinance.xlsx')

df

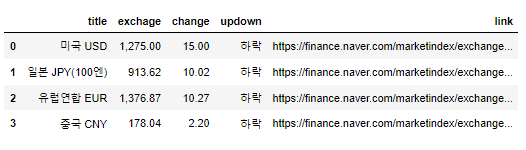

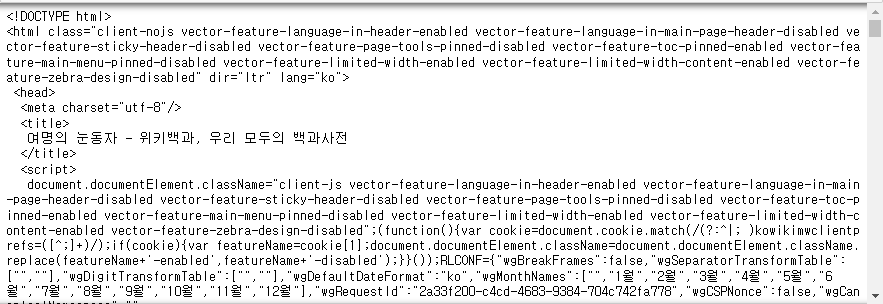

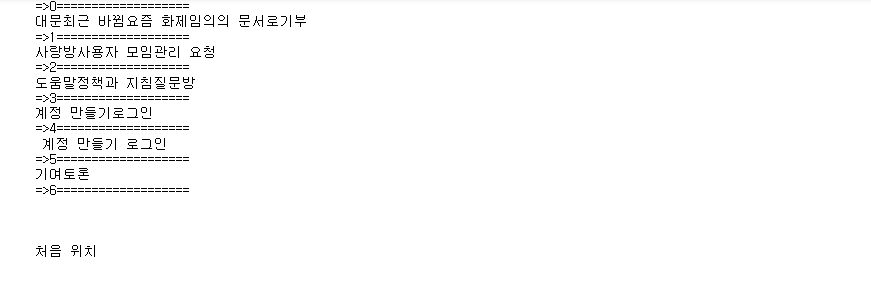

Fetching Wikipedia article information

from urllib.request import urlopen, Request

import urllib.parse

html = 'https://ko.wikipedia.org/wiki/{search_words}'

req = Request(html.format(search_words=urllib.parse.quote('여명의_눈동자')))
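
The `quote` call is needed because a URL path cannot carry raw Korean characters; `quote` percent-encodes their UTF-8 bytes. A small offline sketch of just that step (no request is sent; `url_template` and `title` are names chosen for this example):

```python
from urllib.parse import quote, unquote

url_template = 'https://ko.wikipedia.org/wiki/{search_words}'
title = '여명의_눈동자'

encoded = quote(title)  # UTF-8 bytes, percent-encoded; the underscore is left as-is
full_url = url_template.format(search_words=encoded)

print(full_url)
print(unquote(encoded) == title)  # decoding restores the original title
```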

response = urlopen(req)

soup = BeautifulSoup(response, 'html.parser')

print(soup.prettify())

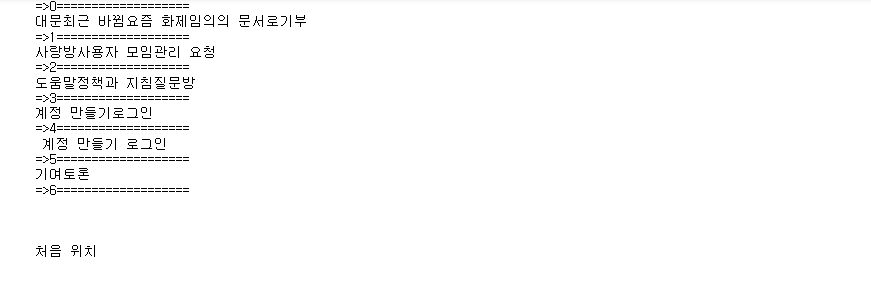

n = 0

for each in soup.find_all('ul'):

    print('=>' + str(n) + '===================')

    print(each.get_text())

    n += 1

soup.find_all('ul')[32].text.strip().replace('\xa0', '').replace('\n', '')
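
The cleanup chain above removes non-breaking spaces (`\xa0`) and newlines outright, which also glues neighbouring items together. A sketch on a made-up string (not the actual Wikipedia text) comparing it with whitespace normalisation via `split()` and `join()`, in case keeping word boundaries is preferable:

```python
# Made-up text with the same kinds of whitespace as the scraped list.
raw = '\nCast:\xa0A, B\nAired:\xa01991\n'

# Same chain as above: delete \xa0 and \n entirely.
removed = raw.strip().replace('\xa0', '').replace('\n', '')

# Alternative: collapse every whitespace run (including \xa0) into one space.
normalised = ' '.join(raw.split())

print(repr(removed))
print(repr(normalised))
```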

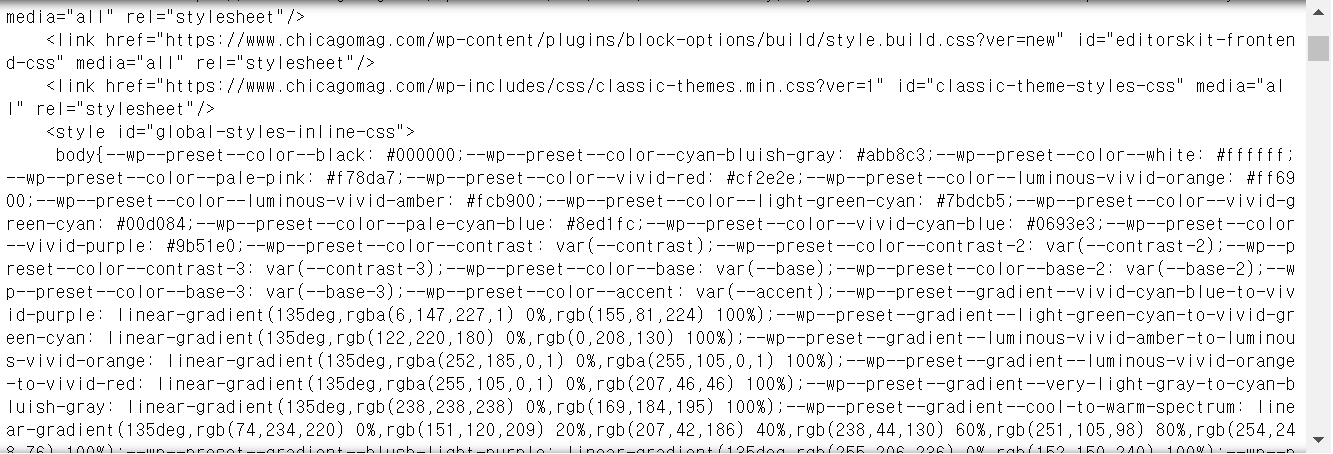

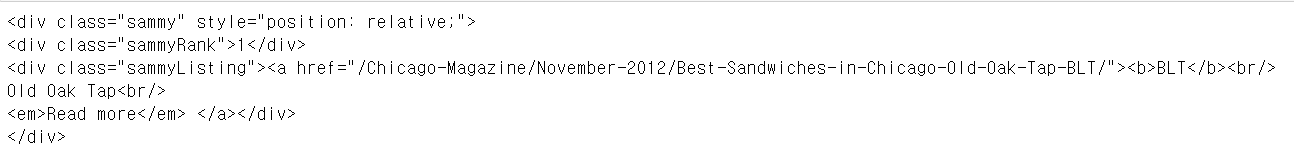

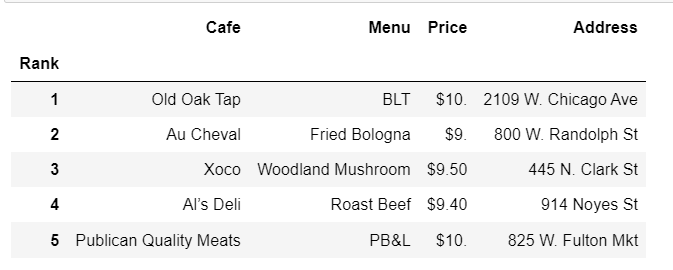

Chicago's best restaurants

from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

url_base = 'https://www.chicagomag.com/'

url_sub = 'chicago-magazine/november-2012/best-sandwiches-chicago/'

url = url_base + url_sub

req = Request(url, headers={'User-Agent' :'Chrome'})

html = urlopen(req)

soup = BeautifulSoup(html, 'html.parser')

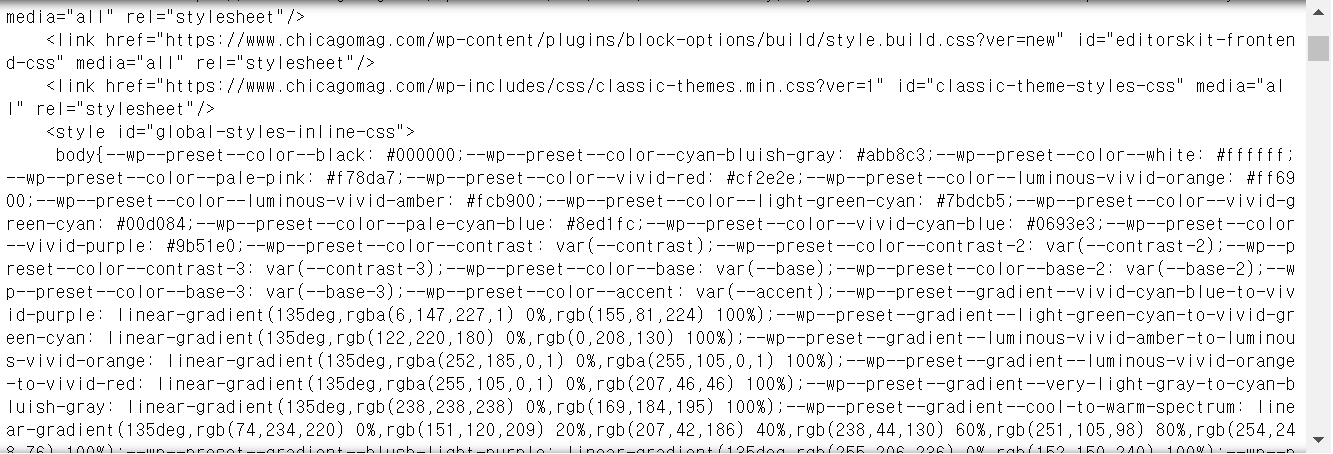

print(soup.prettify())

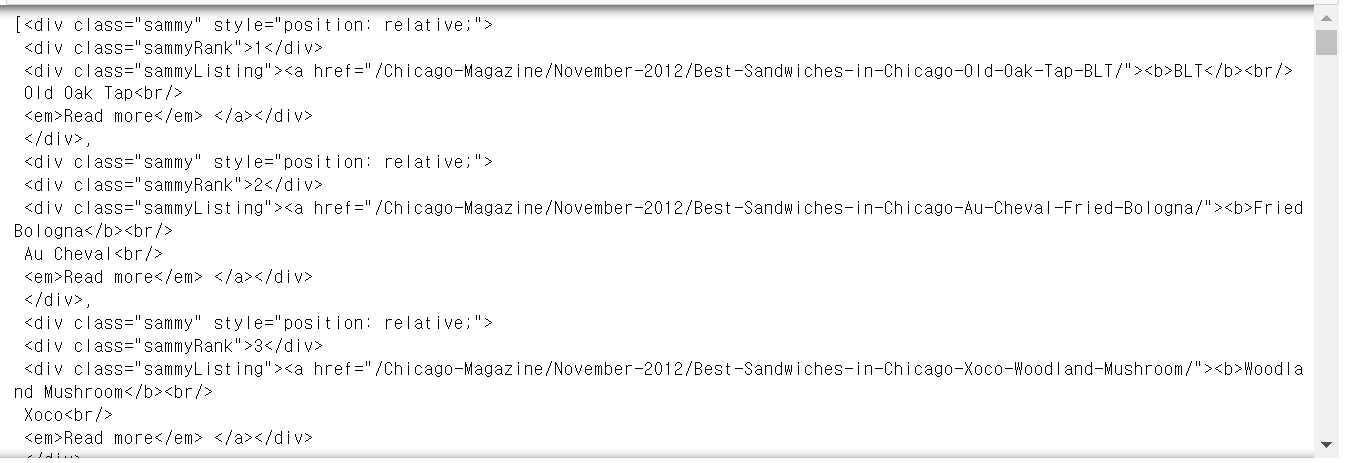

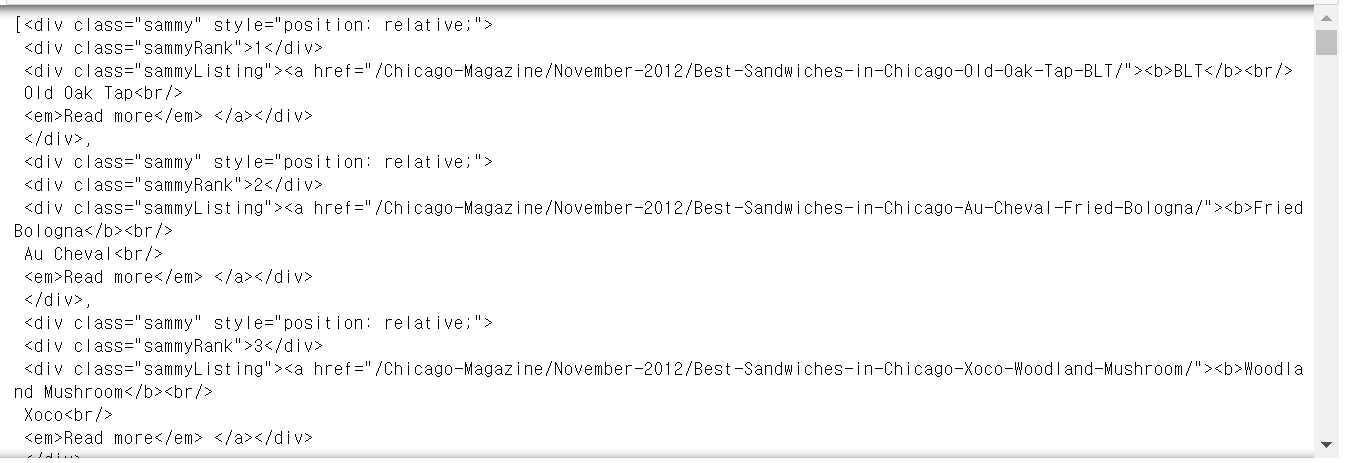

soup.find_all('div', 'sammy')

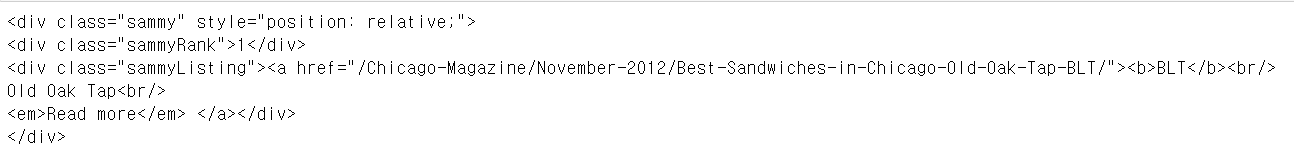

temp_one = soup.find_all('div', 'sammy')[0]

temp_one

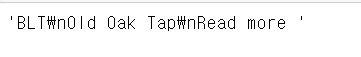

temp_one.find('div', {'class' : 'sammyListing'}).get_text()

import re

tmp_string=temp_one.find(class_='sammyListing').get_text()

re.split(('\n|\r\n'),tmp_string)
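
To see what the split produces without fetching the page, here is a sketch on a made-up string shaped like a listing (menu, cafe name, trailing link label). The actual page text may differ.

```python
import re

# Made-up listing text mixing \r\n and \n line endings.
listing_text = 'BLT\r\nOld Oak Tap\nRead more '

# Same pattern as above: break on either \n or \r\n.
parts = re.split(r'\n|\r\n', listing_text)
menu, cafe = parts[0], parts[1]

# str.splitlines() handles both line endings without a regex.
same_parts = listing_text.splitlines()

print(parts)
print(menu, '|', cafe)
```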

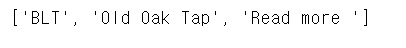

from urllib.parse import urljoin

url_base = 'https://www.chicagomag.com/'

rank = []

main_menu = []

cafe_name = []

url_add = []

list_soup = soup.find_all('div', 'sammy')

for item in list_soup:

    rank.append(item.find(class_='sammyRank').get_text())

    tmp_string=item.find(class_='sammyListing').get_text()

    main_menu.append(re.split(('\n|\r\n'),tmp_string)[0])

    cafe_name.append(re.split(('\n|\r\n'),tmp_string)[1])

    url_add.append(urljoin(url_base, item.find('a')['href']))
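
`urljoin` is used here because the `href` values may be relative or absolute. A short sketch of both cases; the two `href` strings are illustrative assumptions, not values taken from the page:

```python
from urllib.parse import urljoin

url_base = 'https://www.chicagomag.com/'

# Illustrative hrefs: one relative to the site root, one already absolute.
relative_href = '/chicago-magazine/november-2012/best-sandwiches-chicago/'
absolute_href = 'https://www.example.com/some-page/'

joined_relative = urljoin(url_base, relative_href)   # base host + relative path
joined_absolute = urljoin(url_base, absolute_href)   # absolute URL is kept unchanged

print(joined_relative)
print(joined_absolute)
```

Plain string concatenation would duplicate the slash in the first case and produce a broken URL in the second.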

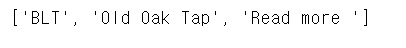

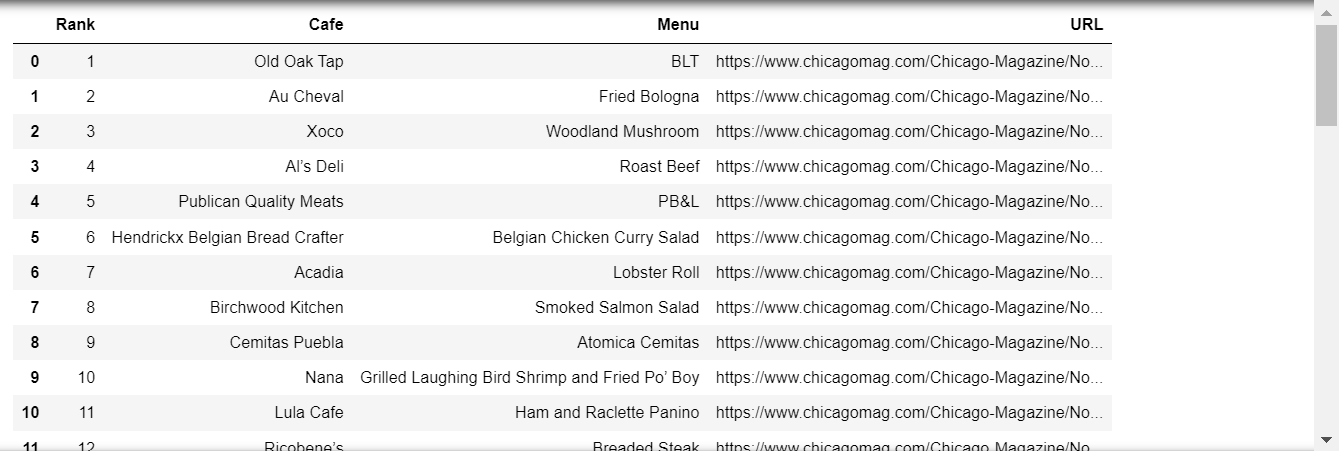

import pandas as pd

data = {

'Rank' : rank,

'Menu' : main_menu,

'Cafe' : cafe_name,

'URL' : url_add

}

df = pd.DataFrame(data, columns=['Rank', 'Cafe', 'Menu', 'URL'])

df

# Save the table so the sub-page step can load it from chicago.csv

df.to_csv('chicago.csv', encoding='utf-8')

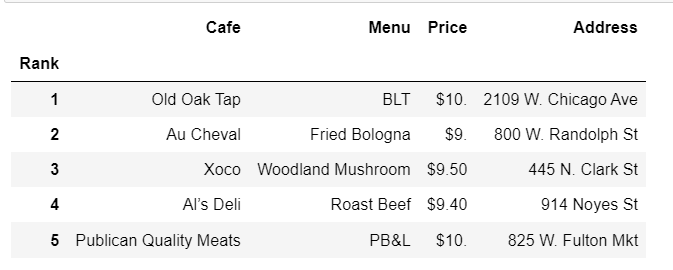

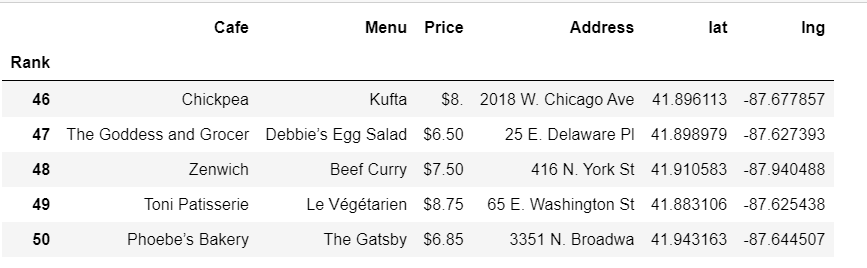

Chicago restaurant data: sub-pages

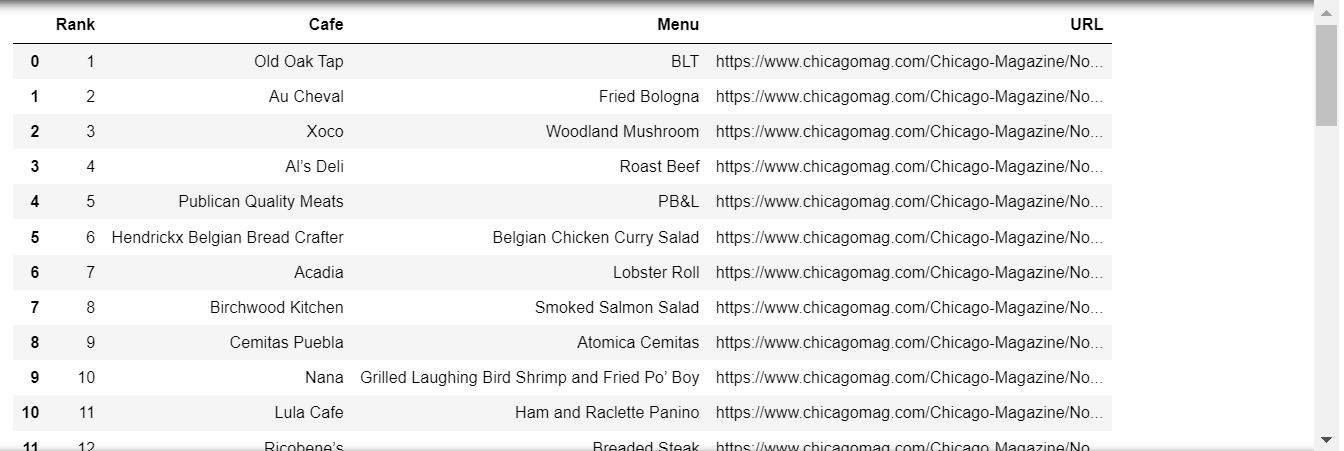

import pandas as pd

from urllib.request import urlopen, Request

from bs4 import BeautifulSoup

df = pd.read_csv('chicago.csv', index_col=0)

df

req = Request(df['URL'][0], headers={'User-Agent' :'Chrome'})

html = urlopen(req).read()

soup_tmp = BeautifulSoup(html, 'html.parser')

soup_tmp.find('p', 'addy')

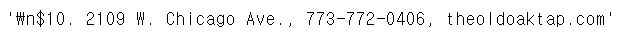

price_tmp = soup_tmp.find('p', 'addy').text

price_tmp

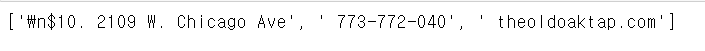

import re

re.split('.,', price_tmp)

price_tmp = re.split('.,', price_tmp)[0]

price_tmp

from tqdm import tqdm

price = []

address = []

for idx, row in tqdm(df.iterrows()):

    req = Request(row['URL'], headers={'User-Agent' :'Chrome'})

    html = urlopen(req).read()

    soup_tmp = BeautifulSoup(html, 'html.parser')

    gettings = soup_tmp.find('p', 'addy').get_text()

    price_tmp = re.split('.,', gettings)[0]

    # Raw string so that \$ and \d reach the regex engine unchanged

    tmp = re.search(r'\$\d+\.(\d+)?', price_tmp).group()

    price.append(tmp)

    address.append(price_tmp[len(tmp)+2:])

    print(idx)
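
The parsing inside the loop can be traced offline. The sketch below runs the same three steps on a made-up string in the shape the loop expects (newline, price, address, then phone and site); the real page text may differ. It also shows that the pattern `'.,'` consumes the one character before the comma, since `.` matches any character.

```python
import re

# Made-up text: leading newline, price, address, phone, site.
sample_text = '\n$10. 2109 W. Chicago Ave., 555-0100, example.com'

# Step 1: keep everything before the first "any character + comma".
head = re.split('.,', sample_text)[0]

# Step 2: pull out the price ("$", digits, a dot, optional digits).
price_str = re.search(r'\$\d+\.(\d+)?', head).group()

# Step 3: skip the leading newline, the price, and the following space.
address_str = head[len(price_str) + 2:]

# The split also drops the character just before the comma.
trimmed = re.split('.,', 'Multiple locations, example.com')[0]

print(repr(head), price_str, address_str, trimmed)
```

The slice offset of `+2` depends on the text starting with exactly one newline and having one space after the price.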

df['Price'] = price

df['Address'] = address

df = df.loc[:, ["Rank", "Cafe", "Menu", "Price", "Address"]]

df.set_index('Rank', inplace=True)

df.head()

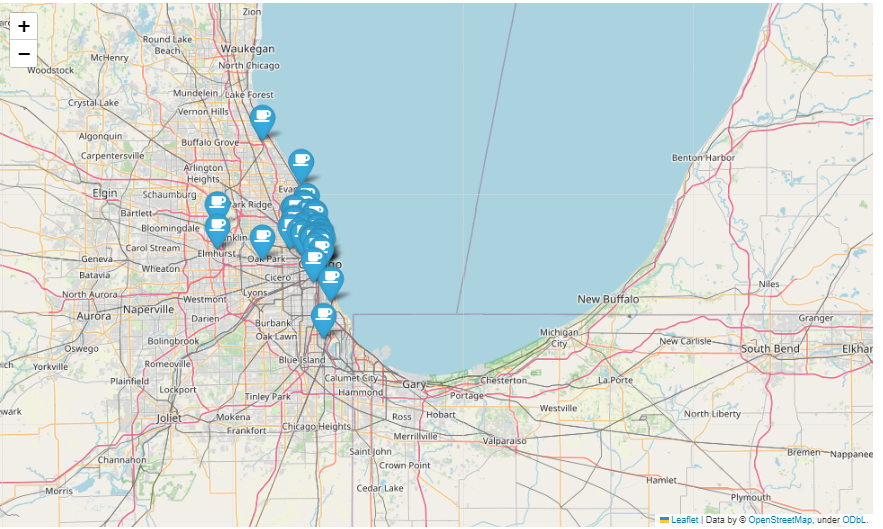

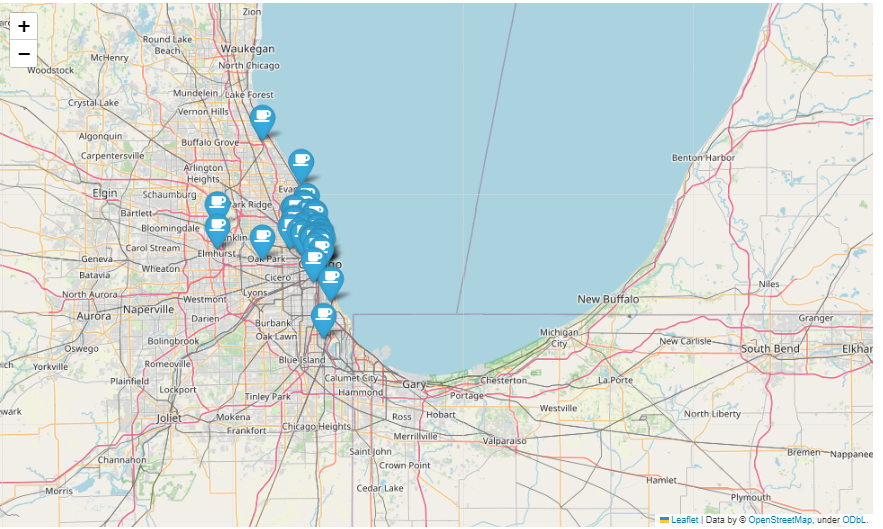

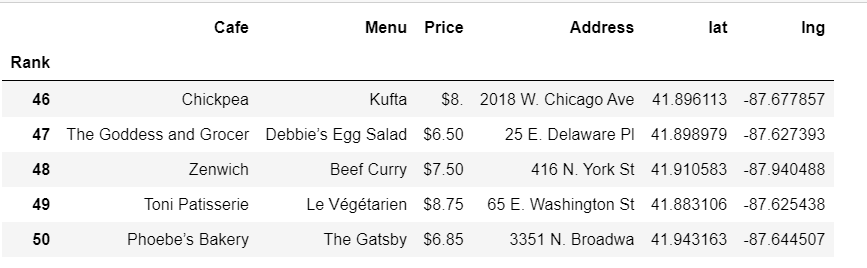

Chicago restaurant data: map visualization

import folium

import pandas as pd

import numpy as np

import googlemaps

from tqdm import tqdm

# Create the Google Maps client; "YOUR_API_KEY" is a placeholder for your own key

gmaps_key = "YOUR_API_KEY"

gmaps = googlemaps.Client(key=gmaps_key)

lat = []

lng = []

for idx, row in tqdm(df.iterrows()):

    if not row["Address"] == "Multiple location":

        target_name = row["Address"] + ", " + "Chicago"

        # print(target_name)

        gmaps_output = gmaps.geocode(target_name)

        location_output = gmaps_output[0].get("geometry")

        lat.append(location_output["location"]["lat"])

        lng.append(location_output["location"]["lng"])

        # location_output = gmaps_output[0]

    else:

        lat.append(np.nan)

        lng.append(np.nan)

df["lat"] = lat

df["lng"] = lng

df.tail()
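
The geocoding loop indexes `gmaps_output[0]` directly, which fails if a lookup returns an empty list. A minimal sketch of a guard, assuming a hypothetical helper named `extract_lat_lng` and a hand-written stand-in for the response that contains only the fields the loop reads:

```python
import math


def extract_lat_lng(geocode_results):
    """Hypothetical helper: (lat, lng) of the first result, or (nan, nan) if empty."""
    if not geocode_results:
        return math.nan, math.nan
    location = geocode_results[0]["geometry"]["location"]
    return location["lat"], location["lng"]


# Hand-written stand-in for a geocode response; not real API output.
fake_results = [{"geometry": {"location": {"lat": 41.8781136, "lng": -87.6297982}}}]

found = extract_lat_lng(fake_results)
missing = extract_lat_lng([])

print(found)
print(missing)
```

Returning NaN for a failed lookup keeps `lat` and `lng` the same length as the DataFrame, matching how the `else` branch already treats multi-location entries.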

mapping = folium.Map(location=[41.8781136, -87.6297982], zoom_start=11)

for idx, row in df.iterrows():

    if not row["Address"] == "Multiple location":

        folium.Marker(

            location=[row["lat"], row["lng"]],

            popup=row["Cafe"],

            tooltip=row["Menu"],

            icon=folium.Icon(

                icon="coffee",

                prefix="fa"

            )

        ).add_to(mapping)

mapping