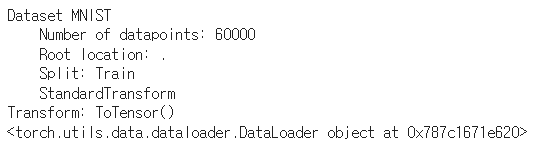

Dataset

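```python
from torch.utils.data import DataLoader
from torchvision.datasets import MNIST
from torchvision.transforms import ToTensor

BATCH_SIZE = 32

# Download the MNIST training split (60,000 images) into the current directory.
# ToTensor converts each image to a [1, 28, 28] float tensor scaled to [0, 1].
train_ds = MNIST(root='.', train=True, transform=ToTensor(), download=True)
train_loader = DataLoader(dataset=train_ds, batch_size=BATCH_SIZE, shuffle=True)
```

Running this downloads the data, and you can then print information about the dataset.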

Model

```python
import torch.nn as nn

class MLP(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(in_features=784, out_features=128)  # 28*28 pixels -> 128
        self.fc1_act = nn.Sigmoid()
        self.fc2 = nn.Linear(in_features=128, out_features=64)
        self.fc2_act = nn.Sigmoid()
        self.fc3 = nn.Linear(in_features=64, out_features=10)    # one score per digit class

    def forward(self, x):
        x = self.fc1(x)
        x = self.fc1_act(x)
        x = self.fc2(x)
        x = self.fc2_act(x)
        x = self.fc3(x)
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally,
        # so a final nn.Softmax layer here would apply softmax twice.
        # Use torch.softmax(x, dim=1) at inference time if probabilities are needed.
        return x
```

I explained this model in the previous post.
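The `nn.CrossEntropyLoss` used for training below already applies log-softmax to its input. The model's last layer should therefore pass it raw scores (logits) rather than softmax probabilities. The check below uses random logits and arbitrary labels, both assumptions for illustration. It confirms that equivalence and shows that feeding probabilities changes the loss. With an extra softmax, even a perfect prediction cannot push the 10-class loss below log(1 + 9/e) ≈ 1.46, which weakens the training signal.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 10)          # random raw scores: 4 samples, 10 classes
labels = torch.tensor([3, 0, 7, 9])  # arbitrary example targets

loss_fn = nn.CrossEntropyLoss()
ce = loss_fn(logits, labels)

# CrossEntropyLoss == log-softmax followed by the negative log-likelihood loss.
manual = F.nll_loss(F.log_softmax(logits, dim=1), labels)
print(torch.allclose(ce, manual))  # True

# Passing probabilities instead applies softmax a second time: a different loss.
double = loss_fn(torch.softmax(logits, dim=1), labels)
print(torch.allclose(ce, double))  # False

# Even a perfect one-hot probability vector cannot drive that loss to 0:
# it bottoms out at log(1 + 9/e) for 10 classes.
perfect = torch.zeros(1, 10)
perfect[0, 3] = 1.0
floor_loss = loss_fn(perfect, torch.tensor([3])).item()
print(round(floor_loss, 3), round(math.log(1 + 9 / math.e), 3))  # 1.461 1.461
```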

Creating the objects needed for training

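```python
import torch
import torch.nn as nn
from torch.optim import SGD  # plain SGD update: w := w - lr * dJ/dw

LR = 0.1
# Use an NVIDIA GPU through CUDA when available, otherwise fall back to the CPU.
DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'

model = MLP().to(DEVICE)
loss_fn = nn.CrossEntropyLoss()
optimizer = SGD(model.parameters(), lr=LR)
```

Training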
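Each iteration of the training loop calls `zero_grad()`, `backward()`, and `step()`. For plain `SGD` without momentum or weight decay, as configured above, one step is exactly w := w - lr · ∂J/∂w. The sketch below checks this on a single toy parameter. The quadratic loss J(w) = Σw² is an assumption chosen so the gradient, 2w, is easy to verify by hand.

```python
import torch
from torch.optim import SGD

LR = 0.1
w = torch.tensor([2.0, -1.0], requires_grad=True)  # toy parameter
optimizer = SGD([w], lr=LR)

# Toy loss J(w) = sum(w^2), so dJ/dw = 2w = [4.0, -2.0].
optimizer.zero_grad()
loss = (w ** 2).sum()
loss.backward()
print(w.grad)  # tensor([ 4., -2.])

expected = w.detach() - LR * w.grad  # manual update: w - lr * dJ/dw
optimizer.step()
print(w.detach())  # tensor([ 1.6000, -0.8000])
print(torch.allclose(w.detach(), expected))  # True
```

`zero_grad()` is needed because `backward()` accumulates into `.grad`. Without it, each step would use the sum of all previous gradients.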

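```python
from tqdm import tqdm

EPOCHS = 20
N_TRAIN_SAMPLES = len(train_ds)  # 60,000 for the MNIST training split

for epoch in range(EPOCHS):
    loss_epoch = 0.
    for imgs, labels in tqdm(train_loader):
        # Flatten each [1, 28, 28] image into a 784-dimensional vector.
        imgs = imgs.to(DEVICE).reshape(imgs.shape[0], -1)
        labels = labels.to(DEVICE)

        preds = model(imgs)
        loss = loss_fn(preds, labels)  # mean loss over the batch

        optimizer.zero_grad()  # clear gradients left over from the previous step
        loss.backward()        # compute dJ/dw for every parameter
        optimizer.step()       # apply the SGD update

        # loss.item() converts the 0-dim tensor to a Python float; multiplying by
        # the batch size turns the batch mean back into a batch sum.
        loss_epoch += loss.item() * imgs.shape[0]

    loss_epoch /= N_TRAIN_SAMPLES
    print(f"\nEpoch: {epoch + 1}")
    print(f"Train Loss: {loss_epoch:.4f}\n")
```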
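`CrossEntropyLoss` returns the mean over a batch. That is why the loop multiplies each batch loss by the batch size before dividing by the number of samples: the result is the exact per-sample average even when the last batch is shorter. With 60,000 samples and `BATCH_SIZE = 32`, every batch happens to be full (60000 / 32 = 1875). The made-up per-sample losses below are an assumption for illustration. They show what goes wrong if you instead average the batch means when the last batch is short.

```python
import torch

# Hypothetical per-sample losses for 10 samples, split into batches of 4 -> sizes 4, 4, 2.
per_sample = torch.tensor([1.0, 1.0, 1.0, 1.0, 2.0, 2.0, 2.0, 2.0, 5.0, 5.0])
batches = torch.split(per_sample, 4)

# What the training loop does: batch mean * batch size, summed, then divided by N.
weighted = sum(b.mean().item() * b.shape[0] for b in batches) / len(per_sample)

# Averaging the batch means instead over-weights the short last batch.
mean_of_means = sum(b.mean().item() for b in batches) / len(batches)

print(weighted)       # 2.2
print(mean_of_means)  # 2.6666666666666665
```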