LLM2LLM: Boosting LLMs with Novel Iterative Data Enhancement

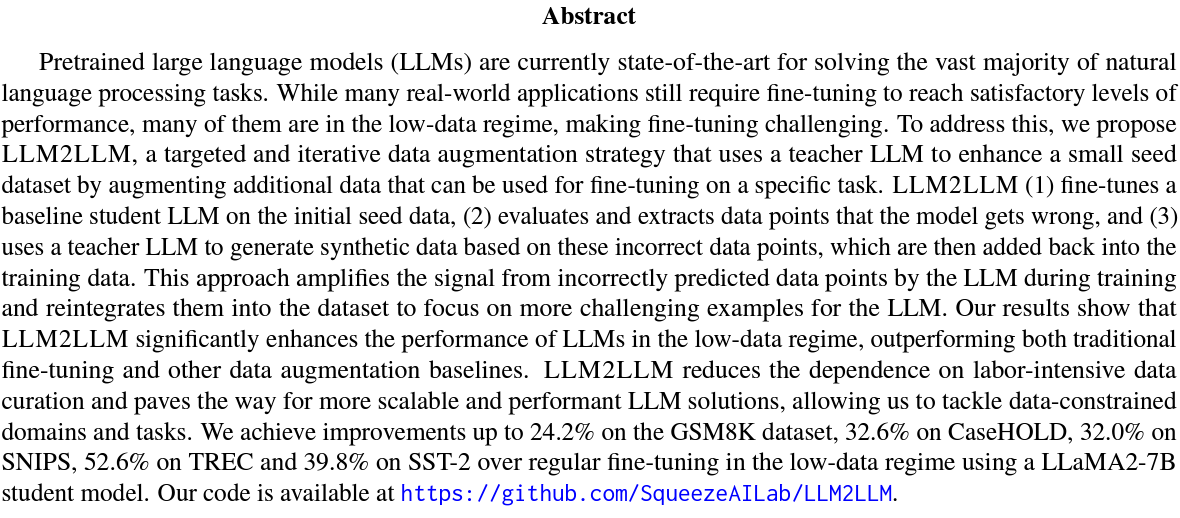

Abstract

- Problem: in the low-data regime, scarce task-specific data makes fine-tuning challenging

- LLM2LLM is a targeted, iterative data augmentation strategy that uses a teacher LLM to expand a small seed dataset with additional data for fine-tuning on a specific task.

- (1) fine-tunes a baseline student LLM on the initial seed data

- (2) evaluates and extracts data points that the model gets wrong

- (3) uses a teacher LLM to generate synthetic data based on these incorrect data points, which are then added back into the training data

- This approach amplifies the signal from data points that the student LLM predicts incorrectly during training and reintegrates them into the dataset, so that training focuses on the examples the student finds more challenging.
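
Steps (1) to (3) can be sketched as a loop. This is a minimal illustration, not the authors' implementation: `Example`, `llm2llm_loop`, `toy_fine_tune`, and `toy_teacher` are hypothetical names, and the "student" and "teacher" are deterministic stand-ins for real LLM calls. The sketch also assumes that the student is evaluated on the seed examples only and that the loop stops early once nothing is wrong.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Example:
    question: str
    answer: str
    skill: str  # hypothetical tag, used only by the toy student below


Student = Callable[[str], str]


def llm2llm_loop(
    seed: List[Example],
    fine_tune: Callable[[List[Example]], Student],
    teacher_augment: Callable[[Example], Example],
    n_iters: int,
) -> List[Example]:
    """Return the seed data plus teacher-generated examples."""
    train = list(seed)
    for _ in range(n_iters):
        # (1) fine-tune the student on the current training data
        student = fine_tune(train)
        # (2) collect the seed examples the student gets wrong (assumption:
        #     only seed data is evaluated, not the synthetic examples)
        wrong = [ex for ex in seed if student(ex.question) != ex.answer]
        if not wrong:
            break
        # (3) ask the teacher for new data based on each wrong example
        train.extend(teacher_augment(ex) for ex in wrong)
    return train


def toy_fine_tune(train: List[Example]) -> Student:
    # Stand-in for fine-tuning: the toy student only "learns" a skill
    # once it has seen at least two training examples of that skill.
    per_skill = Counter(ex.skill for ex in train)
    known = {ex.question: ex.answer for ex in train if per_skill[ex.skill] >= 2}
    return lambda question: known.get(question, "?")


def toy_teacher(ex: Example) -> Example:
    # Stand-in for the teacher LLM: emits a trivially reworded variant.
    return Example(f"(variant) {ex.question}", ex.answer, ex.skill)


seed = [
    Example("2+3", "5", "add"),
    Example("1+1", "2", "add"),
    Example("2*3", "6", "mul"),
]
augmented = llm2llm_loop(seed, toy_fine_tune, toy_teacher, n_iters=3)
print(len(augmented))  # 4: the three seed examples plus one synthetic "mul" example
```

In this toy run only the `mul` example is missed in the first pass, so the teacher is asked for exactly one extra example, which is the targeted behavior the bullets describe: augmentation effort goes to what the student gets wrong rather than to the whole dataset.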

Introduction